Proactive Model for Handling Conflicts in Sensor Data Fusion Applied to Robotic Systems

Gilles Neyens and Denis Zampunieris

Department of Computer Science, University of Luxembourg, Luxembourg

Keywords:

Decision Support System, System Software, Proactive Systems, Robotics.

Abstract:

Robots have to be able to function in a multitude of different situations and environments. To help them

achieve this, they are usually equipped with a large set of sensors whose data will be used in order to make

decisions. However, the sensors can malfunction, be influenced by noise or simply be imprecise. Existing

sensor fusion techniques can be used in order to overcome some of these problems, but we believe that data

can be improved further by computing context information and using a proactive rule-based system to detect

potentially conflicting data coming from different sensors. In this paper we will present the architecture and

scenarios for a generic model taking context into account.

1 INTRODUCTION

Robots generally have to be able to adapt to all kinds

of different situations. In order to manage this task,

they have to combine data from several different sen-

sors which will then be used by the robot to make de-

cisions. Sensor fusion is used to achieve this and re-

duce the uncertainty the resulting information would

have in case the sensors were used individually.

Many of these techniques operate on a set of homogeneous sensors, while others operate on heterogeneous sensors, for example to calculate a more accurate position of the robot (Bostanci et al., 2018; Nemra and Aouf, 2010).

Similarly, there exists work on integrating a trust model into the data fusion process (Chen et al., 2017). The approach calculates a trust value for each sensor based on the historical data of the sensor, its current data, and a threshold representing the maximum allowed difference between the current data and the historical data.

While the previous method attributes trust or confidence values to sensors based on historical data, it relies only on the data of the same sensor. Other methods try to improve on this by taking context into account, such as (Tom and Han, 2014) or (Akbari et al., 2017), in which rules are used to adapt the parameters of a Kalman filter based on the current context.

The model we propose in this paper sits between the sensors and the final decision making of the robot, and works together with other fusion methods in order to improve the information that the robot receives. The goal is to reduce the probability of malfunctioning or corrupted sensors negatively affecting the robot. In a first step, the system uses classification algorithms tailored to each sensor in order to obtain a confidence/trust value for each sensor. In a second step, it uses a priori knowledge about relations between sensors and a proactive rule-based system to further refine the confidence for each sensor and potentially improve the data based on the current context.

In the next section, we give a quick overview of existing work in the field. In Section 3 we propose our model, and in Section 4 we explain the internal workings of the model in detail. Finally, we conclude and give some prospects for future work.

2 STATE OF THE ART

In the past years several sensor fusion methods have been used to aggregate data coming from different sensors. Depending on the level of fusion, different techniques have been used. In robotics, the most popular method for low-level data is the Kalman filter, which was developed in 1960 (Kalman, 1960), along with its variants, the extended Kalman filter and the unscented Kalman filter, which are often used in navigation systems (Hide et al., 2003; Sasiadek and Wang, 1999). Other methods, such as the particle filter, are also used.

Neyens, G. and Zampunieris, D.

Proactive Model for Handling Conflicts in Sensor Data Fusion Applied to Robotic Systems.

DOI: 10.5220/0007835504680474

In Proceedings of the 14th International Conference on Software Technologies (ICSOFT 2019), pages 468-474

ISBN: 978-989-758-379-7

Copyright © 2019 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

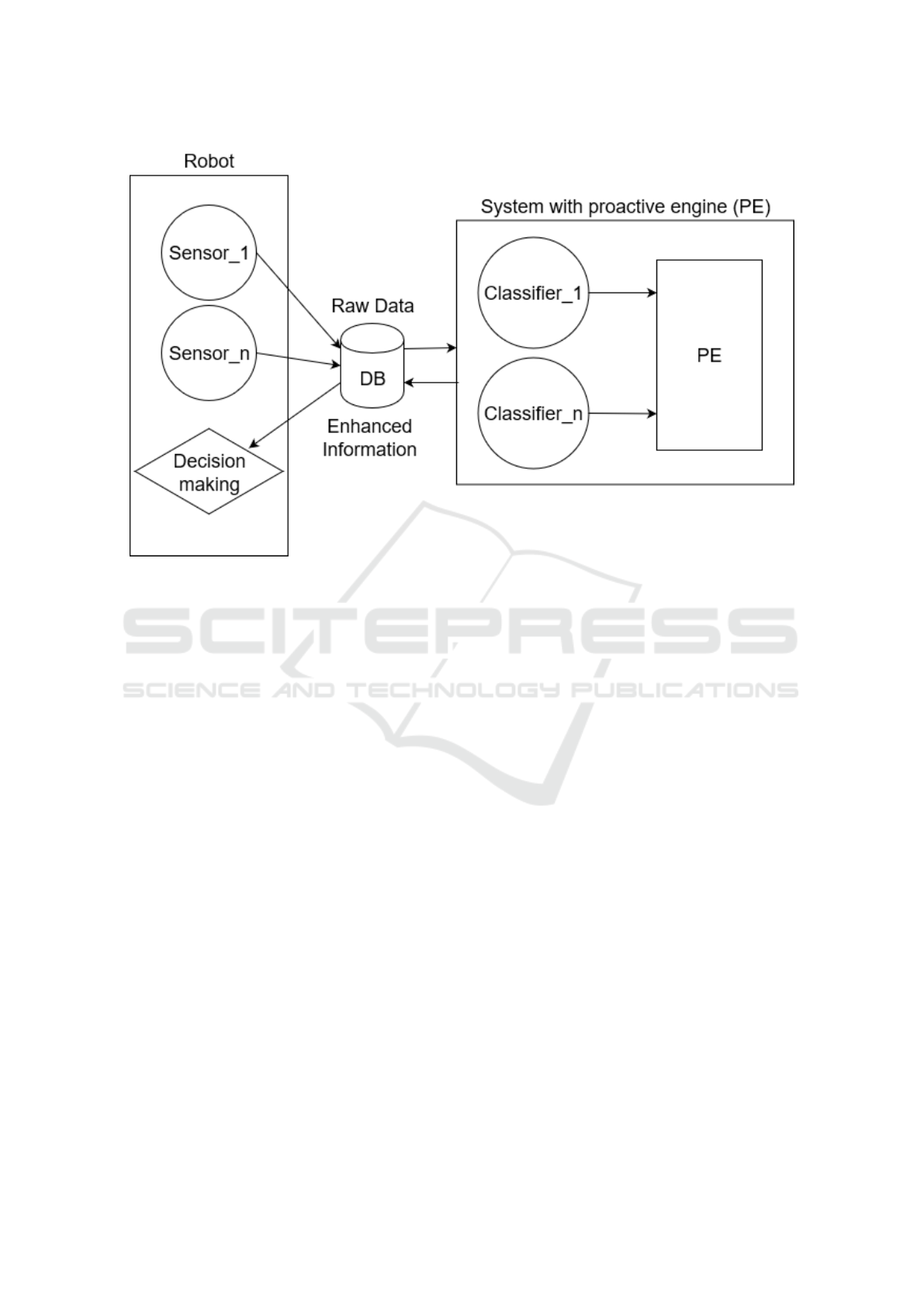

Figure 1: System architecture.

At the decision level, other methods such as the Dempster-Shafer theory (Dempster, 1968; Shafer, 1976) are used.

For the purpose of this paper, we focus on work done on Kalman and particle filters. Earlier studies that used either of these often did not take sensor failures and noise into account, which made these solutions vulnerable in harsh and uncertain environments. A recent study partly addressed these issues by using a particle filter method in combination with recurrent neural networks (Turan et al., 2018). This solution managed to correctly identify situations in which sensors were failing but did not yet take sensor noise into account. In (Bader et al., 2017) the authors propose a fault-tolerant architecture for sensor fusion using Kalman filters for mobile robot localization. The detection rate of the injected faults was 100%; however, the diagnosis and recovery rate was lower, at 60%. The study in (Yazdkhasti and Sasiadek, 2018) proposed two new extensions to the Kalman filter, the Fuzzy Adaptive Iterated Extended Kalman Filter and the Fuzzy Adaptive Unscented Kalman Filter, in order to make the fusion process more resistant to noise. In (Kordestani et al., 2018), the authors used the extended Kalman filter in combination with a Bayesian method and managed to detect and predict not only individual failures but also simultaneously occurring failures; however, no fault handling was proposed.

3 MODEL

3.1 Architecture

The general architecture of our model is shown in

Fig. 1. The sensors of the robot send their data to

our system where the classifiers attribute a confidence

value for each sensor individually (Neyens and Zam-

punieris, 2017). The proactive engine then uses the

results from the classifiers as well as the data in the

knowledge base in order to further check the trustwor-

thiness of the different sensors by detecting and re-

solving potential conflicts between sensors (Neyens,

2017; Neyens and Zampunieris, 2018).

The robot itself passes the data coming from the sensors to our system, where it is processed. The robot then receives enhanced data from our system, along with information on which sensors to trust, and uses both to make a decision.

In our system the data is first processed by classification algorithms, for example Hidden Markov Models or Neural Networks, in order to determine whether a sensor is failing or still working correctly, along with a confidence value for each sensor. The results of the classification are then passed to our rule-based engine, where they go through the series of steps shown in Fig. 2.

Table 1: Sensor registration table.

| Sensor name | List of properties | Minimum confidence | Grace period | History length |
|---|---|---|---|---|
| GPS | position, speed | 0.8 | 15 s | 1000 |
| Accelerometer | acceleration | 0.8 | 12 s | 800 |
| Light | lightlevel | 0.5 | 30 s | 50 |
| ... | ... | ... | ... | ... |

First we describe what information our system needs in order to fulfill its tasks. For every sensor, we need to know the variables shown in Table 1. The name of the sensor is its unique identifier. The list of properties contains the properties that can be computed from the data of a given sensor; for a GPS, for example, these would be position and speed. The minimum confidence is the lowest confidence value, as computed by the classifiers, at which the sensor is still trusted. The grace period is the time during which a sensor will still be used for computations after being deemed untrustworthy. The history length is the amount of past data kept for a given sensor.
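As a minimal sketch of how one row of Table 1 could be represented, the following Python snippet models a registered sensor and applies the minimum-confidence and grace-period semantics described above. The class name `SensorRegistration`, the method `is_usable` and the way the grace period is tracked with a timestamp are assumptions made for illustration; they are not taken from the authors' implementation.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Deque, List, Optional


@dataclass
class SensorRegistration:
    """One row of the sensor registration table (hypothetical layout)."""
    name: str                  # unique identifier of the sensor
    properties: List[str]      # properties computable from its data
    min_confidence: float      # below this value the sensor is not trusted
    grace_period_s: float      # seconds it stays usable once untrusted
    history_length: int        # number of past readings kept
    history: Deque[float] = field(init=False)
    untrusted_since: Optional[float] = field(default=None, init=False)

    def __post_init__(self) -> None:
        # A bounded deque drops the oldest reading automatically.
        self.history = deque(maxlen=self.history_length)

    def is_usable(self, confidence: float, now: float) -> bool:
        """Return True if the sensor may still be used in computations."""
        if confidence >= self.min_confidence:
            self.untrusted_since = None   # trusted again, reset the timer
            return True
        if self.untrusted_since is None:
            self.untrusted_since = now    # first untrusted reading
        return now - self.untrusted_since <= self.grace_period_s


# The GPS row of Table 1.
gps = SensorRegistration("GPS", ["position", "speed"], 0.8, 15.0, 1000)
```

With this sketch, a GPS whose confidence drops below 0.8 remains usable for 15 seconds and is excluded afterwards until its confidence recovers.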

In order for the system to know how different

properties affect each other or how they can be cal-

culated we need a knowledge base for these proper-

ties. The properties can either affect other properties

(e.g. a low light level could affect the camera and thus

image recognition modules) or they can be calculated

based on past data and other properties (e.g. position).

For the first case, a range of values is defined for each

property that defines the allowed values for a property

before it starts to affect other properties. For the sec-

ond case, a list of operations is needed, that allows the

system to know how to use past data and other prop-

erties to calculate an estimate of the given property.
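The two kinds of knowledge-base entries described above could be sketched as follows. This is only an illustration: the class `PropertyKnowledge`, the numeric range for the light level, the property name `image_recognition` and the one-dimensional position estimate are all assumptions and do not come from the model itself.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional, Tuple


@dataclass
class PropertyKnowledge:
    """Knowledge-base entry for one property (hypothetical structure)."""
    name: str
    # Case 1: values outside this range start to affect other properties.
    allowed_range: Optional[Tuple[float, float]] = None
    affects: List[str] = field(default_factory=list)
    # Case 2: operation estimating the property from past data and other
    # properties; the dictionary-based signature is an assumption.
    estimate: Optional[Callable[[Dict[str, float]], float]] = None

    def affected_properties(self, value: float) -> List[str]:
        """Properties influenced by `value`; empty while it is in range."""
        if self.allowed_range is None:
            return []
        low, high = self.allowed_range
        return [] if low <= value <= high else list(self.affects)


knowledge_base = {
    # Illustrative range: a light level below 50 affects image recognition.
    "lightlevel": PropertyKnowledge(
        "lightlevel", allowed_range=(50.0, 10_000.0),
        affects=["image_recognition"]),
    # Illustrative operation: one-dimensional estimate x + v * dt.
    "position": PropertyKnowledge(
        "position",
        estimate=lambda p: p["last_position"] + p["speed"] * p["dt"]),
}
```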

Finally, the scenarios described in section 4 should

be able to synchronize between themselves and oper-

ate on the same data as the scenarios in the previous

steps of the flow did. For this, we divide the data into

chunks and attribute a number called execution num-

ber to each chunk. This number along with the execu-

tion step number then allows each scenario to decide

on which data it should currently operate.
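To illustrate how the execution number and the execution step number can select the data a scenario operates on, the sketch below keys every stored value by sensor, property, execution number and execution step. The class `ChunkStore` is a hypothetical in-memory stand-in for the shared data store; only the idea of the four-part key is taken from the text.

```python
from typing import Dict, Optional, Tuple

# (sensor, property, execution number, execution step)
Key = Tuple[str, str, int, int]


class ChunkStore:
    """In-memory stand-in for the shared data store (an assumption)."""

    def __init__(self) -> None:
        self._rows: Dict[Key, Tuple[float, float]] = {}

    def insert_data(self, value: float, sensor: str, prop: str,
                    confidence: float, execution_number: int,
                    execution_step: int) -> None:
        """Store a value and its confidence for one chunk and step."""
        key = (sensor, prop, execution_number, execution_step)
        self._rows[key] = (value, confidence)

    def check_data_exists(self, sensor: str, prop: str,
                          execution_number: int,
                          execution_step: int) -> bool:
        """True if a previous step already produced data for this chunk."""
        return (sensor, prop, execution_number, execution_step) in self._rows

    def get_data(self, sensor: str, prop: str, execution_number: int,
                 execution_step: int) -> Optional[Tuple[float, float]]:
        """Return (value, confidence), or None if nothing was stored."""
        return self._rows.get(
            (sensor, prop, execution_number, execution_step))


store = ChunkStore()
# A scenario of step 1 saves its result for chunk 7.
store.insert_data(1.2, "infrared_sensor", "distance", 0.9, 7, 1)
```

A scenario of a later step would poll `check_data_exists` with its own step number and only start once the earlier step has written a result for the same chunk.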

3.2 Proactive System

We use Artificial Neural Networks (ANNs) to first

analyse the data, but only basing the model on them

would make it very difficult to follow decisions. Also,

as we want the system to be able to react to all kinds of

different contexts, training the ANNs would be very

difficult. Therefore we believe that using a rule-based

system is better suited for this task. In our model we

use the proactive engine developed in our team over

the years.

The concept of proactive computing was introduced in 2000 by Tennenhouse (Tennenhouse, 2000) as systems working for and on behalf of the user on their own initiative (Salovaara and Oulasvirta, 2004). Based on this concept, a proactive engine (PE), which is a rule-based system, was developed (Zampunieris, 2006). The rules running on the engine can be conceptually grouped into scenarios, with each scenario grouping rules that achieve a common goal (Zampunieris, 2008; Shirnin et al., 2012). A Proactive Scenario is the high-level representation of a set of Proactive Rules that is meant to be executed on the PE. It describes a situation and a set of actions to be taken in case some conditions are met (Dobrican et al., 2016).

The PE executes these rules periodically. The system consists of two FIFO queues called currentQueue and nextQueue. The currentQueue contains the rules that need to be executed at the current iteration, while the nextQueue contains the rules that were generated during the current iteration. At the end of each iteration the rules from the nextQueue are added to the currentQueue and the nextQueue is emptied.

A rule consists of any number of input parameters and five execution steps (Zampunieris, 2006). Each of these five steps has a different role in the execution of the rule.

1. Data acquisition

During this step the rule gathers data that is impor-

tant for its subsequent steps. This data is provided

by the context manager of the proactive engine,

which can obtain this data from different sources

such as sensors or a simple database.

2. Activation guards

The activation guards check, based on the context information, whether or not the conditions and actions parts of the rule should be executed. If the checks pass, the activated variable of this rule is set to true.

3. Conditions

The objective of the conditions is to evaluate the

context in greater detail than the activation guards.

If all the conditions are met as well, the Actions

part of the rule is unlocked.

4. Actions

This part consists of a list of instructions that will

be performed if the activation guards and condi-

ICSOFT 2019 - 14th International Conference on Software Technologies

470

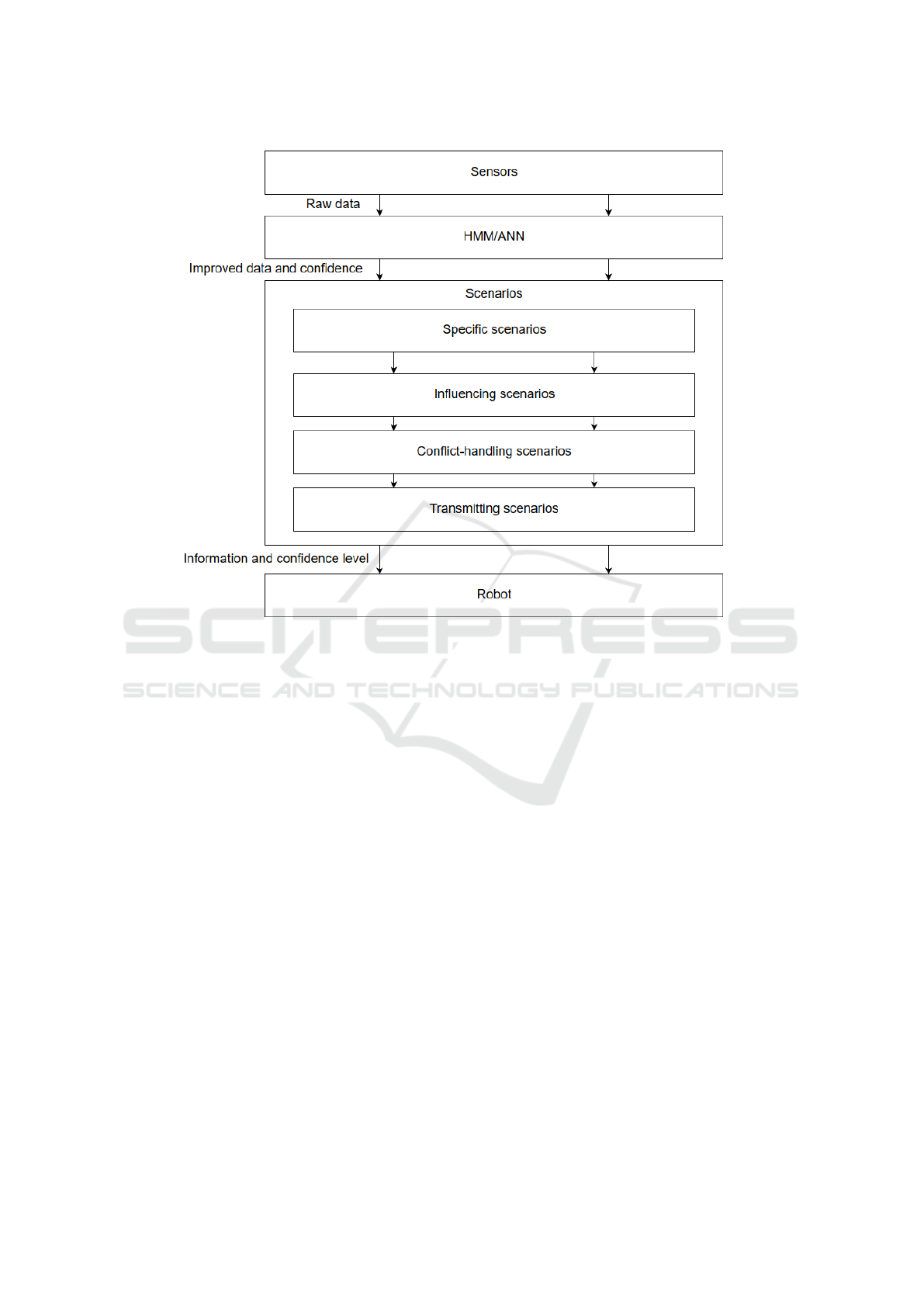

Figure 2: Scenario Flow.

tion tests are passed.

5. Rule generation

The rule generation part will be executed indepen-

dently whether the activation guards and condi-

tion checks were passed or not. I this section the

rule creates other rules in the engine or in some

cases just clones itself.

    data = getData(dataRequest);
    activated = false;
    if (checkActivationGuards()) {
        activated = true;
        if (checkConditions()) {
            executeActions();
        }
    }
    generateNewRules();
    discardRuleFromSystem(currentRule);

Figure 3: The algorithm to run a rule.

During an iteration of the PE, the rules are executed one by one. The algorithm to execute a rule is presented in Fig. 3. The data acquisition part of the rule is run first, and if it fails, none of the other parts of the rule are executed. The rules can then be grouped into scenarios. Multiple scenarios can run at the same time and can trigger the activation of other scenarios and rules.
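The interplay between the two queues and the five execution steps can be sketched in a few lines of Python. This is an illustrative reconstruction under stated assumptions, not the engine itself: the class and method names are hypothetical, `rules_generation` returns the new rules as a list instead of adding them to the engine directly, and `CountingRule` is a toy rule that only exists to exercise the loop.

```python
from collections import deque
from typing import Deque, Iterable, List


class Rule:
    """Base rule with the five execution steps; subclasses override them."""

    def data_acquisition(self) -> bool:
        return True          # False signals that the acquisition failed

    def activation_guards(self) -> bool:
        return True

    def conditions(self) -> bool:
        return True

    def actions(self) -> None:
        pass

    def rules_generation(self) -> List["Rule"]:
        return []            # rules to execute at the next iteration


class ProactiveEngine:
    def __init__(self, initial_rules: Iterable[Rule] = ()) -> None:
        self.current_queue: Deque[Rule] = deque(initial_rules)
        self.next_queue: Deque[Rule] = deque()

    def run_rule(self, rule: Rule) -> None:
        if not rule.data_acquisition():
            return           # acquisition failed: skip all other parts
        if rule.activation_guards() and rule.conditions():
            rule.actions()
        # Rule generation runs whether or not the checks passed.
        self.next_queue.extend(rule.rules_generation())

    def iterate(self) -> None:
        while self.current_queue:
            # popleft() fetches the rule and discards it from the queue.
            self.run_rule(self.current_queue.popleft())
        # End of iteration: generated rules become the current rules.
        self.current_queue.extend(self.next_queue)
        self.next_queue.clear()


class CountingRule(Rule):
    """Toy rule that clones itself until it has run `limit` times."""

    def __init__(self, log: List[int], limit: int, run: int = 1) -> None:
        self.log, self.limit, self.run = log, limit, run

    def actions(self) -> None:
        self.log.append(self.run)

    def rules_generation(self) -> List[Rule]:
        if self.run < self.limit:
            return [CountingRule(self.log, self.limit, self.run + 1)]
        return []


log: List[int] = []
engine = ProactiveEngine([CountingRule(log, limit=3)])
for _ in range(5):
    engine.iterate()
```

Because every rule is discarded after it has run, a rule that should stay active has to re-create itself during rule generation, which is what the toy rule does until its limit is reached.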

4 SCENARIOS

Fig. 2 describes the flow of the data in the system. The data is first passed from the sensors of the robot to our system, where the classifiers assign a confidence value to each sensor. It is then passed on to the rule-based system, where the confidences are updated and the data is improved using different scenarios. Finally, the data and confidence levels are sent back to the robot. This section is dedicated to the different types of scenarios running on the rule-based engine.

4.1 Scenario Flow

1. Specific scenarios

During this step scenarios specifically designed

for a single sensor try to further improve the data

and/or confidence of the sensor.

2. Influencing scenarios

This is the first step in which context information

will be taken into account in order to adapt the

confidence of some sensors. Data coming from

some sensors as well as their confidence will be

used in order to compute the context and to decide

whether the confidence of related sensors should

be adapted.

3. Conflict-handling scenarios

In a third step, the system resolves the conflicts that can occur between the data/information coming from different sensors. Those conflicts can occur either because several sensors give data for the same property (e.g. distance to an object based on infrared and ultrasonic sensors) or because the property that a sensor gives data for can also be computed from several other sensors (e.g. position). Based on the confidence attributed to the sensors in the previous steps, the system then decides which sensors to trust.

4. Transmitting scenarios

Finally, the output information and confidence levels coming from our system are transferred to the robot, either through the initial sensor channels or through an additional virtual sensor channel if the robot's configuration allows it.

4.2 Implementation

In the system itself, the scenarios are implemented as

a set of rules. They can be put into 3 main categories.

1. Check for data

These rules check whether a scenario has any data to operate on and trigger the next rules in the scenario if this is the case. The skeleton for these rules is generic; its pseudocode can be seen in Fig. 4.

2. Computation

These rules are specific for every step described in

the previous section and can be very different de-

pending on the step, sensor and property they are

operating on. An example of a computation rule

is shown in Fig. 5 which computes how the con-

fidence of an infrared sensor is changed based on

the amount of light around the robot which could

potentially affect the accuracy of the infrared dis-

tance sensor.

3. Save data

These rules simply save the results from the com-

putation in an appropriate manner. The skeleton

for these rules is generic as well and the pseudo

code is shown in Fig. 6.
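The computation rule of Fig. 5 relies on an `updateConfidence()` function whose formula is not given in this paper. One possible choice, shown purely as an illustration, scales the infrared confidence down in proportion to how far the light level exceeds a threshold and to how much the light sensor itself is trusted. The function body and the parameters `threshold` and `saturation`, including their default values, are assumptions.

```python
def update_confidence(infrared_confidence: float,
                      light_confidence: float,
                      light_level: float,
                      threshold: float = 1000.0,
                      saturation: float = 5000.0) -> float:
    """Lower the infrared confidence when trusted light readings are high.

    Hypothetical formula: the penalty grows linearly from 0 at `threshold`
    to 1 at `saturation` and is weighted by the light sensor's confidence.
    """
    if light_level <= threshold:
        return infrared_confidence       # light too low to interfere
    # Fraction of the way from the threshold to saturation, capped at 1.
    excess = min((light_level - threshold) / (saturation - threshold), 1.0)
    # Only penalise as far as the light sensor itself is trusted.
    penalty = excess * light_confidence
    return infrared_confidence * (1.0 - penalty)
```

Weighting the penalty by the confidence of the influencing sensor keeps an untrusted light sensor from degrading an otherwise healthy infrared sensor.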

    dataAcquisition() {
        dataExists = checkDataExists(sensor, property, execution_number,
                                     EXECUTION_STEP);
    }

    boolean activationGuards() {
        return dataExists;
    }

    boolean conditions() {
        return true;
    }

    actions() {
        this.execution_number++;
    }

    boolean rulesGeneration() {
        addRule(self);
        startScenarios(execution_number, EXECUTION_STEP);
        return true;
    }

Figure 4: Generic check data rule.

4.3 Example

In this section, we discuss an example with multiple sensors that could lead to conflicting information that needs to be resolved. The situation is the following: a GPS has been working correctly until recently, but it has now started to malfunction, giving a slightly inaccurate position because the GPS antenna is coated in ice. The system executes its loop:

1. As the position is only slightly inaccurate, the

classifiers still attribute a high confidence value to

the GPS.

2. For the same reason, the specific sensor scenarios

do not detect that anything is wrong and do not

change the confidence value.

3. One of the influencing scenarios uses the temperature sensor and the camera. It detects that the temperature is below 0 and that there might be snow. Because the confidences of these sensors are high enough, it concludes that these conditions might have an effect on the GPS and thus updates the confidence of the GPS by calling the updateConfidence() function as shown in Fig. 5.

4. One of the conflict-handling scenarios compares the current values given by the GPS to a value calculated from the old position, speed, acceleration and direction, and detects that the discrepancy between the two is above a threshold. As the GPS confidence is currently quite low, the average of the confidences of the sensors used to calculate the estimated position is higher, and every single one of them is above a minimum threshold, the values for the position given by the GPS are replaced by the calculated ones.

5. Finally, the calculated values are passed to the robot.

    dataAcquisition() {
        light_data = getData("light_sensor", "lightlevel",
                             execution_number, EXECUTION_STEP);
        infrared_data = getData("infrared_sensor", "distance",
                                execution_number, EXECUTION_STEP);
    }

    boolean activationGuards() {
        return true;
    }

    boolean conditions() {
        return getLightLevel(light_data) > threshold;
    }

    actions() {
        infrared_confidence = getConfidence(infrared_data);
        light_confidence = getConfidence(light_data);
        light_level = getLightLevel(light_data);
        new_confidence = updateConfidence(infrared_confidence,
                                          light_confidence, light_level);
        addRule(SaveRule(getValue(infrared_data), "infrared_sensor",
                         "distance", new_confidence, execution_number,
                         EXECUTION_STEP + 1));
    }

    boolean rulesGeneration() {
        this.execution_number++;
        return true;
    }

Figure 5: Influencing rule.
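The decision taken by the conflict-handling scenario in this example can be sketched as follows. The snippet is a simplified illustration under stated assumptions: positions are one-dimensional so that direction is ignored, the function names `dead_reckoning` and `resolve_position` are hypothetical, and the thresholds in the usage lines are made-up values.

```python
from statistics import mean
from typing import Sequence, Tuple


def dead_reckoning(old_position: float, speed: float,
                   acceleration: float, dt: float) -> float:
    """Estimate the new position from the old one (1-D, constant accel.)."""
    return old_position + speed * dt + 0.5 * acceleration * dt * dt


def resolve_position(gps_position: float, gps_confidence: float,
                     estimate: float,
                     estimate_confidences: Sequence[float],
                     discrepancy_threshold: float,
                     min_confidence: float) -> Tuple[float, str]:
    """Return the position to forward and the source it came from."""
    if abs(gps_position - estimate) <= discrepancy_threshold:
        return gps_position, "gps"       # no conflict detected
    # Every sensor behind the estimate must be above the minimum ...
    all_trusted = all(c > min_confidence for c in estimate_confidences)
    # ... and on average they must be more trusted than the GPS.
    if all_trusted and mean(estimate_confidences) > gps_confidence:
        return estimate, "estimate"
    return gps_position, "gps"


# Iced GPS antenna: low GPS confidence, trusted speed/acceleration sensors.
estimate = dead_reckoning(old_position=90.0, speed=4.0,
                          acceleration=2.0, dt=2.0)
position, source = resolve_position(
    gps_position=107.0, gps_confidence=0.4, estimate=estimate,
    estimate_confidences=[0.9, 0.8, 0.85],
    discrepancy_threshold=2.0, min_confidence=0.7)
```

In this run the estimate replaces the GPS value; if any contributing sensor fell below the minimum confidence, the GPS value would be kept instead.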

    dataAcquisition() {
    }

    boolean activationGuards() {
        return true;
    }

    boolean conditions() {
        return true;
    }

    actions() {
        insertData(value, sensor, property, confidence,
                   execution_number, EXECUTION_STEP);
    }

    boolean rulesGeneration() {
        return true;
    }

Figure 6: Save rule.

5 CONCLUSION

In this paper, we proposed a generic model for context-based conflict handling in sensor data fusion. In this model, we use proactive scenarios running on a rule-based engine, in addition to a layer of classifiers, in order to improve the quality and the trust level of the information that the robot finally uses to make its decisions and reach its objectives.

The proposed model takes the context of the robot

and its environment into account, which allows it to

better adapt to changes in the overall situation than

traditional sensor fusion techniques on their own.

6 FUTURE WORK

As some robots in the real world have to be able to operate in different situations, in the next steps we want to verify and validate different properties of our generic model, such as robustness and resilience. These properties are among the main ones requested for such robots, and they are two important properties that our model tries to provide with respect to decision-making based on data flows from multiple sensors. They can be tested by deliberately making sensors fail or malfunction and checking whether the output is correct. To give us full control over and knowledge of the experiments, they will be carried out in the simulation environment Webots. This also allows us to generate the amount of data necessary to train the first layer of the model.

REFERENCES

Akbari, A., Thomas, X., and Jafari, R. (2017). Automatic

noise estimation and context-enhanced data fusion of

imu and kinect for human motion measurement. In

2017 IEEE 14th International Conference on Wear-

able and Implantable Body Sensor Networks (BSN),

pages 178–182. IEEE.

Bader, K., Lussier, B., and Schön, W. (2017). A fault tolerant architecture for data fusion: A real application of Kalman filters for mobile robot localization. Robotics and Autonomous Systems, 88:11–23.

Bostanci, E., Bostanci, B., Kanwal, N., and Clark, A. F.

(2018). Sensor fusion of camera, gps and imu using

fuzzy adaptive multiple motion models. Soft Comput-

ing, 22(8):2619–2632.

Chen, Z., Tian, L., and Lin, C. (2017). Trust model of wire-

less sensor networks and its application in data fusion.

Sensors, 17(4):703.

Dempster, A. P. (1968). A generalization of bayesian infer-

ence. Journal of the Royal Statistical Society. Series

B (Methodological), 30(2):205–247.

Dobrican, R.-A., Neyens, G., and Zampunieris, D. (2016).

A context-aware collaborative mobile application for

silencing the smartphone during meetings or impor-

tant events. International Journal On Advances in In-

telligent Systems, 9(1&2):171–180.

Hide, C., Moore, T., and Smith, M. (2003). Adaptive

kalman filtering for low-cost ins/gps. The Journal of

Navigation, 56(1):143–152.

Kalman, R. E. (1960). A new approach to linear filtering

and prediction problems. Transactions of the ASME–

Journal of Basic Engineering, 82(Series D):35–45.

Kordestani, M., Samadi, M. F., Saif, M., and Khorasani, K.

(2018). A new fault prognosis of mfs system using in-

tegrated extended kalman filter and bayesian method.

IEEE Transactions on Industrial Informatics, pages

1–1.

Nemra, A. and Aouf, N. (2010). Robust ins/gps sensor fu-

sion for uav localization using sdre nonlinear filtering.

IEEE Sensors Journal, 10(4):789–798.

Neyens, G. (2017). Conflict handling for autonomic systems. In 2017 IEEE 2nd International Workshops on Foundations and Applications of Self* Systems (FAS*W), pages 369–370. IEEE.

Neyens, G. and Zampunieris, D. (2017). Using hidden Markov models and rule-based sensor mediation on wearable eHealth devices. In Proceedings of the 11th International Conference on Mobile Ubiquitous Computing, Systems, Services and Technologies, Barcelona, Spain 12-16 November 2017. IARIA.

Neyens, G. I. F. and Zampunieris, D. (2018). A rule-based

approach for self-optimisation in autonomic ehealth

systems. In ARCS Workshop 2018; 31th Interna-

tional Conference on Architecture of Computing Sys-

tems, pages 1–4. VDE.

Salovaara, A. and Oulasvirta, A. (2004). Six modes of

proactive resource management: a user-centric typol-

ogy for proactive behaviors. In Proceedings of the

third Nordic conference on Human-computer interac-

tion, pages 57–60. ACM.

Sasiadek, J. and Wang, Q. (1999). Sensor fusion based on

fuzzy kalman filtering for autonomous robot vehicle.

In Robotics and Automation, 1999. Proceedings. 1999

IEEE International Conference on, volume 4, pages

2970–2975. IEEE.

Shafer, G. (1976). A Mathematical Theory of Evidence.

Princeton University Press.

Shirnin, D., Reis, S., and Zampunieris, D. (2012). Design of

proactive scenarios and rules for enhanced e-learning.

In Proceedings of the 4th International Conference on

Computer Supported Education, Porto, Portugal 16-

18 April, 2012, pages 253–258. SciTePress–Science

and Technology Publications.

Tennenhouse, D. (2000). Proactive computing. Communi-

cations of the ACM, 43(5):43–50.

Tom, K. and Han, A. (2014). Context-based sensor selec-

tion. US Patent 8,862,715.

Turan, M., Almalioglu, Y., Gilbert, H., Araujo, H., Cemgil,

T., and Sitti, M. (2018). Endosensorfusion: Particle

filtering-based multi-sensory data fusion with switch-

ing state-space model for endoscopic capsule robots.

In 2018 IEEE International Conference on Robotics

and Automation (ICRA), pages 1–8. IEEE.

Yazdkhasti, S. and Sasiadek, J. Z. (2018). Multi sensor

fusion based on adaptive kalman filtering. In Ad-

vances in Aerospace Guidance, Navigation and Con-

trol, pages 317–333, Cham. Springer International

Publishing.

Zampunieris, D. (2006). Implementation of a proactive learning management system. In Proceedings of "E-Learn-World Conference on E-Learning in Corporate, Government, Healthcare & Higher Education", pages 3145–3151.

Zampunieris, D. (2008). Implementation of efficient proac-

tive computing using lazy evaluation in a learning

management system (extended version). International

Journal of Web-Based Learning and Teaching Tech-

nologies, 3:103–109.
