Towards a Functional and Technical Architecture for e-Exams

Nour El Mawas¹, Jean-Marie Gilliot², Maria Teresa Segarra Montesinos², Marine Karmann² and Serge Garlatti²

¹ CIREL (EA 4354), University of Lille, Lille, France

² LabSTICC, IMT Atlantique, Brest, France

Keywords: Lifelong Learning, e-Exam, Certification’s Authentication, Programming Course.

Abstract: In the context of lifelong learning, student learning is online or computer-mediated. However, schools and universities still use traditional paper-based evaluations, even when technological environments and learning management systems are used during lectures and exercises. This paper proposes a functional and technical e-exam solution that allows learners and students to take e-exams in university classrooms and dedicated centres. We evaluate our approach in an object-oriented programming and databases course. The experimental study involved students from the first and second year of a Master's degree in an engineering school. The results show that (1) students' knowledge is better assessed during the e-exam, (2) the technical environment is easier to master than the paper environment, and (3) students are able to apply the competencies developed during the lessons in the e-exam. This research work is dedicated to the Education and Computer Science communities, and more specifically to directors of learning centres and university departments, and to information and communication technology for education services (pedagogical engineers), who encounter difficulties in evaluating students in a secure environment.

1 INTRODUCTION

Lifelong learning is an important asset and a subject of educational policies in Europe, including the development of key competencies (Council of the European Union 2006). In order to support lifelong learning, assessment needs to be seen as an indispensable aspect of lifelong learning (Boud, 2000). This includes formative and summative assessment, so that learners can support their own learning. While Boud proposes the principle of sustainable assessment, in which formative assessment is central as a key enabler, he also acknowledges the need for assessment for certification purposes.

Meanwhile, certification opportunities have become more accessible, notably because of the development of MOOCs. More than 100 million students have now signed up for at least one MOOC (Class Central 2018). Certification is now an available opportunity for lifelong learners, especially from an employability perspective.

However, the development of effective certification models has hardly been considered. Fluck (2019) acknowledges that the literature on e-exams is very scarce. Peer assessment is better suited to formative than to summative assessment (Falchikov and Goldfinch, 2000). Proctoring (Morgan and Millin, 2011) is only one of the many solutions that can be proposed to ensure fair assessment. Key issues for e-exams include learner authentication (Smiley, 2003) and control of the fairness of the exam environment.

Rytkönen and Myyry (2014) identify four main types of e-exams. When the time and place of the exam are defined by the organization, the electronic exams are called either computer classroom exams or Bring Your Own Device (BYOD) exams. If the time is restricted but not the place, the exams are online exams. When the exam room is always the same but the students can select when to take the exam, the exams are called electronic exam room exams. Finally, if the time and place are both free within a time period, the exams are called online exam periods or online assignments. In our context, the e-exam is related to computer classroom exams, with the idea that the classroom is not only the university’s classroom: it can be an exam center room outside the campus.
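The four-way typology described above can be read as a decision over two constraints, whether the time is fixed and whether the place is fixed. The following is a minimal sketch of that reading; the names `ExamType` and `classify_exam` are illustrative assumptions and do not come from the cited work.

```python
from enum import Enum


class ExamType(Enum):
    # Labels follow the wording of the typology; the enum itself is illustrative.
    COMPUTER_CLASSROOM_OR_BYOD = "computer classroom exam or BYOD exam"
    ONLINE_EXAM = "online exam"
    EXAM_ROOM = "electronic exam room exam"
    ONLINE_PERIOD = "online exam period or online assignment"


def classify_exam(time_fixed: bool, place_fixed: bool) -> ExamType:
    """Map the two organisational constraints to one of the four exam types."""
    if time_fixed and place_fixed:
        return ExamType.COMPUTER_CLASSROOM_OR_BYOD
    if time_fixed:
        return ExamType.ONLINE_EXAM
    if place_fixed:
        return ExamType.EXAM_ROOM
    return ExamType.ONLINE_PERIOD


if __name__ == "__main__":
    # The setting considered in this paper: time and place are both defined.
    print(classify_exam(time_fixed=True, place_fixed=True).value)
```

Under this reading, the exams considered in this paper fall in the first category, with the place being either a university classroom or an external exam center.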

El Mawas, N., Gilliot, J., Montesinos, M., Karmann, M. and Garlatti, S.

Towards a Functional and Technical Architecture for e-Exams.

DOI: 10.5220/0007861205730581

In Proceedings of the 11th International Conference on Computer Supported Education (CSEDU 2019), pages 573-581

ISBN: 978-989-758-367-4

Copyright © 2019 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

In this contribution, we propose an original architecture, a hybrid between online assessment and more classical exam rooms in universities, enabling the dissemination of e-exam opportunities in third places.

The paper is organized as follows. Section 2 presents research work done in the area of online environments and e-exams. Section 3 presents existing e-exam solutions. Section 4 details our functional and technical architecture for e-exams. Section 5 introduces our case study. Section 6 presents the results of the case study. Section 7 summarizes the conclusions of this paper and presents its perspectives.

2 RELATED WORK

In this section, we present important features that

need to be in an e-exam solution.

Casey et al. (2005) identify three types of activities that must be handled to ensure academic integrity in the online environment, namely (i) improper access to resources during assessment, (ii) plagiarism and (iii) contract cheating, meaning using a paid or unpaid surrogate for course assessment. The authors propose a review of techniques to deter academic dishonesty, concluding that for formative exams all techniques should be used.

Among these techniques, online proctoring services like ProctorU (Morgan and Millin, 2011) are the most advanced solution, including detection techniques against improper use of external resources and learner authentication to counter contract cheating. This kind of solution provides a flexible schedule for the student. However, the candidate must be able to isolate himself in a quiet room with an effective network connection, which is not possible in all cases.

The process of authentication is commonly

completed through the use of logon user identification

and passwords, and the knowledge of the password is

assumed to guarantee that the user is authentic

(Ramzan, 2007). However, authentication cannot be

viewed as an effective mechanism for verifying user

identity because a password can be shared between

users. That is why Bailie and Jortberg (2019) outline

the importance of the learner’s identity verification in

e-exams.

According to Baron and Crooks (2005), requiring students to complete exams in a proctored testing center is probably the best way to avoid the possibility of cheating. Some universities provide exam rooms that ensure authentication and an environment similar to paper exams, but this solution cannot scale up to broader access: such rooms are available only for specific sessions with a specific schedule, in which all candidates must take the same exam.

Fluck (2019) recently proposed a review of e-exam solutions. He also notes that assessment integrity is a very sensitive issue, and proposes a similar list of software techniques. Other features acknowledged are (i) accessibility, to provide equitable access for students with disabilities, and (ii) architecture and affordance for higher order thinking. Concerning accessibility, he observes that it has been tackled by allowing students with disabilities to use a computer, sometimes with an additional time allowance. This practice continues to be relevant in the e-exam context.

In order to achieve higher order thinking assessment, Fluck notes that using quizzes generally leads to lower order thinking assessments, and advocates the use of rich media documents and professional software. In the domain of software development, Wyer and Eisenbach (1999) provide an extensive solution that aims to ensure assessment integrity while providing the same software environment during practice and exams.

Dawson (2016) confirms that in an e-exam, students must have either no internet access or controlled access to the internet, depending on the context. Students must not have access to any file or resource via the network or Universal Serial Bus (USB), or via a prohibited program such as screen sharing or chat tools. Pagram et al. (2018) clarify the importance of the auto-saving feature in an e-exam. The e-exam solution can offer auto-saving or saving to the cloud. This improves exam efficiency, addresses student problems with the PC and protects student data in the event of a crash.

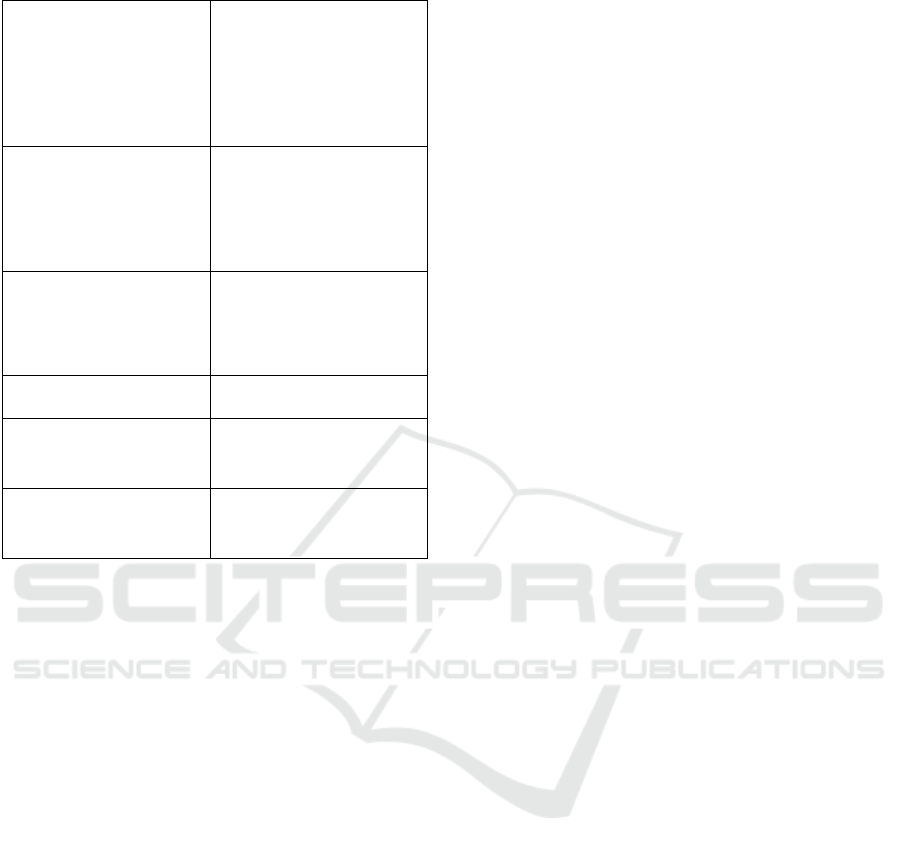

Table 1: Important features for an e-exam solution.

| Feature code | Feature label |
|---|---|
| F1 | control the access to the internet / local resources |
| F2 | authentication |
| F3 | learner’s identity verification |
| F4 | regular backup of learners’ answers |
| F5 | same software environment during practice |

The works reviewed above enable us to define important features for an e-exam solution (see Table 1). The solution must control access to the internet and to local resources. It must ensure authentication and verification of the learner’s identity. It must also enable regular backup of learners’ answers in case of a technical problem during the e-exam. The solution must allow the use of rich media documents and


professional software providing the same software

environment during practice and exams.

3 EXISTING e-EXAM SOLUTIONS

In this section, we consider existing e-exam solutions.

He (2006) presents a web-based educational

assessment system by applying Bloom’s taxonomy to

evaluate student learning outcomes and teacher

instructional practices in real time. In the Test

Module, the instructor can design a new test or query

existing tests. The system performance is rather

encouraging with experimentation in science and

mathematics courses of two high schools.

Guzmán and Conejo (2005) proposed an online

examination system called System of Intelligent

Evaluation using Tests for Tele-education (SIETTE).

SIETTE is a web-based environment to generate and

construct adaptive tests. It can include different types

of items: true/false, multiple choice, multiple

response, fill-in-the-blank, etc. It can be used for

instructional objectives, via combining adaptive

student self-assessment test questions with hints and

feedback. SIETTE incorporates several security

mechanisms, such as test access restrictions by

groups, Internet protocol (IP) addresses, or users.

Exam (Rytkönen, 2015) is a web-based system

used by a consortium of 20 universities in Finland

where the teacher and students get different types of

webpage. Also, the exams in the Exam system are

constructed so as to be managed on the server. Exam

is composed of an examining system and an exam

video monitoring system. All the classrooms and

computers have their individual IP addresses. When

the student enters the exam room, the video

monitoring turns automatically on, and the building

janitors are able to see the live stream. The exam

situation is also recorded so that the teacher is able to

watch the exam situation afterwards, if there is a

reason to suspect cheating or other issues.

Another example of e-exam systems is the

Educational Testing Service (ETS) (Stricker, 2004)

that is used to run the International English Language

Testing System (IELTS), the Graduate Record

Examination (GRE) and the Test of English as a

Foreign Language (TOEFL). These tests evaluate

every year the ability to use and understand English

of millions of people from hundreds of different

countries. Formerly deployed as traditional paper-

based supervised tests, they have been reengineered

to be computer-based.

Learning Management Systems (LMSs) like

Moodle provide an environment where teachers can

maintain course materials and assignments (Kuikka et

al., 2014). These LMSs support features for

examination purposes, but they are not actually

intended for e-exams. For example, Moodle has no

separate exam feature, but does contain tasks and

objects that could be used for e-assessment such as

assignment and test activities.

For the sake of clarity, S1 refers to the web-based

educational assessment system by applying Bloom’s

taxonomy, S2 to SIETTE, S3 to Exam, S4 to ETS,

and S5 to LMSs.

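Table 2: Comparison between existing e-exam solutions and our required features.

| | F1 | F2 | F3 | F4 | F5 |
|---|---|---|---|---|---|
| S1 | - | ✓ | - | - | - |
| S2 | ✓ | ✓ | - | - | - |
| S3 | ✓ | ✓ | ✓ | - | - |
| S4 | - | ✓ | - | ✓ | - |
| S5 | - | ✓ | - | - | - |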

Table 2 shows that no existing e-exam solution provides all the required features listed in Table 1. This led us to design a functional and technical solution for e-exams. In the next section, we detail our approach, which covers all these features and provides innovative solutions in this domain.
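The gap analysis of Table 2 can be checked mechanically. The sketch below transcribes the check marks of Table 2 into a dictionary and lists, for each surveyed system, the features it lacks. The helper names `missing_features` and `complete_systems` are illustrative assumptions.

```python
FEATURES = ["F1", "F2", "F3", "F4", "F5"]

# Features covered by each surveyed system, transcribed from Table 2.
COVERAGE = {
    "S1": {"F2"},
    "S2": {"F1", "F2"},
    "S3": {"F1", "F2", "F3"},
    "S4": {"F2", "F4"},
    "S5": {"F2"},
}


def missing_features(system: str) -> list[str]:
    """Return the required features that the given system does not cover."""
    return [f for f in FEATURES if f not in COVERAGE[system]]


def complete_systems() -> list[str]:
    """Return the systems that cover every required feature."""
    return [s for s in COVERAGE if not missing_features(s)]


if __name__ == "__main__":
    for system in COVERAGE:
        print(system, "lacks", missing_features(system))
    print("systems covering all features:", complete_systems())
```

With the data of Table 2, no system covers all five features, and F5 (same software environment during practice) is missing from every surveyed system.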

4 OUR SOLUTION

Our aim is to propose a hybrid solution that gives flexible access to proctored e-exams in third places such as libraries, town halls and Fabrication Laboratories (FabLabs). To this end, we provide a mobile system that ensures learner authentication, assessment integrity and access to any available exam, in various dedicated environments that enable higher order thinking assessment. Local authorized persons will ensure classical face-to-face proctoring.

The system is composed of a box, namely the Classroom Server, which hosts a web server, acts as a gateway, provides an authentication facility and allows regular backup of learners’ answers. Candidates connect their device to this Classroom Server in order to be allowed to participate. This two-sided client/server architecture makes it possible to propose a modular architecture with different options depending on examination needs.


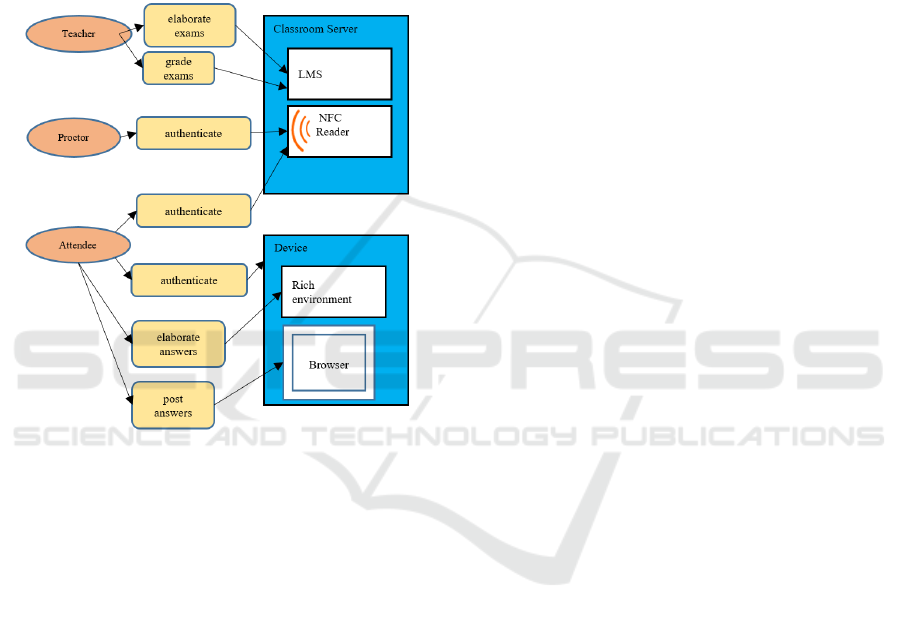

4.1 Our Functional Architecture

As Casey et al. (2005) noted, all parts of the architecture must integrate mechanisms in order to ensure academic integrity. Our functional architecture is depicted in Figure 1.

Teachers can provide courses and prepare exams on a reference Learning Management System (LMS) environment, and the Classroom Server synchronizes itself with this environment in order to provide courses and exams locally when deployed.

Figure 1: Our functional architecture.

In order to start an exam session, an authorized proctor authenticates to the Classroom Server through a secure authentication system. After this initialization, attendees are authorized to enter the session. They first provide proof of identity to the secure authentication system (an NFC reader) in order to be acknowledged as candidates. Secondly, they choose an available exam on their device and connect to it. Using the device, they are allowed to use the software environment needed to prepare their answers and to access relevant resources on the Classroom Server LMS. They can post their answers to the LMS via a standard browser. Attendees’ data in the software environment and posted answers are regularly saved on a separate storage in the Classroom Server.
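The session flow described above (proctor opens the session, candidates check in, choose an exam, post answers, answers are backed up) can be sketched as a small in-memory model. This is only an illustration under simplifying assumptions: the class `ExamSession` and all of its methods are hypothetical, identifiers stand in for real credentials, and no actual NFC reader or LMS is involved.

```python
class ExamSession:
    """Toy model of the flow: proctor opens, candidates check in, then submit."""

    def __init__(self, authorized_proctors, registrations):
        self.authorized_proctors = set(authorized_proctors)
        # registrations: candidate id -> exam ids the candidate may take
        self.registrations = {c: set(e) for c, e in registrations.items()}
        self.open = False
        self.checked_in = set()
        self.answers = {}   # (candidate, exam) -> latest posted answer
        self.backups = []   # snapshots kept apart from the live answers

    def start(self, proctor_id):
        """Only an authorized proctor may open the session."""
        if proctor_id not in self.authorized_proctors:
            raise PermissionError("unknown proctor")
        self.open = True

    def check_in(self, candidate_id):
        """Stands in for presenting an ID proof to the authentication system."""
        if not self.open:
            raise RuntimeError("session not started by a proctor")
        if candidate_id not in self.registrations:
            raise PermissionError("candidate not registered")
        self.checked_in.add(candidate_id)

    def available_exams(self, candidate_id):
        if candidate_id not in self.checked_in:
            raise PermissionError("candidate has not checked in")
        return sorted(self.registrations[candidate_id])

    def post_answer(self, candidate_id, exam_id, answer):
        if exam_id not in self.available_exams(candidate_id):
            raise PermissionError("exam not available to this candidate")
        self.answers[(candidate_id, exam_id)] = answer

    def backup(self):
        """Copy the current answers; a real system would call this periodically."""
        self.backups.append(dict(self.answers))


if __name__ == "__main__":
    session = ExamSession({"proctor-1"}, {"alice": {"oop-db"}})
    session.start("proctor-1")
    session.check_in("alice")
    session.post_answer("alice", "oop-db", "first draft")
    session.backup()
    print(session.backups)
```

The ordering constraints are the point of the sketch: a candidate cannot check in before a proctor has opened the session, and cannot post answers before checking in.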

Access to resources is controlled on the Classroom Server side, by giving access to relevant documentation and by controlling access to the web. If necessary, candidates’ devices may run a controlled environment in order to prevent access to local resources. Cheating by communicating with others is technically prevented by controlling connections, as the Classroom Server is the gateway of the classroom. In addition, proctors are able to monitor screens directly. Where appropriate, the Classroom Server can work offline, preventing all external connections.
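The gateway role described above can be illustrated with an allowlist check. The sketch below is an assumption about how such filtering could be expressed, not a description of the actual Classroom Server: the class `Gateway`, the host name `classroom.local` and the example hosts are all hypothetical.

```python
from urllib.parse import urlparse


class Gateway:
    """Decide whether a request may leave the classroom network."""

    def __init__(self, allowed_hosts, offline=False, local_host="classroom.local"):
        self.allowed_hosts = {h.lower() for h in allowed_hosts}
        self.offline = offline          # offline mode blocks every external host
        self.local_host = local_host    # hypothetical name of the local server

    def is_allowed(self, url: str) -> bool:
        host = (urlparse(url).hostname or "").lower()
        if host == self.local_host:
            return True                 # local LMS resources are always reachable
        if self.offline:
            return False
        return host in self.allowed_hosts


if __name__ == "__main__":
    online = Gateway({"docs.example.org"})
    print(online.is_allowed("https://docs.example.org/api"))
    print(online.is_allowed("https://chat.example.com/"))
```

In online mode only the hosts chosen by the teacher pass through; in offline mode only resources hosted on the local server remain reachable.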

The Classroom Server provides an authentication

system ensuring surrogate avoidance at a similar or

better level than paper exams.

As the system is hosted in a public place, the attendee does not need to provide facilities such as a quiet room and an effective network connection. Moreover, he is allowed to take any exam he is registered for.

Finally, the device is standard, meaning that it provides standard accessibility features, rich media interactions, and possibly access to professional software similar to that provided during practice.

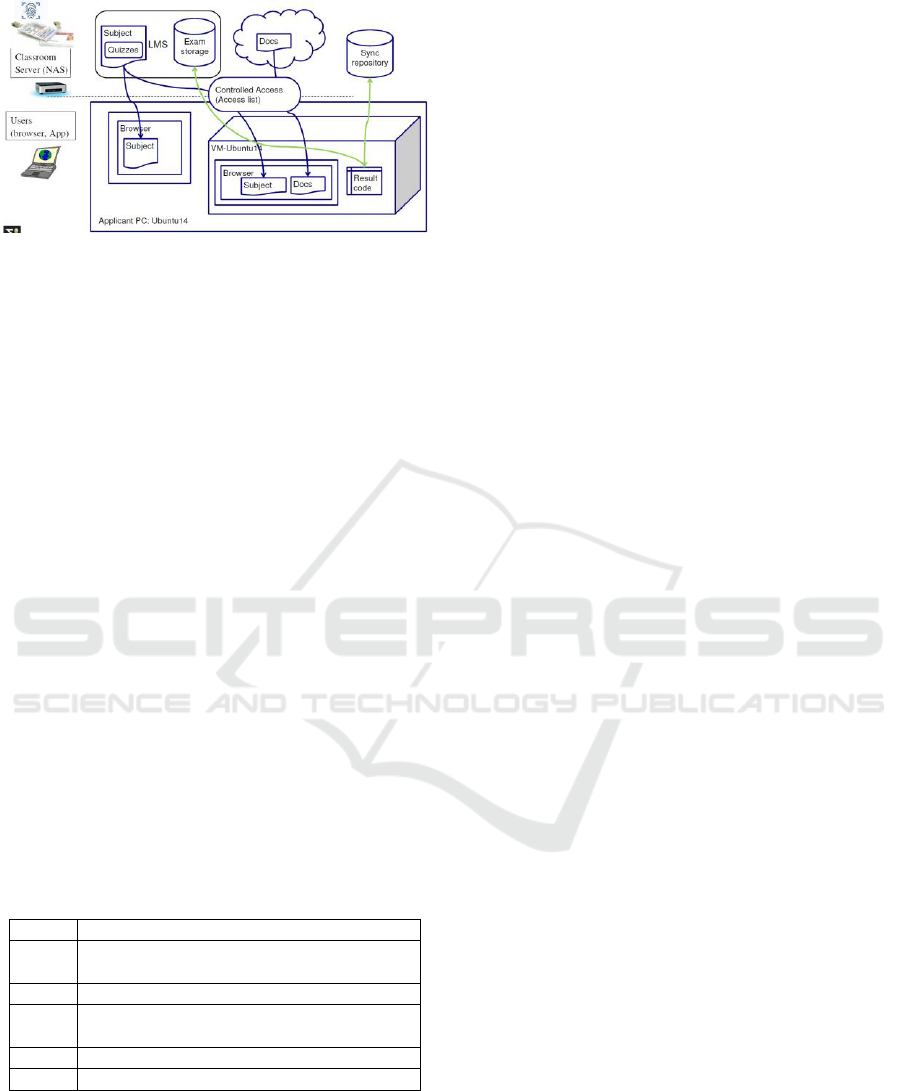

4.2 Our Technical Architecture

We have designed a proof-of-concept architecture based on the MOOCTAB results (El Mawas et al., 2018). Technically, the Classroom Server solution is based on the MOOCTAB box system, which permits local offline access to MOOCs and later synchronization with online MOOCs.

The authentication is based on visual verification by local proctors. Additionally, an RFID reader is provided that can check an ID card and verify that the candidate applied to an e-exam. This authentication grants the attendee access to the e-exam for a specified time.
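The card check followed by time-limited access can be sketched as follows. This is a minimal illustration under stated assumptions: `AccessGrant` and `grant_access` are hypothetical names, the card identifier is assumed to have already been read by the RFID reader, and the applications are held in a plain dictionary.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class AccessGrant:
    candidate_id: str
    exam_id: str
    expires_at: datetime

    def is_valid(self, now: datetime) -> bool:
        """A grant is valid strictly before its expiry time."""
        return now < self.expires_at


def grant_access(card_id, exam_id, applications, now, duration):
    """Return a grant if the card holder applied to the exam, otherwise None."""
    if exam_id not in applications.get(card_id, set()):
        return None
    return AccessGrant(card_id, exam_id, now + duration)


if __name__ == "__main__":
    applications = {"card-1": {"oop-db"}}   # hypothetical registration data
    start = datetime(2019, 1, 1, 9, 0)
    grant = grant_access("card-1", "oop-db", applications, start, timedelta(hours=2))
    print(grant.is_valid(start + timedelta(hours=1)))
```

Visual verification by the proctor remains the primary check; the grant only encodes that the card holder applied to the exam and for how long access stays open.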

The MOOCTAB box hosts a MOOC LMS server, namely edX. The attendee can then connect to this server to access the examination instructions and all necessary resources (documents, simulators, editors, results upload, etc.).

If the Classroom Server is offline, these will be the only resources available to the attendee. Otherwise, the Classroom Server serves as a gateway that filters the available external resources.

The edX server can be synchronized with an external edX server. This means that any interaction in a MOOC session can later be synchronized with an online edX server. This enables seamless processing of the e-exam on an edX server providing all necessary facilities for correction and for the use of marks.

A synchronization service may be provided to guarantee that the attendee does not lose information during the examination if he works on his own device.
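Deferred synchronization between a local server and an online one is often modelled as a queue of pending events that is flushed when a connection is available. The sketch below illustrates this general idea only; it does not use or describe any edX API, and `SyncQueue` and the `send` callback are hypothetical.

```python
class SyncQueue:
    """Keep locally recorded events until they can be delivered upstream."""

    def __init__(self):
        self._pending = []

    def record(self, event):
        """Store an event locally, regardless of connectivity."""
        self._pending.append(event)

    def flush(self, send):
        """Deliver pending events in order; stop at the first connection failure."""
        sent = 0
        while self._pending:
            try:
                send(self._pending[0])
            except ConnectionError:
                break                   # keep this event and the following ones
            self._pending.pop(0)
            sent += 1
        return sent

    @property
    def pending(self):
        return list(self._pending)


if __name__ == "__main__":
    queue = SyncQueue()
    queue.record({"candidate": "alice", "exam": "oop-db", "answer": "v1"})
    delivered = []
    print(queue.flush(delivered.append), "event(s) delivered")
```

An event is removed from the queue only after `send` returns without error, so nothing recorded while offline is lost when the connection drops in the middle of a flush.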


Figure 2: Our technical architecture.

The attendee’s device can be any device relevant for the assessment. The MOOCTAB project was specifically dedicated to tablets, meaning that the preferred device was a tablet. This enables local authentication, which has to be compliant with the server side, for example fingerprint recognition corresponding to the ID card.

In our experiment, we aimed to adapt a professional software environment. Thus the attendee’s device was a PC with a professional IDE (Eclipse). In this case, we chose to boot the PC on a specific Linux distribution in order to control the environment, which is similar to the one provided during practice. This solution is similar to the one proposed by Wyer and Eisenbach (1999). As the management of the attendees is done by the Classroom Server, our solution is more flexible: neither specific room preparation nor assignment of attendees to specific PCs is required. In order to be identified, the attendee has to connect to the LMS server to access his assessment.

Providing two different environments, tablets and software development PCs, demonstrates the modularity of the proposed solution.

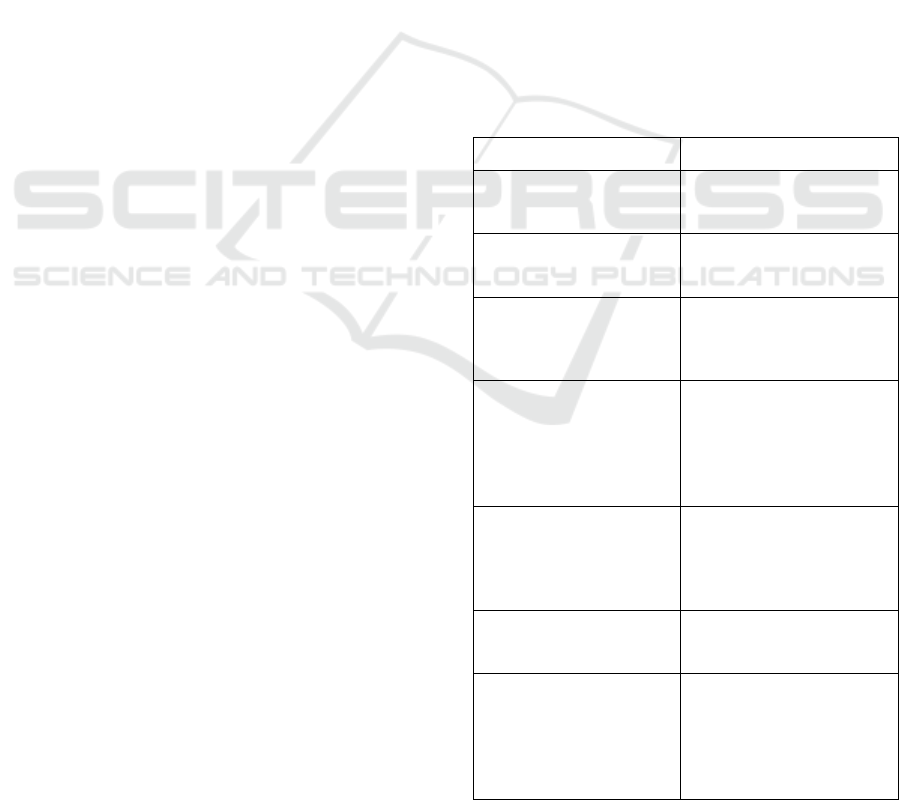

Table 3: Correspondence between our solution and the required features.

| | Our solution |
|---|---|
| F1 | Classroom Server (online and offline modes) |
| F2 | Logon user identification and password |
| F3 | Fingerprint recognition + ID card with NFC reader |
| F4 | Classroom Server Separate storage |
| F5 | Any software environment |

Table 3 shows the correspondence between our solution and the required features. Access control to the internet and to local resources is ensured by the online and offline modes of the Classroom Server. Attendees are authenticated via a logon user identification and password. Fingerprint recognition and an ID card with an NFC reader allow verification of the learner’s identity. The Classroom Server separate storage provides regular backup of learners’ answers. Our proposed solution can host any software environment, so the teacher can choose to use the same software environment in the e-exam as during practice.

5 CASE STUDY

5.1 Overview of Our Case Study

We have used and evaluated the MOOCTAB platform to manage the exam of an 84-hour object-oriented programming and databases course. The course is followed by students in the first and second year of a Master's degree in an engineering school.

The course considers two aspects when

developing object-oriented (OO) applications that

access a database: analysis and design (of the OO

program and the database) and programming and

database utilization.

Since the course was first offered, its evaluation has mainly consisted of a traditional paper-based, proctored exam. The exam always includes multiple choice questions (MCQs) to evaluate knowledge, and exercises to evaluate a subset of the skills developed during the course: problem analysis, solution design, and programming and database utilization. Evaluating paper-based MCQs can be error-prone but can easily be automated. Exercises to evaluate skills are very different in nature.

On one hand, answers to problem analysis and

solution design exercises are expected to be graphical

diagrams; and no particular tool is recommended to

be used to create them during the course. Evaluating

them on a paper-based, proctored exam seems to be

“aligned with skills” developed during the course.

On the other hand, answers to programming and database use exercises are expected to consist of lines of code or to deal with issues encountered when programming (mainly resolving bugs and compilation errors), and students are provided with special tools that help with such tasks during the course. Evaluating them on a paper-based, proctored exam raises two important issues:

1. Alignment with skills is not guaranteed as

students are not provided with the same tools as

during the course,

2. Very frequently, students are not rigorous

enough to properly write code making its evaluation

difficult and error-prone.

We decided to tackle these issues by evolving the

exam and in particular exercises to evaluate skills.


Problem analysis and design exercises remain unchanged. Programming exercises are designed so that students use a computer to do them.

5.2 Design of e-Exam Content

Three types of exercises were chosen for the e-exam.

They are presented below.

Type 1: Exercises to Write Code from a Program Design: Students were given a UML class diagram and a UML sequence diagram (well-known, widely used standards to describe the structure and dynamics of a program) and they had to write (part of) the corresponding code. These exercises evaluate students on their understanding of the standards used and their ability to write code that conforms to a specification.

These exercises were not different from the ones on the paper-based exam. We expected better-written lines of code thanks to the use of the computer.

Type 2: Exercises to Give a Solution for a Particular Compilation Error: These are two-step exercises: we ask students to give their interpretation of the error (why does the error occur?) and a solution. These exercises evaluate students’ skills in solving such errors while understanding them. Indeed, the tools used to write programs help to solve compilation errors.

Even if these exercises were not different from the ones on the paper-based exam, error interpretation is much more important in an e-exam. Indeed, the programming tool used by students (like all programming tools) already proposes a solution to a compilation error. Therefore, students are able to give a solution (the one proposed by the tool) without understanding why it works.

Type 3: Exercises to Write Code to Access a Relational Database: Relational databases are the most common type of database used by programs. The language used to access them is SQL, which has been an ANSI and ISO standard since the mid-1980s. For such exercises, we intend to evaluate students’ skills in writing SQL queries that are correct and minimal (the simplest query among all the possible ones).
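To illustrate what "correct and minimal" can mean in practice, the sketch below runs two SQL queries that return the same rows, one of which contains a redundant subquery. The `student` table and its contents are invented for this illustration and are not taken from the actual exam; the example uses Python's standard `sqlite3` module with an in-memory database.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Hypothetical schema and data, used only for this illustration.
conn.executescript("""
    CREATE TABLE student (id INTEGER PRIMARY KEY, name TEXT, year INTEGER);
    INSERT INTO student (name, year) VALUES ('Ada', 1), ('Linus', 2), ('Grace', 2);
""")

# Correct but not minimal: the subquery selects from the same table
# and adds nothing to the filter.
verbose_query = (
    "SELECT name FROM student "
    "WHERE id IN (SELECT id FROM student WHERE year = 2) "
    "ORDER BY name"
)

# Correct and minimal: the same filter expressed directly.
minimal_query = "SELECT name FROM student WHERE year = 2 ORDER BY name"

verbose_rows = conn.execute(verbose_query).fetchall()
minimal_rows = conn.execute(minimal_query).fetchall()

if __name__ == "__main__":
    print(verbose_rows == minimal_rows)
```

Both queries are correct in the sense that they return the same result; only the second would count as minimal under the criterion stated above.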

5.3 Case Study Settings

The evaluation included a group of four volunteer students who were assessed using the MOOCTAB exam environment. The examination took place in class, outside the normal hours of study. Once the e-exam was taken, the researchers wanted to assess the affordance, acceptability and overall experience of the students as they prepared for and took the exam. To do so, the researchers prepared an online questionnaire and interviewed the students who volunteered to explain their answers to the questionnaire.

The questions are presented in Table 4 and are

organized to evaluate three important criteria: the

students’ knowledge in the e-exam, the e-exam

technical environment, and the applied competencies

in the e-exam.

Questions 1 to 5 were related to the first evaluation criterion: they specifically tested the overall opinion of the students about e-exams and their self-efficacy estimations of how they dealt with the exam itself. Questions 6 to 9 were related to the second evaluation criterion and more specifically tested aspects such as how the students generally perceived their performance during the exam. Finally, questions 10 to 13 were related to the third evaluation criterion and evaluated more generally how the students felt about generalizing the e-exam system.

Table 4: Survey questions.

| Question | Answer scale |
|---|---|
| Q1. What’s your general opinion about e-exam? | Not favorable; Neutral; Favorable |
| Q2. What’s your level of competencies in computational sciences? | Bad; average; Good |
| Q3. Do you think you managed to mobilize the necessary knowledge to pass this exam? | Disagree; Not really; Agree |
| Q4. Do you think the e-exam allows you to show better that you master the knowledge of the course than in the paper examination? | No; a bit; Yes |
| Q5. If you had to compare a classical paper examination and this e-exam would you say that you are: | Not favorable; Neutral; Favorable |
| Q6. When you found out that you’ll have to take an e-exam, you were: | Anxious; Neutral; Enthusiastic |
| Q7. Take an exam in similar conditions to those experienced in class was for you: | An element of difficulty; Had no effect on my answers; An element of facilitation |
| Q8. During the e-exam, would you say you were: | Less at ease than with paper exams; Neither less nor more at ease than with paper exams; More at ease than with paper exams |
| Q9. The developing environment (Eclipse) seemed to you: | Hard to work with; A bit hard to work with; Neither hard nor easy to work with; A bit easy to work with; Easy to work with |
| Q10. Have you encountered difficulties to upload your file on EdX? | Not at all; Some difficulties; Yes; I was unable to upload my file |
| Q11. Why did you accept to take an e-exam? | (Free text) |
| Q12. Was the e-exam meeting your expectations? | No; A bit; Yes |
| Q13. Would you like for every exam to be organized this way | No; A bit; Yes |

6 CASE STUDY RESULTS ANALYSIS

All participants have a positive overall view of e-exams (Q.1). Three of them rate their skill level in computational sciences as good and one of them considers his skill level “average” (Q.2). They all believe that they succeeded in mobilizing the necessary skills (Q.3). Two of them estimated that the digital environment helped them “a bit” to better show their skill in computational sciences than the traditional examination, and the two others did not perceive any difference in that respect (Q.4). Three of them were favorable to the e-exam in comparison with a traditional examination, and one participant was neutral (Q.5). Three of them were excited to have a digital exam, but it should be noted that one of the participants stated that he was worried about the examination (Q.6). Two of them said that the digital test was a facilitating factor in their examination, while the other two felt that it had no effect on their answers (Q.7 and Q.8). All also found the Eclipse environment easy to use (Q.9). Technically speaking, two of them reported no difficulty in uploading their document to edX, one found it difficult, and another was unable to do it (Q.10). The interview highlighted the fact that the instructions were not clearly given, so that at least one of the participants misused the digital environment. All agreed to participate in order to further develop these kinds of initiatives and to generalize the use of screens. For one of them: "That's the way computer science exams should be done." (Q.11). Regarding their expectations, the digital examination met them "a little" for one of them and "quite" for the other three (Q.12). All would like to see the system extended to all computer exams (Q.13). These generally positive remarks are reflected in the oral interviews, where the students reiterate their appreciation of the care taken to reproduce environments that are closer to the conditions of the exercises in the practical work. They also reiterate their desire to see the system extended to all exams in computer courses. Based on our evaluation criteria (see Section 5.3), we analyze below the results of our case study.
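With four participants, the questionnaire results are easiest to read as counts per answer. The sketch below encodes the counts reported above for the questions whose answers map unambiguously onto the scales of Table 4; this mapping is our assumption, and questions whose reported wording does not match a single scale label (for example Q.9 and Q.10) are left out. The names `REPORTED` and `share` are illustrative.

```python
from collections import Counter

# Answer counts as reported in the text, for four participants.
# The mapping of the reported wording to scale labels is an assumption.
REPORTED = {
    "Q1": {"Favorable": 4},
    "Q2": {"Good": 3, "average": 1},
    "Q3": {"Agree": 4},
    "Q5": {"Favorable": 3, "Neutral": 1},
    "Q6": {"Enthusiastic": 3, "Anxious": 1},
    "Q13": {"Yes": 4},
}


def share(question: str, answer: str) -> float:
    """Fraction of participants who gave the specified answer to a question."""
    counts = Counter(REPORTED[question])
    return counts[answer] / sum(counts.values())


if __name__ == "__main__":
    for question, counts in REPORTED.items():
        print(question, dict(counts))
    print("Q5 favorable share:", share("Q5", "Favorable"))
```

Given the sample size, these shares are descriptive only and should not be read as statistical evidence.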

6.1 Students’ Knowledge in the e-Exam

Based on these results, we have some evidence that students' knowledge is better assessed (Q.1 to Q.5) during the digital exam than with a traditional exam. Most of the students' responses were favorable in terms of greater ease in mobilizing their knowledge, so this better alignment appears to be conducive to better quality evaluation, at least from the point of view of the students. Indeed, during the interviews, all expressed their general appreciation of a system that allowed them to better demonstrate their knowledge: "for sure I was more comfortable than if it had been on paper, on paper, it's longer ... well, it's simpler and then it's also more logical", "When you think about it, what's the point of doing a digital exam on paper?". This result is consistent with Biggs (1996): students’ knowledge is better assessed during the e-exam because this type of exam is more aligned with the way lessons are organized.

6.2 The e-Exam Technical Environment

Overall, the technical environment was not at all a source of difficulty for students. On the contrary, apart from the elements related to the clarification of instructions (see next section), they tended to say that the digital evaluation environment was a facilitating element for producing their answers. The fact that it is the same environment as the one used in class naturally played a role, and most of the time during


the interviews, the students underlined that they were comfortable with the tools: "This is the environment we know, we just had to authenticate, it was clear and we used to work like that." When asked if they were comfortable during the exam, they gave evasive answers that make their feedback difficult to interpret: "Yes ... It was not that complicated", "I felt rather comfortable...". This difficulty in answering the question could come from the fact that no clear difficulty was identified and that computer work comes naturally to them, so that the situation was not exceptional. We can deduce that this familiarity with the digital environment is a point on which we must rely to put students in optimal examination situations that do not add additional difficulties.

6.3 Applied Competencies in the e-Exam

According to the interviews and the questionnaire

results, it seems that the participants were better

able to use the skills developed in the courses during

the digital exam than they would have been in a paper

exam. Indeed, during the interview, one of the

participants stressed the importance of "developing

solutions like this for other courses that do practical

work on machines", in order to end up in an

examination situation "close to the courses", and

thus pedagogically relevant. One of the

participants even showed a particular appetite for

innovative evaluation devices: "I loved the peer

review at a MOOC I followed, I thought it was great

[...] it would be necessary for the teachers to test more

things like that!". We find the same kind of remarks

in the questionnaire, where one of the participants

specified: "That's the way computer science exams

should be done.", "Because it could be interesting for

the future students of info and practice for the

MOOC" (Q. 11).

It should be noted, however, that it is important to

state the instructions clearly, since the students

deplored their lack of clarity, which negatively

affected the experience and the quality of the work

submitted by one of them (Q. 10). During the interview,

the main problem identified by three of the

participants was precisely related to the need to

clarify the instructions verbally and not to rely on the

fact that the instructions are already written in the

exam document: "The

biggest problem is the distinction of the question from

the statement. Speak orally to read the instructions. It

would be nice for the teachers to be more explicit

about the expectations of the exam." One of the

participants even remarked to us: "I did not think

about reading the instructions, it was not clear that it

was necessary to use the digital environment". These

verbatim quotes highlight the fact that the human

relationship remains important in the context of

digital examination. The students encountered

difficulties in understanding the expectations and

would have liked the teacher to be clearer about what

to do. In conclusion, it can be noted that students are

better able to apply the skills developed during the

lessons when they are exposed to an evaluation

situation similar to those experienced during the

lessons. However, these results must be weighed

against the need to clearly explain the expectations

and the technical aspects of the exercise.

To conclude, we can say that it would be wise to

extend the experiment to a larger class group,

because the acceptability, the affordance and the

feeling of success of the participants are overall very

encouraging.

7 CONCLUSIONS

This study addresses the problem of using e-exams

to evaluate students. The main questions of the study

are how to control access to the internet / local

resources, how to authenticate learners and verify

their identity, which approaches allow regular backup

of learners' answers, and how to promote the use in

the e-exam of the same software environment as during

practice.

We investigated the problem from its theoretical

background, and we considered existing e-exam

solutions in order to see whether any existing approach

could meet our requirements. Unfortunately, none of

them meets all of our needs. We therefore proposed

our approach as a functional and technical solution to

this problem. With this solution, access to the

internet / local resources is controlled via the

classroom server. Authentication is ensured by the

logon user identifier and password. Identity

verification is provided by fingerprint recognition

and an ID card read with an NFC reader. Regular

backups of learners' answers are made on the

separate storage of the classroom server. Our proposed

solution places no constraint on the software

environment, which is why the teacher can use in the

e-exam the same software environment as during

practice. Our solution provides a flexible way to take

proctored exams, combining the subject diversity of

online systems with the face-to-face facilities of exam

rooms (isolation and access control). From a technical

point of view, this approach is lighter than online

proctoring systems.
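
As an illustration of the regular backup of learners' answers mentioned above, the following minimal Python sketch copies a learner's working directory into numbered, timestamped snapshots on a separate storage location. It is only a sketch under stated assumptions: the function names (`backup_answers`, `run_periodic_backup`), the directory layout and the backup interval are hypothetical and are not taken from the deployed solution.

```python
import shutil
import time
from pathlib import Path


def backup_answers(work_dir: Path, storage_dir: Path, learner_id: str) -> Path:
    """Copy the learner's working directory into a new snapshot folder.

    Hypothetical helper: the layout <storage_dir>/<learner_id>/<snapshot>
    is an assumption made for this sketch.
    """
    base = storage_dir / learner_id
    base.mkdir(parents=True, exist_ok=True)
    # Number the snapshots so that two backups taken within the same
    # second never target the same folder.
    index = len(list(base.iterdir()))
    stamp = time.strftime("%Y%m%dT%H%M%S")
    target = base / f"{index:04d}-{stamp}"
    shutil.copytree(work_dir, target)
    return target


def run_periodic_backup(work_dir: Path, storage_dir: Path, learner_id: str,
                        interval_s: float, rounds: int) -> list:
    """Take `rounds` snapshots, waiting `interval_s` seconds between them."""
    snapshots = []
    for _ in range(rounds):
        snapshots.append(backup_answers(work_dir, storage_dir, learner_id))
        time.sleep(interval_s)
    return snapshots
```

For example, `run_periodic_backup(Path("exam_work"), Path("/mnt/separate_storage"), "learner42", 300, 24)` would take a snapshot every five minutes during a two-hour exam; both paths and the identifier are placeholders. Keeping full snapshots rather than overwriting a single copy makes it possible to recover an earlier state of the answers if a workstation fails.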

CSEDU 2019 - 11th International Conference on Computer Supported Education


Our solution was tested with students from the first

and second year of a Master's degree in an engineering

school. The results show that students' knowledge is

better assessed during the digital exam than with a

traditional exam. Moreover, students did not encounter

any difficulties related to the e-exam environment. In

addition, students were better able to use the skills

developed in the courses during the digital exam than

they would have been in a paper exam.

We will now deploy our solution in different

universities and engineering schools in order to

evaluate our approach on a large scale. This research

work has broad impact because the proposed e-exam

solution can be easily adapted to support different

programs and disciplines. We also want to collect

traces about student results in the e-exam in order to

better understand the learning process and improve

the method of teaching and the evaluation process in

a lifelong learning perspective.

ACKNOWLEDGEMENTS

This research was supported by the ITEA 2 Massive

Online Open Course Tablet (MOOCTAB) project.

REFERENCES

Bailie, J. L., and Jortberg, M. A. (2009). Online learner

authentication: Verifying the identity of online users.

Journal of Online Learning and Teaching, 5(2), 197-

207.

Baron, J., and Crooks, S. M. (2005). Academic integrity in

web based distance education. TechTrends, 49(2), 40-

45.

Biggs, J. (1996). Enhancing teaching through constructive

alignment. Higher education, 32(3), 347-364.

Boud, D. (2000). Sustainable assessment: Rethinking

assessment for the learning society. Studies in

Continuing Education, 22(2), 151-167. DOI:

10.1080/713695728

Casey, K., Casey, M., and Griffin, K. (2018). Academic

integrity in the online environment: teaching strategies

and software that encourage ethical behavior.

Copyright 2018 by Institute for Global Business

Research, Nashville, TN, USA, 58.

Class Central (2018). By the numbers: MOOCs in 2018.

Retrieved December 2018 from

https://www.class-central.com/report/mooc-stats-2018/

Council, E. (2006). Recommendation of the European

Parliament and the Council of 18 December 2006 on

key competencies for lifelong learning. Brussels:

Official Journal of the European Union, 30(12), 2006.

Dawson, P. (2016). Five ways to hack and cheat with

bring-your-own-device electronic examinations.

British Journal of Educational Technology, 47(4), 592-

600.

El Mawas, N., Gilliot, J. M., Garlatti, S., Euler, R., and

Pascual, S. (2018). Towards Personalized Content in

Massive Open Online Courses. In 10th International

Conference on Computer Supported Education.

SCITEPRESS-Science and Technology Publications.

Falchikov, N., and Goldfinch, J. (2000). Student peer

assessment in higher education: A meta-analysis

comparing peer and teacher marks. Review of

educational research, 70(3), 287-322.

Fluck, A. E. (2019). An international review of e-exam

technologies and impact. Computers and Education,

132, 1-15.

Kuikka, M., Kitola, M., and Laakso, M. J. (2014). Challenges

when introducing electronic exam. Research in

Learning Technology, 22.

Morgan, J., and Millin, A. (2011). Online proctoring

process for distance-based testing. U.S. Patent

Application No. 13/007,341, July 21, 2011.

Pagram, J., Cooper, M., Jin, H., and Campbell, A. (2018).

Tales from the Exam Room: Trialing an E-Exam

System for Computer Education and Design and

Technology Students. Education Sciences, 8(4), 188.

Ramzan, R. (2007, May 17). Phishing and Two-Factor

Authentication Revisited. Retrieved March 2, 2019,

from https://www.symantec.com/connect/blogs/phishing-and-two-factor-authentication-revisited

Rytkönen, A. and Myyry, L. (2014). Student experiences

on taking electronic exams at the University of

Helsinki. In Proceedings of World Conference on

Educational Media and Technology 2014, pp. 2114-

2121. Association for the Advancement of Computing

in Education (AACE).

Rytkönen, A. (2015). Enhancing feedback through

electronic examining. In EDULEARN15 Proceedings

7th International Conference on Education and New

Learning Technologies. IATED Academy.

Smiley, G. (2013). Investigating the role of multibiometric

authentication on professional certification e-

examination (Doctoral dissertation, Nova Southeastern

University).

Stricker, L. J. (2004). The performance of native speakers

of English and ESL speakers on the computer-based

TOEFL and GRE General Test. Language Testing,

21(2), 146-173.

Wyer, M., and Eisenbach, S. (2001, September). Lexis

exam invigilation system. In Proceedings of the

Fifteenth Systems Administration Conference (LISA

XV) (USENIX Association: Berkeley, CA) (p. 199).
