Medical Imaging Processing Architecture on ATMOSPHERE Federated Platform

Ignacio Blanquer (1,2,a), Ángel Alberich-Bayarri (2,3,b), Fabio García-Castro (3), George Teodoro (4), André Meirelles (4), Bruno Nascimento (5), Wagner Meira Jr. (5) and Antonio L. P. Ribeiro (5)

1 Instituto de Instrumentación para Imagen Molecular, Universitat Politècnica de València, Valencia, Spain
2 GIBI230, Biomedical Imaging Research Group-IIS La Fe, Valencia, Spain
3 QUIBIM, Valencia, Spain
4 Universidade Nacional de Brasilia, Brasilia, Brazil
5 Universidade Federal de Minas Gerais, Belo Horizonte, Minas Gerais, Brazil

Keywords:

Cloud Federation, Privacy Preservation, Medical Imaging.

Abstract:

This paper describes the development of applications in the frame of the ATMOSPHERE platform. ATMOSPHERE provides means for developing container-based applications over a federated cloud offering, while measuring the trustworthiness of the applications. In this paper we show the design of a transcontinental application in the field of medical imaging that keeps the data at one end and uses the processing capabilities of the resources available at the other end. The applications are described using TOSCA blueprints, and the federation of IaaS resources is performed by the Fogbow middleware. Privacy guarantees are provided by means of SCONE, and intensive computing resources are integrated through GPUs mounted directly on the containers.

1 INTRODUCTION

Cloud federated infrastructures are normally used to distribute requests in order to balance workloads. Cloud bursting and metascheduling in cloud orchestration enable multiple providers to deal with resource demand peaks that cannot be fulfilled locally. Moreover, cloud federation opens the door to other requirements, such as complementing cloud offerings with additional resource configurations or dealing with restrictions based on geographic boundaries.

ATMOSPHERE exemplifies one of these usages by supporting elastic container-based applications on top of a federated IaaS, using secure storage and a federated network. In the specific case of ATMOSPHERE, applications combine several levels of services, involving the actual medical imaging applications and the execution environment. At the top, application developers create medical-image processing applications for model creation, data analysis, and production. The applications also depend on software components with different restrictions and requirements. Applications may include functions for providing metrics to the trustworthiness system and for adapting the application.

a https://orcid.org/0000-0003-1692-8922
b https://orcid.org/0000-0002-5932-2392

1.1 Description of the Use Case

The use case focuses on the early diagnosis of Rheumatic Heart Disease (RHD). RHD is a disease that can be easily treated in its early stages, and it remains the main cause of heart valve disease in the developing world. However, if it remains untreated it may cause severe damage to the heart, including severe sequelae and death. In addition, early detection is currently limited, since no single test accurately provides an early diagnosis of RHD.

The model building application is a conventional model-driven image analysis algorithm that extracts features, which are then fed into a classifier built with deep learning techniques based on Convolutional Neural Networks (CNNs). This model is used to build a classifier that should serve as a first screening to differentiate between normal and abnormal (screen-positive: definite or borderline RHD) echocardiography studies.

Blanquer, I., Alberich-Bayarri, Á., García-Castro, F., Teodoro, G., Meirelles, A., Nascimento, B., Meira Jr., W. and Ribeiro, A.
Medical Imaging Processing Architecture on ATMOSPHERE Federated Platform.
DOI: 10.5220/0007876705890594
In Proceedings of the 9th International Conference on Cloud Computing and Services Science (CLOSER 2019), pages 589-594
ISBN: 978-989-758-365-0
Copyright © 2019 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

The training is based on a large database that includes 4615 studies, containing several echocardiography videos and demographic data, classified into three categories (Definite RHD, Borderline and Normal). The first step is to differentiate the views (e.g. parasternal long axis, right ventricular inflow, basal short axis, short axis at mid or mitral level, etc.) so that each can be classified appropriately.
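The screening task collapses the three study categories into a binary decision: definite or borderline RHD is screen-positive, and normal is screen-negative. The following Python sketch of that label mapping is illustrative only; the label strings and function names are assumptions, not part of the ATMOSPHERE code base.

```python
from collections import Counter

# Study-level categories of the training database (label strings are assumed).
CATEGORIES = ("Definite RHD", "Borderline", "Normal")

# Screen-positive means definite or borderline RHD.
SCREEN_POSITIVE = frozenset({"Definite RHD", "Borderline"})


def to_screening_label(category: str) -> int:
    """Map a three-class study label to a binary label (1 = screen-positive)."""
    if category not in CATEGORIES:
        raise ValueError(f"unknown category: {category!r}")
    return 1 if category in SCREEN_POSITIVE else 0


def screening_counts(categories) -> dict:
    """Count screen-positive and screen-negative studies in a list of labels."""
    counts = Counter(to_screening_label(c) for c in categories)
    return {"positive": counts[1], "negative": counts[0]}


if __name__ == "__main__":
    sample = ["Normal", "Borderline", "Definite RHD", "Normal"]
    print(screening_counts(sample))  # {'positive': 2, 'negative': 2}
```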

2 SOFTWARE ARCHITECTURE

2.1 Application Types

We differentiate between two types of applications. On the one hand, we consider pre-processing applications, which build the classifying models or extract relevant features; they require complex processing and are used by users knowledgeable in computing. These applications are typically computationally intensive and require multiple executions over the same data to fine-tune the working parameters. On the other hand, production applications are used to classify images using the models trained by the previous applications; they do not (individually) require intensive computing, although they may be accessed by multiple users simultaneously or applied to multiple input data concurrently.

An application in the context of ATMOSPHERE mainly includes three components:

• The final application, which comprises the interface layer and the processing back-end.
• The application dependencies, coded in Dockerfiles that the automatic system uses to build the container images.
• The virtual infrastructure blueprint, which includes third-party components for managing the resources and the execution back-end.

2.2 Application Development

Figure 1 shows a detailed diagram of the interactions at the development and deployment levels, which are closely coupled. In this figure, a colour code distinguishes the services included in the ATMOSPHERE platform, while grey boxes relate to external services.

The development cycle (top part of Figure 1) involves three main interactions. First, the management of the source code of the application, which involves writing the code and the tests. Second, the specification of the software dependencies, by defining containers and/or Ansible roles that install the required dependencies. Third, the customization of the application with the trustworthiness properties needed to satisfy the requirements of a specific execution. The data for these three main blocks may be stored and managed in the same repository. The deployment cycle (bottom part of Figure 1) involves two steps:

• Building the container in which the dependencies are embedded. This process will be automatic once the proper processing pipelines are defined.
• Deploying the virtual infrastructure to run it.

Figure 1: Deployment use cases from the Application developer perspective.
(*) https://github.com/eubr-atmosphere/d42_applications
(**) https://github.com/grycap/ec3
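As an illustration of the two deployment steps listed above, the sketch below assembles the commands an automated pipeline might run: one build and one push per Dockerfile, followed by the deployment of the virtual infrastructure. It is a sketch under stated assumptions: the application name, registry, tag and blueprint file are hypothetical, and deploy-infrastructure is a placeholder for whatever deployment service the platform uses, not a real command.

```python
import shlex
from typing import Dict, List


def deployment_plan(app: str, dockerfiles: Dict[str, str], registry: str,
                    blueprint: str, tag: str = "latest") -> List[List[str]]:
    """Return the ordered commands of the two deployment steps.

    Step 1 builds and pushes one image per Dockerfile (component -> path).
    Step 2 deploys the virtual infrastructure described by the blueprint;
    "deploy-infrastructure" is a hypothetical placeholder command.
    """
    plan: List[List[str]] = []
    for component, dockerfile in sorted(dockerfiles.items()):
        image = f"{registry}/{app}-{component}:{tag}"
        plan.append(["docker", "build", "-f", dockerfile, "-t", image, "."])
        plan.append(["docker", "push", image])
    plan.append(["deploy-infrastructure", blueprint])  # hypothetical step-2 tool
    return plan


if __name__ == "__main__":
    steps = deployment_plan(
        "rhd",  # hypothetical application name
        {"frontend": "Dockerfile.frontend", "worker": "Dockerfile.worker"},
        registry="example-org",               # hypothetical registry organization
        blueprint="kubernetes-cluster.yaml",  # hypothetical blueprint file
    )
    for cmd in steps:
        print(shlex.join(cmd))
```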

3 IMPLEMENTATION OF THE ARCHITECTURE

3.1 Application Back-end

The application back-end is based on an elastic Kubernetes (kub, 2019) cluster, which is deployed along with the infrastructure and comprises:

• A Kubernetes cluster, to manage the containers and services.
• A CLUES (de Alfonso et al., 2013) elasticity manager, linked to Kubernetes and the Deployment Service, which requests the deployment of additional nodes when new pods demand more resources than are available.
• A storage service, based on a shared volume, exposed to the pods within the deployment. Optionally, a database may also be instantiated.
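The elasticity decision taken by a manager such as CLUES can be approximated as a bin-packing problem: pending pods that do not fit on existing nodes trigger the request of new nodes. The following Python sketch uses first-fit decreasing over CPU requests only; this is an assumed, simplified policy for illustration, not the algorithm implemented by CLUES, and the homogeneous node size and the max_new cap are hypothetical parameters.

```python
from typing import Dict, List


def _first_fit(capacities: List[float], request: float) -> bool:
    """Place a request in the first bin with enough room; return True on success."""
    for i, free in enumerate(capacities):
        if free >= request:
            capacities[i] = free - request
            return True
    return False


def nodes_to_add(pending_cpu: List[float], free_cpu: Dict[str, float],
                 node_cpu: float, max_new: int) -> int:
    """Estimate how many worker nodes to request so that pending pods fit.

    pending_cpu: CPU requests (cores) of pods that could not be scheduled.
    free_cpu: free cores on each existing worker node, keyed by node name.
    node_cpu: cores of a newly deployed node (nodes assumed homogeneous).
    max_new: upper bound on nodes the elasticity manager may request at once.
    """
    existing = sorted(free_cpu.values(), reverse=True)
    planned: List[float] = []  # free cores left on the nodes we decide to add
    # First-fit decreasing: place the largest requests first.
    for request in sorted(pending_cpu, reverse=True):
        if request > node_cpu:
            raise ValueError(f"request of {request} cores exceeds a single node")
        if _first_fit(existing, request) or _first_fit(planned, request):
            continue
        planned.append(node_cpu - request)
    return min(len(planned), max_new)


if __name__ == "__main__":
    # Two pods of 2 cores and one of 1 core; one existing node has 1 free core.
    print(nodes_to_add([2, 2, 1], {"wn1": 1}, node_cpu=4, max_new=5))  # 1
```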

3.2 Application Topology

From the application developer's point of view, two components are required:

IWFCC 2019 - Special Session on Federation in Cloud and Container Infrastructures


• A set of Docker containers with the processing code and its dependencies. The containers are built on top of the base images provided by ATMOSPHERE in the EUBraATMOSPHERE organization on Docker Hub¹. The catalog includes images supporting machine learning and image processing software.
• A Jupyter Notebook instance with the notebooks that contain the code implementing the processing actions and their visualization. This notebook runs in a container deployed on Kubernetes, with the libraries needed to run Kubernetes jobs and to access the data from a shared volume.
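To illustrate how a notebook can launch processing as Kubernetes jobs over the shared volume, the sketch below builds a batch/v1 Job manifest as a Python dictionary. The job name, image, command and PersistentVolumeClaim name are hypothetical; the mount path /mnt/share mirrors the paths shown in Figure 3 but is also an assumption.

```python
import json
from typing import Dict, List


def shared_volume_job(name: str, image: str, command: List[str],
                      claim_name: str, mount_path: str = "/mnt/share") -> Dict:
    """Build a batch/v1 Job manifest whose container mounts a shared volume claim."""
    return {
        "apiVersion": "batch/v1",
        "kind": "Job",
        "metadata": {"name": name},
        "spec": {
            "backoffLimit": 0,  # do not retry a failed processing run automatically
            "template": {
                "spec": {
                    "restartPolicy": "Never",  # Job pods must use Never or OnFailure
                    "containers": [{
                        "name": name,
                        "image": image,
                        "command": command,
                        "volumeMounts": [{"name": "shared-data", "mountPath": mount_path}],
                    }],
                    "volumes": [{
                        "name": "shared-data",
                        "persistentVolumeClaim": {"claimName": claim_name},
                    }],
                },
            },
        },
    }


if __name__ == "__main__":
    job = shared_volume_job(
        "view-classification",             # hypothetical job name
        "example-org/processing:latest",   # hypothetical image
        ["python3", "classify_views.py"],  # hypothetical entry point
        claim_name="shared-volume",        # hypothetical PVC name
    )
    print(json.dumps(job, indent=2))
```

Saved to a file, the manifest could be submitted with kubectl apply -f, which accepts JSON as well as YAML; alternatively, the official Kubernetes Python client exposes BatchV1Api.create_namespaced_job for the same purpose.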

3.3 Data Access Layer

ATMOSPHERE will provide two types of secure access. As the interfaces will be standard (POSIX and ODBC/JDBC), this will not affect application development, which can be implemented using standard packages that are finally substituted by the ATMOSPHERE Data Management components. ATMOSPHERE will also provide insecure file systems and local volumes to store data that do not need special protection. By insecure we do not mean that they lack access protection, but that they do not run in an encrypted environment.

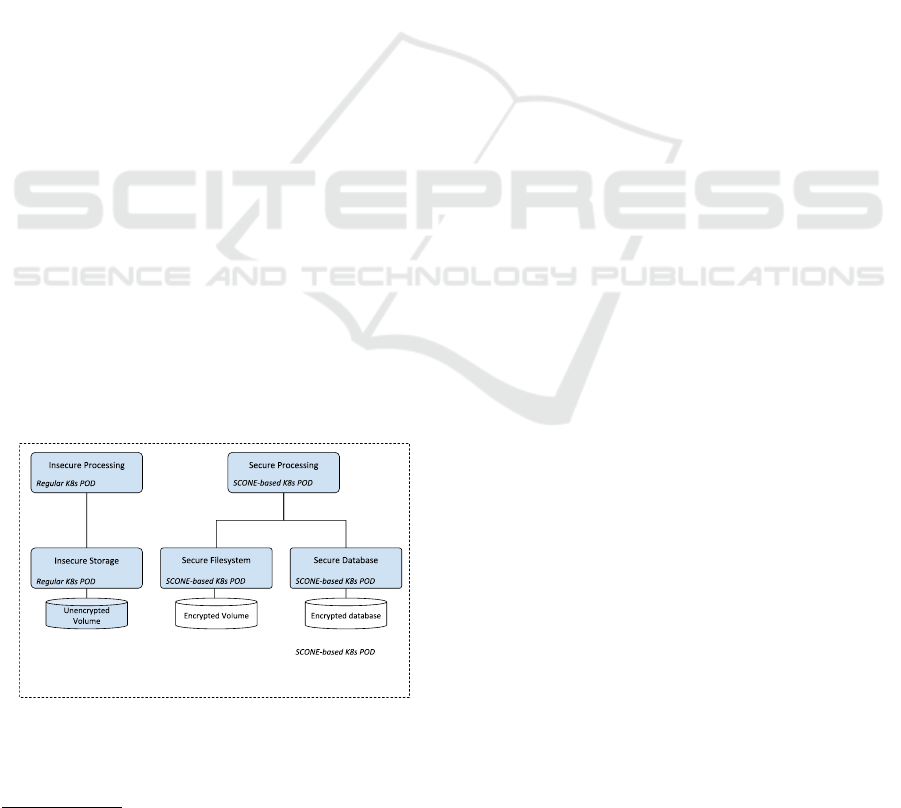

Figure 2 shows the mapping of the storage components onto the ATMOSPHERE services. The first scenario covers data that does not need special protection, such as fully anonymised data, public data or intermediate data. In this case, the application will include an NFS component that may use a Kubernetes volume for persistent storage. Data will be accessible only through the internal network, where all the applications are deployed.

Figure 2: Architecture of the storage solutions for the applications in ATMOSPHERE. [Diagram: Kubernetes cluster with sshfs, NFS and ODBC/JDBC storage access.]

The second scenario is much more complex and reflects the added value of ATMOSPHERE. Sensitive data must be stored encrypted, and access keys will only be provided in a secure environment. Moreover, processing will only be possible in a protected container running in an enclave. This way we can guarantee that neither the system administrator nor anyone who has been granted access to the physical or virtual resources is able to access the data. It is important to state that all communication takes place in the private overlay network, using secure protocols. This model is valid for both the filesystem and the databases. Access control will be available at volume level, and different tenants will have separate deployments. Even if there are different applications in the same federated network, access control will guarantee that only the users authorised to access a volume are accepted. Although there will be no fine-grained, file-level authorization, the model fits the requirements and expectations of the users quite well.

¹ https://cloud.docker.com/u/eubraatmosphere/repository/list
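Volume-level access control, without file-level authorisation, can be summarised in a few lines. The Python sketch below is a hypothetical model of the rule described above (separate deployments per tenant, users authorised per volume); the class, tenant, volume and user names are illustrative assumptions and do not reflect the ATMOSPHERE Data Management interfaces.

```python
from dataclasses import dataclass, field
from typing import Dict, Set


@dataclass
class VolumeACL:
    """Access control for one tenant deployment, at volume granularity only."""
    tenant: str
    grants: Dict[str, Set[str]] = field(default_factory=dict)  # volume id -> users

    def grant(self, volume_id: str, user: str) -> None:
        self.grants.setdefault(volume_id, set()).add(user)

    def can_access(self, tenant: str, volume_id: str, user: str, path: str = "/") -> bool:
        # Each tenant has a separate deployment, so other tenants are rejected.
        if tenant != self.tenant:
            return False
        # No file-level authorisation: the path inside the volume is not checked.
        return user in self.grants.get(volume_id, set())


if __name__ == "__main__":
    acl = VolumeACL("hospital-a")          # hypothetical tenant name
    acl.grant("rhd-studies", "analyst-1")  # hypothetical volume and user
    print(acl.can_access("hospital-a", "rhd-studies", "analyst-1", "/videos/001.avi"))  # True
    print(acl.can_access("hospital-a", "rhd-studies", "analyst-2"))  # False
```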

3.4 Deployment

The application is implemented as a set of services delivered inside containers deployed by Kubernetes. The first step is to deploy a Kubernetes cluster on top of the federated offering.

For this purpose, we make use of Elastic Compute Clusters in the Cloud (EC3) (Calatrava et al., 2016; Caballer et al., 2015). EC3 provides the capability of deploying self-managed clusters in different cloud back-ends, including Fogbow (Brasileiro et al., 2016). EC3 self-manages the Kubernetes cluster by adding or removing worker nodes depending on the workload. The cluster is expanded along a federated network, and resource matching is performed through the affinity of the pods and deployments to the storage and resource dependencies.
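Matching pods to nodes by affinity is expressed in Kubernetes through nodeAffinity rules. The sketch below builds a required nodeAffinity stanza from label requirements and evaluates it against a node's labels, reproducing the scheduler's semantics for the In operator only. The label keys and values (for example a site or GPU label) are hypothetical; the actual labels used by EC3-deployed nodes are not specified here.

```python
from typing import Dict, List


def node_affinity(requirements: Dict[str, List[str]]) -> Dict:
    """Build a required nodeAffinity stanza: each label key must take one of the values."""
    expressions = [
        {"key": key, "operator": "In", "values": sorted(values)}
        for key, values in sorted(requirements.items())
    ]
    return {
        "nodeAffinity": {
            "requiredDuringSchedulingIgnoredDuringExecution": {
                "nodeSelectorTerms": [{"matchExpressions": expressions}]
            }
        }
    }


def node_matches(labels: Dict[str, str], affinity: Dict) -> bool:
    """A node qualifies if all expressions of at least one term match (In operator only)."""
    required = affinity["nodeAffinity"]["requiredDuringSchedulingIgnoredDuringExecution"]
    return any(
        all(labels.get(expr["key"]) in expr["values"] for expr in term["matchExpressions"])
        for term in required["nodeSelectorTerms"]
    )


if __name__ == "__main__":
    # Hypothetical labels: pin a processing pod to the site holding its volume.
    aff = node_affinity({"example.org/site": ["site-a"], "example.org/gpu": ["true"]})
    print(node_matches({"example.org/site": "site-a", "example.org/gpu": "true"}, aff))  # True
    print(node_matches({"example.org/site": "site-b", "example.org/gpu": "true"}, aff))  # False
```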

3.5 Implementation

The application leverages the federation model to implement a scenario where data should not be stored outside the boundaries of a specific datacentre, while processing capabilities are located in another datacentre. For this purpose, we identify two scenarios depending on the privacy requirements of the data to be processed.

Privacy-sensitive data must be securely processed. The data will be stored encrypted in persistent storage and will only be accessed from inside a secure container (using SCONE (Arnautov et al., 2016) and VALLUM (Arnautov et al., 2018)). Although the data is stored only in the authorized site, the processing containers can run on any site, accessing the data through a secure connection.

Non-privacy-sensitive data has fewer restrictions. It can be accessed remotely and transferred to resources outside the secure boundaries. The results of the processing (classifiers, error estimations) can be safely stored in persistent storage on any site, and can be accessed only with the application credentials.
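The two privacy scenarios translate into different placement rules for storage and processing. The Python sketch below encodes them under simplifying assumptions: sites are plain strings, and the secure_container flag stands for running the processing inside an SGX enclave (for instance with SCONE). The names are illustrative and not part of the platform's API.

```python
from dataclasses import dataclass
from typing import Sequence, Tuple


@dataclass(frozen=True)
class Placement:
    storage_sites: Tuple[str, ...]     # where the data may be stored
    processing_sites: Tuple[str, ...]  # where processing containers may run
    secure_container: bool             # processing must run in an enclave


def plan_placement(sensitive: bool, authorised_site: str, sites: Sequence[str]) -> Placement:
    """Derive storage and processing locations from the privacy level of the data."""
    if authorised_site not in sites:
        raise ValueError(f"unknown site: {authorised_site!r}")
    every_site = tuple(sites)
    if sensitive:
        # Encrypted data stays in the authorised site; processing may run on any
        # site, but only inside secure containers that reach the data remotely.
        return Placement((authorised_site,), every_site, True)
    # Non-sensitive data may be transferred to, and processed at, any site.
    return Placement(every_site, every_site, False)


if __name__ == "__main__":
    sites = ["site-es", "site-br"]  # hypothetical site names
    print(plan_placement(True, "site-es", sites))
    print(plan_placement(False, "site-es", sites))
```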

Figure 3 shows the architecture of the application.

Figure 3: Architecture of the applications in ATMOSPHERE. [Diagram: Kubernetes front-end (K8s F/E) and worker nodes (K8s WN) across sites, each site with a Kubernetes persistent volume exposed as /share/volid and mounted as /mnt/share/volid; the minikube-notebook and ub16_sshfspy3-client containers; SCONE processing and SCONE storage containers; regular containers; IPython profile directories under /mnt/.ipython; kubectl; GPUs attached to worker nodes.]

The distributed execution environment is provided by an IPython Parallel cluster, the interface is provided by a Jupyter notebook, and the storage is managed through an SSH server and sshfs. For this purpose, ATMOSPHERE has built the following containers:

• Front-end container. It includes Python 3, Jupyter Notebook, the sshfs client, TensorFlow and the Kubernetes client tool. It mounts the Kubernetes credentials to enable managing the deployment, starts the ipcontroller service and mounts the remote filesystem via sshfs.
• Processing worker. It includes Python 3, the sshfs client and TensorFlow; it runs the ipengine service, which binds to the ipcontroller service, and mounts the remote filesystem via sshfs. The containers access the GPUs by mounting the device from the VM, which is connected to the hardware via PCI passthrough.
• File server. It is basically an SSH server that mounts an encrypted filesystem and obtains the credentials using VALLUM.

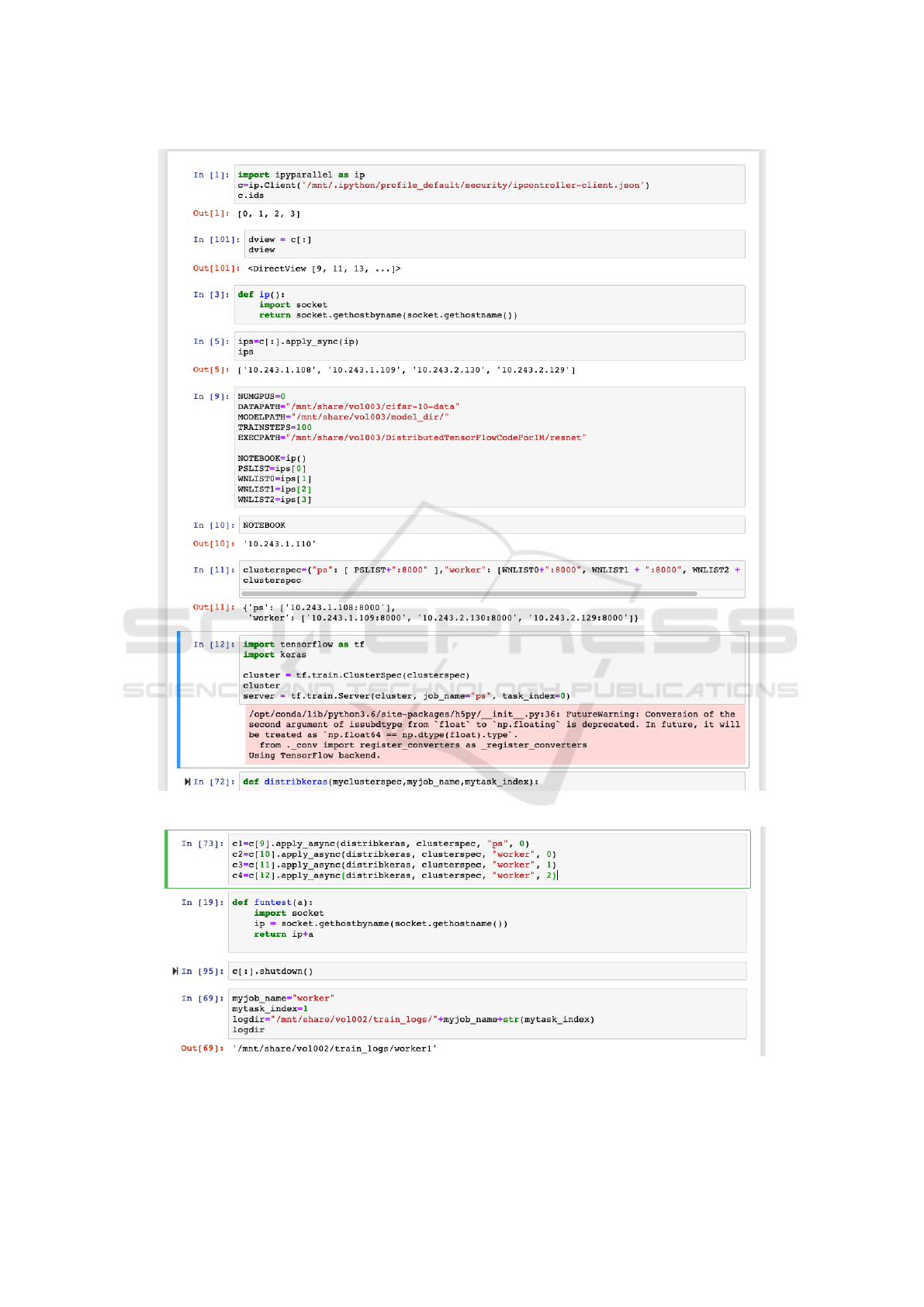

3.6 Parallel Execution

The parallel application uses the ipyparallel module to run the TensorFlow application on all the nodes. A client can connect to the cluster and run a callable function on it using the cluster configuration, which is available in the shared directory. The execution goes through three phases: the setup of the execution cluster, the preparation of the IPython cluster and the execution of the distributed classifier.

The cluster is defined by means of a Kubernetes application available in a GitHub repository². This application uses a set of Docker containers that automate the process of setting up the cluster. The base containers are available in Docker Hub³ under the organization eubraatmosphere, with the name eubraatmosphere_autobuild and the tag ub16_ssfstf11-client; they run the ipengine command using a shared directory that stores the ipcontroller-engine.json file needed for setting up the cluster.

² https://github.com/eubr-atmosphere/d42_applications/tree/master/integrated

The setup of the cluster for the execution of distributed TensorFlow (Tensorflow, 2019) is performed through the definition of a cluster context with three types of actors: master, parameter server and worker. The master process manages the execution, workers are the nodes that perform the most computation-intensive work, and parameter servers are resources that store the variables needed by the workers, such as the weight variables of the networks. Figure 4 shows the commands required for building up the context information to run the applications.
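The cluster context for distributed TensorFlow maps each job name (master, parameter server, worker) to a list of host:port addresses, which is the dictionary shape accepted by tf.train.ClusterSpec. The sketch below derives such a dictionary from a list of engine hosts and serialises a TF_CONFIG-style string for one task, without importing TensorFlow. Host names, the port 2222 and the one-master split are assumptions; newer TensorFlow releases name the coordinating task chief rather than master.

```python
import json
from typing import Dict, List


def cluster_spec(hosts: List[str], num_ps: int, port: int = 2222) -> Dict[str, List[str]]:
    """Assign engine hosts to the master, parameter-server and worker jobs."""
    if num_ps < 1 or len(hosts) < num_ps + 2:
        raise ValueError("need one master, at least one parameter server and one worker")
    addresses = [f"{host}:{port}" for host in hosts]
    return {
        "master": addresses[:1],           # manages the execution
        "ps": addresses[1:1 + num_ps],     # store shared variables such as weights
        "worker": addresses[1 + num_ps:],  # perform the computation-intensive work
    }


def tf_config(spec: Dict[str, List[str]], job: str, index: int) -> str:
    """Serialise a TF_CONFIG-style JSON string describing one task of the cluster."""
    if not 0 <= index < len(spec[job]):
        raise IndexError(f"{job} has no task {index}")
    return json.dumps({"cluster": spec, "task": {"type": job, "index": index}})


if __name__ == "__main__":
    # Hypothetical engine host names.
    spec = cluster_spec(["engine-0", "engine-1", "engine-2", "engine-3"], num_ps=1)
    print(tf_config(spec, "worker", 0))
```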

Once the cluster is set up and the configuration is obtained, the ipyparallel module enables running the same Python function on several nodes in the cluster. This eases the management and monitoring of the execution of a distributed TensorFlow application, as it provides a centralised console to follow the status of the processing. Combined with the Jupyter notebook, the user can even retrieve the model as an object and use it for error evaluation and prediction in an interactive console. Figure 5 shows the code needed for the execution.
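Running the same function on every engine from the notebook can be done with an ipyparallel DirectView. The sketch below assumes that a client connection file (ipcontroller-client.json) is available in the shared directory; that path and the train_task body are hypothetical placeholders for the real TensorFlow training routine. ipyparallel is imported only inside run_on_engines, so the module loads even where no controller is running.

```python
from typing import Callable, List


def train_task(task_index: int, num_tasks: int) -> dict:
    """Hypothetical per-engine entry point; a real one would build and train the model."""
    import socket  # imported here so the function is self-contained on each engine

    return {"task_index": task_index, "num_tasks": num_tasks, "host": socket.gethostname()}


def run_on_engines(url_file: str, fn: Callable[[int, int], dict]) -> List[dict]:
    """Run fn once on every ipengine, giving each engine its own task index."""
    import ipyparallel as ipp  # only needed when a controller is reachable

    rc = ipp.Client(url_file=url_file)
    view = rc[:]  # DirectView over all registered engines
    n = len(rc.ids)
    # With n indices and n engines, map_sync hands one index to each engine.
    return view.map_sync(fn, list(range(n)), [n] * n)


if __name__ == "__main__":
    # Hypothetical location of the client connection file in the shared directory.
    for result in run_on_engines("/mnt/share/volid/ipcontroller-client.json", train_task):
        print(result)
```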

The last step is the use of GPU accelerators for the computation-intensive training. For this purpose, GPUs are connected to the virtual machines through PCI passthrough. Containers mount the device directly from the virtual machine, and the matching between resources and containers is performed through Kubernetes affinity.
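One way to express the GPU arrangement described above in Kubernetes is a pod that mounts the NVIDIA device nodes from the virtual machine through hostPath volumes and is pinned to GPU nodes by node affinity. The sketch below is an assumption about how this could look, not the manifest used by ATMOSPHERE: the label key and device list are hypothetical, and hostPath device access typically requires a privileged container. Clusters running the NVIDIA device plugin would instead request the nvidia.com/gpu resource in the container limits.

```python
from typing import Dict, Sequence

# NVIDIA device nodes usually present on a host with the driver loaded.
DEFAULT_DEVICES = ("/dev/nvidia0", "/dev/nvidiactl", "/dev/nvidia-uvm")


def gpu_worker_pod(name: str, image: str, gpu_label: str = "example.org/gpu",
                   devices: Sequence[str] = DEFAULT_DEVICES) -> Dict:
    """Pod manifest that mounts GPU device nodes from the VM and targets GPU nodes."""
    volumes, mounts = [], []
    for i, device in enumerate(devices):
        volume = f"gpu-dev-{i}"
        volumes.append({"name": volume, "hostPath": {"path": device, "type": "CharDevice"}})
        mounts.append({"name": volume, "mountPath": device})
    # Only schedule on nodes labelled as having a passthrough GPU (hypothetical label).
    affinity = {
        "nodeAffinity": {
            "requiredDuringSchedulingIgnoredDuringExecution": {
                "nodeSelectorTerms": [{
                    "matchExpressions": [
                        {"key": gpu_label, "operator": "In", "values": ["true"]}
                    ]
                }]
            }
        }
    }
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "affinity": affinity,
            "containers": [{
                "name": name,
                "image": image,
                # Device access through hostPath mounts typically needs privileges.
                "securityContext": {"privileged": True},
                "volumeMounts": mounts,
            }],
            "volumes": volumes,
        },
    }
```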

4 CONCLUSIONS

The paper shows the architecture of a container-based medical application that leverages federated infrastructures to combine computing-intensive resources with secure storage. The application is designed with the components provided by the ATMOSPHERE platform.

The applications benefit from ATMOSPHERE in several ways:

• Providing a simplified scenario to train and build the models, capable of managing parallel computing resources. Model training requires intensive computing resources to be provisioned. Data scientists should not be burdened with setting up this environment, and although several solutions exist in public clouds, none of them is as flexible as ATMOSPHERE.
• Providing a seamless transition from model building to production. ATMOSPHERE provides a development scenario that supports building models and publishing them as web services, which can be called safely to obtain a diagnosis on an image.
• Working seamlessly in an intercontinental federated infrastructure, without having to specify geographical boundaries, and trusting the cloud services to select resources according to restrictions on data or performance.
• Securely accessing and processing data, with the guarantee that neither can the data owner access the processing code nor can the application developer retrieve the data out of the system.

Figure 4: Preparation of the cluster.
Figure 5: Execution of Distributed Keras through the cluster.

³ https://hub.docker.com/

Clinical measures of the outcomes of RHD cases, before and after the application of these approaches, will allow an assessment of the results and a comparison with the expected benefits.

ACKNOWLEDGEMENTS

The work in this article has been co-funded by the project ATMOSPHERE, funded jointly by the European Commission under the Cooperation Programme, Horizon 2020 grant agreement No 777154, and the Brazilian Ministério de Ciência, Tecnologia e Inovação (MCTI), number 51119.

The authors also want to acknowledge the research grant from the regional government of the Comunitat Valenciana (Spain), co-funded by the European Union ERDF funds (European Regional Development Fund) of the Comunitat Valenciana 2014-2020, with reference IDIFEDER/2018/032 (High-Performance Algorithms for the Modelling, Simulation and early Detection of diseases in Personalized Medicine).

REFERENCES

(2019). Kubernetes web site. https://kubernetes.io. Accessed: 29-12-2018.

Arnautov, S., Brito, A., Felber, P., Fetzer, C., Gregor, F., Krahn, R., Ozga, W., Martin, A., Schiavoni, V., Silva, F., Tenorio, M., and Thümmel, N. (2018). Pubsub-sgx: Exploiting trusted execution environments for privacy-preserving publish/subscribe systems. In 2018 IEEE 37th Symposium on Reliable Distributed Systems (SRDS), pages 123–132.

Arnautov, S., Trach, B., Gregor, F., Knauth, T., Martin, A., Priebe, C., Lind, J., Muthukumaran, D., O'Keeffe, D., Stillwell, M. L., Goltzsche, D., Eyers, D., Kapitza, R., Pietzuch, P., and Fetzer, C. (2016). SCONE: Secure linux containers with intel SGX. In 12th USENIX Symposium on Operating Systems Design and Implementation (OSDI 16), pages 689–703, Savannah, GA. USENIX Association.

Brasileiro, F., Vivas, J. L., d. Silva, G. F., Lezzi, D., Diaz, C., Badia, R. M., Caballer, M., and Blanquer, I. (2016). Flexible federation of cloud providers: The eubrazil cloud connect approach. In 2016 30th International Conference on Advanced Information Networking and Applications Workshops (WAINA), pages 165–170.

Caballer, M., Blanquer, I., Moltó, G., and de Alfonso, C. (2015). Dynamic Management of Virtual Infrastructures. Journal of Grid Computing, 13(1):53–70.

Calatrava, A., Romero, E., Moltó, G., Caballer, M., and Alonso, J. M. (2016). Self-managed cost-efficient virtual elastic clusters on hybrid Cloud infrastructures. Future Generation Computer Systems, 61:13–25.

de Alfonso, C., Caballer, M., Alvarruiz, F., and Hernández, V. (2013). An energy management system for cluster infrastructures. Computers & Electrical Engineering, 39(8):2579–2590.

Tensorflow (2019). Distributed tensorflow. https://github.com/tensorflow/examples/blob/master/community/en/docs/deploy/distributed.md. Accessed: 28-2-2019.
