PORTOS: Proof of Data Reliability for Real-World Distributed

Outsourced Storage

Dimitrios Vasilopoulos, Melek

¨

Onen and Refik Molva

EURECOM, Sophia Antipolis, France

Keywords:

Secure Cloud Storage, Proofs of Reliability, Reliable Storage, Verifiable Storage.

Abstract:

Proofs of data reliability are cryptographic protocols that provide assurance to a user that a cloud storage

system correctly stores her data and has provisioned sufficient redundancy to be able to guarantee reliable

storage service. In this paper, we consider distributed cloud storage systems that make use of erasure codes

to guarantee data reliability. We propose a novel proof of data reliability scheme, named PORTOS, that on

the one hand guarantees the retrieval of the outsourced data in their entirety through the use of proofs of

data possession and on the other hand ensures the actual storage of redundancy. PORTOS makes sure that

redundancy is stored at rest and not computed on-the-fly (whenever requested) thanks to the use of time-

lock puzzles. Furthermore, PORTOS delegates the burden of generating the redundancy to the cloud. The

repair operations are also taken care of by the cloud. Hence, PORTOS is compatible with the current cloud

computing model where the cloud autonomously performs all maintenance operations without any interaction

with the user. The security of the solution is proved in the face of a rational adversary whereby the cheating

cloud provider tries to gain storage savings without increasing its total operational cost.

1 INTRODUCTION

Distributed storage systems guarantee data reliabil-

ity by redundantly storing data on multiple storage

nodes. Depending on the redundancy mechanism,

each storage node may store either a full copy of the

data (replication) or a fragment of an encoded ver-

sion of it (erasure codes). In the context of a trusted

environment such as an on-premise storage system,

this type of setting is sufficient to guarantee data reli-

ability against accidental data loss caused by random

hardware failures or software bugs.

With the prevalence of cloud computing, out-

sourcing data storage has become a lucrative op-

tion for many enterprises as they can meet their

rapidly changing storage requirements without hav-

ing to make tremendous upfront investments for in-

frastructure and maintenance. However, in the cloud

setting, outsourcing raises new security concerns with

respect to misbehaving or malicious cloud providers.

An economically motivated cloud storage provider

could opt to “take shortcuts” when it comes to data

maintenance in order to maximize its returns and thus

jeopardizing the reliability of user data. In addition,

cloud storage providers currently do not assume any

liability in case data is lost or damaged. Hence, cloud

users may be reluctant to adopt cloud storage services

for applications that are otherwise perfectly suited

for the cloud such as archival storage or backups.

As a result, technical solutions that aim at allowing

users to verify the long-term integrity and reliability

of their data, would be beneficial both to customers

and providers of cloud storage services.

Focusing on the long term integrity requirement,

the literature features a number of solutions for ver-

ifiable outsourced storage. Notably, proofs of re-

trievability (PoR) (Juels and Kaliski, 2007; Shacham,

H. and Waters, B., 2008) and data possession (PDP)

(Ateniese et al., 2007) are cryptographic mechanisms

that enable a cloud user to efficiently verify that her

data is correctly stored by an untrusted cloud storage

provider.

PoRs and PDPs have been extended in order to

ensure data reliability over time introducing the no-

tion of proofs of data reliability (Curtmola et al.,

2008; Bowers et al., 2009; Armknecht et al., 2016;

Vasilopoulos et al., 2018). In addition to the integrity

of a user’s outsourced data, a proof of data relia-

bility provides the user with the assurance that the

cloud storage provider has provisioned sufficient re-

dundancy to be able to guarantee reliable storage ser-

vice. In a straightforward approach, the cloud user

Vasilopoulos, D., Önen, M. and Molva, R.

PORTOS: Proof of Data Reliability for Real-World Distributed Outsourced Storage.

DOI: 10.5220/0007927301730186

In Proceedings of the 16th International Joint Conference on e-Business and Telecommunications (ICETE 2019), pages 173-186

ISBN: 978-989-758-378-0

Copyright

c

2019 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

173

locally generates the necessary redundancy and sub-

sequently stores the data together with the redundancy

on multiple storage nodes. Previous work has estab-

lished that, in the case of erasure code-based stor-

age systems, the relation between the data and re-

dundancy symbols should stay hidden from the cloud

storage provider, otherwise, the latter could store a

portion of the encoded data only and compute any

missing symbols upon request. Similarly, in the case

of replication based storage systems, each storage

node should store a different replica; otherwise, the

cloud storage provider could simply store a single

replica. As a result, most proof of data reliability

schemes (Curtmola et al., 2008; Chen and Curtmola,

2017; Bowers et al., 2009; Chen et al., 2015) re-

quire some interaction with the user to repair dam-

aged data. Hence these schemes are at odds with auto-

matic maintenance that is a key feature of cloud stor-

age systems.

In this paper, we propose PORTOS, a proof of data

reliability scheme tailored to distributed cloud storage

systems that makes use of erasure codes to guaran-

tee data reliability. PORTOS achieves the following

properties against a rational cloud storage provider:

• Data reliability against t storage node failures:

PORTOS uses a systematic linear erasure code

to add redundancy to the outsourced data which

thereafter stores across multiple storage nodes.

The system can tolerate the failure of up to t stor-

age nodes, and successfully reconstruct the origi-

nal data using the contents of the surviving ones.

• Data and redundancy integrity: PORTOS lever-

ages a PDP scheme to verify the integrity of the

outsourced data and it further takes advantage of

the homomorphic properties of the PDP tags, in

order to verify the integrity of the redundancy.

Moreover, thanks to the combination of the PDP

scheme with erasure codes, PORTOS can provide

a cloud user with the assurance that she can re-

cover her data in their entirety.

• Automatic data maintenance by the cloud storage

provider: In PORTOS, the cloud storage provider

has the means to generate the required redun-

dancy, detect storage node failures and repair cor-

rupted data entirely on its own without any in-

teraction with the user conforming to the current

cloud model. This setting, however, allows a ma-

licious cloud storage provider to delete a portion

of the encoded data and compute any missing

symbols upon request. To defend against such an

attack, PORTOS relies on time-lock puzzles, and

masks the data, making the symbol regeneration

process time-consuming. In this way, a rational

cloud is provided with a strong incentive to con-

form to the proof of data reliability protocol.

• Real-world cloud storage architecture: PORTOS

conforms to the current model of erasure-code-

based distributed storage systems. Moreover, it

does not make any assumption regarding the sys-

tem’s underlying technology as opposed to prior

proof of data reliability schemes that also allow

for automatic data maintenance by the cloud stor-

age provider.

In summary, we make the following contributions

in this paper:

• We propose a new formal definition for proofs of

data reliability, which is more generic than the

definitions presented in prior work (Armknecht

et al., 2016).

• We present a novel proof of data reliability

scheme, named PORTOS, that on the one hand

guarantees that the outsourced data can be re-

trieved in their entirety through the use of proofs

of data possession and on the other hand ensures

the actual storage of redundancy. PORTOS makes

sure that redundancy is stored at rest and not com-

puted on-the-fly (whenever requested) thanks to

the use of time-lock puzzles. Furthermore, POR-

TOS delegates the burden of generating the re-

dundancy, as well as the repair operations, to the

cloud storage provider.

• We show that PORTOS is secure against a rational

adversary, and we further evaluate the impact of

two types of attacks, considering in both cases the

most favorable scenario for the adversary. Finally,

we evaluate the performance of PORTOS.

The remaining of this paper is organized as fol-

lows: In Section 2, we give an overview of prior work

in the field. We describe the formal definition, adver-

sary model, and security requirements of a proof of

data reliability scheme in Section 3. In Section 4, we

introduce PORTOS, a novel data reliability scheme

and we analyze its security in Section 5. Finally, in

Section 6 we analyze the performance of PORTOS.

2 PRIOR WORK

Replication-based Proofs of Data Reliability. The

authors in (Curtmola et al., 2008) propose a proof

of data possession scheme (PDP) which extends the

PDP scheme in (Ateniese et al., 2007) and enables the

client to verify that the cloud provider stores at least

t replicas of her data. In (Chen and Curtmola, 2013;

Chen and Curtmola, 2017), the replica differentiation

SECRYPT 2019 - 16th International Conference on Security and Cryptography

174

mechanism in (Curtmola et al., 2008) is replaced by a

tunable masking mechanism, which allows to shift the

bulk of repair operations to the cloud storage provider

with the user acting as a repair coordinator. In (Leon-

tiadis and Curtmola, 2018), the scheme in (Chen and

Curtmola, 2017) is extended in order to construct a

proof of data reliability protocol that allows for cross-

user file-level deduplication. The authors in (Barsoum

and Hasan, 2012; Barsoum and Hasan, 2015) pro-

pose a multi-replica dynamic PDP scheme that en-

ables clients to update/insert selected data blocks and

to verify multiple replicas of their outsourced files.

The scheme in (Etemad and K

¨

upc¸

¨

u, 2013), extends

the dynamic PDP scheme in (Erway et al., 2009) in or-

der to transparently support replication in distributed

cloud storage systems. In (Armknecht et al., 2016),

the authors propose a multi-replica PoR scheme that

delegates the replica construction to the cloud storage

provider. The scheme uses tunable puzzles as part of

its replication mechanism in order to force the cloud

storage provider to store the replicas at rest.

Erasure-code-based Proofs of Data Reliability.

The authors in (Bowers et al., 2009) propose HAIL

which provides a high availability and integrity layer

for cloud storage. HAIL uses erasure codes in order

to guarantee data retrievability and reliability among

distributed storage servers, and enables a user to de-

tect and repair data corruption. The work in (Chen

et al., 2015) redesigns parts of (Bowers et al., 2009)

in order to achieve a more efficient repair phase that

shifts the bulk computations to the cloud side. In

(Chen et al., 2010), the authors present a remote

data checking scheme for network-coding-based dis-

tributed storage systems that minimizes the commu-

nication overhead of the repair component compared

to erasure coding-based approaches. The work in (Le

and Markopoulou, 2012) extends the scheme in (Chen

et al., 2010) by improving the repair mechanism in or-

der to reduce the computation cost for the client, and

introducing a third party auditor. Based on the intro-

duction of this new entity, the authors in (Thao and

Omote, 2016) design a network-coding-based PoR

scheme in which the repair mechanism is executed

between the cloud provider and the third party auditor

without any interaction with the client. The authors in

(Bowers et al., 2011) propose RAFT, an erasure-code-

based protocol that can be seen as a proof of fault tol-

erance. RAFT relies on technical characteristics of ro-

tational hard drives in order to construct a time-based

challenge, that enables a client to verify that her en-

coded data is stored at multiple storage nodes within

the same data center. In (Vasilopoulos et al., 2018),

the authors propose POROS, a proof of data reliabil-

ity scheme for erasure-code-based cloud storage sys-

tems which combines PDP with time constrained op-

erations in order to force the cloud storage provider to

store the redundancy at rest. POROS dictates that all

the redundancy is stored permuted on a single stor-

age node and leverages the technical characteristics

of rotational hard drives to set a time threshold for

the generation of the proof. Both schemes enable the

outsourcing of the data encoding to the cloud storage

provider as well as automatic maintenance operations

without any interaction with the client.

Most of the proof of data reliability schemes pre-

sented above share a common system model, where

the user generates the required redundancy locally,

before uploading it together with the data to the cloud

storage provider. Furthermore, when corruption is de-

tected, the cloud cannot repair it autonomously, be-

cause either it expects some input from another entity

or all computations are performed by the client. The

solution in (Armknecht et al., 2016) outsources to the

cloud the redundancy generation, however, it relies

on replication to provide data reliability and hence is

not directly comparable to our proof of data reliability

scheme.

PORTOS is directly comparable with the work

in (Bowers et al., 2011) and in (Vasilopoulos et al.,

2018), in the sense that it also uses erasure codes to

guarantee data reliability and it delegates the burden

of generating the redundancy, as well as the repair op-

erations, to the cloud storage provider. Nonetheless,

in contrast to these schemes, our solution does not

make any assumption regarding the underlying stor-

age technology. Namely, both schemes rely on tech-

nical characteristics of rotational hard drives in order

to set a time-threshold for the cloud servers to respond

to a read request for a set of data blocks. Further-

more, unlike in PORTOS, the challenge verification

in (Bowers et al., 2011) requires that a copy of the

encoded data is stored locally at the user. Lastly, the

proof of data reliability scheme in (Vasilopoulos et al.,

2018) requires that all redundancy symbols are stored

the result on a single storage node without fragmenta-

tion. On the contrary, PORTOS does not deviate from

the standard model of erasure-code based distributed

storage systems.

PORTOS: Proof of Data Reliability for Real-World Distributed Outsourced Storage

175

3 PROOFS OF DATA

RELIABILITY

3.1 Environment

We consider a setting where a user U produces a data

object D from a file D and subsequently outsources

D to an untrusted cloud storage provider C who com-

mits to store D in its entirety across a set of n storage

nodes {S

( j)

}

1≤ j≤n

with reliability guarantee t: stor-

age service guarantee against t storage node failures.

We define a proof of data reliability scheme as a pro-

tocol executed between the cloud storage provider C

with its storage nodes {S

( j)

}

1≤ j≤n

on the one hand

and a verifier V on the other hand. The aim of such

a protocol is to enable V to check and validate (i) the

integrity of D and, (ii) whether the reliability guaran-

tee t is satisfied. In order not to cancel out the storage

and performance advantages of the cloud, all this ver-

ification should be performed without V downloading

the entire content associated to D from {S

( j)

}

1≤ j≤n

.

We consider two different settings for a proof of data

reliability scheme: a private one where U is the veri-

fier and a public one where V can be any third party

except for C.

Formal Definition. A proof of data reliability

scheme comprises seven polynomial time algorithms:

Setup (1

λ

,t) → ({S

( j)

}

1≤ j≤n

, param

system

): This algo-

rithm takes as input the security parameter λ and

the reliability parameter t, and returns the set of

storage nodes {S

( j)

}

1≤ j≤n

, the system parameters

param

system

, and the specification of the redun-

dancy mechanism.

Store (1

λ

, D) → (K

U

, D, param

D

): This randomized al-

gorithm invoked by the user U takes as input the

security parameter λ and the to-be-outsourced file

D, and outputs the user key K

U

, the verifiable data

object D, which also includes a unique identifier

fid, and optionally, a set of data object parame-

ters param

D

. In a private proof of data reliability

scheme K

U

:= sk is the user’s secret key, whereas

in a public one K

U

:= (sk, pk) is the user’s pri-

vate/public key pair.

GenR (D,param

system

, param

D

) → (

˜

D): This algo-

rithm takes as input the verifiable data object

D, the system parameters param

system

, and op-

tionally, the data object parameters param

D

, and

outputs the data object

˜

D. Algorithm GenR may

be invoked either by the user U, when the user

generates the redundancy on her own; or by C,

when the redundancy computation is entirely out-

sourced to the cloud storage provider. Depending

on the redundancy mechanism,

˜

D may comprise

multiple copies of D or an encoded version of

it. Additionally, algorithm GenR generates the

necessary integrity values that will further help

for the integrity verification of

˜

D’s redundancy.

Chall (K

V

, param

system

) → (chal): This stateful and

probabilistic algorithm invoked by the verifier V

takes as input the verifier key K

V

and the system

parameters param

system

, and outputs a challenge

chal. In a private proof of data reliability scheme

the verifier key is the user’s secret key (K

V

:= sk),

whereas in a public one the verifier key is the

user’s public key (K

V

:= pk).

Prove (chal,

˜

D) → (proof): This algorithm invoked by

C takes as input the challenge chal and the data

object

˜

D, and returns C’s response proof of data

reliability.

Verify (K

V

, chal, proof, param

D

) → (dec): This deter-

ministic algorithm invoked by V takes as input

C’s proof corresponding to a challenge chal, the

verifier key K

V

, and optionally, the data object

parameters param

D

, and outputs a decision

dec ∈ {accept, reject} indicating a successful

or failed verification of the proof, respectively.

In a private proof of data reliability scheme the

verifier key is the user’s secret key (K

V

:= sk),

whereas in a public one the verifier key is the

user’s public key (K

V

:= pk).

Repair (

∗

˜

D,param

system

, param

D

) → (

˜

D): This al-

gorithm takes as input a corrupted data object

∗

˜

D together with its parameters param

D

and

the system parameters param

system

, and either

reconstructs

˜

D or outputs a failure symbol ⊥.

Algorithm Repair may be invoked either by U

or C depending on the proof of data reliability

scheme.

3.2 Adversary Model

Similar to (Armknecht et al., 2016; Vasilopou-

los et al., 2018), we consider an adversary model

whereby the cloud storage provider C is rational, in

the sense that C decides to cheat only if it achieves

some cost savings. For a proof of data reliability

scheme that deals with the storage of data and its re-

dundancy, a rational adversary would try to save some

storage space without increasing its overall opera-

tional cost. The overall operational cost is restricted to

the maximum number n of storage nodes {S

( j)

}

1≤ j≤n

whereby each of them has a bounded capacity of stor-

age and computational resources. More specifically,

assume that for some proof of data reliability scheme

there exists an attack which allows C to produce a

SECRYPT 2019 - 16th International Conference on Security and Cryptography

176

valid proof of data reliability while not fulfilling the

reliability guarantee t. If in order to mount this at-

tack, C has to provision either more storage resources

or excessive computational resources compared to the

resources required when it implements the protocol in

a correct manner, then a rational C will choose not to

launch his attack.

3.3 Security Requirements

A proof of data reliability scheme oughts to fulfill the

following requirements.

Req 0 : Correctness. A proof of data reliability

scheme should be correct: if both the cloud storage

provider C and the verifier V are honest, then on in-

put chal sent by the verifierV, using algorithm Chall,

algorithm Prove (invoked by C) generates a Proof

of Data Reliability proof such that algorithm Verify

yields accept with probability 1. Put differently, an

honest C should always be able to pass the verifica-

tion of proof of data reliability.

Req 1 : Extractability. It is essential for any proof

of data reliability scheme to ensure that an honest

user U can recover her file D with high probability.

This guarantee is formalized using the notion of ex-

tractability introduced in (Juels and Kaliski, 2007;

Shacham, H. and Waters, B., 2008). If a cloud stor-

age provider C can convince an honest verifier V with

high probability that it is storing the data object D to-

gether with its respective redundancy, then there ex-

ists an extractor algorithm that given sufficient inter-

action with C can extract the file D. To define this

requirement, we consider a game between an adver-

sary A and an environment, where A plays the role of

the prover likewise in (Shacham, H. and Waters, B.,

2008). The environment simulates all honest users

and verifiers, and it further provides A with oracles

for the algorithms Setup , Store , Chall , and Verify . A

interacts with the environment and requests O

Store

to

compute the tuple (sk, D, param

D

) for several chosen

files D and for different honest users. Thereafter, A

invokes a series of proof of data reliability executions

by interacting with O

Chall

and O

Verify

. Finally, A picks

a user U and a tuple (sk, D, param

D

), corresponding

to some file D and simulates a cheating cloud stor-

age provider C

0

. Let C

0

succeed in making algorithm

Verify yield dec := accept in an non-negligible ε frac-

tion of proof of data reliability executions. We say

that the proof of data reliability scheme meets the ex-

tractability guarantee, if there exists an extractor algo-

rithm such that given sufficient interactions with C

0

, it

recovers D.

Req 2 : Soundness of Redundancy Generation. In

addition to the extractability guarantee a proof of data

reliability scheme should ensure the soundness of the

redundancy generation mechanism. This entails that,

in the face of data corruption, the original file D can

be effectively reconstructed using the generated re-

dundancy. Hence, in proof of data reliability schemes

wherein algorithm GenR is implemented by the cloud

storage provider C, it is crucial to ensure that the latter

performs this operation in a correct manner. Namely,

an encoded data object should either consist of actual

codeword symbols or all replicas should be genuine

copies of the data object. In other words, the only

way C can produce a valid proof of data reliability is

by correctly generating the redundancy.

Req 3 : Storage Allocation Commitment. A cru-

cial aspect of a Proof of Data Reliability scheme, is

forcing a cloud storage provider C to store at rest

the outsourced data object D together with the rele-

vant redundancy. This requirement is formalized sim-

ilarly to the storage allocation guarantee introduced

in (Armknecht et al., 2016). A cheating cloud stor-

age provider C

0

that participates in the above men-

tioned extractability game (see Req 1), and dedicates

only a fraction of the storage space required for stor-

ing both D and its redundancy in their entirety, cannot

convince the verifier V to accept its proof with over-

whelming probability.

4 PORTOS

In this section, we present PORTOS: a proof of data

reliability scheme. PORTOS is based on the use of

erasure codes to offer reliable storage with automatic

maintenance. A user U sends a data object D to a

cloud storage provider C, which in turn encodes D

using a systematic linear (k, n)-MDS code (Xing and

Ling, 2003), and thereupon stores it across a set of n

storage nodes {S

( j)

}

1≤ j≤n

with reliability guarantee

against t storage node failures. Moreover, in POR-

TOS, C has the means to generate the required re-

dundancy, detect storage node failures, and repair cor-

rupted data, entirely on its own, without any interac-

tion with U.

Such setting, however, presents a cheating C with

the opportunity to misbehave, for instance, by not

fulfilling the storage allocation commitment require-

ment and computing the redundancy symbols on-the-

fly upon request. To prevent such behavior, PORTOS

leverages time-lock puzzles in order to augment the

resources (storage and computational) a cheating C

has to provision in order to produce a valid proof of

PORTOS: Proof of Data Reliability for Real-World Distributed Outsourced Storage

177

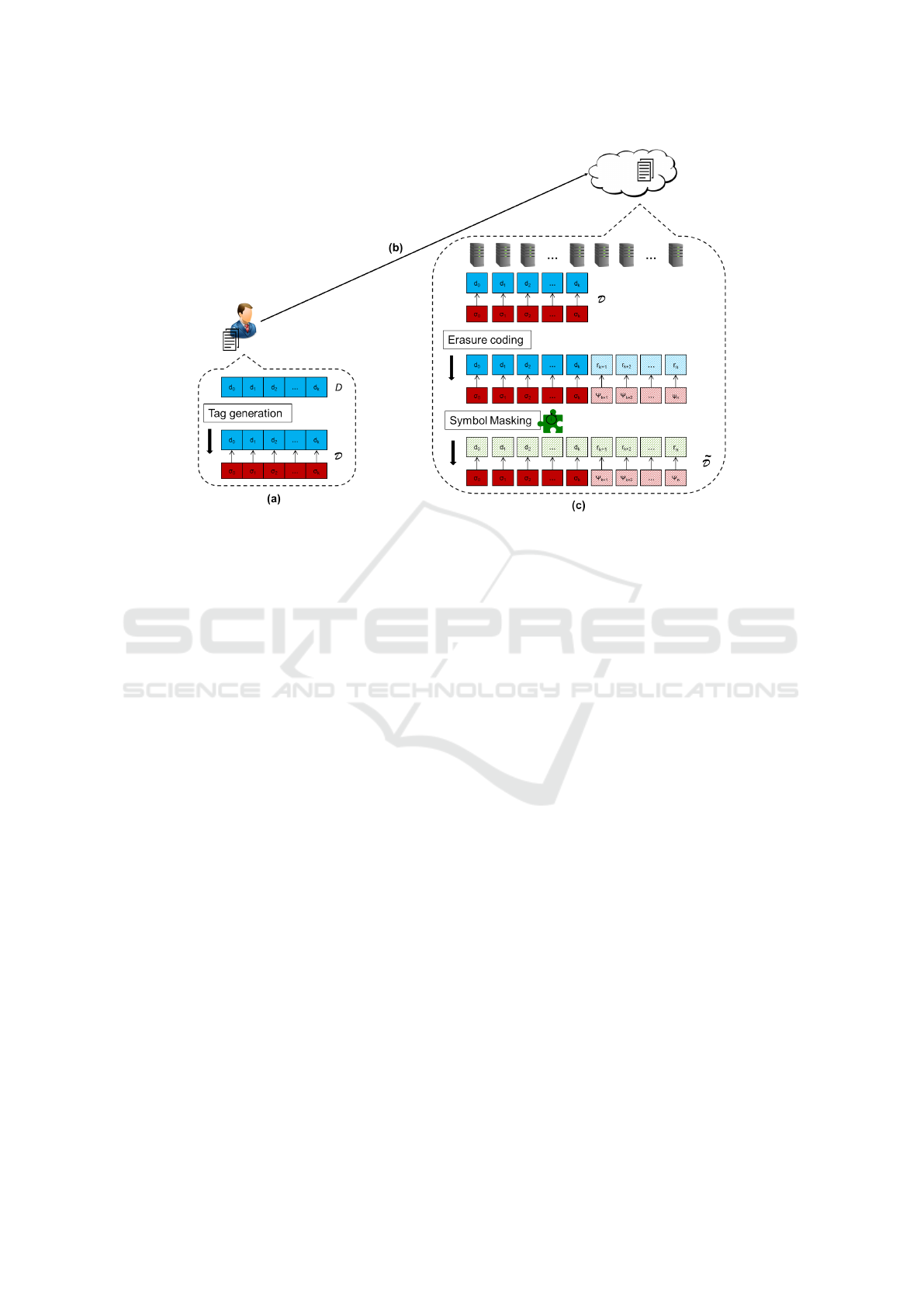

Figure 1: Overview of PORTOS outsourcing process: (a) The user U computes the linearly-homomorphic tags for the original

data symbols; (b) U outsources the data object D to cloud storage provider C; (c) Using G, C applies the systematic erasure

code on both data symbols and their tags yielding the redundancy symbols and their corresponding tags; thereafter, C derives

the masking coefficients and masks all data and redundancy symbols.

data reliability. Nonetheless, this mechanism does not

incur any additional storage or computational cost to

an honest C that generates the same proof. Moreover,

the puzzle difficulty can be adapted to C’s computa-

tional capacity as it evolves over time. Therefore, a

rational C is provided with a strong incentive to con-

form to the proof of data reliability protocol rather

than attempt to misbehave. Figure 1 depicts the steps

of PORTOS outsourcing process.

4.1 Building Blocks

MDS Codes. Maximum distance separable (MDS)

codes (Xing and Ling, 2003; Suh and Ramchandran,

2011) are a class of linear block erasure codes used

in reliable storage systems that achieve the highest

error-correcting capabilities for the amount of stor-

age space dedicated to redundancy. A (k, n)-MDS

code encodes a data segment of size k symbols into a

codeword comprising n code symbols. The input data

symbols and the corresponding code symbols are ele-

ments of a finite field F

q

, where q is a large prime and

k ≤ n ≤ q. In the event of data corruption, the original

data segment can be reconstructed from any set of k

code symbols. Furthermore, up to n− k + 1 corrupted

symbols can be repaired. The new code symbols can

either be identical to the lost ones, in which case we

have exact repair, or can be functionally equivalent, in

which case we have functional repair where the orig-

inal code properties are preserved.

A systematic linear MDS-code has a generator

matrix of the form G = [I

k

| P] and a parity check ma-

trix of the form H = [−P

>

| I

n−k

]. Hence, in a system-

atic code, the code symbols of a codeword include the

data symbols of the original segment. Reed-Solomon

codes (Xing and Ling, 2003) are a typical example of

MDS codes, their generator matrix G can be easily

defined for any given values of (k, n), and are used by

a number of storage systems (Blaum et al., 1994).

Linearly–homomorphic Tags. PORTOS’s in-

tegrity guarantee derives from the use of linearly-

homomorphic tags proposed, for the design of the

private PoR scheme in (Shacham, H. and Waters, B.,

2008).

This scheme consists of the following algorithms:

SW.Store (1

λ

, D) → (fid, sk, D): This randomized al-

gorithm invoked by the user U, first picks

a pseudo-random function PRF : {0, 1}

λ

×

{0, 1}

∗

→ Z

q

, together with its pseudo-randomly

generated key k

prf

∈ {0, 1}

λ

and a non-zero ele-

ment α

R

← Z

q

, and subsequently computes a ho-

momorphic authentication tag for each symbol d

i

of the file D as follows:

σ

i

= αd

i

+ PRF(k

prf

, i) ∈ Z

q

, for 1 ≤ i ≤ n.

SECRYPT 2019 - 16th International Conference on Security and Cryptography

178

Algorithm SW.Store then picks a unique identifier

fid, and terminates its execution by outsourcing

to the cloud storage provider C the authenticated

data object:

D :=

fid; {d

i

}

1≤i≤n

; {σ

i

}

1≤i≤n

.

SW.Chall (fid, sk) → (chal) : This algorithm invoked

by U picks l random elements ν

c

∈ Z

q

and l ran-

dom symbol indices i

c

, and sends to C the chal-

lenge

chal :=

(i

c

, ν

c

)

1≤c≤l

.

SW.Prove (D, chal) → (proof) : Upon receiving the

challenge chal, C invokes this algorithm which

computes the proof proof = (µ, τ) as follows:

µ =

∑

(i

c

,ν

c

)∈chal

ν

c

d

i

c

, τ =

∑

(i

c

,ν

c

)∈chal

ν

c

σ

i

c

.

SW.Verify (sk, proof, chal) → (dec) : This algorithm

invoked by U, verifies that the following equation

holds:

τ

?

= αµ +

∑

(i

c

,ν

c

)∈chal

ν

c

PRF(k

prf

, i

c

).

If proof is well formed, algorithm SW.Verify out-

puts dec := accept; otherwise it returns dec :=

reject.

Thanks to the unforgeability of homomorphic

tags, a malicious C cannot corrupt outsourced data

whilst eluding detection.

Time-lock Puzzles. A time-lock puzzle is a cryp-

tographic function that requires the execution of a

predetermined number of sequential exponentiation

computations before yielding its output. The RSA-

based puzzle of (Rivest et al., 1996) requires the re-

peated squaring of a given value β modulo N, where

N := p

0

q

0

is a publicly known RSA modulus, p

0

and

q

0

are two safe primes

1

that remain secret, and T is

the number of squarings required to solve the puzzle,

which can be adapted to the solver’s capacity of squar-

ings modulo N per second. Thereby, T defines the

puzzle’s difficulty. Without the knowledge of the se-

cret factors p

0

and q

0

, there is no faster way of solving

the puzzle than to begin with the value β and perform

T squarings sequentially. On the contrary, an entity

that knows p

0

and q

0

, can efficiently solve the puzzle

by first computing the value e := 2

T

(mod φ(N)) and

subsequently computing β

e

(mod N).

1

such that 2 is guaranteed to have a large order modulo

φ(N) where φ(N) = (p

0

− 1)(q

0

− 1)

PORTOS leverages the cryptographic puzzle of

(Rivest et al., 1996) to build a mechanism that en-

ables a user U to increase the computational load of a

misbehaving cloud storage provider C. To this end, C

is required to generate a set of pseudo-random values,

called masking coefficients, which are combined with

the symbols of the encoded data object D. C is ex-

pected to store at rest the masked data. More specif-

ically, in the context of algorithm Store , U outputs

two functions: the function maskGen which is sent to

C together with D and is used by algorithms GenR and

Repair ; and the function maskGenFast which is used

by U within the scope of algorithm Verify .

maskGen((i, j), param

m

) → m

( j)

i

: This function takes

as input the indices (i, j), and the tuple

param

m

:= (N, T , PRG

mask

, η

m

) comprising the

RSA modulus N := p

0

q

0

, the squaring coefficient

T , a pseudo-random generator PRG

mask

: Z

N

×

{0, 1}

∗

→ Z

N

2

, and a seed η

m

∈ Z

N

.

Function maskGen computes the masking coeffi-

cient m

( j)

i

as follows:

m

( j)

i

:=

PRG

mask

(η

m

, i k j)

2

T

(mod N).

maskGenFast((i, j), (p

0

, q

0

), param

m

) → m

( j)

i

: In addi-

tion to (i, j) and param

m

:= (N, T , PRG

mask

, η

m

),

this function takes as input the secret fac-

tors (p

0

, q

0

). Knowing p

0

and q

0

, function

maskGenFast efficiently computes the masking

coefficient m

( j)

i

by first computing the value e:

φ(N) := (p

0

− 1)(q

0

− 1), e := 2

T

(mod φ(N)),

m

( j)

i

:=

PRG

mask

(η

m

, i k j)

e

(mod N).

The puzzle difficulty can be adapted to the com-

putational capacity of C as it evolves over time such

that the evaluation of the function maskGen requires

a noticeable amount of time to yield m

( j)

i

. Further-

more, the masking coefficients are at least as large as

the respective symbols of D, hence storing the coeffi-

cients, as a method to deviate from our data reliability

protocol, would demand additional storage resources

which is at odds with C’s primary objective.

4.2 Protocol Specification

We now describe in detail the algorithms of PORTOS.

Setup (1

λ

,t) → ({S

( j)

}

1≤ j≤n

, param

system

) : Algorithm

Setup first picks a prime number q, whose size

2

such that its output is guaranteed to have a large order

modulo N.

PORTOS: Proof of Data Reliability for Real-World Distributed Outsourced Storage

179

is chosen according to the security parameter

λ. Afterwards, given the reliability parameter

t, algorithm Setup yields the generator matrix

G = [I

k

| P] of a systematic linear (k, n)-MDS

code in Z

q

, for k < n < q and t ≤ n − k + 1. In

addition, algorithm Setup chooses n storage nodes

{S

( j)

}

1≤ j≤n

that are going to store the encoded

data: the first k of them are data nodes that will

hold the actual data symbols, whereas the rest

n − k are considered as redundancy nodes.

Algorithm Setup then terminates its execution by

returning the storage nodes {S

( j)

}

1≤ j≤n

and the

system parameters param

system

:= (k, n, G, q).

U.Store (1

λ

, D, param

system

) → (sk, D, param

D

,

maskGenFast): On input security parameter

λ, file D ∈ {0, 1}

∗

and, system parameters

param

system

, this randomized algorithm first splits

D into s segments, each composed of k data

symbols. Hence D comprises s · k symbols in

total. A data symbol is an element of Z

q

and is

denoted by d

( j)

i

for 1 ≤ i ≤ s and 1 ≤ j ≤ k.

Algorithm U.Store also picks a pseudo-random

function PRF : {0, 1}

λ

× {0, 1}

∗

→ Z

q

, together

with its pseudo-randomly generated key k

prf

∈

{0, 1}

λ

, and a non-zero element α

R

← Z

q

. Here-

after, U.Store computes for each data symbol a lin-

early homomorphic MAC as follows:

σ

( j)

i

= αd

( j)

i

+ PRF(k

prf

, i k j) ∈ Z

q

.

In addition, algorithm U.Store produces a time-

lock puzzle by generating an RSA modulus N :=

p

0

q

0

, where p

0

and, q

0

are two randomly-chosen

safe primes of size λ bits each, and specifies

the puzzle difficulty coefficient T , and the time

threshold T

thr

. Thereafter, algorithm U.Store

picks a pseudo-random generator PRG

mask

: Z

N

×

{0, 1}

∗

→ Z

N

3

together with a random seed η

m

R

←

Z

N

, and constructs the functions maskGen and

maskGenFast as described in Section 4.1

maskGen

(i, j), param

D

→ m

( j)

i

,

maskGenFast

(i, j), (p

0

, q

0

), param

D

→ m

( j)

i

.

Finally, algorithm U.Store picks a pseudo-random

generator PRG

chal

: {0, 1}

∗

→ [1, s]

l

and a unique

identifier fid.

Algorithm U.Store then terminates its execution

by returning the user key

sk :=

fid, (α, k

prf

), (p

0

, q

0

, maskGenFast)

,

3

such that its output is guaranteed to have a large order

modulo N.

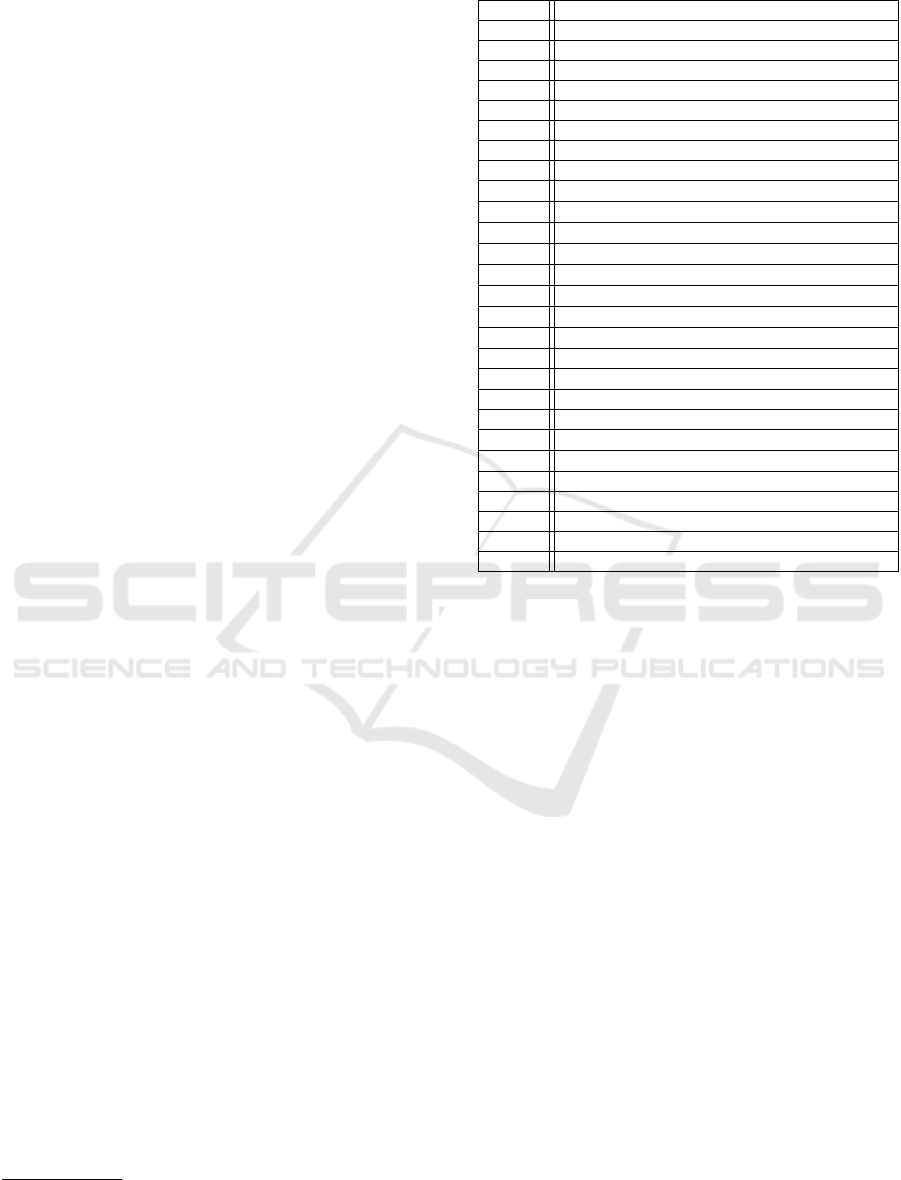

Table 1: Notation used in the description of PORTOS.

Notation Description

D File to-be-outsourced

D Outsourced data object (D consists of data and PDP tags)

˜

D Encoded and masked data object

S Storage node

G Generator matrix of the (n,k)-MDS code

α, k

prf

Secret key used by the linearly homomorphic tags

j Storage node index, 1 ≤ j ≤ n, (there are n S s in total)

i Data segment index, 1 ≤ i ≤ s, (D consist of s segments)

d

( j)

i

Data symbol, 1 ≤ j ≤ k and 1 ≤ i ≤ s

˜

d

( j)

i

Masked data symbol, 1 ≤ j ≤ k and 1 ≤ i ≤ s

σ

( j)

i

Data symbol tag, 1 ≤ j ≤ k and 1 ≤ i ≤ s

r

( j)

i

Redundancy symbol, k + 1 ≤ j ≤ n and 1 ≤ i ≤ s

˜r

( j)

i

Masked redundancy symbol, k + 1 ≤ j ≤ n and 1 ≤ i ≤ s

ψ

( j)

i

Redundancy symbol tag, k + 1 ≤ j ≤ n and 1 ≤ i ≤ s

m

( j)

i

Masking coefficient, 1 ≤ j ≤ n and 1 ≤ i ≤ s

η

m

Random seed used to generate m

( j)

i

p

0

, q

0

Primes for RSA modulus N := p

0

q

0

of the time-lock puzzle

T Time-lock puzzle’s difficulty coefficient

l Size of the challenge

T

thr

Time threshold for the proof generation

i

( j)

c

Indices of challenged symbols, 1 ≤ j ≤ n and 1 ≤ c ≤ l

η

( j)

Random seed used to generate i

( j)

c

, 1 ≤ j ≤ n

ν

c

Challenge coefficients, 1 ≤ c ≤ l

˜µ

( j)

Aggregated data/redundancy symbols, 1 ≤ j ≤ n

τ

( j)

Aggregated data/redundancy tags, 1 ≤ j ≤ n

J

f

Set of failed storage nodes

J

r

Set of surviving storage nodes

the to-be-outsourced data object together with the

integrity tags

D :=

n

fid; {d

( j)

i

}

1≤ j≤k

1≤i≤s

; {σ

( j)

i

}

1≤ j≤k

1≤i≤s

o

,

and the data object parameters

param

D

:=

PRG

chal

, maskGen,

param

m

:= (N, T , η

m

, PRG

mask

)

.

C.GenR (D, param

system

, param

D

) → (

˜

D): Upon re-

ception of data object D, algorithm C.GenR starts

computing the redundancy symbols {r

( j)

i

}

k+1≤ j≤n

1≤i≤s

by multiplying each segment d

i

:=

d

(1)

i

, . . . , d

(k)

i

with the generator matrix G = [I

k

| P]:

d

i

· [I

k

| P] =

d

(1)

i

, . . . , d

(k)

i

| r

(k+1)

i

, . . . , r

(n)

i

.

Similarly, algorithm C.GenR multiplies

the vector of linearly-homomorphic tags

σ

i

:=

σ

(1)

i

, . . . , σ

(k)

i

with G:

σ

i

· [I

k

| P] =

σ

(1)

i

, . . . , σ

(k)

i

| ψ

(k+1)

i

, . . . , ψ

(n)

i

.

One can easily show that {ψ

( j)

i

}

k+1≤ j≤n

1≤i≤s

are

the linearly-homomorphic authenticators of

{r

( j)

i

}

k+1≤ j≤n

1≤i≤s

.

SECRYPT 2019 - 16th International Conference on Security and Cryptography

180

Thereafter, algorithm C.GenR generates the mask-

ing coefficients using the function maskGen:

{m

( j)

i

}

1≤ j≤n

1≤i≤s

:= maskGen

(i, j), param

m

(mod q),

and then, masks all data and redundancy symbols

as follows:

{

˜

d

( j)

i

}

1≤ j≤k

1≤i≤s

← {d

( j)

i

+ m

( j)

i

}

1≤ j≤k

1≤i≤s

, (1)

{˜r

( j)

i

}

k+1≤ j≤n

1≤i≤s

← {r

( j)

i

+ m

( j)

i

}

k+1≤ j≤n

1≤i≤s

. (2)

At this point, algorithm C.GenR deletes all mask-

ing coefficients {m

( j)

i

}

1≤ j≤n

1≤i≤s

and terminates its ex-

ecution by returning the encoded data object

˜

D :=

fid ;

{

˜

d

( j)

i

}

1≤ j≤k

1≤i≤s

{˜r

( j)

i

}

k+1≤ j≤n

1≤i≤s

{σ

( j)

i

}

1≤ j≤k

1≤i≤s

{ψ

( j)

i

}

k+1≤ j≤n

1≤i≤s

,

and by storing the data symbols {

˜

d

( j)

i

}

1≤ j≤k

1≤i≤s

to-

gether with {σ

( j)

i

}

1≤ j≤k

1≤i≤s

and the redundancy sym-

bols {˜r

( j)

i

}

k+1≤ j≤n

1≤i≤s

together with {ψ

( j)

i

}

k+1≤ j≤n

1≤i≤s

at

the corresponding storage nodes.

U.Chall (fid, sk, param

system

) → (chal): Provided with

the object identifier fid, the secret key sk, and the

system parameters param

system

, algorithm U.Chall

generates a vector (ν

c

)

l

c=1

of l random elements

in Z

q

together with a vector of n random seeds

(η

( j)

)

n

j=1

, and then, terminates by sending to all

storage nodes {S

( j)

}

1≤ j≤n

the challenge

chal :=

fid,

(η

( j)

)

n

j=1

, (ν

c

)

l

c=1

.

C.Prove (chal,

˜

D, param

D

) → (proof): On input of

challenge chal :=

fid,

(η

( j)

)

n

j=1

, (ν

c

)

l

c=1

,

object parameters param

D

:=

(PRG

chal

, maskGen, param

m

), and data ob-

ject D each storage node {S

( j)

}

1≤ j≤n

invokes

an instance of this algorithm and computes the

response tuple (˜µ

( j)

, τ

( j)

) as follows:

It first derives the indices of the requested symbols

and their respective tags

(i

( j)

c

)

l

c=1

:= PRG

chal

(η

( j)

), for 1 ≤ j ≤ n,

and subsequently, it computes the following linear

combination

˜µ

( j)

←

∑

l

c=1

ν

c

˜

d

( j)

i

( j)

c

, if 1 ≤ j ≤ k

∑

l

c=1

ν

c

˜r

( j)

i

( j)

c

, if k + 1 ≤ j ≤ n,

(3)

τ

( j)

←

∑

l

c=1

ν

c

σ

( j)

i

( j)

c

, if 1 ≤ j ≤ k

∑

l

c=1

ν

c

ψ

( j)

i

( j)

c

, if k + 1 ≤ j ≤ n.

(4)

Algorithm C.Prove terminates its execution by re-

turning the set of tuples:

proof :=

(˜µ

( j)

, τ

( j)

)

1≤ j≤n

.

U.Verify (sk, chal,proof, maskGenFast, param

D

) → (dec):

On input of user key sk := (α, k

prf

, p

0

, q

0

), chal-

lenge chal :=

fid,

(η

( j)

)

n

j=1

, (ν

c

)

l

c=1

,

proof proof :=

(˜µ

( j)

, τ

( j)

)

1≤ j≤n

, function

maskGenFast, and data object parameters

param

D

:= (PRG

chal

, maskGen, param

m

), this

algorithm first checks if the response time of all

storage nodes {S

( j)

}

1≤ j≤n

is shorter than the

time threshold T

thr

. If not algorithm U.Verify

terminates by outputting dec := reject; otherwise

it continues its execution and checks that all

tuples (˜µ

( j)

, τ

( j)

) in proof are well formed as

follows:

It first derives the indices of the requested symbols

and their respective tags

(i

( j)

c

)

l

c=1

:= PRG

chal

(η

( j)

), for 1 ≤ j ≤ n,

and it generates the corresponding masking coef-

ficients

{m

( j)

i

( j)

c

}

1≤ j≤n

1≤c≤l

:= maskGenFast

(i

( j)

c

, j), (p

0

, q

0

), param

D

Subsequently, it computes

˜

τ

( j)

:= τ

( j)

+ α ·

l

∑

c=1

ν

c

m

( j)

i

( j)

c

, (5)

and then it verifies that the following equations

hold

˜

τ

( j)

?

=

α˜µ

( j)

+

∑

l

c=1

ν

c

PRF(k

prf

, i

( j)

c

k j),

if 1 ≤ j ≤ k,

α˜µ

( j)

+

∑

l

c=1

ν

c

prf

i

( j)

c

· G

( j)

,

if k + 1 ≤ j ≤ n,

(6)

where G

( j)

denotes the j

th

column of generator

matrix G, and

prf

i

( j)

c

:=

PRF(k

prf

, i

( j)

c

k 1), . . . , PRF(k

prf

, i

( j)

c

k k)

is

the vector of PRFs for segment i

( j)

c

.

If the responses from all storage nodes

{S

( j)

}

1≤ j≤n

are well formed, algorithm U.Verify

outputs dec := accept; otherwise it returns

dec := reject.

PORTOS: Proof of Data Reliability for Real-World Distributed Outsourced Storage

181

C.Repair (

∗

˜

D, J

f

, param

system

, param

D

, maskGen) → (

˜

D):

On input of a corrupted data object

∗

˜

D and a set of

failed storage node indices J

f

⊆ [1, n], algorithm

C.Repair first checks if |J| > n − k + 1, i.e., the

lost symbols cannot be reconstructed due to an

insufficient number of remaining storage nodes

{S

( j)

}

1≤ j≤n

. In this case, algorithm C.Repair

terminates outputting ⊥; otherwise, it picks

a set of k surviving storage nodes {S

( j)

}

j∈J

r

,

where J

r

⊆ [1, n] \ J

f

and, computes the masking

coefficients {m

( j)

i

}

j∈J

r

1≤i≤s

and {m

( j)

i

}

j∈J

f

1≤i≤s

, using

the function maskGen, together with the parity

check matrix H = [−P

>

| I

n−k

].

Thereafter algorithm C.Repair unmasks the sym-

bols held in {S

( j)

}

j∈J

r

and reconstructs the orig-

inal data object D using H. Finally, algo-

rithm C.Repair uses the generation matrix G and

the coefficients {m

( j)

i

}

j∈J

f

1≤i≤s

to compute and sub-

sequently mask the content of storage nodes

{S

( j)

}

j∈J

f

.

Algorithm C.Repair then terminates by outputting

the repaired data object

˜

D.

5 SECURITY ANALYSIS

Req 0 : Correctness. We now show that the verifi-

cation Equation 6 has to hold if algorithm C.Prove is

executed correctly. In particular, Equation 6 consists

of two parts: the first one defines the verification of

the proofs

(˜µ

( j)

, τ

( j)

)

1≤ j≤k

generated by the data

storage nodes {S

( j)

}

1≤ j≤k

; and the second part corre-

sponds to the proofs

(˜µ

( j)

, τ

( j)

)

k+1≤ j≤n

generated

by the redundancy storage nodes {S

( j)

}

k+1≤ j≤n

. By

definition the following equality holds:

σ

( j)

i

= αd

( j)

i

+ PRF(k

prf

, i k j), ∀1 ≤ i ≤ s, 1 ≤ j ≤ k (7)

We begin with the first part of Equation 6. By

plugging Equations 5 and 4 to

˜

τ

( j)

we get

˜

τ

( j)

= τ

( j)

+ α ·

l

∑

c=1

ν

c

m

( j)

i

( j)

c

=

l

∑

c=1

ν

c

σ

( j)

i

( j)

c

+ α ·

l

∑

c=1

ν

c

m

( j)

i

( j)

c

.

Thereafter, by Equation 7 we get

˜

τ

( j)

=

l

∑

c=1

ν

c

αd

( j)

i

( j)

c

+ PRF(k

prf

, i

( j)

c

k j)

+ α ·

l

∑

c=1

ν

c

m

( j)

i

( j)

c

= α ·

l

∑

c=1

ν

c

d

( j)

i

( j)

c

+ m

( j)

i

( j)

c

+

l

∑

c=1

ν

c

PRF(k

prf

, i

( j)

c

k j).

Finally, by plugging Equations 1 and 3 we get

˜

τ

( j)

= α˜µ

( j)

+

l

∑

c=1

ν

c

PRF(k

prf

, i

( j)

c

k j).

As regards to the second part of Equa-

tion 6 that defines the verification of the proofs

(˜µ

( j)

, τ

( j)

)

k+1≤ j≤n

generated by the redundancy

storage nodes {S

( j)

}

k+1≤ j≤n

, we observe that for

all c ∈ [1, l] it holds that redundancy symbols

r

( j)

i

( j)

c

= d

i

( j)

c

· G

( j)

and tags ψ

( j)

i

( j)

c

= σ

i

( j)

c

· G

( j)

, whereby

G

( j)

is the j

th

column of generator matrix G,

d

i

( j)

c

:= (d

(1)

i

( j)

c

, . . . , d

(k)

i

( j)

c

) is the vector of data symbols

for segment i

c

, and σ

i

( j)

c

:= (σ

(1)

i

( j)

c

, . . . , σ

(k)

i

( j)

c

) is the

corresponding vector of linearly homomorphic tags.

Hence, by Equation 7 the following equality always

holds:

ψ

( j)

i

( j)

c

= (αd

i

( j)

c

+ prf

i

( j)

c

) · G

( j)

= αr

( j)

i

( j)

c

+ prf

i

( j)

c

· G

( j)

,

where

prf

i

( j)

c

:=

PRF(k

prf

, i

( j)

c

k 1), . . . , PRF(k

prf

, i

( j)

c

k k)

is the vector of PRFs for segment i

( j)

c

. Thereby, given

the same straightforward calculations as in the case of

data storage nodes {S

( j)

}

1≤ j≤k

, we derive the follow-

ing equality:

˜

τ

( j)

= α˜µ

( j)

+

l

∑

c=1

ν

c

prf

i

( j)

c

· G

( j)

.

Req 1 : Extractability. We now show that POR-

TOS ensures, with high probability, the recovery of

an outsourced file D. To begin with, we observe

that algorithms C.Prove and U.Verify can be seen as

a distributed version of the algorithms SW.Prove and

SW.Verify of the private PoR scheme in (Shacham,

H. and Waters, B., 2008) executed across all stor-

age nodes {S

( j)

}

1≤ j≤n

. More precisely, we assume

that the MDS-code parameters (k, n) outputted by al-

gorithm Setup , fulfill the retrievability requirements

stated in (Shacham, H. and Waters, B., 2008), in ad-

dition to the reliability guarantee t.

We argue that given a sufficient number of inter-

actions with a cheating cloud storage provider C

0

, the

user U eventually gathers linear combinations of at

least k ≤ ρ ≤ n code symbols for each segment of data

object D. These linear combinations are of the form

˜µ

( j)

←

∑

l

c=1

ν

c

˜

d

( j)

i

( j)

c

, for 1 ≤ j ≤ k

∑

l

c=1

ν

c

˜r

( j)

i

( j)

c

, for k + 1 ≤ j ≤ n,

SECRYPT 2019 - 16th International Conference on Security and Cryptography

182

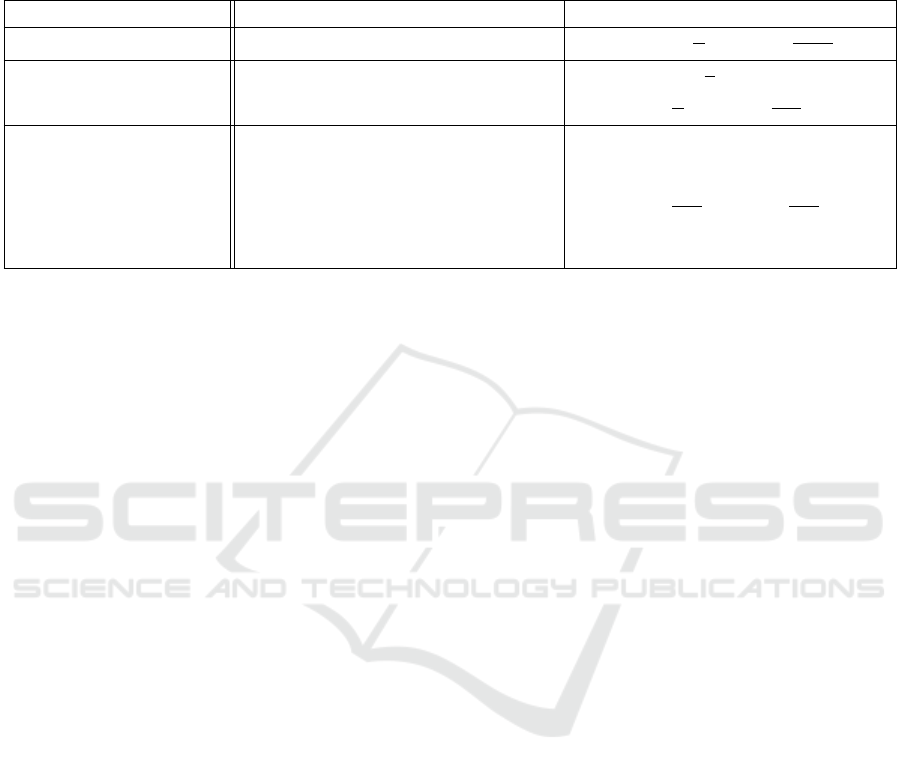

Table 2: Evaluation of the response time and the effort required by a storage node S to generate its response. The cheating

cloud storage provider C

0

tries to deviate from the correct protocol execution in two ways: (i) by storing the data object D

encoded but unmasked; and (ii) by not storing the data object

˜

D in its entirety. RTT is the round trip time between the user U

and C; Π is the number of computations S can perform in parallel; and T

puzzle

:= T ·T

exp

is the time required by S to generate

one masking coefficient.

Scenario: Response generation complexity for one S S ’s Response Time

Honest C: 2l mult + 2(l + 1) add T

resp

= RTT +

l

2l

Π

m

T

mult

+

l

2(l−1)

Π

m

T

add

T

0

resp

1

= RTT +

l

l

Π

m

T

puzzle

C

0

stores D unmasked: lT exp+2l mult + (3l − 2) add +

l

2l

Π

m

T

mult

+

l

3l−2

Π

m

T

add

S with missing symbol:

T exp +2l mult + (2l + k − 1) add

C

0

deletes up to s(n − k + 1) k S s participating in symbol generation: T

0

resp

2

= RTT + T

puzzle

symbols from

˜

D: T exp + (2l + 1) mult + (2l − 1) add +

l

2l+1

Π

m

T

mult

+

l

2l−1

Π

m

T

add

Remaining S s:

2l mult + 2(l − 1) add

for known coefficients (ν

c

)

l

c=1

and known indices i

( j)

c

and j. Furthermore, U can efficiently derive the un-

masked expressions

µ

( j)

←

∑

l

c=1

ν

c

d

( j)

i

( j)

c

, for 1 ≤ j ≤ k

∑

l

c=1

ν

c

r

( j)

i

( j)

c

, for k + 1 ≤ j ≤ n

by computing the masking coefficients {m

( j)

i

( j)

c

}

1≤ j≤n

1≤c≤l

using the function maskGenFast, and subtracting

from ˜µ

( j)

the corresponding linear combination

∑

l

c=1

ν

c

m

( j)

i

( j)

c

.

Hereby, the extractability arguments given in

(Shacham, H. and Waters, B., 2008) can be applied

to the aggregated output of algorithms C.Prove and

U.Verify . More precisely, given that C

0

succeeds in

making algorithm U.Verify yield dec := accept in an ε

fraction of the interactions, and the indices i

( j)

c

of the

challenge a chosen at random, then user U has at its

disposal at least ρ − ε > k correct code symbols for

each segment of data object D. Therefore, user U is

able to reconstruct the data object D using the parity

check matrix H = [−P

>

| I

n−k

].

Req 2 : Soundness of Redundancy Generation. In

PORTOS, the cloud storage provider C is the party

that generates the redundancy of the outsourced data

object D. Namely, the redundancy symbols and

{r

( j)

i

}

k+1≤ j≤n

1≤i≤s

and their tags {ψ

( j)

i

}

k+1≤ j≤n

1≤i≤s

are com-

puted by applying a linear combination over the

original data symbols {d

( j)

i

}

1≤ j≤n

1≤i≤s

and their tags

{σ

( j)

i

}

1≤ j≤n

1≤i≤s

, respectively. Hence, following Theo-

rem 4.1 in (Shacham, H. and Waters, B., 2008), if

the pseudo-random function PRF is secure, then no

cheating cloud storage provider C

0

will cause a veri-

fier V to accept in a proof of data reliability instance,

except by responding with values

˜µ

( j)

←

l

∑

c=1

ν

c

˜r

( j)

i

( j)

c

, for k + 1 ≤ j ≤ n,

τ

( j)

←

l

∑

c=1

ν

c

ψ

( j)

i

( j)

c

, for k + 1 ≤ j ≤ n.

that are computed correctly: i.e., by computing the

pair (˜µ, τ) using values ˜r

( j)

i

( j)

c

and ψ

( j)

i

( j)

c

which are the

output of algorithm C.GenR .

Req 3 : Storage Allocation Commitment. We now

show that a rational cheating cloud storage provider

C

0

cannot produce a valid proof of data reliability as

long as the time threshold T

thr

is tuned properly.

In essence, PORTOS consists of parallel proof

of data possession challenges over all storage nodes

{S

( j)

}

1≤ j≤n

: a challenge for each symbol of the code-

word. It follows that when a proof of data reliability

challenge contains symbols which are not stored at

rest, the relevant storage nodes cannot generate their

part of the proof unless C

0

is able to generate the miss-

ing symbols. Hereafter, we analyze the effort that C

0

has to put in order to output a valid proof of data relia-

bility in comparison to the effort an honest cloud stor-

age provider C has to put in order to output the same

proof. Given that the computational effort required

by C and C

0

can be translated into their response time

T

resp

and T

0

resp

, we can determine the lower and upper

bounds for the time threshold T

thr

.

A fundamental design feature of PORTOS is that

C

0

has to compute a masking coefficient for each sym-

bol of the encoded data object D. We observe that the

PORTOS: Proof of Data Reliability for Real-World Distributed Outsourced Storage

183

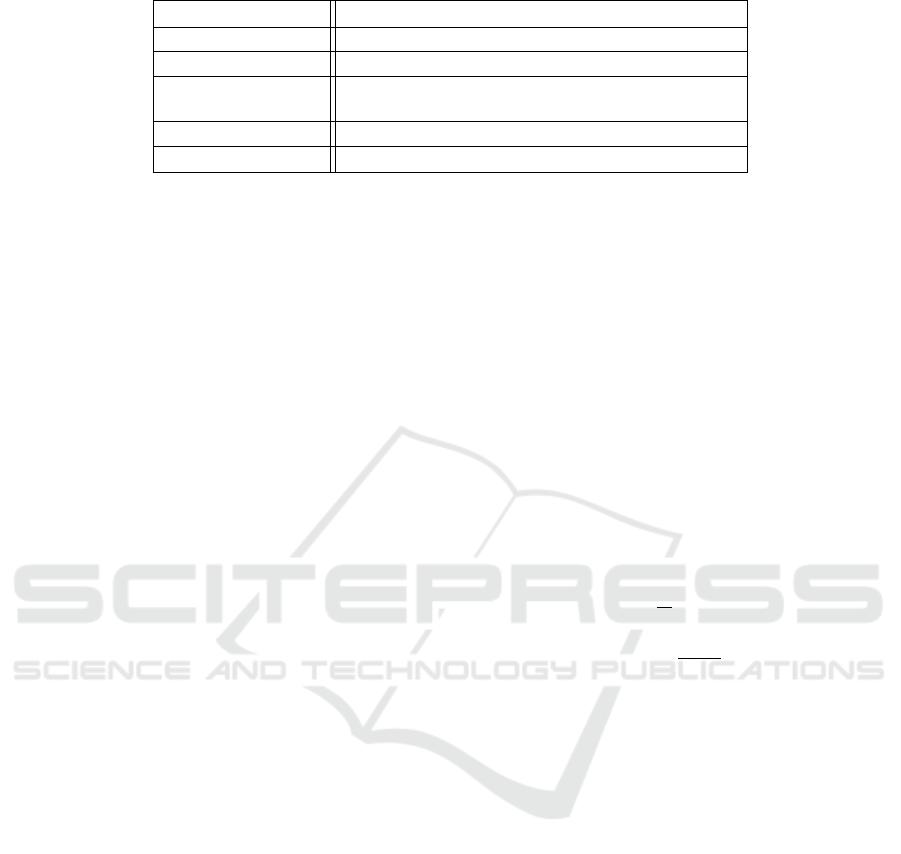

Table 3: PORTOS computation, communication, and storage costs.

Scheme PORTOS with Symbol Tags

U.Store complexity: sk PRF + sk mult + sk add

C.Prove complexity: n PRG

chal

+ 2nl mult + 2n(l + 1) add

n PRG

chal

+ 2nl exp +kl(n − k + 1) PRF

U.Verify complexity: +2n(l + 1) + kl(n − k) mult + (n −k)(kl + k + 2) add

Storage cost: 2× the size of D

Bandwidth: 2n symbols

masking coefficients have the same size as D’s sym-

bols. Hence, assuming that D cannot be compressed

more (e.g because it has been encrypted by the user),

a strategy whereby C

0

is storing the masking coeffi-

cients would effectively double the required storage

space. Moreover, a strategy whereby C

0

does not store

the content of up to n − k + 1 storage nodes and yet it

stores the corresponding masking coefficients, would

increase C

0

’s operational cost without yielding any

storage savings. Given that strategies that rely on stor-

ing the masking coefficients do not yield any gains in

terms of either storage savings or overall operational

cost, C

0

is left with two reasonable ways to deviate

from the correct protocol execution:

(i) The first one is to store the data object D en-

coded but unmasked. Although this approach

does not offer any storage savings, it signif-

icantly reduces the complexity of storing and

maintaining D at the cost of a more expensive

proof generation. More specifically, in order to

compute a PORTOS proof, C

0

has to generate 2l

masking coefficients {m

( j)

i

( j)

c

}

1≤ j≤n

1≤c≤l

.

(ii) The second way C

0

may misbehave, is by not

storing the data object

˜

D in its entirety and

hence generating the missing symbols involved

in a PORTOS challenge on-the-fly. In particu-

lar, C

0

can drop up to s(n −k + 1) symbols of

˜

D

either by not provisioning up to n − k + 1 stor-

age nodes; or by uniformly dropping symbols

from all n storage nodes {S

( j)

}

1≤ j≤n

, ensuring

that it preserves at least k symbols for each data

segment.

In order to determine the lower bound for the time

threshold T

thr

we evaluate the response time T

resp

of

an honest cloud storage provider C. Additionally, for

each type of C

0

’s malicious behavior we evaluate its

response time, and determine the upper bound for

the time threshold T

thr

as T

0

resp

= min(T

0

resp

1

, T

0

resp

2

),

where T

0

resp

1

and T

0

resp

2

denote C

0

’s response time

when it opts to keep D unmasked and delete sym-

bols of

˜

D, respectively. Concerning the evaluation of

T

0

resp

2

, we consider the most favorable scenario for

C

0

where it has to generate only one missing sym-

bol for a PORTOS challenge. Table 2 presents the

effort required by a storage node S in order to out-

put its response, for each of the scenarios described

above, together with the corresponding response time.

For the purposes of our analysis, we assume that all

storage nodes {S

( j)

}

1≤ j≤n

have a bounded capacity

of Π concurrent threads of execution, that compu-

tations —exponentiations, multiplications, additions,

etc.— require a minimum execution time, and that

T

add

T

exp

and T

add

T

mult

. Furthermore, we as-

sume that {S

( j)

}

1≤ j≤n

are connected with premium

network connections (low latency and high band-

width), and hence the communication among them

has negligible impact on C

0

response time. As given in

Table 2, Req 3 is met as long as the time threshold T

thr

is tuned such that it fulfills the following relations:

T

thr

> RTT

max

+

2l

Π

T

mult

(Lower bound),

T

thr

< RTT

max

+ T

puzzle

+

2l + 1

Π

T

mult

(Upper bound),

where RTT

max

is the worst-case RTT and T

puzzle

:= T ·

T

exp

is the time required by S to evaluate the function

maskGen. By carefully setting the puzzle difficulty

coefficient T , we can guarantee that T

resp

T

0

resp

and

RTT

max

T

0

resp

− T

resp

, and hence make our proof of

data reliability scheme robust against network jitter.

Finally, notice that PORTOS can adapt to C

0

’s com-

putational capacity as it evolves over time by tuning

T accordingly.

6 PERFORMANCE ANALYSIS

Table 3 summarizes the performance of PORTOS in

terms of computation, communication, and storage

costs. The computational effort required to verify

the response of a redundancy node {S

( j)

}

k+1≤ j≤n

is

much higher than the effort required to verify the re-

sponse of a data node {S

( j)

}

1≤ j≤k

. Namely, in addi-

tion to the 2nl exponentiations required by the func-

tion maskGenFast to generate the masking coeffi-

cients of the nl involved symbols, algorithm U.Verify

evaluates kl PRFs and k + 2(l + 1) multiplications in

SECRYPT 2019 - 16th International Conference on Security and Cryptography

184

order to verify the response of each redundancy node,

compared to the l PRFs and 2(l + 1) multiplications

it has to compute for the response of each data node.

We conclude that compared to existing erasure-code

based proof of data reliability schemes, namely (Bow-

ers et al., 2009; Chen et al., 2015; Vasilopoulos et al.,

2018), we achieve comparable computational gain

while enabling data repair at the cloud side. Neverthe-

less, we observe that storage and bandwidth costs re-

main important. In order to improve the performance

of our scheme and reduce these costs, we propose a

new version of PORTOS which namely implements

the storage efficient variant of the linearly homomor-

phic tags introduced in (Shacham, H. and Waters, B.,

2008). More specifically, instead of generating one

tag per symbol, the new algorithm U.Store computes

a linearly homomorphic tag for a data segment, com-

prising k symbols. Due to space constrains, we omit

the description of this new solution which will be in-

cluded in an extended technical report, that will be-

come available after the review process.

7 CONCLUSION

In this paper, we proposed PORTOS, a novel proof

of data reliability solution for erasure-code-based dis-

tributed cloud storage systems. PORTOS enables

users to verify the retrievability of their data, as well

as the integrity of its respective redundancy. More-

over, in PORTOS the cloud storage provider gener-

ates the required redundancy and performs data re-

pair operations without any interaction with the user,

thus conforming to the current cloud model. Thanks

to the combination of PDP with time-lock puzzles,

PORTOS provides a rational cloud storage provider

with a strong incentive to provision sufficient redun-

dancy, which is stored at rest, guaranteeing this way

a reliable storage service.

REFERENCES

Armknecht, F., Barman, L., Bohli, J.-M., and Karame, G. O.

(2016). Mirror: Enabling proofs of data replication

and retrievability in the cloud. In Proceedings of

the 25th USENIX Conference on Security Symposium,

SEC’16.

Ateniese, G., Burns, R., Curtmola, R., Herring, J., Kissner,

L., Peterson, Z., and Song, D. (2007). Provable data

possession at untrusted stores. In Proceedings of the

14th ACM Conference on Computer and Communica-

tions Security, CCS ’07.

Barsoum, A. F. and Hasan, M. A. (2012). Integrity veri-

fication of multiple data copies over untrusted cloud

servers. In Proceedings of the 12th IEEE/ACM Inter-

national Symposium on Cluster, Cloud and Grid Com-

puting, CCGRID ’12.

Barsoum, A. F. and Hasan, M. A. (2015). Provable mul-

ticopy dynamic data possession in cloud computing

systems. IEEE Transactions on Information Forensics

and Security, 10.

Blaum, M., Brady, J., Bruck, J., and Menon, J. (1994).

Evenodd: An optimal scheme for tolerating double

disk failures in raid architectures. In Proceedings of

the 21st Annual International Symposium on Com-

puter Architecture, ISCA ’94.

Bowers, K. D., Juels, A., and Oprea, A. (2009). Hail: A

high-availability and integrity layer for cloud storage.

In Proceedings of the 16th ACM Conference on Com-

puter and Communications Security, CCS ’09.

Bowers, K. D., van Dijk, M., Juels, A., Oprea, A., and

Rivest, R. L. (2011). How to tell if your cloud files

are vulnerable to drive crashes. In Proceedings of the

18th ACM Conference on Computer and Communica-

tions Security, CCS ’11.

Chen, B., Ammula, A. K., and Curtmola, R. (2015). To-

wards server-side repair for erasure coding-based dis-

tributed storage systems. In Proceedings of the 5th

ACM Conference on Data and Application Security

and Privacy, CODASPY ’15.

Chen, B. and Curtmola, R. (2013). Towards self-repairing

replication-based storage systems using untrusted

clouds. In Proceedings of the Third ACM Conference

on Data and Application Security and Privacy, CO-

DASPY ’13.

Chen, B. and Curtmola, R. (2017). Remote data integrity

checking with server-side repair. Journal of Computer

Security, 25.

Chen, B., Curtmola, R., Ateniese, G., and Burns, R. (2010).

Remote data checking for network coding-based dis-

tributed storage systems. In Proceedings of the 2010

ACM Workshop on Cloud Computing Security Work-

shop, CCSW ’10.

Curtmola, R., Khan, O., Burns, R., and Ateniese, G. (2008).

Mr-pdp: Multiple-replica provable data possession. In

Proceedings of the 28th International Conference on

Distributed Computing Systems, ICDCS ’08.

Erway, C., K

¨

upc¸

¨

u, A., Papamanthou, C., and Tamassia, R.

(2009). Dynamic provable data possession. In Pro-

ceedings of the 16th ACM Conference on Computer

and Communications Security, CCS ’09.

Etemad, M. and K

¨

upc¸

¨

u, A. (2013). Transparent, dis-

tributed, and replicated dynamic provable data posses-

sion. In Proceedings of the 11th International Confer-

ence on Applied Cryptography and Network Security,

ACNS’13.

Juels, A. and Kaliski, Jr., B. S. (2007). Pors: Proofs of

retrievability for large files. In Proceedings of the 14th

ACM Conference on Computer and Communications

Security, CCS ’07.

Le, A. and Markopoulou, A. (2012). Nc-audit: Auditing

for network coding storage. In Proceedings of Inter-

national Symposium on Network Coding, NetCod ’12.

PORTOS: Proof of Data Reliability for Real-World Distributed Outsourced Storage

185

Leontiadis, I. and Curtmola, R. (2018). Secure storage with

replication and transparent deduplication. In Proceed-

ings of the Eighth ACM Conference on Data and Ap-

plication Security and Privacy, CODASPY ’18.

Rivest, R. L., Shamir, A., and Wagner, D. A. (1996). Time-

lock puzzles and timed-release crypto. Technical re-

port, Cambridge, MA, USA.

Shacham, H. and Waters, B. (2008). Compact proofs of re-

trievability. In Proceedings of the 14th International

Conference on the Theory and Application of Cryp-

tology and Information Security: Advances in Cryp-

tology, ASIACRYPT ’08.

Suh, C. and Ramchandran, K. (2011). Exact-repair mds

code construction using interference alignment. IEEE

Trans. Inf. Theor., 57(3).

Thao, T. P. and Omote, K. (2016). Elar: Extremely

lightweight auditing and repairing for cloud security.

In Proceedings of the 32nd Annual Conference on

Computer Security Applications, ACSAC ’16.

Vasilopoulos, D., Elkhiyaoui, K., Molva, R., and Onen, M.

(2018). Poros: Proof of data reliability for outsourced

storage. In Proceedings of the 6th International Work-

shop on Security in Cloud Computing, SCC ’18.

Xing, C. and Ling, S. (2003). Coding Theory: A First

Course. Cambridge University Press, New York, NY,

USA.

SECRYPT 2019 - 16th International Conference on Security and Cryptography

186