A New Trust Architecture for Smart Vehicular Systems

Emna Jaidane¹, Mohamed Hamdi², Taoufik Aguili¹ and Tai Hoon Kim³

¹ Communication Systems Laboratory, National Engineering School of Tunis, Tunis El Manar University, Tunis, Tunisia

² El Ghazala Innovation Park, Tunis, Tunisia

³ University of Tasmania, Australia

Keywords:

Optical Flow, Timestamp, Blackbox, Authentic Traces.

Abstract:

Modern vehicles are often equipped with event recording capabilities through blackbox systems. These allow the collection of different types of traces that can be used for multiple applications, including self-driving, post-accident processing and drive monitoring. In this paper, we develop a new trust architecture that enriches existing blackbox models by adding timestamped optical flows. Our approach provides a tool to collect authentic traces of the mobile objects that interfere with the car's environment, which complements the events considered by the systems available in the literature.

1 INTRODUCTION

An intelligent vehicle is a vehicle enhanced with perception, reasoning and actuating devices that enable the monitoring and automation of driving tasks. The motivation for building intelligent vehicles is to make the driving experience safer, more economical and more efficient. Intelligent vehicles are equipped with sensors, radars, lasers and Global Positioning System (GPS) receivers that allow the vehicle to understand the road scene. Smart vehicles assist the driver by performing actions such as safe lane following, obstacle avoidance, overtaking control and avoidance of dangerous situations. Many other functionalities implemented in smart vehicles turn them into fully automated vehicles that use detailed maps to drive and navigate safely with no human interaction; such a vehicle can not only drive itself but also park on its own. Smart vehicles are also equipped with automatic traffic accident detection systems that detect accidents and, in some cases, send notifications to the concerned authorities (Broggi et al., 2008).

To realize the functions described above, the vehicle's sensors collect different types of traces from the surrounding environment. The collected data must be authentic so that the vehicle can take correct decisions. This paper addresses the security of traces collected while driving. We focus on information related to the detection of moving objects. Moving objects can influence driving behaviour, either by distracting the driver or by causing a danger that may require an active response. Mobile objects are detected by cameras installed on board vehicles. Depending on the movement of the camera relative to the objects, three cases can be distinguished:

• Static camera and moving objects: images have a fixed background, which helps in detecting moving objects.

• Moving camera and static objects: reference points for the movement can be chosen, since the objects are known to be static.

• Moving camera and moving objects: this is the case discussed in this paper; there is no static background or object that can be taken as a reference.

The camera is installed on board a vehicle and has the same view as the driver. The maximum speed of the vehicle is 90 km/h.

Information about object motion can be obtained by computing the optical flow (Agarwal et al., 2016). Optical flow is computed from the recorded scene to estimate the apparent motion. It describes the direction and rate of displacement of pixels between two consecutive images: a two-dimensional velocity vector, encoding the direction and speed of motion, is assigned to each pixel of the image. Optical flow values are saved in the vehicle blackbox. The objective of computing the optical flow is to obtain a record of the events that happen during the driving experience, because these events can be used as authentic traces for self-driving, post-accident processing and drive-monitoring applications.

Jaidane, E., Hamdi, M., Aguili, T. and Kim, T.

A New Trust Architecture for Smart Vehicular Systems.

DOI: 10.5220/0007959103810386

In Proceedings of the 16th International Joint Conference on e-Business and Telecommunications (ICETE 2019), pages 381-386

ISBN: 978-989-758-378-0

Copyright © 2019 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved


To be used as an authentic trace, optical flow values must be protected against modification from the moment they are created, and must be bound to time values so that there is a record of when events occurred. To do this, we propose to timestamp the optical flow matrices saved in the blackbox.

This paper is organized as follows. In Section 2, we present a literature review of mobile object detection techniques. In Section 3, we present optical flow basics and timestamping in vehicular networks. In Section 4, we present our approach for optical flow timestamping. We illustrate our proposal with a practical use case in Section 5, and we conclude the paper in Section 6.

2 LITERATURE REVIEW

There are three methods for tracking mobile objects: frame difference, background subtraction and optical flow. The frame difference method uses the difference between pixels to find the moving object. A frame is taken as a reference, the difference between the reference frame and the current frame is calculated, and the moving object is detected from this difference. In (Singla, 2014), the video is captured by a static camera; the difference between two consecutive frames is calculated, then converted to a grayscale image, filtered with a Gaussian low-pass filter and finally binarized. Experimental results show the motion in the binary image obtained from the difference between the two frames. In (Joshi, 2014), an algorithm is developed to calculate the speed of moving vehicles and detect those that violate speed limits. The video is captured by a static camera; tracking of the moving object and calculation of its velocity start with a segmentation step, which separates regions of the image. Segmentation is done using the frame difference algorithm.
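The frame difference pipeline described for (Singla, 2014) can be sketched in plain Python as follows. This is a minimal illustration under stated assumptions, not the cited implementation: frames are assumed to be lists of rows of (r, g, b) tuples, the grayscale weights are the common ITU-R BT.601 luma coefficients, a 3×3 binomial kernel stands in for the Gaussian low-pass filter, and the threshold of 30 is a hypothetical value.

```python
def to_gray(rgb):
    """Convert rows of (r, g, b) tuples to grayscale (ITU-R BT.601 weights)."""
    return [[0.299 * r + 0.587 * g + 0.114 * b for (r, g, b) in row] for row in rgb]


def gaussian_3x3(img):
    """Smooth with a 3x3 binomial approximation of a Gaussian; borders are replicated."""
    kernel = [[1, 2, 1], [2, 4, 2], [1, 2, 1]]
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            acc = 0.0
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    yy = min(max(y + dy, 0), h - 1)
                    xx = min(max(x + dx, 0), w - 1)
                    acc += kernel[dy + 1][dx + 1] * img[yy][xx]
            out[y][x] = acc / 16.0
    return out


def frame_difference_mask(prev_rgb, curr_rgb, threshold=30.0):
    """Difference of two consecutive frames -> grayscale -> low-pass -> binary mask."""
    diff = [
        [tuple(abs(a - b) for a, b in zip(p, c)) for p, c in zip(row_p, row_c)]
        for row_p, row_c in zip(prev_rgb, curr_rgb)
    ]
    smooth = gaussian_3x3(to_gray(diff))
    return [[1 if value > threshold else 0 for value in row] for row in smooth]


# Usage: a single pixel changes from black to white between two 5x5 frames.
black = [[(0, 0, 0)] * 5 for _ in range(5)]
moved = [row[:] for row in black]
moved[2][2] = (255, 255, 255)
mask = frame_difference_mask(black, moved)
for row in mask:
    print(row)
```

The low-pass step spreads the change to its direct neighbours and suppresses isolated noise, which is why the binarized mask marks a small blob rather than a single pixel.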

The drawback of this temporal differencing method is that it cannot detect slow changes; for this reason, the background subtraction method is used. This method is based on extracting the background, which is the region of the image without motion. The absolute difference between the background model and each instantaneous frame is used to detect the moving object. (N.Arun Prasath, 2015) uses background subtraction as a first step to estimate vehicle speed. After the video is converted into frames, the background is extracted to detect moving vehicles. Feature extraction and vehicle tracking are then performed to determine the speed. An algorithm that detects moving objects in highly secured environments is proposed in (Singh et al., 2014). Detection is done both online and offline. In offline detection, the video is divided into frames, and the moving object is detected by separating the foreground from a static background. Once the moving object is found, it is marked by a rectangular box, and when the object moves, an alarm is activated. The position of the object's centroid is calculated, and the distance and velocity of the object are determined. A system for monitoring traffic rule violations is presented in (Gupta, 2015) to detect speed limit violations and recognize registered license plate numbers. To detect speed limit violations, the velocity is calculated. The first step of the velocity calculation is vehicle tracking, which is done by background subtraction: each frame is subtracted from the background model, and the blobs found as a result of the subtraction correspond to moving objects.

Background subtraction is a widely used approach for

detecting moving objects from static cameras.
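As an illustration of background subtraction, the sketch below keeps a running-average background model, flags pixels whose absolute difference from the model exceeds a threshold, and computes the centroid of the foreground pixels, as in the centroid-based approaches above. The running-average update, the learning rate `alpha` and the threshold are assumptions chosen for the example; the cited works do not necessarily use this particular background model. Frames are assumed to be grayscale lists of rows.

```python
def update_background(background, frame, alpha=0.05):
    """Running average: B <- (1 - alpha) * B + alpha * F."""
    return [
        [(1.0 - alpha) * b + alpha * f for b, f in zip(row_b, row_f)]
        for row_b, row_f in zip(background, frame)
    ]


def foreground_mask(background, frame, threshold=25.0):
    """1 where |F - B| exceeds the threshold (moving object), 0 elsewhere."""
    return [
        [1 if abs(f - b) > threshold else 0 for b, f in zip(row_b, row_f)]
        for row_b, row_f in zip(background, frame)
    ]


def centroid(mask):
    """(x, y) centroid of the foreground pixels, or None if the mask is empty."""
    points = [(x, y) for y, row in enumerate(mask) for x, v in enumerate(row) if v]
    if not points:
        return None
    n = len(points)
    return (sum(p[0] for p in points) / n, sum(p[1] for p in points) / n)


# Usage: a flat background and a frame containing a small bright object.
background = [[10.0] * 6 for _ in range(4)]
frame = [row[:] for row in background]
frame[1][3] = frame[1][4] = 200.0
mask = foreground_mask(background, frame)
print(centroid(mask))
background = update_background(background, frame)  # slowly absorb scene changes
```

A small `alpha` keeps briefly passing objects out of the background model while still following slow illumination changes, the kind of slow change that plain frame differencing misses.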

The optical flow technique is also used for tracking moving objects. It provides the apparent change of a moving object's location between two frames, and it isolates the moving objects from static background objects. The optical flow estimate is represented by a two-dimensional vector assigned to each pixel of the image, which gives the velocity of each point of an image sequence.

Optical flow has been used in many works for mobile object tracking. (Garcia-Dopico et al., 2014) presents a system for searching and detecting moving objects in a sequence of images captured by a camera installed in a vehicle. The system is based on optical flow analysis to detect and identify moving objects as perceived by a driver. The method consists of three stages. In the first stage, the optical flow is calculated for each image of the sequence as a first estimate of the apparent motion. In the second stage, two segmentation processes are applied: one to the optical flow itself and one to the images of the sequence. In the last stage, the results of these two segmentation processes are combined to obtain the movement of the objects present in the sequence, identifying its direction and magnitude.

(Indu et al., 2011) proposes a method to estimate vehicle speed from video sequences acquired with a fixed-mounted camera. The vehicle motion is detected and tracked along the frames using an optical flow algorithm. The distance traveled by the vehicle is calculated from the movement of its centroid over the frames, and the speed of the vehicle is then estimated.
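The centroid-based speed estimate reduces to simple arithmetic once a pixel-to-metre scale is known. In the sketch below, `metres_per_pixel` is a hypothetical calibration constant (the calibration procedure of the cited work is not described here) assumed constant over the region of interest, and the per-frame centroid positions are assumed to come from the tracker. With 10 px of displacement per frame, 0.05 m per pixel and 25 frames/s, the speed is 10 × 0.05 × 25 = 12.5 m/s, i.e. 45 km/h.

```python
import math


def estimate_speed_kmh(centroids, fps, metres_per_pixel):
    """Average speed of a tracked object from its centroid in consecutive frames.

    centroids: list of (x, y) pixel positions, one per frame.
    metres_per_pixel: hypothetical calibration factor mapping image distance
    to ground distance.
    """
    if len(centroids) < 2:
        raise ValueError("at least two centroids are needed")
    # Path length in pixels, summed over consecutive frame pairs
    pixels = sum(math.dist(a, b) for a, b in zip(centroids, centroids[1:]))
    metres = pixels * metres_per_pixel
    seconds = (len(centroids) - 1) / fps
    return metres / seconds * 3.6  # m/s -> km/h


# Usage: the centroid moves 10 px per frame at 25 frames/s with 0.05 m per pixel.
print(estimate_speed_kmh([(10, 20), (10, 30), (10, 40)], fps=25, metres_per_pixel=0.05))
```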

In many works, optical flow was combined with other techniques. In (Guo-Wu Yuan, 2014), optical flow was combined with the frame difference method: the optical flow of Harris corners is calculated, and the frame difference method is used to localize the complete moving area. In (DharaTrambadia), optical flow was used with background subtraction: tracking of moving objects or persons is done using Adaptive Gaussian Mixture Modeling and optical flow. Adaptive Gaussian Mixture Modeling handles complex environments but is not a complete object tracking method, so it is combined with optical flow, which is suited to simple backgrounds. The foreground is extracted using Gaussian Mixture Modeling techniques.

WINSYS 2019 - 16th International Conference on Wireless Networks and Mobile Systems

3 OPTICAL FLOW AND TIMESTAMPING

This section describes the optical flow method and the need for timestamping optical flow in the vehicular context.

3.1 Optical Flow and Smart Cars

Optical flow gives the displacement of each pixel of an image relative to the previous image. This displacement vector is called the optical flow vector. It is used in motion segmentation to track moving objects. Moving objects can be found in video frames by computing the optical flow: if its value is significant, the object is moving; if it is very small, the object is static. In 1981, two differential optical flow algorithms were proposed, one by Horn and Schunck and one by Lucas and Kanade. Horn's algorithm assumes that the motion field is the 2D projection of the 3D motion of surfaces, and that the optical flow is the apparent motion of the brightness patterns in the image. The flow is formulated as a global energy functional, which is then minimized:

$$E = \iint \left[ (I_x u + I_y v + I_t)^2 + \alpha^2 \left( \|\nabla u\|^2 + \|\nabla v\|^2 \right) \right] dx\,dy$$

where I

x

, I

y

and I

t

are the derivatives of the image

intensity values along the x, y and time dimensions

respectively.

−→

V = [u(x, y), v(x, y)]

T

is the optical flow vector, and the parameter α is

a regularization constant. Larger values of α lead

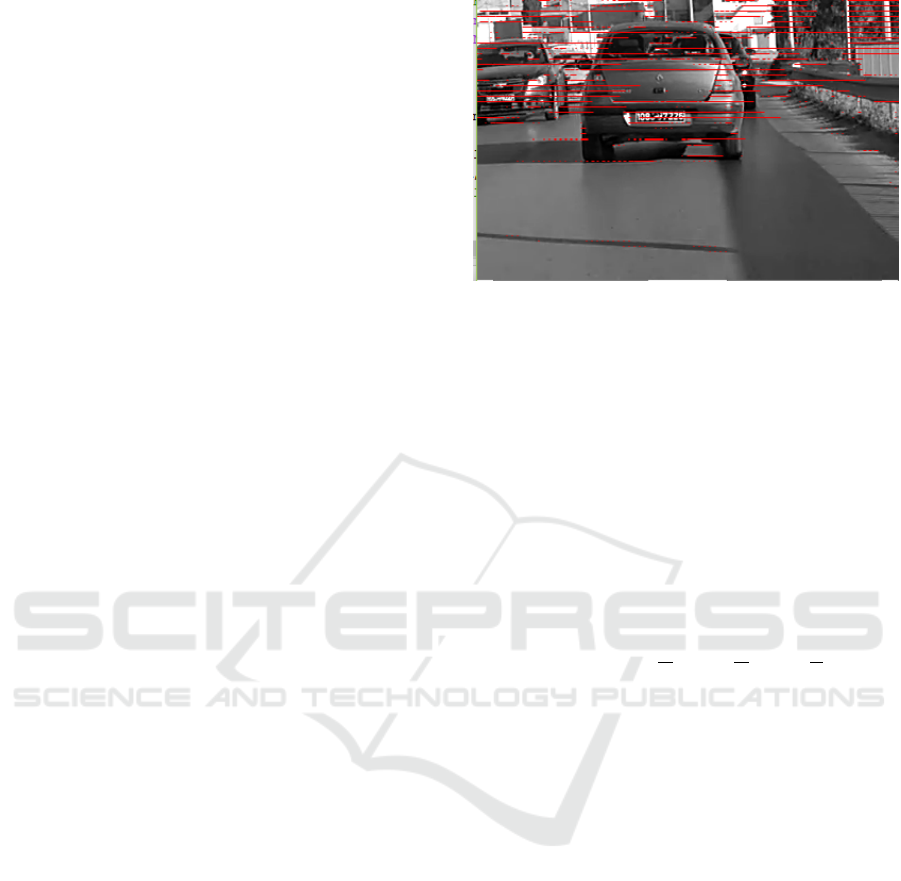

to a smoother flow. Figure 1 represents motion vec-

tors calculated using Horn and Schunck method for

video frames recorded with a static camera installed

on board a moving vehicle. Red lines represent opti-

cal flow vectors.

Figure 1: Optical flow vectors calculated with Horn and

Schunck Method.
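A minimal pure-Python sketch of the Horn and Schunck scheme that minimizes $E$: each iteration replaces $(u, v)$ with its neighbourhood average $(\bar{u}, \bar{v})$ corrected along the image gradient, $u = \bar{u} - I_x (I_x \bar{u} + I_y \bar{v} + I_t)/(\alpha^2 + I_x^2 + I_y^2)$, and similarly for $v$. Simplifications (assumptions, not the authors' implementation): frames are small grayscale lists of rows, derivatives use central differences with replicated borders, the neighbourhood average uses the four direct neighbours instead of Horn and Schunck's weighted 3×3 kernel, and the 0.5 pixel/frame threshold in the hypothetical `moving_mask` helper only illustrates the "significant versus very small" test described above.

```python
import math


def _clamp(i, n):
    return min(max(i, 0), n - 1)


def horn_schunck(frame1, frame2, alpha=1.0, iterations=100):
    """Iterative Horn-Schunck estimate of (u, v) for two grayscale frames."""
    h, w = len(frame1), len(frame1[0])

    def px(img, y, x):
        # Pixel access with replicated borders
        return img[_clamp(y, h)][_clamp(x, w)]

    # I_x, I_y: central differences averaged over both frames; I_t: frame difference
    ix = [[(px(frame1, y, x + 1) - px(frame1, y, x - 1)
            + px(frame2, y, x + 1) - px(frame2, y, x - 1)) / 4.0
           for x in range(w)] for y in range(h)]
    iy = [[(px(frame1, y + 1, x) - px(frame1, y - 1, x)
            + px(frame2, y + 1, x) - px(frame2, y - 1, x)) / 4.0
           for x in range(w)] for y in range(h)]
    it = [[frame2[y][x] - frame1[y][x] for x in range(w)] for y in range(h)]

    u = [[0.0] * w for _ in range(h)]
    v = [[0.0] * w for _ in range(h)]
    for _ in range(iterations):
        new_u = [[0.0] * w for _ in range(h)]
        new_v = [[0.0] * w for _ in range(h)]
        for y in range(h):
            for x in range(w):
                # 4-neighbour averages of the current flow estimate
                u_bar = (px(u, y, x - 1) + px(u, y, x + 1) + px(u, y - 1, x) + px(u, y + 1, x)) / 4.0
                v_bar = (px(v, y, x - 1) + px(v, y, x + 1) + px(v, y - 1, x) + px(v, y + 1, x)) / 4.0
                gx, gy, gt = ix[y][x], iy[y][x], it[y][x]
                # Jacobi-style update derived from the minimization of E
                common = (gx * u_bar + gy * v_bar + gt) / (alpha ** 2 + gx * gx + gy * gy)
                new_u[y][x] = u_bar - gx * common
                new_v[y][x] = v_bar - gy * common
        u, v = new_u, new_v
    return u, v


def moving_mask(u, v, threshold=0.5):
    """1 where the flow magnitude is significant (moving), 0 where it is small (static)."""
    return [[1 if math.hypot(a, b) > threshold else 0 for a, b in zip(ru, rv)]
            for ru, rv in zip(u, v)]


# Usage: an intensity ramp shifted one pixel to the right between the two frames.
frame1 = [[float(x) for x in range(7)] for _ in range(7)]
frame2 = [[float(x - 1) for x in range(7)] for _ in range(7)]
u, v = horn_schunck(frame1, frame2, alpha=1.0, iterations=200)
print(round(u[3][3], 2), round(v[3][3], 2), moving_mask(u, v)[3][3])
```

The ramp has no vertical gradient, so $v$ stays at zero and only $u$ becomes positive, matching the rightward shift; a larger `alpha` would spread the estimate more uniformly across the image.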

The Lucas-Kanade approach assumes that the flow remains constant in a local neighborhood of the pixel under consideration, and solves the basic optical flow equations for all the pixels in that neighborhood by the least squares method. The Lucas-Kanade algorithm computes the optical flow from the spatio-temporal derivatives of the image intensity. It assumes that the intensity of a moving point remains constant between the frames of the sequence.

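Writing this brightness constancy assumption and expanding it with a first-order Taylor series gives:

$$I(x, y, t) = I(x + dx, y + dy, t + dt)$$

$$I(x + dx, y + dy, t + dt) \approx I(x, y, t) + \frac{\partial I}{\partial x}dx + \frac{\partial I}{\partial y}dy + \frac{\partial I}{\partial t}dt$$

Combining the two lines and dividing by $dt$, with $u = dx/dt$ and $v = dy/dt$, yields the optical flow equation:

$$I_x u + I_y v + I_t = 0$$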

The optical flow equation can be assumed to hold for all the pixels in a window centered at the point $p(x, y)$. Considering a window of $n \times n$ pixels, i.e. $m = n^2$ pixels:

$$I_x(p_1)u + I_y(p_1)v = -I_t(p_1)$$
$$I_x(p_2)u + I_y(p_2)v = -I_t(p_2)$$
$$\vdots$$
$$I_x(p_m)u + I_y(p_m)v = -I_t(p_m)$$

where $p_1, p_2, \dots, p_m$ are the pixels within the window, and $I_x(p_i)$, $I_y(p_i)$ and $I_t(p_i)$ are the partial derivatives of the image $I$ with respect to $x$, $y$ and $t$, evaluated at point $p_i$ and the current time. This overdetermined system is solved by least squares: writing it as $A\vec{V} = b$, the estimate is $\vec{V} = (A^T A)^{-1} A^T b$.
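The least-squares solution for one window can be written out explicitly, since $A^T A$ is only 2×2. The sketch below assumes the derivative values $I_x(p_i)$, $I_y(p_i)$ and $I_t(p_i)$ for the $m$ window pixels have already been computed (for example by finite differences); `lucas_kanade_window` and its `eps` tolerance are illustrative names, not an existing API.

```python
def lucas_kanade_window(ix, iy, it, eps=1e-9):
    """Least-squares solution of I_x(p_i) u + I_y(p_i) v = -I_t(p_i), i = 1..m.

    Solves the 2x2 normal equations (A^T A) V = A^T b with A = [I_x I_y], b = -I_t.
    """
    sxx = sum(a * a for a in ix)
    sxy = sum(a * b for a, b in zip(ix, iy))
    syy = sum(b * b for b in iy)
    sxt = sum(a * c for a, c in zip(ix, it))
    syt = sum(b * c for b, c in zip(iy, it))
    det = sxx * syy - sxy * sxy
    if abs(det) < eps:
        # All gradients parallel or zero: the flow is undetermined (aperture problem)
        raise ValueError("ill-conditioned window")
    # Cramer's rule on [[sxx, sxy], [sxy, syy]] [u, v]^T = [-sxt, -syt]^T
    u = (-sxt * syy + sxy * syt) / det
    v = (-sxx * syt + sxy * sxt) / det
    return u, v


# Usage: derivatives generated from a known flow (u, v) = (2, -1) over four pixels.
ix = [1.0, 0.0, 1.0, 2.0]
iy = [0.0, 1.0, 1.0, -1.0]
it = [-(a * 2.0 + b * -1.0) for a, b in zip(ix, iy)]
print(lucas_kanade_window(ix, iy, it))
```

The determinant test is where the local-constancy assumption can fail: a window containing only a straight edge gives parallel gradients, so only the flow component across the edge is observable.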

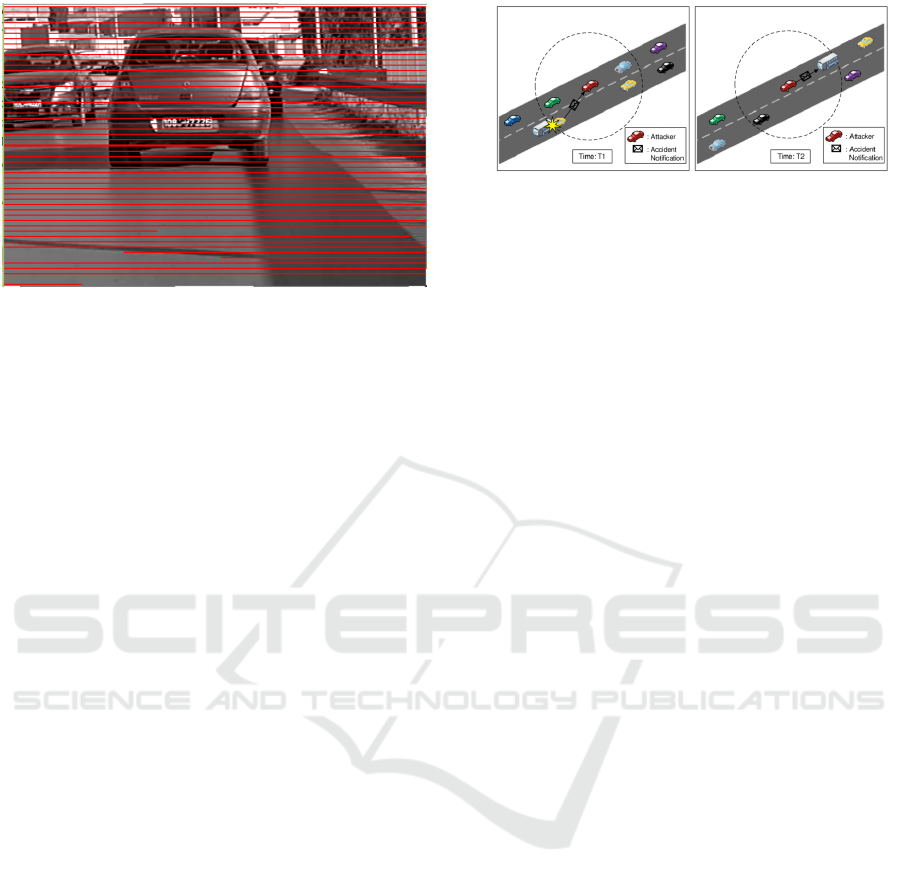

Figure 2 represents motion vectors calculated using the Lucas-Kanade method for video frames recorded with a camera fixed on board a moving vehicle. Red lines represent optical flow vectors.


Figure 2: Optical flow vectors calculated with the Lucas-Kanade method.

3.2 Timestamping Architecture

VANETs are a key technology for enabling safety and infotainment applications for smart vehicles. Connecting smart vehicles raises several issues, among which security issues are important. Various techniques are used to ensure security in VANETs; below we cite a few cryptographic techniques:

• Public Key Infrastructure (PKI): it is used for user authentication. It is based on a public key, known by the other users of the network and used for message encryption, and a private key, known only by its owner and used for message decryption. A PKI relies on a Certification Authority (CA) in charge of issuing digitally signed certificates and providing public and private keys to ensure user authentication.

• Digital signature: a vehicle encrypts a message with the receiver's public key (to ensure data protection) and then digitally signs it with its own private key (to ensure data authentication).

• Timestamp series: this technique is used to prevent Sybil attacks in VANETs. It is practically impossible for two different vehicles to pass through the same RSUs at exactly the same times and thus hold the same timestamps. If a vehicle sends messages carrying timestamps issued by the RSUs it has passed, and these messages have the same timestamp series as other messages, a Sybil attack is detected.
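The timestamp-series check can be sketched as a grouping problem: messages claiming different identities but carrying identical series of RSU-issued timestamps are flagged. This is a minimal sketch, assuming each received message exposes its claimed identity and the list of (RSU, timestamp) pairs it has collected; the `min_length` cut-off is a hypothetical parameter, and exact equality stands in for whatever tolerance a real scheme would apply.

```python
from collections import defaultdict


def detect_sybil(series_by_identity, min_length=2):
    """Group claimed identities whose RSU timestamp series are identical.

    series_by_identity: {identity: [(rsu_id, timestamp), ...]} collected from
    received messages. Series shorter than min_length are ignored (assumption:
    two honest vehicles may legitimately share a single RSU timestamp).
    Returns a list of sets of identities suspected to belong to one Sybil node.
    """
    groups = defaultdict(set)
    for identity, series in series_by_identity.items():
        if len(series) >= min_length:
            groups[tuple(series)].add(identity)
    return [ids for ids in groups.values() if len(ids) > 1]


observed = {
    "veh-A": [("rsu-1", 100), ("rsu-2", 160)],
    "veh-B": [("rsu-1", 100), ("rsu-2", 160)],  # same series as veh-A
    "veh-C": [("rsu-1", 101), ("rsu-2", 172)],
}
print(detect_sybil(observed))
```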

Generally, timestamps are used in VANETs to ensure message freshness. According to (Galaviz-Mosqueda et al., 2017), packets sent in VANETs are commonly composed of:

$$\mathrm{PACKET} = (M, \mathrm{sig}_n(M, T), \mathrm{Cert}_n)$$

$\mathrm{sig}_n$: digital signature of node $n$ over the concatenation of a message $M$ and a timestamp $T$, which ensures message freshness and prevents replay attacks.

$\mathrm{Cert}_n$: certificate of node $n$.

The receiving node can verify the packet's authenticity by verifying the signature $\mathrm{sig}_n$.

Figure 3 (Sakiz and Sen, 2017) illustrates a replay attack, in which an accident notification message generated and sent at $T_1$ is sent a second time at $T_2$.

Figure 3: Replay attack scenario.
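The packet format and the replay scenario can be illustrated with a receiver that checks both authenticity and freshness. This sketch makes explicit assumptions: Python's standard library offers no public-key signatures, so HMAC-SHA256 with a shared demo key stands in for $\mathrm{sig}_n$ (a real VANET would use a public-key signature verified with the key in $\mathrm{Cert}_n$); $T$ is carried in the clear next to $M$ so the receiver can recompute the signed data; and the 2-second freshness window is hypothetical.

```python
import hashlib
import hmac

# HMAC-SHA256 stands in for the public-key signature sig_n (assumption: the
# standard library has no public-key signature primitive).


def sign(key, message, timestamp):
    """Tag over the concatenation M || T."""
    data = message + b"|" + repr(timestamp).encode()
    return hmac.new(key, data, hashlib.sha256).digest()


def make_packet(key, message, timestamp, cert):
    # T travels with the packet so that the receiver can recompute sig_n(M, T)
    return (message, timestamp, sign(key, message, timestamp), cert)


class Receiver:
    def __init__(self, key, window=2.0):
        self.key = key
        self.window = window  # hypothetical freshness tolerance, in seconds
        self.seen = set()     # tags already accepted

    def accept(self, packet, now):
        message, timestamp, tag, _cert = packet
        if not hmac.compare_digest(tag, sign(self.key, message, timestamp)):
            return False      # forged or modified packet
        if abs(now - timestamp) > self.window:
            return False      # stale: e.g. a message from T1 replayed at T2
        if tag in self.seen:
            return False      # exact duplicate received twice
        self.seen.add(tag)
        return True


key = b"shared-demo-key"
alert = make_packet(key, b"accident notification", 1000.0, cert=b"cert-of-node-n")
rsu = Receiver(key)
print(rsu.accept(alert, now=1000.4))            # fresh packet
print(rsu.accept(alert, now=1001.0))            # duplicate within the window
print(Receiver(key).accept(alert, now=1300.0))  # replayed at a later time T2
```

Rejecting stale timestamps blocks the replay of Figure 3 at $T_2$, while the set of seen tags catches duplicates that arrive within the window; a practical receiver would also prune `seen` once entries leave the window.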

4 PROPOSED APPROACH

(Wolfgang and Delp, 1998) presents an overview of image security techniques with applications in multimedia systems. Images can be protected against illegal use with encryption, authentication and timestamps. In this paper, we present a scheme for timestamping the optical flow matrix. Let $OP$ be the data to be timestamped, and $Y = H(OP)$ the hash of $OP$. A request named $R$ is sent to a third-party timestamping service (TSS) to ask for a timestamp. The syntax of the request is as follows:

$$R_n = (Y_n, t_n)$$

The TSS then produces a certificate $C_n$:

$$C_n = (n, t_n, I_n, Y_n; L_n)$$

$$L_n = (t_{n-1}, I_{n-1}, Y_{n-1}, H(L_{n-1}))$$

• $n$: the request number

• $I_n$: the owner's identification string

• $t_n$: the time of the request

• $L_n$: the linking string, i.e. the concatenation of the previous request's time, identification string and document hash, and the hash of the previous linking string.

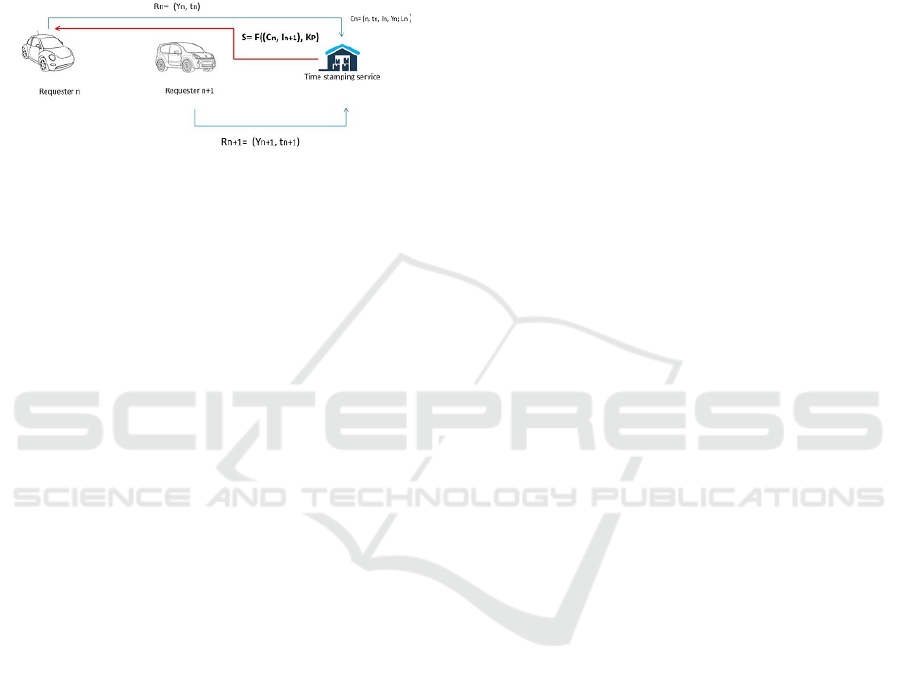

The TSS waits for the next request, whose owner is identified by $I_{n+1}$, then concatenates $I_{n+1}$ to $C_n$. The timestamp returned to the user is:

$$S = F((C_n, I_{n+1}), K_P)$$

• $F$: the encryption function, applied with the TSS private key (i.e. a signature)

• $K_P$: the private key of the TSS
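The request, certificate, linking string and deferred stamp can be sketched as a small in-memory service. Assumptions, not part of the scheme as published: $H$ is SHA-256 over a canonical JSON encoding, the optical flow matrix is a list of rows of [u, v] pairs, identification strings are plain strings, the linking string covers only the previous request ($N = 1$), and an HMAC keyed with $K_P$ stands in for the signature function $F$ (a deployed TSS would use a public-key signature so that anyone can verify it). `LinkedTSS`, `hash_flow` and `digest` are hypothetical names.

```python
import hashlib
import hmac
import json


def encode(obj):
    """Canonical byte encoding, so hashes and tags are reproducible."""
    return json.dumps(obj, sort_keys=True, separators=(",", ":")).encode()


def digest(obj):
    """H(.) instantiated as SHA-256 over the canonical encoding (assumption)."""
    return hashlib.sha256(encode(obj)).hexdigest()


def hash_flow(flow):
    """Y = H(OP) for an optical flow matrix given as rows of [u, v] pairs."""
    return digest(flow)


class LinkedTSS:
    """Sketch of a linked timestamping service with linking depth 1."""

    def __init__(self, private_key):
        self._key = private_key  # K_P; HMAC stands in for the signature F
        self._n = 0
        self._prev = None        # (t_{n-1}, I_{n-1}, Y_{n-1}, L_{n-1})
        self._pending = None     # C_{n-1}, waiting for the next requester

    def request(self, identity, y, t):
        """Handle R_n = (Y_n, t_n) from requester I_n.

        Returns the stamp S = F((C_{n-1}, I_n), K_P) of the previous request,
        which can only be issued now that I_n is known (None for the first one).
        """
        self._n += 1
        if self._prev is None:
            link = None          # first request: nothing to link to
        else:
            t_prev, id_prev, y_prev, link_prev = self._prev
            link = [t_prev, id_prev, y_prev, digest(link_prev)]
        cert = [self._n, t, identity, y, link]  # C_n = (n, t_n, I_n, Y_n; L_n)
        stamp = None
        if self._pending is not None:
            payload = [self._pending, identity]
            tag = hmac.new(self._key, encode(payload), hashlib.sha256).hexdigest()
            stamp = {"payload": payload, "sig": tag}
        self._pending = cert
        self._prev = (t, identity, y, link)
        return stamp


tss = LinkedTSS(b"tss-private-key")
y_green = hash_flow([[[0.0, 1.5], [0.2, 0.0]]])
y_red = hash_flow([[[0.4, -0.3], [0.0, 0.0]]])
print(tss.request("green-vehicle", y_green, 10.0))  # stamp deferred
s_green = tss.request("red-vehicle", y_red, 11.0)   # stamp for the green request
print(s_green["payload"][0][:3], s_green["payload"][1])
```

Deferring each stamp until the next request arrives is what binds $C_n$ to $I_{n+1}$: the TSS cannot back-date a certificate without also rewriting the stamp already handed to the following requester.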

A party verifying a timestamp first authenticates the TSS signature on the stamp. It can then ask the requester $I_{n-1}$ (embedded in $L_n$) to show her timestamp. The quantities in $L_n$ must match those in $C_{n-1}$, and the hash of $L_{n-1}$ must match the value $H(L_{n-1})$ present in $L_n$. If any value does not match, one of the two timestamps is false. In practice, the linking string contains information from the last N requests. This distributes the authentication responsibility, since any one of the N requesters may verify the timestamp. The proposed process is illustrated by Figure 4.

Figure 4: Proposed process of timestamping optical flow.
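The consistency check between two consecutive certificates can be sketched as follows, assuming certificates are represented as [n, t, I, Y, L] lists, $H$ is SHA-256 over a canonical JSON encoding, the linking string covers a single previous request ($N = 1$), and the TSS signature on each stamp has already been verified. `links_match` is a hypothetical helper name.

```python
import hashlib
import json


def encode(obj):
    """Canonical byte encoding (assumption)."""
    return json.dumps(obj, sort_keys=True, separators=(",", ":")).encode()


def digest(obj):
    """H(.) as SHA-256 of the canonical encoding (assumption)."""
    return hashlib.sha256(encode(obj)).hexdigest()


def links_match(cert_prev, cert_n):
    """Check C_n against C_{n-1}, both given as [n, t, I, Y, L].

    L_n must repeat (t_{n-1}, I_{n-1}, Y_{n-1}) and carry H(L_{n-1}).
    A False result means one of the two timestamps is false.
    """
    n_prev, t_prev, id_prev, y_prev, link_prev = cert_prev
    n, _t, _identity, _y, link = cert_n
    if n != n_prev + 1 or link is None:
        return False
    return link == [t_prev, id_prev, y_prev, digest(link_prev)]


c_green = [1, 10.0, "green-vehicle", "a1" * 32, None]
c_red = [2, 11.0, "red-vehicle", "b2" * 32, [10.0, "green-vehicle", "a1" * 32, digest(None)]]
print(links_match(c_green, c_red))          # consistent pair
tampered = c_green[:3] + ["ff" * 32, None]  # green hash altered after the fact
print(links_match(tampered, c_red))
```

With $N > 1$, the same comparison would be repeated against each of the N earlier certificates, which is what lets any of those requesters act as a witness.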

5 CASE STUDY

Traffic accidents are confusing events. How they occur, who or what caused them, and why they occurred are facts that the police must determine. The accident investigator plays an important role in investigating and reporting on the factors that contributed to an accident. The investigator's report is important to police services, traffic enforcement, vehicle owners and insurance companies. The accident investigator uses a wide variety of tools at the accident scene, and vehicle technologies also assist the investigator in establishing the causes of the accident. The vehicle blackbox can provide a lot of information about the accident: amount of travel prior to the accident, driving style prior to the accident, speeds achieved and maintained, unauthorised stops, speed details prior to the accident, significant braking, etc. It also records the date, time, location, speed, distance and engine RPM. We take the example of an accident after which investigators use the data saved in the blackbox. The image with optical flow vectors is used to mark the detected object in the image or video frame. The ability to verify the integrity of files is important for investigators: especially if files are to be used as evidence in court, the ability to prove that a file existed in a certain state at a specific time and has not been altered since is crucial.

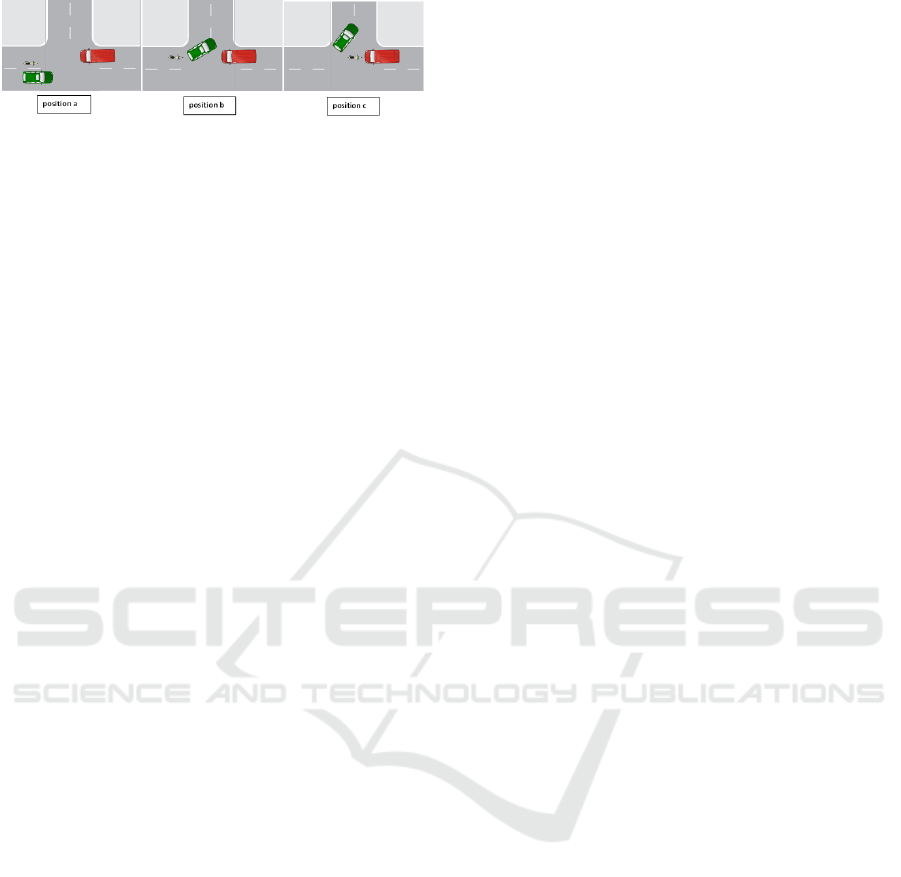

We propose the accident scenario illustrated by Figure 5. The motorcycle tries to overtake the green vehicle, which changes direction and turns left (positions a and b). The result was a crash with the red vehicle (position c), whose camera captured the motorcycle while it was attempting the overtaking maneuver. The red vehicle uses a blackbox system to record the events that happen during the driving experience. Mobile objects are tracked using the optical flow technique, and the optical flow matrices are stored in the blackbox. Requests for timestamping are sent according to the steps described in Section 4. The green vehicle and the red vehicle send the following timestamping requests:

$$R_{G_n} = (Y_{G_n}, t_n)$$

$$R_{R_{n+1}} = (Y_{R_{n+1}}, t_{n+1})$$

Certificates are produced by the timestamping service:

$$C_{G_n} = (n, t_n, I_{G_n}, Y_{G_n}; L_n)$$

$$C_{R_{n+1}} = (n+1, t_{n+1}, I_{R_{n+1}}, Y_{R_{n+1}}; L_{n+1})$$

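The timestamps returned to the vehicles are:

$$S_G = F((C_{G_n}, I_{R_{n+1}}), K_P)$$

$$S_R = F((C_{R_{n+1}}, I_{n+2}), K_P)$$

where $I_{n+2}$ is the identification string of the owner of the next request, $n+2$.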

• $n$: the request number sent by the green vehicle

• $n+1$: the request number sent by the red vehicle

• $I_{R_{n+1}}$: the red vehicle's identification string

• $I_{G_n}$: the green vehicle's identification string

• $R_{G_n}$: request sent by the green vehicle

• $R_{R_{n+1}}$: request sent by the red vehicle

• $Y_{R_{n+1}}$: hash of the optical flow matrix recorded by the red vehicle

• $Y_{G_n}$: hash of the optical flow matrix recorded by the green vehicle

• $t_n$: the time of the request sent by the green vehicle

• $t_{n+1}$: the time of the request sent by the red vehicle

• $L_n$, $L_{n+1}$: linking strings

• $C_{G_n}$: certificate of the green vehicle

• $C_{R_{n+1}}$: certificate of the red vehicle

• $S_G$: timestamp returned to the green vehicle

• $S_R$: timestamp returned to the red vehicle

The motorcycle tracking is timestamped by the timestamps $S_G$ and $S_R$ described above. When analyzing the data saved in the red vehicle's blackbox, the investigator can confirm that the crash occurred after the green vehicle turned left while the motorcycle was trying to overtake it, based on the optical flow saved in the red vehicle's blackbox system, which indicates the positions of the green vehicle and the motorcycle.


Figure 5: Accident scenario.

6 CONCLUSIONS

In this paper, we proposed to timestamp the optical flow used for mobile object tracking so that it can serve as an authentic trace for smart vehicle applications. We described a timestamping process and illustrated it with a post-accident investigation use case.

REFERENCES

Agarwal, A., Gupta, S., and Singh, D. (2016). Review of optical flow technique for moving object detection. pages 409–413.

Broggi, A., Zelinsky, A., Parent, M., and Thorpe, C. E. (2008). Intelligent Vehicles, pages 1175–1198.

DharaTrambadia, ChintanVarnagar, P. Moving object detection and tracking using hybrid approach in real time to improve accuracy. International Journal of Innovative Research in Computer and Communication Engineering, ISSN (Online): 2320–9801, ISSN (Print): 2320–9798.

Galaviz-Mosqueda, A., Morales-Sandoval, M., Villarreal-Reyes, S., Galeana-Zapién, H., Rivera-Rodríguez, R., and Alonso-Arevalo, M. A. (2017). Multi-hop broadcast message dissemination in vehicular ad hoc networks: A security perspective review. International Journal of Distributed Sensor Networks, 13(11):1550147717741263.

Garcia-Dopico, A., Pedraza, J. L., Nieto, M., Pérez, A., Rodríguez, S., and Osendi, L. (2014). Locating moving objects in car-driving sequences. EURASIP Journal on Image and Video Processing, 2014(1):24.

Guo-Wu Yuan, Jian Gong, M.-N. D. H. Z. D. X. (2014). A moving objects detection algorithm based on three frame difference and sparse optical flow. Information Technology Journal, 13:1863–1867.

Gupta, T. P. (2015). Vehicle detention using high accuracy edge detection. Int. J. Adv. Eng, 1(1):28–32.

Indu, S., Gupta, M., and Bhattacharyya, A. (2011). Vehicle tracking and speed estimation using optical flow method. Int. J. Engineering Science and Technology, 3(1):429–434.

Joshi, S. (2014). Vehicle speed determination using image processing. In International Workshop on Computational Intelligence (IWCI).

N.Arun Prasath, G.Sivakumar, N. (2015). Vehicle speed measurement and number plate detection using real time embedded system. In Network and Complex Systems, ISSN 2224-610X (Paper) ISSN 2225-0603 (Online) Vol.5, No.3.

Sakiz, F. and Sen, S. (2017). A survey of attacks and detection mechanisms on intelligent transportation systems: VANETs and IoV. Ad Hoc Networks, 61:33–50.

Singh, T., Sanju, S., and Vijay, B. (2014). A new algorithm designing for detection of moving objects in video. International Journal of Computer Applications, 96(2).

Singla, N. (2014). Motion detection based on frame difference method. International Journal of Information & Computation Technology, 4(15):1559–1565.

Wolfgang, R. B. and Delp, E. J. (1998). Overview of image security techniques with applications in multimedia systems. In Multimedia Networks: Security, Displays, Terminals, and Gateways, Vol. 3228. International Society for Optics and Photonics.
