Evolutionary Techniques in Lattice Sieving Algorithms

Thijs Laarhovenᵃ

Eindhoven University of Technology, The Netherlands

Keywords: Evolutionary Algorithms, Applications, Cryptography, Lattice Sieving.

Abstract: Lattice-based cryptography has recently emerged as a prominent candidate for secure communication in the quantum age. Its security relies on the hardness of certain lattice problems, and on the inability of known lattice algorithms, such as lattice sieving, to solve these problems efficiently. In this paper we investigate the similarities between lattice sieving and evolutionary algorithms, how various improvements to lattice sieving can be viewed as applications of known techniques from evolutionary computation, and how other evolutionary techniques can benefit lattice sieving in practice.

1 INTRODUCTION

Cryptography. To protect digital communication between two parties against eavesdroppers, techniques from the field of cryptography are widely used, ensuring that only the legitimate parties are able to extract the contents of the exchanged messages. Although most currently deployed systems, such as RSA encryption (Rivest et al., 1978) and Diffie–Hellman key exchange (Diffie and Hellman, 1976), are considered reasonably efficient and secure against "classical" adversaries, a breakthrough work of Shor (Shor, 1997) demonstrated that most existing solutions become completely insecure once quantum computers become a reality. Even if building an efficient quantum computer may still be decades away, nothing stops adversaries from storing encrypted communication with classical technologies now, and decrypting the contents once a quantum computer has been built. Classical cryptographic methods therefore pose a risk even today, and academia and industry worldwide are increasingly shifting their attention towards new, quantum-proof cryptographic primitives (ETSI, 2019; NIST, 2017).

Lattice-based Cryptography and Cryptanalysis. Among the proposed methods for quantum-safe cryptography, lattice-based cryptography has established itself as a leading candidate, offering versatile, advanced, and efficient cryptographic designs with small key sizes (Regev, 2005; Micciancio and Regev, 2009). Its security relies on the hardness of certain lattice problems, such as the shortest and closest vector problems, and a crucial aspect of designing lattice-based cryptographic primitives is cryptanalysis: analyzing the security of these schemes, and accurately assessing the hardness of the underlying problems. After all, overestimating the true costs of solving these problems would lead to overly optimistic security estimates and insecure schemes, while underestimating these costs would lead to unnecessarily large parameters. In practice, the only way to accurately choose parameters and to assess the hardness of these problems is to consider state-of-the-art algorithms for solving them, and to estimate their costs for large parameters.

ᵃ https://orcid.org/0000-0002-2369-9067

Lattice Sieving. Currently, the fastest known method for solving most hard lattice problems is lattice sieving (Ajtai et al., 2001), which has the best known scaling of the time complexity with the lattice dimension. The asymptotic cost estimates for sieving from (Becker et al., 2016; Laarhoven, 2016) have been used extensively for choosing parameters in various lattice-based cryptographic schemes; see e.g. (Alkim et al., 2016; Bos et al., 2018). Until recently, lattice sieving was often considered less practical than lattice enumeration (Gama et al., 2010) in low dimensions, but recent work has demonstrated the superiority of lattice sieving in practice as well (Albrecht et al., 2019; Struck, 2019). Given the above, it is crucial that we obtain a good understanding of lattice sieving algorithms, and of how they fit in the bigger picture of algorithms in general.

Laarhoven, T.
Evolutionary Techniques in Lattice Sieving Algorithms.
DOI: 10.5220/0007968800310039
In Proceedings of the 11th International Joint Conference on Computational Intelligence (IJCCI 2019), pages 31-39
ISBN: 978-989-758-384-1
Copyright © 2019 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

1.1 Contributions

In this paper we describe how lattice sieving can naturally be viewed as an evolutionary algorithm, and how various improvements to lattice sieving from recent years can be traced back to closely related computational techniques for dealing with evolving populations. Besides describing this novel connection between AI and cryptography, we list opportunities for lattice sieving to benefit from techniques known from the field of evolutionary algorithms, and we experimentally assess some of these for their impact on the performance of a basic lattice sieve.¹ We further briefly discuss the main results, and provide ideas for future research on this intersection of artificial intelligence and cryptography.

Outline. The remainder of the paper is organized as follows. In Section 2 we briefly cover notation and some preliminaries on lattices, the shortest vector problem, and evolutionary algorithms. Section 3 describes (basic) lattice sieving in the framework of evolutionary algorithms. Section 4 covers previous advances in lattice sieving and how they relate to techniques from evolutionary computation, while Section 5 covers those techniques from the field of AI that have not yet been applied to lattice sieving. Section 6 describes experiments with these techniques, and Section 7 concludes with a discussion of the relation between these fields and avenues for further exploration.

2 PRELIMINARIES

Lattices. Mathematically speaking, a $d$-dimensional lattice is a discrete subgroup of $\mathbb{R}^d$. More concretely, given a set of $d$ linearly independent vectors $B = \{b_1, \ldots, b_d\} \subset \mathbb{R}^d$, we define the lattice generated by the basis $B$ as follows:

$$\mathcal{L} = \mathcal{L}(B) := \left\{ \sum_{i=1}^{d} \lambda_i b_i \;:\; \lambda_i \in \mathbb{Z} \right\}. \qquad (1)$$

Intuitively, working with lattices can be seen as doing linear algebra over the integers: a point is in the lattice if and only if it can be written as an integer linear combination of the basis vectors. A crucial property of lattices for the algorithms considered in this paper is that if both $v, w \in \mathcal{L}$, then also $mv + nw \in \mathcal{L}$ for all $m, n \in \mathbb{Z}$: we can combine lattice vectors to form new lattice vectors. Examples of lattices include $\mathbb{Z}^d$ (all integer vectors) and the 2D hexagonal lattice.

¹ A previous paper (Ding et al., 2015) attempted to use genetic techniques for solving hard lattice problems, but applied these techniques to lattice enumeration, and did not succeed in obtaining an efficient, competitive algorithm. The more natural and novel relation with lattice sieving is explored here.

The Shortest Vector Problem (SVP). Although describing how lattice-based cryptography works falls outside the scope of this paper (interested readers may refer to e.g. (Regev, 2006; Micciancio and Regev, 2009)), an important aspect of this area of cryptography is that its security relies on the hardness of lattice problems such as the shortest vector problem (SVP). Given a description of a lattice, this problem asks to find a non-zero vector $s \in \mathcal{L}$ with the smallest Euclidean norm:

$$\|s\| = \min_{0 \neq v \in \mathcal{L}} \|v\|, \qquad \text{where } \|x\|^2 := \sum_{i=1}^{d} x_i^2. \qquad (2)$$

Although this problem is easy for e.g. $\mathcal{L} = \mathbb{Z}^d$, for random lattices it is known to be hard (Khot, 2004), and it becomes increasingly difficult as the dimension $d$ increases. Currently the fastest known methods for solving SVP in high dimensions are based on lattice sieving, and asymptotically the fastest algorithm has a time complexity scaling as $2^{0.292d + o(d)}$ classically (Becker et al., 2016), or $2^{0.265d + o(d)}$ when using quantum computers (Laarhoven, 2016).² Benchmarks on random lattices (Struck, 2019) further demonstrate the practicality of sieving algorithms up to dimensions $d \approx 150$. Beyond these dimensions, the time and memory complexities are too large for academic testing (Albrecht et al., 2019).
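To make definitions (1) and (2) concrete, the following Python sketch solves SVP by exhaustive search over all coefficient vectors $\lambda$ with $|\lambda_i| \leq$ `bound`. It is our own illustration, not a method from the works cited above: the names `norm`, `combine` and `brute_force_svp` are hypothetical, the search takes $(2 \cdot \text{bound} + 1)^d$ steps, and the box bound is a heuristic assumption that only reliably contains a shortest vector for well-reduced bases in tiny dimensions.

```python
import itertools
import math


def norm(x):
    """Euclidean norm ||x|| as in Eq. (2)."""
    return math.sqrt(sum(xi * xi for xi in x))


def combine(basis, coeffs):
    """Lattice vector sum_i lambda_i * b_i as in Eq. (1); basis vectors are rows."""
    d = len(basis[0])
    return [sum(c * b[j] for c, b in zip(coeffs, basis)) for j in range(d)]


def brute_force_svp(basis, bound=2):
    """Shortest non-zero vector among all combinations with |lambda_i| <= bound.

    Assumption: the box bound is a heuristic; for badly reduced bases the true
    shortest vector may need larger coefficients.
    """
    best, best_norm = None, math.inf
    for coeffs in itertools.product(range(-bound, bound + 1), repeat=len(basis)):
        if not any(coeffs):
            continue  # SVP asks for a non-zero vector
        v = combine(basis, coeffs)
        if norm(v) < best_norm:
            best, best_norm = v, norm(v)
    return best, best_norm


# A 2D lattice whose shortest vector is not one of the basis vectors.
shortest, length = brute_force_svp([[5, 0], [4, 1]])
```

For the basis $\{(5, 0), (4, 1)\}$ the search returns $(-1, 1) = b_2 - b_1$ of norm $\sqrt{2}$, a shortest vector that is not itself a basis vector.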

Evolutionary Algorithms (EAs). Let us also briefly recall the basics of evolutionary algorithms. These algorithms model the flow of evolution as found in nature, where a population evolves and adapts to its environment so as to maximize the survival rate of the species. Algorithm 1 presents pseudocode of evolutionary algorithms, which model this process from an algorithmic point of view; the key components are briefly discussed below.

² Recall that lattice-based cryptography is advertised as quantum-safe, even though quantum algorithms for these problems are faster than the best classical ones. This is because the best time complexity remains exponential in $d$, whereas for e.g. RSA or discrete logarithm settings, the best known attack costs decrease from (sub)exponential to only polynomial in the security parameter when using a quantum computer (Shor, 1997).

ECTA 2019 - 11th International Conference on Evolutionary Computation Theory and Applications


Algorithm 1: Outline of evolutionary algorithms.
1: Initialize a population with random candidate solutions
2: Evaluate each candidate for its fitness
3: repeat
4:   Select parents for breeding
5:   Recombine parents to form new children
6:   Mutate some of the resulting offspring
7:   Evaluate new candidates for their fitness
8:   Select individuals for the next generation
9: until a termination condition is satisfied

Initialization. Initially, a random sample of members of the species is generated. The stronger the initial population, the less time the population needs to evolve to its optimal form, but commonly the initialization process is less important than the regenerational steps.

Parent Selection. Ideally, individual members of a population should breed only if this process is likely to result in genetically strong offspring. For this, parents should be selected based on some (fitness) criteria that make this likely.

Recombination. Given two members of the population, recombination (crossover) defines a method for (randomly) generating a child from these two parents. This child should inherit fitness properties from its parents, so that the species improves over time.

Mutation. Mutations of individual members of the population stimulate genetic diversity, preventing early convergence to local optima. Mutations are not always present in evolutionary algorithms, and when present they often occur only with very small probability.

Fitness Evaluation. In most settings, the population has a certain goal, e.g. to survive for as long as possible, or to bring individuals closer to an optimal solution. For this it is important to be able to test the fitness of individual members of the population. Here we restrict ourselves to absolute fitness functions, where individuals can be ranked based on their fitness levels.

Survivor Selection. In evolutionary algorithms, generally only the fittest members of the population are allowed to survive between generations (survival of the fittest). This is often enforced by selecting the subset of individuals with the highest fitness levels for the next generation, and discarding the remainder of the population.
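As a point of reference for Section 3, here is a minimal Python sketch of the loop in Algorithm 1 for a minimization problem (a lower fitness value means a fitter individual, matching the norm-based fitness used for sieving later). The operator choices are our own assumptions for illustration, not prescribed by the paper: binary tournament parent selection, a fixed number of generations as the termination condition, and global "(μ + λ)" survivor selection. The toy problem and all function names are hypothetical.

```python
import random


def tournament(population, fitness, rng):
    """Parent selection: the fitter of two random individuals (lower value = fitter)."""
    a, b = rng.sample(population, 2)
    return a if fitness(a) <= fitness(b) else b


def evolve(initial, fitness, recombine, mutate, pop_size=20,
           generations=50, mutation_rate=0.1, seed=0):
    """Generic evolutionary loop in the spirit of Algorithm 1 (minimization)."""
    rng = random.Random(seed)
    population = sorted(initial, key=fitness)[:pop_size]      # steps 1-2
    for _ in range(generations):                                # steps 3 and 9
        offspring = []
        for _ in range(pop_size):
            p1 = tournament(population, fitness, rng)           # step 4
            p2 = tournament(population, fitness, rng)
            child = recombine(p1, p2, rng)                      # step 5
            if rng.random() < mutation_rate:                    # step 6
                child = mutate(child, rng)
            offspring.append(child)
        # Steps 7-8: rank parents and children together, keep the pop_size fittest.
        population = sorted(population + offspring, key=fitness)[:pop_size]
    return population


# Hypothetical toy problem: minimize the sum of squares of an integer vector.
def sum_of_squares(x):
    return sum(t * t for t in x)


def uniform_crossover(a, b, rng):
    """Each gene is inherited from a randomly chosen parent."""
    return [rng.choice(pair) for pair in zip(a, b)]


def plus_minus_one(x, rng):
    """Mutation: change one random gene by +1 or -1."""
    y = list(x)
    y[rng.randrange(len(y))] += rng.choice((-1, 1))
    return y
```

Because parents compete with their children in step 8, the best fitness in this sketch can never get worse from one generation to the next.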

For more on commonly used techniques in evolutionary algorithms, we refer the interested reader to e.g. (Bäck, 1996; Bäck et al., 2000a; Bäck et al., 2000b; Coello et al., ).

3 LATTICE SIEVING AS AN EA

As outlined above, lattice sieving is currently the fastest method for solving problems such as SVP, and understanding this algorithm well is therefore crucial for accurately choosing the parameters of efficient quantum-secure communication protocols. Understanding its relation with techniques from other fields may prove useful as well, and might inspire further advances in lattice sieving in the future. One of our main contributions is studying this relation between lattice sieving and evolutionary algorithms, and below we show how lattice sieving can naturally be phrased as an evolutionary method.

Original Description. As a starting point, let us take the Nguyễn–Vidick sieve (Nguyễn and Vidick, 2008), which was the first practical, heuristic lattice sieving algorithm for solving SVP. This method starts by sampling a list $P$ of exponentially many random, rather long lattice vectors, e.g. by taking small, random integer linear combinations of the basis vectors. The idea of lattice sieving is then to iteratively apply a sieve to $P$ to form a new list $P'$ of shorter lattice vectors, which then replaces $P$. For this, the algorithm considers all pairs of vectors $v, w \in P$, and checks whether $u = v - w$ is a shorter lattice vector than either $v$ or $w$. If this is the case, we keep $u$ for the next list $P'$, and we discard the longer of $v, w$ for the next generation. After repeatedly applying this sieve, the vectors in the list become shorter and shorter, and ultimately the list $P$ will contain most of the shortest vectors in the lattice, including the solution.

As an Evolutionary Algorithm. This algorithm can naturally be viewed as an evolutionary process, and Algorithm 2 describes this method in pseudocode. Below we briefly describe how the previously listed key components of evolutionary methods appear in lattice sieving.

Initialization. Commonly, the initial population of lattice vectors is generated using a discrete Gaussian sampler over the lattice, which guarantees that (1) these vectors can be sampled quickly, and (2) the resulting sampled vectors are not unnecessarily long.

Parent Selection. Two members (vectors) $v, w$ from the population are considered for breeding if they are relatively nearby in space, so that $u = v - w$ is shorter than at least one of its parents.

Recombination. Then, given that $v, w$ are appropriate parents for reproduction, recombination deterministically results in the child $u = v - w \in \mathcal{L}$, which is also in the lattice and is hopefully a shorter lattice vector.

Mutation. Existing lattice sieving methods do not include mutations; offspring is deterministically generated from the parents, and no individual mutations take place.

Fitness Evaluation. As the goal of the algorithm is to obtain short (non-zero) lattice vectors, a natural measure for the fitness of individual population members $v \in P$ is their Euclidean norm $\|v\|$: the smaller this norm, the fitter the individual.

Survivor Selection. After offspring has been produced, selections are made locally: if a child has a lower norm than one of its parents, we discard the longer of the two parents and replace it with the child.
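The components above combine into a short Python sketch of the local-replacement sieve (the procedure of Algorithm 2). All names and parameters are hypothetical, and uniform small integer coefficients stand in for the discrete Gaussian sampler mentioned above; this is a plain quadratic-time illustration, not an optimized sieve. Squared norms are compared, which gives the same ordering as the norms themselves.

```python
import random


def norm2(x):
    """Squared Euclidean norm; comparing squared norms avoids square roots."""
    return sum(t * t for t in x)


def sample_population(basis, size, coeff_bound, rng):
    """Initialization: random small integer combinations of the basis vectors.

    Assumption: uniform coefficients in [-coeff_bound, coeff_bound] replace the
    discrete Gaussian sampler used in practice.
    """
    d = len(basis[0])
    population = []
    while len(population) < size:
        coeffs = [rng.randint(-coeff_bound, coeff_bound) for _ in basis]
        v = [sum(c * b[j] for c, b in zip(coeffs, basis)) for j in range(d)]
        if any(v):  # skip the zero vector
            population.append(v)
    return population


def local_sieve_generation(population):
    """One pass over all pairs: replace the longer parent by u = v - w if u is shorter."""
    population = [list(v) for v in population]
    updates = 0
    for i in range(len(population)):
        for j in range(i + 1, len(population)):
            u = [a - b for a, b in zip(population[i], population[j])]
            if not any(u):
                continue  # identical parents give the zero vector
            longer = i if norm2(population[i]) >= norm2(population[j]) else j
            if norm2(u) < norm2(population[longer]):
                population[longer] = u  # local survivor selection
                updates += 1
    return population, updates


def local_sieve(basis, size=200, coeff_bound=3, max_generations=50, seed=0):
    """Repeat sieving passes until a generation makes no update (or a cap is hit)."""
    rng = random.Random(seed)
    population = sample_population(basis, size, coeff_bound, rng)
    for _ in range(max_generations):
        population, updates = local_sieve_generation(population)
        if updates == 0:
            break
    return population
```

Since every update replaces a vector by a strictly shorter lattice vector, the minimum norm in the population never increases, and all population members remain in the lattice.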

Similarities and Differences. Lattice sieving can naturally be viewed as an evolutionary algorithm, as the core procedure consists of generating a large population, and doing simple, local recombinations on pairs of vectors to form shorter lattice vectors, which replace the longer ones in the population. The actual computational operations in sieving rely on the following very elementary property of lattices:

$$v, w \in \mathcal{L} \implies v - w \in \mathcal{L}. \qquad (3)$$

By only recombining suitable pairs of parents, we guarantee that the members of the population become increasingly fit.

Arguably the biggest differences compared to the standard evolutionary model are that (1) mutations do not exist at all in existing lattice sieving approaches; and (2) parents are directly replaced by their children, rather than survivor selection happening on a population-wide, global scale. Note also that recombination and offspring generation happen deterministically: there is no randomness in computing the child $u = v - w$ from the parents $v, w$.

Algorithm 2: Evolutionary lattice sieving.
Input: A description (basis) of a lattice $\mathcal{L}$
Output: A population $P \subset \mathcal{L}$ of short lattice vectors
1: Initialize a population $P \subset \mathcal{L}$ of random lattice vectors
2: repeat
3:   for all potential parents $v, w \in P$ do
4:     Generate the (potential) offspring $u = v - w$
5:     if $\|u\| < \|v\|$ or $\|u\| < \|w\|$ then
6:       Replace the longer parent with $u$
7:     end if
8:   end for
9: until $P$ contains sufficiently short lattice vectors
10: return $P$

Complexity Estimates. For completeness, let us also give a high-level description of the main arguments for the time and space complexities of lattice sieving. First, if $v, w$ have equal Euclidean norms (similar norms occur often in high-dimensional sieving instances), then the difference vector $u = v - w$ is shorter than $v$ and $w$ if and only if $v, w$ have a mutual angle $\varphi < \frac{\pi}{3}$, since in that case $\|v - w\|^2 = 2\|v\|^2 (1 - \cos\varphi)$. For e.g. uniformly random vectors $v, w$ of unit length, the probability of this occurring is approximately $\sin(\frac{\pi}{3})^d = (\frac{3}{4})^{d/2}$, up to subexponential factors, due to volume arguments for hyperspherical caps. To guarantee that the next generation has approximately the same size as the previous one, so that after a potentially large number of iterations we do not end up with an empty list, we need

$$|P'| \approx |P|^2 \cdot \left(\tfrac{3}{4}\right)^{d/2} \approx |P|,$$

as there are about $|P|^2$ pairs of parents in $P$, and each pair produces good offspring (a short difference vector) with probability approximately $(\frac{3}{4})^{d/2}$. Solving for $|P|$ gives $|P| \approx (\frac{4}{3})^{d/2} \approx 2^{0.208d}$ as an estimate of the memory complexity (population size) needed to succeed, and since all pairs of vectors are considered for generating offspring, this gives a quadratic time complexity scaling as $|P|^2 \approx (\frac{4}{3})^{d} \approx 2^{0.415d}$, as argued in e.g. (Nguyễn and Vidick, 2008).³
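The constants in this estimate can be checked numerically. The short Python snippet below (our own illustration; the names are hypothetical) verifies the equal-norm angle criterion for unit vectors and recovers the exponents $0.208$ and $0.415$ from $\log_2 \frac{4}{3}$. Like the estimate itself, it ignores the subexponential $2^{o(d)}$ factors.

```python
import math


def difference_is_shorter(phi):
    """For unit vectors v, w at angle phi: is ||v - w|| < 1?

    Uses ||v - w||^2 = 2 (1 - cos(phi)), which is below 1 exactly when phi < pi/3.
    """
    return 2 * (1 - math.cos(phi)) < 1


# Base-2 exponents per dimension, ignoring o(d) terms.
pair_success = math.log2(math.sin(math.pi / 3))  # (3/4)^(d/2) = 2^(-0.2075 d)
memory_exp = -pair_success                         # |P|   ~ (4/3)^(d/2)
time_exp = 2 * memory_exp                          # |P|^2 ~ (4/3)^d

print(f"memory ~ 2^({memory_exp:.4f} d), time ~ 2^({time_exp:.4f} d)")
```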

4 PAST SIEVING TECHNIQUES

The previous section described how the most basic lattice sieving algorithm can be viewed as an evolutionary algorithm. Over time, various improvements have been proposed for lattice sieving, and many of them naturally relate to techniques that have previously been studied in the context of evolutionary computation as well.

Multi-parent Offspring and Tuple Lattice Sieving. One technique, discussed in e.g. (Eiben et al., 1994), is to produce offspring from more than two parents. By selecting more parents and inheriting the best genes from all of them, stronger offspring can sometimes be generated than with two parents. This idea has been studied in the context of lattice sieving as tuple lattice sieving (Bai et al., 2016; Herold et al., 2018), where tuples of up to $k \geq 2$ vectors are recombined to generate shorter lattice vectors. In general, this approach leads to better memory complexities than the standard approach (smaller populations suffice to guarantee a productive evolution process), but to worse time complexities until convergence (finding suitable $k$-tuples of parents for recombination requires more work).

³ The number of applications of the sieve necessary to converge to a solution is only polynomial in $d$, and negligible compared to the exponential running time of each application of the sieve.
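For $k = 3$, the recombination step of tuple sieving can be sketched as follows: a child is formed as $v \pm w \pm x$ and accepted if it is shorter than the longest of its three parents. This Python sketch is our own illustration with hypothetical names; it checks all triples naively, whereas practical tuple sieves such as those cited above rely on more refined filtering to find suitable triples. The example shows three parents that no pairwise recombination can improve, but whose sum is short.

```python
import itertools


def norm2(x):
    return sum(t * t for t in x)


def best_triple_child(v, w, x):
    """Shortest non-zero child v + s*w + t*x over the signs s, t in {+1, -1}."""
    best = None
    for s, t in itertools.product((1, -1), repeat=2):
        u = [a + s * b + t * c for a, b, c in zip(v, w, x)]
        if any(u) and (best is None or norm2(u) < norm2(best)):
            best = u
    return best


def reducing_triples(population):
    """All index triples whose best 3-parent child beats the longest parent."""
    found = []
    for i, j, k in itertools.combinations(range(len(population)), 3):
        u = best_triple_child(population[i], population[j], population[k])
        longest = max(norm2(population[i]), norm2(population[j]), norm2(population[k]))
        if u is not None and norm2(u) < longest:
            found.append((i, j, k, u))
    return found


# Each parent has squared norm 11; every pairwise difference has squared norm 32,
# yet the sum of all three parents is (1, 1, 1) with squared norm 3.
parents = [[3, -1, -1], [-1, 3, -1], [-1, -1, 3]]
```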

Genetic Segregation and Nearest Neighbor Searching. A common technique in evolutionary algorithms, related to niching and speciation, is to subdivide the search space into mutually disjoint regions, and to let different subspecies of the population coevolve separately, with recombinations happening only within each subpopulation. This closely relates to the application of techniques from nearest neighbor searching (Indyk and Motwani, 1998) to lattice sieving (Laarhoven, 2015; Becker et al., 2016; Laarhoven, 2016). By dividing the high-dimensional search space into regions, and separately recombining parents within each of these regions, one generally still finds good parents for producing better offspring, while saving a lot of time on attempting to mate parents that are unsuitable couples for reproduction.
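As an illustration of such a subdivision, the Python sketch below assigns each population member to a region given by the signs of its inner products with $k$ random hyperplanes (a simple form of angular locality-sensitive hashing), and only pairs within the same region are tried for recombination. This is an assumed, simplified stand-in for the nearest neighbor techniques cited above, not a reimplementation of any of them; the function names and parameters are hypothetical.

```python
import random


def sign_pattern(v, hyperplanes):
    """Which side of each random hyperplane v lies on (the region label)."""
    return tuple(sum(a * h for a, h in zip(v, hp)) >= 0 for hp in hyperplanes)


def candidate_pairs(population, k=4, seed=0):
    """Index pairs (i, j), i < j, that land in the same region.

    Vectors at a small angle (the useful parents for u = v - w) tend to share a
    sign pattern, so comparing only within regions skips most unsuitable pairs.
    """
    rng = random.Random(seed)
    d = len(population[0])
    hyperplanes = [[rng.gauss(0.0, 1.0) for _ in range(d)] for _ in range(k)]
    regions = {}
    for idx, v in enumerate(population):
        regions.setdefault(sign_pattern(v, hyperplanes), []).append(idx)
    pairs = set()
    for members in regions.values():
        for a in range(len(members)):
            for b in range(a + 1, len(members)):
                pairs.add((members[a], members[b]))
    return pairs
```

With $k$ hyperplanes there are up to $2^k$ regions: a larger $k$ means fewer comparisons, but also more good pairs that are split across regions and missed, which is why locality-sensitive hashing schemes typically use several independent subdivisions.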

Progressive Preferences and Progressive Lattice Sieving. For finding optimal solutions in the entire search space, some methods in EA have proposed a progressive approach, where initially only a subset of the constraints (search space) is studied to find local solutions, before expanding to a wider search space and finding global solutions (Coello et al., ). Similar ideas have recently been explored in the context of lattice sieving (Laarhoven and Mariano, 2018; Ducas, 2018): one starts sieving in a sublattice of the original lattice before widening the search space. Both heuristically and in practice, this reduces the time until convergence.

Island Models and Parallelization. When attempting to parallelize evolutionary algorithms, one naturally faces the question of how to subdivide the population for separate processing, and how to then merge the local results to find global optima (Bäck et al., 2000b). For lattice sieving, this topic has been studied in e.g. (Mariano et al., 2017; Albrecht et al., 2019), where initially parts of the population were recombined on individual nodes, and individuals then migrated across different nodes to guarantee reproduction with all suitable mates.

Crowding and Replacing Parents Directly. Perhaps the most common method in evolutionary computation for selecting the next generation is to generate offspring, sort all individuals by their fitness, and then take the fittest ones for the next generation. Lattice sieving instead commonly applies a form of crowding, where each time a child is produced, one of its parents is discarded for the next generation. This guarantees that each 'corner' of space only contains few vectors, and enables the complexity estimates from the previous section based on pairwise angles between vectors.

5 NEW SIEVING TECHNIQUES

Besides existing methods from EA that have already been studied in the context of sieving, perhaps of more interest are those techniques that have not yet been considered for improving sieving algorithms. Below we consider three main concepts, which are then also evaluated experimentally.

Encodings. Commonly, evolutionary algorithms do not work directly with the population, but with encodings of the population that allow for more natural genetic recombinations and mutations. Instead of directly applying evolutionary techniques to lattice vectors in terms of their coordinates, one could also encode members of the population differently, e.g. by encoding a lattice vector $v$ by its coefficient vector $\lambda$ in the basis $B$:

$$v = (v_1, \ldots, v_d) = \sum_{i=1}^{d} \lambda_i b_i \quad \xrightarrow{\ \text{encode}\ } \quad \lambda = (\lambda_1, \ldots, \lambda_d).$$

Recombining vectors can be done as before, since subtracting two coordinate vectors is equivalent to subtracting their coefficient vectors in terms of the basis. In some cases it may be more convenient to work with the vectors directly (e.g. to compute fitness), but for the mutations discussed below, working with encodings is more natural.
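The encoding and its compatibility with recombination can be sketched in Python as follows. The function names are hypothetical, and `encode` assumes a square, full-rank basis (vectors as rows); it solves $\sum_i \lambda_i b_i = v$ by exact Gauss–Jordan elimination over the rationals and rejects vectors whose coefficients are not integers, i.e. vectors outside the lattice.

```python
from fractions import Fraction


def decode(basis, coeffs):
    """lambda -> v = sum_i lambda_i * b_i (basis vectors are rows)."""
    d = len(basis[0])
    return [sum(c * b[j] for c, b in zip(coeffs, basis)) for j in range(d)]


def encode(basis, v):
    """v -> lambda by exact Gauss-Jordan elimination (square, full-rank basis assumed)."""
    d = len(basis)
    # Row j of the augmented system reads: sum_i lambda_i * b_i[j] = v[j].
    m = [[Fraction(basis[i][j]) for i in range(d)] + [Fraction(v[j])] for j in range(d)]
    for col in range(d):
        pivot = next(r for r in range(col, d) if m[r][col] != 0)
        m[col], m[pivot] = m[pivot], m[col]
        p = m[col][col]
        m[col] = [x / p for x in m[col]]
        for r in range(d):
            if r != col and m[r][col] != 0:
                f = m[r][col]
                m[r] = [a - f * b for a, b in zip(m[r], m[col])]
    lam = [row[d] for row in m]
    if any(x.denominator != 1 for x in lam):
        raise ValueError("v is not a lattice vector for this basis")
    return [int(x) for x in lam]


def recombine_encoded(lam, mu):
    """Child encoding: the coefficients of v - w are lambda - mu."""
    return [a - b for a, b in zip(lam, mu)]
```

For instance, with the basis $\{(5, 0), (4, 1)\}$, the vector $(-2, -3)$ is encoded as $\lambda = (2, -3)$, while $(1, 0)$ is rejected because it is not in that lattice.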

Mutations. In existing lattice sieving methods, population members never undergo any (unary) mutations. Note that the common technique of modifying single genes of an individual does not quite make sense for lattice sieving: by modifying a coordinate, one may step outside the lattice, and the algorithm will no longer solve the right problem. With the encoding described above, mutations can be applied more naturally by occasionally adding small random integer noise to the coefficient vectors of generated offspring, which corresponds to adding small integer combinations of basis vectors. Note that as lattice sieving algorithms progress, such combinations of basis vectors are typically much longer than the generated children, so mutations generally decrease the fitness; if useful at all, mutations would have to be applied very sporadically.
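A minimal Python sketch of such a mutation on the coefficient encoding, in the form used in the experiments of Section 6 (adding or subtracting a single basis vector, with probability 10%). The function name and the uniform choice of coordinate and sign are assumptions for illustration.

```python
import random


def mutate_encoded(lam, rng, rate=0.1):
    """With probability `rate`, add or subtract one basis vector.

    In the encoding this changes a single coefficient lambda_i by +1 or -1;
    otherwise an unchanged copy is returned. The result always has integer
    coefficients, so it still encodes a lattice vector (possibly the zero
    vector, which a caller should reject).
    """
    child = list(lam)
    if rng.random() < rate:
        i = rng.randrange(len(child))
        child[i] += rng.choice((-1, 1))
    return child
```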

Global Selection. Finally, existing lattice sieving approaches always update the population on a local scale: one or both of the parents are replaced by the children, to guarantee that the list has the pairwise reduction property outlined in Section 3, which is used to argue heuristic bounds on the population size. Instead, it may be beneficial to keep parents more often, and to make the selection for the next generation on a global scale: order all generated offspring and parents by their fitness, and keep the strongest members.
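A minimal Python sketch of one generation with such population-wide survivor selection, under our own assumptions: children are formed over unordered pairs, duplicates and the zero vector are dropped, and ties in norm are broken lexicographically so that the result is deterministic. The function name is hypothetical.

```python
def norm2(x):
    return sum(t * t for t in x)


def global_selection_generation(population, size):
    """Pool all children u = v - w with their parents and keep the `size` fittest.

    Fitness is the (squared) Euclidean norm; only distinct non-zero vectors
    are kept.
    """
    pool = {tuple(v) for v in population}
    for i in range(len(population)):
        for j in range(i + 1, len(population)):
            u = tuple(a - b for a, b in zip(population[i], population[j]))
            if any(u):
                pool.add(u)
    survivors = sorted(pool, key=lambda v: (norm2(v), v))[:size]
    return [list(v) for v in survivors]
```

With parents $(10, 0)$ and $(9, 1)$ and a population size of 2, the child $(1, -1)$ survives together with its parent $(9, 1)$, while $(10, 0)$ is discarded.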

6 EXPERIMENTS

We have implemented the basic lattice sieving ap-

proach outlined in Section 3, as well as (1) the concept

of global selection for lattice sieving (rather than local

replacements), and (2) mutations based on the repre-

sentation of vectors in the lattice basis. We have tested

these algorithms on a 40-dimensional random lattice

from the SVP challenge website (Struck, 2019), start-

ing with an LLL-reduced basis of the lattice and let-

ting the algorithm run for a number of generations,

until no more progress is made between successive

generations. The initial population size was set to

1500 lattice vectors, and each successive generation

was also limited to using a population of at most this

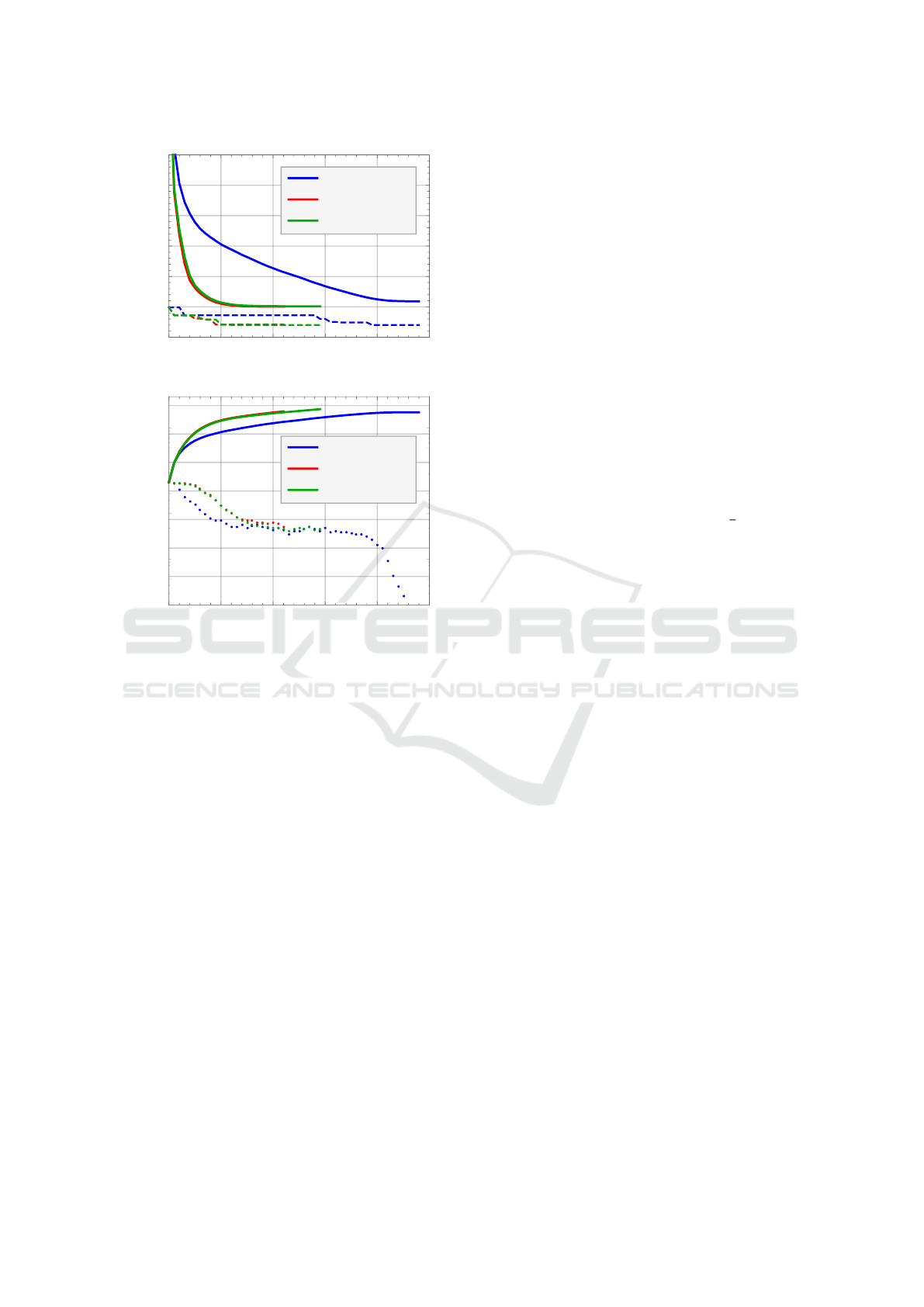

size. Figure 1a shows the experimental results of the

average (and minimum) fitness per generation against

the generation number, and below we discuss the ef-

fects of these modifications in more detail.

Original Approach. For the original sieving approach, we look for pairs $v, w \in P$ such that $u = v - w$ is shorter than one of its parents. If this is the case, we replace the longer of the two parents with this child, and we continue until all (unmodified) parent vectors have been compared to see whether their offspring leads to an improvement in the population. This is the basic algorithm from (Nguyễn and Vidick, 2008; Laarhoven, 2016), and the blue line in Figure 1a shows how the average norm of the vectors in the population decreases over time. The dashed blue line further shows the progression in terms of the norm of the shortest member of the population; in our example run it took 39 generations for the algorithm to find a shortest non-zero vector $s \in \mathcal{L}$, of norm $\|s\| \approx 1702$. After 48 generations and 20 seconds, the algorithm terminated with an average fitness ($\ell_2$-norm) of 2092 and a final population size of 1386 lattice vectors, having made 17300 updates to the population.

Global Selection. For this variant, we considered all children $u = v - w$ for potential insertion in the population for the next generation, and at the end we simply selected the 1500 shortest (fittest) members for survival. As the red curves in Figure 1 show, this decreases the number of generations needed for convergence: the average norm of the vectors decreases faster between generations, and vectors get replaced more quickly than before. After 22 generations and 1.5 seconds on the same machine, the algorithm converged to a population of 1500 short lattice vectors of average length 2004, having made 17800 vector replacements. Unfortunately, the algorithm failed to find a shortest vector: the shortest vector in the final population had norm 1709.

Mutations. For this variant, not depicted in Figure 1, we performed sieving with local updates (children replacing their direct parents), but we added occasional mutations of children: in 10% of all cases we added or subtracted a single basis vector to or from the child, to create more diversity in the population.

However, in combination with local updates, mutations are not very successful, and over time the average norm of the vectors in the population only increased. This is inherent to local updates: mutations commonly increase the norm, and the resulting local update then only introduces longer lattice vectors into the population. With a global survivor selection procedure, bad mutations can be filtered out for the next generation, but with local updates this is not the case.

Global Selection and Mutations. The final variant in our experiments uses both global selection and occasional mutations of children, as described above. In this particular example, adding mutations to the global selection sieve indeed helped: after 29 generations and 1.9 seconds, we converged to a population of 1500 lattice vectors with average Euclidean norm 2008 and minimum norm 1702, corresponding to a shortest non-zero vector in the lattice (having made 19300 updates to the population). This is in contrast with the earlier global selection algorithm without mutations, which converged to a local solution of larger norm 1709. Both the time until convergence and the number of generations needed are slightly worse than without mutations, but with this variant we did find the optimal solution.

[Figure 1 panels: (a) Fitness levels (average/minimum) per generation, with fitness ($\ell_2$-norm) plotted over generations 0 to 50; (b) Replaced vectors (cumulative/separated) per generation, with the generation gap (#vectors) on a logarithmic axis. Both panels show the original sieve, global selection, and GS + mutations.]

Figure 1: (Figure 1a) depicts the average fitness (thick) and best fitness (dashed) per generation; lower $\ell_2$-norms correspond to a higher fitness level. (Figure 1b) depicts the number of surviving children per generation (generation gap); the higher the gap, the faster the evolution. Both graphs depict the original sieve (blue), the sieve with global updates (red), and the sieve with both global selection and occasional mutations (green).

Discussion. The results in Figure 1a match what we might expect to happen when using these modifications. Using a global survivor selection approach (rather than the local replacements of existing sieving algorithms), the overall quality of the population improves faster in each generation, and the algorithm converges more quickly towards an optimal solution (i.e. a shortest non-zero vector of the lattice). With only the global selection modification, we further noticed that we were "unlucky" in converging to a local solution of norm 1709, rather than a shortest vector in the lattice of norm 1702. With the extra randomness generated by the genetic mutations, we did eventually find a shortest vector in our population, although the number of generations until convergence increased slightly. We expect this behavior to appear in other examples too: mutations will commonly not improve performance, but may help prevent convergence towards local optima.

On the Absence of Global Selection in Sieving. As the idea of population-wide survivor selection appears very natural, and various more advanced techniques have already been considered in the context of lattice sieving, one might wonder why this idea has not yet been applied to sieving. Perhaps the main reason is that the complexity estimates of lattice sieving, described in Section 3, crucially rely on the population having the property that any two vectors v, w ∈ P have a pairwise angle of at least π/3; otherwise, the child u = v − w would be shorter than the longer of its two parents, and that parent would have been replaced with u. Given that all pairs of vectors are relatively far apart in terms of their pairwise angles, one can then use sphere packing bounds (Nguyễn and Vidick, 2008) to obtain heuristic upper bounds on the population size and, consequently, on the running time of the algorithm. When we perform updates globally and select only the fittest members for the next generation, we no longer have these heuristic guarantees for the time and space complexities of sieving. So even though, as our experiments indicated, this global selection modification only appears to improve the population quality in practice, from a complexity-theoretic point of view it is somewhat counter-intuitive.
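The step from the angle condition to a shorter child follows from the law of cosines. Writing θ for the angle between two non-zero vectors v and w, and assuming without loss of generality that ‖w‖ ≤ ‖v‖:

```latex
\begin{aligned}
\|v - w\|^2 &= \|v\|^2 + \|w\|^2 - 2\,\|v\|\,\|w\|\cos\theta \\
            &< \|v\|^2 + \|w\|^2 - \|v\|\,\|w\|
               \qquad (\theta < \pi/3 \;\Rightarrow\; \cos\theta > 1/2) \\
            &= \|v\|^2 - \|w\|\,\bigl(\|v\| - \|w\|\bigr) \;\le\; \|v\|^2 .
\end{aligned}
```

So whenever θ < π/3, the child v − w is strictly shorter than the longer parent v. Conversely, a population in which no such reduction is possible has all pairwise angles at least π/3. Under the commonly used heuristic that the directions of the vectors behave like uniformly random points on the sphere, the fraction of directions within angle π/3 of a given vector is roughly (sin(π/3))^d = (3/4)^{d/2}, which leads to the usual heuristic estimate of about (4/3)^{d/2} ≈ 2^{0.2075d} population members; this is a heuristic estimate, not a rigorous bound.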

7 CONCLUSION

In this paper we demonstrated a new, natural connection between lattice sieving algorithms used in cryptanalysis on the one hand, and techniques from evolutionary algorithms on the other hand. We analyzed how ideas and terminology in both fields relate, and how certain ideas from EA that have not yet been applied to lattice sieving may be of interest for improving sieving algorithms. In particular, the idea of a global selection procedure appears promising: although from a complexity-theoretic point of view this modification is somewhat unnatural, experiments suggest that it may well benefit the performance of lattice sieving in practice. Note that we have only tested these modifications with a basic sieve in a low-dimensional lattice (d = 40); analyzing how this modification interacts with other existing improvements and tweaks to state-of-the-art lattice sieving implementations (see e.g. (Albrecht et al., 2019)) is left for future work.

Part of the aim of this work is also to stimulate a further exchange of ideas between both fields, as several ideas that have turned out to be useful in lattice sieving were studied in the context of evolutionary computation long ago, and might have been introduced to lattice sieving earlier, had the two fields exchanged ideas sooner. Interested readers from the area of AI may wish to refer to (Laarhoven, 2016) for an overview of lattice sieving techniques; to (Becker et al., 2016) for the current theoretical state of the art in lattice sieving; and to (Albrecht et al., 2019) for what is currently (as of early 2019) the fastest lattice sieving method in practice. Given the similarities between lattice sieving and evolutionary computation, there may well be further ways to improve lattice sieving with existing techniques from AI.

Besides the relation with lattice sieving discussed here, some other techniques in the broader field of cryptanalysis follow a similar procedure of (1) generating a large, random population; (2) combining members of this population to form better solutions; and (3) ultimately finding a solution in the final population. We highlight two examples:

• The Blum–Kalai–Wasserman (BKW) Algorithm.
One of the fastest known methods for attacking cryptographic schemes based on the hardness of learning parity with noise (LPN) and learning with errors (LWE) (Regev, 2005; Regev, 2006) is the BKW algorithm (Blum et al., 2003). From a high-level point of view, one starts with a list of integer vectors and tries to find small combinations (sums and differences of a few vectors) that cancel out many of the coordinates, thus leading to vectors with many zeros.

• Decoding Random (Binary) Linear Codes.

For understanding the security of state-of-the-art code-based cryptographic schemes (McEliece, 1978; Bernstein et al., 2009), the fastest known attacks solve a decoding problem for random binary linear codes. These attacks also commonly start by generating a large population of {0,1}-strings, and then form combinations that cancel out many of the coordinates, to obtain a vector with low Hamming weight (May and Ozerov, 2015).
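To make the BKW description in the list above concrete, a single reduction step can be sketched as follows. This is a simplified illustration under our own assumptions, not the full algorithm of (Blum et al., 2003): samples are pairs (a, b) with a an integer vector modulo q, samples are bucketed by their values on one block of coordinates, and each sample is subtracted from the first sample in its bucket, so that the differences are zero on that block. The name `bkw_step` and its parameters are hypothetical; practical variants also match a against −a and must track how the noise grows with every subtraction.

```python
import random
from collections import defaultdict
from typing import Dict, List, Tuple

Sample = Tuple[Tuple[int, ...], int]  # (a, b) with b = <a, s> + e mod q


def bkw_step(samples: List[Sample], q: int, block: slice) -> List[Sample]:
    """Cancel the coordinates in `block` by subtracting samples that agree there."""
    buckets: Dict[Tuple[int, ...], List[Sample]] = defaultdict(list)
    for a, b in samples:
        buckets[a[block]].append((a, b))
    reduced: List[Sample] = []
    for members in buckets.values():
        a0, b0 = members[0]  # representative of this bucket
        for a, b in members[1:]:
            diff = tuple((x - y) % q for x, y in zip(a, a0))
            # The error terms are subtracted too, so the noise grows.
            reduced.append((diff, (b - b0) % q))
    return reduced


# Toy usage: noise-free samples for a hypothetical secret s, so b = <a, s> mod q.
q, n = 7, 4
s = (2, 5, 3, 1)
rng = random.Random(0)
samples: List[Sample] = []
for _ in range(50):
    a = tuple(rng.randrange(q) for _ in range(n))
    samples.append((a, sum(x * y for x, y in zip(a, s)) % q))

level1 = bkw_step(samples, q, slice(0, 2))
print(len(level1), level1[:3])
```

Each output sample has zeros on the chosen block and still satisfies the same linear relation with s, which is what lets repeated steps shrink the problem dimension; in evolutionary terms, bucketing plays the role of selecting compatible parents.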

Both approaches can similarly be interpreted as evolutionary algorithms, and we leave a further study of this relation for future work.
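For the decoding example in the list above, the fitness criterion (low Hamming weight of a combination) can be illustrated with a brute-force search over pairs. This sketch assumes {0,1}-strings are stored as Python integers used as bit masks, and the names `hamming_weight` and `low_weight_pairs` are hypothetical. It checks all pairs in quadratic time, whereas the point of (May and Ozerov, 2015) is to find such close pairs much faster with nearest neighbor techniques.

```python
from itertools import combinations
from typing import List, Tuple


def hamming_weight(x: int) -> int:
    return bin(x).count("1")


def low_weight_pairs(population: List[int], max_weight: int) -> List[Tuple[int, int, int]]:
    """Return (i, j, u XOR v) for all pairs whose XOR has weight <= max_weight.

    Over GF(2), u XOR v is the sum u + v, so a low-weight XOR means the two
    strings agree on most coordinates, which then cancel out.
    """
    results = []
    for (i, u), (j, v) in combinations(enumerate(population), 2):
        child = u ^ v
        if hamming_weight(child) <= max_weight:
            results.append((i, j, child))
    return results


population = [0b1011, 0b1001, 0b0110, 0b1110]
for i, j, child in low_weight_pairs(population, max_weight=1):
    print(i, j, format(child, "04b"))  # prints "0 1 0010" and then "2 3 1000"
```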

ACKNOWLEDGMENTS

The author is supported by a Veni Innovational

Research Grant from NWO under project number

016.Veni.192.005.

REFERENCES

Ajtai, M., Kumar, R., and Sivakumar, D. (2001). A sieve algorithm for the shortest lattice vector problem. In STOC, pages 601–610.

Albrecht, M., Ducas, L., Herold, G., Kirshanova, E., Postlethwaite, E., and Stevens, M. (2019). The general sieve kernel and new records in lattice reduction. In EUROCRYPT.

Alkim, E., Ducas, L., Pöppelmann, T., and Schwabe, P. (2016). Post-quantum key exchange – a new hope. In USENIX Security Symposium, pages 327–343.

Bäck, T. (1996). Evolutionary Algorithms in Theory and Practice: Evolution Strategies, Evolutionary Programming, Genetic Algorithms. Oxford University Press.

Bäck, T., Fogel, D. B., and Michalewicz, Z., editors (2000a). Evolutionary Computation 1: Basic Algorithms and Operators. IOP Publishing.

Bäck, T., Fogel, D. B., and Michalewicz, Z., editors (2000b). Evolutionary Computation 2: Advanced Algorithms and Operators. IOP Publishing.

Bai, S., Laarhoven, T., and Stehlé, D. (2016). Tuple lattice sieving. In ANTS, pages 146–162.

Becker, A., Ducas, L., Gama, N., and Laarhoven, T. (2016). New directions in nearest neighbor searching with applications to lattice sieving. In SODA, pages 10–24.

Bernstein, D. J., Buchmann, J., and Dahmen, E., editors (2009). Post-quantum cryptography. Springer.

Blum, A., Kalai, A., and Wasserman, H. (2003). Noise-tolerant learning, the parity problem, and the statistical query model. Journal of the ACM, 50(4):506–519.

Bos, J., Ducas, L., Kiltz, E., Lepoint, T., Lyubashevsky, V., Schanck, J. M., Schwabe, P., and Stehlé, D. (2018). CRYSTALS – Kyber: a CCA-secure module-lattice-based KEM. In Euro S&P, pages 353–367.

Coello, C. A., Lamont, G. B., and Veldhuizen, D. A. V. Evolutionary Algorithms for Solving Multi-Objective Problems (2nd edition). Springer.

Diffie, W. and Hellman, M. E. (1976). New directions in cryptography. IEEE Transactions on Information Theory, 22(6):644–654.

Ding, D., Zhu, G., and Wang, X. (2015). A genetic algorithm for searching the shortest lattice vector of SVP challenge. In GECCO, pages 823–830.

Ducas, L. (2018). Shortest vector from lattice sieving: a few dimensions for free. In EUROCRYPT, pages 125–145.

Eiben, A. E., Raué, P. E., and Ruttkay, Z. (1994). Genetic algorithms with multi-parent recombination. In Davidor, Y., Schwefel, H.-P., and Männer, R., editors, Parallel Problem Solving from Nature — PPSN III, pages 78–87, Berlin, Heidelberg. Springer Berlin Heidelberg.

ECTA 2019 - 11th International Conference on Evolutionary Computation Theory and Applications


(ETSI), T. (2019). Quantum-safe cryptography.

Gama, N., Nguyễn, P. Q., and Regev, O. (2010). Lattice enumeration using extreme pruning. In EUROCRYPT, pages 257–278.

Herold, G., Kirshanova, E., and Laarhoven, T. (2018). Speed-ups and time-memory trade-offs for tuple lattice sieving. In PKC, pages 407–436.

Indyk, P. and Motwani, R. (1998). Approximate nearest neighbors: Towards removing the curse of dimensionality. In STOC, pages 604–613.

Khot, S. (2004). Hardness of approximating the shortest vector problem in lattices. Journal of the ACM, 52(5):789–808.

Laarhoven, T. (2015). Sieving for shortest vectors in lattices using angular locality-sensitive hashing. In CRYPTO, pages 3–22.

Laarhoven, T. (2016). Search problems in cryptography. PhD thesis, Eindhoven University of Technology.

Laarhoven, T. and Mariano, A. (2018). Progressive lattice sieving. In PQCrypto, pages 292–311.

Mariano, A., Laarhoven, T., and Bischof, C. (2017). A parallel variant of LDSieve for the SVP on lattices. In PDP, pages 23–30.

May, A. and Ozerov, I. (2015). On computing nearest neighbors with applications to decoding of binary linear codes. In EUROCRYPT, pages 203–228.

McEliece, R. J. (1978). A public-key cryptosystem based on algebraic coding theory. The Deep Space Network Progress Report, pages 114–116.

Micciancio, D. and Regev, O. (2009). Lattice-based cryptography, chapter 5 of (Bernstein et al., 2009), pages 147–191. Springer.

Nguyễn, P. Q. and Vidick, T. (2008). Sieve algorithms for the shortest vector problem are practical. Journal of Mathematical Cryptology, 2(2):181–207.

(NIST), T. (2017). Post-quantum cryptography.

Regev, O. (2005). On lattices, learning with errors, random linear codes, and cryptography. In STOC, pages 84–93.

Regev, O. (2006). Lattice-based cryptography. In CRYPTO, pages 131–141.

Rivest, R. L., Shamir, A., and Adleman, L. (1978). A method for obtaining digital signatures and public-key cryptosystems. Communications of the ACM, 21(2):120–126.

Shor, P. W. (1997). Polynomial-time algorithms for prime factorization and discrete logarithms on a quantum computer. SIAM Journal on Computing, 26(5):1484–1509.

Struck, P. (2019). SVP challenge. http://latticechallenge.org/svp-challenge/.
