Fault Detection of Elevator System using Deep Autoencoder Feature Extraction for Acceleration Signals

Krishna Mohan Mishra and Kalevi J. Huhtala

Unit of Automation Technology and Mechanical Engineering, Tampere University, Tampere, Finland

Keywords:

Elevator System, Deep Autoencoder, Fault Detection, Feature Extraction, Random Forest.

Abstract:

In this research, we propose a generic deep autoencoder model for the automatic calculation of highly informative deep features from elevator time series data. A random forest algorithm is used for fault detection based on the extracted deep features. Recorded maintenance actions are used to label the sensor data as healthy or faulty. To validate the model and demonstrate its efficacy, the rest of the healthy data is used to check how well false positives are avoided. The newly extracted deep features provide 100% accuracy in fault detection and in avoiding false positives, which is better than the existing features. Random forest was also used to detect faults based on the existing features in order to compare results. The new deep features extracted from the dataset with the deep autoencoder random forest model outperform the existing features. Good classification and robustness against overfitting are key characteristics of our model. This research will help to reduce unnecessary visits of service technicians to installation sites by detecting false alarms in various predictive maintenance systems.

1 INTRODUCTION

In recent years, elevator systems have been used more and more extensively in apartments, commercial facilities and office buildings. Urban areas are now home to 54% of the world's population (Desa, 2014). Therefore, elevator systems require proper maintenance and safety. The development of predictive and pre-emptive maintenance strategies will be the next step in improving the safety of elevator systems, which will also increase their lifetime and reduce repair costs whilst maximizing the uptime of the system (Ebeling, 2011), (Ebeling and Haul, 2016). Elevator production and service companies are now opting for predictive maintenance policies to provide better service to customers. They are remotely monitoring faults in elevators and estimating the remaining lifetime of the components responsible for faults. Elevator systems require fault detection and diagnosis for healthy operation (Wang et al., 2009).

The state of the art includes fault diagnosis methods with feature extraction methodologies based on deep neural networks (Zhang et al., 2017), (Jia et al., 2016), (Bulla et al., 2018) and convolutional neural networks (Xia et al., 2018), (Jing et al., 2017) for rotatory machines similar to elevator systems. Fault detection methods for rotatory machines also use support vector machines (Martínez-Rego et al., 2011) and extreme learning machines (Yang and Zhang, 2016). To improve on the performance of traditional fault diagnosis methods, we have developed an intelligent deep autoencoder model for feature extraction from the data, and random forest performs the fault detection in elevator systems based on the extracted features.

In the last decade, highly meaningful statistical patterns have been extracted from large-scale and high-dimensional datasets with neural networks (Calimeri et al., ). Elevator ride comfort has also been improved via speed profile design using neural networks (Seppala et al., 1998). Nonlinear time series modeling (Lee, 2014) is one of the successful applications of neural networks. Relevant features can be self-learned from multiple signals using a deep learning network (Fernández-Varela et al., ). Deep learning algorithms are frequently used in areas such as knowledge engineering (Mohamed et al., 2017), text analysis (Chatterjee and Bhardwaj, 2010), ontology development (Alkhatib et al., 2017), intelligent transportation (Hina et al., 2017) and data analysis (Henriques and Stacey, 2012). Autoencoding is a process based on a feedforward neural network (Hänninen and Kärkkäinen, 2016) for nonlinear dimension reduction with natural transformation architecture. Autoencoders (Albuquerque et al., 2018) are very powerful as nonlinear feature extractors. Autoencoders can extract features of high interest from sensor data for increasing the generalization ability of machine learning models (Huet et al., 2016). Autoencoders have been studied for decades and were first introduced by LeCun (Fogelman-Soulie et al., 1987). Traditionally, autoencoders have two main uses, namely feature learning and dimensionality reduction. Autoencoders and latent variable models (Madani and Vlajic, 2018) are theoretically related, which makes autoencoders one of the most compelling subspace analysis techniques. Feature extraction methods based on autoencoders are used for fault detection in systems such as induction motors (Sun et al., 2016) and wind turbines (Jiang et al., 2018), which differ from the elevator systems studied in our research.

Mishra, K. and Huhtala, K.

Fault Detection of Elevator System using Deep Autoencoder Feature Extraction for Acceleration Signals.

DOI: 10.5220/0008347403360342

In Proceedings of the 11th International Joint Conference on Knowledge Discovery, Knowledge Engineering and Knowledge Management (IC3K 2019), pages 336-342

ISBN: 978-989-758-382-7

Copyright © 2019 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

In our previous research, elevator key performance and ride quality features were calculated mainly from the acceleration signals of raw sensor data; we call these the existing features here. Random forest was used to classify these existing features to detect faults. Calculating the existing domain-specific features from raw sensor data requires expert knowledge of the domain, and some information is lost in the process. To avoid these limitations, an automated feature extraction technique based on a deep autoencoder approach is developed for raw sensor data in all x, y and z directions, and random forest is used to detect faults based on these deep features. The rest of this paper is organized as follows. Section 2 presents the methodology of the paper, including the deep autoencoder and random forest algorithms. Section 3 then covers the details of the experiments performed, the results and the discussion. Finally, Section 4 concludes the paper and presents future work.

2 METHODOLOGY

In this research, we have used 12 different existing

features describing the motion and vibration of an el-

evator. These features are derived from raw sensor

data for fault detection and diagnostics of multiple

faults. In this research, as an extension to the work

of our previous research (Mishra and Huhtala, 2019),

we have developed an automated feature extraction

technique for raw sensor data, to compare the results

using new extracted deep features. We have analyzed

almost one week of the data from one traction elevator

in this research. Around 200 rides per day are usually

produced by an elevator. Robustness of the algorithm

is tested by large dataset because each ride includes

around 5000 rows of the data. Data is divided into two

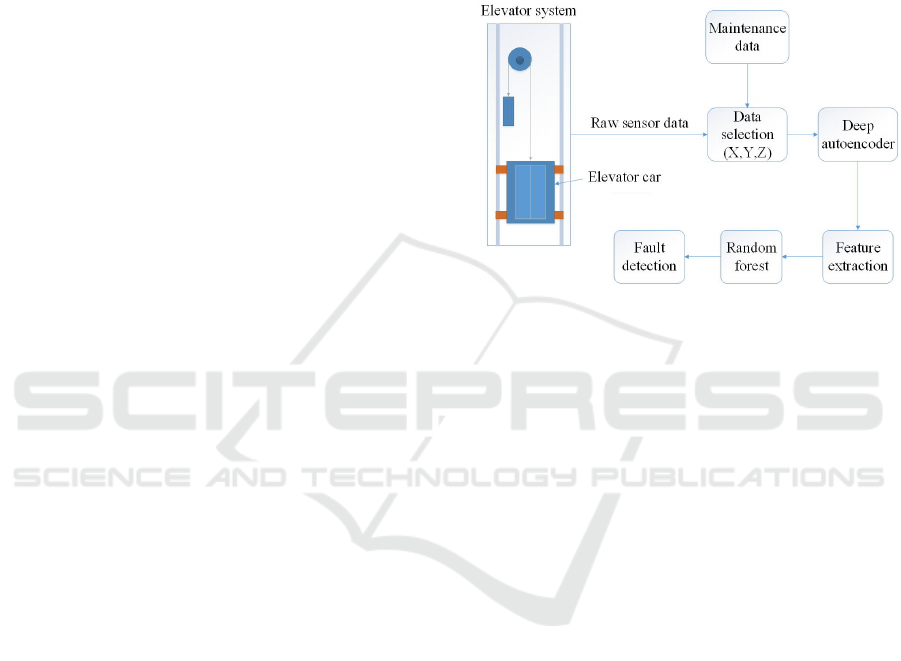

parts 70% for training and rest 30% for testing. Figure

1 shows the fault detection approach used in this pa-

per, which includes raw sensor data in all x, y and z di-

rections extracted based on time periods provided by

the maintenance data. Data collected from an elevator

system is fed to the deep autoencoder model for fea-

ture extraction and then random forest performs the

fault detection task based on extracted deep features.

We are extracting features from all the three x, y and

z components of the acceleration signals, which is as

an extension to the work of our previous research.

Figure 1: Fault detection approach.
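The 70%/30% division of the data can be illustrated with a minimal Python sketch. The paper does not state whether the split is random or made per ride, so the shuffled, ride-level split below, the function name `split_rides` and the fixed seed are assumptions for illustration only.

```python
import random


def split_rides(ride_ids, train_fraction=0.7, seed=0):
    """Shuffle ride identifiers and split them into training and testing parts."""
    shuffled = list(ride_ids)
    random.Random(seed).shuffle(shuffled)  # fixed seed keeps the split reproducible
    cut = round(train_fraction * len(shuffled))
    return shuffled[:cut], shuffled[cut:]


# Hypothetical example: 200 ride identifiers, roughly one day of rides.
train_ids, test_ids = split_rides(range(200))
print(len(train_ids), len(test_ids))
```

Splitting by ride rather than by individual row keeps all samples of one ride on the same side of the split, which is one plausible way to avoid leakage between training and testing.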

2.1 Deep Autoencoder

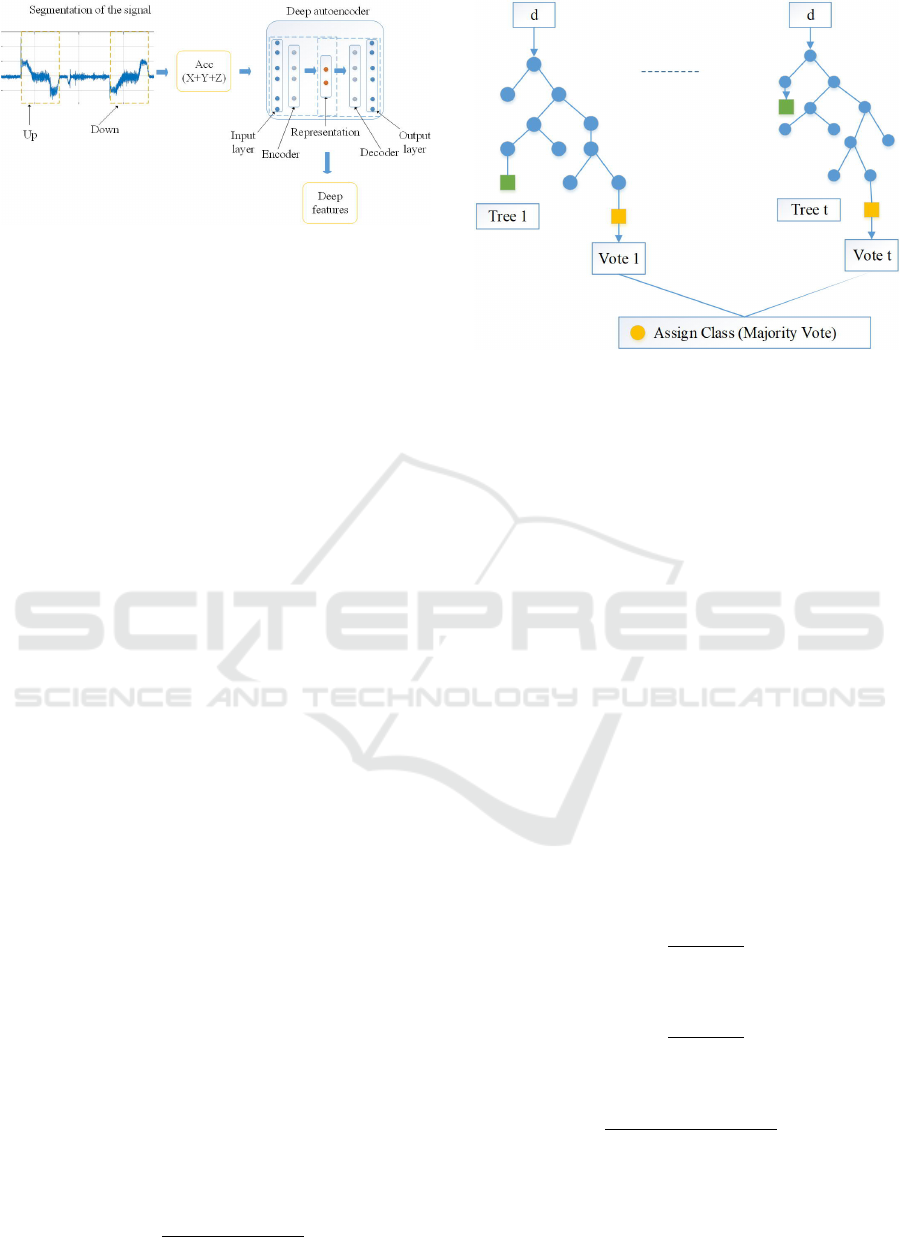

We have developed a deep autoencoder model based on the deep learning autoencoder feature extraction methodology. A basic autoencoder is built on a feedforward neural network: a fully connected three-layer network with one hidden layer. The input and output layers of a typical autoencoder have the same number of neurons, and the network is trained to reproduce its input at its output. We use a five-layer deep autoencoder (see Figure 2) comprising input, encoder, representation, decoder and output layers, which is a different approach from those in (Jiang et al., 2018), (Vincent et al., 2008). In our approach, we first analyze the data to find the most frequent floor pattern and then feed the segmented raw sensor data windows, separately for the up and down directions, to the deep autoencoder model to extract new deep features from the raw data. Lastly, we apply random forest as a classifier for fault detection based on the new deep features extracted from the data.
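As a rough illustration of feeding up and down rides separately, the sketch below groups rides by travel direction and concatenates the x, y and z acceleration windows of each ride into one input vector. The ride dictionary layout, the field names and the concatenation order are hypothetical; the paper does not specify how the windows are arranged.

```python
def travel_direction(start_floor, end_floor):
    """Return 'up' or 'down' for a ride between two different floors."""
    if start_floor == end_floor:
        raise ValueError("a ride must connect two different floors")
    return "up" if end_floor > start_floor else "down"


def to_input_vector(ride):
    """Concatenate the x, y and z acceleration windows into one flat vector."""
    return list(ride["acc_x"]) + list(ride["acc_y"]) + list(ride["acc_z"])


def group_by_direction(rides):
    """Build separate lists of input vectors for up and down rides."""
    groups = {"up": [], "down": []}
    for ride in rides:
        direction = travel_direction(ride["start"], ride["end"])
        groups[direction].append(to_input_vector(ride))
    return groups


# Hypothetical toy rides with three samples per axis.
rides = [
    {"start": 0, "end": 6, "acc_x": [0.1, 0.2, 0.1],
     "acc_y": [0.0, 0.1, 0.0], "acc_z": [9.8, 9.9, 9.8]},
    {"start": 6, "end": 0, "acc_x": [0.2, 0.1, 0.2],
     "acc_y": [0.1, 0.0, 0.1], "acc_z": [9.8, 9.7, 9.8]},
]
groups = group_by_direction(rides)
```

Each group could then be passed to its own feature extraction and classification run, mirroring the separate up and down analyses in Section 3.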

The input $x$ is first corrupted into $x'$, and the encoder then transforms the corrupted input $x'$ into the hidden representation $h$ through the nonlinear mapping

$$h = f(W_1 x' + b) \tag{1}$$

where $f(\cdot)$ is a nonlinear activation function such as the sigmoid function, $W_1 \in \mathbb{R}^{k \times m}$ is the weight matrix and $b \in \mathbb{R}^{k}$ is the bias vector to be optimized in encoding, with $k$ nodes in the hidden layer (Vincent et al., 2008).

Figure 2: Deep autoencoder feature extraction approach (Acc represents acceleration signal).

Then, with parameters $W_2 \in \mathbb{R}^{m \times k}$ and $c \in \mathbb{R}^{m}$, the decoder uses a nonlinear transformation to map the hidden representation $h$ to a reconstructed vector $x''$ at the output layer:

$$x'' = g(W_2 h + c) \tag{2}$$

where $g(\cdot)$ is again a nonlinear function (the sigmoid function). In this study, the weight matrix is $W_2 = W_1^{T}$, i.e. tied weights are used for better learning performance (Japkowicz et al., 2000).
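Equations (1) and (2) with tied weights can be traced in a few lines of plain Python. This is only a single encode and decode pass through one hidden layer, not the five-layer model or its training. The masking-noise corruption, the layer sizes, the zero biases and all function names are assumptions made for illustration.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def matvec(W, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, v)) for row in W]


def transpose(W):
    return [list(col) for col in zip(*W)]


def corrupt(x, drop_prob, rng):
    """Assumed corruption: set each component to zero with probability drop_prob."""
    return [0.0 if rng.random() < drop_prob else x_i for x_i in x]


def encode(W1, b, x_corrupted):
    """Eq. (1): h = f(W1 x' + b) with a sigmoid activation."""
    return [sigmoid(z + b_i) for z, b_i in zip(matvec(W1, x_corrupted), b)]


def decode(W1, c, h):
    """Eq. (2) with tied weights: x'' = g(W1^T h + c)."""
    W2 = transpose(W1)
    return [sigmoid(z + c_i) for z, c_i in zip(matvec(W2, h), c)]


rng = random.Random(0)
m, k = 6, 2  # input size m and hidden size k (toy values)
W1 = [[rng.uniform(-0.5, 0.5) for _ in range(m)] for _ in range(k)]  # k x m
b = [0.0] * k
c = [0.0] * m
x = [rng.random() for _ in range(m)]

x_corrupted = corrupt(x, 0.3, rng)
h = encode(W1, b, x_corrupted)       # k-dimensional deep feature vector
x_reconstructed = decode(W1, c, h)   # m-dimensional reconstruction
```

With tied weights only `W1`, `b` and `c` need to be learned, which roughly halves the number of weight parameters compared with separate encoder and decoder matrices.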

2.2 Random Forest

Random forest (RF) is a type of ensemble classifier that randomly selects subsets of training samples and variables to produce multiple decision trees (Breiman, 2001). An RF classifier can handle high data dimensionality and multicollinearity, although imbalanced data affect its results. It can also be used for sample proximity analysis, i.e. outlier detection and removal in the training set (Belgiu and Drăguţ, 2016). The final classification of RF is obtained by averaging the class assignment probabilities over all produced trees (t). Testing data (d) that is unknown to all the decision trees is used for evaluation by the voting method, and the class with the maximum number of votes is selected (see Figure 3). The random forest classifier also provides a variable importance measurement, which helps in reducing the dimensions of hyperspectral data in order to identify the most relevant features of the data, and helps in selecting the most suitable reason for classification of a certain target class.

Specifically, let $v_t^l$ be the sensor data value of the $l$-th training sample in the arrived leaf node of decision tree $t \in T$, where $l \in [1, \ldots, L_t]$ and $L_t$ is the number of training samples in the arrived leaf node of decision tree $t$. The final prediction result is given by (Huynh et al., 2016):

$$\mu = \frac{\sum_{t \in T} \sum_{l \in [1, \ldots, L_t]} v_t^l}{\sum_{t \in T} L_t} \tag{3}$$
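Equation (3) is a pooled mean over the training samples in the leaf nodes reached in every tree. A minimal sketch, assuming the leaf values are already available as one list per tree (the function name and the toy values are hypothetical):

```python
def forest_prediction(leaf_values_per_tree):
    """Eq. (3): sum of all leaf values divided by the total number of leaf samples."""
    total = sum(sum(values) for values in leaf_values_per_tree)
    count = sum(len(values) for values in leaf_values_per_tree)
    if count == 0:
        raise ValueError("no training samples in the arrived leaf nodes")
    return total / count


# Three hypothetical trees whose arrived leaves hold 3, 2 and 1 training samples.
leaf_values = [[1.0, 1.0, 0.0], [1.0, 0.0], [1.0]]
mu = forest_prediction(leaf_values)  # (2 + 1 + 1) / (3 + 2 + 1)
```

Because the denominator is the total sample count, trees whose arrived leaf holds more training samples carry more weight than in a plain average of per-tree means.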

Figure 3: Classification phase of random forest classifier.

The final decision of all classification trees by the voting method is given by (Liu et al., 2017):

$$H(a) = \arg\max_{y_j} \sum_{i \in [1, 2, \ldots, Z]} I(h_i(a) = y_j) \tag{4}$$

where $j = 1, 2, \ldots, P$, $H(a)$ is the combination model, $Z$ is the number of training subsets, on each of which a decision tree model $h_i(a)$, $i \in [1, 2, \ldots, Z]$, is built, $y_j$, $j = 1, 2, \ldots, P$, are the outputs or labels of the $P$ classes, and $I(\cdot)$ is the combination strategy, defined as:

$$I(h_i(a) = y_j) = \begin{cases} 1, & h_i(a) = y_j \\ 0, & \text{otherwise} \end{cases} \tag{5}$$

where $h_i(a)$ is the output of the decision tree and $y_j$ is the $j$-th class label of the $P$ classes, $j = 1, 2, \ldots, P$.
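The voting rule of Equations (4) and (5) amounts to counting, for each class label, how many trees predicted it and returning the label with the most votes. The sketch below assumes the per-tree predictions are already computed; the function name and the tie-breaking rule (first label in `class_labels` wins) are illustrative choices, not taken from the paper.

```python
from collections import Counter


def majority_vote(tree_predictions, class_labels):
    """Eq. (4): return the class label y_j that receives the most tree votes."""
    votes = Counter(tree_predictions)  # votes[y] = sum over trees of I(h_i(a) = y)
    return max(class_labels, key=lambda y: votes[y])


# Five hypothetical trees voting on one ride: class 1 (faulty) wins 3 to 2.
decision = majority_vote([0, 1, 1, 0, 1], class_labels=[0, 1])
```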

2.3 Evaluation Parameters

Evaluation parameters used in this research are defined with the confusion matrix in Table 1.

Sensitivity is the rate of positive test results among the actual positives,

$$\text{Sensitivity} = \frac{TP}{TP + FN} \times 100\% \tag{6}$$

Specificity is the rate of negative test results among the actual negatives,

$$\text{Specificity} = \frac{TN}{TN + FP} \times 100\% \tag{7}$$

Accuracy is the overall measure,

$$\text{Accuracy} = \frac{TP + TN}{TP + FP + TN + FN} \times 100\% \tag{8}$$

KEOD 2019 - 11th International Conference on Knowledge Engineering and Ontology Development


Table 1: Confusion matrix.

| | Predicted (P) | Predicted (N) |
|---|---|---|
| Actual (P) | True positive (TP) | False negative (FN) |
| Actual (N) | False positive (FP) | True negative (TN) |
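The three evaluation parameters follow directly from the four cells of the confusion matrix. A small sketch with made-up labels (class 1 is taken as the positive, i.e. faulty, class; the function names and the data are hypothetical):

```python
def confusion_counts(actual, predicted, positive=1):
    """Count TP, FN, FP and TN for a binary problem."""
    pairs = list(zip(actual, predicted))
    tp = sum(1 for a, p in pairs if a == positive and p == positive)
    fn = sum(1 for a, p in pairs if a == positive and p != positive)
    fp = sum(1 for a, p in pairs if a != positive and p == positive)
    tn = sum(1 for a, p in pairs if a != positive and p != positive)
    return tp, fn, fp, tn


def sensitivity(tp, fn):
    return 100.0 * tp / (tp + fn)                   # Eq. (6)


def specificity(tn, fp):
    return 100.0 * tn / (tn + fp)                   # Eq. (7)


def accuracy(tp, fn, fp, tn):
    return 100.0 * (tp + tn) / (tp + fp + tn + fn)  # Eq. (8)


# Hypothetical labels for eight rides (1 = faulty, 0 = healthy).
actual = [1, 1, 1, 0, 0, 0, 0, 1]
predicted = [1, 1, 0, 0, 0, 1, 0, 1]
tp, fn, fp, tn = confusion_counts(actual, predicted)
print(sensitivity(tp, fn), specificity(tn, fp), accuracy(tp, fn, fp, tn))
```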

3 RESULTS AND DISCUSSION

In this research, we first selected the most frequent floor patterns from the data, i.e. the floor patterns with the maximum number of rides between specific floor combinations. The next step is the selection of faulty rides in all x, y and z directions from the most frequent floor patterns, based on the time periods provided by the maintenance data. An equal number of healthy rides is also selected; healthy rides are labelled as class 0 and faulty rides as class 1. Finally, the deep autoencoder model is used for feature extraction from the data.
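The selection steps can be sketched as follows, assuming each ride is a record with start floor, end floor and a faulty flag derived from the maintenance data. The record layout, the function names and the random choice of healthy rides are assumptions; the paper does not describe how the healthy rides are picked.

```python
import random
from collections import Counter


def most_frequent_pattern(rides):
    """Return the (start, end) floor pair with the largest number of rides."""
    counts = Counter((ride["start"], ride["end"]) for ride in rides)
    return counts.most_common(1)[0][0]


def balanced_selection(rides, pattern, seed=0):
    """Label faulty rides 1 and an equally sized sample of healthy rides 0.

    Returns the labelled rides and the remaining healthy rides, which can be
    set aside for the false positive check.
    """
    on_pattern = [r for r in rides if (r["start"], r["end"]) == pattern]
    faulty = [r for r in on_pattern if r["faulty"]]
    healthy = [r for r in on_pattern if not r["faulty"]]
    n = min(len(faulty), len(healthy))  # keep both classes the same size
    chosen = set(random.Random(seed).sample(range(len(healthy)), n))
    labelled = [(r, 1) for r in faulty[:n]]
    labelled += [(r, 0) for i, r in enumerate(healthy) if i in chosen]
    remaining = [r for i, r in enumerate(healthy) if i not in chosen]
    return labelled, remaining


# Hypothetical rides: floor pattern 0 to 6 is the most frequent one.
rides = (
    [{"start": 0, "end": 6, "faulty": True} for _ in range(2)]
    + [{"start": 0, "end": 6, "faulty": False} for _ in range(4)]
    + [{"start": 6, "end": 0, "faulty": False} for _ in range(3)]
    + [{"start": 0, "end": 3, "faulty": False}]
)
pattern = most_frequent_pattern(rides)
labelled, remaining = balanced_selection(rides, pattern)
```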

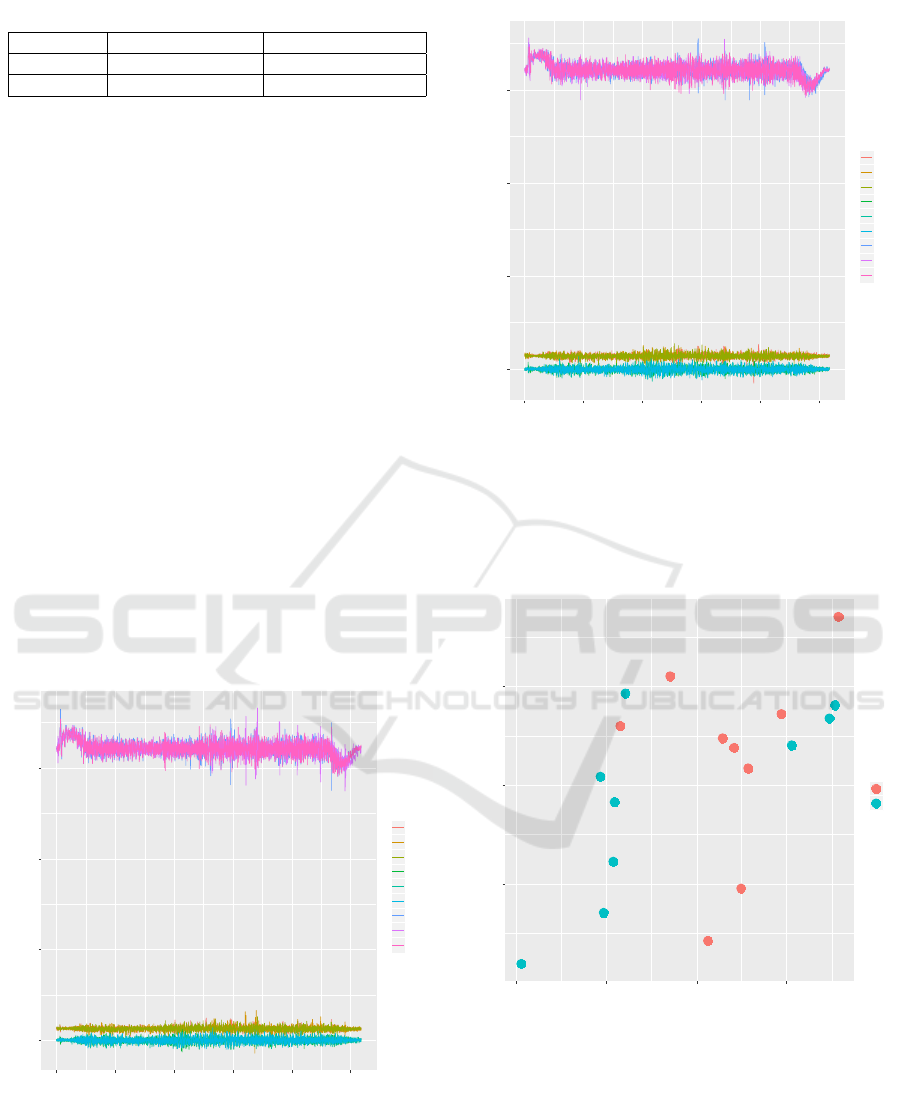

3.1 Up Movement

We have analyzed up and down movements separately because a traction elevator usually produces slightly different levels of vibration in each direction. First, we selected the floor pattern 0 to 6 and the faulty rides based on the time periods provided by the maintenance data, as shown in Figure 4.

[Plot: Faulty rides (floor:0-6). x-axis: Samples; y-axis: Acc all directions; legend: variable V1 to V9.]

Figure 4: Rides from faulty data.

Then, we selected an equal number of rides from the healthy data, as shown in Figure 5. The next step is to label the healthy and faulty rides with class labels 0 and 1, respectively. Healthy and faulty rides with class labels are fed to the deep autoencoder model, and the generated deep features are shown in Figure 6. In deep autoencoder terminology, these are called deep features or latent features, and they show hidden representations of the data.

[Plot: Healthy rides (floor:0-6). x-axis: Samples; y-axis: Acc all directions; legend: variable V1 to V9.]

Figure 5: Rides from healthy data.

[Plot: Deep features (floor:0-6). x-axis: Feature axis 1; y-axis: Feature axis 2; legend: class 0 and 1.]

Figure 6: Extracted deep autoencoder features (visualization of the features w.r.t class variable).

The extracted deep features are fed to the random forest algorithm for classification, and the results show 100% accuracy in fault detection, as shown in Table 2. We have also calculated the accuracy in terms of avoiding false positives for both feature sets and found that the new deep features generated in this research outperform the existing features. To analyze the number of false positives, we used the rest of the healthy rides, which are similar to those in Figure 5. These healthy rides are labelled as class 0 and fed to the deep autoencoder to extract new deep features from the data, as presented in Figure 7. These new deep features are then classified with the pre-trained deep autoencoder random forest model to test the efficacy of the model in terms of false positives.

[Plot: Features from rest of the healthy rides (floor:0-6). x-axis: Feature axis 1; y-axis: Feature axis 2; legend: class 0.]

Figure 7: Extracted deep features (only healthy rides).

Table 2 presents the results for the upward movement of the elevator in terms of accuracy, sensitivity and specificity. We have also included the accuracy of avoiding false positives as an evaluation parameter for this research. The results show that the new deep features provide better accuracy in terms of avoiding false positives (1 compared with 0.88 for the existing features), which is helpful in detecting false alarms for elevator predictive maintenance strategies. A false positives value of 1 means that 100% of the healthy data is detected as healthy, i.e. there are no false alarms. This is extremely helpful in reducing unnecessary visits of maintenance personnel to installation sites.

Table 2: Fault detection analysis (the False positives field relates to analyzing the rest of the healthy rides after the training and testing phase).

| | Deep features | Existing features |
|---|---|---|
| Accuracy | 1 | 1 |
| Sensitivity | 1 | 1 |
| Specificity | 1 | 1 |
| False positives | 1 | 0.88 |
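The False positives row can be read as the fraction of held-out healthy rides that the trained model still classifies as healthy, so a value of 1 means no false alarms. A minimal sketch of that reading, with hypothetical predictions that are not the paper's data:

```python
def healthy_detection_rate(predicted_labels, healthy_label=0):
    """Fraction of healthy-only rides that are predicted as healthy."""
    if not predicted_labels:
        raise ValueError("no predictions given")
    correct = sum(1 for label in predicted_labels if label == healthy_label)
    return correct / len(predicted_labels)


# Ten hypothetical healthy rides, one of which raises a false alarm (class 1).
predictions = [0, 0, 0, 1, 0, 0, 0, 0, 0, 0]
rate = healthy_detection_rate(predictions)
```

Under this reading, every prediction of class 1 on the healthy-only set is a false positive, so the score equals the specificity measured on that set.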

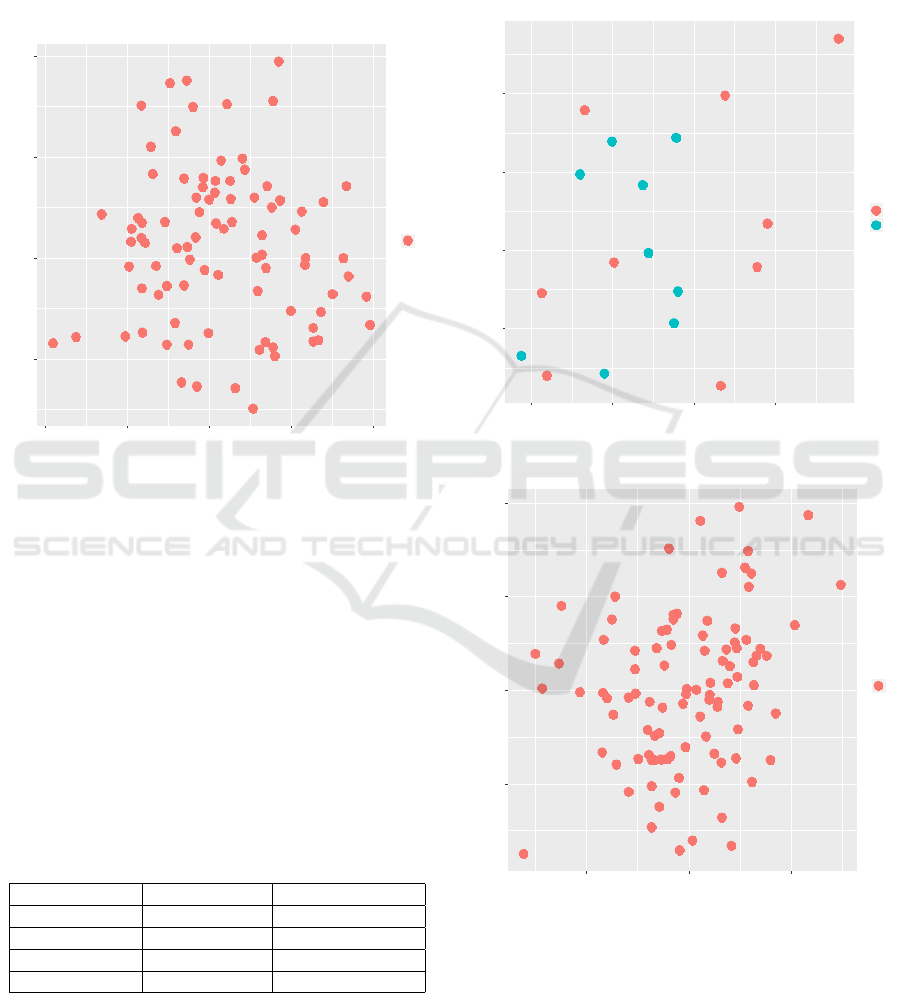

3.2 Down Movement

For downward motion, just as in the case of up movement, we feed both healthy and faulty rides with class labels to the deep autoencoder model for the extraction of new deep features, as shown in Figure 8.

[Plot: Deep features (floor:6-0). x-axis: Feature axis 1; y-axis: Feature axis 2; legend: class 0 and 1.]

Figure 8: Extracted deep features.

[Plot: Features from rest of the healthy rides (floor:6-0). x-axis: Feature axis 1; y-axis: Feature axis 2; legend: class 0.]

Figure 9: Extracted deep features (only healthy rides).

Finally, the newly extracted deep features are classified with the random forest model, and the results are shown in Table 3. After this, the rest of the healthy rides, with class label 0, are used to analyze the number of false positives. The extracted deep features are presented in Figure 9.

Table 3 presents the results for fault detection with the deep autoencoder random forest model in the downward direction. The results are similar to those for the upward direction, but there is a significant difference in accuracy when analyzing the number of false positives: 1 with the new deep features compared with 0.52 with the existing features.

Table 3: Fault detection analysis.

| | Deep features | Existing features |
|---|---|---|
| Accuracy | 1 | 1 |
| Sensitivity | 1 | 1 |
| Specificity | 1 | 1 |
| False positives | 1 | 0.52 |

4 CONCLUSIONS AND FUTURE WORK

In this research, we propose a novel fault detection technique for health monitoring of elevator systems. We have developed a generic model for automated feature extraction and fault detection in the health state monitoring of elevator systems. Our approach with the newly extracted deep features provided 100% accuracy in detecting faults and in avoiding false positives. The results show that we have succeeded in developing a generic model, which can also be applied to other machine systems for automated feature extraction and fault detection. The results are useful for detecting false alarms in elevator predictive maintenance. If the analysis results are utilized to allocate maintenance resources, the approach will also reduce unnecessary visits of maintenance personnel to installation sites. Our model can also be used for solving diagnostics problems with automatically generated, highly informative deep features in different predictive maintenance solutions. The new deep features extracted by our model outperform the existing features calculated from the same raw sensor dataset. No prior domain knowledge is required for the automated feature extraction approach. Robustness against overfitting and dimensionality reduction are the two main characteristics of our model. The experimental results show that our generic model is feasible, which will increase the safety of passengers. The robustness of our model is tested on a large dataset, which demonstrates the efficacy of our model.

In future work, we will extend our approach to more elevators, including multiple floor patterns and real-world big data cases, to validate its potential for other applications and improve its efficacy.

REFERENCES

Albuquerque, A., Amador, T., Ferreira, R., Veloso, A., and

Ziviani, N. (2018). Learning to rank with deep autoen-

coder features. In 2018 International Joint Conference

on Neural Networks (IJCNN), pages 1–8. IEEE.

Alkhatib, W., Herrmann, L. A., and Rensing, C. (2017).

Onto. kom-towards a minimally supervised ontology

learning system based on word embeddings and con-

volutional neural networks. In KEOD, pages 17–26.

Belgiu, M. and Drăguţ, L. (2016). Random forest in remote sensing: A review of applications and future directions. ISPRS Journal of Photogrammetry and Remote Sensing, 114:24–31.

Breiman, L. (2001). Random forests. Machine learning,

45(1):5–32.

Bulla, J., Orjuela-Cañón, A. D., and Flórez, O. D. (2018). Feature extraction analysis using filter banks for faults classification in induction motors. In 2018 International Joint Conference on Neural Networks (IJCNN), pages 1–6. IEEE.

Calimeri, F., Marzullo, A., Stamile, C., and Terracina, G.

Graph based neural networks for automatic classifica-

tion of multiple sclerosis clinical courses. In Proceed-

ings of the European Symposium on Artificial Neural

Networks, Computational Intelligence and Machine

Learning (ESANN 18)(2018, forthcoming).

Chatterjee, N. and Bhardwaj, A. (2010). Single document

text summarization using random indexing and neural

networks. In KEOD, pages 171–176.

Desa (2014). World urbanization prospects, the 2011 revi-

sion. Population Division, Department of Economic

and Social Affairs, United Nations Secretariat.

Ebeling, T. (2011). Condition monitoring for elevators–an

overview. Lift Report, 6:25–26.

Ebeling, T. and Haul, M. (2016). Results of a field trial aim-

ing at demonstrating the permanent detection of ele-

vator wear using intelligent sensors. In Proc. ELEV-

CON, pages 101–109.

Fernández-Varela, I., Athanasakis, D., Parsons, S., Hernández-Pereira, E., and Moret-Bonillo, V. Sleep staging with deep learning: a convolutional model. In Proceedings of the European Symposium on Artificial Neural Networks, Computational Intelligence and Machine Learning (ESANN 2018).

Fogelman-Soulie, F., Robert, Y., and Tchuente, M. (1987).

Automata networks in computer science: theory and

applications. Manchester University Press and Prince-

ton University Press.

Hänninen, J. and Kärkkäinen, T. (2016). Comparison of four-and six-layered configurations for deep network pretraining. In European Symposium on Artificial Neural Networks, Computational Intelligence and Machine Learning.

Henriques, G. and Stacey, D. A. (2012). An ontology-based

framework for syndromic surveillance method selec-

tion. In KEOD, pages 396–400.

Hina, M. D., Thierry, C., Soukane, A., and Ramdane-Cherif, A. (2017). Ontological and machine learning approaches for managing driving context in intelligent transportation. In KEOD, pages 302–309.

Huet, R., Courty, N., and Lefèvre, S. (2016). A new penalisation term for image retrieval in clique neural networks. In European Symposium on Artificial Neural Networks, Computational Intelligence and Machine Learning (ESANN).

Huynh, T., Gao, Y., Kang, J., Wang, L., Zhang, P., Lian,

J., and Shen, D. (2016). Estimating ct image from

mri data using structured random forest and auto-

context model. IEEE transactions on medical imag-

ing, 35(1):174.

Japkowicz, N., Hanson, S. J., and Gluck, M. A. (2000).

Nonlinear autoassociation is not equivalent to pca.

Neural computation, 12(3):531–545.

Jia, F., Lei, Y., Lin, J., Zhou, X., and Lu, N. (2016). Deep

neural networks: A promising tool for fault character-

istic mining and intelligent diagnosis of rotating ma-

chinery with massive data. Mechanical Systems and

Signal Processing, 72:303–315.

Jiang, G., Xie, P., He, H., and Yan, J. (2018). Wind turbine

fault detection using a denoising autoencoder with

temporal information. IEEE/ASME Transactions on

Mechatronics, 23(1):89–100.

Jing, L., Wang, T., Zhao, M., and Wang, P. (2017). An adap-

tive multi-sensor data fusion method based on deep

convolutional neural networks for fault diagnosis of

planetary gearbox. Sensors, 17(2):414.

Lee, C.-C. (2014). Gender classification using m-estimator

based radial basis function neural network. In Signal

Processing and Multimedia Applications (SIGMAP),

2014 International Conference on, pages 302–306.

IEEE.

Liu, Z., Tang, B., He, X., Qiu, Q., and Liu, F. (2017).

Class-specific random forest with cross-correlation

constraints for spectral–spatial hyperspectral image

classification. IEEE Geoscience and Remote Sensing

Letters, 14(2):257–261.

Madani, P. and Vlajic, N. (2018). Robustness of deep

autoencoder in intrusion detection under adversarial

contamination. In Proceedings of the 5th Annual Sym-

posium and Bootcamp on Hot Topics in the Science of

Security, page 1. ACM.

Martínez-Rego, D., Fontenla-Romero, O., and Alonso-Betanzos, A. (2011). Power wind mill fault detection via one-class ν-svm vibration signal analysis. In Neural Networks (IJCNN), The 2011 International Joint Conference on, pages 511–518. IEEE.

Mishra, K. M. and Huhtala, K. (2019). Fault detection of el-

evator systems using multilayer perceptron neural net-

work. In 24th IEEE Conference on Emerging Tech-

nologies and Factory Automation (ETFA). IEEE.

Mohamed, R., El-Makky, N. M., and Nagi, K. (2017). Hy-

bqa: Hybrid deep relation extraction for question an-

swering on freebase. In KEOD, pages 128–136.

Seppala, J., Koivisto, H., and Koivo, H. (1998). Modeling

elevator dynamics using neural networks. In Neural

Networks Proceedings, 1998. IEEE World Congress

on Computational Intelligence. The 1998 IEEE Inter-

national Joint Conference on, volume 3, pages 2419–

2424. IEEE.

Sun, W., Shao, S., Zhao, R., Yan, R., Zhang, X., and Chen,

X. (2016). A sparse auto-encoder-based deep neural

network approach for induction motor faults classifi-

cation. Measurement, 89:171–178.

Vincent, P., Larochelle, H., Bengio, Y., and Manzagol, P.-

A. (2008). Extracting and composing robust features

with denoising autoencoders. In Proceedings of the

25th international conference on Machine learning,

pages 1096–1103. ACM.

Wang, P., He, W., and Yan, W. (2009). Fault diagnosis of

elevator braking system based on wavelet packet algo-

rithm and fuzzy neural network. In Electronic Mea-

surement & Instruments, 2009. ICEMI’09. 9th Inter-

national Conference on, pages 4–1028. IEEE.

Xia, M., Li, T., Xu, L., Liu, L., and de Silva, C. W.

(2018). Fault diagnosis for rotating machinery us-

ing multiple sensors and convolutional neural net-

works. IEEE/ASME Transactions on Mechatronics,

23(1):101–110.

Yang, Z.-X. and Zhang, P.-B. (2016). Elm meets rae-elm:

A hybrid intelligent model for multiple fault diagnosis

and remaining useful life predication of rotating ma-

chinery. In Neural Networks (IJCNN), 2016 Interna-

tional Joint Conference on, pages 2321–2328. IEEE.

Zhang, R., Peng, Z., Wu, L., Yao, B., and Guan, Y. (2017).

Fault diagnosis from raw sensor data using deep neu-

ral networks considering temporal coherence. Sen-

sors, 17(3):549.
