A Comparative Evaluation of Visual and Natural Language Question Answering over Linked Data

Gerhard Wohlgenannt¹ᵃ, Dmitry Mouromtsev¹ᵇ, Dmitry Pavlov², Yury Emelyanov² and Alexey Morozov²

¹ Faculty of Software Engineering and Computer Systems, ITMO University, St. Petersburg, Russia

² Vismart Ltd., St. Petersburg, Russia

Keywords:

Diagrammatic Question Answering, Visual Data Exploration, Knowledge Graphs, QALD.

Abstract:

With the growing number and size of Linked Data datasets, it is crucial to make the data accessible and useful for users without knowledge of formal query languages. Two approaches towards this goal are knowledge graph visualization and natural language interfaces. Here, we investigate specifically question answering (QA) over Linked Data by comparing a diagrammatic visual approach with existing natural language-based systems. Given a QA benchmark (QALD7), we evaluate a visual method, which is based on iteratively creating diagrams until the answer is found, against four QA systems that take natural language queries as input. Besides other benefits, the visual approach achieves higher performance, but it also requires more manual input. The results indicate that the methods can be used complementarily, that such a combination has a large positive impact on QA performance, and that it also facilitates additional features such as data exploration.

1 INTRODUCTION

The Semantic Web provides a large number of structured datasets in the form of Linked Data. One central obstacle is to make this data available and consumable to lay users without knowledge of formal query languages such as SPARQL. In order to satisfy specific information needs of users, a typical approach is to use natural language interfaces that allow question answering over Linked Data (QALD) by translating user queries into SPARQL (Diefenbach et al., 2018; López et al., 2013).

As an alternative method, (Mouromtsev et al., 2018) propose a visual method of QA using an iterative diagrammatic approach. The diagrammatic approach relies on visual means only. It requires more user interaction than natural language QA, but it also provides additional benefits. These include intuitive insights into dataset characteristics, a broader understanding of the answer and the potential to further explore the answer context, and knowledge sharing by storing and sharing the resulting diagrams.

In contrast to (Mouromtsev et al., 2018), who present the basic method and tool for diagrammatic question answering (DQA), here we evaluate DQA in comparison to natural language QALD systems. Both approaches have different characteristics; therefore, we see them as complementary rather than in competition.

ᵃ https://orcid.org/0000-0001-7196-0699

ᵇ https://orcid.org/0000-0002-0644-9242

The basic research goals are: i) Given a dataset extracted from the QALD7 benchmark [1], we evaluate DQA versus state-of-the-art QALD systems. ii) More specifically, we investigate if and to what extent DQA can be complementary to QALD systems, especially in cases where those systems do not find a correct answer. iii) Finally, we present the basic outline for the integration of the two methods.

In a nutshell, users who applied DQA found the correct answer with an F1 score of 79.5%, compared to a maximum of 59.2% for the best-performing QALD system. Furthermore, for the subset of questions where the QALD system could not provide a correct answer, users found the answer with DQA at a 70% F1 score. We further analyze the characteristics of questions for which the QALD or the DQA approach, respectively, is better suited.

The results indicate, that aside from the other ben-

efits of DQA, it can be a valuable component for in-

tegration into larger QALD systems, in cases where

1

https://project-hobbit.eu/challenges/qald2017

Wohlgenannt, G., Mouromtsev, D., Pavlov, D., Emelyanov, Y. and Morozov, A.

A Comparative Evaluation of Visual and Natural Language Question Answering over Linked Data.

DOI: 10.5220/0008364704730478

In Proceedings of the 11th International Joint Conference on Knowledge Discovery, Knowledge Engineering and Knowledge Management (IC3K 2019), pages 473-478

ISBN: 978-989-758-382-7

Copyright

c

2019 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

473

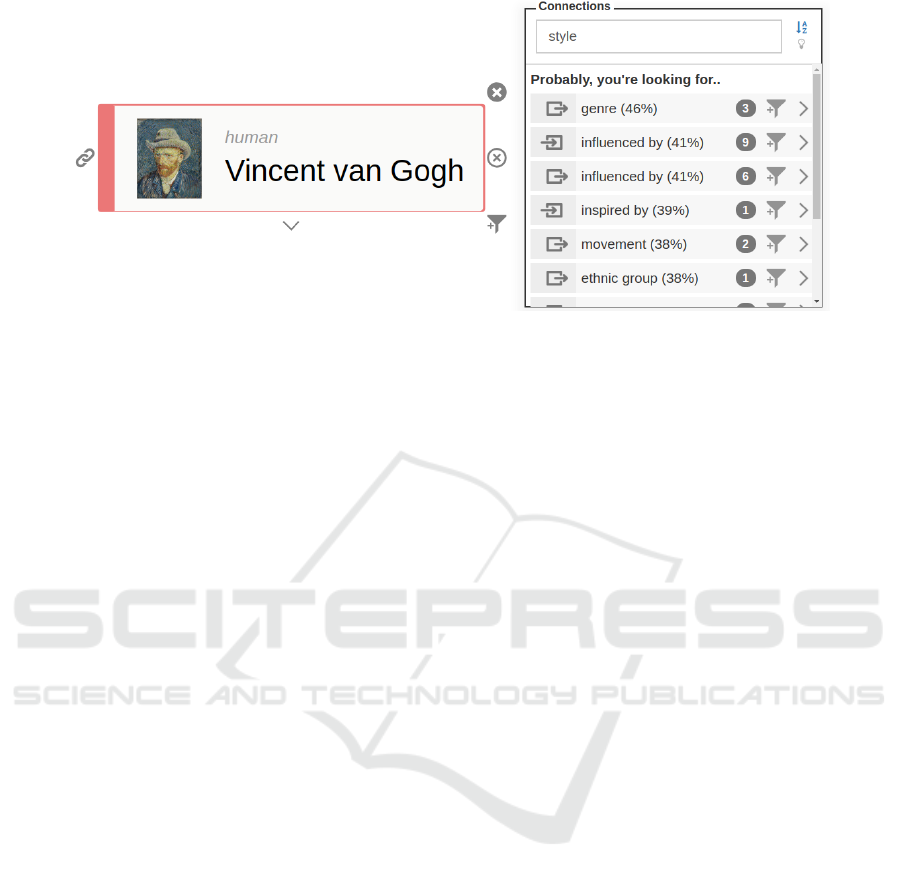

Figure 1: After placing the Wikidata entity Van Gogh onto the canvas, searching properties related to his “style” with Ontodia

DQA tool.

those systems cannot find an answer, or when the user

wants to explore the answer context in detail by visu-

alizing the relevant nodes and relations. Moreover,

users can verify answers given by a QALD system

using DQA in case of doubt.

This publication is organized as follows. Section 2 presents related work, and Section 3 gives a brief system description of the DQA tool. The main focus of the paper is Section 4, which covers the evaluation setup, the results of the comparison of DQA and QALD, and a discussion. The paper concludes with Section 5.

2 RELATED WORK

As introduced in (Mouromtsev et al., 2018), we understand diagrammatic question answering (DQA) as the process of QA relying solely on visual exploration, using diagrams as a representation of the underlying knowledge source. The process includes the following:

(i) a model for diagrammatic representation of semantic data which supports data interaction using embedded queries;

(ii) a simple method for step-by-step construction of diagrams with respect to cognitive boundaries, and a layout that boosts the understandability of diagrams;

(iii) a library for visual data exploration and sharing based on its internal data model;

(iv) an evaluation of DQA as a knowledge understanding and knowledge sharing tool.

(Eppler and Burkhard, 2007) propose a framework of five perspectives of knowledge visualization. This framework can be used to describe certain aspects of the DQA use cases, such as its goal to provide an iterative exploration method that is accessible to any user, the possibility of knowledge sharing (via saved diagrams), or the general purpose of knowledge understanding and abstraction from technical details.

Many tools exist for visual consumption of, and interaction with, RDF knowledge bases; however, they are not designed specifically for the question answering use case. (Dudáš et al., 2018) give an overview of ontology and Linked Data visualization tools, and categorize them based on the visualization methods used, interaction techniques, and supported ontology constructs.

Regarding language-based QA over Linked Data, (Kaufmann and Bernstein, 2007) discuss and study the usefulness of natural language interfaces to ontology-based knowledge bases in a general way. They focus on the usability of such systems for the end user, and conclude that users prefer full sentences for query formulation and that natural language interfaces are indeed useful.

(Diefenbach et al., 2018) describe the challenges of QA over knowledge bases using natural language, and elaborate on the various techniques used by existing QALD systems to overcome those challenges. In the present work, we compare DQA with four of those systems using a subset of questions from the QALD7 benchmark.

The first system, gAnswer (Zou et al., 2014), is an approach for RDF QA that takes a “graph-driven” perspective. Traditional approaches first try to understand the question and then evaluate the query. In contrast, gAnswer models the intention of the query in a structured way, which leads to a subgraph matching problem.

Secondly, QAKiS (Cabrio et al., 2014) is a QA system over structured knowledge bases such as DBpedia. It uses relational patterns, which capture different ways to express a certain relation in natural language, in order to construct a target-language (SPARQL) query.

Further, Platypus (Pellissier Tanon et al., 2018) is a QA system on Wikidata. It represents questions in an internal format related to dependency-based compositional semantics, which allows for question decomposition and language independence. The platform can answer complex questions in several languages by using hybrid grammatical and template-based techniques.

Finally, the WDAqua (Diefenbach et al., 2018) system also aims for language independence and for being agnostic of the underlying knowledge base. WDAqua puts more importance on word semantics than on the syntax of the user query. It follows a process of query expansion, SPARQL construction, and query ranking, and then makes an answer decision.

KEOD 2019 - 11th International Conference on Knowledge Engineering and Ontology Development

Figure 2: Answering the question: Who is the mayor of Paris?

For the evaluation of QA systems, several benchmarks have been proposed, such as WebQuestions (Berant et al., 2013) or SimpleQuestions (Bordes et al., 2015). However, the most popular benchmarks in the Semantic Web field arise from the QALD evaluation campaign (López et al., 2013). The recent QALD7 evaluation campaign includes task 4, “English question answering over Wikidata” [2], which serves as the basis for our evaluation dataset.

3 SYSTEM DESCRIPTION

The DQA functionality is part of the Ontodia [3] tool. The initial idea of Ontodia was to enable the exploration of semantic graphs for ordinary users. Data exploration is about efficiently extracting knowledge from data, even in situations where it is unclear what exactly is being looked for (Idreos et al., 2015).

The DQA tool uses an incremental approach to exploration, typically starting from a very small number of nodes. With the context menu of a particular node, relations and related nodes can be added until the diagram fulfills the information need of the user. Figure 1 gives an example of a start node, where a user wants to learn more about the painting style of Van Gogh.
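The incremental exploration described above can be pictured as a canvas that grows one context-menu action at a time. The following is a minimal sketch and not Ontodia's implementation. The `Diagram` class, its `expand` method, and the in-memory triple list standing in for the knowledge graph are all hypothetical, and the property label "movement" is a placeholder.

```python
from dataclasses import dataclass, field

# Hypothetical stand-in for the knowledge graph: (subject, property, object) triples.
Triple = tuple[str, str, str]


@dataclass
class Diagram:
    """A canvas holding the nodes and edges a user has placed so far."""
    nodes: set[str] = field(default_factory=set)
    edges: set[Triple] = field(default_factory=set)

    def add_node(self, entity: str) -> None:
        """Place a starting entity on the canvas (e.g. found via the search box)."""
        self.nodes.add(entity)

    def expand(self, graph: list[Triple], entity: str, prop: str) -> list[str]:
        """Mimic a context-menu action: add all neighbours of `entity` via `prop`.

        Returns only the nodes that were newly added to the canvas.
        """
        added: list[str] = []
        for s, p, o in graph:
            if s == entity and p == prop:
                self.edges.add((s, p, o))
                if o not in self.nodes:
                    self.nodes.add(o)
                    added.append(o)
        return added


if __name__ == "__main__":
    # Toy graph for illustration only.
    graph = [
        ("Van Gogh", "movement", "Post-Impressionism"),
        ("Van Gogh", "occupation", "painter"),
    ]
    d = Diagram()
    d.add_node("Van Gogh")
    print(d.expand(graph, "Van Gogh", "movement"))  # ['Post-Impressionism']
```

The canvas only ever contains what the user explicitly asked for. This keeps diagrams small, but it also means that the user has to know, or discover, which property to follow.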

To illustrate the process, we give a brief example here. More details about the DQA tool, the motivation for DQA, and diagram-based visualizations can be found in previous work (Mouromtsev et al., 2018; Wohlgenannt et al., 2017).

[2] https://project-hobbit.eu/challenges/qald2017/qald2017-challenge-tasks/#task4

[3] http://ontodia.org

For example, when attempting to answer a question such as “Who is the mayor of Paris?”, the first step for a DQA user is finding a suitable starting point, in our case the entity Paris. The user enters “Paris” into the search box, and can then investigate the entity on the tool canvas. The information about the entity stems from the underlying dataset, for example Wikidata [4]. In an incremental process, the user can search in the properties of the given entity (or entities) and add relevant entities onto the canvas. In the given example, the property “head of government” connects the city of Paris to its mayor, Anne Hidalgo. The final diagram that answers the given question is presented in Figure 2.
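The final diagram for this question corresponds to a single triple pattern, which a QALD system would express in SPARQL. The sketch below builds that equivalent query for the public Wikidata endpoint. It assumes the Wikidata identifiers Q90 (Paris) and P6 (head of government); neither these identifiers nor the helper names come from the paper, and the DQA tool does not necessarily generate queries this way.

```python
import json
import urllib.parse
import urllib.request

WIKIDATA_SPARQL = "https://query.wikidata.org/sparql"


def build_query(subject_qid: str, property_pid: str) -> str:
    """Return a SPARQL query for all objects of the pattern (subject, property, ?answer)."""
    return (
        "SELECT ?answer ?answerLabel WHERE {\n"
        f"  wd:{subject_qid} wdt:{property_pid} ?answer .\n"
        '  SERVICE wikibase:label { bd:serviceParam wikibase:language "en". }\n'
        "}"
    )


def run_query(query: str) -> list[str]:
    """Send the query to the Wikidata endpoint and return the English answer labels."""
    url = WIKIDATA_SPARQL + "?" + urllib.parse.urlencode({"query": query, "format": "json"})
    req = urllib.request.Request(url, headers={"User-Agent": "dqa-example/0.1"})
    with urllib.request.urlopen(req, timeout=30) as resp:
        data = json.load(resp)
    return [b["answerLabel"]["value"] for b in data["results"]["bindings"]]


if __name__ == "__main__":
    query = build_query("Q90", "P6")  # assumed IDs: Paris, head of government
    print(query)
    # print(run_query(query))  # requires network access
```

The diagram and the query encode the same information. The hard part for a DQA user, as the evaluation later shows, is knowing that "mayor" maps to the head-of-government property.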

4 EVALUATION

Here we present the evaluation of DQA in comparison to four QALD systems.

4.1 Evaluation Setup

As the evaluation dataset, we reuse questions from the QALD7 benchmark task 4, “QA over Wikidata”. Question selection from QALD7 is based on the principles of question classification in QA (Moldovan et al., 2000). Firstly, it is necessary to define question types that correspond to different scenarios of data exploration in DQA, as well as the type of expected answers and the question focus. The question focus refers to the main information in the question that helps a user find the answer. We follow the model of (Riloff and Thelen, 2000), who categorize questions by their question word into WHO, WHICH, WHAT, NAME, and HOW questions. Given the question and answer type categories, we created four questionnaires with nine questions each [5], resulting in 36 questions from the QALD dataset. The questions were picked in equal number for five basic question categories.
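Categorizing questions by their question word, following the model of (Riloff and Thelen, 2000), can be approximated by a simple keyword lookup. The sketch below is a hypothetical helper and not the authors' selection procedure. It returns the first of the five question words that occurs as a whole word, and it assumes an extra OTHER bucket for questions that contain none of them.

```python
import re
from collections import Counter

QUESTION_WORDS = ("WHO", "WHICH", "WHAT", "NAME", "HOW")


def question_category(question: str) -> str:
    """Return the first of WHO/WHICH/WHAT/NAME/HOW occurring as a word, else OTHER."""
    tokens = re.findall(r"[A-Za-z]+", question.upper())
    for token in tokens:
        if token in QUESTION_WORDS:
            return token
    return "OTHER"


if __name__ == "__main__":
    questions = [
        "Who is the mayor of Paris?",
        "Who is the son of Sonny and Cher?",
        "What is the name of the school where Obama's wife studied?",
        "Show me the list of African birds that are extinct.",
    ]
    # Tally how many questions fall into each category.
    print(Counter(question_category(q) for q in questions))
```

A keyword rule like this is only a starting point. Imperative questions ("Show me ...") carry no question word at all, so a real selection would also look at the expected answer type and the question focus, as described above.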

20 persons participated in the DQA evaluation: 14 male and six female, from eight different countries. The majority of respondents work within academia; however, seven users were employed in industry. The users returned 131 diagrams (of 140 expected).

[4] https://www.wikidata.org

[5] https://github.com/ontodia-org/DQA/wiki/Questionnaires1

Table 1: Overall performance of DQA and the four QALD tools, measured with precision, recall and F1 score.

| | DQA | WDAqua | askplatyp.us | QAKiS | gAnswer |
|---|---|---|---|---|---|
| Precision | 80.1% | 53.7% | 8.57% | 29.6% | 57.5% |
| Recall | 78.5% | 58.8% | 8.57% | 25.6% | 61.1% |
| F1 | 79.5% | 56.1% | 8.57% | 27.5% | 59.2% |

The same 36 questions were answered using four QALD tools: WDAqua [6] (Diefenbach et al., 2018), QAKiS [7] (Cabrio et al., 2014), gAnswer [8] (Zou et al., 2014) and Platypus [9] (Pellissier Tanon et al., 2018).

For the QALD tools, a human evaluator pasted the questions as is into the natural language Web interfaces and submitted them to the systems. Typically, QALD tools provide a distinct answer, which may be a simple literal or a set of entities representing the answer, and which can be compared to the gold standard result. However, in addition to the direct answer to the question, the WDAqua system sometimes provides links to documents related to the question. In such cases, we always used the direct answer.

To assess the correctness of the answers given both by participants in the DQA experiments and by the QALD systems, we use the classic information retrieval metrics of precision (P), recall (R), and F1. P is the number of correct answer items given, divided by the number of all answer items given. R is the number of correct answer items given, divided by the number of all correct items in the gold answer. F1 is the harmonic mean of P and R, i.e. F1 = 2PR / (P + R). As an example, if the question is “Where was Albert Einstein born?” (gold answer: “Ulm”), and the system gives two answers, “Ulm” and “Bern”, then P = 1/2, R = 1 and F1 = 2/3.
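The metric definitions and the Einstein example can be written as a short function over answer sets. This is a minimal sketch that assumes answers are compared as exact string sets; answer normalization is not covered here, and scoring an empty answer set as zero is our assumption. Exact fractions are used so that the example values match the text.

```python
from fractions import Fraction


def prf1(given: set[str], gold: set[str]) -> tuple[Fraction, Fraction, Fraction]:
    """Precision, recall and F1 of a set of given answers against the gold answers."""
    correct = len(given & gold)
    # Assumption: an empty answer (or empty gold set) scores zero rather than raising.
    p = Fraction(correct, len(given)) if given else Fraction(0)
    r = Fraction(correct, len(gold)) if gold else Fraction(0)
    f1 = 2 * p * r / (p + r) if p + r > 0 else Fraction(0)
    return p, r, f1


if __name__ == "__main__":
    p, r, f1 = prf1({"Ulm", "Bern"}, {"Ulm"})
    print(p, r, f1)  # 1/2 1 2/3
```

The extra wrong answer "Bern" halves precision but leaves recall untouched, which is why F1 ends up between the two.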

For DQA, four participants answered each question; therefore, we took the average P, R, and F1 values over the four evaluators as the result per question. The detailed answers by the participants are available online [10].
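The per-question averaging for DQA can be sketched as follows. The numbers are made up for illustration and are not the experimental data. Two points are assumptions: that the overall score is the mean of the per-question averages (a macro-average), and that each question's list simply holds whatever evaluator scores are available. How the nine missing diagrams were treated is not stated.

```python
from statistics import mean


def per_question_score(evaluator_scores: list[float]) -> float:
    """Average one metric (P, R or F1) over the evaluators' scores for one question."""
    return mean(evaluator_scores)


def overall_score(scores_by_question: dict[str, list[float]]) -> float:
    """Macro-average (an assumption): mean of the per-question averages."""
    return mean(per_question_score(s) for s in scores_by_question.values())


if __name__ == "__main__":
    # Hypothetical F1 values of four evaluators per question, for illustration only.
    f1_scores = {
        "q1": [1.0, 1.0, 0.0, 1.0],
        "q2": [1.0, 0.5, 1.0, 1.0],
    }
    print(per_question_score(f1_scores["q1"]))  # 0.75
    print(overall_score(f1_scores))  # 0.8125
```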

4.2 Evaluation Results and Discussion

Table 1 presents the overall evaluation metrics of DQA and the four QALD tools studied. With the given dataset, WDAqua (56.1% F1) and gAnswer (59.2% F1) clearly outperform askplatyp.us (8.6% F1) and QAKiS (27.5% F1). Detailed results per question, including the calculation of P, R and F1 scores, are available online [11]. DQA led to 79.5% F1 (80.1% precision and 78.5% recall).

[6] http://qanswer-frontend.univ-st-etienne.fr

[7] http://qakis.org

[8] http://ganswer.gstore-pku.com

[9] https://askplatyp.us

[10] https://github.com/ontodia-org/DQA/wiki/Experiment-I-results

In further evaluations, we compare DQA results to WDAqua in order to study the differences and potential complementary aspects of the approaches. We selected WDAqua as the representative of QALD tools, as it provides state-of-the-art results and is well grounded in the Semantic Web community [12].

Comparing DQA and WDAqua, the first interesting question is: to what extent is DQA helpful on questions that could not be answered by the QALD system? For WDAqua, the overall F1 score on our test dataset is 56.1%. For the subset of questions where WDAqua had no answer, or only a partial one, DQA users found the correct answer in 69.6% of cases. Conversely, on the subset of questions that DQA users (partially) failed to answer, WDAqua achieved an F1 of 27.3%. If DQA is used as a backup method for questions not correctly answered by WDAqua, then the overall F1 can be raised to 85.0%. The increase from 56.1% to 85.0% demonstrates the potential of DQA as a complementary component in QALD systems.
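The backup scenario can be expressed as a per-question rule. The sketch below rests on three assumptions. A WDAqua answer counts as correct only when its F1 is 1.0, and the DQA score replaces it otherwise. The overall F1 is the mean over questions. The scores are illustrative values, not the experimental data. The rule actually used to obtain the 85.0% figure may differ.

```python
from statistics import mean


def combined_f1(qald_f1: list[float], dqa_f1: list[float]) -> float:
    """Use the QALD answer when fully correct, otherwise fall back to the DQA score."""
    if len(qald_f1) != len(dqa_f1):
        raise ValueError("score lists must be aligned per question")
    per_question = [q if q == 1.0 else d for q, d in zip(qald_f1, dqa_f1)]
    return mean(per_question)


if __name__ == "__main__":
    qald = [1.0, 0.0, 0.5, 1.0]   # hypothetical per-question QALD F1
    dqa = [0.75, 1.0, 0.25, 1.0]  # hypothetical per-question DQA F1 (already averaged)
    print(mean(qald), combined_f1(qald, dqa))  # 0.625 0.8125
```

With this rule, a partially correct QALD answer (0.5 in the toy data) is replaced even when DQA does worse. A variant taking `max(q, d)` per question would never lower the score, but it presumes the user can tell which answer is better.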

As expected, questions that are difficult to an-

swer with one approach are also harder for the other

approach – as some questions in the dataset or just

more complex to process and understand than others.

However, almost 70% of questions not answered by

WDAqua could still be answered by DQA. As ex-

amples of cases which are easier to answer for one

approach than the other, a question that DQA users

could answer, but where WDAqua failed is: “What

is the name of the school where Obama’s wife stud-

ied?”. This complex question formulation is hard to

interpret correctly for a machine. In contrast to DQA,

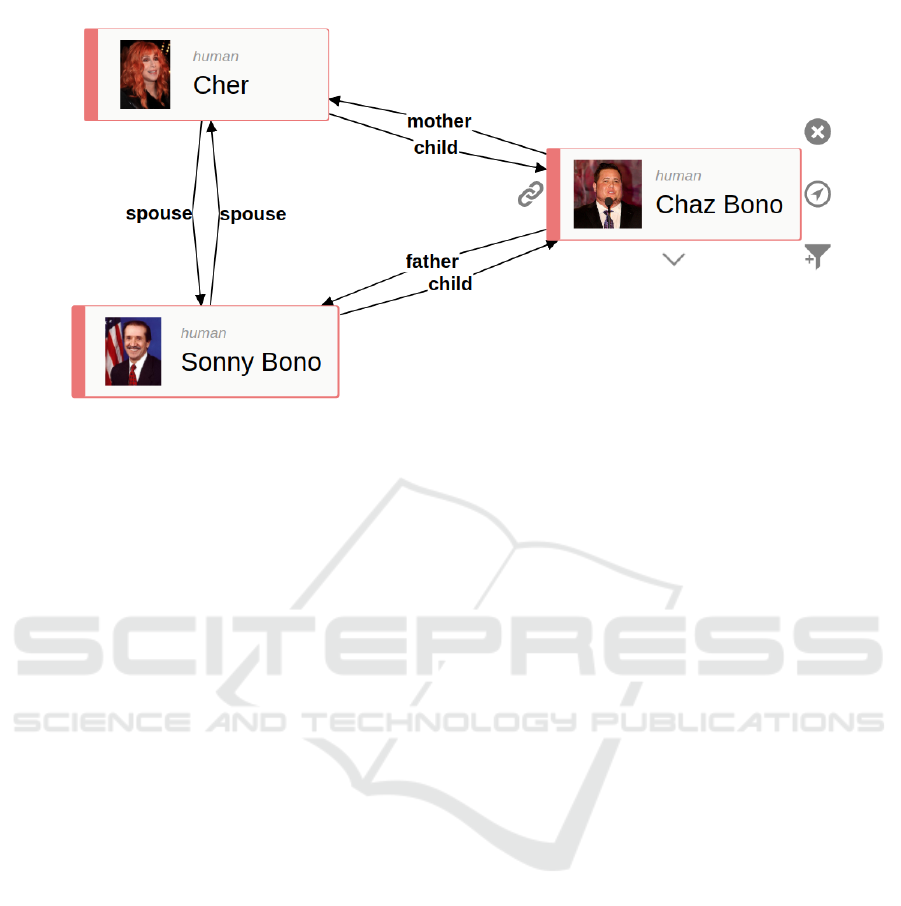

QALD systems also struggled with “Who is the son

of Sonny and Cher?”. This question needs a lot of

real-world knowledge to map the names Sonny and

Cher to their corresponding entities. The QALD sys-

11

https://github.com/gwohlgen/DQA evaluations/blob/

master/nlp eval.xlsx

12

Furthermore, at the time of paper writing the gAnswer

online demo was not available any more, support for this

tools seems limited.

KEOD 2019 - 11th International Conference on Knowledge Engineering and Ontology Development

476

Figure 3: Answering the question: Who is the son of Sonny and Cher? with DQA.

tem needs to select the correct Cher entity from mul-

tiple options in Wikidata, and also to understand that

“Sonny” refers to the entity Sonny Bono. The re-

sulting answer diagram is given in Figure 3. More

simple questions, like “Who is the mayor of Paris?”

were correctly answered by WDAqua, but not by all

DQA users. DQA participants in this case struggled

to make the leap from the noun “mayor” to the head-

of-government property in Wikidata.

Regarding the limits of DQA, this method has difficulties when the answer can be obtained only with joins of queries, or when it is hard to find the initial starting entities related to the question focus. For example, a question like “Show me the list of African birds that are extinct.” typically requires an intersection of two (large) sets of candidate entities, i.e. all African birds and all extinct birds. Such a task can easily be represented in a SPARQL query. It is hard to address with diagrams, however, because it would require placing, and interacting with, a huge number of nodes on the exploration canvas.
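The asymmetry can be made concrete with a small illustration. Once both candidate sets are materialized, the answer is a single set intersection. This is conceptually what a SPARQL query with two triple patterns sharing a variable computes. A diagram, however, would need to show every candidate from both sets before the user could compare them. The tiny sets below are hypothetical placeholders, and the node count assumes a diagram that places each candidate once.

```python
# Hypothetical placeholder labels; a real query would obtain these sets from the
# knowledge graph, and they would be far larger.
african_birds = {"bird A", "bird B", "bird C"}
extinct_birds = {"bird B", "bird C", "bird D"}


def extinct_african_birds(african: set[str], extinct: set[str]) -> list[str]:
    """Intersect the two candidate sets and return the result sorted for display."""
    return sorted(african & extinct)


def nodes_to_place(african: set[str], extinct: set[str]) -> int:
    """Candidates a diagram would need to show before the user can intersect them by eye."""
    return len(african | extinct)


if __name__ == "__main__":
    print(extinct_african_birds(african_birds, extinct_birds))  # ['bird B', 'bird C']
    print(nodes_to_place(african_birds, extinct_birds))  # 4
```

The query-side cost grows with the engine's join work. The diagram-side cost grows with the number of nodes a person must inspect, which is what makes such questions impractical for DQA.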

Overall, the experiments indicate that, in addition to the use cases where QALD and DQA are useful on their own, there is a lot of potential in combining the two approaches. In particular, a user can be given the opportunity to explore the dataset with DQA if QALD did not find a correct answer, or to confirm the QALD answer by checking the underlying knowledge base. Furthermore, visually exploring the dataset provides added benefits, such as understanding the dataset characteristics, sharing the resulting diagrams (if supported by the tool), and finding more information related to the original information need.

For the integration of QALD and DQA, we envision two scenarios. The first scenario addresses plain question answering. Here, DQA can be added to a QALD system for cases where a user is not satisfied with a given answer. The QALD Web interface can, for example, have an “Explore visually with diagrams” button, which brings the user to a canvas. On this canvas, the entities that the QALD system detected within the question and the results (if any) are displayed as starting nodes. The user will then explore the knowledge graph and find the answers in the same way as the participants in our experiments. The first scenario can lead to a large improvement in answer F1 (see above).
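A minimal sketch of how such a button could seed the canvas is shown below. It assumes that the QALD system exposes both the entities it detected in the question and the entities in its answer. The `QaldResult` structure and its field names are hypothetical and do not correspond to the API of WDAqua or any other system evaluated here.

```python
from dataclasses import dataclass, field


@dataclass
class QaldResult:
    """Hypothetical output of a QALD system for one question."""
    question: str
    question_entities: list[str]
    answer_entities: list[str] = field(default_factory=list)


def initial_canvas(result: QaldResult) -> list[str]:
    """Starting nodes for DQA: question entities first, then answers, without duplicates."""
    nodes: list[str] = []
    for entity in result.question_entities + result.answer_entities:
        if entity not in nodes:
            nodes.append(entity)
    return nodes


if __name__ == "__main__":
    r = QaldResult("Who is the mayor of Paris?", ["Paris"], ["Anne Hidalgo"])
    print(initial_canvas(r))  # ['Paris', 'Anne Hidalgo']
    # With no answer, the user still starts from the detected question entities.
    print(initial_canvas(QaldResult("Who is the son of Sonny and Cher?", ["Sonny Bono", "Cher"])))
```

Listing question entities first keeps the user's starting point stable whether or not an answer was found. The same seeding would serve the second, exploration-oriented scenario.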

The second scenario of integration of QALD and DQA focuses on the exploration aspect. Even if the QALD system provides the correct answer, a user might be interested in exploring the knowledge graph to validate the result and to discover more interesting information about the target entities. From an implementation and UI point of view, the same “Explore visually with diagrams” button and pre-population of the canvas can be used. Both scenarios also provide the additional benefit of potentially saving and sharing the created diagrams, which elaborate the relation between question and answer.

5 CONCLUSIONS

In this work, we compare two approaches to answering questions over Linked Data datasets: a visual diagrammatic approach (DQA), which involves iterative exploration of the graph, and a natural language-based approach (QALD). The evaluations show that DQA can be a helpful addition to pure QALD systems, both regarding evaluation metrics (precision, recall, and F1) and for dataset understanding and further exploration. The contributions include: i) a comparative evaluation of four QALD tools and DQA with a dataset extracted from the QALD7 benchmark, ii) an investigation into the differences and potential complementary aspects of the two approaches, and iii) the proposition of integration scenarios for QALD and DQA.

In future work, we plan to study the integration of DQA and QALD, especially the aspect of automatically creating an initial diagram from a user query, in order to leverage the discussed potential. We envision an integrated tool that uses QALD as the basic method to find an answer to a question quickly. The same tool would also let users explore the knowledge graph visually, to raise answer quality and to support exploration with all its discussed benefits.

ACKNOWLEDGEMENTS

This work was supported by the Government of the Russian Federation (Grant 074-U01) through the ITMO Fellowship and Professorship Program.

REFERENCES

Berant, J., Chou, A., Frostig, R., and Liang, P. (2013). Semantic parsing on Freebase from question-answer pairs. In Proceedings of the 2013 Conference on Empirical Methods in Natural Language Processing, pages 1533–1544.

Bordes, A., Usunier, N., Chopra, S., and Weston, J. (2015). Large-scale simple question answering with memory networks. arXiv preprint arXiv:1506.02075.

Cabrio, E., Sachidananda, V., and Troncy, R. (2014). Boosting QAKiS with multimedia answer visualization. In Presutti, V. et al., editors, ESWC 2014, pages 298–303. Springer.

Diefenbach, D., Both, A., Singh, K., and Maret, P. (2018). Towards a question answering system over the semantic web.

Dudáš, M., Lohmann, S., Svátek, V., and Pavlov, D. (2018). Ontology visualization methods and tools: a survey of the state of the art. The Knowledge Engineering Review, 33.

Eppler, M. J. and Burkhard, R. A. (2007). Visual representations in knowledge management: framework and cases. Journal of Knowledge Management, 11(4):112–122.

Idreos, S., Papaemmanouil, O., and Chaudhuri, S. (2015). Overview of data exploration techniques. In Proceedings of the ACM SIGMOD International Conference on Management of Data, pages 277–281, Melbourne, Australia.

Kaufmann, E. and Bernstein, A. (2007). How useful are natural language interfaces to the semantic web for casual end-users? In The Semantic Web, pages 281–294. Springer.

López, V., Unger, C., Cimiano, P., and Motta, E. (2013). Evaluating question answering over linked data. J. Web Sem., 21:3–13.

Moldovan, D., Harabagiu, S., Pasca, M., Mihalcea, R., Girju, R., Goodrum, R., and Rus, V. (2000). The structure and performance of an open-domain question answering system. In Proceedings of the 38th Annual Meeting on Association for Computational Linguistics, pages 563–570. Association for Computational Linguistics.

Mouromtsev, D., Wohlgenannt, G., Haase, P., Pavlov, D., Emelyanov, Y., and Morozov, A. (2018). A diagrammatic approach for visual question answering over knowledge graphs. In ESWC (Posters and Demos Track), volume 11155 of CEUR-WS, pages 34–39.

Pellissier Tanon, T., Dias De Assuncao, M., Caron, E., and Suchanek, F. M. (2018). Demoing Platypus – A Multilingual QA Platform for Wikidata. In ESWC.

Riloff, E. and Thelen, M. (2000). A rule-based question answering system for reading comprehension tests. In Proceedings of the 2000 ANLP/NAACL Workshop on Reading Comprehension Tests as Evaluation for Computer-Based Language Understanding Systems - Volume 6, pages 13–19. Association for Computational Linguistics.

Wohlgenannt, G., Klimov, N., Mouromtsev, D., Razdyakonov, D., Pavlov, D., and Emelyanov, Y. (2017). Using word embeddings for visual data exploration with Ontodia and Wikidata. In BLINK/NLIWoD3@ISWC, ISWC, volume 1932. CEUR-WS.org.

Zou, L., Huang, R., Wang, H., Yu, J. X., He, W., and Zhao, D. (2014). Natural language question answering over RDF: A graph data driven approach. In Proc. of the 2014 ACM SIGMOD Conf. on Management of Data, SIGMOD ’14, pages 313–324. ACM.
