PET-TYPE ROBOT COMMUNICATION SYSTEM FOR MENTAL CARE OF SINGLE-RESIDENT ELDERIES

Toshiyuki Maeda

Department of Management Information, Hannan University

5-4-33, Amamihigashi, Matsubara, Osaka, 580-8502 JAPAN

Kazumi Yoshida, Hisao Niwa and Kazuhiro Kayashima

Pin Change Co.,Ltd.

2-4-5, Minamishinagawa, Shinagawa, Tokyo, 140-0004 JAPAN

Keywords:

Telecare network application, Elderly welfare, Human-computer interaction, Human-robot interface, Network communication, Pet-type robot, Autonomous control

Abstract:

This paper presents a pet-type robot communication system for the mental care of elderly people who live alone. The robot can communicate with its user autonomously, and because it is Internet-accessible it also lets the user communicate with other people, either directly or through a communication server. The system consists of pet-type robots and an information center. The robot serves not only as an information terminal but also as a pet: it can talk to its user(s), give information about the local community, watch over them, and send information to carers at the information center when needed. To keep the mechanism to what is necessary and sufficient, the robot has four motors: one for both ears, one for both eyes, one for the nose, and one for the neck. The motions generated by these motors symbolize the robot’s emotions, which is essential to our objective. We have demonstrated and examined some features of this robot system with elderly users and obtained generally favorable evaluations.

1 INTRODUCTION

In recent years the proportion of elderly people in the population has been rising steadily, and welfare facilities and tools that use advanced technologies have been developed accordingly (Bolmsjö et al., 1995; Clarkson et al., 2003). Many of them, however, are intended to aid people with manipulation disabilities or to support the physical work of carers and elderly people, not to support mental activities. In Japan especially, elderly people who live alone tend to be isolated from their local communities, which may lead to loneliness, so it is very important to help them communicate with others for the sake of their mental well-being. A pet-type robot system is one promising candidate for addressing this kind of human problem, and several studies have already been reported (Matsukawa et al., 1996; Maeda et al., 2002; Ohkawa et al., 1998; Maeda et al., 2003), though they do not focus sufficiently on this problem. Here we introduce a new pet-type robot communication system that consists of pet-type robots and an information center.

In this paper we explain the concept and features of our system and then discuss a field examination. We demonstrated and examined some features of the robot system with elderly users, using several of the robots, and confirmed some effects of our system.

Figure 1: System concept. (Diagram labels: Relievability & Reliability; Interface for aged people; Mental care; Conversation; Button; Communication; Information interchange; Announce; Conversation robot type terminal; Network accessibility; Pet-type; Information terminal.)

2 CONCEPT OF PET-TYPE ROBOT COMMUNICATION SYSTEM

Figure 1 shows the basic concept of our system. In

the following subsections, we explain each aspect in

detail.


Maeda T., Yoshida K., Niwa H. and Kayashima K. (2004).

PET-TYPE ROBOT COMMUNICATION SYSTEM FOR MENTAL CARE OF SINGLE-RESIDENT ELDERIES.

In Proceedings of the First International Conference on Informatics in Control, Automation and Robotics, pages 322-325

DOI: 10.5220/0001139003220325

Copyright © SciTePress

2.1 Interface Usability

Pet-type robots should satisfy the following requirements from the viewpoint of usability for elderly people:

(i) They should not feel like machines or electronic equipment. Elderly people find such “hard” equipment difficult to approach, and as a result they do not use it frequently.

(ii) They should be able to converse through touch and/or speech, so that users find them kind and easy to communicate with.

(iii) They should not force users to use them.

To meet these requirements, we introduce the following features:

(i) We designed the robot’s body as a stuffed toy bear. This lets the robot appear more emotional, which is essential for pet-type robots (Fujita, 1999).

(ii) We have developed speech input (recognition), speech output (synthesis or construction), and other multi-modal interfaces for conversation (explained below). These techniques realize pet-like behavior and also offer affordance (Gibson and Walk, 1960).

(iii) We designed the user interface to be as easy and simple as possible. For instance, a user does not need to operate any mechanical equipment for telecommunication, such as when making a telephone call (discussed below).

2.2 Information terminal for elderly people

The robot should, furthermore, serve as an information terminal as well as an artificial pet. To meet this requirement, the robot is network-accessible, which allows users to communicate not only with carers but also with relatives, friends, and others. This is quite important in keeping elderly people, especially those who live alone, from feeling lonely. Because communication is so important for our system, we discuss it in detail below.

3 COMMUNICATION FEATURES

Figure 2 shows the network diagram of our system. All robots, or terminals, are connected to the Internet, which means that they can be regarded as Internet-accessible terminals. Furthermore, they can act as a telephone if required. The following subsections describe these functions.

Figure 2: System network diagram. (Diagram labels: pet1, pet2, pet3; Internet; Information Center; family/friend/...; (mobile) phone call.)

3.1 Internet connectivity

We have several versions of the robot system. Some are connected to the Internet through cable TV networks and others through cellular phones. The latter are designed for portability as pseudo-pets.

3.2 Communication for surveillance

The robot has a camera for watching over its user(s). Images taken by the camera are sent to the information center via the Internet, which helps carers obtain the information they need to watch over users. The robot can send images at regular intervals, which provides a form of continual watching, or lifeguarding.
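The interval-based image transmission described above can be sketched as a simple loop. This is only a minimal illustration under stated assumptions: the function name `watch_over`, its parameters, the ten-minute interval, and the stand-in camera and network callables are all hypothetical, since the paper does not describe the actual capture or upload mechanism.

```python
import time
from typing import Callable


def watch_over(
    capture: Callable[[], bytes],
    send: Callable[[bytes], None],
    interval_s: float,
    rounds: int,
    sleep: Callable[[float], None] = time.sleep,
) -> int:
    """Capture an image and send it to the center, `rounds` times.

    Returns the number of images sent successfully. A failed transmission
    is skipped so that one network error does not stop the watching.
    """
    sent = 0
    for i in range(rounds):
        try:
            send(capture())
            sent += 1
        except OSError:
            pass  # network trouble: try again at the next interval
        if i < rounds - 1:
            sleep(interval_s)  # wait before the next capture
    return sent


# Stand-ins for the real camera and network link (both hypothetical).
outbox: list[bytes] = []
count = watch_over(
    capture=lambda: b"jpeg-bytes",
    send=outbox.append,
    interval_s=600.0,        # assumed interval; the paper gives no value
    rounds=3,
    sleep=lambda _s: None,   # skip real waiting in this demonstration
)
```

Passing the camera, the network link, and the sleep function as arguments keeps the loop independent of any particular hardware, which also makes it easy to exercise without a robot.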

3.3 Multi-modal interface

Speech techniques are also used for information exchange with the robot. Speech operation makes the interaction feel natural and friendly to users and greatly improves usability. The current system supports the following spoken commands for invoking communication:

• “Telephone button ON!”: with this speech command, a user can make or answer a telephone call.

• “Tell!”: supports voice mail. The user can then choose “(normal) mails” or “special (messages)”.

• “Bulletin board!”: opens the voice BBS. The user can then say “Answer” to follow up on another user’s message, or “Question” to start a new thread (theme or topic) in the BBS.

These commands are mainly for communicating with others through the Internet and/or telephone networks. Supporting both helps users communicate easily with relatives and friends, since a fair number of people still do not have Internet access.
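The spoken commands listed above amount to a small two-level command table: a top-level phrase, optionally followed by one of a few follow-up words. The sketch below shows one way such a table could be dispatched once a phrase has been recognized. The command phrases come from the text; the action labels, the `dispatch` function, and the "ask again" behavior for a missing or unknown follow-up are assumptions made for illustration.

```python
from typing import Optional

# Top-level spoken commands and the follow-up words each one accepts.
# The phrases come from the text; the action names are hypothetical.
COMMANDS = {
    "telephone button on": ("phone", ()),
    "tell": ("voice_mail", ("mails", "special")),
    "bulletin board": ("voice_bbs", ("answer", "question")),
}


def normalize(utterance: str) -> str:
    """Lower-case a recognized phrase and drop surrounding punctuation."""
    return utterance.strip().strip("!?.").strip().lower()


def dispatch(utterance: str, follow_up: Optional[str] = None) -> Optional[str]:
    """Return an action label for a recognized phrase, or None if unknown."""
    entry = COMMANDS.get(normalize(utterance))
    if entry is None:
        return None
    action, options = entry
    if not options:
        return action  # command needs no follow-up word
    if follow_up is None:
        return f"{action}:ask_option"  # prompt the user for a follow-up
    choice = normalize(follow_up)
    if choice in options:
        return f"{action}:{choice}"
    return f"{action}:ask_option"  # unknown follow-up: ask again


phone = dispatch("Telephone button ON!")
new_thread = dispatch("Bulletin board!", "Question")
```

Keeping the phrases in a table rather than in branching code means new commands can be added without touching the dispatch logic.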


3.4 Autonomous conversation

Besides network communication, autonomous communication is strongly required for elderly users who live alone, since the pet robot can act as a partner and may thereby keep the user from feeling lonely. Autonomous communication consists of speech recognition and speech generation. Speech is a very useful medium for elderly people, who are often not accustomed to operating computers directly.

At present the robot can tell the user(s) its name and the current date and time. The robot can also use over 200 Japanese words and phrases, including

• “Good morning!”,

• “Wake up!”,

• “Bye Bye”,

• etc.

If a user says “Wake up!” to the robot, the robot answers with a greeting and gives one piece of health advice chosen at random, which gives the user(s) the feeling of dealing with a “live creature”. Furthermore, to build friendliness, the robot can sing several short songs.

Note that this speech is not synthesized but composed from speech pieces that were recorded and edited in advance from human speech. We found the composed speech more natural to listen to than synthesized speech, an important characteristic in making communication easy for elderly people.
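The “Wake up!” behavior and the use of pre-recorded speech pieces can be pictured as building a playlist of clips in response to a recognized phrase. The following is a minimal sketch under assumptions: the clip file names, the advice entries, and the `respond` function are hypothetical and only stand in for the recorded material, which the paper does not enumerate.

```python
import random

# Hypothetical clip names standing in for the pre-recorded speech pieces.
REPLIES = {
    "good morning": "greeting_morning.wav",
    "wake up": "greeting_morning.wav",
    "bye bye": "greeting_goodbye.wav",
}
HEALTH_ADVICE = [
    "advice_drink_water.wav",
    "advice_take_a_walk.wav",
    "advice_sleep_early.wav",
]


def respond(phrase: str, rng=random) -> list[str]:
    """Compose a reply as an ordered list of recorded clips to play."""
    key = phrase.strip().strip("!?.").lower()
    clip = REPLIES.get(key)
    if clip is None:
        return []  # phrase not in the vocabulary: stay silent
    playlist = [clip]
    if key == "wake up":
        # As in the text: a greeting followed by one random health advice.
        playlist.append(rng.choice(HEALTH_ADVICE))
    return playlist


reply = respond("Wake up!", random.Random(0))
```

Accepting the random source as a parameter makes the otherwise random choice reproducible when a seeded generator is supplied.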

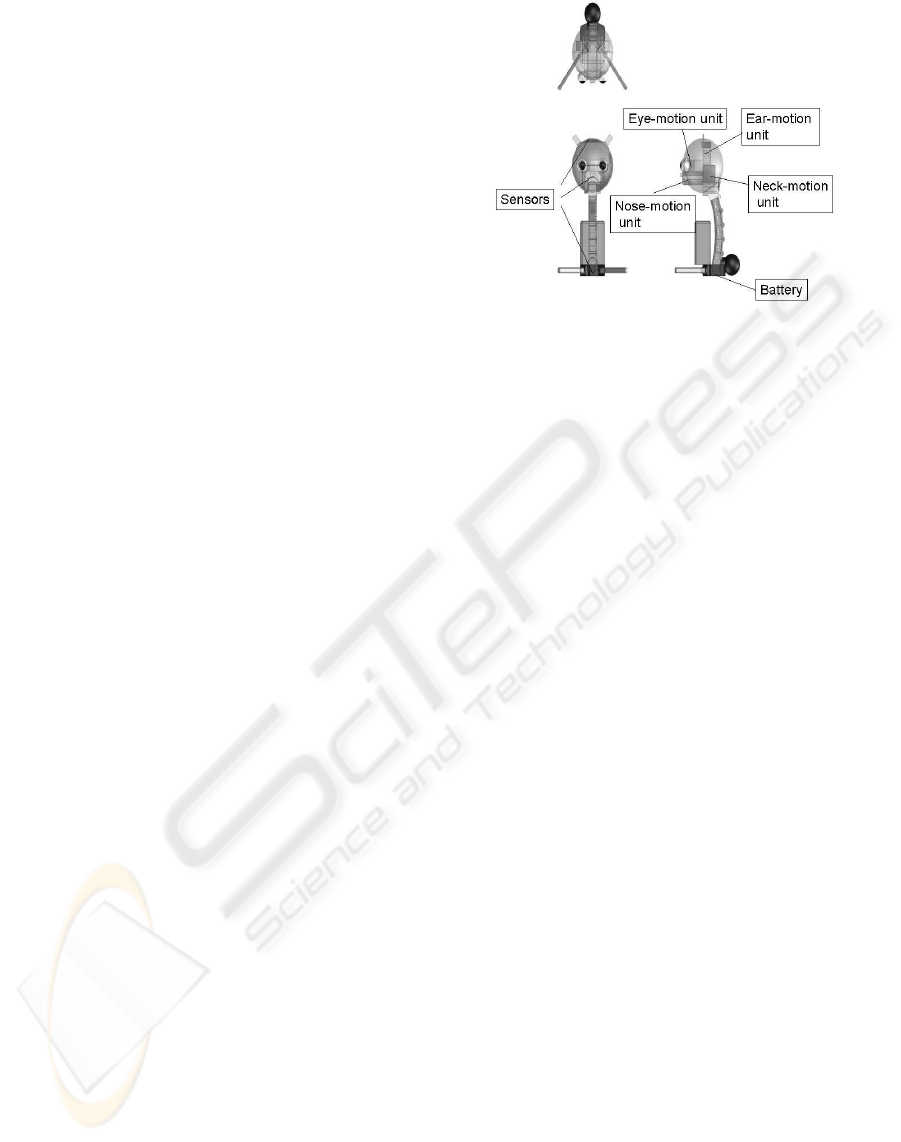

4 SYSTEM ARCHITECTURE

To make interaction with the user(s), including communication, more natural and friendly, the robot has several sensors and motors.

Figure 3 presents the functions of the robot skeleton. These components enable the robot to behave much more like a real (living) pet, which affords friendlier and easier contact (Gibson and Walk, 1960).

The sensors catch signals of friendliness, which cheer the robot up or down, and also serve to interrupt its action, speech, etc. To keep the mechanism to what is necessary and sufficient, the robot has four motors: one for both ears, one for both eyes, one for the nose, and one for the neck. The motions generated by these motors symbolize the robot’s emotions, which is essential to our objective. For instance, the head, driven by the neck, can move vertically, which implies bowing, and horizontally, which implies negation.

The robot consists of two units, a stuffed toy unit and a control unit, connected by a serial line. The toy (doll) part has the motors and sensors, and motion commands and sensed signals pass between it and the control unit over that line. So that the user(s) can use the robot easily and pleasantly, a camera is embedded in a toy camera, microphones are set at both ears, and a speaker is set just under the mouth.

Figure 3: Functions of the skeleton.

Communication, or information interchange, with the information center is carried out through the Internet. Telephone calls do not use VoIP; they are made with the cellular phone’s calling facility over the public telephone network.
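To make the link between gestures, motors, and the serial line concrete, the sketch below maps a gesture such as bowing or negation to a sequence of neck motions and packs each motion into a small byte frame. Only the four motors and the two example gestures come from the text. The motor numbering, the axis codes, the angles, and the 4-byte frame layout with a checksum are all assumptions; the paper does not specify the serial protocol.

```python
from enum import IntEnum


class Motor(IntEnum):
    """The four motors named in the text; the numbering is hypothetical."""
    EARS = 0
    EYES = 1
    NOSE = 2
    NECK = 3


AXIS_CODE = {"vertical": 0, "horizontal": 1}  # assumed encoding

# Each gesture is a sequence of (motor, axis, angle in degrees) steps.
# The angles are invented for illustration.
GESTURES = {
    "bow": [(Motor.NECK, "vertical", 30), (Motor.NECK, "vertical", 0)],
    "negation": [
        (Motor.NECK, "horizontal", -20),
        (Motor.NECK, "horizontal", 20),
        (Motor.NECK, "horizontal", 0),
    ],
}


def encode(motor: Motor, axis: str, angle: int) -> bytes:
    """Pack one motion into 4 bytes: motor, axis, angle, checksum."""
    body = bytes([motor, AXIS_CODE[axis], angle & 0xFF])  # angle as one byte
    checksum = sum(body) & 0xFF
    return body + bytes([checksum])


def frames_for(gesture: str) -> list[bytes]:
    """Return the frames to write to the serial line for a gesture."""
    return [encode(*step) for step in GESTURES[gesture]]


bow_frames = frames_for("bow")
```

In a real system the frames would be written to the serial port between the control unit and the toy unit; that step is left out here because the port settings are not given in the paper.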

5 DISCUSSION

We conducted a field test in Ikeda City, Japan. The subjects were 7 elderly people living alone: 3 males (average age 83) and 4 females (average age 78). Each person used the pet-type robot for 62 days on average, and in that period we conducted 4 interviews and 2 questionnaires.

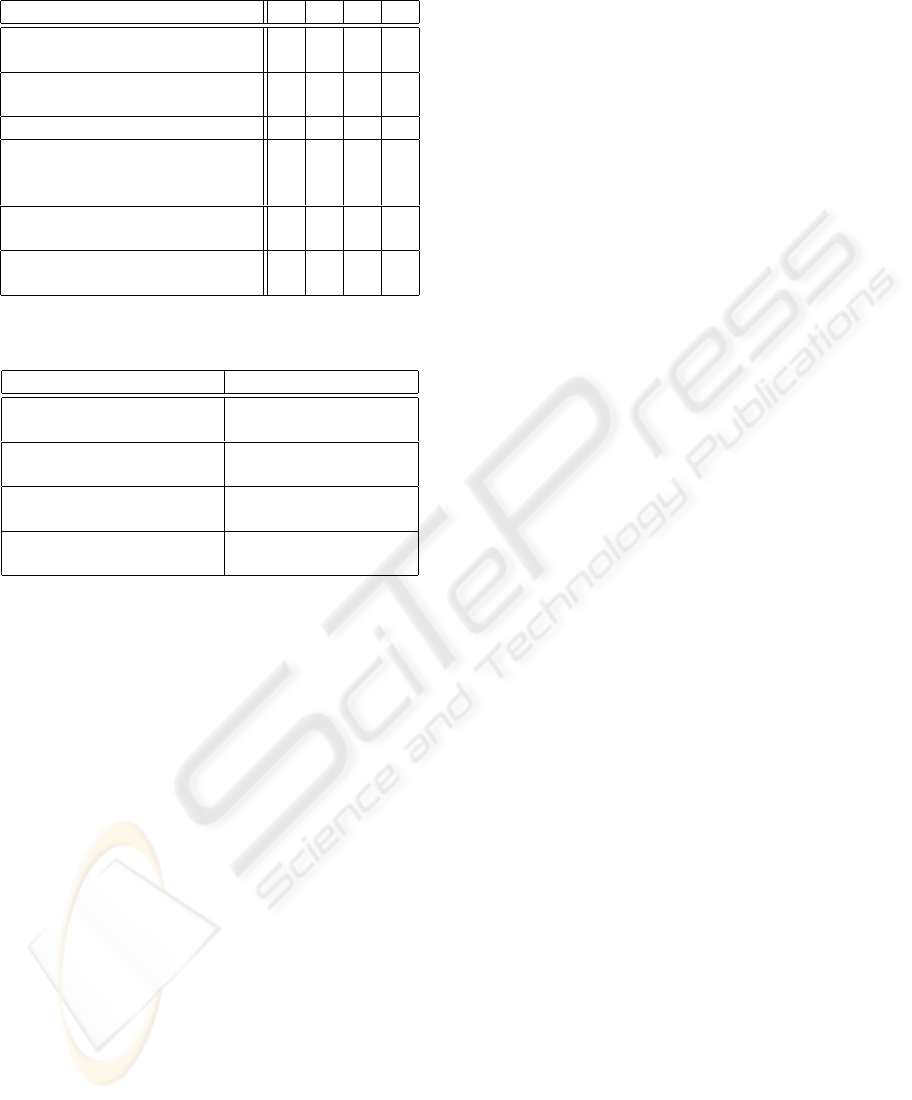

Table 1 presents the questionnaire results, where “Q” stands for the question, “V” for “Very good”, “G” for “Good”, “N” for “Not so good”, and “B” for “Bad”. The results show that our system can be used quite well, though it is not complete. In particular, the “understanding” item scored poorly (no “Very good” or “Good” answers), which may result from insufficient speech recognition; the speech recognition and autonomous conversation functions should therefore be improved.

Table 2 shows statistics of the conversation data, derived from log messages at the center (server). The system was used on approximately every other day (an active rate of 49%), which seems moderate. Users switched the robot on about twice a day (1.9 times), which may result from checking mail in the morning and evening. A conversation lasted about 6 minutes, which may be enough to get and give information. The conversation success rate was counted by hand from the server logs. At 46.8% it is not very good, and we have to improve this factor.

Table 1: Questionnaire results.

| Q | V | G | N | B |
|---|---|---|---|---|
| How frequently do you think the robot understands you? | 0 | 0 | 6 | 1 |
| Do you feel the design of the robot is good? | 2 | 3 | 0 | 2 |
| Does the robot speak well? | 2 | 3 | 2 | 0 |
| How do you feel when listening to the messages delivered from the center? | 4 | 2 | 1 | 0 |
| Do you feel friendliness with the robot? | 2 | 3 | 1 | 1 |
| Do you think you would feel lonely without the robot? | 2 | 2 | 1 | 2 |

Table 2: Usability statistics.

| Item | Average |
|---|---|
| Active rate (days actually used per monitored day) | 49% |
| Power-on frequency (per day) | 1.9 times |
| Conversation time (per conversation) | 5 minutes 55 seconds |
| Conversation success rate | 46.8% |
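Ratios of the kind reported in Table 2 can be derived from per-conversation log records. The sketch below is one possible formulation, not the procedure used in the study: the record fields, the definition of success rate as understood exchanges divided by all exchanges, and the sample records are all assumptions, and the sample data are invented rather than taken from the field test.

```python
from dataclasses import dataclass


@dataclass
class Conversation:
    """One logged conversation (hypothetical record layout)."""
    day: int         # index of the monitored day
    seconds: float   # duration of the conversation
    exchanges: int   # utterances made by the user
    understood: int  # utterances the robot handled correctly


def usage_statistics(conversations: list[Conversation], monitored_days: int) -> dict:
    """Compute active rate, mean conversation time, and success rate."""
    active_days = {c.day for c in conversations}
    total_exchanges = sum(c.exchanges for c in conversations)
    return {
        "active_rate": len(active_days) / monitored_days,
        "mean_conversation_s": sum(c.seconds for c in conversations) / len(conversations),
        "success_rate": sum(c.understood for c in conversations) / total_exchanges,
    }


# Invented sample records, not data from the field test.
logs = [
    Conversation(day=0, seconds=300, exchanges=4, understood=2),
    Conversation(day=0, seconds=400, exchanges=6, understood=3),
    Conversation(day=2, seconds=350, exchanges=10, understood=5),
]
stats = usage_statistics(logs, monitored_days=4)
```

With these sample records the robot was used on 2 of 4 monitored days, so the active rate is 0.5, which mirrors the "every other day" pattern discussed above.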

6 CONCLUSION

We developed a pet-type robot communication system, including an information center, and confirmed some of the system’s effects through a field test.

More field tests are needed to analyze our system more precisely, as we have not yet conducted enough examinations. Furthermore, the contents, which consist of conversation sets and speech programs, should be reconsidered to make interaction more comfortable and pleasant.

ACKNOWLEDGEMENT

Part of this study was supported by the Telecommunications Advancement Organization of Japan. For the field test, we collaborated with Ikeda City and Sawayaka Kousha in Osaka Prefecture, Japan. We greatly appreciate their support and collaboration.

REFERENCES

Bolmsjö, G., Neveryd, H., and Eftring, H. (1995). Robotics in Rehabilitation. IEEE Transactions on Rehabilitation Engineering, 3:77–83.

Clarkson, J., Coleman, R., Keates, S., and Lebbon, C., editors (2003). Inclusive Design: Design for the whole population. Springer-Verlag UK.

Fujita, M. (1999). Emotional Expressions of a Pet-type Robot. Journal of Robotics Society of Japan, 17(7):947–951.

Gibson, E. J. and Walk, R. D. (1960). The Visual Cliff. Scientific American, 202:64–72.

Maeda, T., Yoshida, K., Kayashima, K., and Maeda, Y. (2002). Mechatronical Features of Net-accessible Pet-type Robot for Aged People’s Welfare. In Proceedings of the 3rd China-Japan Symposium on Mechatronics, pages 212–217.

Maeda, T., Yoshida, K., Niwa, H., Kayashima, K., and Maeda, Y. (2003). Net-accessive Pet-Type Robot for Aged People’s Welfare. In Proceedings 2003 IEEE International Symposium on Computational Intelligence in Robotics and Automation (CIRA 2003), pages 130–133.

Matsukawa, Y., Maekawa, H., Maeda, T., and Kayashima, K. (1996). A Appearance for affordance of electric toys. –Implementation–. In Proceedings of The General Conference of The Institute of Electronics, Information, and Communication Engineers, volume A–14, pages A–355.

Ohkawa, K., Shibata, T., and Tanie, K. (1998). A generation method of evaluation for a robot considering relation with other robots. Journal of Robotics and Mechatronics, 39(3):284–288.
