ROBUST IMAGE SEGMENTATION BY TEXTURE SENSITIVE SNAKE UNDER LOW CONTRAST ENVIRONMENT

Shu-Fai WONG and Kwan-Yee Kenneth WONG

Department of Computer Science and Information Systems

The University of Hong Kong

Keywords:

Image Processing Applications, Image Segmentation, Texture Analysis, Medical Image Analysis

Abstract:

Robust image segmentation plays an important role in a wide range of everyday applications, such as visual surveillance systems and computer-aided medical diagnosis. Although commonly used image segmentation methods based on pixel intensity and texture can help find the boundaries of targets with sharp edges or distinctive textures, they may not be applicable to images of poor quality and low contrast. Medical images, images captured by webcams and images taken under dim light are examples of images with low contrast and heavy noise. To handle these types of images, we propose a new segmentation method based on texture clustering and snake fitting. Experimental results show that targets in both artificial images and medical images, which are of low contrast and heavy noise, can be segmented from the background accurately. This segmentation method offers users an alternative: they can keep using imaging devices with low-quality output while still obtaining good-quality image analysis results.

1 INTRODUCTION

Image segmentation is an active topic in the field of image processing and computer vision. Robust image segmentation provides a solution to background subtraction and object detection. Many recent technologies depend heavily on robust object location: people detection in visual surveillance applications, organ detection in computer-aided medical diagnosis and surgery, and background elimination in video compression all rely on it to be feasible and reliable.

Image segmentation is commonly done by two major approaches, namely pixel intensity analysis and texture analysis. A comprehensive survey of segmentation using intensity analysis can be found in (Pal and Pal, 1993), and one of texture segmentation can be found in (Chellappa and Manjunath, 2001). In pixel intensity analysis, the color intensity or the grayscale level of each pixel is analysed independently. Segmentation is done by grouping pixels according to the similarity of their intensity values. The major problem with this approach is that it relies on the strong assumption that targets have homogeneous intensity values. In addition, analysing pixels independently is error-prone under a non-Gaussian noise model. To relax this assumption and limitation, segmentation approaches using texture were developed. In texture analysis, a texture model is used to describe a region of interest. Patches with similar features in the texture model are grouped together to form a larger patch. Segmentation is then done by grouping such patches. The major problem in using texture analysis is finding an optimal texture model (e.g. size of the patch, intensity pattern), which is time-consuming and computationally complicated.

In everyday applications, images are often of low quality and contain heavy noise. Medical images, images captured by webcams and images taken under dim light are examples of such images. For segmenting targets from such images, traditional segmentation approaches may not be an ideal choice. Segmentation using intensity is affected by the heavy noise, while segmentation by texture is not robust enough to be used in everyday applications.

In this paper, we propose a supervised texture analysis algorithm that combines the advantages of both intensity analysis and texture analysis to segment targets from images with low contrast and heavy noise. The proposed algorithm learns the edge texture pattern from the image during the learning phase. The edge pattern can then be detected in the testing image. A snake is then fitted towards the edge.


WONG S. and Kenneth WONG K. (2004).

ROBUST IMAGE SEGMENTATION BY TEXTURE SENSITIVE SNAKE UNDER LOW CONTRAST ENVIRONMENT.

In Proceedings of the First International Conference on Informatics in Control, Automation and Robotics, pages 430-434

DOI: 10.5220/0001146104300434

Copyright © SciTePress

The testing image can then be segmented. The size of the edge texture patch is deterministic, as an edge along a certain direction does not occupy a large number of pixels. The pattern can then be learnt and classified as in intensity analysis. A texture patch is used instead of the intensity of a single pixel, so that the pattern itself is less susceptible to noise.

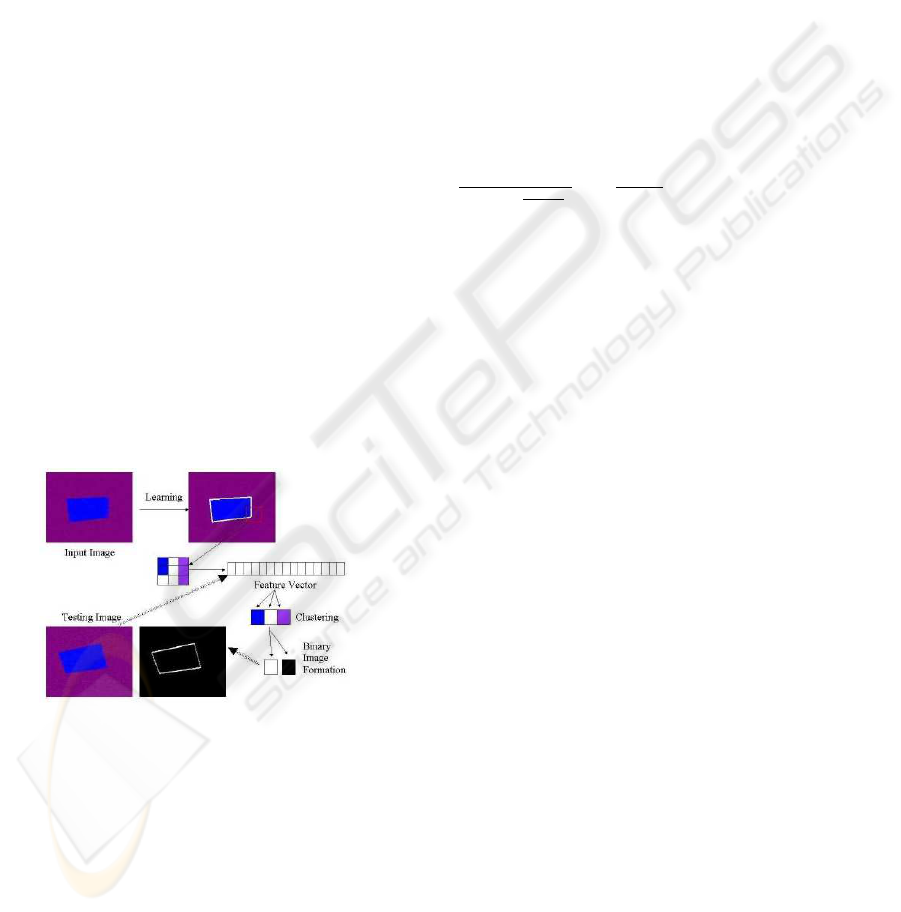

2 SYSTEM ARCHITECTURE

The whole system performs analysis in two phases, namely the learning phase and the testing phase. During the learning phase, both the image and the edge information are analysed by the system. The system extracts texture features from the image. The representation of the texture features is discussed in Section 3. These features are clustered into groups. The groups are then further classified into edge or non-edge texture according to the edge information given. The association between the texture features and the final groups is then established. The clustering algorithm is explored in Section 4. During the testing phase, the texture features of the testing image are extracted. Classification is then done by comparing the cluster means and the model energy and by applying the cluster association rule. Based on the classification result, the foreground can be extracted. The details are given in Section 5. The flow is summarized in Figure 1.

Figure 1: Logic flow of the proposed system.

3 TEXTURE ANALYSIS BY MRF

Markov Random Field (MRF) models have long been used for texture analysis, e.g. (Cross and Jain, 1983). An MRF can be used to describe a texture and to predict the intensity value of a pixel given the intensity values of its neighborhood. The theory of Markov Random Fields can be found in (Chellappa and Jain, 1993).

In a Markov Random Field, the neighborhood is defined in terms of clique elements. Let $S = \{s_1, s_2, \ldots, s_P\}$ be the set of pixels inside the image, and let $N = \{N_s \mid s \in S\}$ be the neighborhoods of those pixels. In the system, the neighborhood of a target pixel consists of the 8 pixels at chessboard distance 1 from it.
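The neighborhood definition above can be illustrated with a short sketch. The function name `neighbors8` and the border policy (neighbors outside the image are simply dropped) are assumptions made for illustration; the text does not state how image borders are handled.

```python
def neighbors8(row, col, height, width):
    """Return the pixels at chessboard (Chebyshev) distance 1 from (row, col).

    Neighbors outside a height x width image are dropped. This border policy
    is an assumption; it is not specified in the text.
    """
    result = []
    for dr in (-1, 0, 1):
        for dc in (-1, 0, 1):
            if dr == 0 and dc == 0:
                continue  # the target pixel is not its own neighbor
            r, c = row + dr, col + dc
            if 0 <= r < height and 0 <= c < width:
                result.append((r, c))
    return result
```

An interior pixel has all 8 neighbors, while a corner pixel keeps only 3 under the assumed border policy.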

Let $X = \{x_s \mid s \in S\}$ be the random variables (the intensity values) of the pixels inside an image, where $x_s \in L$ and $L = \{0, 1, \ldots, 255\}$. In addition, we have a class set for the texture pattern, $\Omega = \{\omega_{s_1}, \omega_{s_2}, \ldots, \omega_{s_P}\}$, where $\omega_{s_i} \in M$ and $M$ is the set of available classes. In the proposed system, there are only two classes, the edge class and the non-edge class.

In Markov Random Field analysis, the conditional probability of a pixel belonging to a given class is given by a Gibbs distribution, according to the Hammersley-Clifford theorem. The density function is

$$\pi(\omega) = \frac{1}{\sum_{\omega'} \exp\left(\frac{-U(\omega')}{T}\right)} \exp\left(\frac{-U(\omega)}{T}\right),$$

where $T$ is the temperature constant used in simulated annealing. The energy term can be further written as

$$U(\omega, x_i) = V_1(\omega, x_i) + \sum_{i' \in N_i} \beta_{i,i'} \, \delta(x_i, x_{i'}),$$

where $V_1(\omega, x_i)$ represents the potential for a pixel with a given intensity value to belong to a given class, and $\delta(x_i, x_{i'})$ is the normalised correlation between the pixel at $s_i$ and the pixel at $s_{i'}$.
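As a rough numerical illustration of the two formulas above, the following sketch evaluates the energy of one pixel for each class and converts the energies into Gibbs probabilities. The function names `energy` and `gibbs_probabilities`, the class names and all numeric values are hypothetical; in the system the potentials, weights and correlations would come from the learnt model and the image.

```python
import math

def energy(v1, betas, deltas):
    """U = V1 + sum over neighbors of beta_{i,i'} * delta(x_i, x_{i'})."""
    return v1 + sum(b * d for b, d in zip(betas, deltas))

def gibbs_probabilities(energies, temperature=1.0):
    """Turn per-class energies into probabilities exp(-U/T) / sum exp(-U/T)."""
    weights = {cls: math.exp(-u / temperature) for cls, u in energies.items()}
    z = sum(weights.values())  # normalising constant over all classes
    return {cls: w / z for cls, w in weights.items()}

# Hypothetical values for a pixel with 8 neighbors (illustration only).
energies = {
    "edge": energy(0.2, [1.0] * 8, [0.1] * 8),
    "non-edge": energy(0.5, [1.0] * 8, [0.3] * 8),
}
probabilities = gibbs_probabilities(energies)
```

The class with the lower energy receives the higher probability, and raising the temperature flattens the distribution.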

When the texture is being learnt by the feature learning module, the set of $\beta_{i,i'}$ is estimated such that the probability of its associated texture class is maximised. The estimation algorithm used in the system is simulated annealing. The set of $\beta_{i,i'}$ corresponds to the correlation values and thus represents the configuration of the pixels that allows the patch to be classified as that texture class. In the system, this set of estimated $\beta$ is used as the texture feature vector. It is used as the input to a support vector machine so that the association between texture feature and texture class can be formed.

4 TEXTURE CLUSTERING USING K-MEANS ANALYSIS

The k-means clustering algorithm has been widely used in applications that need unsupervised classification. Although there is a learning set in the proposed system, the noise in the image greatly reduces the reliability of the learning set. To identify the outliers in the learning set, unsupervised clustering is performed first. An implementation of the k-means clustering algorithm can be found in (Duda et al., 2000).

After performing k-means clustering on the feature vectors $\beta$, supervised association is carried out. Of the clusters produced by k-means, some correspond to edge patches with a gradient change along a certain direction, while the rest correspond to non-edge patches, noisy patches or corner patches. The clusters are then labeled according to the association information from the learning set. Outliers are ignored during the labeling stage. Finally, an association between texture feature vectors and the “edge” and “non-edge” classes is formed.
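The clustering and labeling steps can be sketched as follows. This is a minimal illustration, not the implementation used in the system: the farthest-first initialisation, the majority-vote labeling rule and the names `kmeans` and `label_clusters` are assumptions. Under majority voting, learning samples that disagree with their cluster are simply outvoted, which is one possible reading of ignoring outliers.

```python
def sq_dist(p, q):
    """Squared Euclidean distance between two equal-length tuples."""
    return sum((a - b) ** 2 for a, b in zip(p, q))

def kmeans(points, k, max_iters=100):
    """Lloyd's k-means with a deterministic farthest-first initialisation."""
    means = [points[0]]
    while len(means) < k:
        # Next seed: the point farthest from all seeds chosen so far.
        means.append(max(points, key=lambda p: min(sq_dist(p, m) for m in means)))
    assign = []
    for _ in range(max_iters):
        new_assign = [min(range(k), key=lambda j: sq_dist(p, means[j])) for p in points]
        if new_assign == assign:
            break  # assignments are stable, so the means will not change
        assign = new_assign
        for j in range(k):
            members = [p for p, a in zip(points, assign) if a == j]
            if members:  # keep the old mean if a cluster ends up empty
                means[j] = tuple(sum(c) / len(members) for c in zip(*members))
    return means, assign

def label_clusters(assign, is_edge, k):
    """Label each cluster by majority vote of the supervised edge flags."""
    labels = []
    for j in range(k):
        votes = [flag for flag, a in zip(is_edge, assign) if a == j]
        labels.append("edge" if 2 * sum(votes) > len(votes) else "non-edge")
    return labels

# Toy 2-D feature vectors; the last sample carries a noisy supervised flag.
features = [(0.0, 0.1), (0.1, 0.0), (0.05, 0.05), (1.0, 0.9), (0.9, 1.0), (0.95, 0.95)]
flags = [False, False, False, True, True, False]
means, assign = kmeans(features, 2)
labels = label_clusters(assign, flags, 2)
```

In the toy data the mislabeled sixth sample falls into the cluster dominated by edge samples, so the cluster is still labeled as edge.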

In the testing stage, classification is done simply by extracting the texture feature and then applying the association rule formed. Applying this classification scheme to the whole image yields a binary map, in which ‘1’ means edge and ‘0’ means non-edge.
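A minimal sketch of the binary map construction is given below. It assumes that the association rule amounts to assigning each feature vector to its nearest cluster mean and reading off that cluster's label; the names `nearest_cluster` and `binary_edge_map` and the one-dimensional toy features are hypothetical.

```python
def nearest_cluster(feature, means):
    """Index of the cluster mean closest to the feature vector."""
    return min(
        range(len(means)),
        key=lambda j: sum((a - b) ** 2 for a, b in zip(feature, means[j])),
    )

def binary_edge_map(feature_grid, means, labels):
    """Map a grid of per-pixel feature vectors to 1 (edge) or 0 (non-edge)."""
    return [
        [1 if labels[nearest_cluster(f, means)] == "edge" else 0 for f in row]
        for row in feature_grid
    ]

# Toy example with one-dimensional features and two labeled clusters.
cluster_means = [(0.0,), (1.0,)]
cluster_labels = ["non-edge", "edge"]
grid = [[(0.1,), (0.9,)], [(0.8,), (0.2,)]]
edge_map = binary_edge_map(grid, cluster_means, cluster_labels)
```

The resulting map has the same layout as the feature grid and can be passed directly to the snake fitting stage.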

5 TEXTURE SEGMENTATION BY SNAKE FITTING

Active contours (Kass et al., 1987) have long been used in pattern location and tracking (Blake and Isard, 1998). They are good at attaching to objects with strong edges and irregular shapes. A snake can be interpreted as a parametric curve $v(s) = [x(s), y(s)]$.

In the proposed system, the initial position of the active contour is the bounding box of the search window. The active contour moves according to the energy function

$$E^{*}_{\mathrm{snake}} = \int_0^1 \left\{ E_{\mathrm{int}}(v(s)) + E_{\mathrm{texture}}(v(s)) + E_{\mathrm{con}}(v(s)) \right\} ds,$$

where $E_{\mathrm{int}}$ represents the internal energy of the snake due to bending, $E_{\mathrm{texture}}$ represents the texture-based image forces, and $E_{\mathrm{con}}$ represents the external constraint forces. The snake is said to be fitted when $E^{*}_{\mathrm{snake}}$ is minimised.
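A discrete version of this energy can be sketched for a closed contour of integer control points. Several choices here are assumptions and not taken from the text: the internal energy uses the stretching and bending terms of the classical snake formulation with weights `alpha` and `beta`, the texture energy is taken as the negated value of the binary edge map at each control point, and the constraint energy is set to zero.

```python
def snake_energy(contour, edge_map, alpha=0.1, beta=0.1, w_texture=1.0):
    """Discrete snake energy of a closed contour given as (x, y) integer points.

    Assumed terms: alpha * |v_{i+1} - v_i|^2 (stretching),
    beta * |v_{i+1} - 2 v_i + v_{i-1}|^2 (bending), and
    -w_texture * edge_map[y][x] (texture); E_con is taken as zero.
    """
    n = len(contour)
    total = 0.0
    for i in range(n):
        xp, yp = contour[i - 1]        # previous point (wraps around)
        x, y = contour[i]
        xn, yn = contour[(i + 1) % n]  # next point (wraps around)
        stretch = (xn - x) ** 2 + (yn - y) ** 2
        bend = (xn - 2 * x + xp) ** 2 + (yn - 2 * y + yp) ** 2
        total += alpha * stretch + beta * bend - w_texture * edge_map[y][x]
    return total

# Toy binary map with a square ring of edge texture.
toy_map = [
    [0, 0, 0, 0, 0],
    [0, 1, 1, 1, 0],
    [0, 1, 0, 1, 0],
    [0, 1, 1, 1, 0],
    [0, 0, 0, 0, 0],
]
on_edge = [(1, 1), (3, 1), (3, 3), (1, 3)]
off_edge = [(0, 0), (2, 0), (2, 2), (0, 2)]  # same shape, shifted off the ring
```

Both contours have the same shape and therefore the same internal energy, so the contour lying on edge texture has the lower total energy. A fitting procedure would move control points so as to reduce this value.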

The equation is the same as the traditional snake equation, except that a texture-based image force replaces the original “edge-based” image force. Since the image is noisy and of low contrast, noise introduces many distracting edges under pixel-based analysis. If the “edge-based” energy were used, the corresponding snake would be highly unstable and inaccurate. Thus, texture-based energy is used in the proposed system. Texture energy is lower near patches whose texture changes towards edge texture, and thus the snake shrinks towards strong edges in the binary texture map described in Section 4. Texture represents a patch of pixels instead of a single pixel, and texture-based analysis is more tolerant to noise than pixel-based analysis. Thus, texture-based analysis is much more reliable than edge-based analysis for snake implementation.

6 EXPERIMENT AND RESULT

The proposed system was implemented in Visual C++ under Microsoft Windows. The experiments were run on a 2.26 GHz Pentium 4 computer with 512 MB of RAM running Microsoft Windows.

6.1 Experiment 1: Artificial Noisy and Low Contrast Images

In this experiment, the classifier is trained to recognize edges in artificial images with heavy noise and low contrast. A texture-sensitive snake is then fitted towards the texture edge from an initial position close to the real edge. The result is shown in Figure 2. It shows that the binary image (texture map) matches the edge quite well. The snake also fits the edge quite well. The relative root-mean-square error (i.e. the relative distance between the control points and the real edge) is less than 5% when compared with the ground truth images. The processing time is around 10 s for images with an average size of 267 x 255 pixels.
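One way such an error measure could be computed is sketched below. The text does not say what the distance is normalised by, so the `reference_length` parameter is an assumption left to the caller (for instance an image dimension or the object size); the function name `relative_rms_error` is also hypothetical.

```python
import math

def relative_rms_error(control_points, edge_points, reference_length):
    """RMS distance from each control point to its nearest ground-truth edge
    point, divided by a caller-supplied reference length (an assumption)."""
    squared = [
        min((cx - ex) ** 2 + (cy - ey) ** 2 for ex, ey in edge_points)
        for cx, cy in control_points
    ]
    return math.sqrt(sum(squared) / len(squared)) / reference_length

# Toy example: one control point lies on the edge, the other is 3 pixels away.
error = relative_rms_error([(0, 0), (0, 3)], [(0, 0), (4, 3)], reference_length=10.0)
```

A value of 0 means that every control point lies exactly on the ground-truth edge.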

Figure 2: The first row shows the segmentation result for the noisy image, while the second row shows the result for the low contrast image. The left-most images are the testing images. The middle images are the corresponding binary maps after final classification. The right-most images show the result of snake fitting.

6.2 Experiment 2: Low Contrast and Noisy Medical Image

In this experiment, the backbone has to be segmented from a medical image of poor quality and low contrast. Indeed, the image is not easy to segment even manually. The segmentation result is shown in Figure 3. It shows that the snake fits some of the backbones very well. The accuracy cannot be determined here because no ground truth image is available. The processing time is around 18 s for an image of size 600 x 450 pixels.

ICINCO 2004 - ROBOTICS AND AUTOMATION


Figure 3: The left-most image is the given medical image. The second image from the left shows an enlarged portion of the first image. The third image is the corresponding binary map. The last image is the result of snake fitting.

6.3 Experiment 3: Comparison with Other Approaches

In this experiment, pixel-based analysis, texture-based analysis and traditional snake fitting are performed on the images used in Experiments 1 and 2. The results are shown in Figure 4, Figure 5 and Figure 6 respectively. The results suggest that the proposed method performs better than these approaches. Pixel-based analysis cannot perform well without knowing the optimal similarity-tolerant level. In texture-based analysis, the color patches extracted are not semantically connected (i.e. color patches can be corners, edges and heterogeneous regions), and many clusters (shown in black) remain unclassified because of the noise. The traditional snake approach is sensitive to cluttered backgrounds and image noise.

Figure 4: Results of applying pixel-based analysis. The rows show the results for the artificial noisy image, the low contrast image and the medical image respectively. The images in the first column are the testing images, the images in the second column are the results at a low similarity-tolerant level, and the images in the third column are the results at a high similarity-tolerant level. The two largest clusters, each consisting of pixels with similar attributes, are indicated in black and white. Unclassified pixels and small pixel patches are kept in their original color.

Figure 5: Results of applying texture-based analysis. The rows are ordered in the same way as in Figure 4. The images in the first column are the testing images, while those in the second column are the resulting images. Patches with similar texture are grouped together to form a color cluster. Small patches that cannot be grouped together are shown in black.

Figure 6: Results of using the snake approach. The rows are ordered in the same way as in Figure 4. The first column shows the input image. The second column shows the edge detection result on the unsmoothed input image, while the third column shows the edge detection result on the smoothed input image. The fourth column shows the equalized version of the image in the third column. The last column shows the snake applied to the input image with reference to the edge map in the third column.

7 CONCLUSIONS

There is a demand for segmenting targets from images with low contrast and heavy noise in applications such as medical imaging. However, commonly used image segmentation approaches work properly only if the input image has homogeneous intensity or high-quality texture. The proposed segmentation algorithm aims at segmenting a target from a low-quality image by texture pattern extraction and clustering. To obtain a more accurate segmentation result, a snake is fitted towards the edge pattern so that the boundary of the target can be captured. The algorithm combines the advantages of pixel analysis and texture analysis, and in the experiments it was able to segment the target from low-quality images.


REFERENCES

Blake, A. and Isard, M. (1998). Active Contours. Springer.

Chellappa, R. and Jain, A. (1993). Markov Random Fields:

Theory and Applications. Academic Press.

Chellappa, R. and Manjunath, B. (2001). Texture classification and segmentation. In FIU01, page Chapter 8.

Cross, G. and Jain, A. (1983). Markov random field texture

models. PAMI, 5(1):25–39.

Duda, R. O., Hart, P. E., and Stork, D. G. (2000). Pattern Classification. John Wiley and Sons, Inc., second edition.

Kass, M., Witkin, A., and Terzopoulos, D. (1987). Snakes: Active contour models. In Proc. Int. Conf. on Computer Vision, pages 259–268.

Pal, N. and Pal, S. (1993). A review on image segmentation

techniques. PR, 26(9):1277–1294.
