EVALUATION OF RECOMMENDER SYSTEMS THROUGH SIMULATED USERS

Miquel Montaner, Beatriz López and Josep Lluís de la Rosa

Institut d’Informàtica i Aplicacions - Universitat de Girona

Campus Montilivi, 17071 Girona, Spain

Keywords:

recommender systems, evaluation procedure, user simulation, profile discovering

Abstract:

Recommender systems have proved really useful for handling information overload on the Internet. However, it is very difficult to evaluate such personalised systems, since this involves purely subjective assessments. In fact, very few recommender systems developed for the Internet evaluate and discuss their results scientifically. The contribution of this paper is a methodology for evaluating recommender systems: the “profile discovering procedure”. Based on a list of item evaluations previously provided by a real user, this methodology simulates the recommendation process of a recommender system over time. In addition, an extension of this methodology has been designed in order to simulate the collaboration among users. At the end of the simulations, the desired evaluation measures (precision and recall, among others) are presented. This methodology and its extension have been successfully used in the evaluation of different parameters and algorithms of a restaurant recommender system.

1 INTRODUCTION

Recommender systems help users to locate items, information sources and people related to their interests and preferences (Sanguesa et al., 2000). This involves the construction of user models and the ability to anticipate and predict user preferences. Many recommender systems have been developed over the last several years and applied to very different domains (Montaner et al., 2003). Unfortunately, only a few of them evaluate and discuss their results scientifically. This situation is caused by the difficulty of acquiring results that can be used to compute evaluation measures. As a consequence, to date, it is very difficult to determine how well recommender systems work, since this involves purely subjective assessments. However, advances in recommender systems require the development of a comparative framework. Our work follows this line.

The contribution of this paper is a new method-

ology to evaluate recommender systems that we call

“profile discovering”. The aim of this method is to

provide the different steps to design the simulation of

the execution of a recommender system with several

users over time. The most interesting characteristic

of our methodology is that the tastes, interests and

preferences of the users are not invented in the sim-

ulation process. The “profile discovering procedure”

bases the results of the simulations on real informa-

tion about users. In particular, each user to be simu-

lated has to provide a list of evaluated items. Then,

the method simulates the recommendation process of

each user and when information about them is re-

quired, it is obtained from the lists. Therefore, the

simulation can be seen as a progressive discovery of

the lists of evaluated items (user profiles). After the

simulations, the methodology provides a set of results

from the point of view of different measures.

The main properties of this methodology are:

• The simulation process does not invent the user evaluations; they are extracted from real user profiles.

• The recommendation process considers the devel-

opment of the user profile over time.

• Large-scale experiments are carried out quickly.

• Experiments are repeatable and perfectly con-

trolled.

This paper also proposes an extension of the “profile discovering procedure” that incorporates the collaboration among users into the recommendation process.


Montaner M., López B. and Lluís de la Rosa J. (2004).

EVALUATION OF RECOMMENDER SYSTEMS THROUGH SIMULATED USERS.

In Proceedings of the Sixth International Conference on Enterprise Information Systems, pages 303-308

DOI: 10.5220/0002622703030308

Copyright © SciTePress

The “profile discovering procedure” and its exten-

sion allow us to test the performance of the param-

eters of the recommendation process in order to tune

functions and algorithms. Moreover, it is a suitable instrument to compare different recommender systems, an experiment that has not been feasible so far.

The outline of this paper is as follows: the next section describes some evaluation techniques for recommender systems that have been used in the current state-of-the-art. Then, our proposal for evaluating recommender systems, which we have called “profile discovering”, is presented in section 3. Next, its extension for performing multi-agent collaboration is detailed in section 4. Some experimental results are then shown in section 5 and, finally, section 6 concludes this paper.

2 RELATED WORK

In the current state-of-the-art, recommender systems

use one of the following approaches in order to ac-

quire the results for evaluating the performance of

their systems: a real environment, an evaluation en-

vironment, the logs of the system or a user simulator.

First, obtaining results in a real environment with real users is the best way to evaluate a recommender system. Unfortunately, only a few commercial systems like Amazon.com (Amazon, 2003) can show real results based on their economic effect thanks to their information on real users.

Second, evaluation environments are an alternative

for some systems to be evaluated in the laboratory by

letting a set of users interact with the system over a

period of time. Usually, the results are not reliable

enough because the users know the system or the purpose of the evaluation. An original approach was taken by NewT (Sheth, 1994); in addition to the

numerical data collected in the evaluation sessions, a

questionnaire was also distributed to the users to get

feedback on the subjective aspects of the system. The

main problem of the real and the evaluation environ-

ments is that repetition of the experiments, in order to

evaluate different algorithms and parameters, is im-

possible.

Third, the analysis or validation of the logs ob-

tained in a real or evaluation environment with real

users is a common technique used to evaluate rec-

ommender systems. A frequently used technique is

the “10-fold cross-validation technique” (Mladenic,

1996). It consists of predicting the relevance (e.g.,

ratings) of examples recorded in the logs and, then,

comparing them with the real evaluations. These ex-

periments are perfectly repeatable, provided that the

tested parameters do not affect the evolution of the

user profile and the recommendation process. For ex-

ample, the log being validated would be very different

if another recommendation algorithm had been tested.

Therefore, since the majority of the parameters condi-

tion the recommendation process over time, generally,

experiments cannot be repeated.
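The log-validation idea described above can be sketched as follows. This is a minimal illustration, not the procedure of any cited system: the log format `(item, rating)`, the function names `k_fold_mae` and `mean_rating_model`, and the choice of mean absolute error as the comparison measure are all assumptions made for the example.

```python
from typing import Callable, List, Tuple

LogEntry = Tuple[str, float]              # (item id, real rating); hypothetical log format
Predictor = Callable[[str], float]        # item id -> predicted rating


def mean_rating_model(training: List[LogEntry]) -> Predictor:
    """Toy baseline (assumption): always predict the mean training rating."""
    mean = sum(r for _, r in training) / len(training) if training else 0.0
    return lambda item: mean


def k_fold_mae(log: List[LogEntry],
               fit: Callable[[List[LogEntry]], Predictor],
               k: int = 10) -> float:
    """Predict each held-out rating from the other folds and compare it
    with the real evaluation recorded in the log."""
    if not log:
        raise ValueError("log must not be empty")
    folds = [log[i::k] for i in range(k)]          # k disjoint slices of the log
    errors = []
    for i, held_out in enumerate(folds):
        training = [e for j, fold in enumerate(folds) if j != i for e in fold]
        predict = fit(training)
        errors.extend(abs(predict(item) - rating) for item, rating in held_out)
    return sum(errors) / len(errors)
```

Note that the log itself is fixed here: the sketch can only score predictions against evaluations that were recorded under whatever algorithm produced the log, which is the limitation discussed above.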

Finally, a few systems are evaluated through simu-

lated users. Important issues such as learning rates

and variability in learning behaviour across hetero-

geneous populations can be investigated using large

collections of simulated users whose design was tai-

lored to explore those issues. This enables large-scale

experiments to be carried out quickly and also guar-

antees that experiments are repeatable and perfectly

controlled. It also allows researchers to focus on and

study the behaviour of each sub-component of the

system, which would otherwise be impossible in an

unconstrained environment. For instance, Holte and

Yan conducted experiments using an automated user

called Rover rather than human users (Holte and Yan,

1996). NewT (Sheth, 1994) and Casmir (Berney and

Ferneley, 1999) also used a user simulator to evaluate

the performance of systems. The main shortcoming

of this technique is that, at present, it is impossible

to simulate the real behaviour of a user. Users are

far too complicated to predict, at every moment, their

feelings, their emotions, their moods, their anxieties

and, therefore, their actions.

3 “PROFILE DISCOVERING”

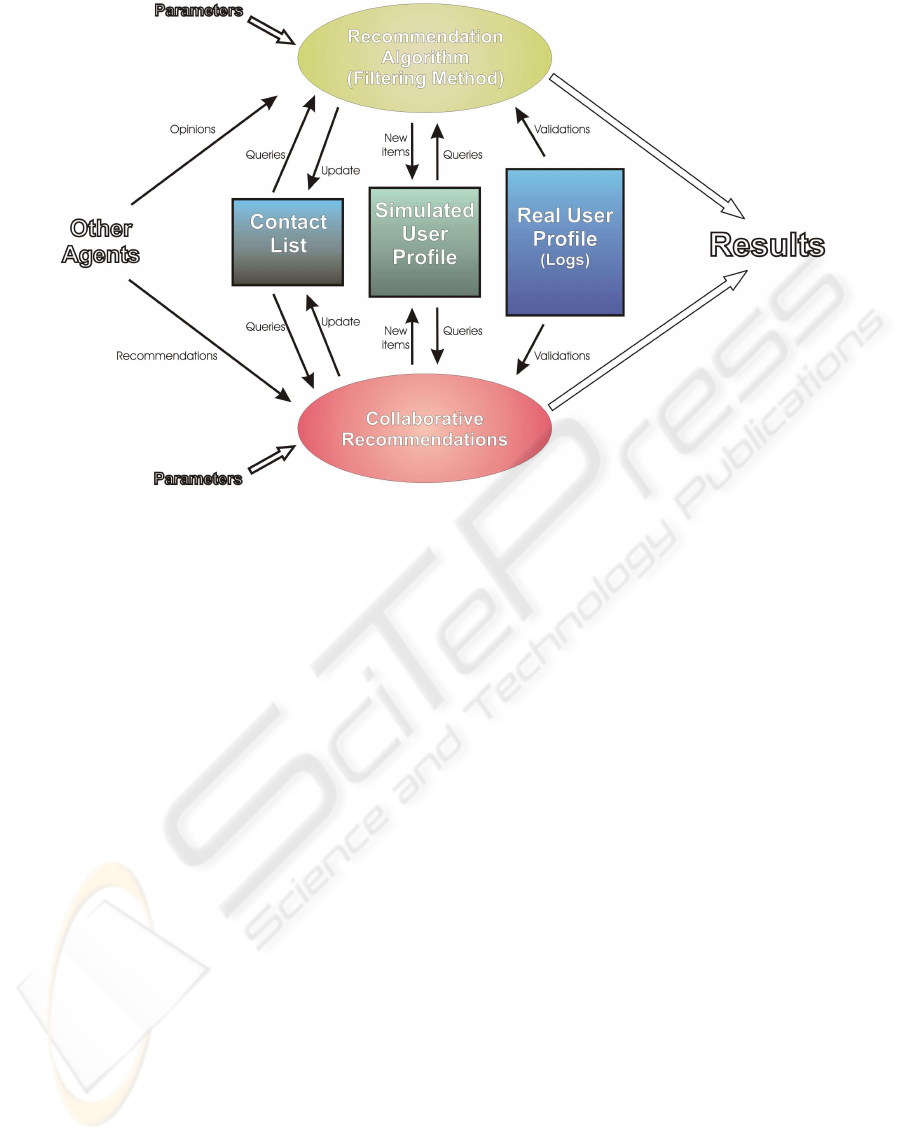

In order to overcome the shortcomings of the current techniques while benefitting from their advantages, we propose a method of results acquisition called “the profile discovering procedure” (see Figure 1). This technique can be seen as a hybrid approach between real or laboratory evaluation, log analysis and user simulation.

First of all, it is necessary to obtain as many item

evaluations from real users as possible. It is desir-

able to obtain these user evaluations through a real or

laboratory evaluation although it implies a relatively

long period of time. However, it is also possible and

faster to get the user evaluations through a question-

naire containing all the items which the users have to

evaluate. We call the list of real item evaluations of a given user A the real user profile of A (RUP).

Once the real user profiles are available, the simulation process, that is, the profile discovering procedure, starts. It consists of the following steps:

1. Generation of an initial user profile (UP) from the real user profile (RUP), with UP ⊂ RUP.

2. Emulation of the real recommendation process: a new item (r) is recommended from the UP.

3. Validation of the recommendation:

ICEIS 2004 - INFORMATION SYSTEMS ANALYSIS AND SPECIFICATION


Figure 1: “Profile Discovering” Evaluation Procedure.

• If r ∈ RUP, then r is considered a discovered item and is added to UP (UP = UP ∪ {r}).

• Otherwise, r is rejected.

4. Repeat 2 and 3 until the end of the simulation.
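The four steps above can be sketched in code. This is only an illustrative sketch under stated assumptions, not the authors' simulator: the rating scale, the names `profile_discovering` and `first_candidate`, the random choice of the initial item set, the rule that rejected items are not recommended again, and the simplification of one recommendation per cycle are all hypothetical.

```python
import random
from typing import Callable, Dict, List, Optional, Tuple

Profile = Dict[str, float]                     # item id -> rating (hypothetical 0..1 scale)
Recommender = Callable[[Profile, List[str]], Optional[str]]
LogEntry = Tuple[str, Optional[float]]         # (item, rating), or (item, None) if rejected


def profile_discovering(rup: Profile, recommend: Recommender,
                        catalogue: List[str], initial_size: int,
                        cycles: int, seed: int = 0) -> Tuple[Profile, List[LogEntry]]:
    rng = random.Random(seed)
    # Step 1: the initial UP is a subset of the RUP (training-set approach).
    up = {item: rup[item] for item in rng.sample(sorted(rup), initial_size)}
    rejected = set()                           # assumption: never re-recommend these
    log: List[LogEntry] = []
    for _ in range(cycles):                    # Step 4: repeat steps 2 and 3
        # Step 2: recommend a new item r based only on the current UP.
        candidates = [i for i in catalogue if i not in up and i not in rejected]
        r = recommend(up, candidates)
        if r is None:                          # nothing left to recommend
            break
        # Step 3: validate r against the real user profile.
        if r in rup:
            up[r] = rup[r]                     # discovered: UP = UP ∪ {r}
            log.append((r, rup[r]))
        else:
            rejected.add(r)                    # no real evaluation available
            log.append((r, None))
    return up, log


def first_candidate(up: Profile, candidates: List[str]) -> Optional[str]:
    """Placeholder recommender (assumption): pick the first unseen item."""
    return candidates[0] if candidates else None
```

In practice `first_candidate` would be replaced by the recommendation algorithm under evaluation; because the real evaluations are only looked up, never generated, two algorithms run on the same RUP and seed start from identical conditions.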

When the simulation process starts, it is desirable to know as much as possible about the user in order to provide satisfactory recommendations from the very beginning. Analysing the different initial profile generation techniques (see (Montaner et al., 2003)), namely manual generation, empty approach, stereotyping and training set, we found different advantages and drawbacks. The training set approach depends totally on the profile learning technique: the user just gives a list of evaluated items and the learning technique generates the profile. This does not annoy the users, who can easily define their preferences. Therefore, in our approach, the training set seems to be the best choice, although the others can also be used in our framework. Thus, the first step of the simulation consists in the extraction of an initial item set from the RUP in order to generate the initial UP.

Then, the simulator emulates the recommendation

of new items over time. In particular, it executes a

recommendation process cycle by cycle, where a cy-

cle is a day in the real world and corresponds to steps

2 and 3 of the profile discovering procedure. During

the simulated day, the recommendation algorithm rec-

ommends a group of items based on the information

contained in the UP. All the functions, constraints

and constants involved in the recommendation pro-

cess are parameters of the simulator (for example, the

time of the simulation, the recommendation algorithm

or the learning parameters).

After each recommendation the simulator checks

its success. In order to do that, the user’s assessments

are needed. Instead of inventing them like other sim-

ulators do, the profile discovering simulator looks up

the real user profile containing the real evaluations of

the user. Thus, if the item is contained in the RUP, the simulator can check the user’s opinion and classify the recommendation as a success or a failure accordingly. This discovered item is learned in the UP and a new item is recommended.

Once the simulation has finished, the initial UP will have evolved into a more complete profile that is called the discovered user profile (DUP). Moreover, the method provides results on how many recommendations have been made and how many successful or unsuccessful items have been recommended. Thus, based on the DUP and the final simulation results, different metrics such as precision, recall and diversity are evaluated (see (Montaner, 2003) for more details about some of the measures analysed).
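As an illustration of how such measures could be derived from the simulation results, the sketch below computes precision and recall from a recommendation log. The exact definitions used in (Montaner, 2003) are not given here, so everything in the sketch is an assumption: the log format `(item, rating or None)`, the `like_threshold` that separates successes from failures, the exclusion of unvalidated items from precision, and the function name `precision_recall`.

```python
from typing import Dict, List, Optional, Tuple

Profile = Dict[str, float]                     # item id -> rating (hypothetical 0..1 scale)
LogEntry = Tuple[str, Optional[float]]         # rating is None when the item is not in the RUP


def precision_recall(log: List[LogEntry], rup: Profile, initial_up: Profile,
                     like_threshold: float = 0.5) -> Tuple[float, float]:
    # Only recommendations with a real evaluation can be classified.
    validated = [rating for _, rating in log if rating is not None]
    successes = sum(1 for rating in validated if rating >= like_threshold)
    # Relevant items: liked by the real user and not known at the start.
    relevant = sum(1 for item, rating in rup.items()
                   if item not in initial_up and rating >= like_threshold)
    precision = successes / len(validated) if validated else 0.0
    recall = successes / relevant if relevant else 0.0
    return precision, recall
```

Under these assumptions, precision is the fraction of validated recommendations the user actually liked, and recall is the fraction of the user's liked but initially unknown items that the simulation discovered.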

4 “PROFILE DISCOVERING WITH COLLABORATION”

An extended version of the profile discovering evalua-

tion procedure has been designed in order to simulate

the collaboration among users in a recommender system. User collaboration is a frequently used technique in open environments since it has been proved to improve recommendation results (Good et al., 1999).

If the current techniques proposed in the state-of-the-art do not allow the proper evaluation of a single user, neither are they valid for evaluating a community of collaborating users. Thus, we propose the


“profile discovering evaluation procedure with collaboration”. The main idea of this new technique

is essentially the same as the profile discovering but

it takes into account the opinions and recommenda-

tions of other users in the system. The simulation is

also performed cycle by cycle. At every cycle new

users enter into the simulation process and the recom-

mender system recommends new items to each user

based on the simulated user profile with the collabo-

ration from the other users. Thus, the profile discov-

ering evaluation procedure with collaboration consists

of the following steps:

1. Initial Profile Generation: as in the profile discovering procedure, an initial UP is generated from the RUP contained in the logs.

2. Contact List Generation: each user has a list of users with which to collaborate. We refer to these lists as contact lists and to the users contained in them as friends. There are several techniques to fill such a list, for example direct comparison among user profiles (the collaborative filtering approach) or the “playing agents procedure” (Montaner et al., 2002). Thus, the simulator emulates the process where each user looks for friends with a technique that is a parameter of the simulator.

3. Recommendation Process: the simulator recommends new items to each user based on their UP and with the collaboration of their friends (see Figure 2). Furthermore, users can also receive collaborative recommendations by directly asking their friends for interesting items. Finally, after each recommendation, the simulator checks its success based on the user’s assessments contained in the RUP.

4. Profile Adaptation: besides classifying the recommendation as a success or a failure, the simulator has to judge the collaboration of the other users. Such information is used in order to adapt the contact lists to the most recent outcomes. The parameters controlling the modification of the contact lists are parameters of the system.

5. Repeat 2-4 (a cycle) until the end of the simulation.

Finally, when the simulation duration is exhausted,

several metrics are analysed and the results are pre-

sented.
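One cycle of the collaborative procedure might look like the sketch below. It is a simplified illustration under explicit assumptions, not the GenialChef simulator: friends are found by direct comparison of user profiles with a hypothetical `similarity` measure and acceptance `threshold`, each friend suggests at most one liked item per cycle, and contact lists are simply regenerated from the updated profiles rather than adapted through trust.

```python
from typing import Dict, List, Tuple

Profile = Dict[str, float]                     # item id -> rating (hypothetical 0..1 scale)


def similarity(a: Profile, b: Profile) -> float:
    """Assumed measure: 1 minus the mean absolute difference on co-rated items."""
    shared = set(a) & set(b)
    if not shared:
        return 0.0
    return 1.0 - sum(abs(a[i] - b[i]) for i in shared) / len(shared)


def contact_list(user: str, ups: Dict[str, Profile], threshold: float) -> List[str]:
    """Step 2: friends are the users whose profile is similar enough."""
    return [other for other in ups
            if other != user and similarity(ups[user], ups[other]) >= threshold]


def collaboration_cycle(ups: Dict[str, Profile], rups: Dict[str, Profile],
                        threshold: float, like: float = 0.5
                        ) -> List[Tuple[str, str, str, bool]]:
    """Steps 2-4 for every user; returns (user, friend, item, success) outcomes."""
    outcomes = []
    snapshot = {u: dict(p) for u, p in ups.items()}   # opinions at cycle start
    for user in ups:
        for friend in contact_list(user, snapshot, threshold):
            # Step 3: the friend suggests an item they liked and the user lacks.
            liked = [i for i, r in sorted(snapshot[friend].items())
                     if r >= like and i not in ups[user]]
            if not liked:
                continue
            item = liked[0]
            if item in rups[user]:                    # validate against the RUP
                ups[user][item] = rups[user][item]
                # Step 4 input: was the friend's advice a success?
                outcomes.append((user, friend, item, rups[user][item] >= like))
    return outcomes
```

The returned outcomes are the raw material for the profile adaptation step: a trust-based variant would use them to raise or lower the standing of each friend instead of recomputing similarity.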

5 EXPERIMENTAL RESULTS

The proposed methodology was implemented in order to evaluate GenialChef¹, a restaurant recommender system developed within the IRES Project².

¹ GenialChef was awarded the prize for the best university project at the E-TECH 2003.

The contributions of GenialChef are a CBR recommendation algorithm with a forgetting mechanism and a mechanism of collaboration based on trust (Montaner, 2003). All these contributions were thoroughly tested with the methodologies proposed in this paper. In particular, the CBR recommendation algorithm, the initial profile generation technique and the different parameters regarding the forgetting mechanism were evaluated by means of the “profile discovering procedure” and several “cross-validations through profile discovering”. Then, the method to generate the contact lists, the different parameters concerning how and when to collaborate and the functions and parameters to adapt the contact lists to the outcomes were evaluated with the “profile discovering with collaboration”. A snapshot of the simulator interface with the different parameters used in the simulations is shown in Figure 3.

One of the most interesting experiments that we performed with this user simulator is the comparison of the information filtering methods used in the recommendation process of GenialChef (Montaner, 2003) with the ones provided in the state-of-the-art. In particular, the opinion-based filtering method and the collaborative filtering method through trust are compared to the typical information filtering methods: content-based filtering and collaborative filtering. Thanks to the simulator, the same set of user profiles was submitted to the different methods, yielding comparable results. Figure 4 shows the precision of the recommender system when different combinations of information filtering methods are applied. The y-axis represents the precision of the system and the x-axis represents how tolerant users are when adding new friends to their contact lists, ranging from 0.4 (almost all the other users in the system are considered friends) to 1.0 (nobody is considered a friend). By executing all the filtering methods on the same user simulator, we can guarantee that the results are comparable and confirm that the proposed information filtering methods (Simulation5) improve on the performance of the typical ones.

6 CONCLUSIONS

This paper is focussed on the evaluation of recommender systems. Due to the lack of evaluation procedures for such personalised systems, we have carried out substantial work on how these systems can be evaluated scientifically. The main purpose is that this evaluation procedure be as similar as possible to an evaluation performed with real users. Our proposal, the “profile discovering procedure”, is a methodology that simulates users based on a list of item evaluations provided by real users. Therefore, the evaluation is only based on real information and does not invent what users think about items.

² The IRES Project was awarded the special prize at the AgentCities Agent Technology Competition (2003).

Figure 2: Recommendation Process in Profile Discovering with Collaboration.

Besides, an extension has been designed as a complement to this methodology in order to simulate the collaboration among users.

Therefore, the methodology proposed and its ex-

tension allow researchers to carry out large-scale and

perfectly controlled experiments quickly in order to

test their recommender systems and, what is also very

important, compare their whole systems with others.

The next step in our work is to improve our

methodology in order to incorporate information

about the context of the users and their emotional factors, as in (Martínez, 2003). We believe that such

information can provide simulated users with a be-

haviour more similar to the users of the real world.

REFERENCES

Amazon (2003). http://www.amazon.com.

Berney, B. and Ferneley, E. (1999). CASMIR: Infor-

mation retrieval based on collaborative user pro-

filing. In Proceedings of PAAM’99, pp. 41-56.

Good, N., Schafer, J., Konstan, et al. (1999). Combin-

ing collaborative filtering with personal agents

for better recommendations. In Proceedings of

AAAI, vol 35, pp. 439-446. AAAI Press.

Holte, R. C. and Yan, N. Y. (1996). Inferring what a

user is not interested in. AAAI Spring Symp. on

ML in Information Access. Stanford.

Martínez, J. (2003). Agent Based Tool to Support

the Configuration of Work Teams. PhD Thesis

Project, UPC.

Mladenic, D. (1996). Personal WebWatcher: Imple-

mentation and design. TR IJS-DP-7472, De-

partment of Intelligent Systems, J.Stefan Insti-

tute, Slovenia.

Montaner, M. (2003). Collaborative recommender

agents based on CBR and trust. PhD Thesis in

Computer Engineering. Universitat de Girona.

Montaner, M., López, B., and de la Rosa, J. L. (2002).

Opinion-based filtering through trust. In Pro-

ceedings of CIA’02. LNAI 2446., pp. 164-178.

Montaner, M., López, B., and de la Rosa, J. L. (2003).

A taxonomy of recommender agents on the In-

ternet. Artificial Intelligence Review, volume

19:4, pp. 285-330. Kluwer Academic Publishers.

Sanguesa, R., Cortés, U., and Faltings, B. (2000).

W9. Workshop on recommender systems. In Au-

tonomous Agents, 2000. Barcelona, Spain.

Sheth, B. (1994). A learning approach to person-

alized information filtering. M.S. Thesis, Mas-

sachusetts Institute of Technology.


Figure 3: Interface of the Simulator.

Figure 4: Precision of the system with different IF methods.

Simulation1 - CBF

Simulation2 - CBF+OBF

Simulation3 - CBF+OBF+CF

Simulation4 - CBF+OBF+CF’

Simulation5 - CBF+OBF+CFT
