ROBUST APPEARANCE MATCHING WITH FILTERED

COMPONENT ANALYSIS

Fernando De la Torre, Alvaro Collet, Jeffrey F. Cohn and Takeo Kanade

Robotics Institute, Carnegie Mellon University, Pittsburgh, USA

Keywords:

Appearance Models, principal component analysis, Multi-band representation, learning filters.

Abstract:

Appearance Models (AM) are commonly used to model appearance and shape variation of objects in images.

In particular, they have proven useful to detection, tracking, and synthesis of people’s faces from video. While

AM have numerous advantages relative to alternative approaches, they have at least two important drawbacks.

First, they are especially prone to local minima in fitting; this problem becomes increasingly problematic as

the number of parameters to estimate grows. Second, often few if any of the local minima correspond to

the correct location of the model error. To address these problems, we propose Filtered Component Analysis

(FCA), an extension of traditional Principal Component Analysis (PCA). FCA learns an optimal set of filters

with which to build a multi-band representation of the object. FCA representations were found to be more

robust than either grayscale or Gabor filters to problems of local minima. The effectiveness and robustness of

the proposed algorithm is demonstrated in both synthetic and real data.

1 INTRODUCTION

Component Analysis (CA) methods such as Principal

Component Analysis (PCA) have been widely applied

in visual, graphics, and signal processing tasks over

the last two decades. PCA is a key learning compo-

nent of Appearance Models (AM). AM have proven

especially powerful for face tracking and synthesis

relative to alternative approaches (e.g. optical flow)

(Blanz and Vetter, 1999; Matthews and Baker, 2004;

Cootes and Taylor, 2001b; de la Torre and Black,

2003; Black and Jepson, 1998).

In applications such as face detection and track-

ing, the goal is to search for a minimum residual be-

tween the image and the model across rigid (e.g. ro-

tation and translation) and non-rigid parameters. For

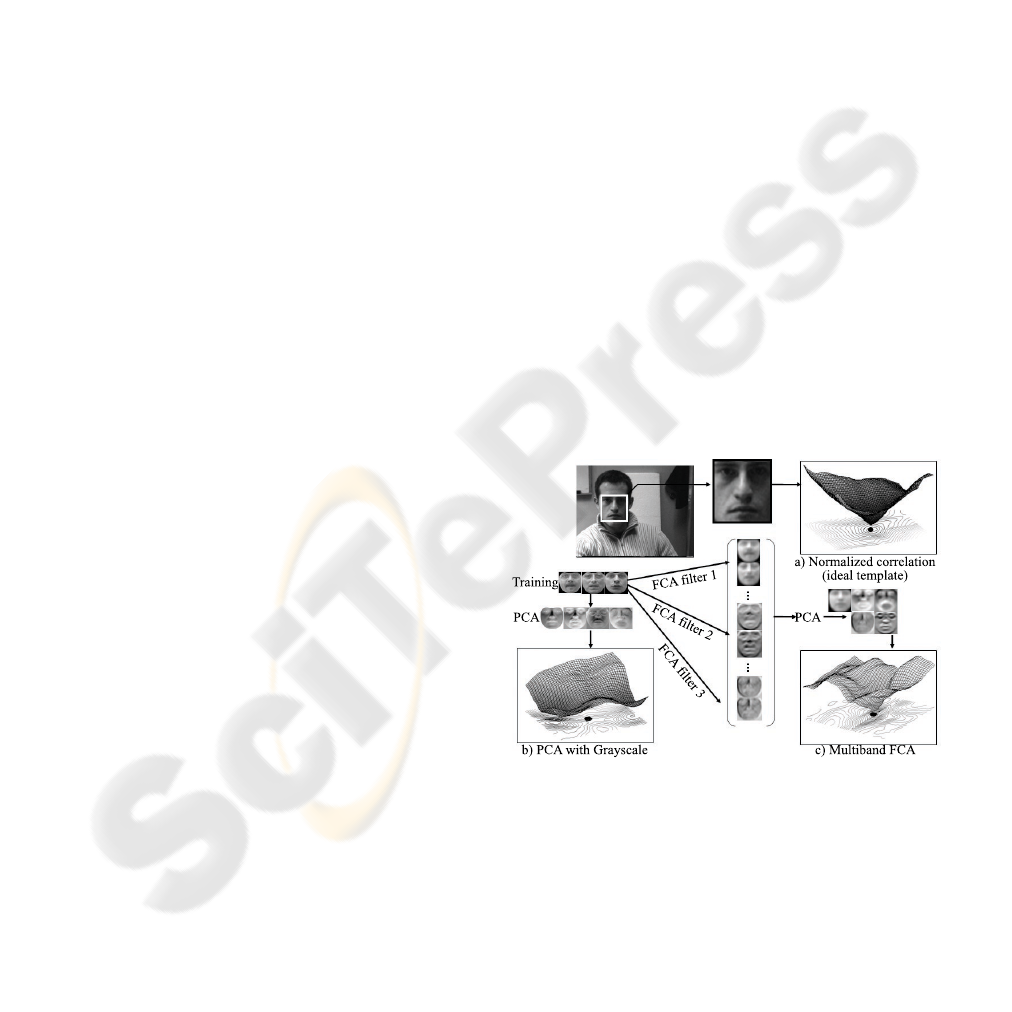

instance, consider fig. 1, in which a face has been

placed in an arbitrary image. In fig. 1.a, we plot

the normalized correlation surface error between the

ideal template (face) and the image in a 40 ×40 patch

centered in the middle of the face. This surface error

has nice local properties: it has just one well defined

global minimum that corresponds to the expected lo-

cation of the face. However, if we learn a generic PCA

a) Normalized correlation

(ideal template)

FCA filter 1

FCA filter 2

FCA filter 3

PCA

c) Multiband FCAb) PCA with Grayscale

Training

PCA

Figure 1: a). Normalized correlation error surface of the

image with the face in a 40 ×40 patch. b) Error function

with a generic graylevel appearance model. The black dot

denotes the optimal position of the face. c) Error function

of a multiband learned representation. The location of the

face corresponds to the minimum of the function.

model of the facial appearance variation from training

data and try to locate the face again, two undesirable

effects may occur. First, the location of the optimal

207

De la Torre F., Collet A., F. Cohn J. and Kanade T. (2007).

ROBUST APPEARANCE MATCHING WITH FILTERED COMPONENT ANALYSIS.

In Proceedings of the Second International Conference on Computer Vision Theory and Applications - IFP/IA, pages 207-212

Copyright

c

SciTePress

parameter (translation) fails to correspond to the lo-

cation of the face (delineated by the the black dot in

the figure), see fig. (1.b). Second, many local minima

may be found. Even if a gradient descent algorithm

begins close to the correct solution, the occurrence of

local minima is likely to divert convergence from the

desired solution.

The aim of this paper is to explore the use of a new

technique, Filtered Component Analysis (FCA). FCA

learns a multiband representation of the image that re-

duces the number of local minima and improves gen-

eralization relative to PCA. Fig. (1.c) shows the main

goal of the paper. By building a multiband representa-

tion with FCA, we are able to locate the minimum in

the right location (black dot) and eliminate most local

minima close to the optimal one.

2 PREVIOUS WORK

This section reviews work on subspace tracking and

the role of representation in subspace analysis.

2.1 Subspace Detection and Tracking

Subspace trackers build the object’s appearance/shape

representation from the PCA of a set of training sam-

ples. Let d

i

∈ℜ

d×1

(see notation

1

) be the i sample of

a training set D ∈ ℜ

d×n

and B ∈ ℜ

d×k

the first k prin-

cipal components. B contains the directions of maxi-

mum variation of the data. The principal components

maximize max

B

∑

n

i=1

||B

T

d

i

||

2

2

= ||B

T

B||

F

, with the

constraint B

T

B = I. The columns of B form an ortho-

normal basis that spans the principal subspace. If the

effective rank of D is much less than d, we can ap-

proximate the column space of D with k << d princi-

pal components. The data d

i

can be approximated as

a linear combination of the principal components as

d

i

≈Bc

i

where c

i

= B

T

d

i

are the coefficients obtained

by projecting the training data onto the principal sub-

space.

Once the model has been learned (i.e. B is

known), tracking is achieved by finding the para-

meters a of the geometric transformation f(x, a) that

1

Bold capital letters denote a matrix D, bold lower-case

letters a column vector d. d

j

represents the j column of the

matrix D. d

i j

denotes the scalar in the row i and column

j of the matrix D and the scalar i-th element of a column

vector d

j

. All non-bold letters will represent variables of

scalar nature. ||x||

2

=

√

x

T

x designates Euclidean norm of

x. The vec(D) operator transforms D ∈ ℜ

d×n

into an dn-

dimensional vector by stacking the columns. ◦ denotes the

Hadamard or point-wise product. ⊗ denotes convolution.

1

k

∈ ℜ

k×1

is a vector of ones. I

k

∈ ℜ

k×k

is the identity.

aligns the data w.r.t. the subspace. Given an image d

i

,

subspace trackers or detectors find a and c

i

that min-

imize: min

c

i

,a

||d

i

(f(x, a)) −Bc

i

||

2

2

(or some normal-

ized error). In the case of an affine transformation,

f(x, a) =

a

1

a

2

+

a

3

a

4

a

5

a

6

x −x

c

y −y

c

where

a = (a

1

, a

2

, a

3

, a

4

, a

5

, a

6

) are the affine parameters and

x = (x

1

, y

1

, ···, x

n

, y

n

) is a vector containing the coor-

dinates of the pixels to track. If a = (a

1

, a

2

) is just

translation, the search can be done efficiently over

the whole image using the Fast Fourier Transform

(FFT). For a = (a

3

= a

6

, a

5

= a

4

), that is, for simi-

larity transformation, the search also can be done ef-

ficiently in the log-polar representation of the image

with the FFT.

2.2 Representation in Subspace

Analysis

Most work on AM uses some sort of normalized

graylevel to build the representation. However, re-

gions of graylevel values can suffer from large am-

biguities, camera noise, and changes in illumination.

More robust representation can be achieved by local

combination of pixels through filtering. Filtering of

the visual array is a key element of the primate visual

system (Rao and Ballard, 1995).

Representations for subspace recognition were ex-

plored by Bischof et al. (Bischof et al., 2004). In

the training stage, they built a subspace by filter-

ing the PCA-graylevel basis with steerable filters. In

the recognition phase, they filtered the test images

and performed robust matching, obtaining improved

recognition performance over graylevel. Cootes et. al

(Cootes and Taylor, 2001a) found that a non-linear

representation of edge structure could improve the

performance of model subspace matching and recog-

nition. De la Torre et al. (de la Torre et al., 2000)

found that subspace tracking was improved by using

a multiband representation created by filtering the im-

ages with a set of Gaussian filters and its derivatives.

Our work differs in several aspects from previous

work. First, we explicitly learn an optimal set of spa-

tial filters adapted to the object of interest rather than

using hand-picked ones. Once the filters are learned,

we build a multiband representation of the image that

has improved error surfaces with which to fit AM. We

evaluate quantitatively the properties of the error sur-

faces and show how FCA outperforms current meth-

ods in appearance based detection.

VISAPP 2007 - International Conference on Computer Vision Theory and Applications

208

3 FILTERED COMPONENT

ANALYSIS

Many component analysis methods (PCA, LDA, etc)

build data models based on the second order statistics

(covariance matrices) of the signal. In particular, PCA

finds a linear transformation that decorrelates the data

by exploiting the correlation across samples. PCA

models the correlation across pixels of different im-

ages, but not the spatial statistics within each of the

images. In this section, we propose Filtered Compo-

nent Analysis (FCA) that learns a bank of orthogonal

filters that decorrelate the spatial statistics of a set of

images. Once the FCA filters are learned, we build a

multi-band representation that generalizes better and

is more robust to different types of noise.

3.1 Learning Spatial Correlation

Previous research (de la Torre et al., 2000; Bischof

et al., 2004; Cootes and Taylor, 2001a) has shown

the importance of representation in AM. However, re-

searchers have used hand-picked filters to represent

the signal. Instead, FCA will learn a set of orthog-

onal spatial filters optimal for variance preservation.

Variance preservation of image spatial statistics is a

realistic assumption to build a generative model for

detection or tracking appearance.

Given a set of training images, D

d×n

, our aim is

to model the spatial statistics of the signal by learning

the filter F that minimizes:

E

1

(F, µ

µ

µ) = min

F,µ

µ

µ

n

∑

i=1

||d

i

⊗F −µ

µ

µ||

2

2

(1)

Recall that ⊗ denotes convolution, and µ

µ

µ =

1

n

∑

n

i=1

d

i

⊗F is the mean of the filtered signal. If µ

µ

µ

is known, the optimal F can be achieved by solving:

Avec(F) = b A =

∑

n

i=1

∑

(x,y)

d

(x,y)

i

d

(x,y)

i

T

b =

∑

n

i=1

∑

(x,y)

µ

µ

µ

(x,y)

◦d

(x,y)

i

(2)

where (x, y) is the domain where the convolution is

valid and d

(x,y)

i

is a patch of the filter size ( f

x

, f

y

)

centered at the coordinates (x, y). The matrix A can

be computed efficiently in space or frequency from

the autocorrelation function of d

i

. Analogously, b is

estimated from the cross-correlation between d

i

and

µ

µ

µ. Alternatively, one could use the integral image

(Lewis, 1995) to efficiently compute eq. 2.

Without imposing any constraints on the filter co-

efficients, the optimal solution of eq. 1 is given by

µ

µ

µ = 0 and F = 0 (although an iterative algorithm will

rarely converge to this solution). To avoid this trivial

solution, we impose that the sum of squared coeffi-

cients is 1, i.e. vec(F)

T

vec(F) = 1. The latter con-

straint can be elegantly solved by noticing that the

convolution is a linear operator, and , eq. 2 can be

rewritten as:

E

2

(F) = min

F

n

∑

i=1

||(d

i

−µ

µ

µ

0

) ⊗F||

2

2

(3)

where µ

µ

µ

0

=

1

n

∑

n

i=1

d

i

is the sample mean. Now

Eq. 3 can be solved by finding the eigenvec-

tor with smallest eigenvalue of A =

∑

n

i=1

∑

(x,y)

(d

i

−

µ

µ

µ

0

)

(x,y)

(d

i

−µ

µ

µ

0

)

(x,y)

T

(see eq. 2).

3.2 Learning a Multiband

Representation

In this section, we will build a multiband represen-

tation of the signal that preserves most spatial corre-

lation among a given training set. In particular, we

will find a set of filters F

1, ···,F

that decorrelate the

spatial statistics of the image and are orthogonal to

each other. Observe that FCA is analogous to PCA

but now rather than decorrelating the signal with the

covariance of the data, we decorrelate the spatial sta-

tistics.

In our particular tracking application, we are inter-

ested in finding a set of filters that preserve the spatial

statistics of the object of interest and has minimal re-

sponse to background. This filter set can be obtained

by maximizing E

F CA

(F

1, ···,F

):

E

F CA

=

F

∑

f =1

n

∑

i=1

||d

i

⊗F

f

||

2

2

−λ

n

2

∑

j=1

||d

b

j

⊗F

f

||

2

2

(4)

where d

b

j

denotes the j

th

sample of the background.

Let T = [vec(F

1

) vec(F

2

) ··· vec(F

F

)] be a matrix

of all the vectorized filters, the filters should satisfy

T

T

T = I

F×F

. After making the derivatives with re-

spect to F

f

, it can be shown that the optimal solutions

satisfies the following eigenvalue problem:

max

F

1, ···,F

∑

f

i=1

||(A −λBα)vec(F

i

)||

2

2

(5)

A =

∑

n

i=1

∑

(x,y)

d

(x,y)

i

d

(x,y)

i

T

α =

max(A)

max(B)

B =

∑

n

2

j=1

∑

(x,y)

d

b

j

(x,y)

d

b

j

(x,y)

T

s.t. vec(F

i

)

T

vec(F

j

) = 0 ∀i 6= j and

vec(F

i

)

T

vec(F

i

) = 1 ∀i

If λ is large, the set of filters will predominantly can-

cel the background. If λ is small the filters will be

adapted to the object.With λ close to one the filters

will achieve trade-off between modeling the signal

(i.e object) and removing the background. Typically

ROBUST APPEARANCE MATCHING WITH FILTERED COMPONENT ANALYSIS

209

0 ≤ λ ≤ 2. α is an artificially introduced parameter

that normalizes the energies of A and B.

The solution of eq. 5 is given by the leading eigen-

vectors of (A −λαB). At this point, it is interesting to

consider again the analogy with PCA. PCA will find

the leading eigenvectors of

∑

n

i=1

d

i

d

T

i

whereas FCA

will find the leading eigenvectors (assuming λ = 0)

A =

∑

n

i=1

∑

(x,y)

d

(x,y)

i

d

(x,y)

i

T

. While PCA finds the di-

rections of maximum variation of the covariance ma-

trix, FCA finds the directions of maximum variation

of the sum of all overlapping patches.

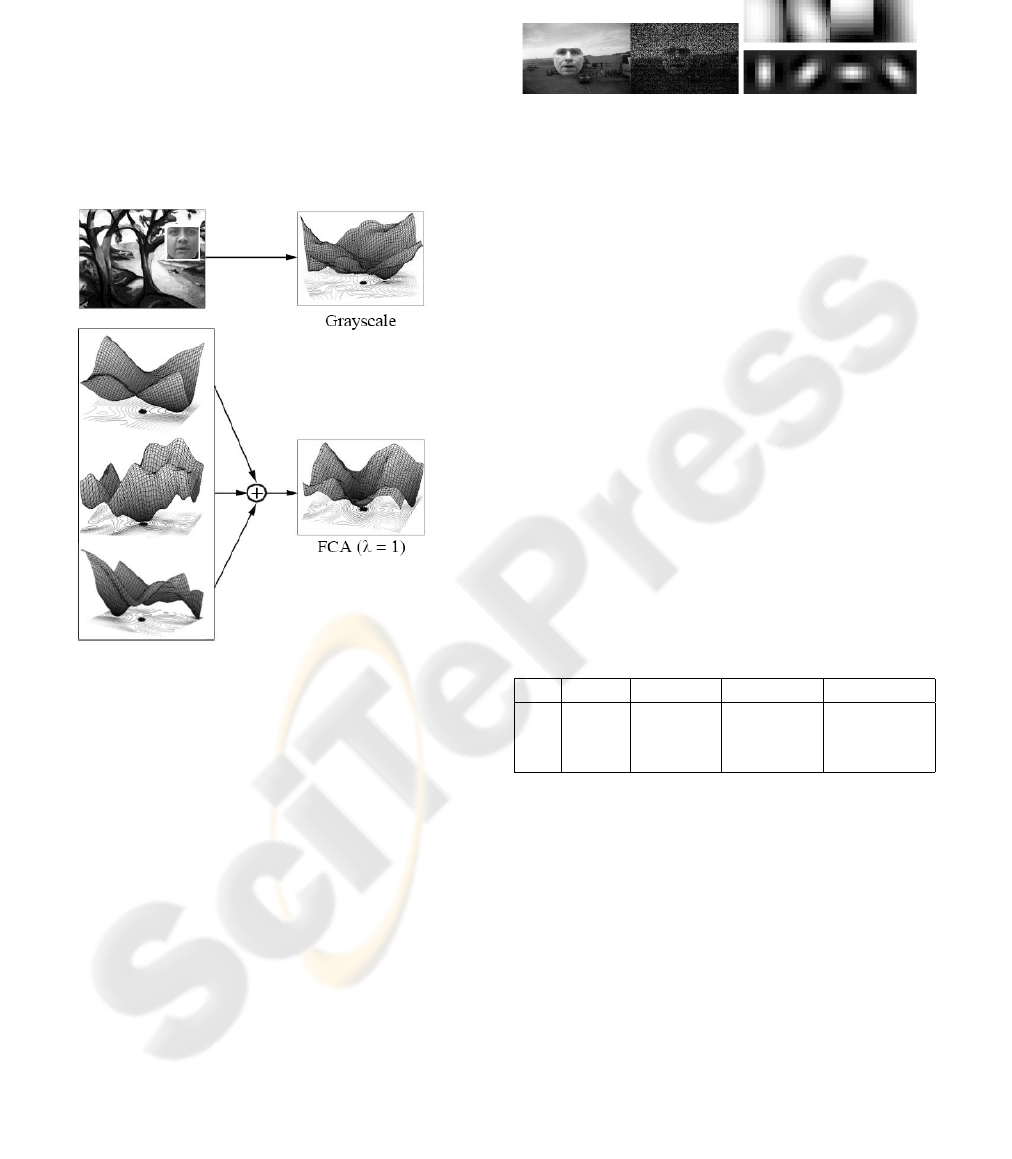

Figure 2: a) Training images of faces and background. b)

FCA filters for λ = 0, λ = 1 and size 11 ×11.

Fig. (2.a) shows many examples of faces and

background patches. Fig.(2.b) shows the set of FCA

filters for λ = 0 and λ = 1 for size 11 ×11. Observe

that the first FCA filter is an average filter, and the

other filters are differential filters at different orienta-

tions and scales.

3.3 Multiband Subspace Detection

In subspace detection, PCA is computed from a set of

training images. After the training stage, the goal is to

detect the object of interest over different orientation,

scales and translations. If the scale and orientation is

known, dectection can be achieved finding the trans-

lational parameters a = (a

1

, a

2

) that minimize:

E

3

= min

c

i

,a

||d

i

(x + a) −Bc

i

||

2

2

||d

i

(x + a)||

2

2

(6)

Evaluating eq. 6 at each location (x, y) can be com-

putationally expensive. For a particular position (x, y)

computing the coefficients (i.e. c

i

) is equivalent to

correlating the image with each basis of subspace B,

and stacking all values for each pixel. For large re-

gions, this correlation is performed efficiently in the

frequency domain using the Fast Fourier Transform

(FFT) (i.e. C

1

= b

T

1

I = IFFT (FFT (b

1

) ◦FFT (I))).

Similarly, the local energy term, ||d

i

(x + a)||

2

2

, can

be computed efficiently using the convolution in the

space or frequency domain. Alternatively, these ex-

pressions can be computed efficiently using the inte-

gral image (Lewis, 1995).

In multiband tracking, we represent an image as

a concatenation of filtered images. For a particular

image d

i

and a set of filters (F

1

, ···, F

f

), there are

several ways to modify eq. 6:

E

4

=

∑

F

f =1

β

f

||d

i

⊗F

f

−B

f

c

i

||

2

2

||d

i

⊗F

f

||

2

2

(7)

E

5

=

∑

F

f =1

β

f

||d

i

⊗F

f

−B

f

c

f

i

||

2

2

||d

i

⊗F

f

||

2

2

(8)

Parameters β

f

are the eigenvalues of (A −λαB), ob-

tained by FCA. E

4

filters the training images and

builds PCA based on the set of stacked filtered im-

ages. E

5

computes an independent PCA for each rep-

resentation such that the coefficients for each image

are uncoupled (i.e. c

f

i

differs for each filter).

4 EXPERIMENTS

To test the validity of our approach, we have per-

formed several sets of experiments in face detection

and facial feature tracking. The first set of experi-

ments consists on detecting a face embedded in an

arbitrary image (see fig. 1) using a generic model. In

the second set, we test the ability of FCA to improve

tracking in Active Appearance Models (Cootes and

Taylor, 2001b; Blanz and Vetter, 1999; Matthews and

Baker, 2004; de la Torre et al., 2000).

In all experiments a generic face model was built

from 150 subjects from the IBM ViaVoice AV data-

base (Neti et al., 2000), after aligning the data with

Procrustes Analysis(Cootes and Taylor, 2001b). Once

the FCA filters are learned, a multi-band represen-

tation is built for each of the 150 images, and PCA

is computed retaining 80% of the total energy. For

comparison purposes, multi-band PCA is also done

for other representations (e.g. Gabor, graylevel and

derivatives. In the experiments, we consider Gabor

Filters because of the good results reported by other

researchers in the area. In addition, these filters have

been shown to possess optimal localization proper-

ties in both spatial and frequency domain and thus are

well suited for tracking problems.

4.1 Understanding FCA

In order to compute FCA 150 subjects are selected

randomly from the IBM database. We also extract

2000 random patches from several images of the IBM

that do not contain faces. Using these training sam-

ples, we learn FCA filters at 5 different scales (3 ×3,

5 ×5, 7 ×7, 9×9 and 11×11 pixels), using eq. 5 for

different λ values.

VISAPP 2007 - International Conference on Computer Vision Theory and Applications

210

Given a new face image not present in the train-

ing set, we embedded it in a bigger background im-

age (see fig. 3). Then, we efficiently search over all

translations looking for a minimum of the subspace

model. Fig. 3 shows an example of the error surface

for each of the FCA bands, in comparison with the

error surfaces from normalized graylevel. As it can

be observed, Graylevel representation has several lo-

cal minima and the global minimum is misplaced. On

the other hand, the sum of the three FCA bands pro-

duces an error surface with a correctly-placed global

minimum.

Figure 3: Error surfaces for graylevel and each of the bands

for FCA.

4.2 Robustness to Noise/Illumination

This first experiment is designed to test the robust-

ness of FCA to noise and varying illumination condi-

tions. A subset of 100 subjects from the IBM data-

base (not in the training set) are randomly chosen and

embedded in background images. Then, random im-

pulsional noise is added (see fig. 4.a) and the error

in each location is efficiently computed (the orien-

tation and scale is known). To quantitatively com-

pare each filterbank, 3 different surface error statis-

tics have been calculated. Given a patch of 100 ×100

pixels around the optimal location of the face (which

is known beforehand), we compute the following sta-

tistics: 1) distance between the global minimum and

the face center, 2) distance between the correct mini-

mum and closest local minimum, 3) Amount of local

minima. The amount of local minima in an error sur-

face is calculated by counting those pixels with sign

change in x and y derivatives and positive values in

the second derivatives.

Figure 4: a) Original image and test image with added im-

pulsional noise. b) FCA(11,4) and Gabor (8,4).

Table 1 shows the average results for the described

error statistics for three representations: a set of four

11 ×11 pixels FCA filters (see fig. 4.a), the best-

performing 11 ×11 pixels Gabor filter (see fig. 4.b)

and the normalized graylevel. In all our experiments,

we report the results of the set of Gabor filters that

performs the best over several scales. A global mini-

mum is said to be correct if it falls within a region of

3 ×3 pixels around the theoretical minimum. All the

representations have similar accuracy; however, the

amount of local minima is very high in the grayscale,

and both grayscale and Gabor fail to provide a suffi-

ciently high global-closest minimum margin in com-

parison with FCA filters. These results are quite sta-

ble across spatial domains of the FCA filter sets and

have therefore been omitted in the interest of space.

Table 1: Experiments on noisy data. Statistics: (1) Per-

centage of correct global minimum. (2) distance between

correct and closest local minimum. (3) Average number of

local minima.

gray FCA

λ=0

FCA

λ=0.5

Gabor(8,4)

(1) 98 99 99 99

(2) 9.73 24.36 24.03 19.01

(3) 30.06 1.45 1.49 2.46

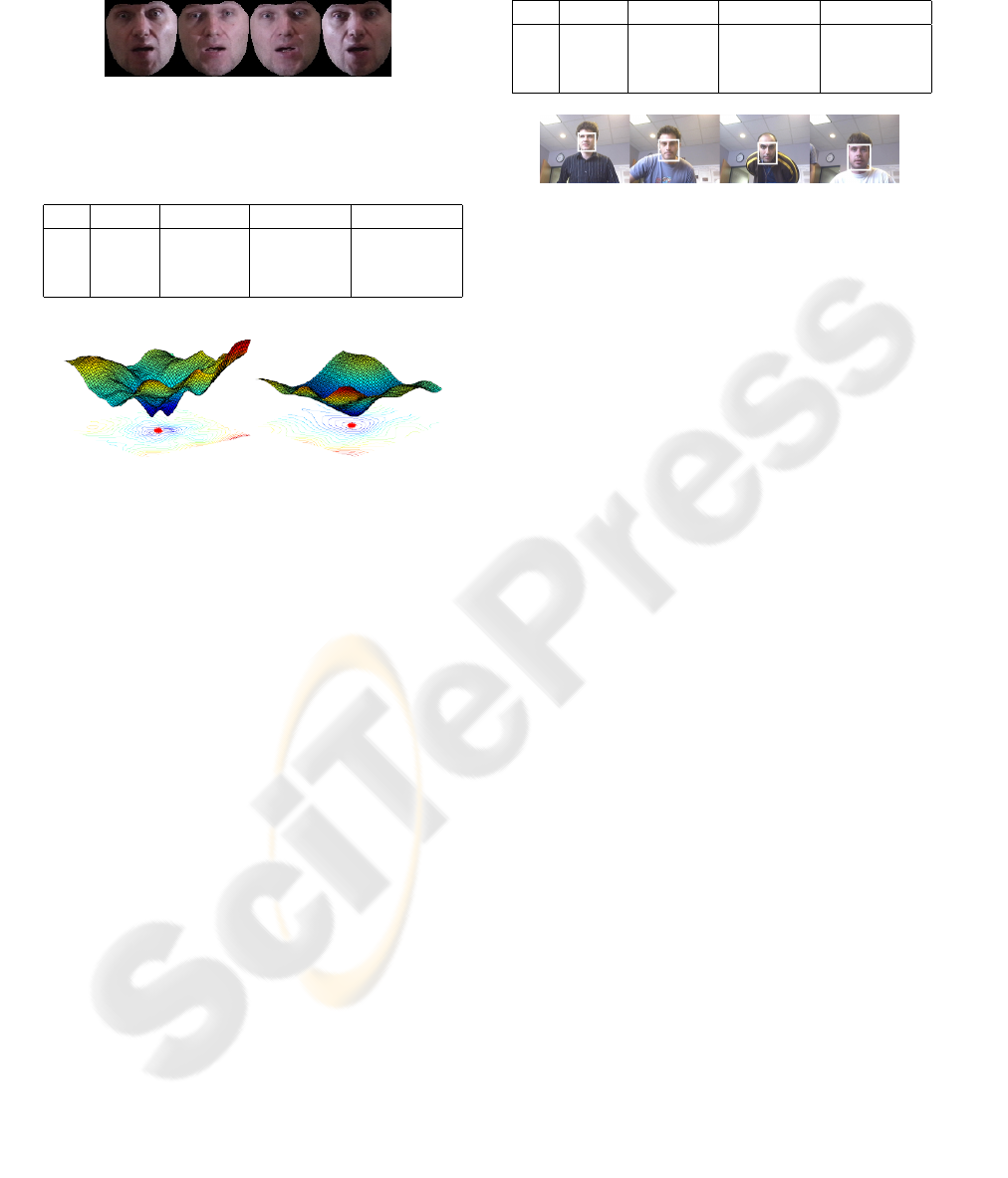

In the next experiment we test the robustness of

FCA to illumination changes. We take 4 images un-

der varying illumination conditions (see fig. 5) for 30

subjects from the PIE database(Sim et al., 2002) (to-

tal 120 images). We embedded this face into an image

and compute the error surfaces. Results from this ex-

periment can be seen in table 2. In this case, FCA

clearly outperforms any other technique in all three

statistics of the error function. The accuracy is higher

than grayscale and Gabor by 33% and 12% resp.,

while keeping the closest minimum 25.37% pixels

further away and the density of local minima is the

lowest one. It is worth noting that the best-performing

filters have been FCA

λ=0

(no background). Fig. 6

shows the error surface for a particular subject; as we

can observe, the properties of FCA are more desirable

ROBUST APPEARANCE MATCHING WITH FILTERED COMPONENT ANALYSIS

211

than graylevel or Gabor filters in terms of location and

density of local minima.

Figure 5: Changes in illumination on the PIE database.

Table 2: Experiments on illumination.(1),(2),(3) see table1.

gray FCA

λ=0

FCA

λ=0.5

Gabor(8,4)

(1) 41 74 73 62

(2) 14.59 26.37 26.04 19.68

(3) 3.28 1.4 1.41 1.92

Figure 6: Error surface for graylevel and FC A

λ=0

(11, 4).

The last experiment of this section explores FCA

performance on images taken in the lab. 10 images

have been collected in the lab (see Fig. 7) with an

inexpensive webcam, and roughly selecting the same

scale manually. Table 3 shows the detection results

of this experiment. As we can see FCA consistently

outperforms other representations that included Ga-

bor and graylevel in all metrics.

5 CONCLUSIONS AND FUTURE

WORK

In this paper, we have proposed FCA to build a multi-

band representation of the image to achieve more

robust fitting and detection with appearance mod-

els. FCA outperforms Gabor, oriented pair filters and

graylevel representations. Additionally, we have in-

troduced quantitative metrics for evaluating the error

surface. FCA has shown promising results, however

future work should consider the use of different con-

straints for the filters (e.g. vec(F)

T

1

f

x

×f

y

= 1). Also,

it will be worth to explore the use of some recent non-

linear filters.

Acknowledgements The work was partially sup-

ported by National Institute of Justice award 2005-IJ-

CX-K067 and NIMH grant. Thanks to Iain Matthews

and Simon Lucey for helpful discussions and com-

ments.

Table 3: Experiments on images taken in the lab.(1), (2), (3)

see table 1.

gray FCA

λ=0

FCA

λ=0.5

Gabor(8,4)

(1) 20 80 80 70

(2) 15.71 18.05 25.52 13.53

(3) 2 2 1.2 2.4

Figure 7: Some test images.

REFERENCES

Bischof, H., Wildenauer, H., and Leonardis, A. (2004). Il-

lumination insensitive recognition using eigenspaces.

Computer Vision and Image Understanding, 1(95):86

– 104.

Black, M. J. and Jepson, A. D. (1998). Eigentracking:

Robust matching and tracking of objects using view-

based representation. International Journal of Com-

puter Vision, 26(1):63–84.

Blanz, V. and Vetter, T. (1999). A morphable model for the

synthesis of 3d faces. In SIGGRAPH.

Cootes, T. and Taylor, C. (2001a). On representing edge

structure for model matching. In CVPR.

Cootes, T. F. and Taylor, C. J. (2001b). Statistical

models of appearance for computer vision. In

http://www.isbe.man.ac.uk/bim/refs.html.

de la Torre, F. and Black, M. J. (2003). Robust parame-

terized component analysis: theory and applications

to 2d facial appearance models. Computer Vision and

Image Understanding, 91:53 – 71.

de la Torre, F., Vitri

`

a, J., Radeva, P., and Melench

´

on, J.

(2000). Eigenfiltering for flexible eigentracking. In In-

ternational Conference on Pattern Recognition, pages

1118–1121.

Lewis, J. P. (1995). Fast normalized cross-correlation. In

Vision Interface.

Matthews, I. and Baker, S. (2004). Active appearance mod-

els revisited. International Journal of Computer Vi-

sion, 60(2):135–164.

Neti, C., Potamianos, G., Luettin, J., Matthews, I., Glotin,

H., Vergyri, D., Sison, J., Mashari, A., and Zhou,

J. (2000). Audio-visual speech recognition. Tech-

nical Report WS00AVSR, Johns Hopkins University,

CLSP.

Rao, R. and Ballard, D. (1995). An active vision architec-

ture based on iconic representations. Artificial Intelli-

gence, 12:441–444.

Sim, T., Baker, S., and Bsat, M. (2002). The cmu pose,

illumination, and expression (pie) database. In IEEE

Conference on Automatic Face and Gesture Recogni-

tion.

VISAPP 2007 - International Conference on Computer Vision Theory and Applications

212