ADAPTIVE AND COOPERATIVE SEGMENTATION SYSTEM FOR MONO- AND MULTI-COMPONENT IMAGES

Madjid Moghrani, Claude Cariou and Kacem Chehdi

TSI2M Laboratory, University of Rennes 1 / ENSSAT, 6 rue de Kerampont, 22300 Lannion, France

Keywords:

Image segmentation, Classification, Detection, Texture features, Adaptive segmentation, Multi-component imagery.

Abstract:

We present a cooperative and adaptive system for multi-component image segmentation, in which the segmentation methods are based on the classification of pixels represented by statistical features chosen according to the nature of the regions to segment. One original aspect of this system is its adaptive character: it takes the local context in the image into account to automatically adapt the segmentation process to the nature of specific regions, which can be uniform or textured. The detection of the regions’ nature is based on a classification of pixels with respect to the uniformity index of Haralick. A cooperative approach is then set up for the textured areas: it combines the results of different classification methods and chooses the best result at the pixel level using an assessment index. To validate the system and show the relevance of the adaptive procedure, experimental results are presented for the segmentation of synthetic and real multi-component CASI images.

1 INTRODUCTION

Segmentation is a central step in image processing because it largely conditions the quality of any subsequent interpretation. The literature shows that image segmentation is a difficult problem which is far from being solved, and numerous works are still dedicated to it (Leung et al., 2004), (Clausi and Deng, 2005), (Chung et al., 2005). Many existing methods provide satisfactory results only when applied to a particular image type, or require some prior knowledge – which is not always available – to perform well. Some existing segmentation methods are based on the merging of pixels with similar characteristics (Jain et al., 1999), some use a spatial-based strategy, and others use theoretical approaches such as fuzzy sets. Moreover, the increasing use of multi-modal and multispectral imaging systems makes this task more and more difficult.

It is generally recognized that a single segmentation technique cannot handle the full set of region primitives in an image, and that a cooperation of several techniques often provides better results.

In this communication, we present a parallel, adaptive and cooperative approach for the segmentation by classification of multi-component images. This approach is original in its adaptive abilities: it accounts for the local context in the image to reduce the complexity of the segmentation task. This is done by first separating the uniform (non-textured) regions from the textured regions, before each type of region is processed independently. This approach, previously developed in (Rosenberger and Chehdi, 2003) for mono-component images, is herein optimized and extended to multi-component images through a scalar scheme, i.e. a band-by-band processing.

This paper is organized as follows. In the next section, we describe our methodology and the proposed adaptive and cooperative approach in detail. We then present experiments and results obtained by applying our approach to synthetic and multispectral CASI images. A conclusion follows in the last section.


Moghrani M., Cariou C. and Chehdi K. (2007).

ADAPTIVE AND COOPERATIVE SEGMENTATION SYSTEM FOR MONO- AND MULTI-COMPONENT IMAGES.

In Proceedings of the Second International Conference on Signal Processing and Multimedia Applications, pages 200-203

DOI: 10.5220/0002141002000203

Copyright © SciTePress

2 THE DEVELOPED SYSTEM

The literature abounds with segmentation techniques which can be used for mono- and multi-component images. They can be categorized into structural methods, statistical methods and hybrid methods. In the present study, we have chosen the statistical approach of segmentation by unsupervised classification. The idea is to bring as little prior information as possible to the classification process, so that the approach preserves its generality and can handle regions of both low and high complexity.

However, the number of statistical features available for classification rapidly becomes a problem, because they provide a large quantity of information which is not always necessary to reach the segmentation goal. This problem can be even more critical when dealing with multi-component images, for which the number of potential features is very large with respect to the true quantity of information in the image.

The segmentation approach proposed herein can

avoid this problem, by splitting the sets of low and

high complexity regions (typically uniform and tex-

tured regions), in a way to extract adapted statisti-

cal features which will be then used in a classifica-

tion step. This corresponds to the adaptive skills of

our approach. Moreover, a cooperative procedure is

also considered next. Indeed, for the textured regions

which are detected, we set up a competitive scheme

involving several classification methods and an inter-

mediary fusion process. In this way, the processing of

multi-component images is simplified by a fusion of

the segmentation results which are obtained for each

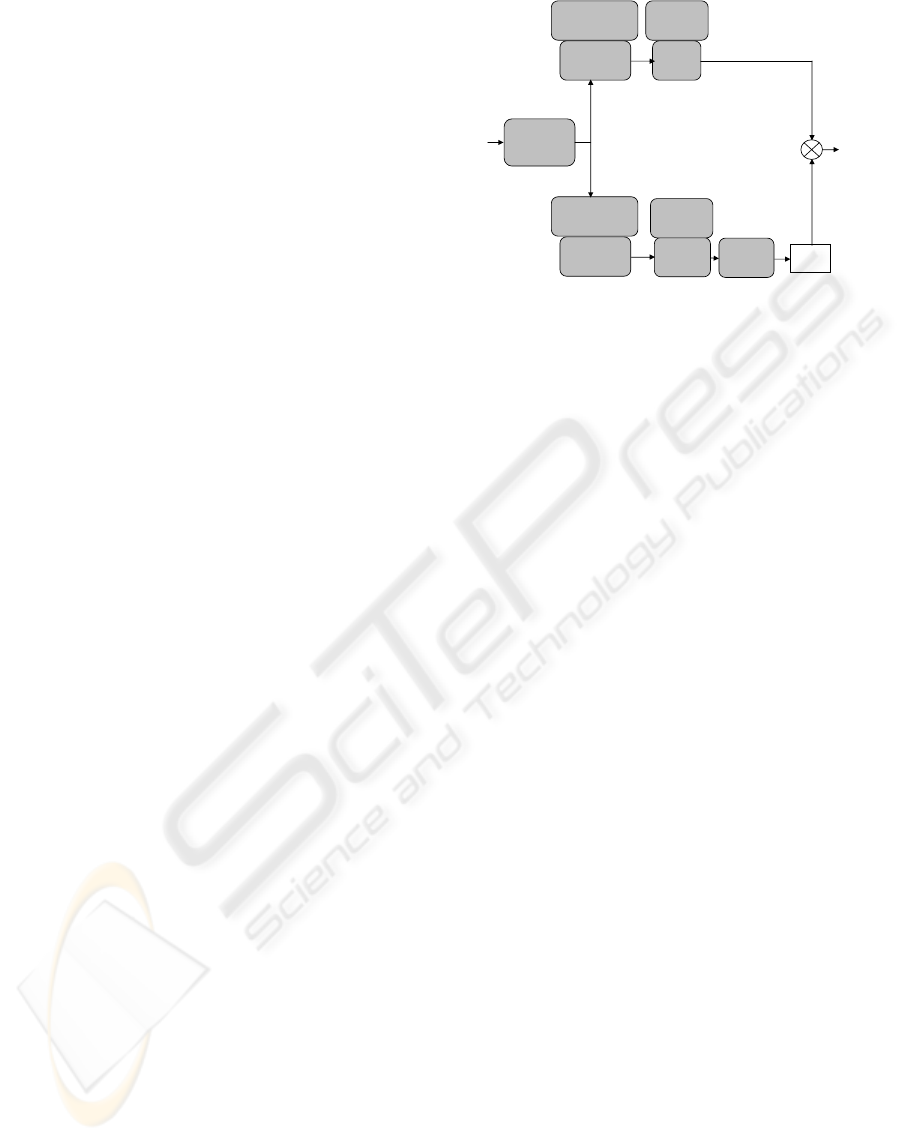

image component. An overview of the proposed sys-

tem for mono-component image is shown in Figure

1. This system is made of three modules: the first

one performs the detection of the regions’ nature, the

second one performs the classification of pixels based

on statistical features selected conditionally to the re-

gions nature, and the last one performs the fusion of

the conditional classifications.

2.1 Detection of the Regions’ Nature

Before performing the segmentation by pixel classification itself, we propose to first identify the low complexity, uniform regions which are present in a mono-component image. For this, we use the uniformity index of Haralick (Haralick, 1973), which is derived from the co-occurrence matrices. The uniformity index characterizes the frequency of occurrences of identical intensity levels between neighboring pixels. In our case, it is calculated by averaging the traces of the co-occurrence matrices computed at directions 0, 45, 90 and 135 degrees and at unit distance, after re-quantization of the pixel intensities to 32 levels.

Figure 1: Overview of the proposed parallel segmentation system. (Diagram blocks: Image; Detection of the regions’ nature; Feature extraction (average feature) and Classification (FCM); Feature extraction (textured features), Classification (FCM, LBG, K-means) and Evaluation; Fusion; Fusion operator; Segmentation result.)

To detect the regions’ nature, we classify the pixels by means of the fuzzy c-means (FCM) algorithm, using uniformity measurements as the feature vector of each pixel. This vector consists of uniformity indices computed in a multi-resolution scheme within five analysis windows of different sizes (3x3, 5x5, 9x9, 13x13 and 17x17) surrounding the pixel to which it is assigned.
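The construction of this feature vector can be sketched as follows. This is a minimal illustration in plain Python, not the authors' implementation. It assumes 8-bit input intensities, windows clipped at the image borders, and a normalization of each co-occurrence trace by the number of pixel pairs in the window (so that the index lies between 0 and 1); none of these details is specified in the text, and the function names are hypothetical.

```python
# Unit-distance displacements (row, col) for directions 0, 45, 90 and 135 degrees.
OFFSETS = [(0, 1), (-1, 1), (-1, 0), (-1, -1)]


def quantize(image, levels=32, max_value=255):
    """Re-quantize integer intensities in [0, max_value] to `levels` grey levels."""
    return [[min(p * levels // (max_value + 1), levels - 1) for p in row]
            for row in image]


def uniformity_index(q, r0, c0, half):
    """Average over the four directions of the normalized co-occurrence trace,
    i.e. the fraction of neighbouring pixel pairs with identical levels, inside
    the (2*half+1) x (2*half+1) window centred on (r0, c0)."""
    rows, cols = len(q), len(q[0])
    r_lo, r_hi = max(r0 - half, 0), min(r0 + half, rows - 1)  # assumption: clip
    c_lo, c_hi = max(c0 - half, 0), min(c0 + half, cols - 1)  # at the borders
    total = 0.0
    for dr, dc in OFFSETS:
        same = pairs = 0
        for r in range(r_lo, r_hi + 1):
            for c in range(c_lo, c_hi + 1):
                r2, c2 = r + dr, c + dc
                if r_lo <= r2 <= r_hi and c_lo <= c2 <= c_hi:
                    pairs += 1
                    same += q[r][c] == q[r2][c2]  # diagonal entry of the matrix
        total += same / pairs if pairs else 0.0
    return total / len(OFFSETS)


def uniformity_vector(q, r, c, sizes=(3, 5, 9, 13, 17)):
    """Multi-resolution feature vector: one uniformity index per window size."""
    return [uniformity_index(q, r, c, s // 2) for s in sizes]
```

On a perfectly flat image every pair of neighbours shares the same level, so each component of the vector equals 1. On a one-pixel checkerboard only the two diagonal directions contain identical pairs, so each component equals 0.5.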

To validate our approach, we have performed tests using 100 synthetic images made of three uniform regions (constant plus Gaussian noise with standard deviation σ = 5) and two textured regions using Brodatz textures (Brodatz, 1966). The average correct detection rate was found to be greater than 94%. Figure 2 shows a result of the detection of the regions’ nature on two sample synthetic images. The detection results are visually coherent, the uniform and textured regions being correctly identified for the three considered bands.

2.2 Adaptive Partitioning of Uniform and Textured Regions

2.2.1 Features Extraction

This module extracts the features which are adapted to the nature of the region under consideration. For uniform regions, we have used a single feature, the local average intensity computed within a 3x3 window, which we assume to be a sufficient statistic to describe low complexity regions. For textured regions, we have used 23 classical texture features, namely the first four FOS (first order statistics: mean, variance, skewness, kurtosis) and a set of 19 texture features obtained after reduction of 30 features by the method described in (Rosenberger and Cariou, 2001).

Figure 2: Detection of the regions’ nature. Column 1: original images; column 2: detection result (textured regions are in black).
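As an illustration of the two simplest feature families, the sketch below computes the 3x3 local average used for uniform regions and the four first order statistics used for textured regions. It is only a sketch under stated assumptions: the window is clipped at the image borders, the variance is the population variance, and the kurtosis is the non-excess fourth standardized moment. The 19 reduced texture features are not reproduced here since the text does not list them. The function names are hypothetical.

```python
from statistics import fmean


def window(image, r, c, half):
    """Pixel values of the (2*half+1)^2 window centred on (r, c), clipped at borders."""
    rows, cols = len(image), len(image[0])
    return [image[i][j]
            for i in range(max(r - half, 0), min(r + half, rows - 1) + 1)
            for j in range(max(c - half, 0), min(c + half, cols - 1) + 1)]


def local_mean(image, r, c):
    """Single feature for uniform regions: average intensity in a 3x3 window."""
    return fmean(window(image, r, c, 1))


def first_order_stats(values):
    """Mean, variance, skewness and kurtosis of a list of intensities."""
    n = len(values)
    mean = fmean(values)
    var = sum((v - mean) ** 2 for v in values) / n
    if var == 0:
        # Constant window: higher-order moments are undefined, return zeros.
        return mean, 0.0, 0.0, 0.0
    std = var ** 0.5
    skew = sum((v - mean) ** 3 for v in values) / (n * std ** 3)
    kurt = sum((v - mean) ** 4 for v in values) / (n * var ** 2)
    return mean, var, skew, kurt
```

For a textured pixel, `first_order_stats(window(image, r, c, half))` would give the four FOS over a window whose size (`half`) is a free parameter of this sketch.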

2.2.2 Adaptive Classification

To obtain an automatic segmentation system, we have chosen to perform the segmentation via an unsupervised classification approach. For this, we have selected three classifiers, namely the classical k-means (MacQueen, 1967), the fuzzy c-means (FCM) (Bezdek, 1981), and a modified version of the Linde-Buzo-Gray classifier (LBG) (Linde et al., 1980) described in (Rosenberger and Chehdi, 2003).

The choice of these techniques is motivated by their good behavior for the unsupervised classification of large datasets, which is of interest, for instance, in multispectral image segmentation. To simplify our system, we have used only the FCM algorithm to classify the pixels which belong to the uniform regions previously detected.

For the textured regions, we have chosen to set up a competition between the three retained classifiers. This means that the pixels which belong to the textured regions are classified in parallel with the three algorithms, providing three different classification results and corresponding segmentations. The resulting partitions are then analyzed through an assessment procedure to keep the most coherent ones.
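Of the three classifiers, the FCM is used throughout the system (regions’ nature detection, uniform regions, textured regions). A minimal sketch of the standard fuzzy c-means iteration is given below for scalar features, such as the local average of uniform regions. The fuzziness exponent `m = 2`, the fixed iteration count and the user-supplied initial centres are assumptions of this sketch, not settings reported in the text.

```python
def fuzzy_c_means(xs, centers, m=2.0, iters=50):
    """Minimal fuzzy c-means for scalar features.

    xs: list of feature values; centers: initial cluster centres.
    Returns (centers, u) where u[k][i] is the membership of xs[k] in cluster i,
    as computed during the last iteration."""
    centers = list(centers)
    exponent = 2.0 / (m - 1.0)
    u = []
    for _ in range(iters):
        # Membership update: u_ki = 1 / sum_j (d_ki / d_kj)^(2/(m-1)).
        u = []
        for x in xs:
            d = [abs(x - v) for v in centers]
            if 0.0 in d:
                # The sample coincides with a centre: crisp membership.
                hit = d.index(0.0)
                u.append([1.0 if i == hit else 0.0 for i in range(len(d))])
            else:
                u.append([1.0 / sum((di / dj) ** exponent for dj in d)
                          for di in d])
        # Centre update: mean of the samples weighted by u^m.
        centers = [
            sum(u[k][i] ** m * xs[k] for k in range(len(xs)))
            / sum(u[k][i] ** m for k in range(len(xs)))
            for i in range(len(centers))
        ]
    return centers, u


def harden(u):
    """Assign each sample to the cluster with the highest membership."""
    return [row.index(max(row)) for row in u]
```

For example, `fuzzy_c_means([10, 11, 12, 100, 101, 102], [0.0, 50.0])` separates the two groups of values, and `harden` turns the memberships into a pixel labelling.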

2.2.3 Assessment of Classification Results

Assessing a classification result requires a measure of the coherence of the result. In our system, we have adopted the intra-class disparity presented in (Rosenberger and Chehdi, 2003) as a coherence measure. We have tested this step on a set of 10 synthetic images (with ground truth) similar to those presented in Figure 2. More precisely, we have computed the correct classification rate and the corresponding assessment index obtained after processing the textured regions. The magnitudes of the correlation ratios between the two variables (FCM: 0.52; k-means: 0.78; LBG: 0.85) are high enough to motivate the use of the intra-class disparity as a measure of the validity of the clusters provided by the classification method.
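The kind of check described above can be sketched as follows, assuming that the reported values are magnitudes of linear (Pearson) correlation coefficients between the assessment index and the correct classification rate; the text does not state which correlation measure was used. The helper name is hypothetical.

```python
from math import sqrt


def pearson(xs, ys):
    """Linear correlation coefficient between two equally long sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sqrt(sum((x - mx) ** 2 for x in xs))
    sy = sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)
```

Since a lower intra-class disparity is expected to go with a higher correct classification rate, the coefficient itself would be negative in a setting like this one, which is consistent with magnitudes being reported.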

2.2.4 Fusion of Parallel Segmentation Results

Fusion is an important task in our system in that it must retain the most reliable among the intermediary results. Many fusion methods can be considered (Bloch, 2003), but they generally require some prior knowledge or information which may not be available to the user in practice.

In this work, we introduce a fusion method for the textured regions, for which competitive classifications are set up. The fusion is based upon the assessment of the clusters derived from the previous step, and is very simple to implement. For every pixel within the textured regions, the output classification is taken as the result of the classification method which provided the best assessment index (i.e. the lowest intra-class disparity) among the three classification results (given by k-means, FCM, and LBG). Next, the fusion between the uniform and textured regions is performed by simply mapping the corresponding segmentations into a final result.

In the case of multispectral images, the classification results obtained for each spectral band are fused into a final segmentation in a similar way, by accounting for the assessment index available for every region in each band.
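The per-pixel selection rule for textured regions can be sketched as below. The intra-class disparity of (Rosenberger and Chehdi, 2003) is not defined in this paper, so the sketch substitutes a stand-in, the variance of a scalar feature within each class; this substitution, the function names and the encoding of fused labels as (method, label) pairs are all assumptions made for illustration.

```python
def intra_class_disparity(features, labels):
    """Stand-in assessment index (NOT the index of the cited work):
    variance of the feature within each class. Lower means more coherent."""
    groups = {}
    for f, lab in zip(features, labels):
        groups.setdefault(lab, []).append(f)
    scores = {}
    for lab, vals in groups.items():
        mean = sum(vals) / len(vals)
        scores[lab] = sum((v - mean) ** 2 for v in vals) / len(vals)
    return scores


def fuse(features, results):
    """results: {method name: list of labels, one per textured pixel}.

    For each pixel, keep the label given by the method whose class containing
    that pixel has the lowest disparity. Labels of different methods are not
    aligned, so the fused label is the pair (method, label)."""
    scores = {m: intra_class_disparity(features, labels)
              for m, labels in results.items()}
    fused = []
    for k in range(len(features)):
        best = min(results, key=lambda m: scores[m][results[m][k]])
        fused.append((best, results[best][k]))
    return fused
```

With this stand-in, ties go to the first method listed, and a class containing a single pixel has zero disparity and therefore always wins; a real assessment index would need to handle such degenerate classes.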

3 EXPERIMENTAL RESULTS

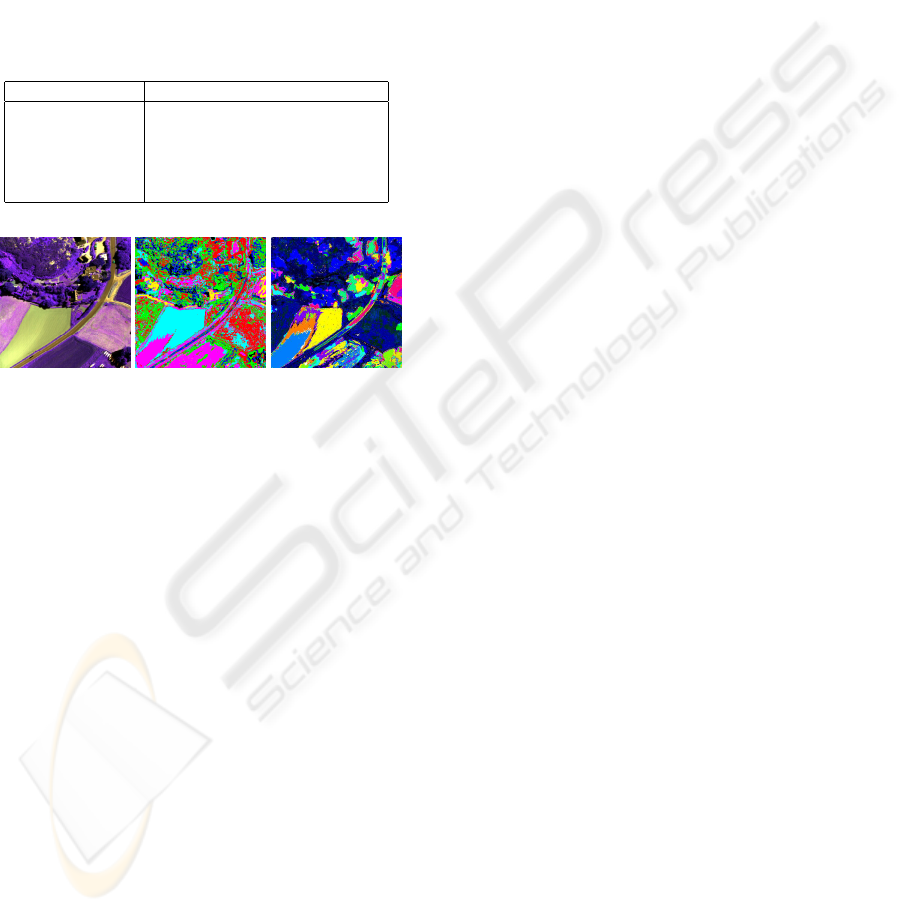

To validate our approach, we have used three synthetic images from the image database described above, and remote sensing images acquired by a CASI multispectral sensor. In the case of synthetic images, Table 1 gives the mean rate of correct regions’ nature detection (RND) as well as the mean final classification rate obtained with such a prior detection. These results show the relevance and the efficiency of our approach of prior identification of the regions’ nature when compared to the blind approach, i.e. the use of the same classifier (here the FCM) to segment the whole image: the average final classification rate is 96.69% with the RND against 73.48% without it. Figure 3 depicts the segmentation results obtained for a 3-band CASI image. In this case, the RND and the different adapted classification methods are applied band by band, then a fusion procedure takes place at the end. The different structures (roads, fields, trees) are correctly emphasized. However, the final fusion result shows an over-segmentation of the areas, which reveals the richness of the spectral information and the complementarity of this information across the spectral bands. Although no ground truth was available to assess this result, one can notice the improvement in the classification induced by our methodology in comparison with the blind classification.

Table 1: Classification rates for synthetic images.

|  | Average classification rate (%) |
| --- | --- |
| RND | 99.05 |
| uniform regions | 98.83 |
| textured regions | 96.91 |
| final | 96.69 |
| without RND | 73.48 |

Figure 3: Classification of a multi-band CASI image. Left: original 3-band image; Middle: fusion result without the RND; Right: fusion result with prior RND.

4 CONCLUSION

In this communication, we have presented a cooperative and adaptive system dedicated to the segmentation of mono- and multi-component images. Firstly, we have shown that a prior partitioning of a mono-component image into two distinct classes is important to correctly adapt the segmentation methods to the more or less complex nature of the local information. Moreover, the reduction in computational load is significant because the feature extraction is drastically reduced on uniform regions. Secondly, we have introduced a cooperative process between classification methods which makes it possible to select the best results from each, based on an assessment index of cluster coherence. Our preliminary results on synthetic images are encouraging, and far better than those of a direct classification approach without prior identification of the regions’ nature. Finally, we have extended this methodology to multi-component images and tested it on a real CASI image. Once more, the results are coherent and better than those obtained by a direct approach, although our method still needs to be validated on a significant set of real images with available ground truth.

ACKNOWLEDGEMENTS

This work is supported by the European Union and co-financed by the ERDF and the Regional Council of Brittany through the Interreg3B project number 190 PIMHAI.

REFERENCES

Bezdek, J. (1981). Pattern recognition with fuzzy objective function algorithms. Plenum Press, New York.

Bloch, I. (2003). Fusion d’informations en traitement du signal et des images. Lavoisier, Paris (in French).

Brodatz, P. (1966). Textures: A Photographic Album for Artists and Designers. Dover Publications, New York.

Chung, R. H. Y., Yung, N. H. C., and Cheung, P. Y. S. (2005). An efficient parameterless quadrilateral-based image segmentation method. IEEE Transactions on Pattern Analysis and Machine Intelligence, 27(9):1446–1458.

Clausi, D. and Deng, H. (2005). Design-based texture feature fusion using Gabor filters and co-occurrence probabilities. IEEE Transactions on Image Processing, 14:925–936.

Haralick, R. (1973). Textural features for image classification. IEEE Transactions on Systems, Man, and Cybernetics, 3(6):610–621.

Jain, A. K., Murty, M., and Flynn, P. (1999). Data clustering: a review. ACM Computing Surveys, 31(3):265–322.

Leung, S. H., Wang, S. L., and Lau, W. H. (2004). Lip image segmentation using fuzzy clustering incorporating an elliptic shape function. IEEE Transactions on Image Processing, 13:63–71.

Linde, Y., Buzo, A., and Gray, R. (1980). An algorithm for vector quantizer design. IEEE Transactions on Communications, 28(1):84–94.

MacQueen, J. (1967). Some methods for classification and analysis of multivariate observations. Proceedings of the Fifth Berkeley Symposium on Mathematical Statistics and Probability, 1:281–297.

Rosenberger, C. and Cariou, C. (2001). Contribution to texture analysis. In Proc. Intern. Conf. on Quality Control and Artificial Vision (QCAV 2001), Le Creusot, France.

Rosenberger, C. and Chehdi, K. (2003). Unsupervised segmentation of multi-spectral images. In Proc. Intern. Conf. on Advanced Concepts for Intelligent Vision Systems (ACIVS 2003), Ghent, Belgium.
