RECURRENT NEURAL NETWORKS APPROACH TO THE

DETECTION OF SQL ATTACKS

Jaroslaw Skaruz

Institute of Computer Science, University of Podlasie, Sienkiewicza 51, 08-110 Siedlce, Poland

Franciszek Seredynski

Institute of Computer Science, Polish Academy of Sciences, Ordona 21, 01-237 Warsaw, Poland

Pascal Bouvry

Faculty of Sciences, Technology and Communication, University of Luxembourg, 6 rue Coudenhove Kalergi, Luxembourg

Keywords:

Recurrent neural network, Elman, Jordan, database security, anomaly detection.

Abstract:

In the paper we present a new approach based on application of neural networks to detect SQL attacks. SQL

attacks are those attacks that take advantage of using SQL statements to be performed. The problem of

detection of this class of attacks is transformed to time series prediction problem. SQL queries are used as a

source of events in a protected environment. To differentiate between normal SQL queries and those sent by

an attacker, we divide SQL statements into tokens and pass them to our detection system, which predicts the

next token, taking into account previously seen tokens. In the learning phase tokens are passed to recurrent

neural network (RNN) trained by backpropagation through time (BPTT) algorithm. Teaching data are shifted

by one token forward in time with relation to input. The purpose of the testing phase is to predict the next

token in the sequence. All experiments were conducted on Jordan and Elman networks using data gathered

from PHP Nuke portal. Experimental results show that the Jordan network outperforms the Elman network

predicting correctly queries of the length up to ten.

1 INTRODUCTION

Large number of Web applications, especially those

deployed for companies to e-business purpose involve

data integrity and confidentiality. Such applications

are written in script languages like PHP embedded in

HTML allowing to establish connection to databases,

retrieving data and putting them in WWW site. Be-

sides that all Web contents is often based on the

retrieved data, a database also stores sensitive user

typed data like credit card numbers and personal in-

formation. Security violations consist in not autho-

rized access and modification of data in the database.

SQL is one of languages used to manage data in

databases. Its statements can be one of sources of

events for potential attacks. One of the ideas to detect

an intruder using SQL statements is to build a profile

of normal behavior and in detection stage compare it

with observed events.

In the literature there are some approaches to in-

trusion detection in Web applications. In (Valeur

et al., 2005) the authors developed anomaly-based

system that learns the profiles of the normal database

access performed by web-based applications using a

number of different models. A profile is a set of

models, to which parts of SQL statement are fed to

in order to train the set of models or to generate an

anomaly score. During training phase models are

built based on training data and anomaly score is cal-

culated. For each model, the maximum of anomaly

score is stored and used to set an anomaly threshold.

During detection phase, for each SQL query anomaly

score is calculated. If it exceeds the maximum of

anomaly score evaluated during training phase, the

query is considered to be anomalous. Decreasing

false positive alerts involves creating models for cus-

tom data types for each application to which this sys-

tem is applied.

Besides that work, there are some other works

on detecting attacks on a Web server which con-

191

Skaruz J., Seredynski F. and Bouvry P. (2007).

RECURRENT NEURAL NETWORKS APPROACH TO THE DETECTION OF SQL ATTACKS.

In Proceedings of the Ninth International Conference on Enterprise Information Systems - AIDSS, pages 191-197

DOI: 10.5220/0002352901910197

Copyright

c

SciTePress

stitutes a part of infrastructure for Web applica-

tions. In (Kruegel and Vigna, 2003) detection sys-

tem correlates the server-side programs referenced by

clients queries with the parameters contained in these

queries. It is similar approach to detection to the

previous work. The system analyzes HTTP requests

and builds data model based on attribute length of re-

quests, attribute character distribution, structural in-

ference and attribute order. In a detection phase built

model is used for comparing requests of clients.

In (Almgren et al., 2000) logs of Web server are

analyzed to look for security violations. However,

the proposed system is prone to high rates of false

alarm. To decrease it, some site-specific available in-

formation should be taken into account which is not

portable.

In this work we present a new approach to in-

trusion detection in Web application. Rather than

building profiles of normal behavior we focus on a

sequence of tokens within SQL statements observed

during normal use of application. Two architectures

of RNN are used to encode stream of such SQL state-

ments.

The paper is organized as follows. The next sec-

tion discusses SQL attacks. In section 3 we present

two architectures of RNN. Section 4 shows training

and testing data used for experiments. Next, section

5 contains experimental results. Last section summa-

rizes results and shows possible future work.

2 SQL ATTACKS

2.1 SQL Injection

SQL injection attack consists in such a manipulation

of an application communicating with a database, that

it allows a user to gain access or to allow it to modify

data for which it has not privileges. To perform an

attack in the most cases Web forms are used to inject

part of SQL query. Typing SQL keywords and con-

trol signs an intruder is able to change the structure of

SQL query developed by a Web designer. It is pos-

sible because parts of SQL statements depend on the

data typed by a user. If variables used in SQL query

are under control of a user, he can modify SQL query

which will cause change of its meaning. Consider an

example of a poor quality code written in PHP pre-

sented below.

$connection=mysql_connect();

mysql_select_db("test");

$user=$HTTP_GET_VARS[’username’];

$pass=$HTTP_GET_VARS[’password’];

$query="select * from users where

login=’$user’ and password=’$pass’";

$result=mysql_query($query);

if(mysql_num_rows($result)==1)

echo "authorization successful"

else

echo "authorization failed";

The code is responsible for authorizing users. User

data typed in a Web form are assigned to variables

user and pass and then passed to the SQL statement.

If retrieved data include one row it means that a user

filled in the form login and password the same as

stored in the database. Because data sent by a Web

form are not analyzed, a user is free to inject any

strings. For example, an intruder can type: ”’ or 1=1

–” in the login field leaving the password field empty.

The structure of SQL query will be changed as pre-

sented below.

$query="select * from users where login

=’’ or 1=1 --’ and password=’’";

Two dashes comments the following text. Boolean

expression 1=1 is always true and as a result user will

be logged with privileges of the first user stored in the

table users.

2.2 Proposed Approach

The way we detect intruders can be easily trans-

formed to time series prediction problem. Accord-

ing to (Nunn and White, 2005) a time series is a se-

quence of data collected from some system by sam-

pling a system property, usually at regular time inter-

vals. One of the goal of the analysis of time series

is to forecast the next value in the sequence based on

values occurred in the past. The problem can be more

precisely formulated as follows:

s

t−2

, s

t−1

, s

t

−→ s

t+1

, (1)

where s is any signal, which is dependent on a solv-

ing problem and t is a current moment in time. Given

s

t−2

, s

t−1

, s

t

, we want to predict s

t+1

. In the prob-

lem of detection SQL attacks, each SQL statement is

divided into some signals, which we further call to-

kens. The idea of detecting SQL attacks is based on

their key feature. SQL injection and XSS attacks in-

volve modification of SQL statement, which lead to

the fact, that the sequence of tokens extracted from a

modified SQL statement is different than the sequence

of tokens derived from a legal SQL statement. For

example, let S means recorded SQL statement and

T

1

, T

2

, T

3

, T

4

, T

5

tokens of this SQL statement. The

original sequence of tokens is as follows:

T

1

, T

2

, T

3

, T

4

, T

5

. (2)

ICEIS 2007 - International Conference on Enterprise Information Systems

192

If an intruder performs an attack, the form of SQL

statement changes. Transformation of the modified

statement to tokens results in different tokens than

these shown in eq.(2). The example of a sequence of

tokens related to modified SQL query is as follows:

T

1

, T

2

, T

mod3

, T

mod4

, T

mod5

. (3)

Tokens number 3, 4, 5 are modified due to an intruder

activity. We assume that intrusion detection system

trained on original SQL statements is able to predict

the next token based on the tokens from the past. If

the token T

1

occurs, the system should predict token

T

2

, next token T

3

is expected. In case of attacks token

T

mod3

occurs which is different than T

3

, which means

that an attack is performed.

Various techniques have been used to analyze time

series (Kendall and Ord, 1999; Pollock, 1999). Be-

sides statistical methods, RNNs have been widely

used for that problem. In our study presented in this

paper we selected two RNNs, the Elman and the Jor-

dan networks.

3 RECURRENT NEURAL

NETWORKS

3.1 General Issues

In comparison to feedforward neural networks RNN

have feedback connections which provide dynamics.

When they process information, output neurons signal

depends on input and activation of neurons in the pre-

vious steps of teaching RNN. However, RNNs suffer

vanishing gradient (Lin et al., 1996). This is the main

reason why gradient-descent algorithms are not suffi-

ciently powerful to map relationship between output

of RNN and input that occur much earlier in time.

In (Lin et al., 1996) the authors compared the El-

man network with neural network based on NARX

model. The model assumes that output neuron signals

from n times in back are passed to the hidden layer

neurons. This partially overcome vanishing gradi-

ent effect. Some researchers introduce modifications

to known architectures of RNN to improve teach-

ing process. In (Drake and Miller, 2002) additional

self-feedback connection to context layer neurons of

the Elman network was added. Experimental results

show that error of network when weight of additional

connection is fixed is smaller than error of the Elman

network.

3.2 RNN Architectures

There are some differences between the Elman and

the Jordan networks. The first is that input signal

for context layer neurons comes from different layers

and the second is that Jordan network has additional

feedback connection in the context layer. While in

the Elman network the size of the context layer is the

same as the size of the hidden layer, in the Jordan net-

work the size of output layer and context layer is the

same. In both networks recurrent connections have

fixed weight equal to 1.0. Networks were trained by

BPTT and the following equations are applied for the

Elman network:

x(k) = [x

1

(k), ..., x

N

(k), v

1

(k− 1), ..., v

K

(k− 1)], (4)

u

j

(k) =

N+K

∑

i=1

w

(1)

ij

x

i

(k), v

j

(k) = f(u

j

(k)), (5)

g

j

(k) =

K

∑

i=1

w

(2)

ij

v

i

(k), y

j

(k) = f(g

j

(k)), (6)

E(k) = 0.5

M

∑

i=1

[y

i

(k) − d

i

(k)]

2

, (7)

δ

(o)

i

(k) = [y

i

(k) − d

i

(k)] f

‘

(g

i

(k)), (8)

δ

(h)

i

(k) = f

‘

(u

i

(k))

K

∑

j=1

δ

(o)

j

(k)w

(2)

ij

, (9)

w

ij

(k+ 1)

(2)

= w

ij

(k)

(2)

+

sql−length

∑

k=1

[v

i

(k)δ

(o)

j

(k)],

(10)

w

ij

(k+ 1)

(1)

= w

ij

(k)

(1)

+

sql−length

∑

k=1

[x

i

(k)δ

(h)

j

(k)].

(11)

In the equations (4)-(11), N, K, M stand for the size

of the input, hidden and output layers, respectively.

x(k) is an input vector, u

j

(k) and g

j

(k) are input sig-

nals provided to the hidden and output layer neurons.

Next, v

j

(k) and y

j

(k) stand for the activations of the

neurons in the hidden and output layer at time k, re-

spectively. The equation (7) shows how RNN error

is computed, while neurons error in the output and

hidden layers are evaluated according to (8) and (9),

respectively. Finally, in the last step values of weights

are changed using formulas (10) for the output layer

and (11) for the hidden layer.

3.3 Training

The training process of RNN is performed as follows.

The tokens of the SQL statement become input of a

network. Activations of all neurons are computed.

RECURRENT NEURAL NETWORKS APPROACH TO THE DETECTION OF SQL ATTACKS

193

Table 1: A part of a list of tokens and their indexes.

token index

... ...

WHERE 7

... ...

FROM string 28

... ...

SELECT string 36

... ...

string=number 47

... ...

INSERT INTO 54

a)

b)

vector 1

vector 2

vector 3

vector 4

SELECT user_password FROM nuke_users WHERE user_id = 2

token 7token 36 token 28 token 47

000000000000000000000000000000000001000000000000000000

000000000000000000000000000100000001000000000000000000

000000100000000000000000000100000001000000000000000000

000000100000000000000000000100000001000000000010000000

Figure 1: Preparation of input data for a neural network:

analysis of a statement in terms of tokens (a), input neural

network data corresponding to the statement (b).

Next, an error of each neuron is calculated. These

steps are repeated until last token has been presented

to the network. Next, all weights are evaluated and

activation of the context layer neurons is set to 0. For

each input data, teaching data are shifted by one to-

ken forward in time with relation to input. Training

a network in such a way ensures that it will posses

prediction capability.

Training data consists of 276 SQL queries without

repetition. The following tokens are considered: key-

words of SQL language, numbers, strings and com-

binations of these elements. We used the collection

of SQL statements to define 54 distinct tokens. Each

token has a unique index. The table 1 shows selected

tokens and their indexes. The indexes are used for

preparation of input data for neural networks. The in-

dex e.g. of a keyword WHERE is 7. The index 28

points to a combination of keyword FROM and any

string. The token with index 36 relates to a grammat-

ical link between SELECT and any string. Finally,

when any string is compared to any number within a

SQL query, the index of a token equals to 47. Figure 1

presents an example of SQL statement, its represen-

tation in the form of tokens and related binary four

inputs of a network. SQL statement is encoded as k

vectors, where k is the number of tokens constituting

the statement (see figure 1). The number of neurons

on the input layer is the same as the number of defined

tokens. Networks have 55 neurons in the output layer.

54 neurons correspond to each token similarly to the

input layer but the neuron 55 is included to indicate

that just processing input data vector is the last within

a SQL query. Training data, which are compared to

the output of the network have value either equals to

0.1 or 0.9. If a neuron number n in the output layer

has small value then it means that the next processing

token can not have index n. On the other hand, if out-

put neuron number n has value of 0.9, then the next

token in a sequence should have index equals to n.

At the beginning, SQL statement is divided into

tokens. The indexes of tokens are: 36, 28, 7 and 47.

Each row is an input vector for RNN (see figure 1). In

the figure 1 the first token that has appeared is 36. As a

consequence, in the first step of training output signal

of all neurons in the input layer is 0 except neuron

number 36, which has value of 1. Next input vectors

indicate current indexes of tokens and the index of

a token that has been processed by RNN. The next

token in a sequence has index equals to 28. It follows

that only neurons 36 and 28 have output signal equal

to 1. The next index of a token is 7, which means

that neurons: 36, 28 and 7 send 1 and all remaining

neurons send 0. Finally, neurons 36, 28, 7, 47 have

activation signal equal to 1. In that moment weights

of RNN are updated and the next SQL statement is

considered.

4 TRAINING AND TESTING

DATA

We evaluated our system using data collected from

PHP Nuke portal(phpnuke.org, ). It is well known

application with many holes in older versions. Sim-

ilarly to (Valeur et al., 2005) we installed this portal

in version 7.5, which is susceptible to some SQL in-

jection attacks. Data without attacks were gathered

by visiting the Web sites using a browser. Each time

a Website is downloaded by a browser either a link

is clicked or filled forms are executed, SQL queries

are sent to a database and logged to a file simulta-

neously. During operation of the portal we collected

nearly 100000 SQL statements. Next, based on this

collection we defined tokens, which are keywords of

SQL and data types. The set of all SQL queries was

divided into 12 subsets, each containing SQL state-

ments of different length. 80% of each data set was

used for training and remaining data used for examin-

ing generalization. Teaching data are shifted one time

forward in time. Data with attacks are the same as

reported in (Valeur et al., 2005).

ICEIS 2007 - International Conference on Enterprise Information Systems

194

0

20

40

60

80

100

2 4 6 8 10 12

RMS

% no. of wrong predicted SQL queries

Index of training data subset

Jordan’s network performance

Jordan RMS

Jordan Verification

Figure 2: Error and number of wrong predicted SQL queries

for each subset of data for Jordan’s network.

5 EXPERIMENTAL RESULTS

Experimental study was divided into four stages. In

the first one, we wanted to evaluate the best param-

eters of both RNNs and learning algorithm. These

features are: a number of neurons in the hidden layer,

α used in momentum, activation function of neurons

in the hidden and output layer, η that determines the

extent of weights update. For the Elman network all

neurons in the hidden layer have sigmoidal activation

function while all neurons in the output layer have

tanh function. For the Jordan network tanh function

was chosen for the hidden layer and sigmoidal func-

tion for the output layer. In the most cases η (training

coefficient) does not exceed 0.2 and α value (used in

momentum) is less than 0.2. Ranges 2-4, 13-14, 15-

16 and 17-20 of the data subset (see Table 2) means

that these subsets include SQL queries of length be-

tween 2 and 4, 13 and 14, 15 and 16, 17 and 20.

All remaining subsets contain fixed length statements.

The number of neurons in the hidden layer was also

evaluated during experiments - 58 neurons were used.

In the second phase of the experimental study we

trained 12 RNNs, one for each training data subset.

From the beginning of the training, the error of the

Jordan network was much smaller than error of the

Elman network. In the next a few epochs the error of

both networks decreased quickly but the Jordan net-

work error remained much smaller than the Elman

network error. Figures 2 and 3 show how error of

networks changes for all subsets of SQL queries. The

figures also depict how well the networks are verified.

Here, a statement is considered as well predicted if for

all input vectors, all neurons in the output layer have

values according to training data. All values pre-

sented in figures are averaged on 10 runs of RNNs.

One can see that nearly for all data subsets the Jor-

0

20

40

60

80

100

2 4 6 8 10 12

RMS

% no. of wrong predicted SQL queries

Index of training data subset

Elman’s network performance

Elman RMS

Elman Verification

Figure 3: Error and number of wrong predicted SQL queries

for each subset of data for Elman’s network.

# SQL statement

1 7

2 2

3 1

4 2

5 2

6 1

7 2

8 0

# index of input vector number of errors

Figure 4: RNN output for an attack.

dan network outperforms the Elman one. Only for

data subsets 11 and 12 (see table 2) the error of the

Jordan network is greater than the error of the Elman

network. Despite of this for all data subsets percent-

age number of wrong predicted SQL queries for the

Jordan network is less than the number of wrong pre-

dicted SQL statements for the Elman network. The

Jordan network is able to predict all tokens of 10

length statements (20.6% false alarms).

In the third part of experiments we checked if

RNNs correctly detect attacks from section 4. Each

experiment was conducted using trained RNNs from

the second stage. Figure 4 presents the typical RNN

output if an attack is performed. The left column

depicts the number of input vector for RNN, while

the right column shows the number of cases in which

the index of the token indicated by network output

is different than the index of the next processed by

RNN token. What is typical for each network is that

nearly each output vector of a network has a few er-

rors. This phenomenon is present for all attacks used

in this work. Based on that observation, a decision

about good or bad verification and generalization of a

RECURRENT NEURAL NETWORKS APPROACH TO THE DETECTION OF SQL ATTACKS

195

# SQL statement

# index of input vector num. of errors−ver num. of errors−gen

1 0 0

2 1 1

3 1 2

4 0 1

5 0 1

6 1 1

7 1 1

8 1 0

Figure 5: RNN output for known and unknown SQL state-

ment.

network can be taken in the correlation with a form of

network output against attacks. Figure 5 shows RNN

output for SQL statements derived from the training

set and that, which was not present in the training set.

The second column (see figure 5) relates to the case

if the SQL query was in the training set and the third

column concerns the SQL query, which was not in the

training set.

It is easy to see that the number of errors during

verification and generalization is much smaller than

the number of errors when an attack is processed by

RNN. Moreover, there is also more output vectors free

of errors. Easily noticeable difference between an at-

tack and normal activity allows us to re-evaluate ob-

tained results presented in figures 2 and 3. Figure 5

presents typical outcomes for all trained RNNs and

our training data. To distinguish between an attack

and a legitimate SQL statement we define the follow-

ing rule for the Jordan network: an attack occurred

if the average number of errors for each output vec-

tor is not less than 2.0 and 80% of output vectors in-

clude any error. When the Elman network is used,

the threshold equals to 1.6 and the percentage of out-

put vectors possessing errors equals to 90%. Apply-

ing these rules ensures that all attacks are detected by

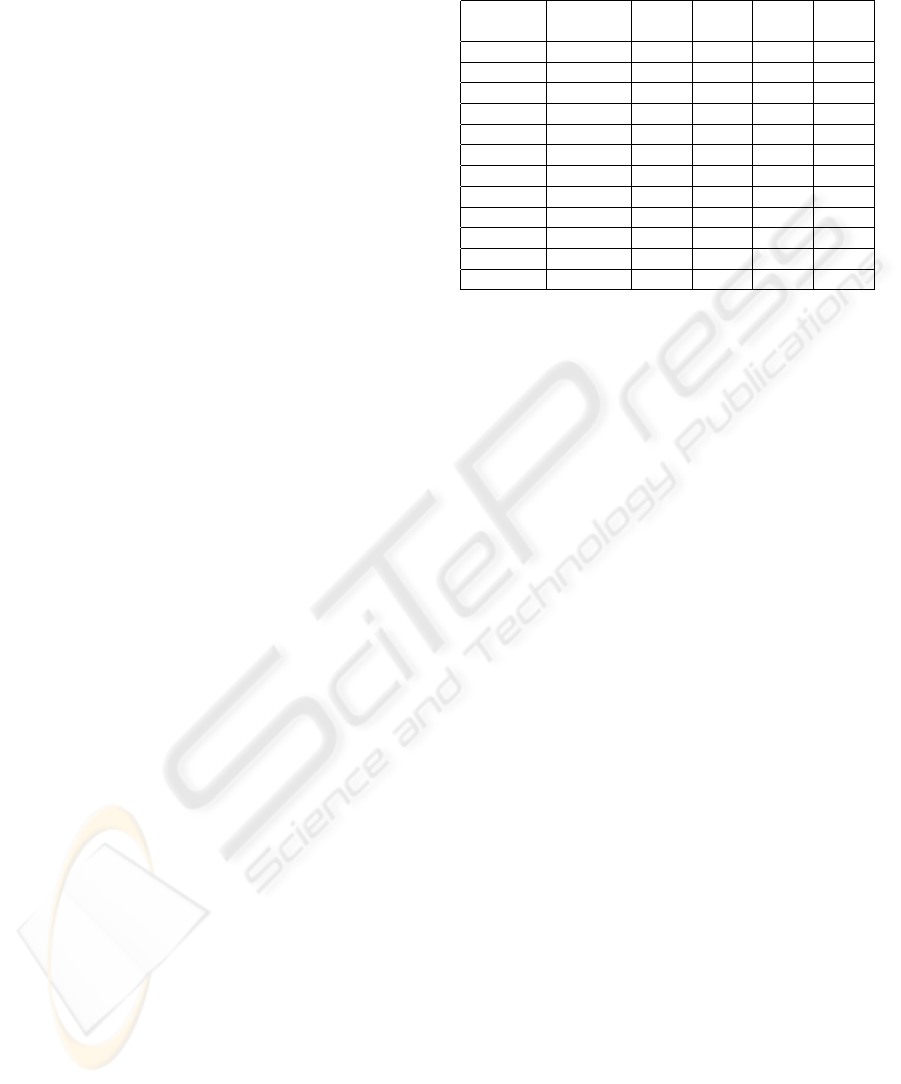

both RNN. The table 2 presents the percentage num-

ber of SQL statements wrongly predicted during ver-

ification and generalization if results were processed

by the rules.

For the most cases the Jordan network outper-

forms the Elman network. Only for data subsets con-

taining statements made from 11 and 12 tokens, the

Elman network is a little better than the Jordan net-

work. The important outcome of defined rules is that

both RNNs thought all statements and only few legit-

imate statements, which were not in the training set

were detected as attacks.

Table 2: Results of verification and generalization of Elman

and Jordan networks.

index of length of Elman Elman Jordan Jordan

data subset data subset ver gen ver gen

1 2-4 0 0 0 0

2 5 0 1.4 0 0

3 6 0 24.2 0 12.8

4 7 0 15.7 0 1.4

5 8 0 5 0 1.6

6 9 0 3.33 0 0

7 10 0 2.5 0 0

8 11 0 10 0 13.33

9 12 0 0 0 6.66

10 13-14 0 0 0 0

11 15-16 0 3.33 0 3.33

12 17-20 0 40 0 13.33

6 CONCLUSIONS

In the paper we have presented a new approach to

detecting SQL-based attacks. The problem of detec-

tion was transformed to time series prediction prob-

lem and two RNNs were examined to show their po-

tential use for such a class of attacks. Deep analysis of

the experimental results lead to the definition of rules

used for distinguishing between an attack and legiti-

mate statement. When these rules are applied, both

networks are completely trained for all SQL queries

included in the all training subsets. The advisable

part of experimental study is to apply defined rules

to the other data set, which can confirm efficiency of

the proposed approach to detecting SQL attacks. In

the future we are going to compare gradient-based al-

gorithms with nature inspired algorithms, especially

coevolutionary genetic algorithms.

REFERENCES

Almgren, M., Debar, H., and Dacier, M. (2000). A

lightweight tool for detecting web server attacks. In

Proceedings of the ISOC Symposium on Network and

Distributed Systems Security.

Drake, P. R. and Miller, K. A. (2002). Improved self-

feedback gain in the context layer of a modified el-

man neural network. In Mathematical and Computer

Modelling of Dynamical Systems.

Kendall, M. and Ord, J. (1999). Time Series.

Kruegel, C. and Vigna, G. (2003). Anomaly detection of

web-based attacks. In Proceedings of the 10th ACM

Conference on Computer and Communication Secu-

rity (CCS ’03).

Lin, T., Horne, B. G., Tino, P., and Giles, C. L. (1996).

Learning long-term dependencies in narx recurrent

ICEIS 2007 - International Conference on Enterprise Information Systems

196

neural networks. In IEEE Transactions on Neural Net-

works.

Nunn, I. and White, T. (2005). The application of antigenic

search techniques to time series forecasting. In Pro-

ceedings of the Genetic and Evolutionary Computa-

tion Conference (GECCO).

phpnuke.org. http://phpnuke.org/.

Pollock, D. (1999). A handbook of time-series analysis,

signal processing and dynamics. In Academic Press.

Valeur, F., Mutz, D., and Vigna, G. (2005). A learning-

based approach to the detection of sql attacks. In Pro-

ceedings of the Conference on Detection of Intrusions

and Malware and Vulnerability Assessment.

RECURRENT NEURAL NETWORKS APPROACH TO THE DETECTION OF SQL ATTACKS

197