TRA

CK AND CUT: SIMULTANEOUS TRACKING AND

SEGMENTATION OF MULTIPLE OBJECTS WITH GRAPH CUTS

Aur

´

elie Bugeau and Patrick P

´

erez

INRIA, Centre Rennes - Bretagne Atlantique, Campus Universitaire de Beaulieu, 35042 Rennes Cedex, France

Keywords:

Tracking, Graph Cuts.

Abstract:

This paper presents a new method to both track and segment multiple objects in videos using min-cut/max-flow

optimizations. We introduce objective functions that combine low-level pixel-wise measures (color, motion),

high-level observations obtained via an independent detection module (connected components of foreground

detection masks in the experiments), motion prediction and contrast-sensitive contextual regularization. One

novelty is that external observations are used without adding any association step. The minimization of these

cost functions simultaneously allows ”detection-before-track” tracking (track-to-observation assignment and

automatic initialization of new tracks) and segmentation of tracked objects. When several tracked objects get

mixed up by the detection module (e.g., single foreground detection mask for objects close to each other), a

second stage of minimization allows the proper tracking and segmentation of these individual entities despite

the observation confusion. Experiments on sequences from PETS 2006 corpus demonstrate the ability of the

method to detect, track and precisely segment persons as they enter and traverse the field of view, even in cases

of occlusions (partial or total), temporary grouping and frame dropping.

1 INTRODUCTION

Visual tracking is an important and challenging prob-

lem. Depending on applicative context under con-

cern, it comes into various forms (automatic or man-

ual initialization, single or multiple objects, still or

moving camera, etc.), each of which being associated

with an abundant literature. In a recent review on vi-

sual tracking (Yilmaz et al., 2006), tracking methods

are divided into three categories: point tracking, sil-

houette tracking and kernel tracking. These three cat-

egories can be recast as ”detect-before-track” track-

ing, dynamic segmentation and tracking based on dis-

tributions (color in particular).

The principle of ”detect-before-track” methods is

to match the tracked objects with observations pro-

vided by an independent detection module. Such a

tracking can be performed with either deterministic or

probabilistic methods. Deterministic methods amount

to matching by minimizing a distance based on cer-

tain descriptors of the object. Probabilistic methods

provide means to take measurement uncertainties into

account and are often based on a state space model of

the object properties.

Dynamic segmentation aims to extract successive

segmentations over time. A detailed silhouette of the

target object is thus sought in each frame. This is

often done by making evolve the silhouette obtained

in the previous frame towards a new configuration

in current frame. It can be done using a state space

model defined in terms of shape and motion parame-

ters of the contour (Isard and Blake, 1998; Terzopou-

los and Szeliski, 1993) or by the minimization of a

contour energy functional. The contour energy in-

cludes temporal information in the form of either tem-

poral gradients (optical flow) (Bertalmio et al., 2000;

Cremers and C. Schn

¨

orr, 2003; Mansouri, 2002) or

appearance statistics originated from the object and

its surroundings in previous images (Ronfard, 1994;

Yilmaz, 2004). In (Xu and Ahuja, 2002) the authors

use graph cuts to minimize such an energy functional.

The advantages of min-cut/max-flow optimization are

its low computational cost, the fact that it converges

to the global minimum without getting stuck in local

minima and that no a priori on the global shape model

is needed.

In the last group of methods (“kernel tracking”),

the best location for a tracked object in the current

frame is the one for which some feature distribution

(e.g., color) is the closest to the reference one for the

447

Bugeau A. and Pérez P. (2008).

TRACK AND CUT: SIMULTANEOUS TRACKING AND SEGMENTATION OF MULTIPLE OBJECTS WITH GRAPH CUTS.

In Proceedings of the Third International Conference on Computer Vision Theory and Applications, pages 447-454

DOI: 10.5220/0001075704470454

Copyright

c

SciTePress

tracked object. The most popular method in this class

is the one of Comaniciu et al. (Comaniciu et al.,

2000; Comaniciu et al., 2003), where approximate

“mean shift” iterations are used to conduct the iter-

ative search. Graph cuts have also been used for illu-

mination invariant kernel tracking in (Freedman and

Turek, 2005).

These three types of tracking techniques have dif-

ferent advantages and limitations, and can serve dif-

ferent purposes. The ”detect-before-track” methods

can deal with the entries of new objects and the exit of

existing ones. They use external observations that, if

they are of good quality, might allow robust tracking.

However this kind of tracking usually outputs bound-

ing boxes only. By contrast, silhouette tracking has

the advantage of directly providing the segmentation

of the tracked object. With the use of recent graph

cuts techniques, convergence to the global minima is

obtained for modest computational cost. Finally ker-

nel tracking methods, by capturing global color dis-

tribution of a tracked object, allow robust tracking at

low cost in a wide range of color videos.

In this paper, we address the problem of mul-

tiple objects tracking and segmentation by combin-

ing the advantages of the three classes of approaches.

We suppose that, at each instant, the moving objects

are approximately known from a preprocessing al-

gorithm. Here, we use a simple background sub-

traction but more complex alternatives could be ap-

plied. An important novelty of our method is that

the use of external observations does not require the

addition of a preliminary association step. The as-

sociation between the tracked objects and the obser-

vations is jointly conducted with the segmentation

and the tracking within the proposed minimization

method. The connected components of the detected

foreground mask serve as high-level observations. At

each time instant, tracked object masks are propa-

gated using their associated optical flow, which pro-

vides predictions. Color and motion distributions are

computed on the objects segmented in previous frame

and used to evaluate individual pixel likelihood in

the current frame. We introduce for each object a

binary labeling objective function that combines all

these ingredients (low-level pixel-wise features, high-

level observations obtained via an independent detec-

tion module and motion predictions) with a contrast-

sensitive contextual regularization. The minimiza-

tion of each of these energy functions with min-

cut/max-flow provides the segmentation of one of the

tracked objects in the new frame. Our algorithm

also deals with the introduction of new objects and

their associated tracker. When multiple objects trig-

ger a single detection due to their spatial vicinity,

the proposed method, as most detect-before-track ap-

proaches, can get confused. To circumvent this prob-

lem, we propose to minimize a secondary multi-label

energy function which allows the individual segmen-

tation of concerned objects.

In section 2, notations are introduced and an

overview of the method is given. The primary en-

ergy function associated to each tracked object is in-

troduced in section 3. The introduction of new objects

and the handling of complete occlusions are also ex-

plained in this section. The secondary energy function

permitting the separation of objects wrongly merged

in the first stage is introduced in section 4. Exper-

imental results are reported in section 5, where we

demonstrate the ability of the method to detect, track

and precisely segment persons and groups, possibly

with partial or complete occlusions and missing ob-

servations. The experiments also demonstrate that the

second stage of minimization allows the segmentation

of individual persons when spatial proximity makes

them merge at the foreground detection level.

2 PRINCIPLE AND NOTATIONS

In all this paper, P will denote the set of N pixels of

a frame from an input image sequence. To each pixel

s of the image at time t is associated a feature vector

z

s,t

= (z

(C)

s,t

,z

(M)

s,t

), where z

(C)

s,t

is a 3-dimensional vector

in RGB color space and z

(M)

s,t

is a 2-dimensional vector

of optical flow values. Using an incremental multi-

scale implementation of Lucas and Kanade algorithm

(Lucas and Kanade, 1981), the optical flow is in fact

only computed at pixels with sufficiently contrasted

surroundings. For the other pixels, color constitutes

the only low-level feature. However, for notational

convenience, we shall assume in the following that

optical flow is available at each pixel.

We assume that, at time t, k

t

objects are tracked.

The i

th

object at time t is denoted as O

(i)

t

and is defined

as a mask of pixels, O

(i)

t

⊂ P .

The goal of this paper is to perform both seg-

mentation and tracking to get the object O

(i)

t

corre-

sponding to the object O

(i)

t−1

of previous frame. Con-

trary to sequential segmentation techniques (Juan and

Boykov, 2006; Kohli and Torr, 2005; Paragios and

Deriche, 1999), we bring in object-level “observa-

tions”. They may be of various kinds (e.g., obtained

by a class-specific object detector, or motion/color de-

tectors). Here we consider that these observations

come from a preprocessing step of background sub-

traction. Each observation amounts to a connected

component of the foreground map after background

VISAPP 2008 - International Conference on Computer Vision Theory and Applications

448

subtraction (figure 1). The connected components are

obtained using the ”gap/mountain” method described

in (Wang et al., 2000) and ignoring small objects. For

the first frame, the tracked objects are initialized as

the observations themselves. We assume that, at each

time t, there are m

t

observations. The j

th

observation

at time t is denoted as M

( j)

t

and is defined as a mask

of pixels, M

( j)

t

⊂ P . Each observation is characterized

by its mean feature vector:

z

( j)

t

=

∑

s∈M

( j)

t

z

s,t

|M

( j)

t

|

. (1)

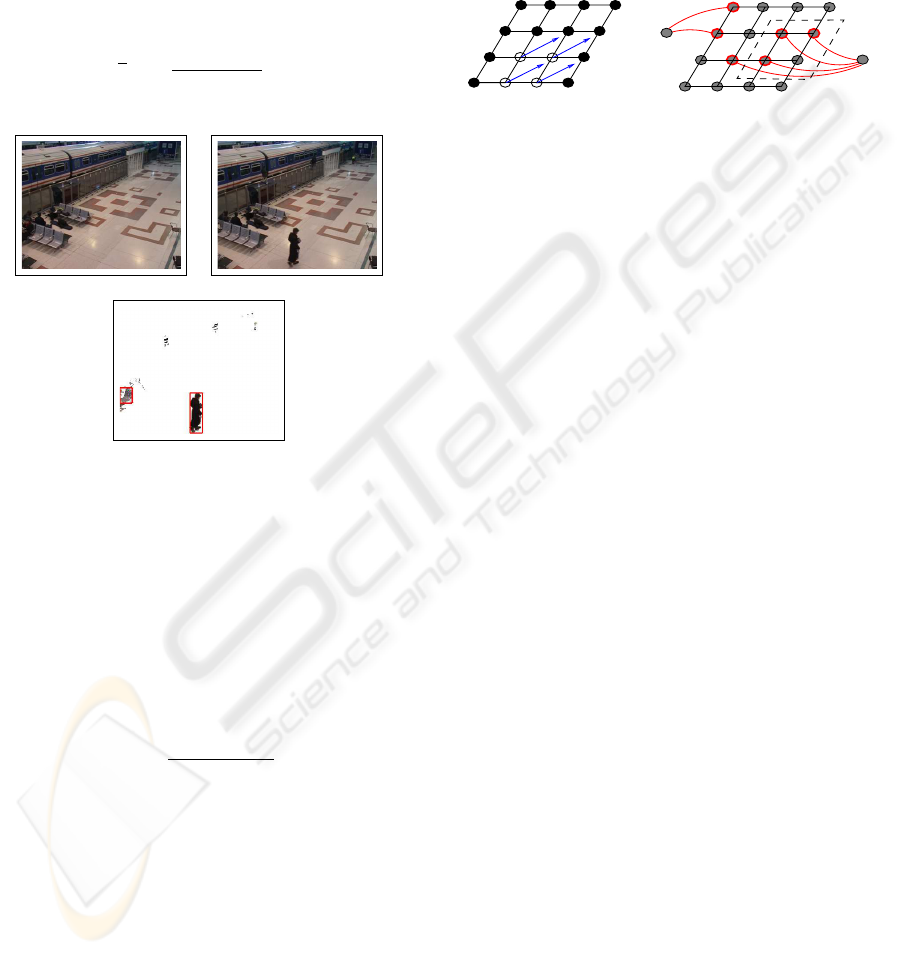

(a) (b)

(c)

Figure 1: Observations obtained with background subtrac-

tion. (a) Reference frame. (b) Current frame. (c) Result of

background subtraction (pixels in black are labeled as fore-

ground) and derived object detections (indicated with red

bounding boxes).

The principle of our algorithm is as follows. A

prediction O

(i)

t|t−1

⊂ P is made for each object i of time

t − 1. We denote as d

(i)

t−1

the mean, over all pixels of

the object at time t − 1, of optical flow values:

d

(i)

t−1

=

∑

s∈O

(i)

t−1

z

(M)

s,t−1

|O

(i)

t−1

|

. (2)

The prediction is obtained by translating each pixels

belonging to O

(i)

t−1

by this average optical flow:

O

(i)

t|t−1

= {s + d

(i)

t−1

,s ∈ O

(i)

t−1

} . (3)

Using this prediction, the new observations, as

well as color and motion distributions of O

(i)

t−1

, an en-

ergy function is built. The energy is minimized using

min-cut/max-flow algorithm (Boykov et al., 2001),

which gives the new segmented object at time t, O

(i)

t

.

The minimization also provides the correspondences

of the object with all the available observations.

3 ENERGY FUNCTIONS

We define one tracker for each object. To each tracker

corresponds, for each frame, one graph and one en-

ergy function that is minimized using the min-cut/

max-flow algorithm (Boykov et al., 2001). Nodes and

edges of the graph can be seen in figure 2.

n

(1)

t

n

(2)

t

Object i at time t-1

O

(i)

t|t−1

Graph for object i at time t

Figure 2: Description of the graph. The left figure is the re-

sult of the energy minimization at time t − 1. White nodes

are labeled as object and black nodes as background. The

optical flow vectors for the object are shown in blue. The

right figure shows the graph at time t. Two observations

are available, each of which giving rise to a special “obser-

vation” node. The pixel nodes circled in red correspond to

the masks of these two observations. Dashed box indicates

predicted mask.

3.1 Graph

The undirected graph G

t

= (V

t

,E

t

) is defined as a set

of nodes V

t

and a set of edges E

t

. The set of nodes

is composed of two subsets. The first subset is the

set of N pixels of the image grid P . The second sub-

set corresponds to the observations: to each obser-

vation mask M

(

j

)

t

is associated a node n

(

j

)

t

. We call

these nodes ”observation nodes”. The set of nodes

thus reads V

t

= P

S

m

t

j=1

n

( j)

t

. The set of edges is di-

vided in two subsets: E

t

= E

P

S

m

t

j=1

E

M

( j)

t

. The set E

P

represents all unordered pairs {s,r} of neighboring el-

ements of P , and E

M

( j)

t

is the set of unordered pairs

{s,n

( j)

t

}, with s ∈ M

( j)

t

.

Segmenting the object O

(i)

t

amounts to assigning a

label l

(i)

s,t

, either background, ”bg”, or object, “fg”, to

each pixel node s of the graph. Associating observa-

tions to tracked objects amounts to assigning a binary

label l

(i)

j,t

(“bg” or “fg”) to each observation node n

( j)

t

.

The set of all the node labels forms L

(i)

t

.

3.2 Energy

An energy function is defined for each object at each

instant. It is composed of unary data terms R

(i)

s,t

and

smoothness binary terms B

(i)

s,r,t

:

E

(i)

t

(L

(i)

t

) =

∑

s∈V

t

R

(i)

s,t

(l

(i)

s,t

) +

∑

{s,r}∈E

t

B

(i)

s,r,t

(1 − δ(l

(i)

s,t

,l

(i)

r,t

)) (4)

TRACK AND CUT: SIMULTANEOUS TRACKING AND SEGMENTATION OF MULTIPLE OBJECTS WITH

GRAPH CUTS

449

3.2.1 Data Term

The data term only concerns the pixel nodes lying in

the predicted regions and the observation nodes. For

all the other pixel nodes, labeling will only be con-

trolled by the neighbors via binary terms. More pre-

cisely, the first part of energy in (4) reads:

∑

s∈V

t

R

(i)

s,t

(l

(i)

s,t

) =

∑

s∈O

(i)

t|t−1

−ln(p

(i)

1

(s,l

(i)

s,t

))+

m

t

∑

j=1

−ln(p

(i)

2

( j,l

j,t

)) .

(5)

Segmented object at time t should be similar, in

terms of motion and color, to the preceding instance

of this object at times t − 1. To exploit this consis-

tency assumption, color and motion distributions of

the object and the background are extracted from pre-

vious image. The distribution p

(i,C)

t−1

for color, respec-

tively p

(i,M)

t−1

for motion, is a Gaussian mixture model

fitted to the set of values {z

(C)

s,t−1

}

s∈O

(i)

t−1

, respectively

{z

(M)

s,t−1

}

s∈O

(i)

t−1

. Under independency assumption for

color and motion, the likelihood of individual pixel

feature z

s,t

according to previous joint model is:

p

(i)

t−1

(z

s,t

) = p

(i,C)

t−1

(z

(C)

s,t

) p

(i,M)

t−1

(z

(M)

s,t

) . (6)

The two distributions for the background are q

(i,M)

t−1

and q

(i,C)

t−1

. They are Gaussian mixture models built

on the sets {z

(M)

s,t−1

}

s∈P \O

(i)

t−1

and {z

(C)

s,t−1

}

s∈P \O

(i)

t−1

respec-

tively. Foreground likelihood at pixel s then reads:

q

(i)

t−1

(z

s,t

) = q

(i,C)

t−1

(z

(C)

s,t

) q

(i,M)

t−1

(z

(M)

s,t

) . (7)

The likelihood p

1

, invoked in (5) within predicted

region, can now be defined as:

p

(i)

1

(s,l) =

p

(i)

t−1

(z

s,t

) if l = “fg”,

q

(i)

t−1

(z

s,t

) if l = “bg” .

(8)

An observation should be used only if it is likely

to correspond to the tracked object. Therefore, we use

a similar definition for p

2

. However we do not eval-

uate the likelihood of each pixel of the observation

mask but only the one of its mean feature z

( j)

t

. The

likelihood p

2

for the observation node n

( j)

t

is defined

as:

p

(i)

2

( j,l) =

p

(i)

t−1

(z

( j)

t

) if l = “fg”,

q

(i)

t−1

(z

( j)

t

) if l = “bg” .

(9)

3.2.2 Binary Term

Following (Boykov and Jolly, 2001), the binary term

between neighboring pairs of pixels {s, r} of P is

based on color gradients and has the form

B

(i)

s,r,t

= λ

1

1

dist(s,r)

e

−

kz

(C)

s,t

−z

(C)

r,t

k

2

σ

2

T

. (10)

As in (Blake et al., 2004), the parameter σ

T

is set to

σ

T

= 4 · h(z

(i,C)

s,t

− z

(i,C)

r,t

)

2

i, where h.i denotes expecta-

tion over a box surrounding the object. For edges be-

tween one pixel node and one observation node, the

binary term is similar:

B

(i)

s,n

( j)

t

,t

= λ

2

e

−

kz

(C)

s,t

−z

( j,C)

t

k

2

σ

2

T

. (11)

Parameters λ

1

and λ

2

are discussed in the experi-

ments.

3.2.3 Energy Minimization

The final labeling of pixels is obtained by minimizing

the energy defined above:

ˆ

L

(i)

t

= argmin

L

(i)

t

E

(i)

t

(L

(i)

t

). (12)

This labeling gives the segmentation of the i-th object

at time t as:

O

(i)

t

= {s ∈ P :

ˆ

l

(i)

s,t

= “fg”}. (13)

3.3 Handling Complete Occlusions

When the number of pixels belonging to a tracked ob-

ject becomes equal to zero, this object is likely to have

disappeared due to either its exit of the field of view

or its complete occlusion. If it is occluded, we want

to recover it as soon as it reappears. Let t

o

be the time

at which the size drops to zero, and S

(i)

t

be the size of

object i at time t. The simplest way to handle occlu-

sions is to keep predicting the object using informa-

tion available just before its complete disappearance:

O

(i)

t|t−1

= {s + (t − t

o

+ 1)d

(i)

t

o

−1

,s ∈ O

(i)

t

o

−1

} ,t > t

o

,

(14)

and minimizing the energy function with

p

(i)

t−1

≡ p

(i)

t

o

−1

, q

(i)

t−1

≡ q

(i)

t

o

−1

. (15)

However, before being completely occluded, an

object is usually partially occluded, which influences

its shape, its motion and the feature distributions.

Therefore, using only information at time t

0

− 1 is not

VISAPP 2008 - International Conference on Computer Vision Theory and Applications

450

sufficient and a more complex scheme must be ap-

plied. To this end, we try to find the instant t

p

at which

the object started to be occluded. A Gaussian distribu-

tion N (S

(i)

,σ

(i)

S

) on the size of the object is built and

updated at each instant. If |N (S

(i)

t

;S

(i)

,σ

(i)

S

) − S

(i)

| <

3σ

(i)

S

, then we consider that the object is partially oc-

cluded and t

p

= t − 1. The prediction and the distri-

butions are finally built on averages over the 5 frames

before t

p

:

O

(i)

t|t−1

= {s +

t −t

p

+ 1

5

t

p

∑

t

0

=t

p

−5

d

(i)

t

0

,s ∈ O

(i)

t

p

}, (16)

while the distributions p

(i)

t−1

and q

(i)

t−1

are now Gaussian

mixture models fitted on sets {z

s,t

0

}

t

0

=t

p

−5...t

p

, s∈O

(i)

t

0

and {z

s,t

0

}

t

0

=t

p

−5...t

p

, s∈P \O

(i)

t

0

respectively. Specific mo-

tion models depending on the application could have

been used but this falls beyond the scope of the paper.

3.4 Creation of New Objects

One advantage of our approach lies in its ability to

jointly manipulate pixel labels and track-to-detection

assignment labels. This allows the system to track and

segment the objects at time t while establishing the

correspondence between an object currently tracked

and all the approximative object candidates obtained

by detection in current frame. If, after the energy min-

imization for an object i, an observation node n

( j)

t

is

labeled as “fg” it means that there is a correspondence

between the i-th object and the j-th observation. If for

all the objects, an observation node is labeled as “bg”

(∀i,

ˆ

l

(i)

t, j

= “bg”), then the corresponding observation

does not match any object. In this case, a new object

is created and initialized with this observation.

4 SEGMENTING MERGED

OBJECTS

Assume now that the results of the segmentations for

different objects overlap, that is ∩

i∈F

O

(i)

t

6=

/

0, where F

denotes the current set of object indices. In this case,

we propose an additional step to determine whether

these objects truly correspond to the same one or if

they should be separated. At the end of this step,

each pixel of ∩

i∈F

O

(i)

t

must belong to only one ob-

ject. For this purpose, a new graph

˜

G

t

= (

˜

V

t

,

˜

E

t

) is

created, where

˜

V

t

= ∪

i∈F

O

(i)

t

and

˜

E

t

is composed of

all unordered pairs of neighboring pixel nodes of

˜

V

t

.

The goal is then to assign to each node s of

˜

V

t

a label

ψ

s

∈ F . Defining

˜

L = {ψ

s

,s ∈

˜

V

t

} the labeling of

˜

V

t

, a

new energy is defined as:

˜

E

t

(

˜

L) =

∑

s∈

˜

V

t

−ln(p

3

(s,ψ

s

))

+ λ

3

∑

{s,r}∈

˜

E

t

1

dist(s,r)

e

−

kz

(C)

s

−z

(C)

r

k

2

σ

2

3

(1 − δ(ψ

s

,ψ

r

)).

(17)

The parameter σ

3

is here set as σ

3

= 4 ·

h(z

(i,C)

s,t

− z

(i,C)

r,t

)

2

i with the averaging being over i ∈ F

and {s,r} ∈

˜

E. The fact that several objects have been

merged shows that their respective feature distribu-

tions at previous instant did not permit to distinguish

them. A way to separate them is then to increase the

role of the prediction. This is achieved by choosing

function p

3

as:

p

3

(s,ψ) =

(

p

(ψ)

t−1

(z

s,t

) if s /∈ O

(ψ)

t|t−1

,

1 otherwise .

(18)

This multi-label energy function is minimized us-

ing the α-expansion and the swap algorithms (Boykov

et al., 1998; Boykov et al., 2001). After this mini-

mization, the objects O

(i)

t

,i ∈ F are updated.

5 EXPERIMENTAL RESULTS

In this section we present various results on a se-

quence from the PETS 2006 data corpus (sequence

1 camera 4). The robustness to partial occlusions and

the individual segmentation of objects that were ini-

tially merged, are first demonstrated. Then we present

the handling of missing observations and of complete

occlusions on other parts of the video. Following

(Blake et al., 2004), the parameter λ

3

was set to 20.

However parameters λ

1

and λ

2

had to be tuned by

hand to get better results. Indeed λ

1

was set to 10

while λ

2

to 2. Also, the number of classes for the

Gaussian mixture models was set to 10.

(a) (b)

Figure 3: Reference frames. (a) Reference frame for sub-

sections 5.1 and 5.2. (b) Reference frame for subsection

5.3.

TRACK AND CUT: SIMULTANEOUS TRACKING AND SEGMENTATION OF MULTIPLE OBJECTS WITH

GRAPH CUTS

451

5.1 Observations at Each Time

First results (figure 4) demonstrate the good behavior

of our algorithm even in the presence of partial oc-

clusions and of object fusion. Observations, obtained

by subtracting reference frame (frame 10 shown on

figure 3(a)) to the current one, are visible in the first

column of figure 4. The second column contains the

segmentation of the objects with the use of the second

energy function. Each tracked object is represented

by a different color. In frame 81, two objects are

initialized using the observations. Note that the con-

nected component extracted with the “gap/mountain”

method misses the legs for the person in the upper

right corner. While this impacts the initial segmen-

tation, the legs are included in the segmentation as

soon as the subsequent frame. Even if from the 102

nd

frame the two persons at the bottom of the frames cor-

respond to only one observation, our algorithm tracks

each person separately (frames 116, 146). Partial oc-

clusions occur when the person at the top passes be-

hind the three other ones (frames 176 and 206), which

is well handled by the method, as the person is still

tracked when the occlusion stops (frame 248).

In figure 5, we show in more details the influence

of the second energy function by comparing the re-

sults obtained with and without it. Before frame 102,

the three persons at the bottom generate three dis-

tinct observations while, passed this instant, they cor-

respond to only one or two observations. Even if the

motions and colors of the three persons are very close,

the use of the secondary multi-label energy function

allows their separation.

5.2 Missing Observations

On figure 6 we illustrate the capacity of the method

to handle missing observations thanks to the predic-

tion mechanism. In our test we have only performed

the background subtraction on one over three frames.

On figure 6, we compare the obtained segmentations

with the ones based on observations at each frame.

First column shows the intermittent observations, the

second one the masks of the objects obtained in case

of missing observations and the last one the masks

with observations at each time. Thanks to the predic-

tion, the results are only partially altered by this dras-

tic temporal subsampling of observations. As one can

see, even if one leg is missing in frames 805 and 806,

it is recovered as soon as a new observation is avail-

able. Conversely, this result also shows that the incor-

poration of observations from the detection module

enables to get better segmentations than when using

only predictions.

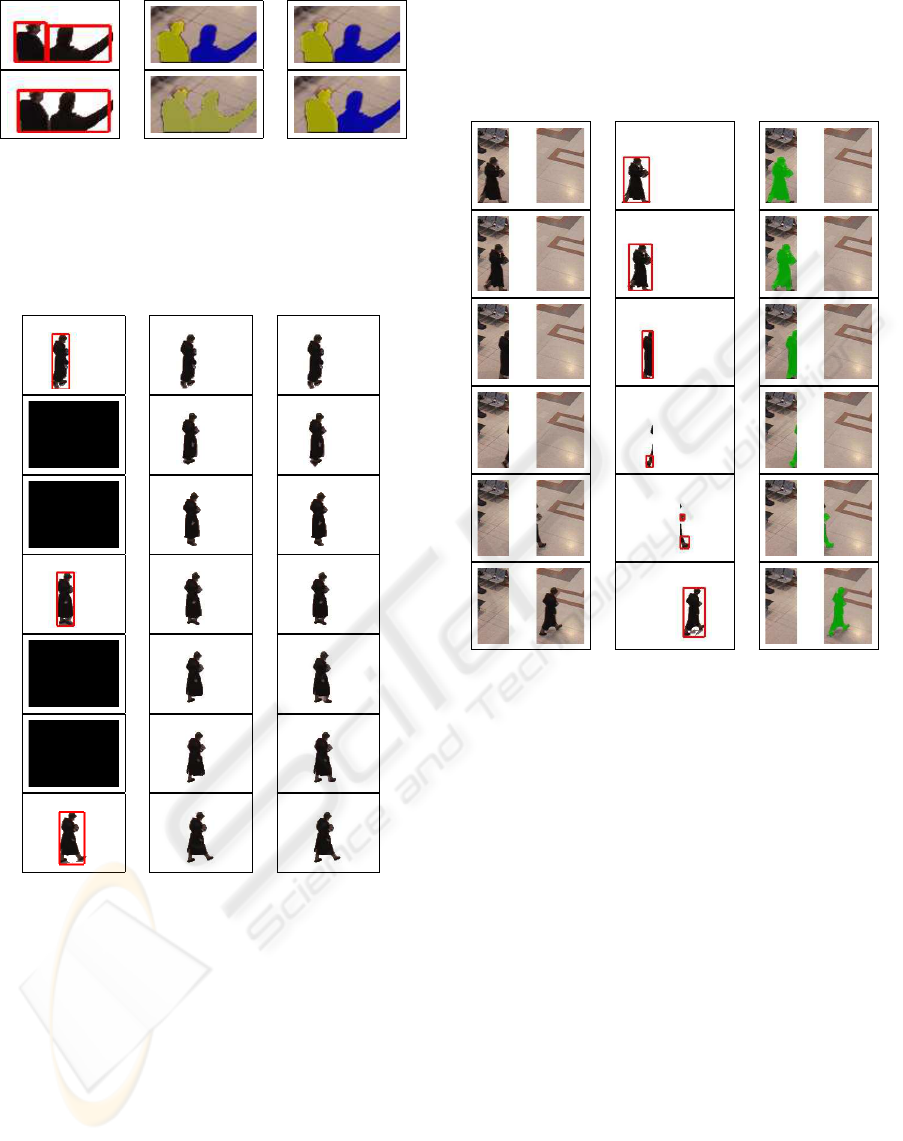

(a) (b)

Figure 4: Results on sequence from PETS 2006 (frames 81,

116, 146, 176, 206 and 248). (a) Result of simple back-

ground subtraction and extracted observations. (b) Tracked

objects on current frame using the secondary energy func-

tion.

VISAPP 2008 - International Conference on Computer Vision Theory and Applications

452

(a) (b) (c)

Figure 5: Separating merged objects with the secondary

minimization (frames 101 and 102). (a) Result of sim-

ple background subtraction and extracted observations. (b)

Segmentations with primary energy functions only. (c) Seg-

mentation after post-processing with the secondary energy

function.

(a) (b) (c)

Figure 6: Results with observations only every 3 frames

(frames 801 to 807) (a) Results of background subtraction

and extracted observations. (b) Masks of tracked object.

(c) Comparison with the masks obtained when there is no

missing observations.

5.3 Complete Occlusions

Results in figure 7 demonstrate the ability of our

method to deal with complete occlusions. In this por-

tion of the video, we added synthetically a vertical

white band in the images in order to generate com-

plete occlusions. The reference frame can be seen on

figure 3(b). On figure 7, the first column contains the

original images (with the white band), the second one

the observations and the last one the obtained segmen-

tations. Our algorithm keeps tracking and segmenting

the object as it progressively disappears and resumes

tracking and segmenting it as soon as it reappears.

(a) (b) (c)

Figure 7: Results with complete occlusions (frames 782,

785, 792, 798, 810 and 824) (a) Original frames. (b) Results

of background subtraction and extracted observations. (c)

Comparison with the masks obtained when there is not any

missing observations.

6 CONCLUSIONS

In this paper we have presented a new method to

simultaneously segment and track objects. Predic-

tions and observations, composed of detected objects,

are introduced in an energy function which is mini-

mized using graph cuts. The use of graph cuts per-

mits the segmentation of the objects at a modest com-

putational cost. A novelty is the use of observation

nodes in the graph which gives better segmentations

but also enables the direct association of the tracked

objects to the observations (without adding any as-

sociation procedure). The algorithm is robust to par-

tial and complete occlusions, progressive illumination

changes and to missing observations. Thanks to the

use of a secondary multi-label energy function, our

method allows individual tracking and segmentation

TRACK AND CUT: SIMULTANEOUS TRACKING AND SEGMENTATION OF MULTIPLE OBJECTS WITH

GRAPH CUTS

453

of objects which where not distinguished from each

other in the first stage. The observations used in this

paper are obtained by a simple background subtrac-

tion based on a single reference frame. Note however

that more complex background subtraction or object

detection could be used as well with no change to the

approach.

As we use feature distributions of objects at pre-

vious time to define current energy functions, our

method breaks down in extreme cases of abrupt il-

lumination changes. However, by adding an external

detector of such changes, we could circumvent this

problem by keeping only the prediction and updating

the reference frame when the abrupt change occurs.

Also, other cues, such as shapes, should probably be

added to improve the results.

Apart from this rather specific problem, several re-

search directions are open. One of them concerns the

design of an unifying energy framework that would

allow segmentation and tracking of multiple objects

while precluding the incorrect merging of similar ob-

jects getting close to each other in the image plane.

Another direction of research concerns the automatic

tuning of the parameters, which remains an open

problem in the recent literature on image labeling

(e.g., figure/ground segmentation) with graph-cuts.

REFERENCES

Bertalmio, M., Sapiro, G., and Randall, G. (2000). Morph-

ing active contours. IEEE Trans. Pattern Anal. Ma-

chine Intell., 22(7):733–737.

Blake, A., Rother, C., Brown, M., P

´

erez, P., and Torr, P.

(2004). Interactive image segmentation using an adap-

tive gmmrf model. In Proc. Europ. Conf. Computer

Vision.

Boykov, Y. and Jolly, M. (2001.). Interactive graph cuts for

optimal boundary and region segmentation of objects

in n-d images. Proc. Int. Conf. Computer Vision.

Boykov, Y., Veksler, O., and Zabih, R. (1998). Markov

random fields with efficient approximations. In Proc.

Conf. Comp. Vision Pattern Rec.

Boykov, Y., Veksler, O., and Zabih, R. (2001). Fast approxi-

mate energy minimization via graph cuts. IEEE Trans.

Pattern Anal. Machine Intell., 23(11):1222–1239.

Comaniciu, D., Ramesh, V., and Meer, P. (2000). Real-

time tracking of non-rigid objects using mean-shift.

In Proc. Conf. Comp. Vision Pattern Rec.

Comaniciu, D., Ramesh, V., and Meer, P. (2003). Kernel-

based optical tracking. IEEE Trans. Pattern Anal. Ma-

chine Intell., 25(5):564–577.

Cremers, D. and C. Schn

¨

orr, C. (2003). Statistical shape

knowledge in variational motion segmentation. Image

and Vision Computing, 21(1):77–86.

Freedman, D. and Turek, M. (2005). Illumination-invariant

tracking via graph cuts. Proc. Conf. Comp. Vision Pat-

tern Rec.

Isard, M. and Blake, A. (1998). Condensation – conditional

density propagation for visual tracking. Int. J. Com-

puter Vision, 29(1):5–28.

Juan, O. and Boykov, Y. (2006). Active graph cuts. In Proc.

Conf. Comp. Vision Pattern Rec.

Kohli, P. and Torr, P. (2005). Effciently solving dynamic

markov random fields using graph cuts. In Proc. Int.

Conf. Computer Vision.

Lucas, B. and Kanade, T. (1981). An iterative technique of

image registration and its application to stereo. Proc.

Int. Joint Conf. on Artificial Intelligence.

Mansouri, A. (2002). Region tracking via level set pdes

without motion computation. IEEE Trans. Pattern

Anal. Machine Intell., 24(7):947–961.

Paragios, N. and Deriche, R. (1999). Geodesic active re-

gions for motion estimation and tracking. In Proc. Int.

Conf. Computer Vision.

Ronfard, R. (1994). Region-based strategies for active con-

tour models. Int. J. Computer Vision, 13(2):229–251.

Terzopoulos, D. and Szeliski, R. (1993). Tracking with

kalman snakes. Active vision, pages 3–20.

Wang, Y., Doherty, J., and Van Dyck, R. (2000). Mov-

ing object tracking in video. Applied Imagery Pattern

Recognition (AIPR) Annual Workshop.

Xu, N. and Ahuja, N. (2002). Object contour tracking us-

ing graph cuts based active contours. Proc. Int. Conf.

Image Processing.

Yilmaz, A. (2004). Contour-based object tracking with

occlusion handling in video acquired using mobile

cameras. IEEE Trans. Pattern Anal. Machine Intell.,

26(11):1531–1536.

Yilmaz, A., Javed, O., and Shah, M. (2006). Object track-

ing: A survey. ACM Comput. Surv., 38(4):13.

VISAPP 2008 - International Conference on Computer Vision Theory and Applications

454