VARIATIONAL BAYES WITH GAUSS-MARKOV-POTTS PRIOR MODELS FOR JOINT IMAGE RESTORATION AND SEGMENTATION

Hacheme Ayasso and Ali Mohammad-Djafari

Laboratoire des Signaux et Systèmes, UMR 8506 (CNRS-SUPELEC-UPS)

SUPELEC, Plateau de Moulon, 3 rue Joliot Curie, 91192 Gif-sur-Yvette Cedex, France

Keywords:

Variational Bayes Approximation, Image Restoration, Bayesian estimation, MCMC.

Abstract:

In this paper, we propose a family of non-homogeneous Gauss-Markov fields with Potts region labels as a prior model for images, to be used in a Bayesian estimation framework in order to jointly restore and segment images degraded by a known point spread function and additive noise. The joint posterior law of all the unknowns (the unknown image, its hidden segmentation variable and all the hyperparameters) is approximated by a separable probability law via the variational Bayes technique. This approximation makes it possible to obtain a practically implementable joint restoration and segmentation algorithm. We present some preliminary results and a comparison with an MCMC Gibbs sampling based algorithm.

1 INTRODUCTION

A simple direct model of the image restoration problem is

$$g(r) = h(r) * f(r) + \epsilon(r) \tag{1}$$

where $g(r)$ is the observed image, $h(r)$ is a known point spread function, $f(r)$ is the unknown image, and $\epsilon(r)$ is the measurement error. A discretized form of this relation is

$$\mathbf{g} = \mathbf{H}\mathbf{f} + \boldsymbol{\epsilon} \tag{2}$$

where $\mathbf{g}$, $\mathbf{f}$, and $\boldsymbol{\epsilon}$ are vectors containing samples of $g(r)$, $f(r)$, and $\epsilon(r)$, and $\mathbf{H}$ is a huge matrix whose elements are determined by the samples of $h(r)$.
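As an illustration of the discretized model (2), the following minimal sketch builds $\mathbf{H}$ for a one-dimensional signal and simulates an observation. The box-shaped PSF, the zero padding at the borders, the signal values and the names `box_psf`, `convolution_matrix` and `observe` are assumptions made for this example only; they are not taken from the paper.

```python
import random

def box_psf(width):
    """Normalised 1-D box (moving-average) PSF with `width` taps."""
    return [1.0 / width] * width

def convolution_matrix(psf, n):
    """Build the n x n matrix H with (H f)[i] = sum_k psf[k] * f[i - k + c].

    c is the PSF centre; samples outside the signal are treated as zero
    (an assumption of this sketch).
    """
    c = len(psf) // 2
    H = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for k, h in enumerate(psf):
            j = i - k + c
            if 0 <= j < n:
                H[i][j] = h
    return H

def observe(H, f, noise_std, rng):
    """Simulate g = H f + eps with i.i.d. zero-mean Gaussian noise."""
    return [sum(hij * fj for hij, fj in zip(row, f)) + rng.gauss(0.0, noise_std)
            for row in H]

# Piecewise-constant test signal: two "materials" with values 0 and 1.
f = [0.0] * 5 + [1.0] * 6 + [0.0] * 5
H = convolution_matrix(box_psf(3), len(f))
g = observe(H, f, noise_std=0.05, rng=random.Random(0))
```

In two dimensions $\mathbf{H}$ has the same structure but is far too large to store densely, which is why practical implementations usually apply the convolution directly instead of forming the matrix.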

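In a Bayesian framework for such an inverse problem (B.R. Hunt, 1977), one starts by writing the expression of the posterior law:

$$p(\mathbf{f}|\boldsymbol{\theta},\mathbf{g};\mathcal{M}) = \frac{p(\mathbf{g}|\mathbf{f},\boldsymbol{\theta}_1;\mathcal{M})\; p(\mathbf{f}|\boldsymbol{\theta}_2;\mathcal{M})}{p(\mathbf{g}|\boldsymbol{\theta};\mathcal{M})} \tag{3}$$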

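where $p(\mathbf{g}|\mathbf{f},\boldsymbol{\theta}_1;\mathcal{M})$, called the likelihood, is obtained using the forward model (2) and the assigned probability law $p_\epsilon(\boldsymbol{\epsilon})$ of the errors, $p(\mathbf{f}|\boldsymbol{\theta}_2;\mathcal{M})$ is the assigned prior law for the unknown image $\mathbf{f}$, and

$$p(\mathbf{g}|\boldsymbol{\theta};\mathcal{M}) = \int p(\mathbf{g}|\mathbf{f},\boldsymbol{\theta}_1;\mathcal{M})\; p(\mathbf{f}|\boldsymbol{\theta}_2;\mathcal{M})\, d\mathbf{f} \tag{4}$$

is the evidence of the model $\mathcal{M}$ with hyperparameters $\boldsymbol{\theta} = (\boldsymbol{\theta}_1, \boldsymbol{\theta}_2)$. Assigning Gaussian priors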

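$$p(\mathbf{g}|\mathbf{f},\theta_\epsilon;\mathcal{M}) = \mathcal{N}\big(\mathbf{H}\mathbf{f}, (1/\theta_\epsilon)\mathbf{I}\big), \qquad p(\mathbf{f}|\theta_f;\mathcal{M}) = \mathcal{N}(\mathbf{0}, \boldsymbol{\Sigma}_f) \quad \text{with} \quad \boldsymbol{\Sigma}_f = (1/\theta_f)(\mathbf{D}^t\mathbf{D})^{-1} \tag{5}$$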

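it is easy to show that the posterior law is also Gaussian:

$$p(\mathbf{f}|\mathbf{g},\theta_\epsilon,\theta_f;\mathcal{M}) \propto p(\mathbf{g}|\mathbf{f},\theta_\epsilon;\mathcal{M})\, p(\mathbf{f}|\theta_f;\mathcal{M}) = \mathcal{N}(\hat{\mathbf{f}}, \hat{\boldsymbol{\Sigma}}_f) \tag{6}$$

with

$$\hat{\boldsymbol{\Sigma}}_f = \left[\theta_\epsilon \mathbf{H}^t\mathbf{H} + \theta_f \mathbf{D}^t\mathbf{D}\right]^{-1} = \frac{1}{\theta_\epsilon}\left[\mathbf{H}^t\mathbf{H} + \lambda \mathbf{D}^t\mathbf{D}\right]^{-1}, \qquad \lambda = \theta_f/\theta_\epsilon, \tag{7}$$

and

$$\hat{\mathbf{f}} = \theta_\epsilon \hat{\boldsymbol{\Sigma}}_f \mathbf{H}^t \mathbf{g} = \left[\mathbf{H}^t\mathbf{H} + \lambda \mathbf{D}^t\mathbf{D}\right]^{-1} \mathbf{H}^t \mathbf{g} \tag{8}$$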

which can also be obtained as the solution that minimises

$$J_1(\mathbf{f}) = \|\mathbf{g} - \mathbf{H}\mathbf{f}\|^2 + \lambda \|\mathbf{D}\mathbf{f}\|^2 \tag{9}$$

where we can see the link with classical regularization theory (Tikhonov, 1963).
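The closed-form estimate (8) can be checked numerically on a small problem. The sketch below solves the normal equations $[\mathbf{H}^t\mathbf{H} + \lambda\mathbf{D}^t\mathbf{D}]\hat{\mathbf{f}} = \mathbf{H}^t\mathbf{g}$ with plain Gaussian elimination and evaluates the criterion (9). The 3-tap blur, the first-difference matrix used for $\mathbf{D}$, the value of $\lambda$ and all function names are assumptions chosen for illustration.

```python
def transpose_times(A, B):
    """Return A^T B for matrices stored as lists of rows."""
    return [[sum(A[k][i] * B[k][j] for k in range(len(A)))
             for j in range(len(B[0]))] for i in range(len(A[0]))]

def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [bi] for row, bi in zip(A, b)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[piv] = M[piv], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (M[i][n] - sum(M[i][j] * x[j] for j in range(i + 1, n))) / M[i][i]
    return x

def tikhonov_restore(H, D, g, lam):
    """Return the minimiser of ||g - H f||^2 + lam ||D f||^2, as in (8)."""
    HtH, DtD = transpose_times(H, H), transpose_times(D, D)
    n = len(HtH)
    A = [[HtH[i][j] + lam * DtD[i][j] for j in range(n)] for i in range(n)]
    Htg = [sum(H[k][i] * g[k] for k in range(len(H))) for i in range(n)]
    return solve(A, Htg)

def cost(H, D, g, f, lam):
    """Criterion J_1(f) of (9)."""
    def sq_norm(M, v, target):
        return sum((sum(a * x for a, x in zip(row, v)) - t) ** 2
                   for row, t in zip(M, target))
    return sq_norm(H, f, g) + lam * sq_norm(D, f, [0.0] * len(D))

n = 8
# Hypothetical 3-tap blur and first-order difference operator.
H = [[1.0 / 3 if abs(i - j) <= 1 else 0.0 for j in range(n)] for i in range(n)]
D = [[-1.0 if j == i else 1.0 if j == i + 1 else 0.0 for j in range(n)]
     for i in range(n - 1)]
f_true = [0.0, 0.0, 1.0, 1.0, 1.0, 1.0, 0.0, 0.0]
g = [sum(H[i][j] * f_true[j] for j in range(n)) for i in range(n)]  # noise-free data
f_hat = tikhonov_restore(H, D, g, lam=1e-3)
```

The quadratic penalty smooths the whole signal uniformly, including the edges between regions, which is the limitation that the region-based priors of the following sections are meant to address.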

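For more general cases, the MAP estimate is used:

$$\hat{\mathbf{f}} = \arg\max_{\mathbf{f}}\; p(\mathbf{f}|\boldsymbol{\theta},\mathbf{g};\mathcal{M}) = \arg\min_{\mathbf{f}}\; \{J_1(\mathbf{f})\} \tag{10}$$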


Ayasso H. and Mohammad-Djafari A. (2008).

VARIATIONAL BAYES WITH GAUSS-MARKOV-POTTS PRIOR MODELS FOR JOINT IMAGE RESTORATION AND SEGMENTATION.

In Proceedings of the Third International Conference on Computer Vision Theory and Applications, pages 571-576

DOI: 10.5220/0001091805710576

Copyright © SciTePress

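In this case,

$$J_1(\mathbf{f}) = -\ln p(\mathbf{g}|\mathbf{f},\theta_\epsilon;\mathcal{M}) - \ln p(\mathbf{f}|\boldsymbol{\theta}_2;\mathcal{M}) = \|\mathbf{g} - \mathbf{H}\mathbf{f}\|^2 + \lambda\,\Omega(\mathbf{f}) \tag{11}$$

where $\lambda = 1/\theta_\epsilon$ and $\Omega(\mathbf{f}) = -\ln p(\mathbf{f}|\boldsymbol{\theta}_2;\mathcal{M})$. Two families of priors can be distinguished, separable:

$$p(\mathbf{f}) \propto \exp\Big[-\theta_f \sum_j \phi(f_j)\Big] \tag{12}$$

and Markovian:

$$p(\mathbf{f}) \propto \exp\Big[-\theta_f \sum_j \phi(f_j - f_{j-1})\Big] \tag{13}$$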

where different expressions have been used for the potential function $\phi(\cdot)$ (Bouman and Sauer, 1993; Green, 1990; Geman and McClure, 1985), with great success in many applications.
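To make the role of the potential function concrete, the sketch below evaluates the Markovian energy of (13) for three potentials commonly associated with the cited works: a generalized Gaussian $|t|^p$, a log-cosh potential and a bounded potential $t^2/(\delta^2 + t^2)$. The exact parametrizations, the default values of `p` and `delta` and the test signals are assumptions of this example rather than choices made in the paper.

```python
import math

def phi_generalized_gaussian(t, p=1.1):
    """|t|^p with 1 <= p <= 2 (form associated with Bouman and Sauer, 1993)."""
    return abs(t) ** p

def phi_log_cosh(t, delta=1.0):
    """log cosh(t / delta) (form associated with Green, 1990)."""
    return math.log(math.cosh(t / delta))

def phi_geman_mcclure(t, delta=1.0):
    """t^2 / (delta^2 + t^2) (form associated with Geman and McClure, 1985)."""
    return t * t / (delta * delta + t * t)

def markov_energy(f, phi, theta_f=1.0):
    """theta_f * sum_j phi(f_j - f_{j-1}): minus the exponent of (13)."""
    return theta_f * sum(phi(f[j] - f[j - 1]) for j in range(1, len(f)))

# Two signals with the same total rise: a sharp edge and a smooth ramp.
step = [0.0, 0.0, 0.0, 3.0, 3.0, 3.0]
ramp = [0.0, 0.6, 1.2, 1.8, 2.4, 3.0]
for name, phi in [("quadratic", lambda t: t * t),
                  ("generalized Gaussian", phi_generalized_gaussian),
                  ("log cosh", phi_log_cosh),
                  ("Geman-McClure", phi_geman_mcclure)]:
    print(name, markov_energy(step, phi), markov_energy(ramp, phi))
```

A quadratic potential assigns a higher energy (lower prior probability) to the sharp edge than to the ramp, whereas the bounded potential does the opposite, which is why such potentials are described as edge-preserving.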

Still, this family of priors cannot give a precise model for the unknown image in many applications, because it assumes global image homogeneity. For this reason, we have chosen in this paper to use a non-homogeneous prior model which takes into account the assumption that the unknown image is composed of a finite number of homogeneous materials. This implies the introduction of a hidden image $\mathbf{z} = \{z(r), r \in \mathcal{R}\}$ which associates each pixel $f(r)$ with a label (class) $z(r)$, where $\mathcal{R}$ represents the whole surface of the image. All pixels with the same label $z(r) = k$ share the same properties. We use a Potts model to represent the dependence between the hidden variable pixels, as we will see in the next section. Meanwhile, we propose two models for the unknown image $\mathbf{f}$: an independent mixture of Gaussians and a Gauss-Markov model. However, this choice of prior makes it impossible to obtain an analytical expression for the maximum a posteriori (MAP) or posterior mean (PM) estimators. Consequently, we use the variational Bayes technique to calculate an approximate form of the posterior law.

The rest of this paper is organized as follows. In section 2, we give more details about the proposed prior models. In section 3, we employ these priors in the Bayesian framework to obtain a joint posterior law of the unknowns (image pixels, hidden variable, and the hyperparameters, including the region statistical parameters and the noise variance). Then in section 4, we use the variational Bayes approximation in order to obtain a tractable approximation of the joint posterior law. In section 5, we show an image restoration example. Finally, we conclude this work in section 6.

2 PROPOSED GAUSS-MARKOV-POTTS PRIOR MODELS

As introduced in the previous section, the main assumption here is the piecewise homogeneity of the restored image. This model corresponds to a number of applications where the studied image is composed of a finite number of materials, for example muscle and bone, or gray and white matter, in medical images. Another application is non-destructive testing (NDT) imaging in industrial applications, where the studied materials are, in general, composed of air-metal or air-metal-composite. This prior model has already been used in several works for several applications (Mohammad-Djafari, Humblot and Mohammad-Djafari, 2006; Féron et al., 2005).

In fact, this assumption makes it possible to associate a label (class) $z(r)$ with each pixel of the image $\mathbf{f}$. The set of these labels $\mathbf{z}$ forms a $K$-color image, where $K$ corresponds to the number of materials and $\mathcal{R}$ represents the entire image pixel area. We call this discrete-valued variable a hidden field; it represents the segmentation of the image.

Moreover, all pixels $\mathbf{f}_k = \{f(r), r \in \mathcal{R}_k\}$ which have the same label $k$ share the same probabilistic parameters (class means $m_k$ and class variances $v_k$), with $\cup_k \mathcal{R}_k = \mathcal{R}$. These pixels have a spatial structure, while we assume here that pixels from different classes are a priori independent, which is natural since they belong to different materials. This will be a key assumption when introducing the Gauss-Markov prior model of the image later in this section.

Using the former assumption, we can give the prior probability law of a pixel, knowing its class, as a Gaussian (homogeneity inside the same class):

$$p(f(r)|z(r) = k, m_k, v_k) = \mathcal{N}(m_k, v_k) \tag{14}$$

This gives a Mixture of Gaussians (MoG) model for the pixel law $p(f(r))$, which can be written as follows:

$$p(f(r)) = \sum_k a_k\, \mathcal{N}(m_k, v_k) \quad \text{with} \quad a_k = P(z(r) = k) \tag{15}$$

Another important point is the prior modeling of

the spatial interaction between different elements of

prior model. This study is concerned with two in-

teractions, pixels of images within the same class

f = { f(r),r ∈ R } and elements of hidden variable

z = {z(r), r ∈ R }. In this paper, we assign Potts

model for hidden field z in order to obtain more ho-

mogeneous classes in the image. Meanwhile, we

present two models for the image pixels f; the first

is independent, while the second is Gauss-Markov

model. In the following, we give the prior probability

VISAPP 2008 - International Conference on Computer Vision Theory and Applications

572

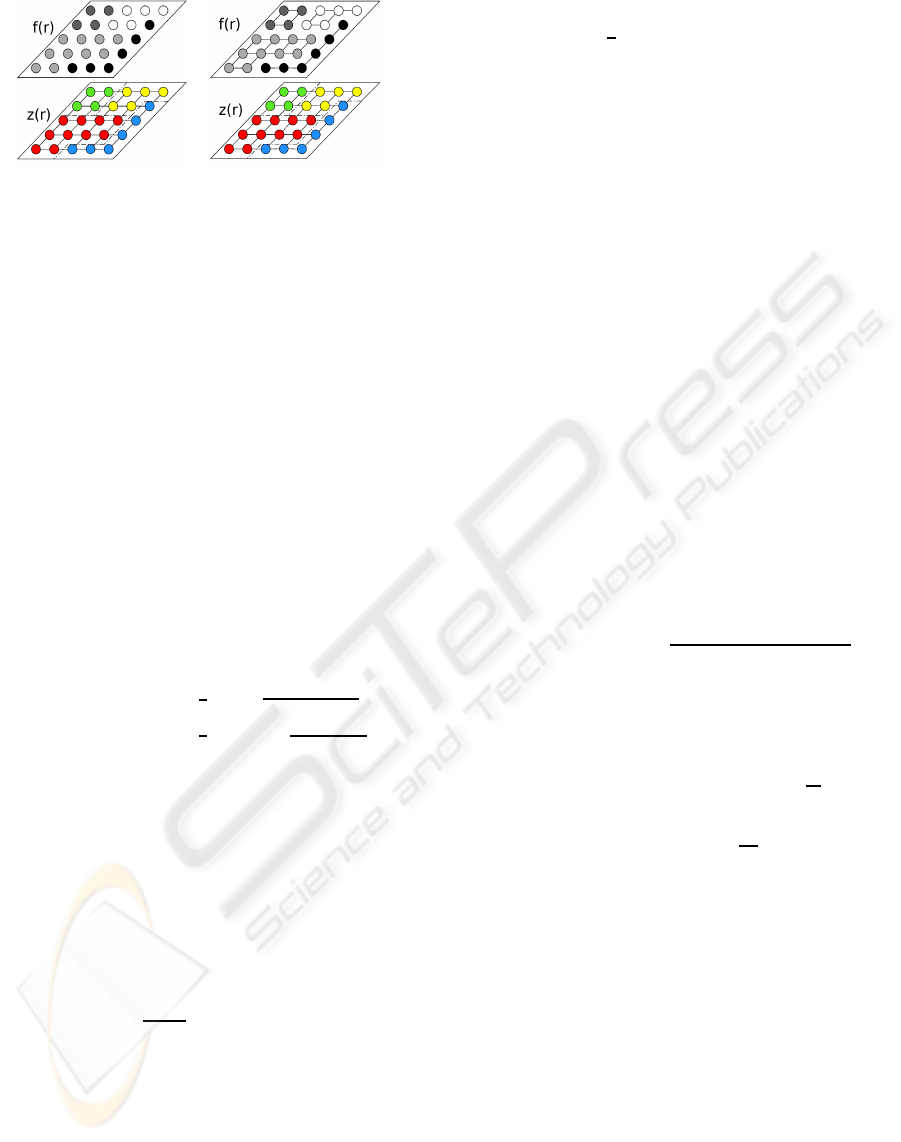

MIG MGM

Figure 1: Proposed a priori model for the images: the im-

age pixels f(r) are assumed to be classified in K classes,

z(r) represents thoses classes (segmentation). In MIG prior,

we assume the image pixels in each class to be independent

while in MGM prior, image pixels these are considered de-

pendent. In both cases, the hidden field values follows Potts

model in the two cases.

of image pixels and hidden field elements for the two

models.

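Case 1: Mixture of Independent Gaussians (MIG):

In this case, no prior dependence is assumed for the elements of the image $\mathbf{f}|\mathbf{z}$:

$$\begin{aligned}
p(f(r)|z(r) = k) &= \mathcal{N}(m_k, v_k), \quad \forall r \in \mathcal{R} \\
p(\mathbf{f}|\mathbf{z}, m_z(r), v_z(r)) &= \prod_{r \in \mathcal{R}} \mathcal{N}(m_z(r), v_z(r))
\end{aligned} \tag{16}$$

with $m_z(r) = m_k, \forall r \in \mathcal{R}_k$, $v_z(r) = v_k, \forall r \in \mathcal{R}_k$, and

$$\begin{aligned}
p(\mathbf{f}|\mathbf{z},\mathbf{m},\mathbf{v}) &= \prod_{r \in \mathcal{R}} \mathcal{N}(m_z(r), v_z(r)) \\
&\propto \exp\Big[-\frac{1}{2} \sum_{r \in \mathcal{R}} \frac{(f(r) - m_z(r))^2}{v_z(r)}\Big] \\
&\propto \exp\Big[-\frac{1}{2} \sum_k \sum_{r \in \mathcal{R}_k} \frac{(f(r) - m_k)^2}{v_k}\Big]
\end{aligned} \tag{17}$$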
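The MIG prior is simple enough to be sampled and evaluated directly. The following minimal sketch draws an image from (16) given a label field and evaluates its log-density, including the normalising constants that (17) leaves out. Pixels are stored in a flat list, and the label field, class parameters and function names are hypothetical values chosen for the example.

```python
import math
import random

def sample_mig(z, m, v, rng):
    """Draw f(r) ~ N(m_k, v_k) independently for each pixel, with k = z(r)."""
    return [rng.gauss(m[k], math.sqrt(v[k])) for k in z]

def log_prior_mig(f, z, m, v):
    """log p(f | z, m, v) for the independent model (16), with constants."""
    return sum(-0.5 * math.log(2.0 * math.pi * v[k]) - 0.5 * (fr - m[k]) ** 2 / v[k]
               for fr, k in zip(f, z))

z = [0, 0, 0, 1, 1, 1, 0, 0]      # hypothetical label field (flattened)
m = [0.0, 5.0]                    # class means m_k
v = [1.0, 4.0]                    # class variances v_k
f = sample_mig(z, m, v, random.Random(1))
print(log_prior_mig(f, z, m, v))
```

Because the pixels are conditionally independent given $\mathbf{z}$, the log-density is a plain sum over pixels; all spatial regularity in this model comes from the Potts prior on the labels.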

Case 2: Mixture of Gauss-Markovs (MGM):

We present here a more sophisticated model for the interaction between image pixels, where we keep the independence between pixels of different classes. The pixels of a region are assumed to be Markovian with respect to their four nearest neighbours:

$$p(f(r)|z(r), f(r'), z(r'), r' \in \mathcal{V}(r)) = \mathcal{N}(\mu_z(r), v_z(r)) \tag{18}$$

with

$$\begin{aligned}
\mu_z(r) &= \frac{1}{|\mathcal{V}(r)|} \sum_{r' \in \mathcal{V}(r)} \mu^*_z(r') \\
\mu^*_z(r') &= \begin{cases} m_z(r) & \text{if } z(r') \neq z(r) \\ f(r') & \text{if } z(r') = z(r) \end{cases} \\
v_z(r) &= v_k, \quad \forall r \in \mathcal{R}_k
\end{aligned} \tag{19}$$

We may remark that $\mathbf{f}|\mathbf{z}$ is a non-homogeneous Gauss-Markov field because the means $\mu_z(r)$ are functions of the pixel position $r$.
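The conditional mean in (19) can be sketched as follows for an image stored as a list of rows. Neighbours with the same label contribute their own value and neighbours with a different label contribute the class mean of the current pixel. Truncating the neighbourhood at the image border is an assumption of this sketch, as are the function name and the numerical values.

```python
def mgm_conditional_mean(f, z, m, i, j):
    """Mean mu_z(r) of (19) at pixel r = (i, j) with a 4-neighbour system.

    f: image (list of rows), z: label field, m: class means m_k.
    The neighbourhood V(r) is truncated at the border (assumption).
    """
    k = z[i][j]
    contributions = []
    for di, dj in ((-1, 0), (1, 0), (0, -1), (0, 1)):
        a, b = i + di, j + dj
        if 0 <= a < len(f) and 0 <= b < len(f[0]):
            # Same class: use the neighbour's value. Other class: use m_k.
            contributions.append(f[a][b] if z[a][b] == k else m[k])
    return sum(contributions) / len(contributions)

z = [[0, 0, 1],
     [0, 0, 1],
     [0, 0, 1]]
f = [[1.0, 2.0, 9.0],
     [3.0, 4.0, 8.0],
     [5.0, 6.0, 7.0]]
m = [2.5, 8.0]
print(mgm_conditional_mean(f, z, m, 1, 1))  # three same-class neighbours, one replaced by m[0]
```

Replacing the cross-class neighbours by the class mean is what keeps regions a priori independent: a pixel on a region boundary is never pulled towards the values of the neighbouring material.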

For both image models, a Potts Markov model is used to describe the prior law of the hidden field:

$$p(\mathbf{z}|\gamma) \propto \exp\Big[\sum_{r \in \mathcal{R}} \Phi(z(r)) + \frac{1}{2}\gamma \sum_{r \in \mathcal{R}} \sum_{r' \in \mathcal{V}(r)} \delta(z(r) - z(r'))\Big] \tag{20}$$

where $\Phi(z(r))$ is the energy of the singleton cliques and $\gamma$ is the Potts constant.

The hyperparameters of the model are the class means $m_k$, the variances $v_k$, and finally the singleton clique energies $\alpha_z(r) = \Phi(z(r))$.
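From (20), the conditional law of one label given its neighbours follows directly: each neighbouring pair appears twice in the double sum, so the factor $\frac{1}{2}$ cancels and the exponent for label $k$ is $\Phi(k) + \gamma \sum_{r' \in \mathcal{V}(r)} \delta(k - z(r'))$. The sketch below computes this conditional for a 4-neighbour system; the border handling, the function name and the argument `alpha` (holding the singleton energies per class) are assumptions of the example.

```python
import math

def potts_conditional(z, i, j, K, gamma, alpha=None):
    """Return [P(z(r) = k | neighbours) for k in 0..K-1] under (20).

    z: label field (list of rows); alpha[k] plays the role of the
    singleton energy Phi(k) and defaults to zero for every class.
    """
    alpha = alpha or [0.0] * K
    neighbours = [z[a][b]
                  for a, b in ((i - 1, j), (i + 1, j), (i, j - 1), (i, j + 1))
                  if 0 <= a < len(z) and 0 <= b < len(z[0])]
    weights = [math.exp(alpha[k] + gamma * sum(1 for n in neighbours if n == k))
               for k in range(K)]
    total = sum(weights)
    return [w / total for w in weights]

z = [[0, 0, 0],
     [0, 1, 0],
     [0, 0, 0]]
print(potts_conditional(z, 1, 1, K=2, gamma=1.0))
```

With $\gamma = 0$ the labels are independent and governed only by the singleton energies; increasing $\gamma$ makes an isolated label, such as the centre pixel above, increasingly improbable, which is how the Potts prior favours compact regions.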

3 BAYESIAN JOINT RECONSTRUCTION, SEGMENTATION AND CHARACTERIZATION

So far, we have presented two prior models for the unknown image, based on the assumption that the studied object is composed of a known number of materials. This led us to introduce a hidden field which assigns to each pixel a label corresponding to its material. Thus, each material can be characterized by its statistical properties $(m_k, v_k, \alpha_k)$. Now, in order to estimate the unknown image and its hidden field, we use the Bayesian framework to calculate the joint posterior law:

$$p(\mathbf{f},\mathbf{z}|\boldsymbol{\theta},\mathbf{g};\mathcal{M}) = \frac{p(\mathbf{g}|\mathbf{f},\boldsymbol{\theta}_1)\; p(\mathbf{f}|\mathbf{z},\boldsymbol{\theta}_2)\; p(\mathbf{z}|\boldsymbol{\theta}_3)}{p(\mathbf{g}|\boldsymbol{\theta})} \tag{21}$$

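This requires the knowledge of $p(\mathbf{f}|\mathbf{z},\boldsymbol{\theta}_2)$ and $p(\mathbf{z}|\boldsymbol{\theta}_3)$, which we have already provided in the previous section, and of the model likelihood $p(\mathbf{g}|\mathbf{f},\boldsymbol{\theta}_1)$, which depends on the error model. The usual choice for the error is a zero-mean Gaussian with variance $1/\theta_\epsilon$, which gives:

$$p(\mathbf{g}|\mathbf{f},\theta_\epsilon) = \mathcal{N}\Big(\mathbf{H}\mathbf{f}, \frac{1}{\theta_\epsilon}\mathbf{I}\Big) \tag{22}$$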

The previous calculation assumes that the values of the hyperparameters are known, which is not true in many practical applications. Consequently, these parameters have to be estimated jointly with the unknown image. This is possible within the Bayesian framework: we just need to assign a prior model to each of the hyperparameters and write the joint posterior law

$$p(\mathbf{f},\mathbf{z},\boldsymbol{\theta}|\mathbf{g};\mathcal{M}) \propto p(\mathbf{g}|\mathbf{f},\boldsymbol{\theta}_1;\mathcal{M})\; p(\mathbf{f}|\mathbf{z},\boldsymbol{\theta}_2;\mathcal{M})\; p(\mathbf{z}|\boldsymbol{\theta}_3;\mathcal{M})\; p(\boldsymbol{\theta}|\mathcal{M}) \tag{23}$$

where $\boldsymbol{\theta}$ groups all the hyperparameters that need to be estimated, namely the means $m_k$, the variances $v_k$, the singleton energies $\alpha_k$, and the inverse error variance $\theta_\epsilon$. The Potts constant $\gamma$, however, is kept fixed because of the difficulty of finding a conjugate prior for it. We choose a Gamma law for the inverse error variance $\theta_\epsilon$ (equivalently, an inverse Gamma law for the error variance), a Gaussian law for the means $m_k$, an inverse Gamma law for the variances $v_k$ (a Gamma law for $v_k^{-1}$), and finally a Dirichlet law for $\boldsymbol{\alpha}$:

$$\begin{aligned}
p(\theta_\epsilon|a_{e0}, b_{e0}) &= \mathcal{G}(a_{e0}, b_{e0}) \\
p(m_k|m_0, v_0) &= \mathcal{N}(m_0, v_0), \quad \forall k \\
p(v_k^{-1}|a_0, b_0) &= \mathcal{G}(a_0, b_0), \quad \forall k \\
p(\boldsymbol{\alpha}|\alpha_0) &= \mathcal{D}(\alpha_0, \cdots, \alpha_0)
\end{aligned} \tag{24}$$

where $a_{e0}$, $b_{e0}$, $m_0$, $v_0$, $a_0$, $b_0$ and $\alpha_0$ are fixed for a given problem. This choice of conjugate priors is very helpful for the calculations performed in the next section.

4 BAYESIAN COMPUTATION

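In the previous section, we have gathered the ingredients needed to obtain the expression of the joint posterior law. However, neither the joint maximum a posteriori (JMAP) estimate

$$(\hat{\mathbf{f}}, \hat{\mathbf{z}}, \hat{\boldsymbol{\theta}}) = \arg\max_{(\mathbf{f},\mathbf{z},\boldsymbol{\theta})}\; p(\mathbf{f},\mathbf{z},\boldsymbol{\theta}|\mathbf{g};\mathcal{M}) \tag{25}$$

nor the Posterior Means (PM)

$$\begin{aligned}
\hat{\mathbf{f}} &= \sum_{\mathbf{z}} \iint \mathbf{f}\; p(\mathbf{f},\mathbf{z},\boldsymbol{\theta}|\mathbf{g};\mathcal{M})\, d\mathbf{f}\, d\boldsymbol{\theta} \\
\hat{\boldsymbol{\theta}} &= \sum_{\mathbf{z}} \iint \boldsymbol{\theta}\; p(\mathbf{f},\mathbf{z},\boldsymbol{\theta}|\mathbf{g};\mathcal{M})\, d\mathbf{f}\, d\boldsymbol{\theta} \\
\hat{\mathbf{z}} &= \sum_{\mathbf{z}} \iint \mathbf{z}\; p(\mathbf{f},\mathbf{z},\boldsymbol{\theta}|\mathbf{g};\mathcal{M})\, d\mathbf{f}\, d\boldsymbol{\theta}
\end{aligned} \tag{26}$$

can be obtained in analytical form. We therefore explore two approaches to solve this problem: Monte Carlo techniques and the variational Bayes approximation.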

Numerical Exploration and Integration via Monte Carlo techniques. This method aims to solve the previous problem by generating a large number of representative samples from the posterior law and then calculating the desired estimators numerically from these samples. The main difficulty lies in the generation of those samples. Markov Chain Monte Carlo (MCMC) samplers are generally used in this domain, and they are of great interest because they explore the whole space of the joint posterior. However, the major drawback of this non-parametric approach is its computational cost: a large number of iterations is needed to reach convergence, and then many samples must be generated to obtain a good estimate of the parameters.

Variational or Separable Approximation Techniques. One of the main difficulties in obtaining an analytical estimator is the posterior dependence between the sought parameters. For this reason, in this kind of method we propose a separable form for the joint posterior law, and then we try to find the separable law that is closest to the original posterior under this constraint.

The idea of approximating a joint probability law $p(\mathbf{x})$ by a separable law $q(\mathbf{x}) = \prod_j q_j(x_j)$ is not new (Ghahramani and Jordan, 1997; Penny and Roberts, 1998; Roberts et al., 1998; Penny and Roberts, 1999). How to do this, and the particular choices of parametric families for $q_j(x_j)$ for which the computations can be done easily, have been addressed more recently in many data mining and classification problems (Penny and Roberts, 2002; Roberts and Penny, 2002; Penny and Friston, 2003; Choudrey and Roberts, 2003; Penny et al., 2003; Nasios and Bors, 2004; Nasios and Bors, 2006; Friston et al., 2006). However, the use of these techniques for Bayesian computation in inverse problems in general, and in image restoration in particular, with this class of prior models, is the original contribution of this paper.

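To give a synthetic presentation of the approach, we consider the problem of approximating a joint pdf $p(\mathbf{x}|\mathcal{M})$ by a separable pdf $q(\mathbf{x}) = \prod_j q_j(x_j)$. The first step in this approximation is to choose a criterion. A natural criterion is the Kullback-Leibler divergence:

$$\begin{aligned}
\mathrm{KL}(q : p) &= \int q(\mathbf{x}) \ln \frac{q(\mathbf{x})}{p(\mathbf{x}|\mathcal{M})}\, d\mathbf{x} \\
&= -\mathcal{H}(q) - \langle \ln p(\mathbf{x}|\mathcal{M}) \rangle_{q(\mathbf{x})} \\
&= -\sum_j \mathcal{H}(q_j) - \langle \ln p(\mathbf{x}|\mathcal{M}) \rangle_{q(\mathbf{x})}
\end{aligned} \tag{27}$$

So, the main mathematical problem to study is finding $\hat{q}(\mathbf{x})$ which minimizes $\mathrm{KL}(q : p)$.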

Using properties of the exponential family, this functional optimization problem can be solved as follows:

$$q_j(x_j) = \frac{1}{C_j} \exp\Big[\langle \ln p(\mathbf{x}|\mathcal{M}) \rangle_{q_{-j}}\Big] \tag{28}$$

where $q_{-j} = \prod_{i \neq j} q_i(x_i)$ and $C_j$ are the normalizing factors.
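A standard toy problem helps to see how such coupled updates behave. When the target is a bivariate Gaussian $\mathcal{N}(\boldsymbol{\mu}, \boldsymbol{\Lambda}^{-1})$ and $q(x_1, x_2) = q_1(x_1) q_2(x_2)$, taking each factor proportional to the exponential of the expected log joint gives Gaussian factors with fixed variances $1/\Lambda_{jj}$ and means that depend on each other, so they have to be iterated. The sketch below is this textbook example, not the algorithm of the paper; the function name and the numerical values are assumptions.

```python
def mean_field_gaussian(mu, Lam, n_iter=50):
    """Separable approximation of N(mu, Lam^{-1}) in two dimensions.

    Each factor q_j is Gaussian with variance 1 / Lam[j][j]; its mean m[j]
    depends on the current mean of the other factor, so the two updates
    are applied alternately.
    """
    m = [0.0, 0.0]
    for _ in range(n_iter):
        m[0] = mu[0] - Lam[0][1] * (m[1] - mu[1]) / Lam[0][0]
        m[1] = mu[1] - Lam[1][0] * (m[0] - mu[0]) / Lam[1][1]
    variances = [1.0 / Lam[0][0], 1.0 / Lam[1][1]]
    return m, variances

mu = [1.0, -2.0]
Lam = [[2.0, 0.8],
       [0.8, 1.0]]                 # precision matrix of the target
m, variances = mean_field_gaussian(mu, Lam)
det = Lam[0][0] * Lam[1][1] - Lam[0][1] * Lam[1][0]
true_var_x1 = Lam[1][1] / det      # exact marginal variance of x_1
print(m, variances, true_var_x1)
```

In this example the iterated means converge to the exact mean, while the factor variances are smaller than the exact marginal variances: the separable approximation captures the location of the posterior but ignores the correlation between the variables.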

However, we may note two points. First, the expression of $q_j(x_j)$ depends on the expressions of $q_i(x_i)$, $i \neq j$; thus the computation can only be done in an iterative way. Second, to be able to compute these solutions we must be able to compute $\langle \ln p(\mathbf{x}|\mathcal{M}) \rangle_{q_{-j}}$. The only families for which these computations can be done easily are the conjugate exponential families. Here we see the importance of our choice of priors in the previous section.

There is no rule for choosing the appropriate separation; nevertheless, this choice must conserve the strong dependences between variables and break the weak ones, keeping in mind the computational complexity of the posterior law. In this work, we propose a strongly separated posterior, where only the dependence between image pixels and hidden field is conserved. With this choice, the posterior law can be obtained more easily:

$$q(\mathbf{f},\mathbf{z},\boldsymbol{\theta}) = \prod_r \big[q(f(r)|z(r))\big] \prod_r \big[q(z(r))\big] \prod_l q(\theta_l) \tag{29}$$

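Applying the approximate posterior expression (eq. 28) to $p(\mathbf{f},\mathbf{z},\boldsymbol{\theta}|\mathbf{g};\mathcal{M})$, we see that the optimal solution for $q(\mathbf{f},\mathbf{z},\boldsymbol{\theta})$ has the following form:

$$\begin{aligned}
q(\mathbf{f}|\mathbf{z}) &= \prod_r \mathcal{N}(\tilde{\mu}_z(r), \tilde{v}_z(r)) \\
q(\mathbf{z}) &= \prod_r \hat{\alpha}_k(r) = \prod_r q(z(r)|\tilde{z}(r'), r' \in \mathcal{V}(r)) \\
q(z(r)|\tilde{z}(r')) &\propto \tilde{c}\; \tilde{d}_1\; \tilde{d}_2(r)\; \tilde{e}(r) \\
q(\theta_\epsilon|\tilde{\alpha}_e, \tilde{\beta}_e) &= \mathcal{G}(\tilde{\alpha}_e, \tilde{\beta}_e) \\
q(m_k|\tilde{m}_k, \tilde{v}_k) &= \mathcal{N}(\tilde{m}_k, \tilde{v}_k), \quad \forall k \\
q(v_k^{-1}|\tilde{a}_k, \tilde{b}_k) &= \mathcal{G}(\tilde{a}_k, \tilde{b}_k), \quad \forall k \\
q(\boldsymbol{\alpha}) &\propto \mathcal{D}(\tilde{\alpha}_1, \cdots, \tilde{\alpha}_K)
\end{aligned} \tag{30}$$

where all the tilded quantities are defined below.

For the approximate posterior of the unknown image, we distinguish two different results according to the prior model used.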

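Case 1: MIG

$$\begin{aligned}
\tilde{\mu}_z(r) &= \tilde{v}_z(r)\, \frac{\tilde{m}_k}{\bar{v}_k} + \tilde{v}_z(r)\, \theta_\epsilon \sum_s H(s,r)\big(g(s) - \hat{g}_{-r}(s)\big) \\
\tilde{v}_z(r) &= \Big[\bar{v}_k^{-1} + \theta_\epsilon \sum_s H^2(s,r)\Big]^{-1} \\
\hat{g}_{-r}(s) &= \sum_{t \neq r} H(s,t)\, \tilde{f}(t) \\
\bar{v}_k &= \langle v_k \rangle = (\tilde{a}_k \tilde{b}_k)^{-1}
\end{aligned} \tag{31}$$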
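The per-pixel update of the MIG case can be written in a few lines. The sketch below follows the terms of (31) as read from the text: the data prediction excluding pixel $r$, the posterior variance and the posterior mean. Dense storage of $\mathbf{H}$ as a list of rows, the function name and the argument names are assumptions made for illustration; a practical implementation would exploit the convolution structure of $\mathbf{H}$.

```python
def mig_pixel_update(H, g, f_tilde, r, m_k, v_bar_k, theta_eps):
    """Return (mu_tilde, v_tilde) of q(f(r) | z(r) = k), following (31).

    H: forward matrix (list of rows), g: data, f_tilde: current posterior
    means of all pixels, m_k: current class mean, v_bar_k: current class
    variance estimate, theta_eps: noise precision.
    """
    n_obs = len(H)
    # Prediction of the data using every pixel except r.
    g_hat = [sum(H[s][t] * f_tilde[t] for t in range(len(f_tilde)) if t != r)
             for s in range(n_obs)]
    v_tilde = 1.0 / (1.0 / v_bar_k
                     + theta_eps * sum(H[s][r] ** 2 for s in range(n_obs)))
    mu_tilde = v_tilde * (m_k / v_bar_k
                          + theta_eps * sum(H[s][r] * (g[s] - g_hat[s])
                                            for s in range(n_obs)))
    return mu_tilde, v_tilde

# Denoising special case (H = identity): the update reduces to the usual
# precision-weighted average of the class mean and the observation.
H = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
g = [1.0, 2.0, 3.0]
mu, var = mig_pixel_update(H, g, [0.0, 0.0, 0.0], r=1,
                           m_k=0.0, v_bar_k=1.0, theta_eps=1.0)
print(mu, var)
```

The update balances two sources of information: the class mean, weighted by the class precision, and the residual data not explained by the other pixels, weighted by the noise precision.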

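Case 2: MGM

$$\begin{aligned}
\tilde{\mu}_z(r) &= \tilde{v}_z(r)\, \frac{\tilde{\mu}^*_z(r)}{\bar{v}_k} + \tilde{v}_z(r)\, \theta_\epsilon \sum_s H(s,r)\big(g(s) - \hat{g}_{-r}(s)\big) \\
\tilde{\mu}^*_z(r) &= \frac{1}{|\mathcal{V}(r)|} \sum_{r' \in \mathcal{V}(r)} \Big[\delta\big(z(r') - \tilde{z}(r)\big)\, \tilde{f}(r') + \big(1 - \delta(z(r') - \tilde{z}(r))\big)\, \tilde{m}_z(r)\Big] \\
\tilde{v}_z(r) &= \Big[\bar{v}_k^{-1} + \theta_\epsilon \sum_s H^2(s,r)\Big]^{-1} \\
\hat{g}_{-r}(s) &= \sum_{t \neq r} H(s,t)\, \tilde{f}(t) \\
\bar{v}_k &= \langle v_k \rangle = (\tilde{a}_k \tilde{b}_k)^{-1}
\end{aligned} \tag{32}$$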

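Meanwhile, the posterior law of the hidden field remains the same in both cases, and is given by the following relations:

$$\begin{aligned}
\tilde{c} &= \exp\Big[\Psi(\tilde{\alpha}_k) - \Psi\Big(\sum_z \tilde{\alpha}_z\Big)\Big] \\
\tilde{d}_1 &= \exp\Big[\frac{1}{2}\big(\Psi(\tilde{b}_k) + \ln(\tilde{a}_k)\big)\Big] \\
\tilde{d}_2(r) &= \exp\Big[\frac{1}{2}\Big(\frac{\tilde{\mu}_k^2(r)}{\tilde{v}_k(r)} - \frac{m_k^2}{\bar{v}_k} - \ln(\tilde{v}_k(r))\Big)\Big] \\
\tilde{e}(r) &= \exp\Big[-\frac{1}{2}\gamma \sum_{r'} \Phi_m(r, r')\Big]
\end{aligned} \tag{33}$$

where $\Phi_m(\cdot,\cdot)$ is the class projection function; see (Ayasso and Mohammad-Djafari, 2007).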

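Finally, the posterior parameters of the hyperparameters are

$$\begin{aligned}
\tilde{\alpha}_e &= \Big[a_{e0}^{-1} + \frac{1}{2} \sum_r E\big((g(r) - \tilde{g}(r))^2\big)\Big]^{-1} \\
\tilde{\beta}_e &= b_{e0} + \sum_r \frac{1}{2} \\
\tilde{m}_k &= \tilde{v}_k \Big(\frac{m_0}{v_0} + \bar{v}_k \sum_r \hat{\alpha}_k(r)\, \tilde{\mu}_k(r)\Big) \\
\tilde{v}_k &= \Big[v_0^{-1} + \sum_r \hat{\alpha}_k(r)\Big]^{-1} \\
\tilde{a}_k &= \Big[a_0^{-1} + \frac{1}{2} \sum_r \hat{\alpha}_k(r)\, \tilde{u}_k(r)\Big]^{-1} \\
\tilde{u}_k(r) &= \tilde{\mu}_k^2(r) + \tilde{v}_k(r) + m_k^2 - 2 \tilde{m}_k \tilde{\mu}_k(r) \\
\tilde{\alpha}_k &= \alpha_0 + \sum_r \hat{\alpha}_k(r)
\end{aligned} \tag{34}$$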
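As a small worked instance of these updates, the sketch below evaluates the first two lines of (34) for the noise precision $\theta_\epsilon$. It assumes that $\mathcal{G}(a, b)$ is parametrized so that its mean is the product $ab$, which is the reading suggested by $\bar{v}_k = (\tilde{a}_k \tilde{b}_k)^{-1}$ in (31) but is not stated explicitly in the text. The function name, the prior values and the residuals are hypothetical.

```python
def noise_precision_update(sq_residuals, a_e0, b_e0):
    """Return (alpha_e, beta_e, mean precision) from the first lines of (34).

    sq_residuals[r] stands for E[(g(r) - g_tilde(r))^2].
    The posterior mean is taken as alpha_e * beta_e, which assumes that
    G(a, b) has mean a * b (an assumption of this sketch).
    """
    alpha_e = 1.0 / (1.0 / a_e0 + 0.5 * sum(sq_residuals))
    beta_e = b_e0 + 0.5 * len(sq_residuals)
    return alpha_e, beta_e, alpha_e * beta_e

# 100 pixels whose expected squared residual is 0.04 (noise variance 0.04),
# with a nearly non-informative prior.
alpha_e, beta_e, theta_mean = noise_precision_update([0.04] * 100,
                                                     a_e0=1e6, b_e0=1e-6)
print(theta_mean)
```

Under this reading, the estimated precision is close to the number of pixels divided by the sum of expected squared residuals, that is, the inverse of the empirical noise variance, as one would expect from a conjugate update.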

Several observations can be made on these results. The most important is that the problem of probability law optimization has turned into a simple parametric computation, which significantly reduces the computational burden. Moreover, despite the strong separation chosen, the dependence between the posterior mean values of image pixels and hidden field elements is present in the equations, which justifies the use of a spatially dependent prior model with this independent approximate posterior. On the other hand, the obtained values are mutually dependent, so the updates have to be iterated. One difficulty remains, which is the choice of an appropriate stopping criterion, a subject on which we are working.
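Since the text leaves the stopping rule open, the following sketch only illustrates one generic heuristic: iterate the coupled updates until the largest relative change of any parameter falls below a tolerance. It is not the authors' criterion. The function `update`, which stands for one full sweep over all the posterior parameters, and the default values of `tol` and `max_iter` are hypothetical.

```python
def iterate_until_stable(update, params, tol=1e-6, max_iter=500):
    """Repeat params = update(params) until the parameters stop moving.

    update: function mapping a list of parameters to its updated version
            (placeholder for one sweep of the coupled updates).
    Returns (params, number of iterations, converged flag).
    """
    for it in range(1, max_iter + 1):
        new = update(params)
        # Largest relative change over all parameters.
        change = max(abs(n - o) / (abs(o) + 1e-12) for n, o in zip(new, params))
        params = new
        if change < tol:
            return params, it, True
    return params, max_iter, False

# Toy fixed-point iteration x <- 0.5 x + 1, whose fixed point is x = 2.
params, n_iter, converged = iterate_until_stable(
    lambda p: [0.5 * x + 1.0 for x in p], [10.0])
print(params, n_iter, converged)
```

A criterion based on the change of the free energy (the negative of the KL criterion up to a constant) would be the more principled alternative, at the price of evaluating that quantity at every iteration.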

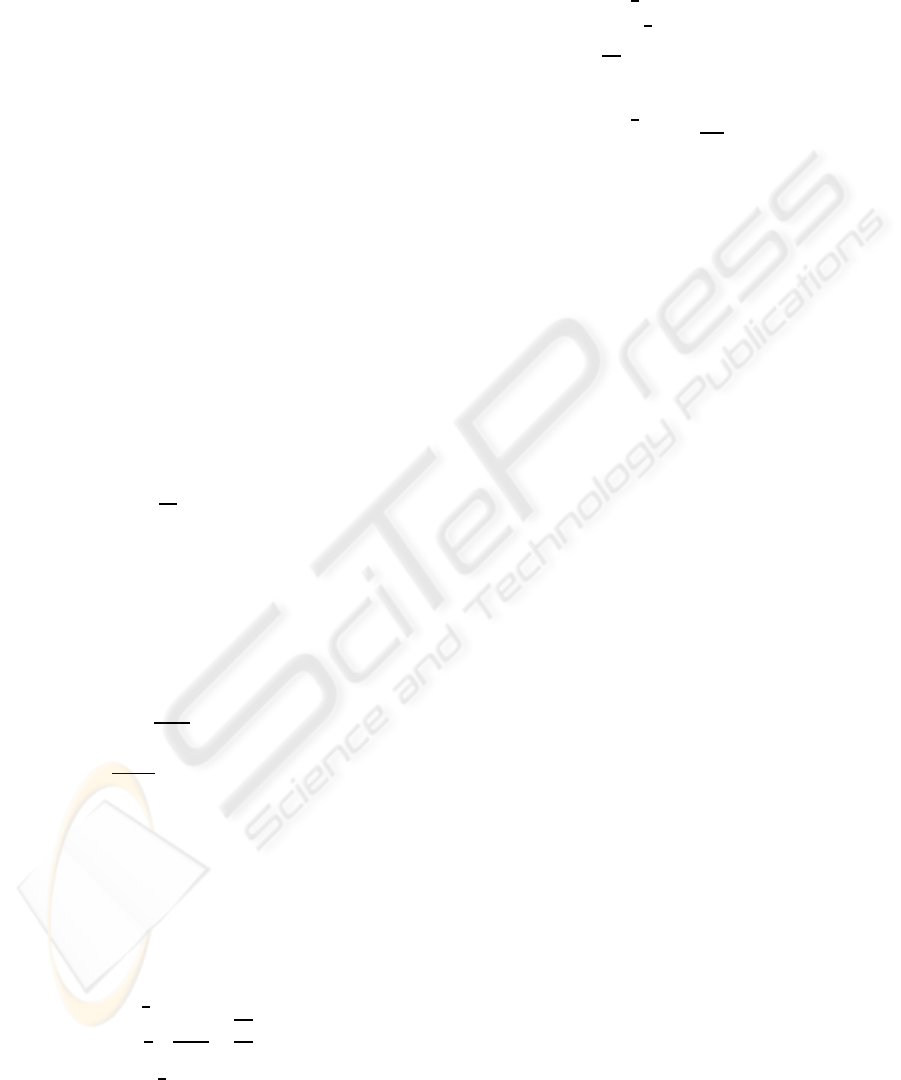

5 NUMERICAL EXPERIMENT RESULTS

In this section, we apply the proposed methods to a synthesized restoration problem. The original image, which is composed of two classes, is filtered by a square-shaped point spread function (PSF). White Gaussian noise is then added in order to obtain the distorted image. We have used the variational approximation method with Gauss-Markov-Potts priors to jointly restore and segment it. In comparison with the MCMC method, we note that the image quality is approximately the same. However, the computation time of the proposed method is considerably lower than that of MCMC.

6 CONCLUSIONS

A variational Bayes approximation is proposed in this paper for image restoration. We have introduced a hidden variable to give a more accurate prior model of the unknown image. Two priors, independent Gaussian and Gauss-Markov models, were studied with a Potts prior on the hidden field. This method was applied to a simple restoration problem, where it gave promising results.

Panels: Original image; Distorted image; Restored via MCMC; Restored via VB MGM; Initial segmentation; VB segmentation.

Figure 2: The proposed method is tested on a synthesized two-class distorted image. The number of classes in each method was set to 3. There is no great difference in quality between the two methods, VB MGM and MCMC. Meanwhile, the variational Bayes algorithm is noticeably faster than the MCMC sampler used here.

Still, a number of aspects of this method remain to be studied, including the convergence conditions, the choice of separation and the estimation of the Potts parameter.

REFERENCES

Ayasso, H. and Mohammad-Djafari, A. (2007). Approche bayésienne variationnelle pour les problèmes inverses. Application en tomographie microonde. Technical report, Rapport de stage Master ATS, Univ Paris Sud, L2S, SUPELEC.

Bouman, C. and Sauer, K. (1993). A generalized Gaussian image model for edge-preserving MAP estimation. IEEE Transactions on Image Processing, pages 296–310.

Choudrey, R. and Roberts, S. (2003). Variational Mixture of Bayesian Independent Component Analysers. Neural Computation, 15(1).

Friston, K., Mattout, J., Trujillo-Barreto, N., Ashburner, J., and Penny, W. (2006). Variational free energy and the Laplace approximation. NeuroImage, (2006.08.035). Available Online.

Geman, S. and McClure, D. (1985). Bayesian image analysis: Application to single photon emission computed tomography. American Statistical Association, pages 12–18.

Ghahramani, Z. and Jordan, M. (1997). Factorial Hidden Markov Models. Machine Learning, (29):245–273.

Green, P. (1990). Bayesian reconstructions from emission tomography data using a modified EM algorithm. IEEE Transactions on Medical Imaging, pages 84–93.

Nasios, N. and Bors, A. (2004). A variational approach for Bayesian blind image deconvolution. IEEE Transactions on Signal Processing, 52(8):2222–2233.

Nasios, N. and Bors, A. (2006). Variational learning for Gaussian mixture models. IEEE Transactions on Systems, Man and Cybernetics, Part B, 36(4):849–862.

Penny, W. and Friston, K. (2003). Mixtures of general linear models for functional neuroimaging. IEEE Transactions on Medical Imaging, 22(4):504–514.

Penny, W., Kiebel, S., and Friston, K. (2003). Variational Bayesian inference for fMRI time series. NeuroImage, 19(3):727–741.

Penny, W. and Roberts, S. (1998). Bayesian neural networks for classification: how useful is the evidence framework? Neural Networks, 12:877–892.

Penny, W. and Roberts, S. (1999). Dynamic models for nonstationary signal segmentation. Computers and Biomedical Research, 32(6):483–502.

Penny, W. and Roberts, S. (2002). Bayesian multivariate autoregressive models with structured priors. IEE Proceedings on Vision, Image and Signal Processing, 149(1):33–41.

Roberts, S., Husmeier, D., Penny, W., and Rezek, I. (1998). Bayesian approaches to Gaussian mixture modelling. IEEE Transactions on Pattern Analysis and Machine Intelligence, 20(11):1133–1142.

Roberts, S. and Penny, W. (2002). Variational Bayes for generalised autoregressive models. IEEE Transactions on Signal Processing, 50(9):2245–2257.

Tikhonov (1963). Solution of incorrectly formulated problems and the regularization method. Sov. Math., pages 1035–8.
