DETECTION OF ILLICIT TRAFFIC USING NEURAL NETWORKS

Paulo Salvador, António Nogueira, Ulisses França and Rui Valadas

University of Aveiro, Instituto de Telecomunicações, Aveiro, Portugal

Keywords:

Intrusion Detection System, Firewalls, Port Matching, Protocol Analysis, Syntactic and Semantic Analysis,

Traffic Signature, Traffic Pattern, Neural Networks.

Abstract:

The detection of compromised hosts is currently performed at the network and host levels, but each of these options presents important security flaws: at the host level, antivirus, anti-spyware and personal firewalls are ineffective in the detection of hosts that are compromised via new or target-specific malicious software, while at the network level, network firewalls and Intrusion Detection Systems were developed to protect the network from external attacks but were not designed to detect and protect against vulnerabilities that are already present inside the local area network. This paper presents a new approach for the identification of illicit traffic that tries to overcome some of the limitations of existing approaches, while being computationally efficient and easy to deploy. The approach is based on neural networks and is able to detect illicit traffic based on the historical traffic profiles presented by "licit" and "illicit" network applications. The evaluation of the proposed methodology relies on traffic traces obtained in a controlled environment and composed of licit traffic measured from normal activity of network applications and malicious traffic synthetically generated using the SubSeven backdoor. The results obtained show that the proposed methodology is able to achieve good identification results, being at the same time computationally efficient and easy to implement in real network scenarios.

1 INTRODUCTION

The detection of compromised machines is currently performed at two levels: network and PC. At the PC level, we have antivirus, anti-spyware and personal firewalls. All these systems are ineffective in the detection of hosts that are compromised via new or target-specific malicious software. Network firewalls and Intrusion Detection Systems (IDSs) (Denning, 1987; Mukherjee et al., 1994; Ilgun et al., 1995) were developed to protect the network from external attacks, but they are not designed to detect and protect against vulnerabilities that are already present inside the local area network. PC security is commonly compromised by the careless behavior of its legitimate users: a Trojan horse can get inside a PC via a rashly opened e-mail message or by browsing less safe web sites. In such cases, the firewall is not able to protect the network and its users because the attack is made using information that is embedded in licit traffic. IDSs analyze network traffic by looking for characteristic traffic profiles of compromised machines or their attacks; these profiles are called signatures. These signature-based systems are effective only if the compromised hosts do not simulate licit traffic. Nowadays, the main goal of taking control of network machines is the search and retrieval of classified information, so illicit applications keep themselves discreetly active, making their detection almost impossible by traditional IDSs. Antivirus and anti-spyware can only detect and remove already known viruses or Trojan horses. Illicit applications that were recently created are discreet and are not listed in any threat database, making their detection and removal virtually impossible. Personal firewalls could be an effective tool to avoid PC infection but they require a reasonable level of knowledge of the tool functionalities and special attention by the user, which rarely happens. A PC user may unknowingly open doors to a mechanism that turns his or her PC into a compromised machine. As soon as the system becomes compromised, the personal firewall becomes ineffective because total or partial control of the PC now belongs to other users.

This paper presents a new approach for the identification of illicit traffic/applications that tries to overcome some of the limitations of existing approaches, while being computationally efficient and easy to deploy. The approach is based on neural networks and detects illicit traffic based on the historical traffic profile presented by each network application. In a first phase, a neural network model is trained using data traces corresponding to unambiguously identified traffic profiles. These traces can result from traffic measurements collected in a real network operating scenario or from synthetically generated traffic obtained under totally controlled conditions. In a second phase, the trained model is used to identify new traffic profiles that are presented as inputs. The results obtained show that the proposed model is able to achieve good identification results. Besides, the computational burden is relatively small and the proposed methodology can be easily implemented in real network scenarios. Neural networks have been proposed to detect intrusions at host level (Debar et al., 1992; Ryan et al., 1997) and we extend this approach to detect illicit traffic at network level.

Paulo P., Nogueira A., França U. and Valadas R. (2008).

DETECTION OF ILLICIT TRAFFIC USING NEURAL NETWORKS.

In Proceedings of the International Conference on Security and Cryptography, pages 5-12

DOI: 10.5220/0001920800050012

Copyright © SciTePress

The proposed framework is intended to be incor-

porated in a future platform that will allow on-line de-

tection of illicit traffic: the platform should interact

with traffic analysis systems, should have a database

of utilization profiles from different network users,

an easy-to-update list of illicit traffic signatures and

a system of alert and counter measures.

It is known that several traditional techniques that have been used to identify IP applications have important drawbacks that limit or discourage their application: (i) port-based analysis presents some obvious limitations, since most applications allow users to change the default port numbers by manually selecting whatever port(s) they like, many newer applications are more inclined to use random ports, thus making ports unpredictable, and there is also a trend for applications to masquerade their function ports within well-known application ports; (ii) protocol analysis (Jiang et al., 2005; Chen and Laih, 2008) is ineffective since IP applications are continuously evolving and therefore their signatures can change, application developers can encrypt traffic, making protocol analysis more difficult, signature-based identification can affect network stability because it has to read and process all network traffic, and protocol analysis is not able to deal with confidentiality requirements; (iii) syntactic and semantic analysis of the data flows (Wang and Stolfo, 2004; Jiang et al., 2005) can be a burden to network stability due to its high processing requirements and is not appropriate when dealing with confidentiality requirements because, in these situations, it is not possible to have access to the packet contents.
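The limitation of port-based analysis described in item (i) can be illustrated with a minimal sketch. The port table, the flow descriptions and the function name `classify_by_port` are hypothetical and chosen only for illustration; they are not part of the framework proposed in this paper.

```python
# Hypothetical port table; a real registry would be far larger.
WELL_KNOWN_PORTS = {21: "FTP", 25: "SMTP", 80: "HTTP", 443: "HTTPS"}


def classify_by_port(dst_port: int) -> str:
    """Return the application registered for a port, or 'unknown'."""
    return WELL_KNOWN_PORTS.get(dst_port, "unknown")


# Illustrative flows: (description, destination port).
flows = [
    ("web browsing on the default port", 80),
    ("file sharing on a random port", 51413),
    ("backdoor masquerading on port 80", 80),
]

# Port matching cannot label the random-port flow and mislabels
# the masquerading flow as ordinary HTTP.
labels = {description: classify_by_port(port) for description, port in flows}
```

In this toy case the masquerading flow receives the same label as genuine web browsing, which is the weakness that motivates looking at traffic profiles instead of port numbers.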

The paper is organized as follows: section 2 presents the state of the art on intrusion detection and prevention systems; section 3 describes the main ideas behind the proposed detection approach and the details of the developed framework; section 4 presents and discusses the most important results and, finally, section 5 presents the main conclusions of this study.

2 STATE OF THE ART ON INTRUSION DETECTION AND PREVENTION

Intrusion detection systems (IDSs) are software platforms that aim to detect the undesirable remote manipulation of computers from the Internet. IDSs are used to detect several types of malicious traffic that cannot be detected by a conventional firewall, such as network attacks on vulnerable services, attacks directed at applications, unauthorized logins, access to files and malware distribution. An IDS includes several

components: (i) sensors, that generate security events;

(ii) a console, to monitor events and alerts and con-

trol the sensors; (iii) a central engine that saves the

events generated by sensors on a database and uses

the system rules to generate security alerts of the re-

ceived events. There are several types of IDSs: Net-

work Intrusion Detection System (NIDS), an inde-

pendent network platform that identifies intrusions by

examining network traffic; Protocol-based Intrusion

Detection System (PIDS), system or agent usually

located in front of a server that monitors and ana-

lyzes communication protocols between a device and

a server; Application Protocol-based Intrusion Detec-

tion System (APIDS), system or agent usually located

in front of a group of servers that monitors and an-

alyzes communication protocols that are specific to

a certain application; Host-based Intrusion Detection

System (HIDS), a PC agent that detects intrusions by

analyzing system calls, application logs, changes on

the file system, among other activities; Hybrid Intru-

sion Detection System (HyIDS), that combines one or

several of the above approaches. For example, Snort

or Prelude can operate as NIDS or HIDS.

An IDS is said to be passive if it simply detects

and alerts. When suspicious or malicious traffic is de-

tected an alert is generated and sent to the administra-

tor or user and it is up to them to take action to block

the activity or respond in some way. A reactive IDS

will not only detect suspicious or malicious traffic

and alert the administrator, but will take pre-defined

proactive actions to respond to the threat. Typically,

this means blocking any further network traffic from

the source IP address or user.

SECRYPT 2008 - International Conference on Security and Cryptography

IDSs can be signature-based or anomaly detection systems. A signature-based IDS will monitor

packets on the network and compare them against a

database of signatures or attributes from known mali-

cious threats. This is similar to the way most antivirus

software detects malware. The issue is that there will

be a lag between a new threat being discovered in

the wild and the signature for detecting that threat be-

ing applied to the IDS. During that lag time, the IDS

would be unable to detect the new threat. An IDS

which is anomaly based will monitor network traffic

and compare it against an established baseline. The

baseline will identify what is normal for that network

- what sort of bandwidth is generally used, what pro-

tocols are used, what ports and devices generally con-

nect to each other - and alert the administrator or user

when traffic is detected which is anomalous, that is, significantly different from the baseline.
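To make the idea of comparing traffic against a baseline concrete, the following is a minimal sketch under simple assumptions: the baseline is summarized by the mean and standard deviation of historical byte counts, and a value is flagged when it deviates by more than `k` standard deviations. The function names, the threshold `k` and the sample values are illustrative assumptions and do not describe any particular IDS product.

```python
import statistics


def build_baseline(samples):
    """Summarize historical per-interval byte counts as (mean, std)."""
    return statistics.mean(samples), statistics.pstdev(samples)


def is_anomalous(value, baseline, k=3.0):
    """Flag a value more than k standard deviations away from the mean."""
    mean, std = baseline
    if std == 0:
        return value != mean
    return abs(value - mean) > k * std


# Illustrative history of bytes observed per interval on a quiet link.
history = [1000, 1100, 950, 1050, 1020, 980]
baseline = build_baseline(history)

normal_flag = is_anomalous(1040, baseline)   # close to the baseline
burst_flag = is_anomalous(50000, baseline)   # far above the baseline
```

A real anomaly-based IDS would track many more attributes (protocols, ports, communicating devices), but the comparison against a learned reference follows the same pattern.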

An intrusion prevention system (IPS) is a com-

puter security device that exercises access control to

protect computers from exploitation. Intrusion pre-

vention technology is considered by some to be an

extension of IDS technology but it is actually an-

other form of access control, like an application layer

firewall. IPS have many advantages over IDS: (i)

they are designed to sit inline with traffic flows and

prevent attacks in real-time; (ii) most IPS solutions

have the ability to look at (decode) layer 7 protocols

like HTTP, FTP, and SMTP, which provides greater

awareness. There are several types of IPS: Host based

(HIPS), one where the intrusion-prevention applica-

tion is resident on a specific IP address, usually on a

computer; Network based IPS (NIPS) is one where

the IPS application/hardware and any actions taken to

prevent an intrusion on a specific network host is done

from a host with another IP address on the network;

Content based IPS (CIPS) inspects the content of net-

work packets for unique sequences, called signatures,

to detect and hopefully prevent known types of at-

tacks such as worm infections and hacks; Rate based

IPS (RIPS) are primarily intended to prevent denial of

service (DoS) and distributed DoS attacks and work

by monitoring and learning normal network behaviors

- through real-time traffic monitoring and compari-

son with stored statistics, RIPS can identify abnor-

mal rates for certain types of traffic (for example TCP,

UDP or ARP packets, connections per second, pack-

ets per connection, packets to specific ports); Protocol

analyzer IPS (PAIPS) is an IPS that uses a protocol

analyzer to fully decode protocols - once decoded, the

IPS analysis engine can evaluate different parts of the

protocol for anomalous behavior or exploits and the

IPS engine can drop the offending packets.

Snort (www.snort.org) is an open source network

intrusion prevention and detection system utilizing

a rule-driven language, which combines the benefits

of signature, protocol and anomaly based inspection

methods. Snort is the most widely deployed intru-

sion detection and prevention technology worldwide

and has become the de facto standard for the industry.

Snort is capable of performing real-time traffic anal-

ysis and packet logging on IP networks. It can per-

form protocol analysis, content searching/matching

and can be used to detect a variety of attacks and

probes, such as buffer overflows, stealth port scans,

Common Gateway Interfaces (CGI) attacks, Server

Message Blocks (SMB) probes, OS fingerprinting at-

tempts, amongst other features. The system can also

be used for intrusion prevention purposes, by drop-

ping attacks as they are taking place.

OSSEC (www.ossec.net) is an open source HIDS

that performs log analysis, integrity checking, Win-

dows registry monitoring, rootkit detection, real-time

alerting and active response. It runs on most operat-

ing systems, including Linux, OpenBSD, FreeBSD,

MacOS, Solaris and Windows.

SamHain (www.la-samhna.de/samhain) is a mul-

tiplatform, open source solution for centralized file in-

tegrity checking and/or host-based intrusion detection

on POSIX systems (Unix, Linux, Cygwin/Windows).

It has been designed to monitor multiple hosts with

potentially different operating systems from a central

location, although it can also be used as standalone

application on a single host.

Osiris (osiris.shmoo.com) is a HIDS that periodi-

cally monitors one or more hosts for change. It main-

tains detailed logs of changes to the file system, user

and group lists, resident kernel modules, and more.

Osiris can be configured to email these logs to the ad-

ministrator. Hosts are periodically scanned and, if de-

sired, the records can be maintained for forensic pur-

poses. Osiris keeps an administrator apprised of pos-

sible attacks and/or nasty little trojans. The purpose

here is to isolate changes that indicate a break-in or a

compromised system. Osiris makes use of OpenSSL

for encryption and authentication in all components.

Cfengine (www.cfengine.org) is one of the most

powerful system administration tools available to-

day. In a useful deviation from most scripting tools,

cfengine allows describing the desired state of a sys-

tem rather than what should be done to a system.

Cfengine itself takes care of testing compliance with that state and will do its best to correct any misconfigurations. It also includes powerful classing capabilities that allow grouping hosts into classes and creating different states for each class of host. Like all tools,

it has its drawbacks, but overall it should be consid-

ered the most important and most capable tool in the

sysadmin toolbox today.


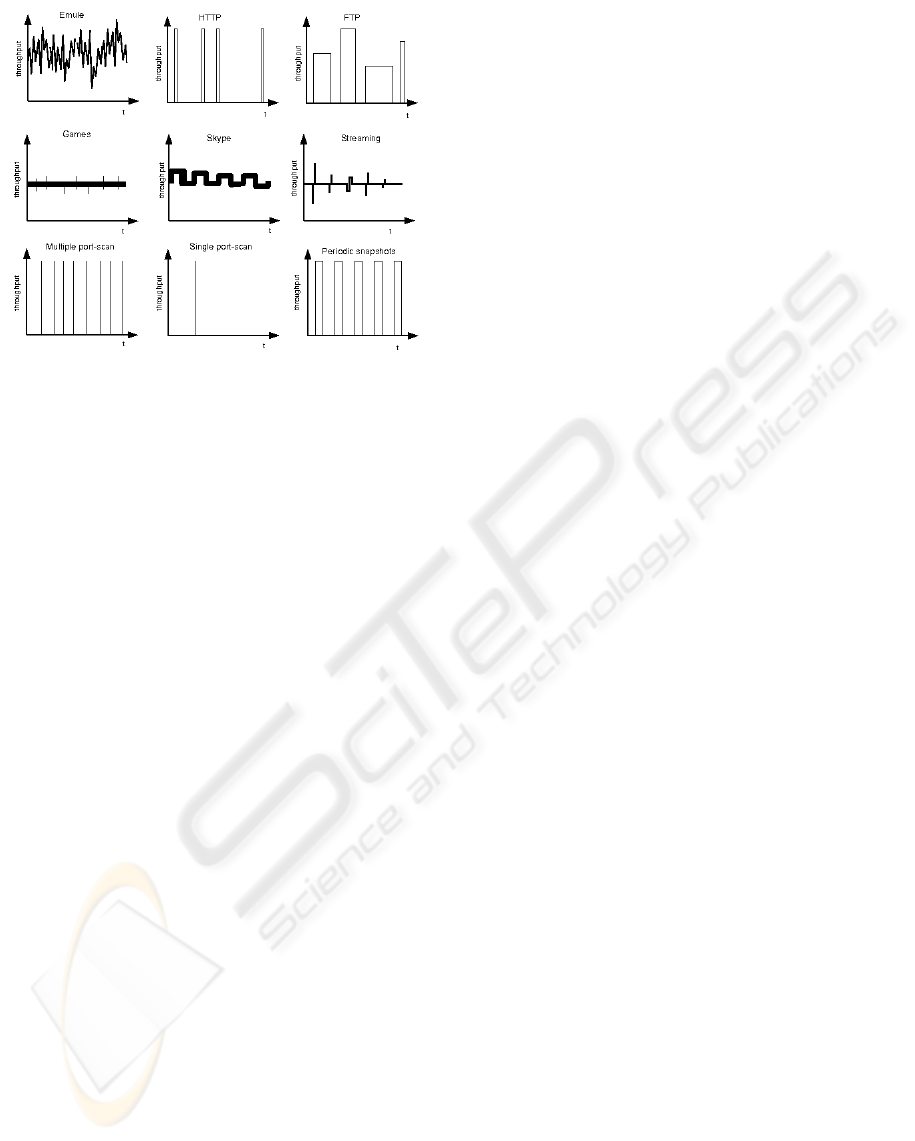

Figure 1: Traffic profiles for different Internet applications.

Nessus (www.nessus.org) is a comprehensive vulnerability scanning program. Its goal is to detect potential or confirmed weaknesses on the tested machines, for example: (i) vulnerabilities that allow a remote cracker to control the machine or access

sensitive data; (ii) misconfiguration (e.g. open mail

relay); (iii) un-applied security patches, even if the

fixed flaws are not exploitable in the tested config-

uration; (iv) default, common and blank/absent pass-

words; (v) denials of service against the TCP/IP stack.

3 DETECTION FRAMEWORK

It is known that Internet applications usually exhibit

characteristic traffic profiles, as illustrated in Figure

1:

• File sharing applications use high bandwidths and

are characterized by a high variability that can be

attributed to the enormous number of TCP ses-

sions that are opened/closed;

• HTTP traffic is characterized by short periods of

high bandwidth utilization with non-periodic du-

rations, intercalated by large periods of inactivity;

• FTP traffic also exhibits a high bandwidth utiliza-

tion, since established connections tend to occupy

all available resources. However, high activity pe-

riods are intercalated with inactivity periods;

• Games traffic is characterized by a medium level

continuous bandwidth utilization pattern, with pe-

riodic peaks;

• Skype traffic has an almost periodic pattern - since

it uses VoIP technology the bandwidth utiliza-

tion pattern should be periodic, but the adjustment

of the transmission parameters to the delay con-

straints imposed by the underlying network in-

duces some variability on the bandwidth utiliza-

tion profile;

• Streaming traffic is characterized by a

low/medium level and almost constant uti-

lization profile with some negative and positive

short non-periodic variations from the average

bandwidth. These variations can be explained

by bandwidth starvation and the consequent

bandwidth increment imposed by the streaming

protocol with the purpose of achieving an average

transfer rate.

The proposed detection framework will use this

knowledge about different application profiles to

build a ”memory” that will allow further identifica-

tions for new traffic traces that are presented to the

framework. Besides ”regular” or licit Internet ap-

plications, the detection framework must also have a

complete knowledge of the traffic profiles associated

to tasks or actions that are commonly performed by

controlled or compromised machines, so it can search

for and identify those profiles in a real operation sce-

nario. In order to obtain the typical traffic profiles as-

sociated to compromised machines, we had to mimic

the behavior of a compromised PC and the SubSeven

rootkit was chosen to generate the intended “illicit”

traffic traces.

A rootkit is a set of software tools intended to conceal running processes, files or system data from the operating system. Rootkits have their origin in benign applications, but recently have been used by malware to help intruders maintain access to systems while avoiding detection. Rootkits often modify parts of the operating system or install themselves as drivers or kernel modules and can take full control of a system. A rootkit's only purpose is to hide files, network connections, memory addresses, or registry entries from other programs used by system administrators to detect intended or unintended special privilege accesses to the computer resources. However, a rootkit may be bundled with other files which have other purposes. Rootkits are often used to abuse a compromised system and often include so-called "backdoors" to help the attacker subsequently access the system more easily. A backdoor may allow processes started by a non-privileged user to execute functions normally reserved for the superuser. All sorts of other tools useful for abuse can be hidden using rootkits: this includes tools for further attacks against computer systems with which the compromised system communicates, such as sniffers and keyloggers. Rootkits are also used to allow their programmers to see and access user names and log-in information for sites that require them.

The SubSeven backdoor is a Trojan Horse that

enables unauthorized people to access a computer

over the Internet without the knowledge of its owner.

When the server portion of the program runs on a

computer, the individual who is remotely accessing

the computer may be able to perform the following

tasks: (i) set it up as an FTP server; (ii) browse files

on that system; (iii) take screen shots; (iv) capture

real-time screen information; (v) open and close pro-

grams; (vi) edit information in currently running pro-

grams; (vii) show pop-up messages and dialog boxes;

(viii) hang up a dial-up connection; (ix) remotely

restart a computer; (x) open the CD-ROM; (xi) edit

the registry information.

In our simulation experiments, we have pro-

grammed three tasks on the SubSeven software: file

transfer, multiple port scan and periodic snapshots.

The file transfer bandwidth utilization profile is sim-

ilar to the FTP profile previously discussed and the

profiles associated to multiple port scan and periodic

snapshots are also illustrated in Figure 1: the first one

is characterized by several very short duration high

activity peaks and the second is characterized by pe-

riodic periods of high activity, each one demanding

constant bandwidth utilization.

The proposed framework for detection of illicit

traffic is based on neural networks, specifically a

feed-forward network model using the back propaga-

tion learning algorithm. Back propagation is a gen-

eral purpose learning algorithm for training multi-

layer feed-forward networks that is powerful but ex-

pensive in terms of computational requirements for

training. A back propagation NN model uses a feed-

forward topology, supervised learning, and the back

propagation learning algorithm. A back propagation

network with a single hidden layer of processing ele-

ments can model any continuous function to any de-

gree of accuracy (given enough processing elements

in the hidden layer) (Demuth and Beale, 1998).
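As a sketch of the model class referred to above, a feed-forward network with a single hidden layer of m processing elements and one output can be written as follows. The notation is introduced here only for illustration and is not taken from the original text: x is the input vector, w_j and b_j are the weights and bias of hidden element j, v_j and c are the output weights and bias, and f and g are the hidden and output activation functions.

```latex
\hat{y}(\mathbf{x}) = g\!\left( \sum_{j=1}^{m} v_j \, f\!\left( \mathbf{w}_j^{\top} \mathbf{x} + b_j \right) + c \right)
```

Back propagation adjusts w_j, b_j, v_j and c so that the output approaches the known target for each training input.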

For the dimension of our problem a feed-forward

back propagation network with three layers is appro-

priate. The correlation that exists between the tempo-

ral sequence of traffic values and the current distribu-

tion of traffic per application is taken into account by

presenting the current and the last h (where h repre-

sents a configurable parameter) traffic values as inputs

to the NN model. In this way, the input layer will have

h + 1 neurons, corresponding to the dimensionality of

the input vectors. We have tried different values of h,

concluding that the best performances were obtained

for NN models having between 4 and 16 neurons in

the input layer. The number of nodes of both the in-

put and hidden layers are empirically selected such

that the performance function (the mean square error,

in this case) is minimized. The output layer has 1 neu-

ron, since each output vector represents the existence

of licit or illicit traffic.
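The construction of the input vectors from the current and the last h traffic values can be sketched as follows. This is a minimal illustration; the function name `build_input_vectors` and the toy trace are assumptions, and the ordering of values inside each vector (most recent first) is one possible choice that the text does not specify.

```python
def build_input_vectors(trace, h):
    """Return one vector [x_t, x_(t-1), ..., x_(t-h)] for every t >= h."""
    if h < 0 or len(trace) <= h:
        raise ValueError("trace must contain more than h samples")
    vectors = []
    for t in range(h, len(trace)):
        window = trace[t - h:t + 1]      # chronological order
        vectors.append(window[::-1])     # most recent value first
    return vectors


# Toy trace of upload bytes per sampling interval (illustrative values).
trace = [10, 20, 30, 40, 50]
inputs = build_input_vectors(trace, h=2)
```

Each vector has h + 1 components, matching the number of input neurons, and a trace with N samples yields N - h input vectors.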

The application of a NN model to solve a partic-

ular problem involves two phases: a training phase

and a test phase. In the training phase, a training

set is presented as input to the NN which iteratively

adjusts network weights and biases in order to pro-

duce an output that matches, within a certain degree

of accuracy, a previously known result (named target

set). In the test phase, a new input is presented to

the network and a new result is obtained based on

the network parameters that were calculated during

the training phase. There are two learning paradigms

(supervised or non-supervised learning) and several

learning algorithms that can be applied, depending es-

sentially on the type of problem to be solved. For

our problem the network was trained incrementally,

that is, network weights and biases were updated each

time an input was presented to the network. This option was mostly determined by the size of the training set: loading the complete training set at once and presenting it as input to the NN was very demanding in terms of memory. The training method used was the Levenberg-Marquardt algorithm (Madsen et al., 2004) combined with automated Bayesian regularization, which basically constitutes a modification of the Levenberg-Marquardt training algorithm to produce networks that generalize well, thus reducing the difficulty of determining the optimum network architecture.
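The idea of incremental training, where weights and biases are updated each time an input is presented, can be sketched with a very small network. This sketch deliberately swaps in plain per-sample gradient descent on the squared error; it is not the Levenberg-Marquardt algorithm with Bayesian regularization used in this work. The class name `TinyFeedForward`, the activation functions, the learning rate and the toy data are all assumptions made for illustration.

```python
import math
import random


class TinyFeedForward:
    """One tanh hidden layer and a single sigmoid output neuron."""

    def __init__(self, n_inputs, n_hidden, seed=0):
        rng = random.Random(seed)
        self.W1 = [[rng.gauss(0.0, 0.5) for _ in range(n_inputs)]
                   for _ in range(n_hidden)]
        self.b1 = [0.0] * n_hidden
        self.w2 = [rng.gauss(0.0, 0.5) for _ in range(n_hidden)]
        self.b2 = 0.0

    def forward(self, x):
        hidden = [math.tanh(sum(w * xi for w, xi in zip(row, x)) + b)
                  for row, b in zip(self.W1, self.b1)]
        z = sum(w * hj for w, hj in zip(self.w2, hidden)) + self.b2
        return hidden, 1.0 / (1.0 + math.exp(-z))

    def train_step(self, x, target, lr=0.1):
        """Incremental update from a single (input, target) pair."""
        hidden, output = self.forward(x)
        # Error signal at the output for the loss 0.5 * (output - target)^2.
        delta_out = (output - target) * output * (1.0 - output)
        for j, hj in enumerate(hidden):
            # Back-propagate through the old output weight before updating it.
            delta_hidden = delta_out * self.w2[j] * (1.0 - hj * hj)
            self.w2[j] -= lr * delta_out * hj
            self.b1[j] -= lr * delta_hidden
            for i, xi in enumerate(x):
                self.W1[j][i] -= lr * delta_hidden * xi
        self.b2 -= lr * delta_out


# Toy, clearly separable data: low values labelled 0, high values labelled 1.
rng = random.Random(1)
samples = [([rng.uniform(0.0, 0.3) for _ in range(4)], 0.0) for _ in range(50)]
samples += [([rng.uniform(0.7, 1.0) for _ in range(4)], 1.0) for _ in range(50)]

net = TinyFeedForward(n_inputs=4, n_hidden=6)
for _ in range(200):
    rng.shuffle(samples)
    for x, target in samples:
        net.train_step(x, target)   # weights change after every sample

correct = sum((net.forward(x)[1] > 0.5) == (target > 0.5)
              for x, target in samples)
accuracy = correct / len(samples)
```

Because each update needs only one sample, the full training set never has to be held in memory as a single batch, which is the practical motivation given above for incremental training.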

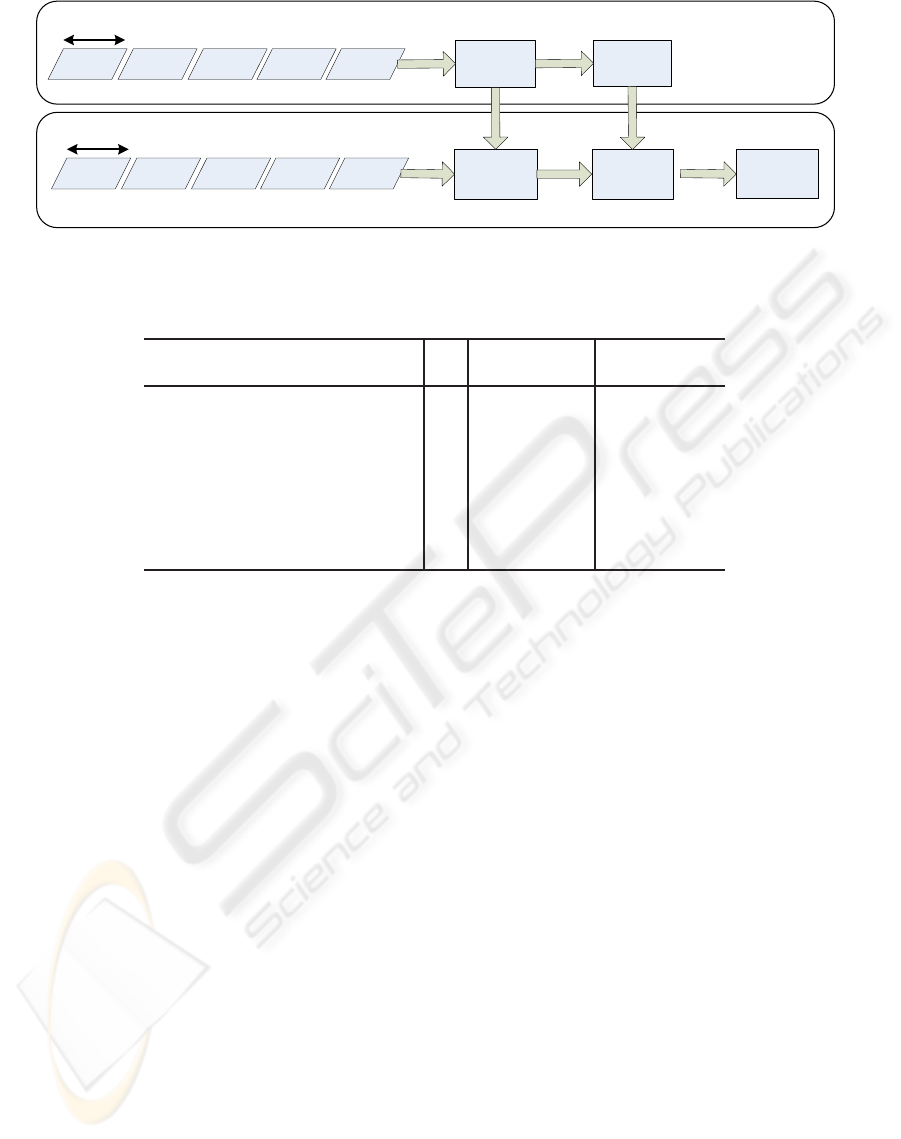

The general approach used for the identification

of illicit traffic is depicted in Figure 2: in the train-

ing phase, the first half of the different application

traces, that are designated as application profiles, are

presented to the neural network model, together with

a previous classification of the different profiles. This

phase leads to a trained neural network model whose

parameters were conveniently adjusted according to

the identification requirements. In the test phase, the

second half of the application profiles are inputted to

the trained neural network model, producing the cor-

responding identification results. By comparing these

results with the pre-identification values, the model

can be conveniently validated.
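The split into training and test halves and the validation against the pre-identification values can be sketched as below. The helper names and the toy label sequences are hypothetical; in the actual evaluation the predicted labels would come from the trained neural network.

```python
def split_halves(samples):
    """Split a trace into its first half (training) and second half (test)."""
    middle = len(samples) // 2
    return samples[:middle], samples[middle:]


def correct_identification_percentage(predicted, expected):
    """Percentage of samples whose label matches the pre-classification."""
    if len(predicted) != len(expected) or not expected:
        raise ValueError("label sequences must be non-empty and equally long")
    hits = sum(p == e for p, e in zip(predicted, expected))
    return 100.0 * hits / len(expected)


# Toy trace and toy labels (1 = illicit, 0 = licit), for illustration only.
trace = list(range(10))
train_part, test_part = split_halves(trace)
score = correct_identification_percentage([0, 1, 1, 0], [0, 1, 0, 0])
```

Here three of the four toy labels agree, so the sketch reports 75% correct identification.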

4 RESULTS

As previously explained, the neural network model is trained with the first half of each traffic trace and the second half is used to test the accuracy of the trained model. The following applications were used to evaluate the efficiency of the proposed methodology: HTTP, Games, Peer-to-Peer File Sharing, Video Streaming and Skype. Besides these licit applications, illicit traffic was synthetically generated by programming SubSeven to perform file transfer, multiple port scan and periodic snapshots (with a 2-second period), as described in the previous section. By combining these applications, the following traffic profiles were created: (i) HTTP Browsing + Port Scan; (ii) HTTP Browsing + Periodic Snapshots; (iii) HTTP Browsing + File Transfer; (iv) Streaming + Port Scan; (v) Streaming + Periodic Snapshots; (vi) Streaming + File Transfer; (vii) HTTP Browsing + P2P File Sharing + Skype + (Snapshots or File Transfer). Only the upload traffic is considered; each trace has a 1-hour duration and represents the number of upload bytes per sampling interval (the sampling interval is equal to 1 second).

Figure 2: General approach for the detection of illicit applications. In the training phase, the first half of the data of Profiles 1 to n is presented to the neural network together with the pre-classification; in the test phase, the second half of the data is presented to the neural network and the results are validated.

Table 1: Correct identification percentages for the different traffic profiles - upload traffic.

| Traffic type | h | # Neurons in hidden layer | Correct identification |
|---|---|---|---|
| HTTP + Port scan | 10 | 16 | 98.26% |
| HTTP + Snapshots | 16 | 20 | 100.00% |
| HTTP + File Transfer | 8 | 16 | 94.49% |
| Streaming + Port scan | 8 | 16 | 93.91% |
| Streaming + Snapshots | 16 | 14 | 97.98% |
| Streaming + File Transfer | 10 | 16 | 98.41% |
| HTTP + File Sharing + Skype + (Snapshots or File Transfer) | 16 | 12 | 88.80% |
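The traces used here are series of upload bytes per 1-second sampling interval. A minimal sketch of how such a series could be derived from per-packet records is given below; the record format (timestamp in seconds, size in bytes), the function name `bytes_per_interval` and the sample packets are assumptions for illustration and do not describe the measurement tools actually used.

```python
def bytes_per_interval(packets, duration, interval=1.0):
    """Sum packet sizes into consecutive bins of `interval` seconds."""
    n_bins = int(duration // interval)
    bins = [0] * n_bins
    for timestamp, size in packets:
        index = int(timestamp // interval)
        if 0 <= index < n_bins:          # ignore packets outside the trace
            bins[index] += size
    return bins


# Illustrative upload packets: (timestamp in seconds, size in bytes).
packets = [(0.1, 500), (0.7, 1500), (2.2, 60), (2.9, 40)]
series = bytes_per_interval(packets, duration=4)
```

With a 1-hour trace and a 1-second interval, the same procedure would produce 3600 values per trace.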

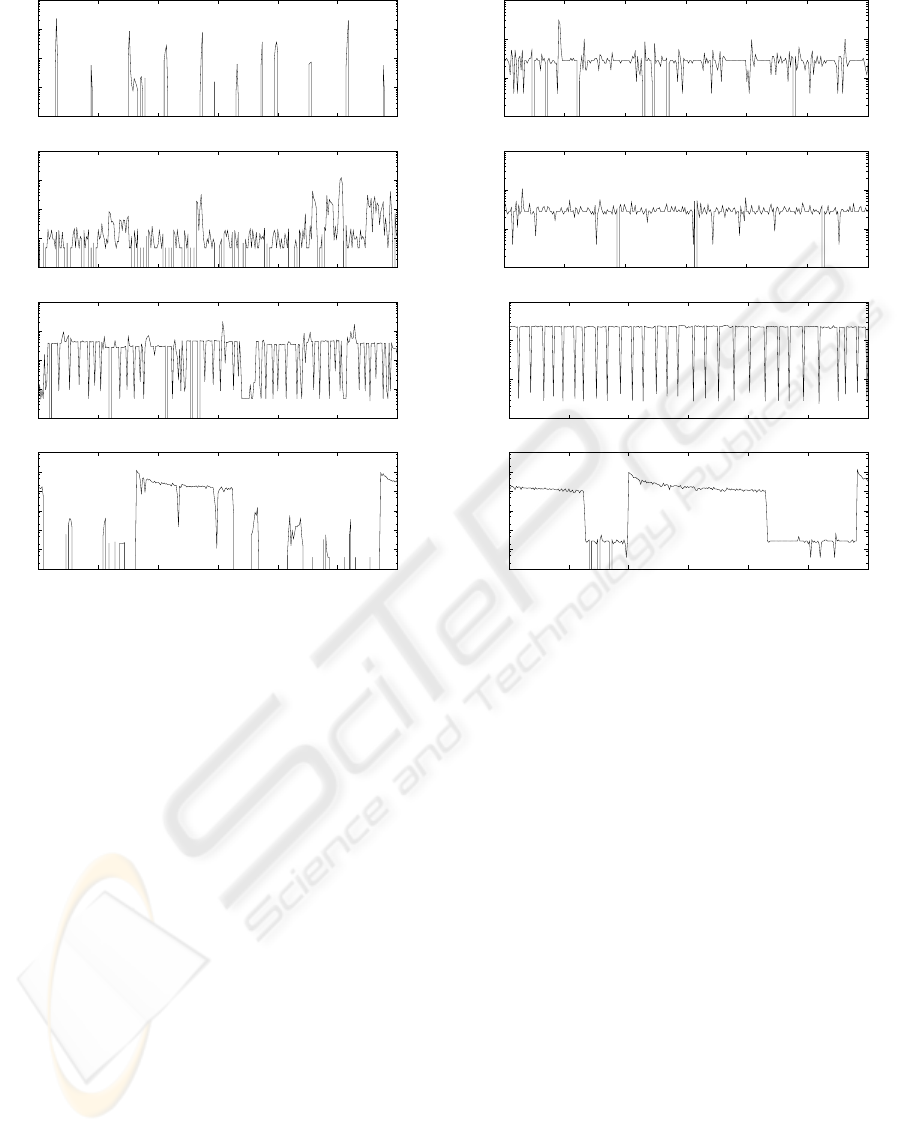

Figure 3 depicts 5-minute samples of the HTTP browsing traces. Figure 3(a) shows a typical licit HTTP browsing profile with short non-periodic peaks generated by HTTP requests. The joint profile of HTTP browsing and non-aggressive port scan traffic is depicted in Figure 3(b). It is possible to observe small periodic peaks characteristic of the port scan embedded within the normal licit traffic. Figure 3(c) shows the traffic profile of periodic snapshots transferred to a remote machine, characterized by periodic bursts of traffic in the upstream direction. Figure 3(d) depicts the transfer of a large file in the upstream direction. This type of traffic may not be illicit because it can be the result of a normal HTTP file upload. Nevertheless, an HTTP browsing trace should not include file uploads and we chose to classify these events as an anomaly that should be detected.

Figure 4 depicts 5-minute samples of the streaming traces; the same illicit traffic profiles described above for the HTTP browsing traces can now be observed embedded within a streaming trace. The streaming trace is characterized by a constant (on average) bandwidth occupation. The profile has some negative and positive short non-periodic variations from the average bandwidth. These variations can be explained by bandwidth starvation and the consequent bandwidth increment generated by the streaming protocol to counterbalance it and achieve an average constant rate.

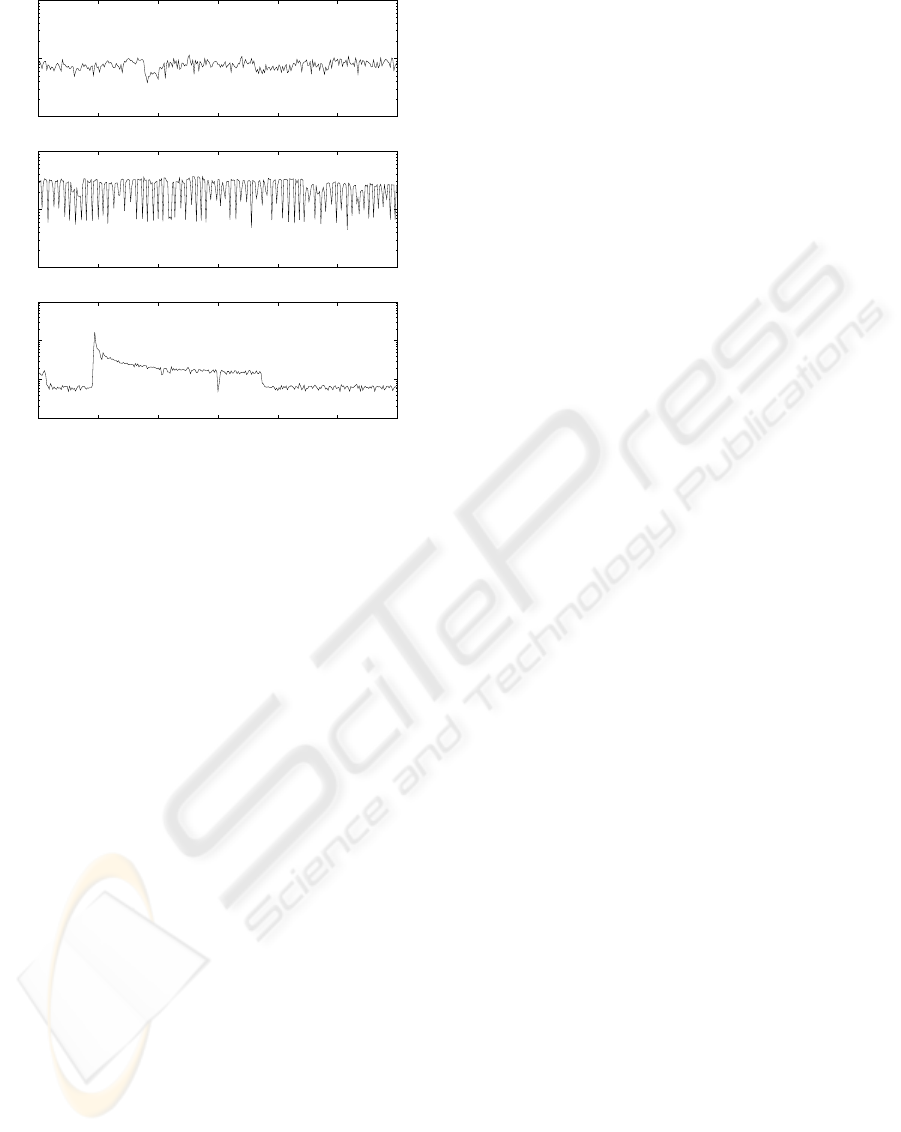

Figure 5 depicts 5-minute samples of the joint HTTP Browsing + P2P File Sharing + Skype traces. The licit traffic is depicted in Figure 5(a), and Figures 5(b) and 5(c) show the licit traffic in conjunction with snapshot and file transfer traffic, respectively. The licit traffic is characterized by a high bandwidth consumption with a relatively high variation around an average bandwidth value. We chose not to include a non-aggressive port scan because such traffic is imperceptible within such high licit bandwidth consumption. The tested trace with illicit components was composed of an alternating usage of snapshot and file transfer (10 minutes each time) embedded in the licit traffic.

Figure 3: HTTP Browsing (from top to bottom): (a) Licit, (b) Licit + Port Scan, (c) Licit + Snapshot and (d) Licit + File transfer. Each panel plots bytes (logarithmic scale) against time in seconds (0 to 300).

For each class of licit traffic, one neural network

was trained to detect the presence of illicit traffic pro-

file signatures and identify the specific class of illicit

traffic. Table 1 presents the best correct identification

percentages for the different traffic profiles, as well

as the history length that was considered at the input

of the neural network (that is, the amount of tempo-

ral correlation that was taken into account in the input

traffic profiles) and the number of neurons in the hid-

den layer of each neural network model. As can be

seen from these results, the identification percentages

are quite high, illustrating the accuracy of the pro-

posed methodology for all considered traffic scenar-

ios. The worst result was obtained for the last traffic

profile, since it is the most heterogeneous profile that

includes a quite complicated mixture of applications.

Nevertheless, the trained model was able to correctly

identify more than 88% of the traffic values.

Figure 4: Streaming (from top to bottom): (a) Licit, (b) Licit + Port Scan, (c) Licit + Snapshot and (d) Licit + File transfer. Each panel plots bytes (logarithmic scale) against time in seconds (0 to 300).

5 CONCLUSIONS

This paper presented a new approach for the identification of illicit traffic that tries to overcome some of the limitations of existing approaches, while being computationally efficient and easy to deploy. The approach is based on neural networks and is able to detect illicit traffic based on the historical traffic profile presented by each licit and illicit network application. The proposed methodology was tested using traffic generated in a controlled environment, where malicious traffic was generated using the SubSeven backdoor. The results obtained show that the proposed methodology is able to achieve good identification results, with correct identification percentages between 88.80% and 100% for the tested traffic profiles, being at the same time computationally efficient and easy to implement in real network scenarios.

ACKNOWLEDGEMENTS

This work was done under the scope of the Euro-NGI and Euro-FGI Networks of Excellence - Design and Engineering of the Next Generation Internet, and Euro-NF Network of Excellence - Anticipating the Network of the Future (from Theory to Design), funded by the European Union.

Figure 5: HTTP Browsing + P2P File Sharing + Skype (from top to bottom): (a) Licit, (b) Licit + Snapshots and (c) Licit + File Transfer. Each panel plots bytes (logarithmic scale) against time in seconds (0 to 300).

REFERENCES

Chen, P.-T. and Laih, C.-S. (2008). IDSIC: an intrusion detection system with identification capability. International Journal of Information Security, 7(3):185–197.

Debar, H., Becker, M., and Siboni, D. (1992). A neural network component for an intrusion detection system. In Proceedings of the 1992 IEEE Computer Society Symposium on Research in Security and Privacy, 4-6 May 1992, pages 240–250.

Demuth, H. and Beale, M. (1998). Neural Network Toolbox User's Guide. The MathWorks, Inc.

Denning, D. (1987). An Intrusion-Detection Model. IEEE Transactions on Software Engineering, 13(2):222–232.

Ilgun, K., Kemmerer, R., and Porras, P. (1995). State Transition Analysis - A Rule-Based Intrusion Detection Approach. IEEE Transactions on Software Engineering, 21(3):181–199.

Jiang, W., Song, H., and Dai, Y. (2005). Real-time intrusion detection for high-speed networks. Computers & Security, 24(4):287–294.

Madsen, K., Nielsen, H., and Tingleff, O. (2004). Methods for Non-Linear Least Squares Problems. Technical University of Denmark, 2nd edition.

Mukherjee, B., Heberlein, L., and Levitt, K. (1994). Network Intrusion Detection. IEEE Network, 8(3):26–41.

Ryan, J., Lin, M.-J., and Miikkulainen, R. (1997). Intrusion detection with neural networks. In NIPS ’97: Proceedings of the 1997 conference on Advances in neural information processing systems 10, pages 943–949, Cambridge, MA, USA. MIT Press.

Wang, K. and Stolfo, S. (2004). Anomalous Payload-based Network Intrusion Detection. Recent Advances in Intrusion Detection, Proceedings, 3224:203–222.
