A NOVEL SIMILARITY METRIC FOR RETINAL IMAGES BASED AUTHENTICATION

M. Ortega, M. G. Penedo, C. Mariño

Department of Computer Science, University of A Coruña, A Coruña, Spain

M. J. Carreira

Department of Computer Science and Electronics, University of Santiago de Compostela, Santiago de Compostela, Spain

Keywords:

Authentication System, Similarity Measure, Retinal Images, Biometric Pattern, Feature point matching.

Abstract:

In biometrics, the identity of an individual is verified using some physiological or behavioural feature. In a typical authentication process involving a biometric trait, the biometric pattern of the user is extracted (a set of feature landmarks, a characteristic vector, etc.) and compared with a stored reference pattern. A similarity score is calculated between these patterns to determine whether they belong to the same individual. This work presents an analysis of similarity metrics for an authentication system in which retinal vessel feature points are used as the biometric pattern. The VARIA database of retinal images is used. A new metric is defined that weights the previously defined metrics with the matched points information. The results show a large widening of the confidence gap between the matching scores of patterns from the same individual and the matching scores of patterns from different individuals.

1 INTRODUCTION

Traditional authentication systems based on knowledge (a password, a PIN) or possession (a card, a key) are not reliable enough for many environments, because they generally cannot differentiate between a true authorized user and a user who has fraudulently acquired the privileges of the authorized user. A solution to these problems has been found in biometric-based authentication technologies. A biometric system is a pattern recognition system that establishes the authenticity of a specific physiological or behavioural characteristic.

Authentication technologies can be found in the literature using fingerprints (Jain et al., 1997; Tico and Kuosmanen, 2003), perhaps the oldest of all the biometric techniques, face recognition (Zhao et al., 2000), and speech (J. Bigüin et al., 1997), among others.

These biometric systems typically base the comparison between individuals on a matching of their extracted patterns. This matching process has a major impact on the final effectiveness of the system. A typical approach is point pattern matching, where feature points or landmarks are extracted for each individual from a biometric trait (fingerprints, retinal vessel tree, etc.) and the two sets of points are then compared. Once both sets are matched, it is important to establish a good similarity (or, in some cases, dissimilarity) metric value. This value is the ultimate criterion for distinguishing between a client (authorized access) and an attack (unauthorized access).

The retinal vessel tree pattern has been proven to be a valid biometric trait for personal authentication, as it is unique, time invariant and very hard to forge, as shown in (Mariño et al., 2006), where a novel authentication system based on this trait was introduced. The whole arteriovenous tree structure was used as the feature pattern for individuals. One drawback of the proposed system was the need to store and handle the whole vessel tree image as a pattern. Based on the idea of fingerprint minutiae (Jain et al., 1997), a more suitable and robust pattern was first introduced in (Ortega et al., 2006), where a set of landmarks (bifurcations and crossovers between retinal vessels) was extracted and used as feature points. In this scenario, the matching problem is a point pattern matching problem and the similarity metric is defined in terms of matched points.

In this work, similarity metrics are designed and analyzed for the retinal feature point based biometric system. These metrics emphasise the importance of the right classification of attacks and client accesses. The paper is organized as follows: Section 2 briefly describes the authentication system, especially the feature point extraction and matching stages. Section 3 deals with the analysis of several similarity metrics applied to this system. Section 4 shows the effectiveness results obtained by the metrics on a test image set. Finally, Section 5 provides some discussion and conclusions.

Ortega M., Penedo M., Mariño C. and Carreira M. (2009). A NOVEL SIMILARITY METRIC FOR RETINAL IMAGES BASED AUTHENTICATION. In Proceedings of the International Conference on Bio-inspired Systems and Signal Processing, pages 249-253. DOI: 10.5220/0001510602490253. Copyright © SciTePress

2 AUTHENTICATION SYSTEM PROCESS

As previously mentioned, the retinal vessel tree is a good biometric trait for authentication. To obtain a good representation of the tree, the creases of the image are extracted. As vessels can be thought of as ridges when the retinal image is seen as a landscape, the creases image consists of the vessel skeleton (Figure 1(a) and 1(b)).

Using the whole creases image as the biometric pattern poses a major problem for the codification and storage of the pattern, as the whole image must be stored. To solve this, similarly to fingerprint minutiae (ridges, endings, bifurcations in the fingerprints), a set of landmarks is extracted from the creases image as the biometric pattern. The most identifiable and invariant landmarks in the retinal vessel tree are crossovers and bifurcation points and, therefore, they are used as the biometric pattern in this work.

To detect feature points, creases are tracked to label all of them as segments in the vessel tree, marking their endpoints. Next, bifurcations and endpoints are extracted by means of relationships between segments. These relationships are found by detecting segments close to each other and calculating their directions. If a segment endpoint is close to another segment and forms an angle smaller than $\pi/2$ with it, a bifurcation or crossover is detected. Figure 1(c) shows the result obtained after this stage.

Figure 1: (a) original image, (b) creases image, (c) creases image with the feature points extracted from it.

Once the biometric pattern for an individual, $\beta$, is obtained as a set of points, it has to be compared with the stored reference pattern, $\alpha$, to validate the identity of the individual. Due to eye movement during the image acquisition stage, $\beta$ must be aligned with $\alpha$ before the two can be matched. The two patterns may also have different cardinality. Considering the reduced range of eye movements during acquisition, a Similarity Transform schema (ST) is used to model pattern transformations (N. Ryan and de Chazal, 2004). A search in the transformation space is performed to find the most suitable alignment parameters. Once both patterns are aligned, a point $p$ from $\alpha$ and a point $p'$ from $\beta$ match if $\mathrm{distance}(p, p') < D_{max}$, where $D_{max}$ is a threshold introduced to account for the discontinuities in the creases extraction process that lead to mislocation of feature points. In this way, the number of matched points between patterns is calculated. Next, similarity metrics are established to obtain a final criterion of comparison between patterns.
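As a rough illustration of the matching rule above, the following Python sketch counts matched points between two patterns that are assumed to be already aligned. The function name `count_matched_points`, the greedy nearest-neighbour pairing, the one-to-one constraint and the Euclidean distance are assumptions made for this example; the text only specifies the condition that the distance between two points is below $D_{max}$.

```python
import math


def count_matched_points(alpha, beta, d_max):
    """Count matches between two aligned point sets (lists of (x, y) tuples).

    Assumption: each point of beta can be matched at most once, and each
    point of alpha is paired greedily with its nearest unused neighbour.
    """
    used = set()      # indices of beta points already matched
    matched = 0
    for px, py in alpha:
        best_j, best_d = None, d_max
        for j, (qx, qy) in enumerate(beta):
            if j in used:
                continue
            d = math.hypot(px - qx, py - qy)
            # Strict inequality mirrors distance(p, p') < D_max.
            if d < best_d:
                best_j, best_d = j, d
        if best_j is not None:
            used.add(best_j)
            matched += 1
    return matched


# Hypothetical coordinates, for illustration only.
reference = [(0, 0), (10, 10), (50, 50)]
candidate = [(1, 0), (10, 12), (100, 100), (0, 1)]
matches = count_matched_points(reference, candidate, d_max=3)
```

A greedy pairing is the simplest choice that keeps the count from exceeding the size of either pattern; an optimal assignment could be swapped in without changing the metrics built on top of the count.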

3 SIMILARITY METRICS ANALYSIS

The main goal is to define similarity measures on the aligned patterns to correctly classify authentications into two classes: attacks (unauthorized accesses), when the two matched patterns are from different individuals, and clients (authorized accesses), when both patterns belong to the same person.

For the metric analysis, a set of 150 images (100 images, 2 per individual, plus 50 additional, different images) from the VARIA database (VARIA) was selected. These images have a high variability in contrast and illumination, allowing the system to be tested under quite hard conditions. In order to build the training set of matchings, all images are matched against all the images (a total of 150 × 150 matchings). The matchings are classified as attacks or client accesses depending on whether the images belong to the same individual or not. The separation of both classes achieved by a metric determines its classification capabilities.

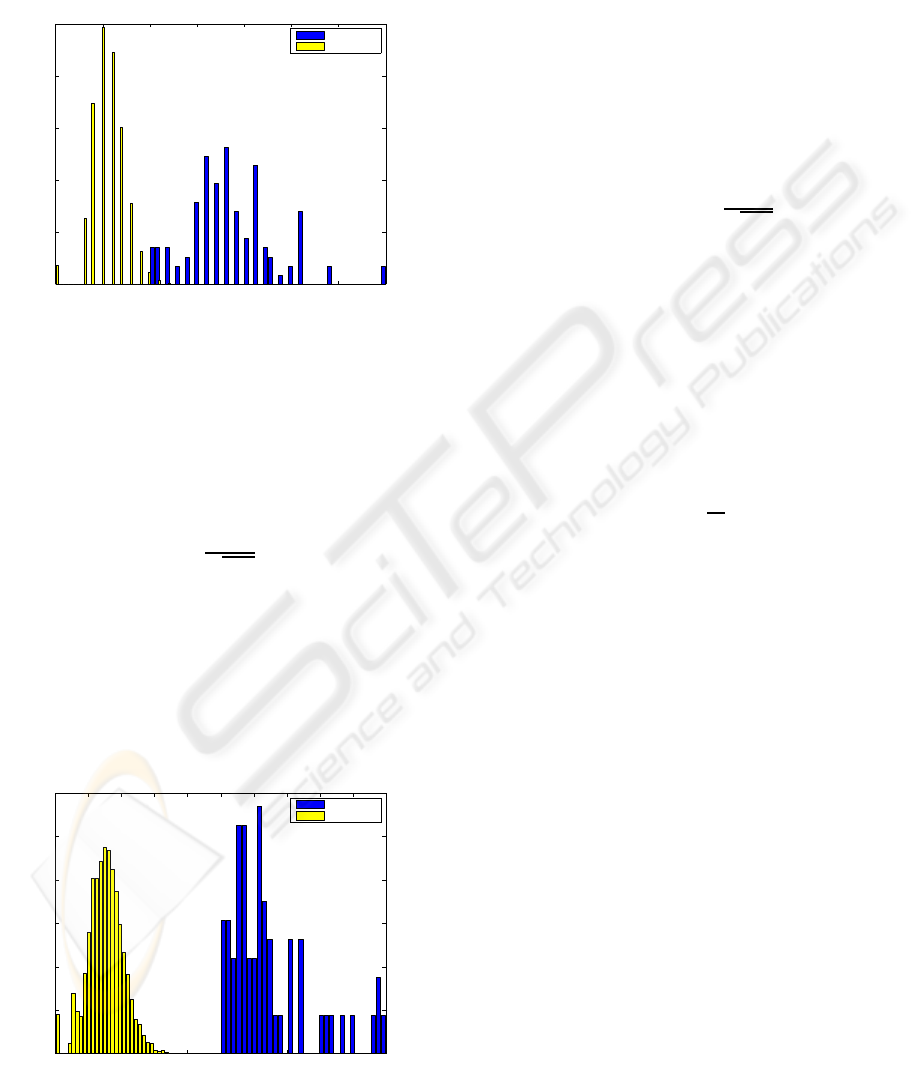

The main information to measure similarity between two patterns is the number of feature points successfully matched between them. Figure 2 shows the histogram of matched points for both classes of authentications in the training set. As can be observed, the matched points information is by itself quite significant but insufficient to completely separate both populations, as the two distributions overlap in the interval [10, 13].

BIOSIGNALS 2009 - International Conference on Bio-inspired Systems and Signal Processing

Figure 2: Matched points histogram for the attack (unauthorized) and client (authorized) authentication cases (x axis: number of matched points; y axis: normalized frequency of matched points). In the interval [10, 13] both distributions overlap.

To combine the information of the pattern sizes and normalize the metric, a normalization function is used. The similarity measure ($S$) between two patterns is defined by

$$S = \frac{C}{\sqrt{MN}} \qquad (1)$$

where $C$ is the number of matched points between patterns, and $M$ and $N$ are the sizes of the matched patterns.
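Equation 1 can be sketched in a few lines of Python. The function name `similarity` and the sample values are illustrative assumptions and are not taken from the experiments.

```python
import math


def similarity(c, m, n):
    """Equation 1: matched points C normalized by the geometric mean of the
    two pattern sizes M and N."""
    if m <= 0 or n <= 0:
        raise ValueError("pattern sizes must be positive")
    return c / math.sqrt(m * n)


# Same number of matched points, different pattern sizes (hypothetical values).
print(similarity(12, 20, 20))  # 0.6
print(similarity(12, 20, 45))  # 0.4
```

The second call shows the effect discussed next in the text: when one pattern is much larger than the other, the score drops even though the number of matched points is unchanged.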

Figure 3 shows the distributions for our training set using the metric proposed in Equation 1. This metric combines the size information of both patterns, allowing the system to reduce the similarity value in attacks involving small-sized patterns while keeping the histogram of client cases in a similar range. A confidence band between both classes can now be established in [0.38, 0.5].

Figure 3: Similarity value distributions for authorized and unauthorized accesses using the metric defined in Equation 1 (x axis: similarity value; y axis: normalized frequency of scores).

However, normalizing the metric has the side effect of reducing the similarity between patterns of the same individual when one of them has a much greater number of points than the other. To correct this, the influence of the number of matched points and of the pattern sizes has to be balanced. A correction parameter ($\gamma$) is introduced in the similarity measure for this purpose. The new metric is defined as:

$$S_{\gamma} = S \cdot C^{\gamma - 1} = \frac{C^{\gamma}}{\sqrt{MN}} \qquad (2)$$

where $S$, $C$, $M$ and $N$ are the same parameters as in Equation 1. The $\gamma$ correction parameter improves the similarity values when a reasonable number of matched points is obtained, especially in cases of patterns with many points.
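A minimal sketch of Equation 2 follows, assuming a hypothetical function name `gamma_similarity` and made-up pattern sizes. It only illustrates that the correction multiplies the Equation 1 score by $C^{\gamma - 1}$, so matches with many points are boosted when $\gamma > 1$.

```python
import math


def gamma_similarity(c, m, n, gamma):
    """Equation 2: S_gamma = C**gamma / sqrt(M * N)."""
    if m <= 0 or n <= 0:
        raise ValueError("pattern sizes must be positive")
    return c ** gamma / math.sqrt(m * n)


# Hypothetical client match between patterns of very different sizes.
plain = gamma_similarity(18, 40, 20, gamma=1.0)       # identical to Equation 1
corrected = gamma_similarity(18, 40, 20, gamma=1.12)  # boosted by 18 ** 0.12
```

With $\gamma = 1$ the function reduces to Equation 1, which makes it easy to compare both metrics on the same data.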

In order to normalize the metric again to the [0, 1] interval, $S_{\gamma}$ is divided by a reference value, $R$, representing a similarity value in the $S_{\gamma}$ space which is certain to be an authorized access case. The new normalized metric is defined as:

$$S_{\gamma R} = \min\left(\frac{S_{\gamma}}{R},\ 1\right) \qquad (3)$$

$R$ can be defined in the same space as $S_{\gamma}$ in Equation 2 as $R = S_R \cdot C_R^{\gamma - 1}$, where $S_R$ and $C_R$ are values in the similarity and matched points spaces, respectively. Those values must have a very high probability of belonging to a match between patterns from the same individual. Moreover, these parameters should not be very high, so that a good number of positive cases can get close to a similarity value of 1. Ideally, mean values of the similarity and matched points distributions should be used.

In Figures 2 and 3, the distributions of the unauthorized and authorized cases can be observed for the matched points and the normalized metric, respectively. Mean values for the client accesses are, respectively, 18 and 0.65. The distributions for the unauthorized accesses have mean and standard deviation values of $\mu_m = 5.58$, $\sigma_m = 1.74$ for matched points and $\mu_s = 0.1508$, $\sigma_s = 0.0537$ for similarity values.

Given that $18 > \mu_m + 7\sigma_m$ and $0.65 > \mu_s + 9\sigma_s$, $S_R = 0.65$ and $C_R = 18$ are values safe enough to be used, as they are far enough from the means of their respective attack distributions.
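The normalization of Equation 3 and the safety margins quoted above can be checked with a short Python sketch. The constants are the values reported in the text; the function names `reference_value` and `normalized_gamma_similarity` and the two sample matchings are assumptions made for illustration.

```python
import math

# Reference values and attack statistics reported in the text.
S_R, C_R = 0.65, 18
MU_M, SIGMA_M = 5.58, 1.74      # matched points, unauthorized accesses
MU_S, SIGMA_S = 0.1508, 0.0537  # similarity values, unauthorized accesses


def reference_value(gamma, s_r=S_R, c_r=C_R):
    """R = S_R * C_R**(gamma - 1), expressed in the S_gamma space."""
    return s_r * c_r ** (gamma - 1)


def normalized_gamma_similarity(c, m, n, gamma, s_r=S_R, c_r=C_R):
    """Equation 3: min(S_gamma / R, 1)."""
    s_gamma = c ** gamma / math.sqrt(m * n)
    return min(s_gamma / reference_value(gamma, s_r, c_r), 1.0)


# Margins used to justify the reference values.
margin_points = MU_M + 7 * SIGMA_M      # about 17.76, below C_R = 18
margin_similarity = MU_S + 9 * SIGMA_S  # about 0.6341, below S_R = 0.65

# Hypothetical matchings: a strong client match saturates at 1,
# a weak match stays low.
strong = normalized_gamma_similarity(30, 30, 30, gamma=1.12)
weak = normalized_gamma_similarity(5, 25, 25, gamma=1.12)
```

Clipping at 1 means that every match at least as strong as the reference case receives the maximum score, which is why $S_R$ and $C_R$ should not be chosen too high.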

Finally, to choose a good $\gamma$ parameter, the confidence band improvement has been evaluated for different values of $\gamma$ (Figure 4). The maximum improvement is achieved at $\gamma = 1.12$, with a confidence band of 0.2304, about twice the width of the original band obtained with Equation 1. Figure 5 shows the distributions obtained for this metric with $\gamma = 1.12$.

Figure 4: Confidence band size vs gamma ($\gamma$) parameter value (x axis: gamma value; y axis: confidence band). The maximum interval, 0.2304, is obtained at $\gamma = 1.12$.

Figure 5: Similarity value distributions for authorized and unauthorized accesses using the normalized metric with $\gamma = 1.12$ (x axis: similarity value; y axis: %).
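The selection of $\gamma$ can be pictured as a one-dimensional sweep. The sketch below assumes that the confidence band is the gap between the lowest client score and the highest attack score (negative when the classes overlap), which is consistent with Figure 4 but is not stated explicitly in the text. The function names and the toy matchings, given as $(C, M, N)$ triples, are hypothetical.

```python
import math


def normalized_score(c, m, n, gamma, s_r=0.65, c_r=18):
    """Equation 3 with the reference values S_R and C_R from the text."""
    r = s_r * c_r ** (gamma - 1)
    return min(c ** gamma / math.sqrt(m * n) / r, 1.0)


def confidence_band(clients, attacks, gamma):
    """Assumed definition: lowest client score minus highest attack score."""
    lowest_client = min(normalized_score(c, m, n, gamma) for c, m, n in clients)
    highest_attack = max(normalized_score(c, m, n, gamma) for c, m, n in attacks)
    return lowest_client - highest_attack


def best_gamma(clients, attacks, gammas):
    """Return the candidate gamma with the widest confidence band."""
    return max(gammas, key=lambda g: confidence_band(clients, attacks, g))


# Toy (C, M, N) triples, not taken from the VARIA experiments.
clients = [(20, 30, 28), (15, 40, 22), (25, 35, 33)]
attacks = [(6, 30, 25), (8, 20, 18), (4, 35, 30)]
gammas = [i / 100 for i in range(0, 301)]  # same range as Figure 4: 0 to 3

chosen = best_gamma(clients, attacks, gammas)
band = confidence_band(clients, attacks, chosen)
```

On real data the sweep would be run over the training matchings only, so that the chosen $\gamma$ can then be validated on a separate test set.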

4 RESULTS

A test set of 100 images (2 per individual), different from the training set, has been built in order to test the performance of the metrics once their parameters have been fixed with the training set. Metric performance is evaluated by means of the FAR vs FRR graph, as in the case of ROC curves.

This graph displays two curves representing the evolution of the False Acceptance Rate (FAR) and False Rejection Rate (FRR) versus the value of the similarity decision threshold. In this case, a false acceptance is the acceptance of an attack and a false rejection is the rejection of a client. A typical performance parameter is the Equal Error Rate (EER), which indicates the error rate at which FAR = FRR. Figure 6 shows the FAR and FRR curves for the metrics defined in the previous section (the normalized metric defined in Equation 1 and the gamma-corrected normalized metric defined in Equation 3). The EER is 0 for both the normalized and the gamma-corrected metrics, as was the case in the training set, and, again, the gamma-corrected metric shows the widest confidence band in the test set (0.195 vs 0.109).

Figure 6: FAR vs FRR curves for the normalized similarity metrics $S$ (Equation 1) and $S_{\gamma R}$ (Equation 3) (x axis: similarity threshold; y axis: error rate).

Figure 7: Confidence band evolution depending on the minimum points constraint (x axis: minimum feature points threshold; y axis: confidence band).
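The FAR and FRR definitions above translate directly into code. The following sketch assumes that an access is accepted when its score is greater than or equal to the threshold; the function names and the score lists are hypothetical.

```python
def far_frr(client_scores, attack_scores, threshold):
    """Return (FAR, FRR) for a decision threshold.

    Assumption: an access is accepted when score >= threshold.
    """
    false_accepts = sum(s >= threshold for s in attack_scores)
    false_rejects = sum(s < threshold for s in client_scores)
    return (false_accepts / len(attack_scores),
            false_rejects / len(client_scores))


def zero_error_band(client_scores, attack_scores):
    """Width of the threshold interval where FAR = FRR = 0.

    A negative value means the two classes overlap.
    """
    return min(client_scores) - max(attack_scores)


# Hypothetical scores, for illustration only.
client_scores = [0.72, 0.81, 0.95, 1.0]
attack_scores = [0.12, 0.20, 0.31, 0.18]

far_mid, frr_mid = far_frr(client_scores, attack_scores, 0.5)    # both 0.0
far_low, frr_low = far_frr(client_scores, attack_scores, 0.15)   # FAR 0.75
far_high, frr_high = far_frr(client_scores, attack_scores, 0.9)  # FRR 0.5
band = zero_error_band(client_scores, attack_scores)
```

When the band is positive, any threshold inside it yields an EER of 0, so the band width is a more informative figure than the EER for comparing metrics that already separate both classes.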

Finally, to evaluate the influence of image quality, in terms of feature points detected per image, a test is run in which images with a biometric pattern size below a threshold are removed from the set and the confidence band obtained with the rest of the images is evaluated. Figure 7 shows the evolution of the confidence band versus the minimum detected points constraint. The confidence band does not grow significantly until a fairly high threshold is set. Taking as threshold the mean number of detected points over the whole test set (25.7), the confidence band grows from 0.1950 to 0.2701. Even removing half of the images, the band increases by only 0.075, suggesting that the gamma-corrected metric is highly robust to large variations in image quality.
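The experiment behind Figure 7 can be sketched as a filter followed by a band computation. The function name `band_with_min_points`, the tuple layout and the sample matchings are assumptions for illustration; the band is again taken as the lowest client score minus the highest attack score.

```python
def band_with_min_points(matchings, min_points):
    """Confidence band after discarding matchings that involve a pattern
    with fewer than min_points feature points.

    matchings: list of (score, size_a, size_b, same_individual) tuples.
    Returns None when one of the two classes becomes empty.
    """
    kept = [x for x in matchings
            if x[1] >= min_points and x[2] >= min_points]
    clients = [score for score, _, _, same in kept if same]
    attacks = [score for score, _, _, same in kept if not same]
    if not clients or not attacks:
        return None
    return min(clients) - max(attacks)


# Hypothetical matchings, not taken from the VARIA experiments.
matchings = [
    (0.90, 30, 28, True),
    (0.55, 12, 27, True),
    (0.35, 11, 26, False),
    (0.20, 30, 31, False),
]

band_all = band_with_min_points(matchings, 0)        # every matching kept
band_filtered = band_with_min_points(matchings, 20)  # small patterns removed
```

Evaluating this function over a range of thresholds gives a curve of the same kind as Figure 7.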

5 CONCLUSIONS AND FUTURE WORK

The performance of an authentication system based on feature points of the retinal vessel tree has been evaluated. Several metrics have been analyzed in order to test the classification capabilities of the system, and a new weighted metric has been defined. With an EER of 0 on both the training and test sets, the results indicate that the defined authentication process is suitable and reliable for the task. The feature points used to characterise individuals form a robust biometric pattern and allow the definition of similarity metrics that offer a good confidence band. Moreover, to reduce the influence of low quality images, a parameter $\gamma$ is introduced to correct the influence of the absolute quantity of matched points.

Future work includes the use of high-level information about the points to complete the metrics and a full image quality study to determine constraints on the contrast and illumination values.

ACKNOWLEDGEMENTS

This paper has been partly funded by the Xunta de Galicia through the grant contract PGIDIT06TIC10502PR.

REFERENCES

Jain, A., Hong, L., Pankanti, S., and Bolle, R. (1997). An identity authentication system using fingerprints. Proceedings of the IEEE, 85(9).

J. Bigüin, C. Chollet, and G. Borgefors, editors (1997). Proceedings of the 1st International Conference on Audio- and Video-Based Biometric Person Authentication, Crans-Montana, Switzerland.

Mariño, C., Penedo, M. G., Penas, M., Carreira, M. J., and González, F. (2006). Personal authentication using digital retinal images. Pattern Analysis and Applications, 9:21–33.

N. Ryan, C. H. and de Chazal, P. (2004). Registration of digital retinal images using landmark correspondence by expectation maximization. Image and Vision Computing, 22:883–898.

Ortega, M., Mariño, C., Penedo, M. G., Blanco, M., and González, F. (2006). Personal authentication based on feature extraction and optical nerve location in digital retinal images. WSEAS Transactions on Computers, 5(6):1169–1176.

Tico, M. and Kuosmanen, P. (2003). Fingerprint matching using an orientation-based minutia descriptor. IEEE Transactions on Pattern Analysis and Machine Intelligence, 25(8):1009–1014.

VARIA. Varpa retinal images for authentication. http://www.varpa.es/varia.html.

Zhao, W., Chellappa, R., Rosenfeld, A., and Phillips, P. (2000). Face recognition: A literature survey. Technical report, National Institute of Standards and Technology.
