A UTILITY CENTERED APPROACH FOR EVALUATING AND OPTIMIZING GEO-TAGGING

Albert Weichselbraun

Department of Computational Methods, Vienna University of Economics and Business, Vienna, Austria

Keywords:

Geo-tagging, Quality assessment, Evaluation, Utility model, GeoNames.

Abstract:

Geo-tagging is the process of annotating a document with its geographic focus by extracting a unique locality

that describes the geographic context of the document as a whole (Amitay et al., 2004). Accurate geographic

annotations are crucial for geospatial applications such as Google Maps or the IDIOM Media Watch on Cli-

mate Change (Hubmann-Haidvogel et al., 2009), but many obstacles complicate the evaluation of such tags.

This paper introduces an approach for optimizing geo-tagging by applying the concept of utility from eco-

nomic theory to tagging results. Computing utility scores for geo-tags allows a fine grained evaluation of the

tagger’s performance in regard to multiple dimensions specified in use case specific domain ontologies and

provides means for addressing problems such as different scope and coverage of evaluation corpora.

The integration of external data sources and evaluation ontologies with user profiles ensures that the framework

considers use case specific requirements. The presented model is instrumental in comparing different geo-

tagging settings, evaluating the effect of design decisions, and customizing geo-tagging to particular use cases.

1 INTRODUCTION

The vision of the Geospatial Web combines geo-

graphic data, Internet technology and social change.

Geospatial applications such as the IDIOM Me-

dia Watch on Climate Change (Hubmann-Haidvogel

et al., 2009) use geo-annotation services to refine

Web pages and media articles with geographic tags.

Geo-tagging is the process of assigning a unique ge-

ographic location to a document or text. In contrast

to geographic named entity recognition or toponym

resolution (Leidner, 2006) only one geographic loca-

tion which describes the document’s geography is ex-

tracted, even if multiple geographic references occur

in the document.

Most approaches toward geo-tagging employ machine learning technologies, gazetteers, or a combination of both to identify geo-entities. The

gazetteer’s size and tuning parameters determine the

geo-tagger’s performance and its bias towards smaller

geographic entities or higher-level units. Choosing these parameters often involves trade-offs; improvements in one particular area do not necessarily yield

better results in other areas.

For instance, increasing the gazetteer’s size in-

creases the number of detected geographic entities

but comes at the cost of a higher probability of am-

biguities. Gazetteer entries such as Fritz/at, Mo-

bile/Alabama/us, Reading/uk challenge the tagger’s

capability to distinguish geographic entities from

common terms without a geographic meaning. There-

fore, a framework which monitors the effect of de-

sign decisions on the tagger’s performance and yields

comparable performance metrics is essential for de-

signing and evaluating geo-taggers.

Clough and Sanderson (Clough and Sanderson,

2004) point out the importance of comparative evalu-

ations of geo-tagging as stimuli for academic and in-

dustrial research. Leidner (Leidner, 2006) provides

such an evaluation data set and describes the process of designing evaluation corpora. Nevertheless, evaluating geographical data mining remains a difficult task. Martins et al. (Martins et al., 2005) elaborate on the challenges involved in developing accurate methods for evaluating geographic tags, which include the

creation of geographic ontologies, interfaces for ge-

ographic information retrieval, and the development

of methods for ranking documents according to geo-

graphic relevance.

Providing a generic evaluation framework to compare geographic annotations is still a rather complex task. Parameters such as the gazetteer’s scope, coverage, correctness, granularity, balance and richness of annotation influence the outcome of any evaluation experiment (Leidner, 2006). Therefore, even standardized evaluation corpora such as the one designed by Leidner require geo-taggers to use a fixed gazetteer to provide comparable results.

Weichselbraun A. (2009). A UTILITY CENTERED APPROACH FOR EVALUATING AND OPTIMIZING GEO-TAGGING. In Proceedings of the International Conference on Knowledge Discovery and Information Retrieval, pages 134-139. DOI: 10.5220/0002289401340139. Copyright © SciTePress

Studies show (Hersh et al., 2000; Allan et al.,

2005) that information retrieval performance measures such as recall do not always correspond to adequate gains in actual user satisfaction

(Turpin and Scholer, 2006). Work by Turpin and

Hersh (Turpin and Hersh, 2001) suggests that im-

provements of information retrieval metrics do not

necessarily translate into better user performance for

specific search tasks.

Martins et al. (Martins et al., 2005) recommend closing the gap between performance metrics and

user experience by performing user studies. Despite

the additional effort required to implement such stud-

ies, work by Nielsen and Landauer (Nielsen and Lan-

dauer, 1993) suggests that approximately 80% of the

described usability problems can be detected with

only five users (Martins et al., 2005).

This work addresses the need for comparative

evaluations and user participation by applying the

concept of utility to geo-tagger evaluation metrics.

Intra-personal settings translate tagging results into

utility values and allow measuring the performance

according to the user’s specific needs.

The remainder of this paper is organized as fol-

lows. Section 2 elaborates on challenges faced in geo-

tagging. Section 3 presents a blueprint for applying

the concept of utility to geo-tagging and describes the

process of deploying a geo-evaluation ontology. Sec-

tion 4 demonstrates the usefulness of utility centered

evaluations by comparing the utility based technique

to conventional approaches. The paper closes with an

outlook and draws conclusions in Section 5.

2 EVALUATING GEO-TAGS

Web pages often contain multiple references to geographic locations. State-of-the-art geo-taggers use these references to identify the site’s geographic context and resolve ambiguities using the obtained context. Based on the identified geographic entities, a focus algorithm decides on the site’s geography (Amitay et al., 2004). Tuning parameters determine

the focus algorithm’s behavior, such as whether it is

biased toward higher-level geographic units (such as

countries and continents) or prefers low-level entities

such as cities or towns.

Biases make judging the tagger’s performance dif-

ficult. An article about Wolfgang Amadeus Mozart,

for example, contains one reference to Salzburg and

two to Vienna - both cities in Austria. Depending on

the focus algorithm’s configuration, the page’s geog-

raphy might be set to (i) Salzburg (bias toward low-

level geographic units), (ii) Austria (bias toward high-

level geographic units), or (iii) Vienna (bias toward

low-level geographic units with a large population).

The task of judging the value of a particular an-

swer is far from trivial, because each possible solution

has a certain degree of correctness. Work comparing

results to a gold standard often fails to value these nu-

ances.

This paper therefore suggests applying the concept

of utility, as found in economic theory, to the evalua-

tion of geo-taggers. The geographies returned by the

tagger are assessed based on preferences specified by

the user along different ontological dimensions and

get scored accordingly.

Maximizing utility instead of the number of correctly tagged documents provides advantages in regard to: (i) granularity - the architecture even accounts for slight variations in the grade of “correctness” of the proposed geo-tags; (ii) adaptability - users can specify their individual utility profiles, providing the architect with means to assess the tagger’s performance in accordance with the particular preferences of a user; and (iii) holistic observability - the geo-tagger’s designer is no longer restricted to observing gains, but can also consider costs in terms of computing power, storage, network traffic, and response times.

3 METHOD

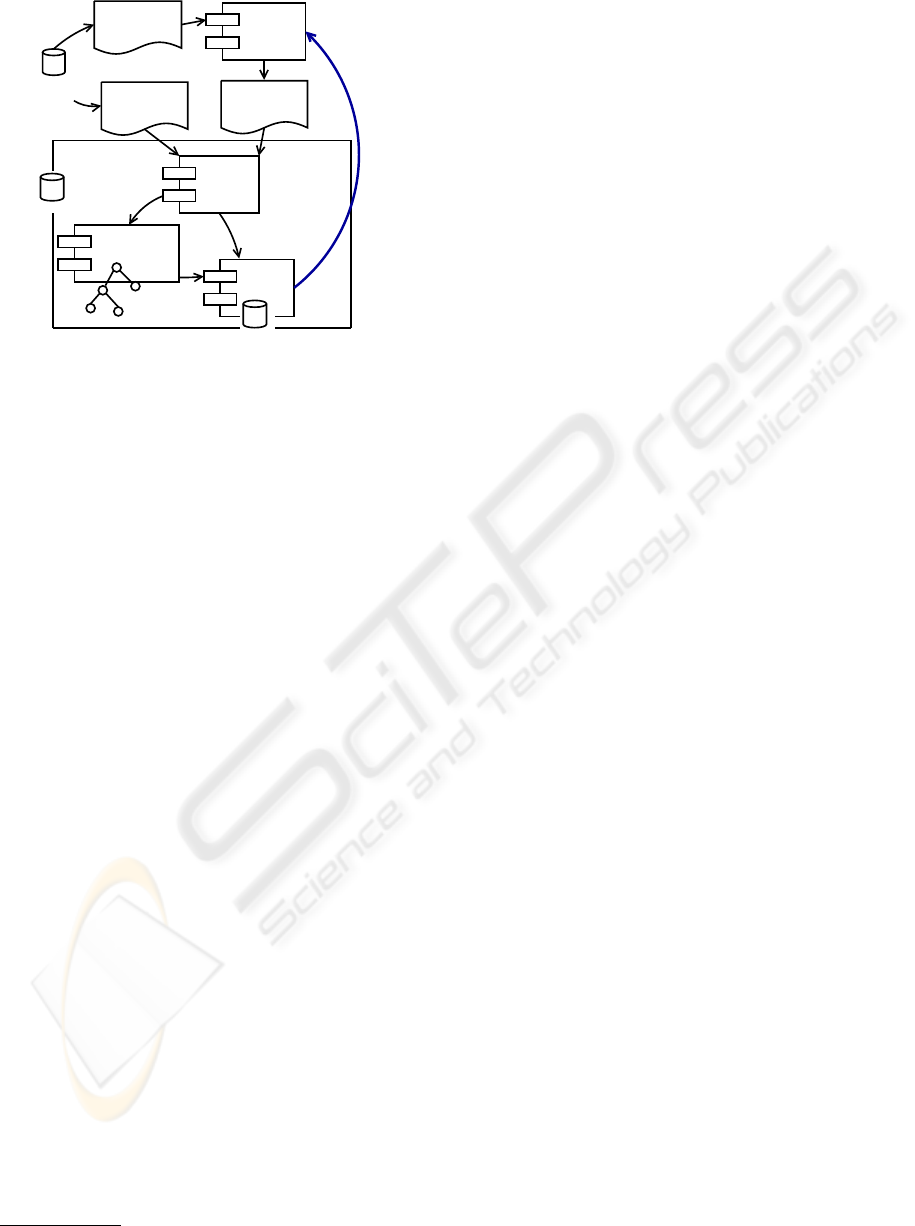

Figure 1 outlines how the utility based approach uses

ontologies to evaluate the geo-tagging performance.

The framework compares the geo-tagger’s annotations with tags retrieved from a gold standard. Correct results yield the full score; incorrect results are evaluated using ontology based scoring, which verifies whether the result is related to the correct answer in regard to the dimensions specified in the evaluation ontology and determines the extent of such a possible relation.

Queries against the data source identify such ontolog-

ical relationships between the computed and the cor-

rect tag, which are evaluated considering the answer’s

deviation from the correct answer and the user’s pref-

erence settings.


[Figure 1 components: Web Document, Evaluation Dataset, Gold Standard Tags, Geo-Tagger, Geo-Tags, Evaluation (correct / "incorrect"), Ontology based Scoring (evaluation ontology, data source, user preferences), Scoring (utility score, relation).]

Figure 1: Ontology-based evaluation of geo-tags.

3.1 Ontology and Data Source

The evaluation ontology specifies the ontological di-

mensions considered for the evaluation task. Object

properties such as x partOf y, or x isNeighbor y spec-

ify the relations between the “correct” answer and its

deviations.

The data source provides instance data covering

the location entities identified by the tagger. It there-

fore allows querying pairs of objects to retrieve their

relations in regard to the evaluation ontology. Data

source and evaluation ontology are closely related.

Depending on the use case and available resources a

bottom-up (design the ontology according to an exist-

ing data source) or a top-down approach (design the

ontology and create a fitting data source) will be cho-

sen for the evaluation ontology’s design. The ontol-

ogy’s object properties specify valid ontological di-

mensions for the evaluation process.

Existing ontologies containing geographical categories, such as the one applied by David Warren and Fernando Pereira (Warren and Pereira, 1982) in the Chat-80 question-answering system, may act as a template for such an evaluation ontology. This work uses a bottom-up approach based on the publicly available GeoNames database (geonames.org). GeoNames’ place hierarchy web service provides functions to determine an entry’s children, siblings, hierarchy, and neighbors. Functions such as findNearby return streets, place names, postal codes, etc. for nearby locations, and auxiliary methods deliver annotations such as postal codes, Wikipedia entries, weather stations and observations for a given location. For a full list of the supported functions please refer to the GeoNames Web service documentation (www.geonames.org/export/ws-overview.html).
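As an illustration of how such relation queries might be addressed, the following sketch only builds request URLs and does not contact the service. The endpoint names (hierarchyJSON, childrenJSON, siblingsJSON, neighboursJSON) and the geonameId and username parameters are taken from the public GeoNames Web service overview and should be checked against it; the helper relation_query_url, the identifier 123456 and the account name "demo" are hypothetical placeholders, not part of the original framework.

```python
from urllib.parse import urlencode

# Base address of the GeoNames Web services (assumption: JSON variants are used).
BASE_URL = "http://api.geonames.org/"

# Mapping from the relations discussed in the text to GeoNames endpoints.
RELATION_ENDPOINTS = {
    "hierarchy": "hierarchyJSON",
    "children": "childrenJSON",
    "siblings": "siblingsJSON",
    "neighbors": "neighboursJSON",
}


def relation_query_url(relation, geoname_id, username):
    """Build the request URL for one relation of one GeoNames entry."""
    endpoint = RELATION_ENDPOINTS[relation]
    query = urlencode({"geonameId": geoname_id, "username": username})
    return f"{BASE_URL}{endpoint}?{query}"


# 123456 and "demo" are placeholders for a real entry id and a real account.
url = relation_query_url("hierarchy", 123456, "demo")
print(url)
```

Keeping the relation-to-endpoint mapping in one table makes it easy to restrict an evaluation to a subset of relations.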

Due to the applied bottom-up approach, the created ontology only considers relations derived from GeoNames entries. Despite the ontology’s general scope, its application to other use cases might require refinements of the ontological constructs. The ontology supports standard properties such as partOf, isNeighbor and sibling relationships as well as data type properties assigning entities coordinates (centerCoordinates), an area (totalArea), and a population (totalPopulation), if applicable. The contains property helps to distinguish between geo-entities completely containing another entity (e.g., Europe contains Austria), and entities which are only partly contained by another entity (e.g., Russia is partOf Europe, but Europe does not contain it).

Combining the geo-evaluation ontology’s knowl-

edge with queries for ontological instances in the

GeoNames database yields an effective framework for

the evaluation of geographic tags. Queries alongside

the ontological dimensions allow a fine grained assessment of the tagger’s result including the extent to

which “incorrect” tags contribute helpful information.

3.2 User Preferences

User preferences determine the translation of test re-

sults into utility scores. Equation 1 shows a utility

function assuming linearly independent utility values.

$$ u = \sum_{a_i \in S_A} f_{eval}(a_i) \tag{1} $$

The utility equals the sum of the utility gained by an answer set $S_A = \{a_1, a_2, \ldots, a_n\}$, which is evaluated using an evaluation function $f_{eval}$. To simplify the computation of the utility, many current evaluation metrics only consider correct answers as useful ($f_{eval} = 1$ for correct answers and 0 otherwise).

Such approaches are too coarse to detect minor de-

teriorations in the tagger’s performance, because the

utility generated by a particular answer is highly use

case and user specific. Thus designing geo-taggers re-

quires more fine grained methods which consider the

user’s preferences and fine nuances of correctness.
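The coarseness of a binary evaluation function can be illustrated with a minimal sketch of Equation 1. The tag strings and the two taggers below are invented for illustration only; the function names utility and binary_eval are likewise assumptions.

```python
def utility(answers, f_eval):
    """Equation 1: sum the utility of every (tag, gold standard) pair."""
    return sum(f_eval(tag, gold) for tag, gold in answers)


def binary_eval(tag, gold):
    """Binary evaluation function: 1 for a correct answer, 0 otherwise."""
    return 1.0 if tag == gold else 0.0


# Hypothetical results: both taggers miss the second document, but tagger A
# stays within the correct country while tagger B does not.
tagger_a = [("at/Vienna", "at/Vienna"), ("at/Salzburg", "at/Vienna")]
tagger_b = [("at/Vienna", "at/Vienna"), ("us/Alabama/Mobile", "at/Vienna")]

utility_a = utility(tagger_a, binary_eval)
utility_b = utility(tagger_b, binary_eval)
print(utility_a, utility_b)
```

Both taggers receive the same utility, although the first tagger's error is arguably less severe; a graded evaluation function is needed to tell them apart.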

The evaluation ontology outlines these nuances in

terms of ontological dimensions and the user prefer-

ences address the issue of assigning use case specific

weights to those dimensions. This approach adds the

following Equation to evaluate partly correct answers:

$$ f_{eval}(a_i) = \prod_{j=1}^{n} w_{d_j} \tag{2} $$

with a user specific weight $w_{d_j} \in [0,1]$ for deviations alongside the ontological dimension $d_j$. Identification of paths between the tag $a_k$ and the correct answer $a_k^*$ along the ontological dimensions yields one $w_{d_j}$ for every movement. If no path between $a_k$ and $a_k^*$ exists, $f_{eval}$ is set to zero. If multiple paths lead to $a_k^*$, the framework applies resolving strategies such as (i) use the shortest path, (ii) maximize $\prod_{j=1}^{n} w_{d_j}$, or (iii) sum the utility of all paths and use $f_{eval} = \min(f_{eval}^{sum}, 1)$.

[Figure 2 nodes: Vienna (C), Salzburg (C), Vienna (St), Salzburg (St), Upper Austria (St), Lower Austria (St), Austria (Country); edge labels: partOf, contains, isNeighbor.]

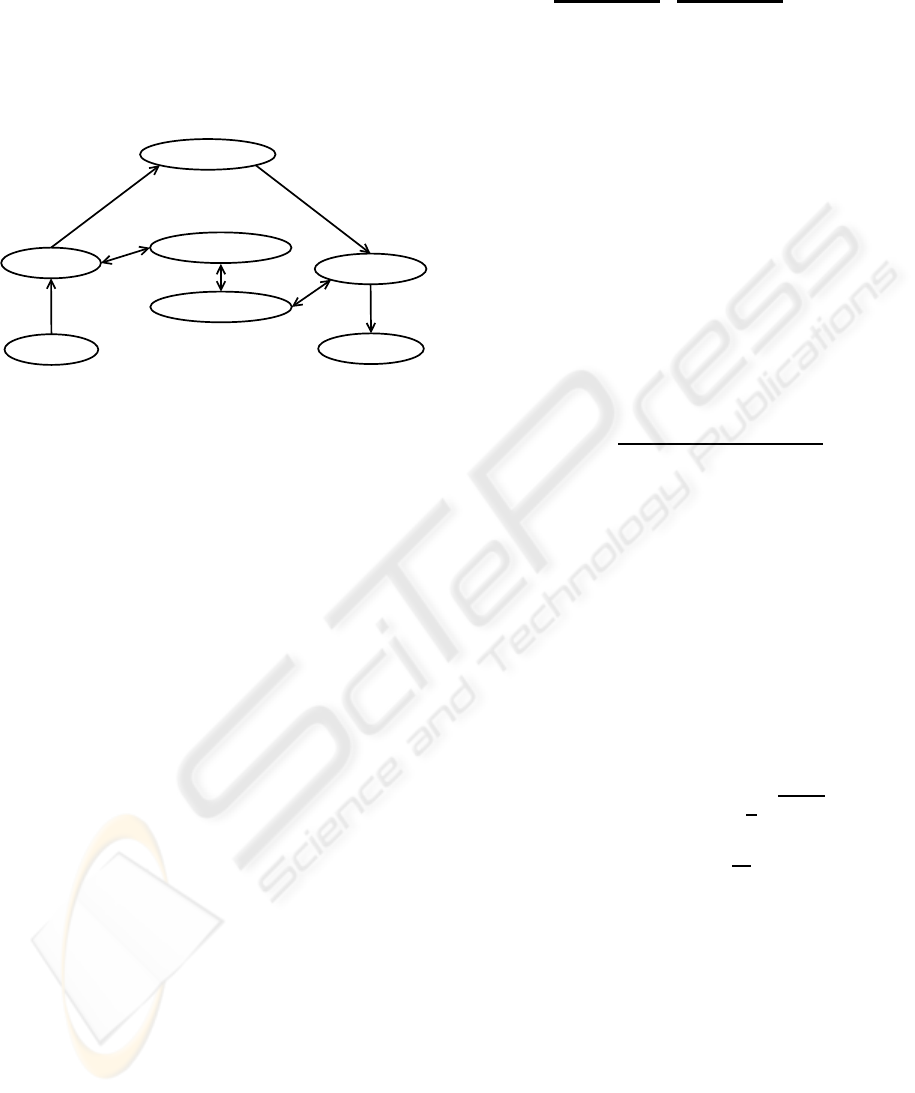

Figure 2: Evaluation of partially correct results.

Figure 2 demonstrates the application of the scoring procedure using an evaluation ontology designed for proximity based scoring. In the example the tagger provides the tag Salzburg instead of Vienna. Two paths lead to the correct answer: (i) Vienna (City) via partOf to Vienna (State), via isNeighbor to Lower Austria, Upper Austria and Salzburg (State), and via contains to Salzburg (City), and (ii) Vienna (City) via partOf to Vienna (State) and Austria (Country), and via contains to Salzburg (State) and Salzburg (City). Depending on the chosen resolution strategy, $f_{eval}$ equals $w_{partOf} \, (w_{isNeighbor})^3 \, w_{contains}$ or $(w_{partOf})^2 \, (w_{contains})^2$.
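The path based scoring of Figure 2 can be sketched as follows. The edge list mirrors the two paths described in the text; the weight values (0.8 for partOf and contains, 0.9 for isNeighbor), the strategy names and all function names are assumptions chosen for illustration and do not stem from the original implementation.

```python
from math import prod

# Directed edges (relation, target) following the two paths of Figure 2.
EDGES = {
    "Vienna (C)": [("partOf", "Vienna (St)")],
    "Vienna (St)": [("isNeighbor", "Lower Austria (St)"),
                    ("partOf", "Austria (Country)")],
    "Lower Austria (St)": [("isNeighbor", "Upper Austria (St)")],
    "Upper Austria (St)": [("isNeighbor", "Salzburg (St)")],
    "Austria (Country)": [("contains", "Salzburg (St)")],
    "Salzburg (St)": [("contains", "Salzburg (C)")],
}

# Hypothetical user preferences: one weight per ontological dimension.
WEIGHTS = {"partOf": 0.8, "contains": 0.8, "isNeighbor": 0.9}


def relation_paths(start, goal, visited=()):
    """Yield every cycle-free path as a list of relation names."""
    if start == goal:
        yield []
        return
    for relation, target in EDGES.get(start, []):
        if target not in visited:
            for rest in relation_paths(target, goal, visited + (start,)):
                yield [relation] + rest


def f_eval(start, goal, strategy):
    """Equation 2 combined with the three resolving strategies."""
    paths = list(relation_paths(start, goal))
    if not paths:
        return 0.0  # no path: the answer carries no utility
    scores = [prod(WEIGHTS[r] for r in path) for path in paths]
    if strategy == "shortest":
        shortest = min(range(len(paths)), key=lambda i: len(paths[i]))
        return scores[shortest]
    if strategy == "max":
        return max(scores)
    if strategy == "sum":
        return min(sum(scores), 1.0)
    raise ValueError(f"unknown strategy: {strategy}")


for name in ("shortest", "max", "sum"):
    print(name, f_eval("Vienna (C)", "Salzburg (C)", name))
```

With these weights the shortest path (via Austria) and the highest scoring path (via the neighboring states) differ, which shows that the choice of resolving strategy can change the resulting utility.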

3.3 Scoring

Many heuristics for the evaluation of geo-tags have

emerged. Martins et al. (Martins et al., 2005) provide a number of possible measures for geographical relevance including (i) Euclidean distance, (ii) extent of overlap, (iii) topological distance such as adjacency, connectivity or hierarchical containment, and (iv) the similarity in semantic structures. This work proposes a hybrid approach considering Euclidean distance, hierarchical containment, and semantic structures as formalized in the evaluation ontology by computing similarity based on the number of correctly identified hierarchy levels and the distance between the correctly and incorrectly tagged entity.

At first, a tagging result is followed along its hierarchical structure (compare Figure 3) until its geo-entity differs from the correct answer.

correct: at/National Park Hohe Tauern

detected: at/Carinthia/Spittal/Heiligenblut (the shared prefix at is scored by $u_c^h$, the remainder /Carinthia/Spittal/Heiligenblut by $u_c^o$)

Figure 3: Scoring for hierarchical entries ($u_c^h$) and deviation alongside dimensions specified in the evaluation ontology ($u_c^o$).

The tagging utility $u_c$ consists of a utility for the correctly identified hierarchical levels $u_c^h$ and a utility assigned to deviations along the dimensions specified in the evaluation ontology $u_c^o$ for partially correct entries:

$$ u_c = u_c^h + u_c^o \tag{3} $$

$$ u_c^o = (1 - u_c^h) \cdot f_{eval} \tag{4} $$

The algorithm computes $u_c^o$ and $u_c^h$ based on the number of geographic levels on which the results disagree:

$$ u_c^h = \frac{|S_{correct} \cap S_{suggested}|}{\max(|S_{correct}|, |S_{suggested}|)} \tag{5} $$

Equal tags yield a $u_c^h$ of one and therefore a $u_c^o$ of zero. Deviations between the tags lead to $u_c^h < 1$ and, whenever $f_{eval} > 0$, to $u_c^o > 0$.
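Equations 3 to 5 can be sketched in a few lines, using the example of Figure 3. The sketch assumes that tags are slash separated hierarchy paths and that the shared hierarchy levels are the common prefix of both paths, which coincides with the set intersection of Equation 5 for this example; the value 0.5 for the ontology based score is a hypothetical placeholder.

```python
def shared_prefix_length(correct, suggested):
    """Count the leading hierarchy levels on which both tags agree."""
    count = 0
    for a, b in zip(correct, suggested):
        if a != b:
            break
        count += 1
    return count


def hierarchical_utility(correct, suggested):
    """Equation 5: shared levels divided by the longer hierarchy."""
    longest = max(len(correct), len(suggested))
    if longest == 0:
        raise ValueError("at least one tag must contain a hierarchy level")
    return shared_prefix_length(correct, suggested) / longest


def tagging_utility(correct, suggested, f_eval):
    """Equations 3 and 4: hierarchical part plus ontology based part."""
    u_h = hierarchical_utility(correct, suggested)
    u_o = (1.0 - u_h) * f_eval
    return u_h + u_o


correct = "at/National Park Hohe Tauern".split("/")
detected = "at/Carinthia/Spittal/Heiligenblut".split("/")

u_h = hierarchical_utility(correct, detected)
u_c = tagging_utility(correct, detected, f_eval=0.5)  # 0.5 is a placeholder
print(u_h, u_c)
```

Because the ontology based part is scaled by the remaining share of the hierarchy, the total utility cannot exceed one as long as the evaluation function stays within [0,1].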

Equations 6 and 7 show how $f_{eval}$ is composed when applying the distance centered evaluation. The idea of this method is to combine the information retrieved in terms of deviations alongside the ontological dimensions in the evaluation ontology with the additional accuracy retrieved from the “wrong” data based on the distance between the given and the correct location ($d$) in comparison to the expected distance ($d_e$) between two randomly selected points in a circular area as big as the area of the last correct item ($A_{S_l}$) in the tagging hierarchy:

$$ d_e = E(d_{random}) = \frac{1}{3} \sqrt{A_{S_l}/\pi} \tag{6} $$

$$ f_{eval}^{d} = \max\left(0, \left(1 - \frac{d}{d_e} \prod_{i=1}^{n} w_{d_i}\right)\right) \tag{7} $$

Summing the utility gained from the identified

geographic entities yields the tagger’s total utility for

a particular tagging use case.

4 EVALUATION

To demonstrate the influence of user specific settings such as the gazetteer size or the tagger’s scope on the geo-tagger’s results, an evaluation using 15 000 randomly selected articles from the Reuters corpus (trec.nist.gov/data/reuters/reuters.html) has been performed. The evaluation compares results obtained from the OpenCalais Web service (www.opencalais.com) and the geoLyzard tagger used in the IDIOM Media Watch on Climate Change (www.ecoresearch.net/climate) with location reference data from the Reuters corpus. The Reuters corpus specifies the location on a fixed scope (country or political organization), while the two taggers determine the scope dynamically based on the document’s content.

Table 1: Evaluation of geo-tags created by OpenCalais and geoLyzard.

| Comparison | A ≡ B | A ⊒ B | A ⊑ B | A ⊒ B ∨ A ⊑ B |
| --- | --- | --- | --- | --- |
| OpenCalais vs. Reuters | 20.15 % | 71.68 % | 31.45 % | 78.43 % |
| geoLyzard vs. Reuters | 16.82 % | 62.25 % | 25.01 % | 74.50 % |
| OpenCalais vs. geoLyzard | 47.25 % | 50.63 % | 48.15 % | 62.23 % |

The experiment evaluates geo-tags according to

four different criteria:

1. verbatim correctness (A ≡ B): Both geo-taggers identify exactly the same geographic entity.

2. more detailed specification (A ⊒ B): The found location is an equal or a more detailed specification of the gold standard’s entity (e.g., eu/at/Salzburg is more detailed than eu/at).

3. more general specification (A ⊑ B): The tagger returns an equal or more general specification of the gold standard’s entity (e.g., eu/it is a more general specification than eu/it/Florence).

4. more detailed or more general specification (A ⊒ B ∨ A ⊑ B): The location satisfies either condition 2 or 3.

In contrast to the evaluation of a tag’s verbatim

correctness the other three test settings require do-

main knowledge as outlined in Section 3.
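The four criteria can be sketched as simple predicates. The sketch assumes that each tag is given as a slash separated hierarchy path, so that "more detailed" and "more general" reduce to prefix tests; the evaluation described here derives these relations from the ontology instead. The sample pairs are invented and do not reproduce the figures of Table 1.

```python
def levels(tag):
    """Split a tag such as 'eu/at/Salzburg' into its hierarchy levels."""
    return tuple(tag.strip("/").split("/"))


def is_prefix(short, long):
    """True if the hierarchy `short` is a leading part of `long`."""
    return long[:len(short)] == short


def verbatim(a, b):
    """Criterion 1 (A ≡ B): both tags name the same entity."""
    return levels(a) == levels(b)


def more_detailed(a, b):
    """Criterion 2 (A ⊒ B): A equals B or refines it."""
    return is_prefix(levels(b), levels(a))


def more_general(a, b):
    """Criterion 3 (A ⊑ B): A equals B or generalizes it."""
    return is_prefix(levels(a), levels(b))


def detailed_or_general(a, b):
    """Criterion 4: condition 2 or condition 3 holds."""
    return more_detailed(a, b) or more_general(a, b)


def share(pairs, criterion):
    """Percentage of (tagger, gold standard) pairs satisfying a criterion."""
    return 100.0 * sum(criterion(a, b) for a, b in pairs) / len(pairs)


# Hypothetical (tagger result, gold standard) pairs.
pairs = [
    ("eu/at/Salzburg", "eu/at"),
    ("eu/it", "eu/it/Florence"),
    ("eu/at", "eu/at"),
    ("us/al/Mobile", "eu/at"),
]
for criterion in (verbatim, more_detailed, more_general, detailed_or_general):
    print(criterion.__name__, share(pairs, criterion))
```

Since criteria 2 and 3 both include equality, their shares overlap, which is why the fourth column of Table 1 is smaller than the sum of the second and third.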

Table 1 summarizes the evaluation’s results. Both taggers tend to deliver most of the data at a more fine grained scope than country level, which leads to only around 20% of verbatim conformance with the gold standard. Considering hierarchical data in the evaluation boosts the evaluation metric to approximately 75%. The remaining deviations might be caused by (i) different configurations of the focus algorithms used in the taggers, (ii) changes in the geopolitical situation such as the break-up of Yugoslavia into multiple countries, (iii) missing geographic references in the original articles, and (iv) real misclassifications. Deviations due to different focus algorithms as well as changes in the geopolitical situation might be addressed by extended evaluation ontologies, supporting more complex relations between the geo-entities. In contrast, an evaluation of the test corpus and a manual inspection of the returned geo-tags are required to quantify the share of the latter two causes.

The experiment illustrates how the inclusion of

domain knowledge improves the comparability of

geo-tagging evaluation metrics. The presented eval-

uation only uses a subset of two relations (partOf and

contains) from the ontology introduced in Section 3.

Applying all relations available at GeoNames will

yield even more accurate performance metrics. More

sophisticated approaches might even implement ge-

ographic reasoning (e.g., through Voronoi polygons

(Alani et al., 2001) or spatial indexes based on uni-

form grids (Riekert, 2002)).

5 OUTLOOK AND CONCLUSIONS

This work presented a utility-testing centered ap-

proach for optimizing geo-taggers. The contributions

of this paper are (i) introducing a fine grained notion

of correctness in terms of a tagging utility applicable

to geo-tagging results, (ii) presenting an approach for

the evaluation of geo-taggers, (iii) demonstrating the

concrete implementation of such a framework by de-

signing a geo-evaluation ontology customized to be

used together with the GeoNames Web service, and

(iv) evaluating the effect of ontological knowledge

and external data on the evaluation metrics.

Comparing utility instead of geo-tags aids in overcoming obstacles such as different scopes, focus

algorithms, granularity, and coverage. Conventional

approaches which limit the tagger’s scope or standardize the used gazetteer are not suitable for evaluating more sophisticated applications which provide

tags at many different scopes according to the user’s

preferences. The notion of utility provides a very

fine grained, user specific measure for the tagger’s

performance. Community efforts such as FreeBase

and WikiDB provide a solid base for extending this

method to other dimensions as outlined in Section 2.

Considering query cost in evaluating the tagger’s per-

formance is another interesting research avenue (We-

ichselbraun, 2008).

Future research will transfer these techniques and

results to more complex use cases and integrate mul-

tiple data sources.


REFERENCES

Alani, H., Jones, C. B., and Tudhope, D. (2001). Voronoi-

based region approximation for geographical informa-

tion retrieval with gazetteers. International Journal of

Geographical Information Science, 15(4):287–306.

Allan, J., Carterette, B., and Lewis, J. (2005). When will in-

formation retrieval be “good enough”? In SIGIR ’05:

Proceedings of the 28th annual international ACM SI-

GIR conference on Research and development in in-

formation retrieval, pages 433–440, New York, NY,

USA. ACM.

Amitay, E., Har’El, N., Sivan, R., and Soffer, A. (2004).

Web-a-where: geotagging web content. In SIGIR ’04:

Proceedings of the 27th annual international ACM SI-

GIR conference on Research and development in in-

formation retrieval, pages 273–280, New York, NY,

USA. ACM.

Clough, P. and Sanderson, M. (2004). A proposal for com-

parative evaluation of automatic annotation for geo-

referenced documents. In Proceedings of the Work-

shop on Geographic Information Retrieval at SIGIR

2004.

Hersh, W., Turpin, A., Price, S., Chan, B., Kramer, D.,

Sacherek, L., and Olson, D. (2000). Do batch and

user evaluations give the same results? In Proceed-

ings of the 23rd annual international ACM SIGIR con-

ference on Research and development in information

retrieval, pages 17–24, New York, NY, USA. ACM.

Hubmann-Haidvogel, A., Scharl, A., and Weichselbraun, A.

(2009). Multiple coordinated views for searching and

navigating web content repositories. Information Sci-

ences, 179(12):1813–1821.

Leidner, J. L. (2006). An evaluation dataset for the toponym

resolution task. Computers, Environment and Urban

Systems, 30:400–417.

Martins, B., Silva, M. J., and Chaves, M. S. (2005). Challenges and resources for evaluating geographical IR.

In GIR ’05: Proceedings of the 2005 workshop on

Geographic information retrieval, pages 65–69, New

York, NY, USA. ACM.

Nielsen, J. and Landauer, T. K. (1993). A mathematical

model of the finding of usability problems. In Pro-

ceedings of the INTERACT ’93 and CHI ’93 confer-

ence on Human factors in computing systems, pages

206–213, Amsterdam, The Netherlands. ACM.

Riekert, W.-F. (2002). Automated retrieval of information in

the internet by using thesauri and gazetteers as knowl-

edge sources. Journal of Universal Computer Science,

8(6):581–590.

Turpin, A. H. and Hersh, W. (2001). Why batch and user

evaluations do not give the same results. In SIGIR ’01:

Proceedings of the 24th annual international ACM SI-

GIR conference on Research and development in in-

formation retrieval, pages 225–231, New York, NY,

USA. ACM.

Turpin, A. H. and Scholer, F. (2006). User performance ver-

sus precision measures for simple search tasks. In SI-

GIR ’06: Proceedings of the 29th annual international

ACM SIGIR conference on Research and development

in information retrieval, pages 11–18, New York, NY,

USA. ACM.

Warren, D. H. D. and Pereira, F. C. N. (1982). An ef-

ficient easily adaptable system for interpreting natu-

ral language queries. Computational Linguistics, 8(3-

4):110–122.

Weichselbraun, A. (2008). Strategies for optimizing query-

ing third party resources in semantic web applica-

tions. In Cordeiro, J., Shishkov, B., Ranchordas, A.,

and Helfer, M., editors, 3rd International Conference

on Software and Data Technologies, pages 111–118,

Porto, Portugal.
