A MOBILE INTELLIGENT SYNTHETIC CHARACTER WITH

NATURAL BEHAVIOR GENERATION

Jongwon Yoon and Sung-Bae Cho

Department of Computer Science, Yonsei University, Seoul, Korea

Keywords: Intelligent synthetic character, Intelligent agent, Smartphones.

Abstract: As cell phones have become essential tools for human communication, and as smartphones in particular have emerged as suitable devices for realizing ubiquitous computing, personalized intelligent services on smartphones are increasingly required. Many studies have sought to implement such services, and the intelligent synthetic character is one of them. This paper proposes a structure for an emotional intelligent synthetic character that generates natural and flexible behavior in various situations. To make the character's behavior more natural, we use Bayesian networks to infer the user's states and the OCC model to create the character's emotion. The behaviors are then generated by behavior networks that take this information as input. Finally, we conducted a usability test to evaluate the proposed structure of the character.

1 INTRODUCTION

Cell phones have recently come to play an indispensable role in human communication. As the number of subscribers has grown and transmission speeds have improved, various additional services using mobile networks have been developed, and many enterprises have competed to release high-end devices, especially smartphones, which combine the functions of a PDA (Personal Digital Assistant) and a cell phone. Moreover, as smartphones become suitable devices for realizing ubiquitous computing environments, the need for personalized intelligent services on smartphones is increasing.

The intelligent synthetic character is an autonomous agent that behaves based on its own internal states and can interact with a person in real time. It can be applied to entertainment robots and service robots (Kim et al., 2002).

One issue in implementing the intelligent synthetic character is making it seem alive. To achieve this, the character should express various behaviors naturally and flexibly, so that the user feels that it behaves with its own consciousness rather than by following a few simple behavior-generation rules.

In this paper, we propose a method for generating the behaviors of the synthetic character. Bayesian networks are used to infer the user's states, and the OCC model is used to generate the character's emotions. Behavior networks then use the information gathered in these steps to generate the character's natural and flexible behaviors.

2 RELATED WORKS

Research on intelligent services for mobile devices has mostly focused on providing personalized services using the contexts around users and smartphones. Schiaffino et al. (2002) designed the software structure of a personalized schedule manager agent and proposed a case-based reasoning method using Bayesian networks. Kim et al. (2004) proposed an intelligent agent that selects its behavior using its internal state and learns through interaction with the user. Marsa-Maestre et al. (2008) proposed a mobile agent architecture for service personalization that is applicable to smart environments. Sung et al. (2008) designed an intelligent agent that infers the user's status with case-based learning.


Yoon J. and Cho S. (2010).

A MOBILE INTELLIGENT SYNTHETIC CHARACTER WITH NATURAL BEHAVIOR GENERATION.

In Proceedings of the 2nd International Conference on Agents and Artificial Intelligence - Agents, pages 315-318

DOI: 10.5220/0002723003150318

Copyright © SciTePress

Considering these points, we designed a mobile intelligent synthetic character that recognizes external situations and behaves intelligently. To achieve this, we used an emotion model to create the emotional state of the character and a behavior network to generate natural and flexible behaviors that reflect that emotional state.

3 INTELLIGENT SYNTHETIC

CHARACTER

The intelligent synthetic character consists of four components: a perception system, an emotion system, a motivation system, and a behavior generation system. Figure 1 shows the architecture of the intelligent synthetic character.

Figure 1: An architecture of an intelligent synthetic

character.

3.1 Perception System

The perception system gathers information available on the smartphone, such as contact information, schedules, call logs, and device states. It also infers the states of the user. In this paper, the character infers the user's valence and arousal states and the business state.

The Valence-Arousal (V-A) model is a simple model that represents an emotion as a position in a two-dimensional space. It has been commonly used in previous studies on emotion recognition (Picard, 1995). However, we do not deal with the user's exact emotions mapped directly onto the V-A space, such as happiness, sadness, or fear, because it is hard to recognize the user's exact emotional state from the simple contexts available on a smartphone. Instead, the system infers the user's valence and arousal states only roughly.

The perception system uses Bayesian networks as its context-reasoning method. The Bayesian network is a representative method for inferring states from insufficient information in uncertain situations. We designed Bayesian networks that infer the user's valence, arousal, and business states. Figure 2 shows a part of the Bayesian networks we designed.

Figure 2: A part of the Bayesian networks.
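To illustrate the kind of inference the perception system performs, the following is a minimal sketch of exact inference in a very small Bayesian network. The node names (`schedule_now`, `call_rejected`), the network structure, and all probabilities are hypothetical and chosen only for illustration; they are not taken from the networks in Figure 2.

```python
# Hypothetical two-evidence network: a hidden "busy" state with two
# observable smartphone contexts that depend on it. All numbers are
# illustrative assumptions, not values from the paper.
PRIOR_BUSY = 0.3

# P(evidence is True | busy), keyed by the value of the busy node.
CPT = {
    "schedule_now": {True: 0.8, False: 0.2},
    "call_rejected": {True: 0.6, False: 0.1},
}


def posterior_busy(evidence):
    """Return P(busy = True | evidence) by enumerating both values of busy."""
    joint = {}
    for busy in (True, False):
        p = PRIOR_BUSY if busy else 1.0 - PRIOR_BUSY
        for name, observed in evidence.items():
            p_true = CPT[name][busy]
            p *= p_true if observed else 1.0 - p_true
        joint[busy] = p
    # Normalize the joint probabilities over the hidden node.
    return joint[True] / (joint[True] + joint[False])


if __name__ == "__main__":
    observed = {"schedule_now": True, "call_rejected": True}
    print(f"P(busy | evidence) = {posterior_busy(observed):.3f}")
```

Observing only a subset of the evidence nodes works the same way: unobserved nodes are simply left out of the `evidence` dictionary, which is how such a network copes with incomplete context.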

3.2 Emotion System

Emotions are particularly important for characters,

because they are an essential part of the self-

revelation feature of messages (Bartneck, 2002). The

emotion system uses OCC model (Ortony et al.,

1988) to create the character's affections among

various emotion models.

The OCC model is the standard model proposed

for a synthesis of emotions, and that is based on

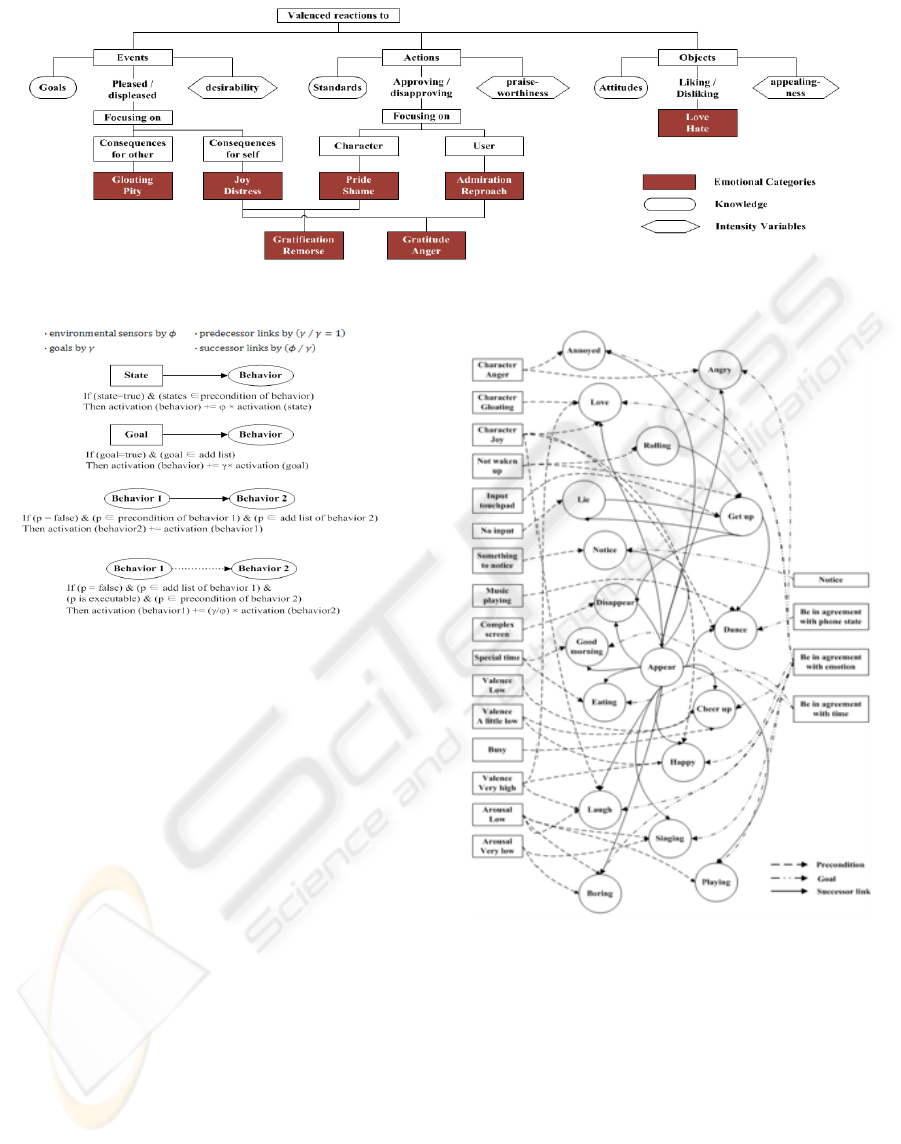

cognitive appraisal theory of emotion. In this paper,

we modified the original OCC model and proposed

the modified OCC model which has 14 emotional

categories. Figure 3 shows the proposed modified

OCC model. The emotion that has the highest

intensity among whole categories is chosen as the

current emotion of the character.
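The selection rule described above can be sketched in a few lines. The emotion names below are taken from the original OCC model for illustration only, and the intensity values are made up; the 14 categories of the modified model are not listed in the text.

```python
def current_emotion(intensities):
    """Return the emotion category with the highest intensity.

    `intensities` maps an emotion name to a numeric intensity.
    If several categories tie, the first one encountered is returned.
    """
    return max(intensities, key=intensities.get)


if __name__ == "__main__":
    # Illustrative intensities; names and values are assumptions.
    intensities = {"joy": 0.2, "distress": 0.7, "hope": 0.4, "fear": 0.1}
    print(current_emotion(intensities))
```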

3.3 Motivation System

Goals also play an important part in the intelligent synthetic character. Like a human, the character behaves according to its own goals, which motivate it. This makes the character more realistic. The character's goals are not fixed; they change according to the current situation.

For example, when the battery is low, the motivation system sets the goal to ‘Be in agreement with phone state’ in order to warn the user that the battery is low.

3.4 Behavior Generation System

In the behavior generation system, the behavior network (Maes, 1989) is used to select the most natural and suitable behavior for the situation. The behavior network is a model that consists of relationships between behaviors, goals, and the external environment, and it selects the most suitable behavior for the current situation. Behaviors, external goals, and internal goals are connected with each other through links.

ICAART 2010 - 2nd International Conference on Agents and Artificial Intelligence

Figure 3: Modified OCC Model.

Figure 4: A part of the behavior network.

The behavior network selects the behavior that has the highest activation energy. The activation energy represents the degree to which a behavior is activated. Figure 5 shows the spreading of activation and the associated weights.

In this paper, the proposed behavior generation network generates 31 behaviors using conditions such as the user's states, the character's emotion, the user's current behavior, and the current time, together with the goals set by the motivation system. Figure 4 shows a part of the designed behavior generation network.
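The selection step can be illustrated with a heavily simplified sketch in the spirit of Maes (1989). It only injects activation from the current state and from the goals, then picks the executable behavior with the highest activation. The links between behaviors, normalization, and the activation threshold of the full model are omitted, and the behavior names, propositions, and weights (`phi`, `gamma`) are hypothetical rather than taken from the network in Figure 4.

```python
from dataclasses import dataclass


@dataclass
class Behavior:
    name: str
    preconditions: frozenset  # propositions that must hold to execute
    add_list: frozenset       # propositions or goals the behavior achieves
    activation: float = 0.0


def spread_activation(behaviors, state, goals, phi=1.0, gamma=1.0):
    """Add activation from the state (weight phi) and the goals (weight gamma)."""
    for b in behaviors:
        from_state = phi * len(b.preconditions & state)
        from_goals = gamma * len(b.add_list & goals)
        b.activation += from_state + from_goals


def select_behavior(behaviors, state):
    """Return the executable behavior with the highest activation, or None."""
    executable = [b for b in behaviors if b.preconditions <= state]
    return max(executable, key=lambda b: b.activation, default=None)


if __name__ == "__main__":
    # Hypothetical behaviors and propositions, for illustration only.
    behaviors = [
        Behavior("warn_battery", frozenset({"battery_low"}),
                 frozenset({"Be in agreement with phone state"})),
        Behavior("greet", frozenset({"user_idle"}),
                 frozenset({"user_pleased"})),
        Behavior("dance", frozenset({"user_happy"}),
                 frozenset({"user_pleased"})),
    ]
    state = {"battery_low", "user_idle"}
    goals = {"Be in agreement with phone state"}

    spread_activation(behaviors, state, goals)
    chosen = select_behavior(behaviors, state)
    print(chosen.name if chosen else "no executable behavior")
```

In this sketch the battery warning wins because it receives activation both from the state (its precondition holds) and from the goal set by the motivation system, which mirrors the low-battery example given for the motivation system.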

4 IMPLEMENTATION

To develop the proposed intelligent synthetic character, we used Microsoft Visual Studio 2008 and the Microsoft Windows Mobile 5.0 SDK. The character runs on the Samsung SPH-M4650 smartphone. Figure 6 shows screenshots of the character.

Figure 5: Spreading of activation (p denotes the conditions of the current state).

5 USABILITY TEST

To evaluate how well the behaviors generated by the proposed method fit various situations, we performed a usability test as follows. Eight participants in their twenties were recruited. Most participants reported that they had heard of intelligent characters, but none had actually used one. In the usability test, each participant carried out 10 scenarios.


Figure 6: Screenshots of the intelligent synthetic character on the actual smartphone (a) and the simulator (b).

For each scenario, the participants observed a sequence of five randomly generated behaviors and another sequence of five behaviors generated by the proposed method. The random generation method simply selects behaviors at random, without considering the situation.

Afterwards, the participants evaluated the fitness of each behavior for each situation. The fitness ranged from 1 ("Strongly incongruent") to 5 ("Strongly suitable"). We then summed each participant's fitness scores separately for the two behavior generation methods, random generation and the proposed method, and computed the average fitness score for each method. Table 1 shows the average fitness scores given by the participants.

To analyze the result of the usability test, we conducted the Wilcoxon signed-rank test on the fitness scores. The test yielded a p-value of 0.012, which is smaller than 0.05, so we accept the alternative hypothesis. This indicates that the proposed method is appropriate for generating the character's behaviors.
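As a check on the analysis, the Wilcoxon signed-rank test can be recomputed from the per-participant averages in Table 1. The sketch below uses only the Python standard library and a two-sided normal approximation without continuity or tie correction. That choice is an assumption, since the text does not say how the reported p-value was computed.

```python
import math

# Average fitness scores per participant, copied from Table 1.
random_scores = [2.46, 2.40, 3.00, 2.14, 2.44, 3.12, 2.88, 2.06]
proposed_scores = [4.50, 4.22, 4.44, 4.38, 4.40, 4.44, 4.54, 4.20]


def wilcoxon_signed_rank(x, y):
    """Return (W_plus, W_minus, p) for paired samples x and y.

    Uses the two-sided normal approximation with no continuity
    correction and no tie correction of the variance (an assumption).
    """
    diffs = [b - a for a, b in zip(x, y) if b != a]  # drop zero differences
    n = len(diffs)
    # Rank the absolute differences, averaging ranks over ties.
    order = sorted(range(n), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * n
    i = 0
    while i < n:
        j = i
        while j + 1 < n and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        avg_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = avg_rank
        i = j + 1
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    w_minus = sum(r for r, d in zip(ranks, diffs) if d < 0)
    mean = n * (n + 1) / 4
    sd = math.sqrt(n * (n + 1) * (2 * n + 1) / 24)
    z = (min(w_plus, w_minus) - mean) / sd
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided tail probability
    return w_plus, w_minus, p


if __name__ == "__main__":
    w_plus, w_minus, p = wilcoxon_signed_rank(random_scores, proposed_scores)
    print(f"W+ = {w_plus}, W- = {w_minus}, p = {p:.3f}")
```

Every participant rated the proposed method higher, so all eight signed ranks are positive. Under this approximation the p-value comes out at about 0.012, which agrees with the reported value. An exact test on the same data would give a smaller two-sided p-value of 2/2^8, roughly 0.008.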

6 CONCLUDING REMARKS

We presented an architecture for a mobile intelligent synthetic character that generates natural behaviors. To provide enhanced intelligent services, the character needs to interact with the user and evolve by itself. In future work, we will develop algorithms for interaction and evolution, in particular a learning system that evolves the structure of the Bayesian networks and the behavior generation network using the user's feedback.

Table 1: Average fitness scores.

Participant   Random generation   Proposed method
1             2.46                4.50
2             2.40                4.22
3             3.00                4.44
4             2.14                4.38
5             2.44                4.40
6             3.12                4.44
7             2.88                4.54
8             2.06                4.20

ACKNOWLEDGEMENTS

This work was supported by the IT R&D program of

MKE/KEIT (10033807, Development of context

awareness based on self learning for multiple

sensors cooperation).

REFERENCES

Y.-D. Kim, Y.-J. Kim, J.-H. Kim and J.-R. Lim,

“Implementation of artificial creature based on

interactive learning,” In Proc. of 2002 FIRA Robot

World Congress, pp. 369-373, 2002.

S. Schiaffino and A. Amandi, “On the design of a software

secretary,” In Proc. of the Argentine Symp. on

Artificial Intelligence, pp. 218-230, 2002.

Y.-D. Kim, J.-H. Kim and Y.-J. Kim, “Behavior

generation and learning for synthetic character,”

Evolutionary Computation, vol. 1, pp. 898-903, 2004.

I. Marsa-Maestre, M. A. Lopez-Carmona, J. R. Velasco

and A. Navarro, “Mobile agent for service

personalization in smart environments,” Journal of

Networks, vol. 3, no. 5, pp. 30-41, 2008.

B.-K. Sung, “An intelligent agent for inferring user status

on smartphone,” Journal of KIIT, vol. 6, no. 1, pp. 57-

63, 2008.

R. Picard, “Affective Computing,” Media Laboratory

Perceptual Computing TR 321, MIT Media

Laboratory, 1995.

C. Bartneck, “Integrating the OCC model of emotions in

embodied characters,” In Proc. of the Workshop on

Virtual Conversational Characters: Applications,

Methods, and Research Challenges, 2002.

A. Ortony, G. Clore, and A. Collins, The Cognitive

Structure of Emotions, Cambridge University Press,

Cambridge, UK, 1988.

D.-W. Lee, H.-S. Kim and H.-G. Lee, “Research trend on

emotional communication robot,” Journal of Korea

Information Science Society, vol. 26, no. 4, pp. 65-72,

2008.

P. Maes, “How to do the right thing,” Connection Science

Journal, vol. 1, no. 3, pp. 291-323, 1989.
