AUCTION SCOPE, SCALE AND PRICING FORMAT

Agent-based Simulation of the Performance of a Water Quality Tender

Atakelty Hailu

School of Agricultural and Resource Economics, University of Western Australia

35 Stirling Highway M089, Crawley WA 6009, Australia

John Rolfe, Jill Windle

Centre for Environmental Management, Central Queensland University, Rockhampton, QLD 4702, Australia

Romy Greiner

River Consulting, 68 Wellington St, Townsville, QLD 4812, Australia

Keywords:

Computational economics, Auction design, Agent-based modelling, Conservation auctions.

Abstract:

Conservation auctions are tender-based mechanisms for allocating contracts among landholders who are interested in undertaking conservation activities in return for monetary rewards. These auctions have grown in

popularity over the last decade. However, the services offered under these auctions can be complex and auc-

tion design and implementation features need to be carefully considered if these auctions are to perform well.

Computational experiments are key to test-bedding auction design, as the bulk of auction theory (like the rest of

economic theory) is focused on simple auctions for tractability reasons. This paper presents results from an

agent-based modelling study investigating the impact on performance of four auction features: scope of con-

servation activities in tendered projects; auction budget levels relative to bidder population size (scale effects);

endogeneity of bidder participation; and auction pricing rules (uniform versus discriminatory pricing). The

results highlight the importance of a careful consideration of scale and scope issues and that policymakers

need to consider alternatives to currently used pay-as-bid or discriminatory pricing formats. Averaging over

scope variations, the uniform auction can deliver at least 25% more benefits than the discriminatory auction.

1 INTRODUCTION

This article presents results from an agent-based modelling study undertaken as a component of a federally funded auction trial project in Queensland, Australia. The trial, known as the Lower Burdekin Dry Tropics Water Quality Improvement Tender Project, was developed with the aim of exploring issues of scope and scale in tender design (Rolfe et al., 2007). It involved a trial auction, an experimental workshop and this agent-based modelling (or computational experiments) component. The objectives of the project were to assess whether and how

increases in scale and scope of a tender may lead to

efficiency gains and investigate the extent to which

these gains might be offset as a result of higher trans-

action costs and/or lower participation rates.

Auctions exploit differences in opportunity costs.

Therefore, one would expect budgetary efficiency to

be enhanced if tenders have wider scale and scope.

However, auctions with wider coverage might in-

volve complex design as well as higher implementa-

tion costs. Auctions with wider scope might also at-

tract lower participation rates. An evaluation of these

trade-offs is essential to the proper design of conser-

vation auctions.

The agent-based modelling study presented here

focused on evaluating the impact of auction scale

and scope changes in the presence of bidder learn-

ing. The study also explored the impact on perfor-

mance of the use of an alternative auction pricing for-

mat, namely, uniform pricing, which pays winners

the same amount for the same environmental bene-

fit. These auction design features are evaluated, first,

by ignoring the possible ramifications of auction out-

comes on the tendency to participate and, second, by


Hailu A., Rolfe J., Windle J. and Greiner R. (2010).

AUCTION SCOPE, SCALE AND PRICING FORMAT - Agent-based Simulation of the Performance of a Water Quality Tender.

In Proceedings of the 2nd International Conference on Agents and Artificial Intelligence - Agents, pages 80-87

DOI: 10.5220/0002730500800087

Copyright © SciTePress

allowing bidder participation to be affected by tender

experience. In summary, the agent-based modelling study simulated auction environments and design features that could not be explored through the field trials.

The paper is organized as follows. The next

section presents the case for agent-based modelling

in the design of auctions. Agent-based computa-

tional approaches are being increasingly utilized in

the economics literature to complement analytical and

human-experimental approaches (Epstein and Axtell,

1996; Tesfatsion, 2002). The distinguishing feature

of agent-based modelling is that it is based on exper-

imentation or simulation in a computational environ-

ment using an artificial society of agents that emulate

the behaviours of the economic agents in the system

being studied (Tesfatsion, 2002). These features make

the technique a convenient tool in contexts where an-

alytical solutions are intractable and the researcher

has to resort to simulation and/or in contexts where

modelling outcomes need to be enriched through the

incorporation of agent heterogeneity, agent interac-

tions, inductive learning, or other features. Section

3 presents the auction design features explored in the

study. These include budget levels, scope of conservation activities, endogeneity of participation levels,

and two alternative pricing formats. Simulated results

are presented and discussed in Section 4. The final

section summarizes the study and draws conclusions.

2 AGENT-BASED AUCTION MODEL

Auction theory has focused on optimal auction de-

sign, but its results are usually valid only under very

restrictive assumptions on the auction environment

and the rationality of the players. Theoretical analysis

rarely incorporates computational limitations, of ei-

ther the mechanisms or the agents (Arifovic and Led-

yard, 2002). Experimental results (Erev and Roth,

1998; Camerer, 2003) demonstrate that the way peo-

ple play is better captured by learning models rather

than by the Nash equilibrium predictions of economic theory. So, in practice, what we would observe

is people learning over time, not people landing on the

Nash equilibrium at the outset of the game. The need

to use alternative methods to generate the outcomes

of the learning processes has led to an increasing use

of human experimental as well as computational ap-

proaches such as agent-based modelling.

Our agent-based model has two types of agents

representing the players in a procurement auction,

namely one buyer (the government) and multiple sell-

ers (landholders) competing to sell conservation ser-

vices. Each landholder has an opportunity cost that is

private knowledge. The procuring agency or govern-

ment agent has a conservation budget that determines

the number of environmental service contracts.

Each simulated auction round involves the follow-

ing three major steps. First, landholder agents formu-

late and submit their bids. Second, the government

agent ranks the submitted bids based on their environ-

mental benefit score to cost ratios and selects winning

bids. The number of successful bids depends on the

size of the budget and the auction price format. In the

case of discriminatory or pay-as-bid pricing, the gov-

ernment agent allocates the money starting with the

highest ranked bidder until the budget is exhausted.

In a uniform pricing auction, all winning bidders are

paid the same amount per environmental benefit. The

cutoff point (marginal winner) for this auction is de-

termined by searching for the bid price that would ex-

haust the budget if all equally and better ranked bids

are awarded contracts. Third, landholder agents apply

learning algorithms that take into account auction out-

comes to update their bids for the next round. In the

very initial rounds, these bids are truthful. In subse-

quent rounds, these bids might be truthful or involve

mark-ups over and above opportunity costs.
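The ranking and selection steps described above can be illustrated with a minimal Python sketch. It is not the authors' implementation: the `Bid` class, the function names and the handling of ties are assumptions made for illustration, and the uniform-price cutoff is only one reading of the search rule described in the text.

```python
from dataclasses import dataclass


@dataclass
class Bid:
    bidder: str
    amount: float   # dollars requested for the project
    benefit: float  # environmental benefit score (EBS) of the project

    @property
    def price(self) -> float:
        """Bid price per unit of environmental benefit."""
        return self.amount / self.benefit


def rank(bids):
    # Highest benefit-to-cost ratio first, i.e. lowest price per unit of benefit.
    return sorted(bids, key=lambda b: b.price)


def discriminatory(bids, budget):
    """Pay-as-bid: fund bids in rank order until the next bid no longer fits."""
    payments, spent = {}, 0.0
    for b in rank(bids):
        if spent + b.amount > budget:
            break
        payments[b.bidder] = b.amount
        spent += b.amount
    return payments


def uniform(bids, budget):
    """Uniform price: all winners receive the marginal winner's price per unit."""
    ranked = rank(bids)
    cum_benefit, n_winners, cutoff = 0.0, 0, 0.0
    for i, b in enumerate(ranked, start=1):
        cum_benefit += b.benefit
        # Cost of paying this bid's price to every bid ranked at or above it.
        if b.price * cum_benefit > budget:
            break
        n_winners, cutoff = i, b.price
    return {w.bidder: cutoff * w.benefit for w in ranked[:n_winners]}
```

With four hypothetical truthful bids of 100, 150, 200 and 300 dollars for 10 EBS each and a budget of 450, the pay-as-bid rule funds three projects while the uniform rule funds two at the marginal price of 15 per EBS. Any advantage of uniform pricing therefore has to come from differences in the bids that agents learn to submit, not from the payment rule applied to identical bids.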

Bids are updated through learning. Different

learning models have been developed over the last

several decades and can inform simulated agent behaviour in the model. A typology of learning models presented by Camerer (2003) shows the relationship between these learning algorithms. Our model

combines two types of learning models: a direction

learning model (Hailu and Schilizzi, 2004; Hailu and

Schilizzi, 2005) and a reinforcement learning algo-

rithm (Hailu and Thoyer, 2006; Hailu and Thoyer,

2007). These two algorithms are attractive for mod-

elling bid adjustment because they do not require that

the bidder know the forgone payoffs for alternative

strategies (or bid levels) that they did not utilize in

previous bids.

Learning direction theory asserts that ex-post ra-

tionality is the strongest influence on adaptive be-

haviour (Selten and Stoecker, 1986; Selten et al.,

2001). According to this theory, more frequently than

randomly, expected behavioural changes, if they oc-

cur, are oriented towards additional payoffs that might

have been gained by other actions. For example, a

successful bidder, who changes a bid, is likely to in-

crease subsequent bid levels. Reinforcement learning

(Roth and Erev, 1995; Erev and Roth, 1998) does not

impose a direction on behaviour but is based on the

reinforcement principle that is widely accepted in the

psychology literature. An agent’s tendency to select


a strategy or bid level is strengthened (reinforced) or

weakened depending upon whether or not the action

results in favourable (profitable) outcomes. This algorithm also allows for experimentation (or generalization) with alternative strategies. For example, a

bid level becomes more attractive if similar (or neigh-

bouring) bid levels are found to be attractive.
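A reinforcement update of this kind can be sketched as follows, in the spirit of the Roth and Erev (1995) model. The parameter names and values (`experimentation`, `forgetting`) and the treatment of bid levels at the edges of the grid are assumptions for illustration and are not taken from the paper.

```python
import random


def choice_probabilities(propensities):
    """Probability of each bid level is proportional to its propensity."""
    total = sum(propensities)
    return [q / total for q in propensities]


def choose(propensities, rng):
    """Draw a bid-level index using the propensities as weights."""
    return rng.choices(range(len(propensities)), weights=propensities, k=1)[0]


def reinforce(propensities, chosen, reward, experimentation=0.2, forgetting=0.1):
    """Strengthen the chosen level and, to a lesser extent, its neighbours."""
    n = len(propensities)
    neighbours = [j for j in (chosen - 1, chosen + 1) if 0 <= j < n]
    updated = []
    for j, q in enumerate(propensities):
        q *= 1.0 - forgetting  # gradual forgetting of past experience
        if j == chosen:
            q += reward * (1.0 - experimentation)
        elif j in neighbours:
            # Generalization: part of the reward spills over to adjacent levels.
            q += reward * experimentation / len(neighbours)
        updated.append(q)
    return updated
```

Because only the realized reward of the chosen level enters the update, the bidder never needs to know the forgone payoffs of bid levels that were not used.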

In our model, we combine the two learning theories because direction learning is a reasonable model of what a bidder would do in the early stages of participation in auctions. These early rounds can be viewed as discovery rounds where the bidders, through their experience in the auctions, discover their relative standing

in the population of participants. It would thus be

reasonable to assume that successful bidders would

probabilistically adjust their bids up or leave them un-

changed. However, this process of directional adjust-

ment would end once the bidder fails to win in an auc-

tion. At this stage, the bid discovery phase can be con-

sidered to have finished and the bidder to be in a bid

refinement phase where they choose among bid levels through the reinforcement algorithm, with the probability or propensity of choice initially concentrated around the last successful bids utilized in the discovery phase.

Further details on the attributes and implementation

of the reinforcement algorithm are provided in the pa-

per by Hailu and Thoyer on multi-unit auction pricing

formats (Hailu and Thoyer, 2007).
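The switch from a discovery phase to a refinement phase might be sketched as below. This is a simplified illustration under stated assumptions, not the model used in the study: the `TwoPhaseBidder` class, the grid of mark-ups, the step-up probability and the way initial propensities are concentrated around the last winning level are all hypothetical choices.

```python
import random


class TwoPhaseBidder:
    def __init__(self, cost, markups, step_up_prob=0.5, seed=0):
        self.cost = cost
        self.markups = markups        # ascending mark-ups; markups[0] = 0.0 is truthful
        self.level = 0                # index of the mark-up currently in use
        self.last_winning_level = 0
        self.phase = "discovery"
        self.propensities = None
        self.step_up_prob = step_up_prob
        self.rng = random.Random(seed)

    def bid(self):
        return self.cost * (1.0 + self.markups[self.level])

    def update(self, won, profit):
        if self.phase == "discovery":
            if won:
                # Direction learning: a winner raises the bid or leaves it unchanged.
                self.last_winning_level = self.level
                can_raise = self.level < len(self.markups) - 1
                if can_raise and self.rng.random() < self.step_up_prob:
                    self.level += 1
                return
            # First failure ends discovery; propensities peak at the last winning level.
            self.phase = "refinement"
            self.propensities = [
                1.0 / (1 + abs(j - self.last_winning_level))
                for j in range(len(self.markups))
            ]
        else:
            # Reinforcement: only profitable outcomes strengthen the chosen level.
            self.propensities[self.level] += max(profit, 0.0)
        self.level = self.rng.choices(
            range(len(self.markups)), weights=self.propensities, k=1
        )[0]
```

A fuller version would also apply forgetting and spill-over to neighbouring bid levels in the refinement phase; those details are left out here to keep the phase switch visible.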

3 DESIGN OF EXPERIMENTS

In all experiments reported in this paper, a popula-

tion of 100 bidding agents is used. This number is

chosen to be close to the number of actual bids

(88) submitted in the Burdekin auction trial (Greiner

et al., 2008). The opportunity costs of these bidding agents depend on the mix of water quality enhancing

activities that are included in their projects. This de-

pendence of opportunity cost on project activities is

determined based on the relationship between costs

and activities implied in the actual bids. A data envel-

opment analysis (DEA) frontier is constructed from

the actual bids to provide a mechanism for generat-

ing project activities that extrapolate those observed

in the actual trial. For a given bundle of conservation

activities, this frontier provides the best possible cost

estimate. This cost estimate is then adjusted by a ran-

dom draw from the cost efficiency estimates obtained

for the actual bids.

The nature of the bidder opportunity costs, the

budget, payment formats and the responses of bidder

agents to auction outcomes are varied so that results

are generated for experiments that combine these fea-

tures in different configurations. Further details on

these design variations are provided below.

3.1 Scope of Conservation Activities

Changes in the scope of the auction are simulated through

variations in the coverage of water quality improv-

ing activities undertaken by the bidding population.

These activities are nitrogen reduction, pesticide re-

duction and sediment reduction. In the actual bids,

the sediment reducing projects came almost entirely

from pastoralists while the nitrogen and pesticide re-

ducing activities came from sugar cane growers. The

shares of nitrogen, pesticide and sediment reduction in the environmental benefit score (EBS) value were

varied between 0 and 1 to generate a mix of activities

covering a wide range of heterogeneity in projects.

For example, to simulate auction performance for a

case where the range of allowed activities is on av-

erage a 50/50 contribution from nitrogen and pesti-

cide reducing activities, a random population of 100

shares is drawn from a Dirichlet distribution centered

at (0.5,0.5). This is then translated into nitrogen, pes-

ticide and sediment quantities using the relationship

between environmental benefit scores and reduction

activities employed for the actual auction.¹
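Drawing a population of activity shares can be sketched with the Python standard library alone. The sketch assumes that "centered at" refers to the mean of the Dirichlet distribution and introduces a hypothetical `concentration` parameter to control the spread around that mean; neither the parameter nor its value comes from the paper.

```python
import random


def dirichlet(alpha, rng):
    """One Dirichlet draw, built from independent gamma variates."""
    draws = [rng.gammavariate(a, 1.0) for a in alpha]
    total = sum(draws)
    return [d / total for d in draws]


def sample_shares(mean_shares, concentration=10.0, n=100, seed=0):
    """Draw n share vectors (nitrogen, pesticide, sediment) around mean_shares."""
    rng = random.Random(seed)
    # Activities with a zero mean share are excluded from the auction scope.
    active = [i for i, m in enumerate(mean_shares) if m > 0]
    alpha = [mean_shares[i] * concentration for i in active]
    population = []
    for _ in range(n):
        shares = [0.0] * len(mean_shares)
        for i, s in zip(active, dirichlet(alpha, rng)):
            shares[i] = s
        population.append(shares)
    return population


# Scope limited to nitrogen and pesticide reduction, 50/50 on average.
population = sample_shares((0.5, 0.5, 0.0))
```

Each simulated bidder then carries its own share vector, so projects differ across the population even though the average mix is fixed by the experiment.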

3.2 Auction Budgets

Two auction budgets are used, $600K and $300K. The

first level represents approximately the actual budget

used in the field trials, while the second budget indicates a higher level of competition or "degree of rationing" that can be achieved by increasing the scale

of participation.

3.3 Endogeneity of Bidder Participation

Auction performance is likely to be influenced by the

dynamics of participation. Unless auctions are orga-

nized differently (e.g. involving payments that main-

tain participation levels), one would expect some of

the bidders to drop out as a result of failure to win

contracts. Therefore, we carry out computational ex-

periments for a case where bidders are assumed to

participate even in the case of failure and also for a

case where bidders drop out, with some probability.

¹ The method used is an approximation to the actual procedure based on a regression of reduction activity levels and EBS scores. This was done because the actual scoring involved adjustments that credited projects with extra points for other aspects of the project besides nitrogen, pesticide or sediment reduction.

ICAART 2010 - 2nd International Conference on Agents and Artificial Intelligence


In the second case, if a bidder fails to win contracts,

the probability of dropping out increases up to a maximum of 0.5. However, a simulation that allows only for one-way traffic (i.e. exit) would not take into account

the fact that the auction can become more attractive as

bidders drop out and competition declines. Therefore,

we allow for both exit and re-entry into the auctions.

Re-entry by inactive bidders occurs with a probability

that is increasing with the average net profit partici-

pating bidders are making from their contracts.
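The exit and re-entry rules can be sketched as follows. Only the cap of 0.5 on the drop-out probability and the fact that re-entry increases with average net profit come from the text; the functional forms, the `step` and `scale` parameters and the use of a count of consecutive losses are assumptions for illustration.

```python
import math
import random


def dropout_probability(consecutive_losses, step=0.1, cap=0.5):
    """Exit probability grows with losses but never exceeds the cap of 0.5."""
    return min(cap, step * consecutive_losses)


def reentry_probability(avg_net_profit, scale=10_000.0):
    """Re-entry probability increases with active bidders' average net profit."""
    if avg_net_profit <= 0.0:
        return 0.0
    return 1.0 - math.exp(-avg_net_profit / scale)


def update_participation(active, consecutive_losses, avg_net_profit, rng):
    """Return True if the bidder takes part in the next auction round."""
    if active:
        # An active bidder stays unless the drop-out draw succeeds.
        return rng.random() >= dropout_probability(consecutive_losses)
    # An inactive bidder returns when contracting looks profitable enough.
    return rng.random() < reentry_probability(avg_net_profit)
```

Under these rules a losing bidder still participates in the next round with a probability of at least 0.5, which keeps the bidder pool relatively stable.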

3.4 Auction Price Format

The choice of payment formats has been an interest-

ing research topic in auction theory. Theory offers

guidance on choices in simple cases but has difficulty

ranking formats in more complex cases, whether the

complexity arises from the nature of the bidder popu-

lation or the nature of the auction (e.g. multi-unit auc-

tion (Hailu and Thoyer, 2007)). The Burdekin field

trial, like most market-based instrument (MBI) trials conducted to date, has used a pay-as-bid or discriminatory pricing format. In the agent-based simulations, this payment format is compared to the alternative format of uniform pricing, where winning bidders would be paid the same amount per unit of environmental benefit.

The auction design features discussed above are

varied to generate and simulate a range of auction

market experiments. Each auction experiment is

replicated 50 times to average over stochastic ele-

ments involved in the generation of opportunity cost

estimates and the probabilistic bid choices that are

employed in the learning algorithms. Results reported

below are averages over those 50 replications.

4 AUCTION PERFORMANCE

The key finding from these experiments is that out-

comes vary greatly with the details of the auction and

the activities covered in its scope. The results are

summarized below.

4.1 Scope Effects

The performance of the auction as measured by bene-

fits per dollar spent is dependent on the scope of con-

servation activities that are eligible. The benefits per

dollar range from a low of 1.91 environmental benefit

scores (EBS) per million dollars to a high of 3.62. An

increase in the share of sediment reduction reduces

benefits obtained; the benefits per million dollars are

always less than 3.0 when there is a positive average

share of sediment reduction activities. The benefits

improve with increases in the share of pesticide

reduction activities.
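The sediment threshold stated above can be checked directly against the values reported in Table 3 of the Appendix. The short script below simply transcribes that table; the variable names are illustrative.

```python
# (nitrogen share, pesticide share, sediment share, benefits per million dollars)
TABLE_3 = [
    (0.00, 1.00, 0.00, 3.62), (0.00, 0.50, 0.50, 2.20), (0.00, 0.33, 0.67, 1.93),
    (0.00, 0.67, 0.33, 2.40), (1.00, 0.00, 0.00, 2.94), (0.50, 0.00, 0.50, 2.17),
    (0.33, 0.00, 0.67, 1.92), (0.50, 0.50, 0.00, 3.26), (0.33, 0.33, 0.33, 2.47),
    (0.25, 0.25, 0.50, 2.19), (0.33, 0.67, 0.00, 3.59), (0.25, 0.50, 0.25, 2.60),
    (0.20, 0.40, 0.40, 2.28), (0.67, 0.00, 0.33, 2.35), (0.67, 0.33, 0.00, 3.30),
    (0.50, 0.25, 0.25, 2.62), (0.40, 0.20, 0.40, 2.18), (0.40, 0.40, 0.20, 2.65),
]

with_sediment = [b for _, _, s, b in TABLE_3 if s > 0]
without_sediment = [b for _, _, s, b in TABLE_3 if s == 0]

best_with_sediment = max(with_sediment)        # 2.65
worst_without_sediment = min(without_sediment)  # 2.94

# Every scope that includes sediment reduction stays below 3.0.
print(all(b < 3.0 for b in with_sediment))      # True
```

In the tabulated scopes, even the best mix that includes sediment reduction (2.65) yields less than the weakest sediment-free mix (2.94).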

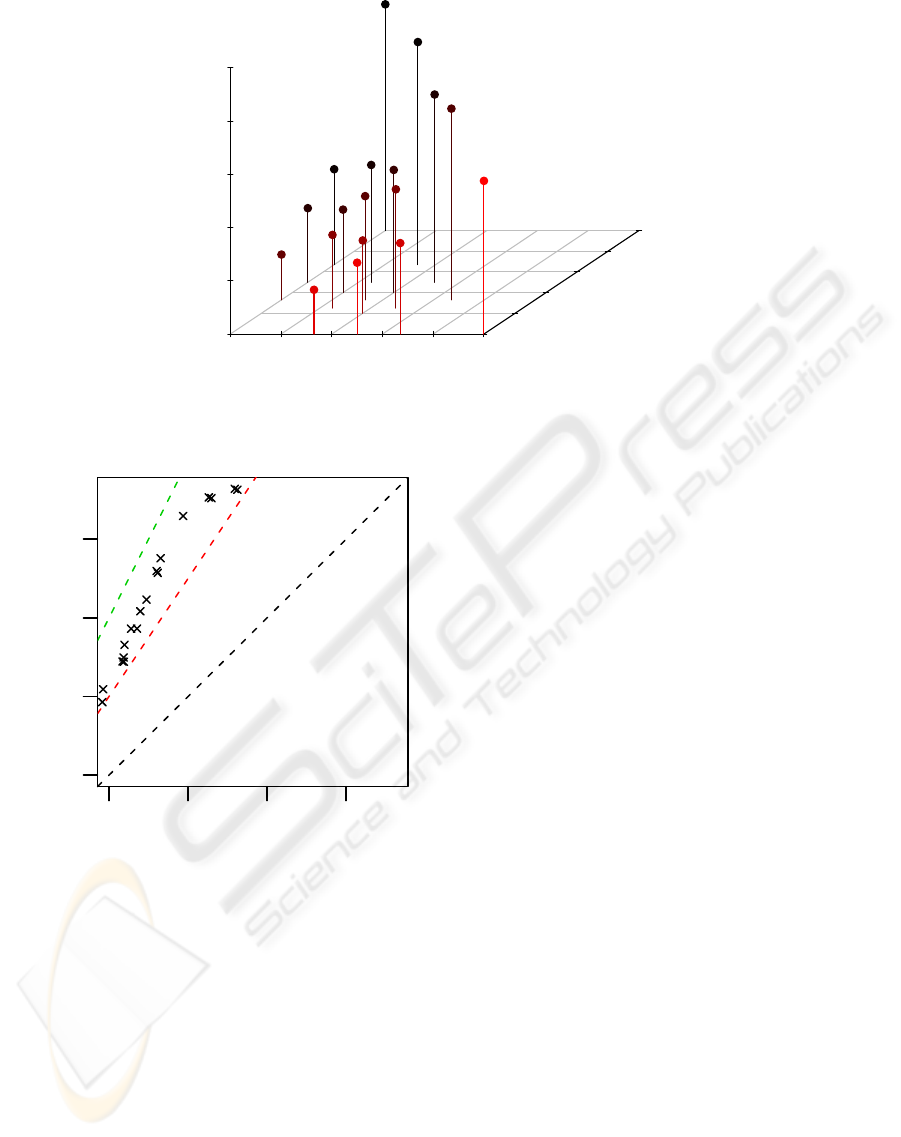

In Figure 1, the benefits are plotted against the av-

erage shares of nitrogen and pesticide contribution to

water quality in the projects. The horizontal axis in

the figure indicates the relative contribution of nitro-

gen reduction while the y-axis (diagonal line) indi-

cates the share of pesticide. The residual contribu-

tion is the share of sediment reduction. Points at the

bottom-right corner have higher shares of nitrogen as

opposed to points closer to the top-left corner of the

diagram. Points on the top-left to bottom-right diago-

nal line correspond to a constant combined contribu-

tion from nitrogen and pesticide reduction (i.e. they

represent a constant sediment contribution). There-

fore, points further to the left of the diagonal line

stretching from the top-left hand corner to the bottom-right corner include higher shares of sediment reduction. The dots in the figure show the deterioration in benefits per dollar as the scope of the auction covers more and more sediment reduction. Looking at variations other than sediments, the changes in the heights of the lines increasing towards the top-left corner (from the bottom-right) indicate the increase in benefits that occurs as the share of pesticide increases at the expense of nitrogen reduction with sediment reduction excluded. See Table 3 in the Appendix for

further details on the results.

4.2 Budget Levels and Auction Efficiency

For a discriminatory auction with 100 bidders, halv-

ing the budget from $600,000 to $300,000 has a large

effect on the performance of the auction. The benefit

to cost ratios are more than 50% higher for the auc-

tion with the $300,000 budget. See Figure 2 where

the benefits per million dollars for the auction with the

two budgets are shown. The different points represent

results for the different mixes in activities discussed

above. All the points fall between the two dotted lines

which represent ratios of 2 and 1.5 between the values

on the y and x axes.

The benefits of the higher degree of rationing are

similarly strong for all other experiments where other

features of the auction are varied (e.g. pricing for-

mats). Considering all cases together, the benefits per

dollar from the auction with a budget of $300,000 can

be between 40 and 100% above those where the bud-

get is $600,000. On average, the benefits were 67%

higher. The benefits of the higher degree of rationing

(or increased competition for a given budget) are no-

tably higher when the scope of the auction is such that


Figure 1: Conservation activity mix and auction performance. (Axes: nitrogen share, pesticide share, benefits per $Mil.)

Figure 2: Benefits per million dollars for auctions with budgets of $300,000 and $600,000. (Axes: Benefits/$M, 600K; Benefits/$M, 300K.)

it covers higher cost activities.

4.3 Participation and Efficiency

Benefits per dollar for repeated auctions where bidders stay active even after bids are unsuccessful are only marginally higher than for cases where bidders drop out (and re-enter). The weakness of this effect seems to be due to the way the participation rules are formulated in the simulation, which are biased in favour of bidding. For example, in our experiments, a bidder who just lost in an auction might participate in

the next one with a probability of at least 0.5 depend-

ing on their net revenue from the contracts in previous

rounds. In practice, bidders might be more responsive

to bid failures and the results reported here would un-

derstate the importance of investments in landholder

participation.

4.4 Uniform versus Discriminatory Pricing

Results for a uniform pricing format where every win-

ning bidder gets paid the same for the same environ-

mental benefit are generated and compared with those

obtained under simulation conducted for discrimina-

tory pricing. The key results are summarized in Ta-

ble 1 where we report the ratios of values from the

uniform price auction to those from the discrimina-

tory price auction for both budget levels and activity

threshold specifications. A "yes" value for activity threshold or endogenous participation indicates that the results in the row are for simulations where bidders drop out as a result of failure to win. Each row

reports the results for the corresponding budget and

activity threshold averaged over all the activity scope

variations covered in the experiments.

In terms of performance, the results reported in

the third column indicate that a uniform auction de-

livers benefits that are at least 25% higher than those

obtained under a discriminatory auction when per-

formance measures are averaged over all scope vari-

ations. The relative benefits of the uniform auc-

tion are highest when competition is tight (budget of


Table 1: Ratio of results from uniform auction to those from discriminatory auction.

| Budget (000s) | Endogenous participation | Ratio of benefits per dollar | Ratio of first ranked bid price | Ratio of last ranked bid price |
|---|---|---|---|---|
| 300 | no | 1.30 | 0.52 | 0.45 |
| 300 | yes | 1.36 | 0.49 | 0.37 |
| 600 | no | 1.25 | 0.29 | 0.51 |
| 600 | yes | 1.28 | 0.28 | 0.43 |

$300,000) and for the case where bidders tend to drop

out when they fail to win contracts.

The higher benefits obtained under the uniform

price auction are the result of the fact that it encour-

ages less overbidding than the discriminatory pricing

auction. This can be seen in the last two columns of

Table 1 where the figures indicate the ratios of the

bid prices from uniform to those from discriminatory

prices for the highest (column 4) and for the lowest

ranked (column 5) bidders in the auction. The first

row results show that the benefit price (bid to bene-

fit ratio) of the highest ranked and the lowest ranked

bidders under the uniform auction were only 52 and

45 percent of the corresponding figures for the dis-

criminatory auction. In other words, the bids under

the uniform auction are more truthful or involve less

overbidding. This is because the bidder does not have

the incentive (unless they are the marginal bidder) to

misrepresent their bid under the uniform auction; sub-

ject to winning, a bidder’s payoff does not depend on

their own bid but on that of the most expensive winner. The disparity between the bids of the best ranked bidders is highest for the higher budget auction.

In summary, the performance of the auction is dependent on the variations considered in the computational experiments. To investigate the individual contributions of the different auction features, the benefits

per dollar are regressed against variables represent-

ing the design features. These results are presented in

Table 2 which highlights the importance of the auc-

tion design features, with 93% of the benefit per dollar

variations explained by the design features. Increases

in budgets (for a given pool of bidders), share of sediment reduction contribution, discriminatory pricing and the presence of an activity threshold in a bidder's decision to participate all contribute towards lower benefit value per dollar spent. Higher shares for pesticide

reduction activities, on the other hand, increase effi-

ciency.

5 CONCLUSIONS

This study conducted computational experiments to

evaluate the impact on auction performance of several

design features, including: the scope of water quality

improving activities allowed in projects; the scale of

the auction as measured by the budget size relative to

participating bidder numbers; and the choice of pricing format. These design features were evaluated for

two cases of bidder responses to failures in auctions.

In the first case, bidder numbers were assumed to be

constant regardless of auction outcomes. In the sec-

ond case, bidders were assumed to drop out with a

probability if they lose in tenders and to re-enter

with a probability that increases with the net revenue

from contracting that is obtained by active bidders.

The results consistently indicate that auction per-

formance as measured by environmental benefits per

dollar is highly dependent on the mix of conservation

activities allowed in the projects. In particular, in-

creases in the average share of sediment reduction ac-

tivities are detrimental to the performance of the auc-

tion. The environmental benefits generated per dol-

lar of funding fall consistently as the average share of

sediment reduction activities in projects rises. This

outcome is a reflection of the more costly nature of

sediment based activities and highlights the need for

the identification of scope/efficiency trade-offs based

on the nature of conservation activities that prevail

in different industries. It demonstrates that narrowly

scoped auctions focused on activities with high opportunity costs can perform very poorly compared to

more broadly scoped auctions.

Improvements in the scale of participation are

highly beneficial for auction performance. The ben-

efits of a higher degree of rationing obtained through

higher participation numbers relative to budgets are

very strong. In this case study, the benefits per dol-

lar from the auction with a budget of $300,000 can

be between 40 and 100% above those where the bud-

get is $600,000. The benefits of the higher degree of

rationing or increased competition for a given budget

are notably higher when the scope of the auction is

such that it covers higher cost activities.

Results for repeated auctions where bidders stay

active even after bids are unsuccessful are only

marginally higher than for cases where bidders drop

out and re-enter. The weakness of these results seems

to be due to the way the participation rules are formu-

lated in the simulation as discussed. Bidders might be


Table 2: Results from a regression of environmental benefits per dollar on auction design features.

| Variable | Coef. estimate | t-stat |
|---|---|---|
| (Intercept) | 0.00811 | 60.987 |
| Share of Pesticide | 0.00057 | 4.309 |
| Share of Sediment | -0.00322 | -20.727 |
| Budget (dummy, with 600K equal to 1) | -0.00001 | -31.243 |
| Discriminatory pricing (dummy) | -0.00101 | -16.253 |
| Activity threshold (dummy, 0 if bidders do not drop out) | -0.00007 | -1.169 |

R-squared: 0.93

more responsive to bid failures than is assumed in the

simulations.

Finally, the use of uniform pricing rather than dis-

criminatory pricing in repeated auctions would lead to

higher benefits per conservation dollar. With uniform

pricing, bidders get paid the price of the marginal win-

ner. Their own bids influence whether they win or not

but not how much they get paid (unless they are the

most expensive winner). This auction leads to more

truthful bidding or to less overbidding. The simula-

tions indicate that with uniform pricing in repeated

auctions, one could increase the benefits per dollar by

between 15 and 55%. The benefits from uniform pricing are especially high if bidders tend to drop out

following bid failures.

ACKNOWLEDGEMENTS

The Lower Burdekin Dry Tropics Water Quality Im-

provement Tender Project was funded by the Aus-

tralian Government through the National Market

Based Instruments Program, with additional support

provided by the Burdekin Dry Tropics Natural Re-

source Management Group. The project was con-

ducted as a partnership between Central Queensland

University, River Consulting, the University of West-

ern Australia and the Burdekin Dry Tropics Natural

Resource Management Group. The views and inter-

pretations expressed in these reports are those of the

authors and should not be attributed to the organisa-

tions associated with the project.

REFERENCES

Arifovic, J. and Ledyard, J. (2002). Computer testbeds: the dynamics of Groves-Ledyard mechanisms. Technical report, Simon Fraser University and California Institute of Technology.

Camerer, C. (2003). Behavioral Game Theory. Princeton

University Press, Russell Sage Foundation, New York.

Epstein, J. and Axtell, R. (1996). Growing artificial soci-

eties: Social sciences from the bottom up. Brookings

Institution Press, Washington DC.

Erev, I. and Roth, A. (1998). Predicting how people play

games with unique, mixed strategy equilibria. Ameri-

can Economic Review, 88:848–881.

Greiner, R., Rolfe, J., Windle, J., and Gregg, D. (2008).

Tender results and feedback from ex-post participant

survey, Research Report 5. Central Queensland Uni-

versity, Rockhampton.

Hailu, A. and Schilizzi, S. (2004). Are auctions more ef-

ficient than fixed price schemes when bidders learn?

Australian Journal of Management, 29:147–168.

Hailu, A. and Schilizzi, S. (2005). Learning in a ’bas-

ket of crabs’: An agent-based computational model

of repeated conservation auctions. In Lux, T., Reitz,

S., and Samanidou, E., editors, Nonlinear Dynamics

and Heterogeneous Interacting Agents, pages 27–39.

Springer, Berlin.

Hailu, A. and Thoyer, S. (2006). Multi-unit auction for-

mat design. Journal of Economic Interaction and Co-

ordination, 1:129–146.

Hailu, A. and Thoyer, S. (2007). Designing multi-unit multiple bid auctions: An agent-based computational model of uniform, discriminatory and generalized Vickrey auctions. Economic Record, 83(S1):S57–S72.

Rolfe, J., Greiner, R., Windle, J., and Hailu, A. (2007).

Identifying scale and scope issues in establishing con-

servation tenders: Using Conservation Tenders for

Water Quality Improvements in the Burdekin, Re-

search Report 1. Central Queensland University,

Rockhampton.

Roth, A. and Erev, I. (1995). Learning in extensive form

games: experimental data and simple dynamic mod-

els in the intermediate term. Games and Economic

Behavior, 8:164–212.

Selten, R., Abbink, K., and Cox, R. (2001). Learning direction theory and winner's curse. Technical Report Discussion Paper 10, Department of Economics, University of Bonn, Germany.

Selten, R. and Stoecker, R. (1986). End behaviour in sequences of finite prisoner's dilemma supergames: A learning theory approach. Journal of Economic Behaviour and Organization, 7:47–70.

Tesfatsion, L. (2002). Agent-based computational eco-

nomics: Growing economies from the bottom up. Ar-

tificial Life, 8:55–82.


APPENDIX

Table 3: Benefits per million dollars for alternative activity scopes.

| Nitrogen share | Pesticide share | Sediment share | Benefits per Mill. $ |
|---|---|---|---|
| 0.00 | 1.00 | 0.00 | 3.62 |
| 0.00 | 0.50 | 0.50 | 2.20 |
| 0.00 | 0.33 | 0.67 | 1.93 |
| 0.00 | 0.67 | 0.33 | 2.40 |
| 1.00 | 0.00 | 0.00 | 2.94 |
| 0.50 | 0.00 | 0.50 | 2.17 |
| 0.33 | 0.00 | 0.67 | 1.92 |
| 0.50 | 0.50 | 0.00 | 3.26 |
| 0.33 | 0.33 | 0.33 | 2.47 |
| 0.25 | 0.25 | 0.50 | 2.19 |
| 0.33 | 0.67 | 0.00 | 3.59 |
| 0.25 | 0.50 | 0.25 | 2.60 |
| 0.20 | 0.40 | 0.40 | 2.28 |
| 0.67 | 0.00 | 0.33 | 2.35 |
| 0.67 | 0.33 | 0.00 | 3.30 |
| 0.50 | 0.25 | 0.25 | 2.62 |
| 0.40 | 0.20 | 0.40 | 2.18 |
| 0.40 | 0.40 | 0.20 | 2.65 |
