ON THE EVALUATION OF MINED FREQUENT SEQUENCES

An Evidence Theory-based Method

Francisco Guil

Computer Science School, University of Almer´ıa, Spain

Francisco Palacios

University Hospital of Getafe, Spain

Manuel Campos, Roque Mar´ın

Computer Science Faculty, University of Murcia, Spain

Keywords:

Temporal data mining, Theory of evidence, Entropy, Specificity.

Abstract:

Frequent sequences (or temporal associations) mining is a very important topic within the temporal data mi-

ning area. Syntactic simplicity, combined with the dual characteristics (descriptive and predictive) of the

mined temporal patterns, allow the extraction of useful knowledge from dynamic domains, which are time-

varying in nature. Some of the most representative algorithms for mining sequential patterns or frequent

associations are Apriori-like algorithms and, therefore, they cannot handle numeric attributes or items. This

peculiarity makes it necessary to add a new process in the data preparation step, the discretization process.

An important fact is that, depending on the discretization technique used, the number and type of discovered

temporal patterns change dramatically. In this paper, we propose a method based on the Shafer’s Theory of

Evidence that uses two information measures proposed by Yager for the quality evaluation of the extracted sets

of temporal patterns. From a practical point of view, the main goal is to select, for a given dataset, the best

discretization technique that leads to the discovery of useful knowledge. Nevertheless the underlying idea is

to propose a formal method for assessing the mined patterns, seen as a belief structure, in terms of certainty in

the information that represents. In this work, we also present a practical example, describing an application of

this proposal in the Intensive Care Burn Unit domain.

1 INTRODUCTION

Temporal data mining can be defined, in general

terms, as the activity of looking for interesting cor-

relations or patterns in large sets of data accumu-

lated for other purposes. It has the capability of mi-

ning activity, inferring associations of contextual and

temporal proximity, some of which may also indi-

cate a cause-effect association. This important sort

of knowledge can be overlooked when the temporal

dimension is ignored or treated as a simple numeric

attribute. There is a lot of work related to this area, in-

cluding those belonging to the Aprori-like algorithms,

like sequential pattern mining or temporal association

rules mining, amongst other. A common feature of

the Apriori-like algorithms is that they can not handle

databases with continuous attributes, requiring the ap-

plication of discretization techniques in the data pre-

processing step. Basically, the discretization tech-

niques consist of the partition of the numerical do-

main into a set of intervals, treating each interval as

a category. There are automatic discretization tech-

niques which find the partition that optimizes a given

evaluation function, and techniques that use expert

knowledge to divide the domain into several intervals.

In general, there is a large number of discretization

techniques, and their efficiency is often a bottleneck

in the knowledge discovery process. The problem

here is to select a good discretization method that, in

an efficient way, generates a discretized dataset from

which useful knowledge can be obtained. If we are

dealing with global data mining techniques, the ac-

curacy of the model can be a good proposal to se-

lect the best discretization technique. But, if we are

dealing with local methods, it is so difficult to as-

sess the quality of the discovered knowledge. The

usually huge number of mined patterns, a number

which depends both on the user-defined parameters

263

Guil F., Palacios F., Campos M. and Marín R. (2010).

ON THE EVALUATION OF MINED FREQUENT SEQUENCES - An Evidence Theory-based Method.

In Proceedings of the Third International Conference on Health Informatics, pages 263-268

DOI: 10.5220/0002736202630268

Copyright

c

SciTePress

of the algorithm and the used discretization method,

makes it virtually impossible to objectively evaluate

the outcome. It becomes necessary, therefore, a for-

mal method for evaluating mined (temporal) associ-

ations, which resulted in a value that indicates the

quality of the discovered knowledge. In this paper,

we propose to use a method to assess the quality of

mined frequent sequences based on the combined use

of two uncertainty measures, the entropy and speci-

ficity, both defined in (Yager, 1981) in the setting of

the Theory of Evidence. Once the set of associations

is prepared (normalizing the associated frequencydis-

tribution), we treat it as a body of evidence. From the

Theory of Evidence point of view, data mining is seen

as an evidence-based agent. But, from the data min-

ing point of view, the treatment of sets of frequent

associations as bodies of evidence allowing the use

of the information measures to quantify the quality of

the discovered knowledge. Specifically, we propose a

quality index based on the distance of the pair formed

by the entropy and non-specificity measures with re-

gard to the situation of total certainty.For the empiri-

cal evaluation of the proposed method, we have used

a dataset belonging to the medical domain, in partic-

ular, to the Intensive Care Burn Unit domain, special-

ized in the treatment of patients with severe burns.

The rest of this paper is organized as follows.

Section 2 introduces the notation and basic defini-

tions necessaries to define the problem. Section 3 in-

troduces the theoretical foundations of the proposed

method for evaluating sets of frequent event-based se-

quences. Section 4 describes and empirical evaluation

with a real dataset belonging to the medical domain.

Conclusions and future works as finally drawn in Sec-

tion 5.

2 THE PROBLEM DEFINITION

Frequent sequence mining is an extension of the Apri-

ori algorithm (Agrawal et al., 1993) and, therefore,

the mining process is iterative in nature and performs

a levelwise search. It is based on the downward clo-

sure property, which states that every subset of a fre-

quent sequence is also frequent (and also, it is true

that every superset of a non-frequent sequence is non-

frequent). Starting with the dataset, the first step fo-

cuses on the extraction of all the frequent events (se-

quences of length 1), and then the process continues

generating the set of patterns of length k from the set

of frequent patterns of k − 1 length. Next, we will

introduce the notation and basic definitions for speci-

fying in detail the main goal of the proposal.

Definition 1 (Event). An event (e) is defined as the

pair (te,t), or simply te

t

, where te is the type event

and t is the time instant of its occurrence. The events

are things that happen in the real world, and they usu-

ally represent the dynamic aspect of the world. In our

case, an event is related to the fact that a certain type

event occurs at a given point-based instant.

Definition 2 (Event-based Sequence).

A sequence (S) is defined as an ordered set of events,

that is, S = {e

0

, e

1

, . . . , e

k−1

}, where ∀i < j, e

i

< e

j

.

Obviously, |S| = k.

This sort of sequence is defined by a quantitativetreat-

ment of time. Nevertheless, the sequential patterns

are qualitative temporal sequences although, with a

simple transformation, they can be expressed as in the

Definition 2.

Example 1 Let S be a qualitative sequence (or a se-

quential pattern), such that S

ql

= {ab → cd → a}.

The → symbol denotes the “after than” temporal re-

lation. This sequence can be rewritten using a quan-

titative notation in the form S

qn

= {a

0

, b

0

, c

1

, d

1

, a

2

}.

In general, for i > 0 and j > i, S

ql

generates a fa-

mily of equivalent quantitative sequences defined as

S

∗

qn

= {a

0

, b

0

, c

i

, d

i

, a

j

}.

Given a dataset D, the goal of temporal sequence

mining is to determine all the frequent sequences that

show the temporal regularities in it.

Definition 3 (Temporal Data Mining Algorithm).

In our paper, a temporal data mining algorithm, de-

noted as TDM, is an Apriori-like algorithm that ex-

tracts a set of frequent temporal associations (also

called temporal patterns, sequences or even event-

based sequences) characterized by a frequency dis-

tribution. Each frequency value indicates the number

of objects that match the corresponding pattern.

The term frequent is related to the fact that every

frequency value associated with a pattern will be al-

ways greater than or equal to a user-defined parameter

called minimum support (denoted as ms). Depending

on the sort of algorithm, another user-defined param-

eter can be maxspan, which indicates the maximal

temporal distance among events that comprise a se-

quence. Examples of TDM algorithms of our interest

are mainly GSP (Srikant and Agrawal, 1996), SPADE

(Zaki, 2001), and TSET − Miner (an improved se-

quential version of the intertransactional algorithm

presented in (Guil et al., 2004)). The two first al-

gorithms are designed for mining sequential patterns.

The latter are designed for extracting a special type

of pattern, called event-based sequences. However,

HEALTHINF 2010 - International Conference on Health Informatics

264

as shown above, the sequential pattern can be rep-

resented as an event-based sequence, placing all the

three algorithms as viable alternatives in this paper.

Definition 4 (Sequences Base). Let B S be the to-

tal number of frequent sequences extracted by TDM,

that is, B S = {S

i

}, where S

i

is a frequent sequence.

B S is characterized by a frequency distribution f =

{ f

i

}, such that f

i

= freq(S

i

). We propose to normal-

ize the distribution f, obtaining f = { f

i

}, such that

f

i

=

f

i

∑

i

f

i

.

From the set of all frequent sequences, in this paper,

we are interested only in the maximal ones. A maxi-

mal sequence is a frequent sequence that has no fre-

quent super-sequences. In this way, the mining pro-

cess is done in a more efficient way, obtaining an in-

teresting sequences base that shows the temporal reg-

ularities presented in the dataset. Once the sequences

base is extracted, the next step is its evaluation and in-

terpretation. These tasks are designed with the aim to

discover useful knowledge, and usually they are per-

formed by the expert. However, this is a very hard

process and rarely can be done due to the huge amount

of frequent sequences that are often discovered. The

problem is compounded if, instead of evaluating one

sequences base, the expert must evaluate a set of se-

quences bases obtained by varying the parameters of

the algorithms for preprocessing the datasets. In this

case, the evaluation process must be carried out in

parallel for each of the mined bases, thus having a

much more complicated problem. If the expert must

compare two (or more) sequences bases to determine

which one is the better for obtaining useful knowl-

edge, what method should he/she follow? Is better

a base with more patterns, or else the base with the

longest patterns? Does not affect the structure and

frequency of the patterns to the quality of information

that they represent? On an experimental basis, we

can determine the best base obtaining, for each one,

a classification model and studying its accuracy. But,

several problems arises from this approach. On the

one hand, there is no single method to obtain a classi-

fication model from a set of sequences. What is more,

in the case of temporal classification models, its gene-

ration from temporal patterns is still a open (and very

interesting) problem. On the other hand, and assum-

ing that it is possible to obtain a suitable model from

each base, this evaluation method is time-consuming

and it must be done using a trial-error method of prob-

lem solving. So, it seems interesting to study a formal

method of evaluation that indicates us the quality of

the base without having to generate any later model,

that is, a formal method that determines the degree of

certainty of a sequences base from the structure and

frequency of each sequence in it. And, precisely, this

is the main goal of the next section.

3 MEASURING THE CERTAINTY

OF MINED SEQUENCES

Starting with a sequence base characterized by a nor-

malized frequency distribution, our goal here is to in-

troduce the information measures that will enable us

to achieve its quality in terms of certainty in the e-

vidence. In (Yager, 1981), the author introduces the

concepts of entropy (from the probabilistic frame-

work) and specificity (from the possibilistic frame-

work), in the framework of Shafer’s theory. Both in-

formation measures of uncertainty provide comple-

mentary measures of the quality of a body of evi-

dence. With this proposal, Yager extend the Theory of

Evidence of Shafer (also known as Dempster-Shafer

Theory), developed for modeling complex systems.

In the next section, a brief summary of the

Shafer’s Theory of Evidence is introduced. Next, the

section concludes with the proposed method, defining

the measures and setting their goals.

3.1 Shafer’s Theory of Evidence

The Shafer’s Theory of Evidence is based on a spe-

cial fuzzy measure called belief measure. Beliefs can

be assigned to propositions to express the uncertainty

associated to them being discerned. Given a finite

universal set U (the frame of discernment), the be-

liefs are usually computed based on a density function

m : 2

U

→ [0, 1], called basic probability assignment

(bpa):

m(

/

0) = 0, and

∑

A⊆U

m(A) = 1. (1)

m(A) represents the belief exactly committed to the

set A. If m(A) > 0, then A is called a focal element.

The set of focal elements constitute a core:

F = {A ⊆ U : m(A) > 0}. (2)

The core and its associate bpa define a body of evi-

dence, from where a belief function Bel : 2

U

→ [0, 1]

is defined:

Bel(A) =

∑

B|B⊆A

m(B). (3)

From any given measure Bel, a dual measure, Pl :

2

U

→ [0, 1] can be defined:

Pl(A) =

∑

B|B∩A6=

/

0

m(B). (4)

It can be verified (Shafer, 1976) that the functions

Bel and Pl are, respectively a possibility (or neces-

sity) measure if and only if the focal elements from

ON THE EVALUATION OF MINED FREQUENT SEQUENCES - An Evidence Theory-based Method

265

a nested or consonant set, that is, if it can be ordered

in such a way that each set is contained within the

next. It that case, the associated belief and plausibil-

ity measures posses the following properties. For all

A, B ∈ 2

U

,

Bel(A∩ B) = N(A∩ B) = min[Bel(A), Bel(B)], (5)

and

Pl(A ∪ B) = Π(A∪ B) = max[Pl(A), Pl(B)], (6)

where N and Π are the necessity and possibility mea-

sures, respectively. If all the focal elements are sin-

gletons, that is, A = ω, where ω ∈ U , then:

Bel(A) = Pr(A) = Pl(A) (7)

where Pr(A) is the probability of A. In general terms,

Bel(A) ≤ Pr(A) ≤ Pl(A). (8)

A significant aspect of Shafer’s structure is the ability

to represent in this common framework various dif-

ferent types of uncertainty, that is, probabilistic and

possibilistic uncertainty.

3.2 Specificity and Entropy

The concept of information measures has been en-

tirely renewed by investigations in the setting of mo-

dern theories of uncertainty like Fuzzy Sets, Possibil-

ity Theory, and Shafer’s theory of evidence. An im-

portant aspect is that all of these theories, although

they have a different approach than the probability

theory, they are quite related. The new types of gener-

alized information measures enable several facets of

uncertainty to be discriminated, modeled, and mea-

sured. Let B (U ) be the set of normal bodies of ev-

idence on U (m(

/

0) = 0). An information measure

will be any mapping f : B (U ) → [0, +∞) (the non-

negative real line)(Dubois and Prade, 1999). f sup-

posedly depends on both the core (F ) of the body of

evidence to which it applies, and its associated bpa

(m). f pertains to some propertyof bodies of evidence

and assesses the extent to which it is satisfied. In the

paper (Dubois and Prade, 1999), the authors study

three different measures in the general framework of

evidence theory, corresponding to different proper-

ties of bodies of evidence: measures of imprecision,

dissonance and confusion. However, in this paper,

we only take into account two particular measures of

imprecision and dissonance, the specificity and en-

tropy (respectively), because, as Yager pointed, the

combination of them is a good approach to measure

the quality of a particular body of evidence.Initially,

Yager introduced the concept of specificity (Yager,

1981), as an amount that estimates the precision of a

fuzzy set. Later, the author (Yager, 1983) and Dubois

and Prade (Dubois and Prade, 1985), extend the con-

cept to deal with bodies of evidence, defining the

measure of specificity (S

p

) of a body of evidence as:

S

p

(m) =

∑

A⊆U

m(A)

|A|

. (9)

|A| denotes the number of elements of the set A, that

is, the cardinality of A. It is easy to see that 1− S

p

(m)

is a measure of imprecision, related to the concept of

non-specificity. We will denote this imprecision value

as J

m

, that is, J

m

= 1− S

p

(m). Also, in (Yager, 1983),

the author extends the Shannon entropy to bodies of

evidence, considering the following expression where

ln is the Naperian natural logarithm (it is possible to

use log

2

instead of ln as well):

E

m

(m) = −

∑

A⊆U

m(A)ln(Pl(A)). (10)

Pl(A) is the plausibility of A, and ln(Pl(A)) can be

interpreted in terms of Shafer’s weight of conflict. As

the Shannon entropy, E

m

(m) is a measure of discor-

dance associated with de body of evidence.

In the ideal situation, where no uncertainty is pre-

sented in the body of evidence, E

m

(m) = J

m

(m) = 0.

This is the key point of the proposed method for mea-

suring the certainty associated with a belief structure.

E

m

provides a measure of the dissonance of the evi-

dence, whereas J

m

provides a measure of the disper-

sion of the belief. So, the lower the E

m

, the more

consistent the evidence and, the lower J

m

(the higher

S

p

), the less diverse. For certainty, we want low E

m

and S

m

measures. So, by using a combination of both

measures, we can have a good indication of the qua-

lity of evidence. For measuring this indicator (Q (m),

denoting quality), we propose the inverse of the Eu-

clidean distance between the pair (E

m

, J

m

) and the pair

(0,0), that is:

Q (m) =

1

p

(E

m

)

2

+ (J

m

)

2

. (11)

3.3 Evaluation of a Sequence Base

Let B S be the sequences base, formed by a set of

frequent sequences, B S = {S

i

}, 1 ≤ i ≤ |B S |, mined

from a dataset. And let f be the normalized frequency

distribution associated with B S . A frequent sequence

is an ordered set of events. Let E be the set of all

the frequent events present in the base. Making a syn-

tactic correspondence with the basic elements of the

Theory of Evidence, we obtain that the pair (B S , m)

is a body of evidence, where B S is the core (formed

by the set of focal elements S

i

⊆ E ), and f is the

HEALTHINF 2010 - International Conference on Health Informatics

266

basic probability assignment. In this case, E is the

frame of discernment. Once this correspondence is

established, the evaluation of the set of mined pat-

terns would be carried out by the method described in

the previous section, that is, using the function Q(m)

defined in Equation 11. In general terms, this mea-

sure adds additional information to the expert on the

quality of the sequences base. But, in particular, in

this paper, the Q(m) function (specifically Q( f )) will

be used to compare objectively three set of frequent

patterns mined from a dataset belonging to a medical

domain, which has been discretized using three differ-

ent discretization methods. Let TDM be an Apriori-

like temporal data mining algorithm, and let D be a

dataset. A special feature of TDM is that it can not

handle numerical attributes. Since D contains con-

tinuous attributes, very common in real datasets, it

will be require a discretization method to obtain a

dataset with only nominal attributes. Let d

1

, d

2

, d

3

be three different discretization methods that gener-

ate three different datasets, D

1

= d

1

(D), D

2

= d

2

(D),

and D

3

= d

3

(D). The execution of the algorithm on

each of the datasets (TDM(D

i

)) will results in three

different sequences bases, denoted as B S

D

i

, each one

characterized by a normalized frequency distribution

f

i

. In order to compare the three discretization meth-

ods and determine which one provides information

with less uncertainty, we propose the use of the Q( f

i

)

function, such that the best method is the one that gen-

erates a base with the highest value of Q. For a more

complete assessment, in the empirical evaluation we

will use also three different values for the minimum

support parameter of the TDM algorithm.

4 EMPIRICAL EVALUATION

From a practical point of view, we have carried out an

empirical evaluation using a preprocessed dataset that

represents the evolution of 363 patients in an Inten-

sive Care Burn Unit (ICBU) between 1992 and 2002.

The original database stores, for each patient, a lot of

clinical parameters such as age, presence of inhalation

injury, the extent and depth of the burn, the necessity

of early mechanical ventilation, and the patient sta-

tus int its last day of stay in the Unit, among others.

However, in the construction of the dataset, we only

take into account the temporal parameters, which in-

dicate the evolution of the patients during the resusci-

tation phase (first 2 days) and during the stabilization

phase (3 following days). Incomings, diuresis, fluid

balance, acid base balance (pH, bicarbonate, base ex-

cess) and other variables help to define objectives and

to assess the evolution and treatment response. For

each of these temporal variables, we used three dif-

ferent discretization methods (called d

1

, d

2

, and d

3

,

respectively). The first one (d

1

) is based on clinical

criteria and uses the knowledge previously defined in

the domain. A second discretization method (d

2

) can

be based on the usual interpretation of mean value

and standard deviation. In statistics, the mean is a

central value around which the rest of the values are

spread. When the distance of an element to the mean

is greater than two standard deviations it should be

carefully looked at because it may be a potential out-

lier. We have made a similar distinction defining nor-

mal values those in a interval of one standard devi-

ation around the mean, slightly high or slightly low

values those within two standard deviations around

the mean, and high or low values for those whose dis-

tance to the mean is greater than two standard devia-

tions. This discretization method is the one that ge-

nerates the highest number of patterns, since it does

not consider the domain values (that can be arbitrary

in the dataset) but the values found in the data and

therefore most of them should be found in the “nor-

mal” interval if they are distributed around the mean.

In the last method (d

3

), we used an entropy-based in-

formation gain with respect the output variable. The

information gain is equal to the total entropy for an

attribute if for each of the attribute values a unique

classification can be made for the output variable.

For each discretized dataset (D

i

), we have ob-

tained a set of maximal sequences using a version of a

temporal data mining algorithm designed for the ex-

traction of frequent sequences from datasets with a

time-stamped dimensional attribute. In the analysis

of the data, different values for the parameters of the

algorithm were set, in particular, maxspan to 5 days

(resuscitation and stabilization), and minimum sup-

port to 20%, 30%, and 50%. In total, 6 sets of fre-

quent sequences were extracted that, after the normal-

ization process, resulted in the generation of 6 nor-

malized sequences bases (denoted as B S

D

i

ms

, where D

i

is the dataset obtained by the discretization technique

d

i

, and ms is the minimum support). In terms of the

Theory of Evidence, each normalized sequences base

corresponds to a body of evidence or belief structure,

and is the input of the evaluation method that we pro-

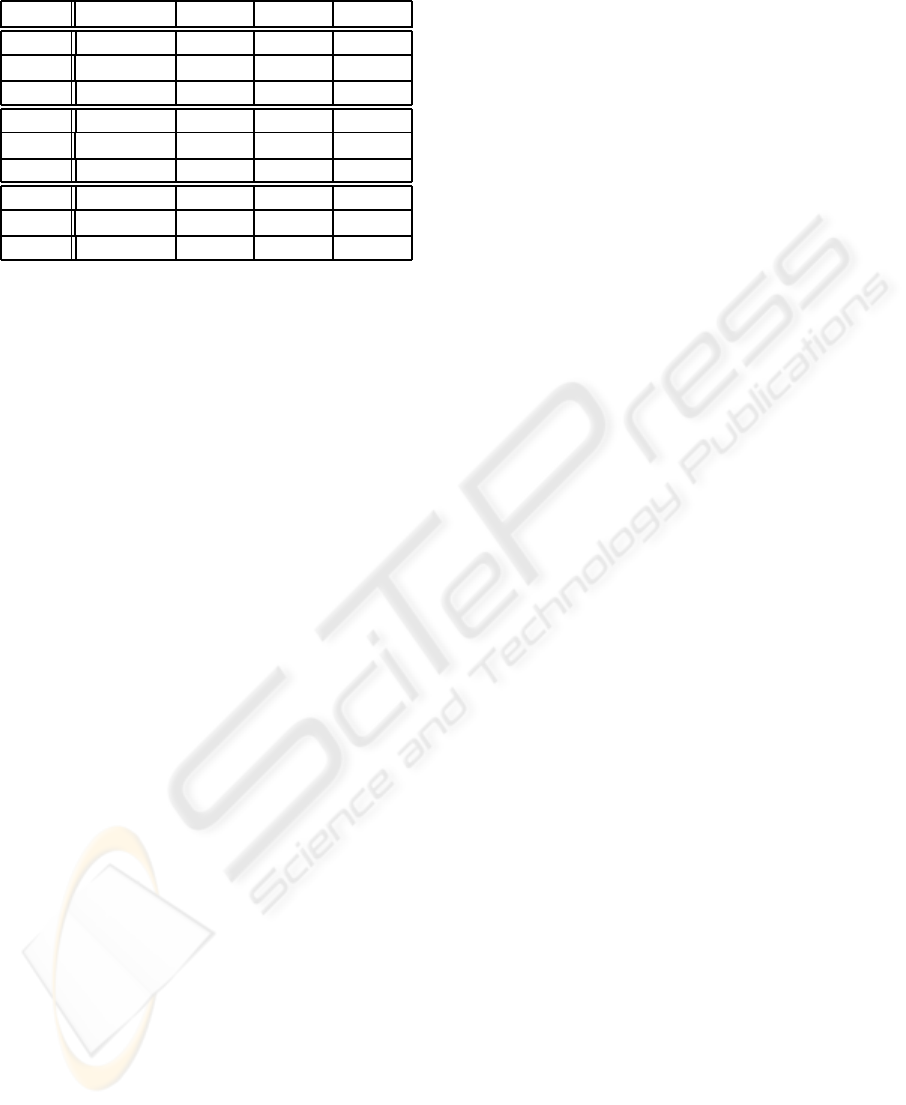

pose in this paper. Table 1 shows the results of the

proposed evaluation method. For each discretization

technique, and for each minimum support value, we

indicate in the table the entropy (E

m

) and the non-

specificity based measure (J

m

), where J

m

= 1 − S

m

,

and S

m

the specificity like measure, both proposed by

Yager as a quality indicator of a belief structure.

The Q parameter is the quality measure defined

in the Equation 11, that is, the inverse of the classi-

ON THE EVALUATION OF MINED FREQUENT SEQUENCES - An Evidence Theory-based Method

267

Table 1: E

m

, J

m

, and Q measures.

B S

D

i

ms

Measures 20% 30% 50%

E

m

0,4071 0,6294 1,0070

B S

D

1

ms

J

m

0,7897 0,7311 0,6139

Q 1.1255 1.0366 0.8479

E

m

0,0314 0,1252 0,4822

B S

D

2

ms

J

m

0,8699 0,8314 0,7318

Q 1.1489 1.1895 1.1410

E

m

0,1977 0,3774 0,7040

B S

D

3

ms

J

m

0,8382 0,7851 0,6681

Q 1.1612 1.1480 1.0303

cal Euclidean distance between the pair (E

m

, J

m

) and

the vector (0,0), which corresponds to the ideal situa-

tion where no uncertainty is presented in the body of

evidence. In our case, the best solution, in terms of

quality in the information presented in the patterns

(maximal Q), is the sequences base BD

D

2

30%

, that is,

the set of frequent maximal sequences obtained from

the dataset discretized by the d

2

method (the statisti-

cal version) and with the minimum support parameter

set to 30%. Depending of the intervals extracted by

the discretization methods, the relationship between

minimum support and number of patterns varies sig-

nificantly. This variation correlates with a variation in

the measures of entropy and non-specificity so that a

decrease in the minimum support value involves a less

specific and less entropic set of patterns (see Table 1).

On the one hand, we are interested in the extraction of

sets of patterns with low entropy or, what is the same,

with less uncertainty. So, if we take this measure in-

dependently, we have that the best sequence base is

B S

D

2

20%

, which corresponds with the dataset with a

greater number of patterns. But, on the other hand,

we are also interested in the extraction of more spe-

cific sets of patterns and, in this case, it corresponds

to the B S

D

1

50%

base, which has a smaller number of

patterns. Therefore, it is necessary to make a compro-

mise, taking into account the two measures together.

So, taking into account the Q measure, the less en-

tropic and more specific set of patterns is, precisely,

B S

D

2

30%

.

5 CONCLUSIONS AND FUTURE

WORK

In this paper we have presented a formal method for

evaluating the data mining results, that is, the set of

frequent patterns that shows the regularities presented

in a dataset. The method is based on the use of two

information measures proposed by Yager in the con-

text of the Shafer’s Theroy of Evidence. The pro-

posal involves the combined use of both measures, the

entropy-like measure (E

m

), and the (non)specificity-

like measure (J

m

), to quantify the quality level (cer-

tainty) of bodies of evidence. Our approach is to treat

the whole mined patterns as a body of evidence. So,

it is possible to use information measures to provide

objective values that assist the experts in the evalua-

tion and the interpretation of the mined patterns and,

therefore, in the discovery of useful information from

data.

In the best of our knowledge, the treatment of the

mined patterns (characterized by its structure and fre-

quency distribution) as a body of evidence is a novel

approach that enable us to assess the information ex-

tracted by (temporal) data mining algorithms in a for-

mal way. In this paper, we only used two information

measures, but it is possible, and we propose this topic

as a future work, to use additional information mea-

sures proposed in the literature to characterize mined

bodies of evidence.

REFERENCES

Agrawal, R., Imielinski, T., and Swami, A. (1993). Min-

ing association rules between sets of items in large

databases. In Proc. of the ACM SIGMOD Int. Conf.

on Management of Data, pages 207–216.

Dubois, D. and Prade, H. (1985). A note on measures of

specificity for fuzzy sets. International Journal of

General Systems, 10:279–283.

Dubois, D. and Prade, H. (1999). Properties of measures

of information in evidence and possibility theories.

Fuzzy Sets and System, 100:35–49.

Guil, F., Bosch, A., and Mar´ın, R. (2004). Tset: An algo-

rithm for mining frequent temporal patterns. In Proc.

of the 1st Int. Workshop on Knowledge Discovery

in Data Streams, in conjunction with ECML/PKDD

2004, pages 65–74.

Shafer, G. (1976). A Mathematical Theory of Evidence.

Princenton University Press, Princenton, NJ.

Srikant, R. and Agrawal, R. (1996). Mining sequential

patterns: Generalizations and performance improve-

ments. In Proc. of the 5th Int. Conf. on Extending

Database Technology, pages 3–17.

Yager, R. (1981). Measurement of properties of fuzzy sets

and possibility distributions. In Proc. of the Third Int.

Seminar on Fuzzy Sets, pages 211–222.

Yager, R. (1983). Entropy and specificity in a mathematical

theory of evidence. International Journal of General

Systems, 9:249–260.

Zaki, M. (2001). Spade: An efficient algorithm for mining

frequent sequences. Machine Learning, 42(1/2):31–

60.

HEALTHINF 2010 - International Conference on Health Informatics

268