VIEW-BASED APPEARANCE MODEL ONLINE LEARNING FOR 3D DEFORMABLE FACE TRACKING

Stéphanie Lefèvre and Jean-Marc Odobez

Idiap Research Institute, Martigny, Switzerland

Ecole Polytechnique Fédérale de Lausanne, Switzerland

Keywords:

3D Head tracking, Appearance models, Structural features, View-based learning, Facial expression.

Abstract:

In this paper we address the issue of joint estimation of head pose and facial actions. We propose a method that

can robustly track both subtle and extreme movements by combining two types of features: structural features

observed at characteristic points of the face, and intensity features sampled from the facial texture. To handle

the processing of extreme poses, we propose two innovations. The first one is to extend the deformable 3D face

model Candide so that we can collect appearance information from the head sides as well as from the face. The

second and main one is to exploit a set of view-based templates learned online to model the head appearance.

This allows us to handle the appearance variation problem, inherent to intensity features and accentuated by

the coarse geometry of our 3D head model. Experiments on the Boston University Face Tracking dataset show

that the method can track common head movements with an accuracy of 3.2°, outperforming some state-of-the-art
methods. More importantly, the ability of the system to robustly track natural/faked facial actions and

challenging head movements is demonstrated on several long video sequences.

1 INTRODUCTION

The many applications of face tracking, in domains

ranging from Human Computer Interaction to surveillance,
have urged researchers to investigate the problem
over the last twenty years. Still some issues remain;

the difficulties come from the variability of appear-

ance created by 3D rigid movements (especially self

occlusions due to the head pose), non-rigid move-

ments (due to facial expressions), variability of 3D

head shape and appearance, and illumination varia-

tions.

An important contribution to the problem of near-

frontal face tracking was made by Cootes et al. The

idea was to use Principal Component Analysis to

model the 2D variations of the face shape (Active

Shape Model (ASM) (Cootes et al., 1995)), or of both

shape and appearance (Active Appearance Model

(AAM) (Cootes et al., 1998)). Later, some works have

extended the use of AAMs to more challenging poses

(Gross et al., 2006), but the lack of robustness when

confronted with large head pose variations is still a typical
limitation of these models. Besides, extracting the

3D pose from the 2D fit is possible but not straight-

forward; it requires further computation (Xiao et al.,

2004).

Face tracking can also be formulated as an image

registration problem, and several approaches were de-

veloped to robustly track faces under large pose vari-

ations. They usually rely on a rigid 3D face/head

model, which can be a cylinder (Cascia et al., 2000;

Xiao et al., 2003), an ellipsoid (Morency et al., 2008),

or a mesh (Vacchetti et al., 2004). The model is fit to

the image by matching either local features (Vacchetti

et al., 2004) or a facial texture (Cascia et al., 2000;

Xiao et al., 2003; Morency et al., 2008). However,

they are limited to rigid movements. In the best case

the tracking is robust to facial actions; in the worst

case they will cause the system to lose track; in any

case they are not estimated.

To track both the head pose and the facial ac-

tions, an appropriate solution is to use a deformable

3D face/head model. Approaches using optical flow

(DeCarlo and Metaxas, 2000), local structural features
(Chen and Davoine, 2006; Lefèvre and Odobez,

2009), or facial texture (Dornaika and Davoine, 2006)

to fit the 3D model to a face have been tried in the past.

However, the tracking success is highly dependent on

the recording conditions. Optical flow methods can

be very accurate but are not robust to fast motions.

Structural features computed at a small set of charac-

teristic points provide useful information about both

the pose and the facial actions. However, due to the

set sparsity and the locality of the information, the

Lefèvre S. and Odobez J. (2010). VIEW-BASED APPEARANCE MODEL ONLINE LEARNING FOR 3D DEFORMABLE FACE TRACKING. In Proceedings of the International Conference on Computer Vision Theory and Applications, pages 223-230. DOI: 10.5220/0002836002230230. Copyright © SciTePress


Figure 1: (a) Set of locations where observations are collected (red squares for structural features and green dots for intensity

features). (b) Samples of the training set for the structural feature located on the right corner of the right eye, before removing

the patch mean.

model will not be constraining enough if too many

features are hidden (e.g. when reaching a near profile

view). Facial texture provides rich and precise infor-

mation for tracking but is very sensitive to appearance

changes. The latter is a serious problem; unless the

lighting is coming uniformly from every direction, the

appearance of the face will vary a lot as the head pose

changes.

The approach in (Lefèvre and Odobez, 2009)

showed the advantages of combining both types of

cues: it relied on both structural features similar to

(Chen and Davoine, 2006) and on intensity values

computed at a sparse set of face points. The appear-

ance model was continuously adapted to deal with ap-

pearance changes. However this approach suffered

from two main problems: first, because the majority

of observations are located in the face region, there

is very little information when the pose reaches profile
view. This issue is common to many models. To

our knowledge, models for head tracking which cover

the head sides are either coarse rigid models (cylinder,

ellipse) or person-specific rigid models (3D model ac-

quired with a scanner). Secondly, the system is mem-

oryless: the appearance model of the intensity fea-

tures always needs to adapt in the same way when

coming back to the same pose.

In this paper, our contribution is to propose a mod-

eling that addresses these two issues.

First, we propose to extend the Candide face

model to cover head sides. Although collecting features
from the head sides would make it possible to track
challenging poses that face models cannot handle, the vast
majority of face tracking approaches do not consider such

information. Indeed, such an extension brings in ad-

ditional difficulties. The appearance changes issue

is even more present than before, since, most of the

time, between near frontal view and profile view the

intensity of points located on the head sides varies

drastically. These variations are accentuated by the

fact that the mesh extension is very coarse, in the

sense that the approximation of the depth of the points

on the head surface is usually inaccurate. In fact, it is

quite difficult to build a precise person-specific head

model, and this is a reason why many approaches do

not consider such head side extensions (AAM, Can-

dide, etc.).

Secondly, to add memory in the appearance mod-

eling, we propose to represent the head using a set of

view-based templates learned online. This is in contrast
with the majority of approaches that propose to

handle the appearance variation problem using either

template adaptation of all sorts (e.g. doing recursive

adaptation (Lefèvre and Odobez, 2009; Dornaika and

Davoine, 2006), combining current observations with

the initial template (Matthews et al., 2004), or using

short and long term adaptation models (Jepson et al.,

2003)) or incremental model learning techniques (e.g.

incremental PCA (Li, 2004) or an EM algorithm (Tu

et al., 2009)). None of these methods consider the

fact that in most applications the appearance of the

face mainly depends on the pose, since the location

of the camera and of the illumination sources are usually
fixed. Our approach, which relies on templates that are
learned online and represent the appearance under
different poses, addresses this issue. Furthermore,
it is well adapted to handle the coarse depth

modeling of the additional head side mesh elements.

The main difficulty of our approach lies in the build-

ing of the template set, as the risk is to learn an incor-

rect combination pose/template when the head motion

is heading towards a region of the pose space that was

not visited before. This issue is dealt with to a large

extent by exploiting a fixed (i.e. not subject to adap-

tation) likelihood term relying on structural features.

The fact that this likelihood model is learned off-line

and is built on illumination-invariant cues reduces the

risk of drift.

The performance of our approach is evaluated
on the Boston University Face Tracking (BUFT)

database (on both Uniform-light and Varying-light

datasets) and on several long video sequences of peo-

ple involved in natural conversation. They show that

the combination of head-side and view-based model-

ing allows us to outperform some recent state-of-the-

VISAPP 2010 - International Conference on Computer Vision Theory and Applications


art techniques (Cascia et al., 2000; Morency et al.,

2008) and to robustly track challenging head move-

ments and facial actions.

2 CANDIDE, A DEFORMABLE 3D MODEL

In this work we use an extended version of the Can-

dide (Ahlberg, 2001) face model. The original model

consists of a deformable 3D mesh defined by the 3D

coordinates of 113 vertices (facial feature points) and

by the edges linking them. By displacing the vertices of a standard face mesh $\bar{M}$ according to some shape and action units, one can reshape the wireframe to the most common face shapes and expressions. The transformation of a point $\bar{M}_i$ of the standard face mesh into a new point $M_i$ can be expressed as follows: $M_i(\alpha, \sigma) = \bar{M}_i + S_i \sigma + A_i \alpha$, where $S_i$ and $A_i$ are respectively the $3 \times 14$ shape unit matrix and the $3 \times 6$ action unit matrix that contain the effect of each shape (respectively action) unit on point $M_i$. The $14 \times 1$ shape parameters vector $\sigma$ and the $6 \times 1$ action parameters vector $\alpha$ contain values between -1 and 1 that express the magnitude of the displacement. In our

case, $\sigma$ is learned once and for all for a given person before tracking, using a reference image (a frontal view of the person): several points are annotated manually or automatically on the reference image, and we find the shape parameters $\sigma$ that best fit the Candide model to the data points.
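The deformation rule above can be sketched in a few lines of NumPy. This is only an illustration under stated assumptions: the function name `deform_vertex` is hypothetical, and the matrices below are toy values, not the actual Candide shape and action units.

```python
import numpy as np

def deform_vertex(m_bar, S_i, A_i, sigma, alpha):
    """Return the deformed vertex: m_bar + S_i @ sigma + A_i @ alpha."""
    m_bar = np.asarray(m_bar, dtype=float)   # (3,) vertex of the standard mesh
    S_i = np.asarray(S_i, dtype=float)       # (3, 14) shape unit matrix
    A_i = np.asarray(A_i, dtype=float)       # (3, 6) action unit matrix
    sigma = np.asarray(sigma, dtype=float)   # (14,) shape parameters in [-1, 1]
    alpha = np.asarray(alpha, dtype=float)   # (6,) action parameters in [-1, 1]
    return m_bar + S_i @ sigma + A_i @ alpha

# Toy data (NOT taken from Candide): one shape unit and one action unit are active.
m_bar = np.array([0.1, -0.2, 0.3])
S_i = np.zeros((3, 14))
S_i[0, 0] = 0.05                 # shape unit 0 moves the vertex along x
A_i = np.zeros((3, 6))
A_i[1, 2] = -0.10                # action unit 2 moves the vertex along y
sigma = np.zeros(14)
sigma[0] = 1.0
alpha = np.zeros(6)
alpha[2] = 0.5

new_point = deform_vertex(m_bar, S_i, A_i, sigma, alpha)
```

Since the shape parameters are fixed per person, only the six action parameters change this result during tracking.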

Extending the Model. A limitation of the Candide

model is that it only covers the face region. In our

experiments we have to deal with some challenging

head poses under which the face is half-hidden (e.g.

self-occlusion at profile view). In that case it is use-

ful to collect some information on the sides of the

head. Indeed the texture and contrast in this region,

and especially around the ears, is a strong indicator

of the head movement. For this reason we extended

the Candide model so that the mesh reaches the ears.

Twenty vertices forming a unique planar region (for

each head side) in the continuity of the original mesh

were added to the standard mesh as well as a "Head
width" shape unit vector. None of these new points

are displaced by the action units. Note that the part of

the mesh that covers the sides is very coarse; however

it will bring useful information during the tracking.

An illustration of the extended Candide model can be

found in Fig. 1.

State Space. In the Candide model, the points of

the mesh are expressed in the (local) object coordinate

system. They need to be transformed into the camera

coordinate system and then to be projected on the image. The first step involves a scale factor $s$ (the Candide model is defined up to a scale factor), a rotation matrix (represented by three Euler angles $\theta_x$, $\theta_y$ and $\theta_z$) and a translation vector $T = (t_x \; t_y \; t_z)^T$. The camera is not calibrated and we adopt the weak perspective projection model (i.e. we neglect the perspective effect) to map a 3D point $M_i$ to an image point $m_i$. Thus the vector of the head pose parameters to estimate can be expressed as $\Theta = [\theta_x \; \theta_y \; \theta_z \; \lambda t_x \; \lambda t_y \; s]$ where $\lambda$ is a constant. The whole state (head pose and facial actions parameters) at time $t$ is defined as follows:

$$X_t = [\Theta_t \; \alpha_t] . \qquad (1)$$
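As an illustration of the mapping from a mesh point to an image point, here is a minimal sketch of a weak perspective projection. The text does not specify the Euler angle convention or the exact form of the projection, so the composition order `Rz @ Ry @ Rx`, the form `m = s * (R M)_xy + (t_x, t_y)`, and the function names are all assumptions made for this example.

```python
import numpy as np

def rotation_matrix(theta_x, theta_y, theta_z):
    """Rotation from three Euler angles in radians (assumed order: Rz @ Ry @ Rx)."""
    cx, sx = np.cos(theta_x), np.sin(theta_x)
    cy, sy = np.cos(theta_y), np.sin(theta_y)
    cz, sz = np.cos(theta_z), np.sin(theta_z)
    Rx = np.array([[1.0, 0.0, 0.0], [0.0, cx, -sx], [0.0, sx, cx]])
    Ry = np.array([[cy, 0.0, sy], [0.0, 1.0, 0.0], [-sy, 0.0, cy]])
    Rz = np.array([[cz, -sz, 0.0], [sz, cz, 0.0], [0.0, 0.0, 1.0]])
    return Rz @ Ry @ Rx

def project_weak_perspective(points, angles, s, t_xy):
    """Rotate (N, 3) mesh points, drop the depth, then scale and translate."""
    R = rotation_matrix(*angles)
    rotated = np.asarray(points, dtype=float) @ R.T
    return s * rotated[:, :2] + np.asarray(t_xy, dtype=float)

# Frontal pose: the depth coordinate is simply ignored.
frontal = project_weak_perspective([[1.0, 2.0, 3.0]], (0.0, 0.0, 0.0), 2.0, (10.0, 20.0))
# In-plane rotation of 90 degrees around the camera axis.
rolled = project_weak_perspective([[1.0, 0.0, 0.0]], (0.0, 0.0, np.pi / 2), 1.0, (0.0, 0.0))
```

Because depth is dropped after the rotation, the translation along the camera axis is absorbed by the scale factor, which is consistent with a pose vector that contains only two translation components and a scale.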

3 TRACKING FACES

We set the problem as a Bayesian optimization problem. The objective is to maximize the posterior probability $p(X_t | Z_{1:t})$ of the state $X_t$ at time $t$ given observations $Z_{1:t}$ from time 1 to time $t$. Under standard assumptions, and assuming that the distribution of the posterior $p(X_{t-1} | Z_{1:t-1})$ is a Dirac $\delta(X_{t-1} - \hat{X}_{t-1})$ (we only exploit a point estimate of the state at the previous time step), $\hat{X}_{t-1}$ being the previous estimate of the state, this probability can be approximated by:

$$p(X_t | Z_{1:t}) \propto p(Z_t | X_t) \cdot p(X_t | \hat{X}_{t-1}) . \qquad (2)$$

This expression is characterized by two terms: the likelihood $p(Z_t | X_t)$, which expresses how good the observations are given a state value, and $p(X_t | \hat{X}_{t-1})$, which represents the dynamics, i.e. the state evolution. Our observations are composed of structural features and intensity features, i.e. $Z_t = (Z^{str}_t, Z^{int}_t)$. Assuming that they are conditionally independent given the state, Eq. (2) can be rewritten as:

$$p(X_t | Z_{1:t}) \propto p(Z^{str}_t | X_t) \cdot p(Z^{int}_t | X_t) \cdot p(X_t | \hat{X}_{t-1}) . \qquad (3)$$

Each component is detailed below.

3.1 Likelihood Model of Structural Features

Our goal is to learn a fixed appearance model valid

under variations of head pose and illumination for

patches located around characteristic points of the

face. The advantage of these features is that, when

they are visible, they give useful information about

both the head pose and the facial actions. By learn-

ing a robust likelihood model, we aim at constraining

the tracking strongly enough under any illumination

condition for near-frontal to mid-profile poses.


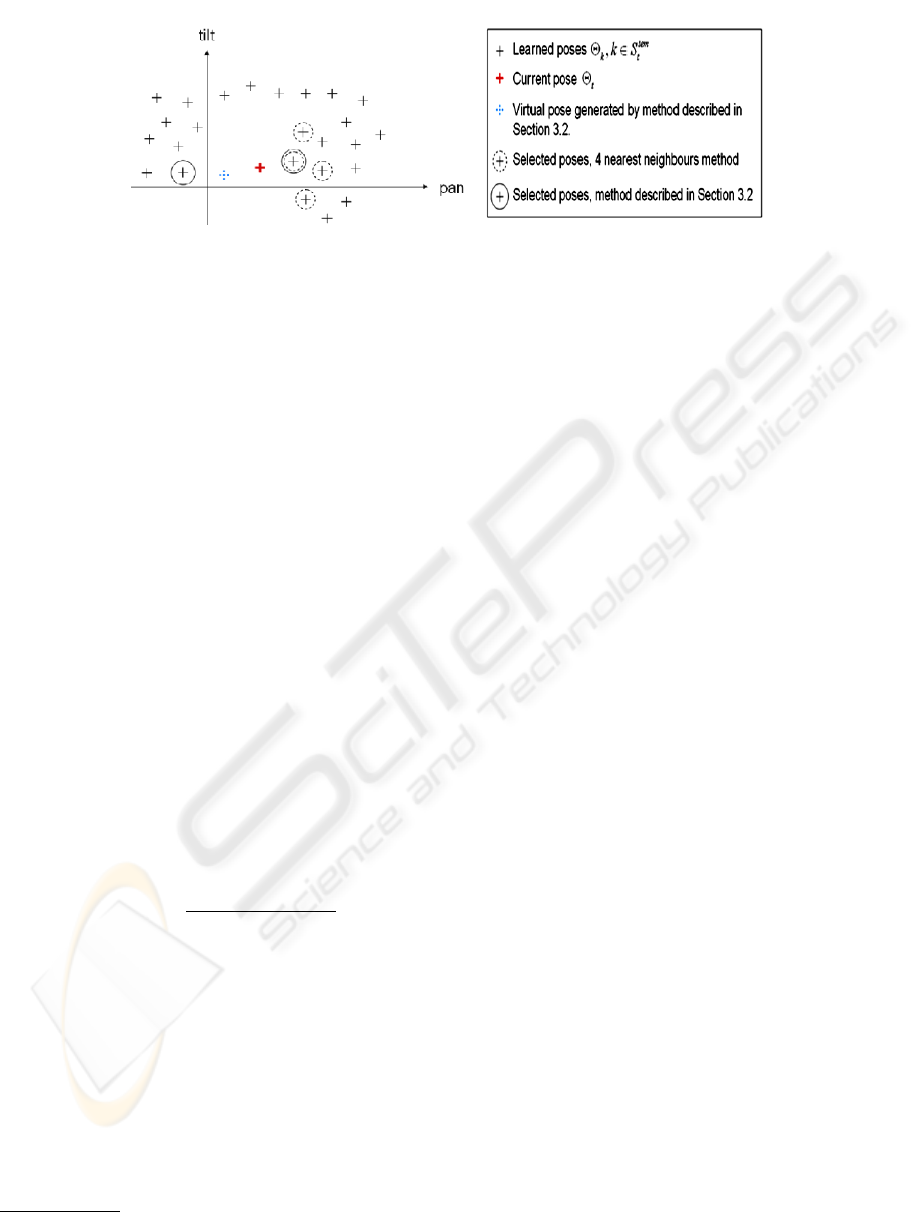

Figure 2: Building $S^{sel}_t(X_t)$ based on the poses: example case (for simplicity we represent only two dimensions). Selection with the k nearest neighbors approach, k = 4, vs. selection with the approach described in Section 3.2.

Observations. We call $S^{str}$ the index set of 22 structural features. Given the state $X_t$, observations $Z^{str}$ will be $9 \times 9$ zero-mean patches collected around the projected points $\{m_i(X_t)\}_{i \in S^{str}}$, i.e. $Z^{str}_t(X_t) = \{Z^{str}_{i,t}(X_t)\}_{i \in S^{str}} = \{\mathrm{patch}(m_i(X_t))\}_{i \in S^{str}}$. The locations of the observations are illustrated in Fig. 1.

Likelihood Modeling. Assuming conditional independence between the features given the state$^1$:

$$p(Z^{str}_t | X_t) = \prod_{i \in S^{str}} p(Z^{str}_{i,t} | X_t) . \qquad (4)$$

This model is learned off-line using a reference image

of the face. For each feature we extract a patch in the

reference image, subtract the mean value to make it

invariant to illumination changes, and simulate what

it would look like under different head poses. This is

done by applying a set of affine transformations to it,

assuming the patch is planar. More precisely, for each

of the three rotation parameters we sample uniformly

seven values from $-45^\circ$ to $45^\circ$. This is illustrated in Fig. 1 (b). From this training set we compute the $1 \times 81$ mean vector $\mu_i$ and the $81 \times 81$ covariance matrix $\Sigma_i$, and define the likelihood model for a normalized $9 \times 9$ image patch $Z^{str}_{i,t}$ as:

$$p(Z^{str}_{i,t} | X_t) \propto \exp\left(-\rho\left(\sqrt{(Z^{str}_{i,t} - \mu_i)^T \Sigma_i^{-1} (Z^{str}_{i,t} - \mu_i)}, \tau_{str}\right)\right) \qquad (5)$$

where $\rho$ is a robust function (we used the truncated linear function) and $\tau_{str}$ is the threshold above which a measurement is assumed to be an outlier.
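The cost inside the exponential of Eq. (5) can be sketched as follows. Several points are assumptions of this example rather than details given in the text: the truncated linear function is taken to be `min(x, tau)`, the function names are hypothetical, and an optional `ridge` term is added because a covariance estimated from zero-mean patches may not be invertible in practice. A 2 × 2 toy patch stands in for the 9 × 9 patches.

```python
import numpy as np

def truncated_linear(x, tau):
    """Robust function assumed here: linear up to tau, constant beyond."""
    return min(float(x), float(tau))

def structural_cost(patch, mu, cov, tau_str, ridge=0.0):
    """Negative log-likelihood of one patch, up to an additive constant."""
    z = np.asarray(patch, dtype=float).ravel()
    z = z - z.mean()                                   # remove the patch mean
    diff = z - np.asarray(mu, dtype=float)
    cov = np.asarray(cov, dtype=float) + ridge * np.eye(diff.size)
    # Mahalanobis distance, computed without forming the inverse explicitly.
    mahalanobis = np.sqrt(diff @ np.linalg.solve(cov, diff))
    return truncated_linear(mahalanobis, tau_str)

# Toy model: zero mean vector and identity covariance.
patch = [[1.0, 2.0], [3.0, 4.0]]
mu = np.zeros(4)
cov = np.eye(4)
inlier_cost = structural_cost(patch, mu, cov, tau_str=10.0)   # below the threshold
outlier_cost = structural_cost(patch, mu, cov, tau_str=1.0)   # clipped at the threshold
```

Clipping the cost bounds the influence of any single feature, so one occluded or badly matched patch cannot dominate the product in Eq. (4).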

3.2 Likelihood Model of Intensity Features using a Set of View-based Templates

The intensity features are located on both the face and

the head sides, and their location distribution is much

$^1$ Note that such an assumption would not be valid if the patches overlapped.

denser than the locations of the structural features.

Therefore the intensity features bring precise and rich

information about the appearance of the whole face.

In many cases, however, although the illumination

conditions are fixed the lighting is not uniform over

the face (e.g. the light might be coming from the

side). Thus the intensity of a face point is highly pose-

dependent and can vary quite fast depending on the

head movements. In order to handle this problem, we

define a likelihood model that relies on a set of view-

based templates.

Observations. The observations $Z^{int}$ are defined by the intensity values at the projected points $\{m_i(X_t)\}_{i \in S^{int}}$, i.e. $Z^{int}_t(X_t) = \{Z^{int}_{i,t}(X_t)\}_{i \in S^{int}} = \{\mathrm{intensity}(m_i(X_t))\}_{i \in S^{int}}$, where $S^{int}$ denotes the index set of intensity features. The locations of the observations are illustrated in Fig. 1.

Likelihood Modeling. The likelihood of the observations $Z^{int}_t(X_t)$ is evaluated by comparing them to a set of view-based templates. This set is built online by adding a new template each time a new region of the head pose space is reached, as described later. We call $S^{tem}_t$ the complete set of view-based templates learned so far at time $t$. A template $T_k = (\mu_k, \Theta_k)$, $k \in S^{tem}_t$, is defined by a vector of intensities $\mu_k$ and a pose $\Theta_k$. The observations $Z^{int}_t(X_t)$ will be compared to a set of selected templates $S^{sel}_t(X_t)$, with $S^{sel}_t \subseteq S^{tem}_t$. From this set of selected templates we create a mixed template whose appearance $\mu^{mix}_t$ is defined as:

$$\mu^{mix}_t = \sum_{k \in S^{sel}_t} w_{k,t} \cdot \mu_k ,$$

where $w_{k,t}$ is the weight associated with the selected template $T_k$. The methodology to select $S^{sel}_t$ and the weights is described below.

Assuming conditional independence between the features given the state, we have:

$$p(Z^{int}_t | X_t) = \prod_{i \in S^{int}} p(Z^{int}_{i,t} | X_t) \qquad (6)$$


where the likelihood model for a single intensity value can be expressed as:

$$p(Z^{int}_{i,t} | X_t) \propto \exp\left(-w_{k,t} \cdot \rho\left(\frac{(Z^{int}_{i,t} - \mu^{mix}_{i,t})^2}{\sigma_{int}^2}, \tau_{int}\right)\right) \qquad (7)$$

where $\rho$ is a robust function (we used the truncated linear function), $\tau_{int}$ is the threshold above which a measurement is assumed to be an outlier, and $\sigma_{int}$ is a constant.

Selection of the Subset $S^{sel}_t(X_t)$. The set of templates $S^{sel}_t(X_t)$ plays an important role, as it defines the mixed appearance $\mu^{mix}_t$. The main idea for our method to build $S^{sel}_t(X_t)$ follows the principle that, whenever possible, to synthesize a view it is usually much better to interpolate it than to extrapolate it. This is illustrated in Fig. 2. A classical approach would use the $k$ nearest neighbors to build $\mu^{mix}_t$. However, this is not always a good solution because the set of templates is learned online, and therefore the learned templates do not uniformly populate the pose space. Most of the views selected in this manner may be located on one side only of the current pose (see Fig. 2), leading to the extrapolation of the view from $S^{sel}_t(X_t)$ rather than its interpolation. If instead we select poses not only based on their distance to $\Theta_t$ but also based on their spread in the pose space, we might increase the accuracy of the view synthesis. Thus the proposed solution consists of defining $S^{sel}_t(X_t) = \{T_1, T_2\}$ where $T_1$ is the template whose pose $\Theta_1$ is the closest to the current pose $\Theta_t$, and $T_2$ is the template whose pose $\Theta_2$ is the closest to the pose symmetrical to pose $\Theta_1$ with respect to $\Theta_t$. This way we make sure that the two selected poses will draw the current pose towards two opposite directions, as much as possible given the current set of templates. This is illustrated in Fig. 2. This simple approach provides a good compromise between the distance to $\Theta_t$ and the distribution in the pose space. Finally, each of the two selected poses is associated with a weight defined as $w_{k,t} = \frac{1}{d(\Theta_k, \Theta_t)}$, $k \in S^{sel}_t$, where $d(\Theta, \Theta')$ is defined as the Euclidean distance between two poses $\Theta$ and $\Theta'$ in the pose space. That way the contribution of a template varies with the distance of its pose to the current pose. The weights are normalized so that their sum is equal to 1.
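The selection and weighting scheme described above can be illustrated with a small, self-contained sketch. The function names, the toy two-dimensional poses, the exclusion of the first template when searching for the second one, and the `eps` guard against a zero distance are assumptions made for this example; the text does not specify them.

```python
import math

def select_templates(templates, pose):
    """Pick the nearest template, then the one nearest to its mirror image.

    `templates` is a list of (intensities, pose) pairs.
    """
    indices = range(len(templates))
    k1 = min(indices, key=lambda k: math.dist(templates[k][1], pose))
    # Pose symmetrical to the pose of T1 with respect to the current pose.
    mirrored = [2.0 * p - q for p, q in zip(pose, templates[k1][1])]
    others = [k for k in indices if k != k1]   # assumption: T2 differs from T1
    if not others:
        return [k1]
    k2 = min(others, key=lambda k: math.dist(templates[k][1], mirrored))
    return [k1, k2]

def mixing_weights(templates, selected, pose, eps=1e-9):
    """Inverse-distance weights, normalized to sum to 1."""
    raw = [1.0 / max(math.dist(templates[k][1], pose), eps) for k in selected]
    total = sum(raw)
    return [w / total for w in raw]

def mixed_template(templates, selected, weights):
    """Weighted sum of the selected intensity vectors."""
    size = len(templates[selected[0]][0])
    return [sum(w * templates[k][0][i] for k, w in zip(selected, weights))
            for i in range(size)]

# Toy template set: three templates on one side of the current pose, one on the other.
templates = [
    ([100.0, 50.0], (10.0, 0.0)),
    ([110.0, 60.0], (20.0, 0.0)),
    ([120.0, 70.0], (30.0, 5.0)),
    ([60.0, 90.0], (-30.0, 0.0)),
]
current_pose = (0.0, 0.0)
selected = select_templates(templates, current_pose)
weights = mixing_weights(templates, selected, current_pose)
mix = mixed_template(templates, selected, weights)
```

In this toy configuration a 2-nearest-neighbor rule would pick the templates at 10 and 20 degrees, both on the same side of the current pose, whereas the mirrored search picks the template at -30 degrees as the second one, so the mixed template is an interpolation.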

Addition of a Template to the Set of View-based Templates. $S^{tem}_{t+1}$ is built from the set of templates $S^{tem}_t$ and the estimated pose $\hat{\Theta}_t$ by adding a new template only if it models a new region of the pose space, i.e. only if its pose is far enough from the poses of the templates already learned. That is, when the following condition is verified:

$$\forall k \in S^{tem}_t, \; d(\hat{\Theta}_t, \Theta_k) > \tau \qquad (8)$$

the template $T = (\hat{Z}^{int}_t, \hat{\Theta}_t)$ is added to the set $S^{tem}_t$. Otherwise $S^{tem}_{t+1} = S^{tem}_t$. As a value for $\tau$ we used $10^\circ$, a good compromise between appearance modeling and pose densities.
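A minimal sketch of the test in Eq. (8) follows. The function name, the list-of-pairs representation, and the three-dimensional toy poses are illustrative assumptions; only the threshold of 10 comes from the text.

```python
import math

TAU = 10.0  # distance threshold in the pose space, as quoted in the text

def maybe_add_template(templates, observed_intensities, estimated_pose, tau=TAU):
    """Return (new_template_list, added_flag) following the rule of Eq. (8)."""
    # all() over an empty list is True, so the very first observation is stored.
    is_new_region = all(math.dist(estimated_pose, pose_k) > tau
                        for _, pose_k in templates)
    if is_new_region:
        new_template = (list(observed_intensities), tuple(estimated_pose))
        return templates + [new_template], True
    return templates, False

templates = []
templates, added_first = maybe_add_template(templates, [100.0, 120.0], (0.0, 0.0, 0.0))
templates, added_close = maybe_add_template(templates, [101.0, 119.0], (5.0, 0.0, 0.0))
templates, added_far = maybe_add_template(templates, [80.0, 140.0], (12.0, 0.0, 0.0))
```

The second observation lies within the threshold of the stored pose and is therefore rejected, while the third one opens a new region of the pose space.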

Updating the Set of View-based Templates. There

is always a risk that a bad template is learned, for ex-

ample if one part of the mesh is temporarily not well

fit on the face when a new template is added to the

list. For this reason, it is useful to have an adaptation

mechanism that allows the appearance of a learned

template to be updated when the same pose is visited again. Under some specific conditions, we update the appearance of the closest template $T_k$ in the following way: $\mu_{k,t+1} = \beta \cdot \hat{Z}^{int}_t + (1 - \beta) \cdot \mu_{k,t}$, with $\beta = 0.5 - 0.5 \cdot \frac{d(\hat{\Theta}_t, \Theta_k)}{\tau}$, i.e. $\beta$ will vary between 0 and 0.5 depending on the distance $d$. The conditions to

perform this update are 1) No template has just been

created from the current pair pose/observations (see

description in the above paragraph) and 2) The same

template cannot be updated twice in a row. This last

criterion drastically reduces the risk of drift that oc-

curs when appearance is adapted continuously.
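The update rule and its two conditions can be sketched as below. The bookkeeping is an assumption of this example: the "not twice in a row" condition is tracked with the index of the last updated template, the function name and dictionary layout are hypothetical, and the clamp of the blending factor at 0 is a defensive addition.

```python
import math

def update_closest_template(templates, observed, estimated_pose, tau,
                            just_created, last_updated):
    """Blend the observation into the closest template; return its index or None."""
    if just_created or not templates:
        return None                      # condition 1: a template was just created
    k = min(range(len(templates)),
            key=lambda j: math.dist(templates[j]["pose"], estimated_pose))
    if k == last_updated:
        return None                      # condition 2: no update twice in a row
    d = math.dist(templates[k]["pose"], estimated_pose)
    beta = max(0.0, 0.5 - 0.5 * d / tau)  # 0.5 at the template pose, 0 at distance tau
    templates[k]["mu"] = [beta * z + (1.0 - beta) * m
                          for z, m in zip(observed, templates[k]["mu"])]
    return k

templates = [{"mu": [100.0, 200.0], "pose": (0.0, 0.0, 0.0)}]
first = update_closest_template(templates, [110.0, 180.0], (5.0, 0.0, 0.0),
                                10.0, just_created=False, last_updated=None)
second = update_closest_template(templates, [0.0, 0.0], (5.0, 0.0, 0.0),
                                 10.0, just_created=False, last_updated=first)
```

Here the first call blends with a factor of 0.25, and the second call is refused because the same template was updated at the previous step.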

Dealing with Global Illumination Changes. The

appearance model as we described it so far is not ro-

bust to global illumination changes. We deal with this

issue in a coarse way, so that the tracking is not per-

turbed by a sudden change of camera gain or by a

long-term change in the lighting. Before processing

any frame, all intensities are corrected by a constant

value so that the average intensity of the image is the

same as the one in the first frame.
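A possible reading of this correction is an additive offset applied to every pixel, sketched below. Whether the constant is additive or multiplicative is not stated in the text, so the additive form, the function names, and the absence of clipping to a valid intensity range are assumptions of this example.

```python
def mean_intensity(frame):
    """Average intensity of a frame given as a list of rows."""
    pixels = [value for row in frame for value in row]
    return sum(pixels) / len(pixels)

def correct_global_illumination(frame, reference_mean):
    """Shift all intensities so that the frame mean equals reference_mean."""
    offset = reference_mean - mean_intensity(frame)
    return [[value + offset for value in row] for row in frame]

first_frame = [[10.0, 20.0], [30.0, 40.0]]
brighter_frame = [[20.0, 30.0], [40.0, 50.0]]     # same content, gain shifted by +10
reference_mean = mean_intensity(first_frame)
corrected = correct_global_illumination(brighter_frame, reference_mean)
```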

3.3 Dynamical Model

This term defines how large we assume the difference

in the state between two successive frames can be.

The $N_p$ components of the states are assumed to be independent and to follow a constant position model:

$$p(X_t | \hat{X}_{t-1}) = \prod_{i=1:N_p} \mathcal{N}(X_{i,t}; \hat{X}_{i,t-1}, \sigma_{d,i}) \qquad (9)$$

where $X_{i,t}$ denotes the $i$-th component of $X_t$, and $\{\sigma_{d,i}\}_i$ are the noise standard deviations.
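In the log domain, the product of Eq. (9) becomes a sum of quadratic penalties. The sketch below computes the negative logarithm of this dynamics term; the function name and the toy values are assumptions of this example.

```python
import math

def dynamics_neg_log(state, previous_estimate, sigmas):
    """Negative log of a product of independent 1D Gaussians centered on the previous estimate."""
    total = 0.0
    for x, x_prev, sd in zip(state, previous_estimate, sigmas):
        total += 0.5 * ((x - x_prev) / sd) ** 2          # quadratic penalty
        total += math.log(sd * math.sqrt(2.0 * math.pi))  # normalization constant
    return total

previous = [0.0, 0.0]
sigmas = [1.0, 1.0]
cost_static = dynamics_neg_log([0.0, 0.0], previous, sigmas)
cost_moved = dynamics_neg_log([1.0, 0.0], previous, sigmas)   # one sigma away on one axis
```

The normalization constants do not depend on the state, so they can be dropped when the term is only used inside a minimization.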

3.4 Optimization of the Error Function

In practice we minimize the negative logarithm of the

posterior defined in Eq. (3). Besides, we use our

knowledge of the geometry of the mesh to infer if

some of the feature points are occluded under a pose $\Theta_t$. We introduce for each feature $i$ a visibility factor


Figure 3: Performances of three trackers on the same sequence - Frames 95, 210, 260, 310, 360. From top to bottom: our

tracker (Tracker 1), our tracker without using the side mesh (Tracker 2), our tracker using a continuous adaptation scheme

(Tracker 3). For clarity, in all cases only the face part of the mesh is drawn.

$v_i(X_t)$ defined so that it is equal to 0 when the feature is hidden, and 1 when it is maximally visible:

$$v_i(X_t) = \max(0, \vec{n}_{i,t}(X_t) \cdot \vec{z}),$$

where $\vec{n}_{i,t}(X_t)$ is the normal to the mesh triangle to which the point belongs, and $\vec{z}$ the direction of the camera axis. The visibility of a feature point is taken into account as a weight factor in the likelihood terms of the error function:

$$E(X_t) = -\sum_{i \in S^{str}} v_i(X_t) \cdot \log(p(Z^{str}_{i,t} | X_t)) - \sum_{i \in S^{int}} v_i(X_t) \cdot \log(p(Z^{int}_{i,t} | X_t)) - \sum_{i=1}^{N_p} \log(p(X_{i,t} | \hat{X}_{i,t-1})) . \qquad (10)$$

The downhill simplex method was chosen to perform the minimization. This iterative non-linear optimization method has several advantages: it does not require the derivatives of the error function (which would be difficult to obtain in our case) and it maintains multiple hypotheses (which ensures robustness) during the optimization phase. The dimension of the state space being quite large, the optimization is done in two steps: we first run the optimization algorithm to estimate the pose parameters $\Theta_t$, then we estimate the whole state $X_t$.
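The visibility weighting of Eq. (10) can be illustrated as follows. In this sketch the per-feature costs stand for the negative log-likelihoods of the structural and intensity features, the normals are assumed to be unit vectors, and the camera axis is assumed to be oriented so that a triangle facing the camera has a positive dot product; the function names are hypothetical.

```python
def visibility(normal, camera_axis=(0.0, 0.0, 1.0)):
    """Visibility factor: 0 for a hidden feature, 1 for a triangle facing the camera."""
    dot = sum(n * z for n, z in zip(normal, camera_axis))
    return max(0.0, dot)

def error_function(str_costs, str_normals, int_costs, int_normals, dynamics_cost):
    """Visibility-weighted sum of feature costs plus the (unweighted) dynamics cost."""
    structural = sum(visibility(n) * c for c, n in zip(str_costs, str_normals))
    intensity = sum(visibility(n) * c for c, n in zip(int_costs, int_normals))
    return structural + intensity + dynamics_cost

# Two structural features (one facing the camera, one facing away) and one
# intensity feature on a slanted triangle.
total_error = error_function(
    str_costs=[2.0, 4.0],
    str_normals=[(0.0, 0.0, 1.0), (0.0, 0.0, -1.0)],
    int_costs=[1.0],
    int_normals=[(0.0, 0.6, 0.8)],
    dynamics_cost=0.5,
)
```

A function of this kind, evaluated for a candidate state, is what a derivative-free optimizer would minimize; for instance, SciPy exposes the downhill simplex method through `scipy.optimize.minimize` with `method="Nelder-Mead"`, although the text does not say which implementation was used.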

4 EXPERIMENTS AND RESULTS

Our implementation of the described algorithm pro-

cesses an average of 3 frames per second. However,

execution time was not our priority and we believe

that the algorithm could run much faster with minor

revisions of the code.

The system was tested on several long video se-

quences in order to evaluate qualitatively its ability

to track challenging head poses and facial actions in

natural conditions and evaluate its stability over time,

which is our primary aim. However, to provide quan-

titative evaluation, we also used the BUFT database

(Cascia et al., 2000) to measure the precision of the

head pose estimation and compare with state-of-the-

art results.

4.1 Qualitative Results on Long Video

Sequences

We tested our system on 8 long video sequences to

evaluate its ability to track in the long term the head

pose and facial actions. Sample results are given in
Figs. 3 and 4, but the quality of the results is better
assessed from the videos given as supplementary material.
The first sequence is the publicly available Talking
Face video from PRIMA - INRIA, a video of a

person engaged in a conversation. The second se-

quence is an extract of a politician’s speech in a TV

broadcast. The six other sequences are videos that we

recently recorded in order to test the system on more

challenging head poses and facial actions.

We compared the performances of three trackers.

Tracker 1 is the system described in this paper.

Tracker 2 is the same as Tracker 1, but with no exten-

sion of the Candide model, i.e. no information is col-

lected on the sides of the head. Tracker 3 is the same

as Tracker 1, but using a recursive adaptation method
as proposed in (Lefèvre and Odobez, 2009) instead of


Figure 4: Sample images from various sequences obtained with our tracker.

Table 1: Comparison on the BUFT database of robustness and accuracy between our approach (in bold) and state-of-the-art face trackers. The first three results were extracted from the corresponding papers. (U: Uniform-light dataset, V: Varying-light dataset.)

| Approach | U: $P_s$ | U: $E_{pan}$ | U: $E_{tilt}$ | U: $E_{roll}$ | U: $E_m$ | V: $P_s$ | V: $E_{pan}$ | V: $E_{tilt}$ | V: $E_{roll}$ | V: $E_m$ |
|---|---|---|---|---|---|---|---|---|---|---|
| La Cascia (Cascia et al., 2000) | 75% | 5.3° | 5.6° | 3.8° | 3.9° | 85% | - | - | - | - |
| Xiao (Xiao et al., 2003) | 100% | 3.8° | 3.2° | 1.4° | 2.8° | - | - | - | - | - |
| Morency (Morency et al., 2008) | 100% | 5.0° | 3.7° | 2.9° | 3.9° | - | - | - | - | - |
| Adaptation (Lefèvre and Odobez, 2009) | 100% | 4.4° | 3.3° | 2.0° | 3.2° | 100% | 4.1° | 3.5° | 2.3° | 3.3° |
| **View-based** | **100%** | **4.6°** | **3.2°** | **1.9°** | **3.2°** | **100%** | **6.2°** | **4.4°** | **2.7°** | **4.4°** |

the view-based templates.

Not surprisingly, the three systems perform

equally well on the first two sequences. These two

sequences are useful to evaluate long-term and subtle

lip movements tracking, but the head poses do not go

very far from frontal view. The difference of perfor-

mance between the different approaches shows when

they are tested on the more challenging sequences.

Sample results obtained by the different systems on

the same sequence are illustrated in Fig. 3.

One can notice that Tracker 3 correctly estimates

the movement towards profile view, but loses track

when trying to come back to a more frontal pose. This

phenomenon is actually observed in most of the se-

quences in which such a movement (frontal-profile-

frontal) occurs. Indeed, the information that allows the tracker to
follow the movement back to frontal view is mainly
contained in the intensity features on the head side.

As mentioned before, the appearance of these features

varies a lot under such pose variations, and the mem-

oryless adaptive system cannot follow.

Tracker 2 is more robust, since it never loses
track in any of our sequences. Despite the absence of

measurements on the head sides, the memory of the

learned appearances under different poses allows the

tracker to find its way under all kinds of head mo-

tions. However, the loss of information compared to

Tracker 1 leads to a lack of precision, and thus to a

less accurate fit. An example can be seen in Fig. 3.

On the second, third and fourth frames the eyes are

not correctly fit, and on the fifth image the mouth and

the eyebrows are not well positioned.

Out of the three systems, Tracker 1 is the one that

demonstrates the best results. The use of a set of

view-based templates rather than an adaptive template for
the intensity features makes it possible to robustly track
challenging poses, and the extension of the mesh provides
more information and leads to more accurate
tracking. The system can follow both natural and
faked facial actions under difficult head poses, as
illustrated in Fig. 4.

4.2 Results on the BUFT Database

The BUFT database contains 72 videos presenting 6

subjects performing various head movements (trans-

lations, in-plane and out-of-plane rotations). Each

sequence is 6 seconds long and has a resolution of

320 × 240 pixels. Ground truth was collected using a

“Flock of Birds” magnetic tracker. The database is

divided into two datasets. The Uniform-light dataset

contains 45 sequences recorded under constant light-

ing conditions. The Varying-light dataset contains 27

sequences recorded under fast-changing challenging

lighting conditions.

We can define the robustness of a tracker as the

percentage $P_s$ of frames successfully tracked over all the video sequences. The accuracy of a tracker is defined as the mean pan, tilt and roll angle errors over the set of all tracked frames: $E_m = \frac{1}{3}(E_{pan} + E_{tilt} + E_{roll})$. We compared the performances of five trackers; the results are shown in Table 1. The “View-based” approach corresponds to the method described

in this paper. One can notice that the results obtained

by the Adaptation approach and the View-based ap-

proach are very similar. The performances on the

Uniform-light dataset are in accordance with our expectations;
with such short sequences and only a few
profile views, we did not expect to observe any improvement.
On the other hand, we did not expect our system
to perform as well on the challenging Varying-

light dataset, since it does not incorporate a way to

handle fast illumination variations (appearance model

updates are much less frequent than in the recursive


case), but in the end our coarse estimation of the

global illumination changes and the update of the set

of templates were enough to successfully track all the

sequences with a small loss of accuracy compared

to (Lefèvre and Odobez, 2009). Remember however

that using this recursive approach in our modeling of-

ten failed on longer sequences, which showed that it

was not really stable. When comparing our approach

to three other trackers in the literature, we notice that

it performs noticeably better than (Cascia et al., 2000)

on both datasets. The performances on the Uniform-

light dataset are comparable to those demonstrated

in (Morency et al., 2008; Xiao et al., 2003). How-

ever, we handle the much more challenging Varying-

light dataset, whereas neither (Morency et al., 2008)
nor (Xiao et al., 2003) reported successful tracking on this
dataset.
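The robustness and accuracy measures defined above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `FrameResult` record, its field names, and the `tracked` flag are hypothetical, since this section does not specify how a frame is judged to be successfully tracked, and the per-frame errors are assumed to be absolute angle errors in degrees against the ground truth.

```python
from dataclasses import dataclass
from typing import Iterable, Tuple


@dataclass
class FrameResult:
    """Per-frame evaluation record (hypothetical structure, not from the paper)."""
    tracked: bool    # assumed flag: True if the frame counts as successfully tracked
    pan_err: float   # absolute pan error in degrees
    tilt_err: float  # absolute tilt error in degrees
    roll_err: float  # absolute roll error in degrees


def robustness_and_accuracy(frames: Iterable[FrameResult]) -> Tuple[float, float]:
    """Return (P_s, E_m) pooled over all frames of all sequences.

    P_s: percentage of frames successfully tracked.
    E_m: (E_pan + E_tilt + E_roll) / 3, where each E_* is the mean error
         over the tracked frames only.
    """
    frames = list(frames)
    if not frames:
        raise ValueError("no frames to evaluate")

    tracked = [f for f in frames if f.tracked]
    p_s = 100.0 * len(tracked) / len(frames)
    if not tracked:
        # Accuracy is undefined when no frame was tracked.
        return p_s, float("nan")

    n = len(tracked)
    e_pan = sum(f.pan_err for f in tracked) / n
    e_tilt = sum(f.tilt_err for f in tracked) / n
    e_roll = sum(f.roll_err for f in tracked) / n
    e_m = (e_pan + e_tilt + e_roll) / 3.0
    return p_s, e_m


if __name__ == "__main__":
    demo = [
        FrameResult(True, 3.0, 6.0, 3.0),
        FrameResult(True, 1.0, 2.0, 3.0),
        FrameResult(False, 50.0, 50.0, 50.0),  # lost frame: excluded from E_m
        FrameResult(True, 2.0, 4.0, 0.0),
    ]
    p_s, e_m = robustness_and_accuracy(demo)
    print(f"P_s = {p_s:.1f}%, E_m = {e_m:.2f} deg")
```

Because E_m is computed over tracked frames only, the two numbers should be read together: a tracker that loses the difficult frames may report a low E_m alongside a low P_s.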

5 CONCLUSIONS

In this paper we introduced a face tracking method

that uses information collected on the head sides to

robustly track challenging head movements. We ex-

tended an existing 3D face model so that the mesh

reaches the ears. In order to handle appearance vari-

ation (mainly due to head pose changes in practice),

our approach builds a set of view-based templates
online. These two distinctive features proved

to be particularly useful when the tracker has to deal

with extreme head poses like profile views. Moreover,
we showed the ability of our approach to follow
both natural and exaggerated facial actions. However,

we are aware that one limitation of our system is that

there is no mechanism to recover from a potential fail-

ure. One solution would be to add a set of detectors

for specific points that could help to set the system

back on track.

ACKNOWLEDGEMENTS

This work was supported by the EU 6th FWP IST In-

tegrated Project AMIDA (Augmented Multiparty In-

teraction with Distant Access) and the NCCR Inter-

active Multimodal Information Management project

(IM2). We thank Vincent Lepetit for useful discus-

sions on the model.

REFERENCES

Ahlberg, J. (2001). Candide 3 - an updated parameterised

face. Technical Report LiTH-ISY-R-2326, Linköping
University, Sweden.

Cascia, M. L., Sclaroff, S., and Athitsos, V. (2000). Fast,

reliable head tracking under varying illumination: An

approach based on registration of texture-mapped 3d

models. In IEEE Trans. Pattern Analysis and Machine

Intelligence (PAMI), volume 22.

Chen, Y. and Davoine, F. (2006). Simultaneous tracking of

rigid head motion and non-rigid facial animation by

analyzing local features statistically. In British Ma-

chine Vision Conf. (BMVC), volume 2.

Cootes, T., Edwards, G., and Taylor, C. (1998). Active ap-

pearance models. In European Conf. Computer Vision

(ECCV), volume 2.

Cootes, T., Taylor, C., Cooper, D., and Graham, J. (1995).

Active shape models - their training and applica-

tion. Computer Vision and Image Understanding,

61(1):38–59.

DeCarlo, D. and Metaxas, D. (2000). Optical flow con-

straints on deformable models with applications to

face tracking. Int. Journal of Computer Vision,

38(2):99–127.

Dornaika, F. and Davoine, F. (2006). On appearance based

face and facial action tracking. In IEEE Trans. On Cir-

cuits And Systems For Video Technology, volume 16.

Gross, R., Matthews, I., and Baker, S. (2006). Active ap-

pearance models with occlusion. Image and Vision

Computing Journal, 24(6):593–604.

Jepson, A., Fleet, D., and El-Maraghi, T. (2003). Ro-

bust online appearance models for visual tracking.

IEEE Trans. Pattern Analysis and Machine Intelli-

gence (PAMI), 25(10):1296–1311.

Lefèvre, S. and Odobez, J. (2009). Structure and appear-

ance features for robust 3d facial actions tracking. In

Int. Conf. on Multimedia & Expo.

Li, Y. (2004). On incremental and robust subspace learning.

Pattern Recognition, 37(7):1509–1518.

Matthews, I., Ishikawa, T., and Baker, S. (2004). The tem-

plate update problem. IEEE Trans. Pattern Analysis

and Machine Intelligence (PAMI), 26(6):810–815.

Morency, L.-P., Whitehill, J., and Movellan, J. (2008). Gen-

eralized adaptive view-based appearance model: In-

tegrated framework for monocular head pose estima-

tion. In IEEE Int. Conf. on Automatic Face and Ges-

ture Recognition (FG).

Tu, J., Tao, H., and Huang, T. (2009). Online updat-

ing appearance generative mixture model for mean-

shift tracking. Machine Vision and Applications,

20(3):163–173.

Vacchetti, L., Lepetit, V., and Fua, P. (2004). Stable real-

time 3d tracking using online and offline information.

IEEE Trans. Pattern Analysis and Machine Intelli-

gence (PAMI), 26(10):1385–1391.

Xiao, J., Baker, S., and Matthews, I. (2004). Real-time com-

bined 2d+3d active appearance models. In IEEE Conf.

Computer Vision and Pattern Recognition (CVPR).

Xiao, J., Moriyama, T., Kanade, T., and Cohn, J. (2003).

Robust full-motion recovery of head by dynamic tem-

plates and re-registration techniques. Int. Journal of

Imaging Systems and Technology, 13(1):85–94.

VISAPP 2010 - International Conference on Computer Vision Theory and Applications
