DETECTION OF EXIT NUMBER FOR THE BLIND AT THE SUBWAY STATION

Ho-Sub Yoon, Jae Yeon Lee

Robot Division, ETRI, Kajung, Taejeon, Korea

Eun-Mi Ji

Department of Medical Information, HyeChon University, Taejeon, Korea

Keywords: Digit Character Detection, Blind Guidance System.

Abstract: This paper presents an approach for detecting exit numbers to enhance the safety and mobility of blind people walking around a subway station. It is extremely important for a blind person to know whether the area in front of them leads to the correct exit. At the crossing where the exit passages branch, the exit number in the Taejeon subway station is usually painted in black on a white background inside a blue circular contour. An image-based technique has been developed to detect this isolated number pattern at the crossings. The presence of an exit number is inferred by careful analysis of numeral width, height, ratio, and number of numerals, as well as bandwidth trend. When several numeral candidates remain, we apply an OCR function to them. The proposed approach was evaluated experimentally on several real images with and without exit passages, and was found to perform with good accuracy.

1 INTRODUCTION

According to World Health Organization statistics, approximately 40 million people worldwide are blind (Thylefors, 1995; WHO, 1997). There are about 200,000 persons with acquired blindness in Korea. Visually impaired people share one goal: to navigate through unfamiliar spaces without the help of a human guide. Mobility, which has been defined as “the ability to travel safely, comfortably, gracefully, and independently through the environment” (Shingledecker, 1978), is the main barrier for these vision-disabled people. The most widely used navigational aids for blind people are the white cane and the guide dog. However, these have many limitations: the range over which a cane can detect special patterns or obstacles is very narrow, and a guide dog requires extensive training and is not suitable for people who are not physically fit or cannot maintain a dog (Whitestock, 1997). To improve on the versatility of the white cane, many methods and devices have been proposed to aid mobility and to increase safe and independent travel (Diepstraten, 2004; Hub, 2006; Matsuo, 2002). When a visually impaired person is walking around a subway station, it is important to obtain the exit number information present in the scene. In general, wayfinding in a man-made environment is helped considerably by the ability to read exit number signs. This paper presents the development of an automatic exit number detection method for visually impaired people at the subway station.

Research on text extraction from natural scene images has been growing recently. Many methods have been proposed based on edge detection (Yamaguchi, 2003), binarization (Matsuo, 2002), spatial-frequency image analysis (Liu, 1998), and mathematical morphology operations (Gu, 1997). There are also parallel research efforts to develop scene-text reading systems for the visually impaired (Zandifar, 2002).

All these systems show that text areas cannot be perfectly extracted from an image, because natural scenes contain complex objects and varied lighting, which give rise to false text detections and misses. The first step in developing our digit reading system is to address the problem of text detection in natural scene images. In this paper, we assume a fixed indoor space, namely a subway station. In such a space, the lighting conditions are stable and the size of the target digit (the exit number) is predictable, because the sign board size is standardized by the public subway company. Under these assumptions, our system passes through two steps, as shown in Figure 1. The first step comprises filtering, adaptive binarization, connected component detection, and selection of candidate number regions. The second step performs OCR verification and selects a single number.

Yoon H., Yeon Lee J. and Ji E. (2010). DETECTION OF EXIT NUMBER FOR THE BLIND AT THE SUBWAY STATION. In Proceedings of the International Conference on Computer Vision Theory and Applications, pages 543-546. DOI: 10.5220/0002892505430546. Copyright © SciTePress.

Figure 1: Processing steps of the proposed blind guidance system, from input images to exit number.

Section 2 describes the system design. Section 3 first deals with image resolution and then proposes a robust and efficient exit number locating method that is independent of the size of the exit number. Section 4 presents experimental results. Finally, Section 5 concludes the paper.

2 SYSTEM DESIGN

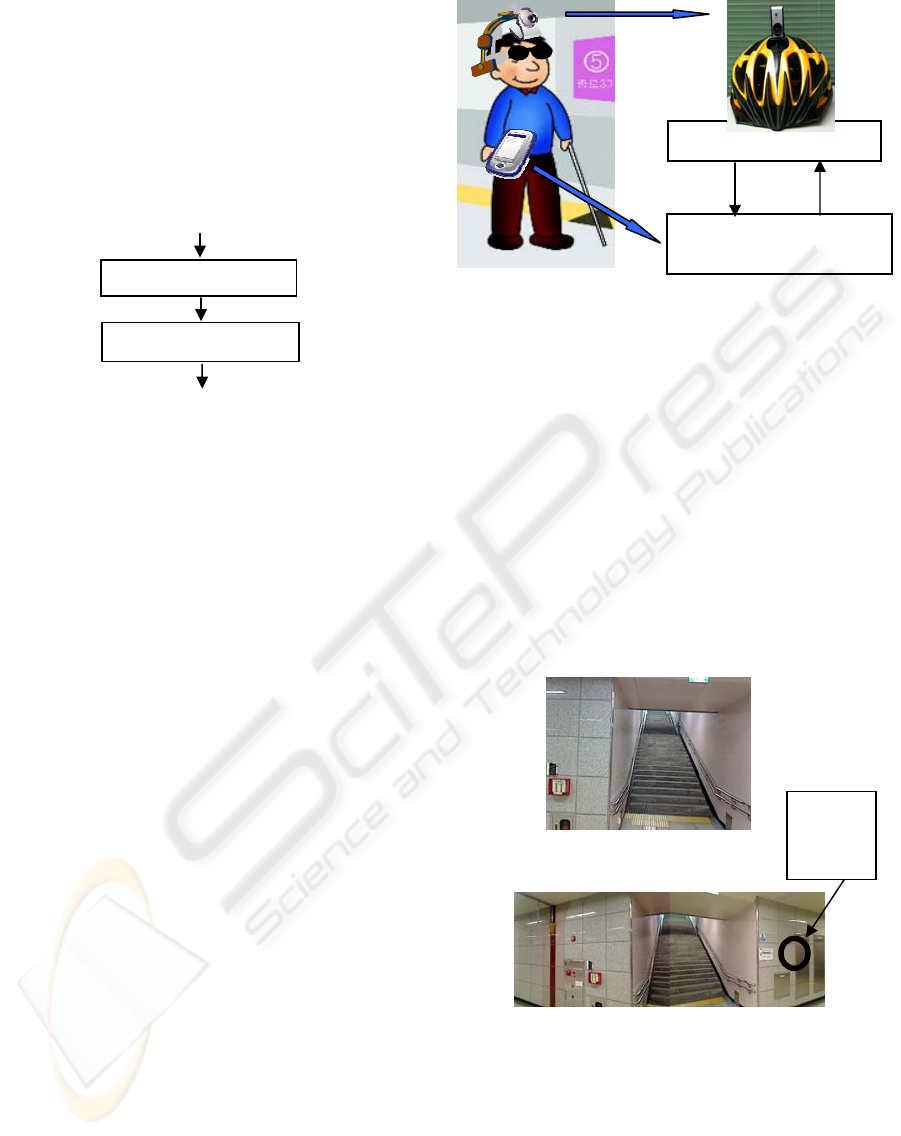

Figure 1 shows the general configuration of our proposed system. The building elements are a PDA (or notebook), a USB camera, and a voice synthesizer.

The digit locating algorithm involves two scenarios. In 'pause mode', the camera, which is mounted on the user's head, does not acquire images of the scene while the blind person walks straight ahead. When the blind person reaches a crossing by following the guide blocks, they can push a button to switch to 'active mode'; alternatively, the system switches to 'active mode' automatically when sensors detect that the person turns their body toward one of the crossing directions.

In 'active mode', input images are captured and the search for digit areas is performed using the proposed methods. The detected characters are recognized and read out to the blind person via a voice synthesizer. Figure 2 shows the system configuration.

Figure 2: System configuration.
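The switch between the two modes can be illustrated with a small state machine. This is only a sketch of the behaviour described in the text: the names `Mode`, `next_mode`, and `should_capture`, and the two boolean inputs, are illustrative assumptions, and the return from active mode to pause mode is left out because it is not specified here.

```python
from enum import Enum


class Mode(Enum):
    PAUSE = "pause"    # walking straight ahead: no images are acquired
    ACTIVE = "active"  # at a crossing: capture images and search for digits


def next_mode(mode: Mode, button_pressed: bool, turn_detected: bool) -> Mode:
    """Return the mode after one update step.

    button_pressed: the user pushed the button at a crossing.
    turn_detected:  a body sensor reported a turn toward a crossing direction.
    Both inputs are hypothetical signal names used only for this sketch.
    """
    if mode is Mode.PAUSE and (button_pressed or turn_detected):
        return Mode.ACTIVE
    return mode


def should_capture(mode: Mode) -> bool:
    """Images are captured only in active mode."""
    return mode is Mode.ACTIVE
```

For example, `next_mode(Mode.PAUSE, button_pressed=True, turn_detected=False)` yields `Mode.ACTIVE`, after which `should_capture` returns `True`.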

3 EXIT NUMBER DETECTION ALGORITHM

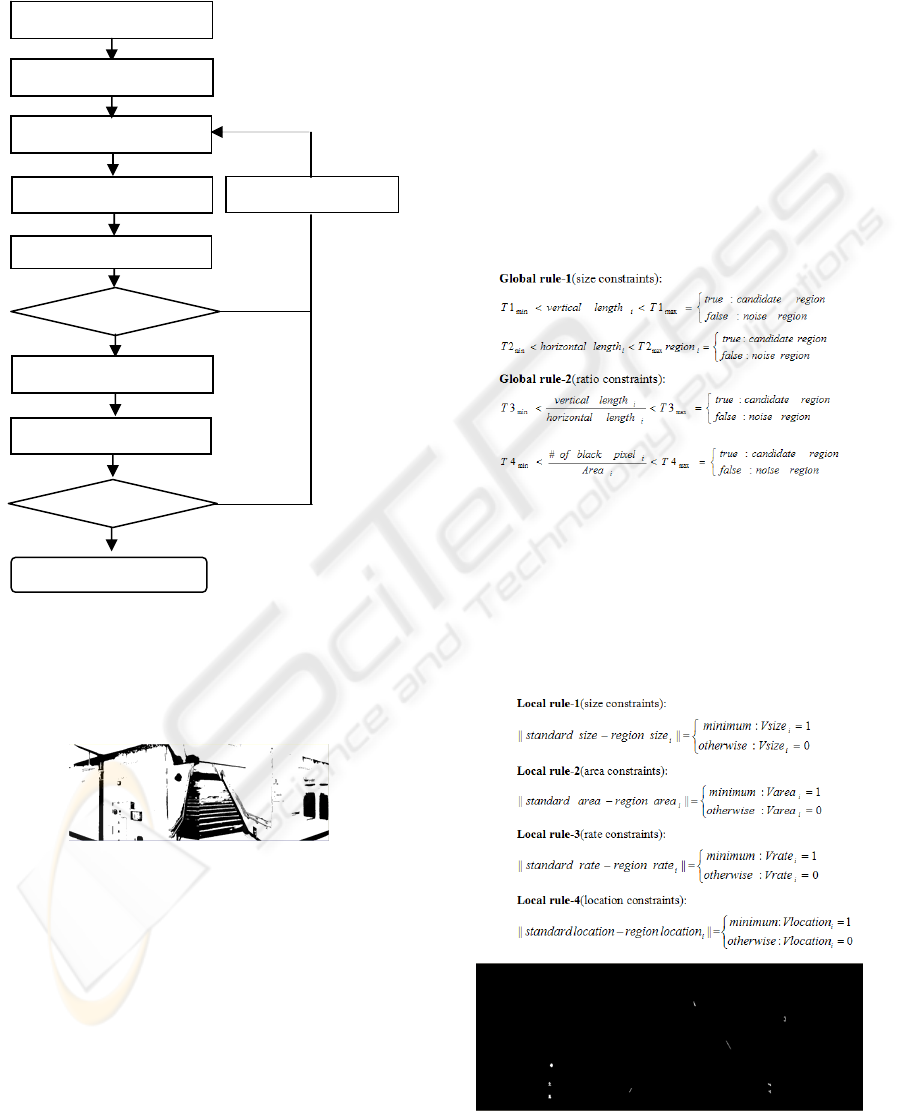

3.1 Image Capture

Our system captures colour images at high resolution (1280x480) using two horizontally synchronized USB cameras, in order to cover a view angle as close as possible to that of a human, even though this introduces distortion in the middle area. Figure 3 explains why the two synchronized USB cameras are needed: Figure 3(a) was captured by a single camera and does not include the exit number area.

(a) One camera image.

(b) Horizontally synchronized two camera image.

Figure 3: Input camera system.

For processing speed, the image is first converted to low resolution (640x240) to identify candidate number locations, and the candidates are then mapped back to the high-resolution image (1280x480) for the OCR stage.
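The two-resolution scheme can be sketched as follows. The reduction method is not specified in the text, so 2x2 block averaging on a grayscale image is an assumption here, as are the function names `downsample_by_two` and `to_high_resolution` and the `(x, y, width, height)` box layout.

```python
from typing import List, Tuple

Box = Tuple[int, int, int, int]  # (x, y, width, height) in pixels


def downsample_by_two(image: List[List[int]]) -> List[List[int]]:
    """Halve both dimensions by averaging each 2x2 block (assumed method).

    A 1280x480 grayscale image becomes 640x240.
    """
    rows, cols = len(image) // 2, len(image[0]) // 2
    return [
        [
            (image[2 * r][2 * c] + image[2 * r][2 * c + 1]
             + image[2 * r + 1][2 * c] + image[2 * r + 1][2 * c + 1]) // 4
            for c in range(cols)
        ]
        for r in range(rows)
    ]


def to_high_resolution(box: Box, scale: int = 2) -> Box:
    """Map a candidate box found at low resolution back to the full image."""
    x, y, w, h = box
    return (x * scale, y * scale, w * scale, h * scale)
```

A candidate found at `(100, 50, 12, 20)` in the 640x240 image corresponds to `(200, 100, 24, 40)` in the 1280x480 image, which is the region that would be passed to the OCR stage.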

[Figure labels: Pre-processing; Post-processing; Image capture; Processing; Voice synthesizer; Target exit digit]

VISAPP 2010 - International Conference on Computer Vision Theory and Applications


3.2 Pre-processing

Our exit number localization algorithm consists of several stages, as outlined in Figure 4.

Figure 4: System flow chart for the exit number recognition algorithm.

3.2.1 Adaptive Threshold

Figure 5: The binarization result using adaptive threshold.

The threshold is a critical parameter for object detection, so a main problem is how to determine the optimal threshold. Basically, there are two ways to set the threshold: one is to preset it based on experimental results; the other is to set it automatically from the input image data. We used the adaptive threshold method as implemented in the OpenCV library (OpenCV, 2006).
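To make the idea concrete, the sketch below implements a mean-based adaptive threshold in plain Python: each pixel is compared with the mean of its local window minus an offset. It is an illustration of the technique, not the OpenCV implementation; in particular, windows are clipped at the image border here, and the parameter names `block_size`, `offset`, and `max_value` are our own.

```python
from typing import List


def adaptive_threshold(image: List[List[int]], block_size: int = 3,
                       offset: int = 0, max_value: int = 255) -> List[List[int]]:
    """Set a pixel to max_value if it exceeds (local mean - offset), else 0."""
    if block_size < 3 or block_size % 2 == 0:
        raise ValueError("block_size must be an odd number >= 3")
    rows, cols = len(image), len(image[0])

    # Integral image: integral[r][c] is the sum of image[0:r][0:c].
    integral = [[0] * (cols + 1) for _ in range(rows + 1)]
    for r in range(rows):
        row_sum = 0
        for c in range(cols):
            row_sum += image[r][c]
            integral[r + 1][c + 1] = integral[r][c + 1] + row_sum

    half = block_size // 2
    out = [[0] * cols for _ in range(rows)]
    for r in range(rows):
        r0, r1 = max(0, r - half), min(rows, r + half + 1)
        for c in range(cols):
            c0, c1 = max(0, c - half), min(cols, c + half + 1)
            area = (r1 - r0) * (c1 - c0)
            total = (integral[r1][c1] - integral[r0][c1]
                     - integral[r1][c0] + integral[r0][c0])
            # pixel > mean - offset, written without division.
            if image[r][c] * area > total - offset * area:
                out[r][c] = max_value
    return out
```

With a black digit on a white background, digit pixels come out as 0 and the background as `max_value`; the result can be inverted if the later stages expect the digit as foreground.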

3.2.2 CCA (Connected Component Analysis)

The next stage is a connected component analysis procedure that produces information about isolated regions. We used a general two-pass labelling algorithm. The outputs of the labelling algorithm are the location, size, and several ratios of each isolated region.
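A generic two-pass labelling procedure of this kind can be sketched as below. The sketch assumes 4-connectivity and a binary image in which non-zero pixels are foreground; the connectivity, the function name `label_regions`, and the particular ratios reported (`aspect`, `fill`) are assumptions, since the text does not list them.

```python
from typing import Dict, List


def label_regions(binary: List[List[int]]) -> List[Dict[str, float]]:
    """Two-pass labelling with union-find; returns one record per region."""
    rows, cols = len(binary), len(binary[0])
    labels = [[0] * cols for _ in range(rows)]
    parent = [0]  # parent[i] is the union-find parent of label i; 0 is background

    def find(i: int) -> int:
        while parent[i] != i:
            parent[i] = parent[parent[i]]  # path halving
            i = parent[i]
        return i

    # First pass: assign provisional labels and record equivalences.
    for r in range(rows):
        for c in range(cols):
            if not binary[r][c]:
                continue
            up = labels[r - 1][c] if r > 0 else 0
            left = labels[r][c - 1] if c > 0 else 0
            if up == 0 and left == 0:
                parent.append(len(parent))
                labels[r][c] = len(parent) - 1
            elif up and left:
                a, b = find(up), find(left)
                labels[r][c] = min(a, b)
                parent[max(a, b)] = min(a, b)
            else:
                labels[r][c] = up or left

    # Second pass: resolve labels and accumulate bounding boxes and areas.
    boxes: Dict[int, tuple] = {}
    for r in range(rows):
        for c in range(cols):
            if labels[r][c]:
                root = find(labels[r][c])
                x0, y0, x1, y1, n = boxes.get(root, (c, r, c, r, 0))
                boxes[root] = (min(x0, c), min(y0, r), max(x1, c), max(y1, r), n + 1)

    regions = []
    for x0, y0, x1, y1, n in boxes.values():
        w, h = x1 - x0 + 1, y1 - y0 + 1
        regions.append({"x": x0, "y": y0, "width": w, "height": h,
                        "area": n, "aspect": w / h, "fill": n / (w * h)})
    return regions
```

Each returned record gives the location (`x`, `y`), the size (`width`, `height`, `area`), and two example ratios that later verification rules could use.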

3.2.3 Heuristic Verification

The CCA step yields many isolated regions. To find the correct digit region, we used two verification methods, a global and a local approach. The global verification approach has four noise deletion rules that use a priori knowledge from experimental results about correct digits. The local verification approach has four noise deletion rules that use a priori knowledge about the current constraints. The local verification approach also has similarity measuring rules that use the same a priori knowledge as the global rules. The higher the digit score of the i-th region, the more probable it is that the i-th region is a correct digit region.
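The rule-based filtering and scoring can be illustrated as follows. The actual rules and their limits are not listed in the text, so everything numeric below is hypothetical: the four range checks (width, height, aspect ratio, fill ratio), the expected digit height and aspect ratio, and the form of `digit_score` are placeholders chosen only to show the structure of such a verification step.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Region:
    x: int
    y: int
    width: int
    height: int
    area: int  # number of foreground pixels inside the bounding box


# Hypothetical limits; real values would come from measured exit signs.
MIN_WIDTH, MAX_WIDTH = 4, 40
MIN_HEIGHT, MAX_HEIGHT = 10, 60
MIN_ASPECT, MAX_ASPECT = 0.2, 1.0
MIN_FILL, MAX_FILL = 0.2, 0.9
EXPECTED_HEIGHT = 30
EXPECTED_ASPECT = 0.6


def passes_rules(region: Region) -> bool:
    """Four illustrative noise deletion rules based on region geometry."""
    aspect = region.width / region.height
    fill = region.area / (region.width * region.height)
    return (MIN_WIDTH <= region.width <= MAX_WIDTH
            and MIN_HEIGHT <= region.height <= MAX_HEIGHT
            and MIN_ASPECT <= aspect <= MAX_ASPECT
            and MIN_FILL <= fill <= MAX_FILL)


def digit_score(region: Region) -> float:
    """Illustrative similarity score in (0, 1]; 1.0 matches the expected digit."""
    aspect = region.width / region.height
    height_term = min(region.height, EXPECTED_HEIGHT) / max(region.height, EXPECTED_HEIGHT)
    aspect_term = min(aspect, EXPECTED_ASPECT) / max(aspect, EXPECTED_ASPECT)
    return height_term * aspect_term


def select_candidates(regions: List[Region], keep: int = 10) -> List[Region]:
    """Drop regions that fail the rules and rank the rest by digit score."""
    survivors = [r for r in regions if passes_rules(r)]
    return sorted(survivors, key=digit_score, reverse=True)[:keep]
```

Regions with a higher score are treated as more likely digit regions, and only the top few are handed on to the next stage.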

Figure 6: The CCA and heuristic verification result.

[Figure 4 flow chart nodes: Input image; Resolution reduction; Adaptive threshold; CCA (labelling); Heuristic verification; OK? (Y/N); Change threshold; Resolution extension; OCR verification; OK? (Y/N); Digit recognition]


3.3 Post-processing

After the previous steps, 3 to 10 candidate regions remain. To decide which are correct digit regions, we apply OCR verification.

3.3.1 OCR Verification

Our OCR (optical character recognition) system is based on (Kye Kyung Kim, 2002) and consists of an MLP (multilayer perceptron) with 198 input neurons, 100 hidden neurons, and 10 output neurons. All candidate regions are recognized by this MLP, and one or two regions are selected as the correct digits.
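The shape of such a network and the selection of the best candidates can be sketched as below. Only the layer sizes (198, 100, 10) come from the text. The sigmoid activation, the random placeholder weights, the class `DigitMLP`, and the function `pick_best` are assumptions for illustration; the 198 input features and the trained weights are not described here, so this sketch does not recognize real digits.

```python
import math
import random
from typing import List, Sequence, Tuple

N_INPUT, N_HIDDEN, N_OUTPUT = 198, 100, 10


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def layer(inputs: Sequence[float], weights: List[List[float]],
          biases: List[float]) -> List[float]:
    """One fully connected layer followed by a sigmoid."""
    return [sigmoid(sum(w * x for w, x in zip(row, inputs)) + b)
            for row, b in zip(weights, biases)]


class DigitMLP:
    """198-100-10 perceptron with placeholder (untrained) weights."""

    def __init__(self, seed: int = 0) -> None:
        rng = random.Random(seed)

        def matrix(n_out: int, n_in: int) -> List[List[float]]:
            return [[rng.uniform(-0.1, 0.1) for _ in range(n_in)]
                    for _ in range(n_out)]

        self.w_hidden = matrix(N_HIDDEN, N_INPUT)
        self.b_hidden = [0.0] * N_HIDDEN
        self.w_output = matrix(N_OUTPUT, N_HIDDEN)
        self.b_output = [0.0] * N_OUTPUT

    def classify(self, features: Sequence[float]) -> Tuple[int, float]:
        """Return (digit, confidence) for one 198-element feature vector."""
        if len(features) != N_INPUT:
            raise ValueError("expected %d features" % N_INPUT)
        hidden = layer(features, self.w_hidden, self.b_hidden)
        outputs = layer(hidden, self.w_output, self.b_output)
        digit = max(range(N_OUTPUT), key=outputs.__getitem__)
        return digit, outputs[digit]


def pick_best(mlp: DigitMLP, candidates: List[Sequence[float]],
              keep: int = 2) -> List[Tuple[int, float]]:
    """Classify every candidate and keep the most confident one or two."""
    scored = [mlp.classify(features) for features in candidates]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)[:keep]
```

Ranking by the strongest output activation is one plausible way to choose "one or two regions"; a rejection threshold on the confidence would be a natural extension.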

4 EXPERIMENTAL RESULTS

Our experimental environment consists of a notebook with a 2 GHz Intel Pentium processor and 1 GB of RAM, running Visual C++ 6.0 under Windows XP. Using the system configuration in Figure 2, we captured and tested many video scenes. We obtained an exit digit recognition rate of over 90%.

5 CONCLUSIONS

This paper presented an approach for detecting exit numbers to enhance the safety and mobility of blind people walking around a subway station. An image-based technique has been developed to detect the isolated number pattern at the crossings. The presence of an exit number is inferred by careful analysis of numeral width, height, ratio, and number of numerals, as well as bandwidth trend. When several numeral candidates remain, we apply the OCR function to them. The proposed technique was found to perform with good accuracy. Future work will focus on new methods for extracting all kinds of text characters with higher accuracy and on the development of a full demonstration system.

ACKNOWLEDGEMENTS

This research was supported by the Converging Research Center Program through the National Research Foundation of Korea (NRF) funded by the Ministry of Education, Science and Technology (2009-0082293).

REFERENCES

A. Hub, T. Hartter, and T. Ertl, “Interactive Tracking of Movable Objects for the Blind on the Basis of Environment Models and Perception-Oriented Object Recognition Methods”, 2006.

A. Zandifar, R. Duraiswami, A. Chahine, and L. Davis, “A Video Based Interface to Textual Information for the Visually Impaired”, IEEE 4th ICMI, 2002, pp. 325-330.

B. Thylefors, A. D. Negrel, R. Pararajasegaram, and K. Y. Dadzie, “Global data on blindness”, Bull. WHO, vol. 73, no. 1, pp. 115-121, Jan. 1995.

“Blindness and visual disability: Seeing ahead projections into the next century”, WHO Fact Sheet No. 146, 1997.

C. A. Shingledecker and E. Foulke, “A human factor approach to the assessment of mobility of blind pedestrians”, Hum. Factors, vol. 20, no. 3, pp. 273-286, Jun. 1978.

A. Hub, J. Diepstraten, and T. Ertl, “Design and Development of an Indoor Navigation and Object Identification System for the Blind”, Proceedings of the ACM SIGACCESS Conference on Computers and Accessibility, Atlanta, GA, USA, Designing for Accessibility, 2004, pp. 147-152.

K. Matsuo, K. Ueda, and M. Umeda, “Extraction of Character String from Scene Image by Binarizing Local Target Area”, T-IEE Japan, Vol. 122-C(2), 2002, pp. 232-241.

Kye Kyung Kim, Yun Koo Chung, and C. Y. Suen, “Post-processing scheme for improving recognition performance of touching handwritten numeral strings”, 16th International Conference on Pattern Recognition, Volume 3, 2002, pp. 327-330.

L. Gu, N. Tanaka, T. Kaneko, and R. M. Haralick, “The Extraction of Characters from Cover Images Using Mathematical Morphology”, IEICE Japan, D-II, Vol. J80, No. 10, 1997, pp. 2696-2704.

“OpenCV 1.0, Open Source Computer Vision Library”, http://www.intel.com/technology/computing/opencv/, 2006.

R. H. Whitestock, L. Frank, and R. Haneline, “Dog guides”, in Foundations of Orientation and Mobility, B. B. Blasch and W. R. Weiner, Eds. New York: Amer. Foundation for the Blind, 1997.

T. Yamaguchi, Y. Nakano, M. Maruyama, H. Miyao, and T. Hananoi, “Digit Classification on Signboards for Telephone Number Recognition”, Proc. of the ICDAR, 2003, pp. 359-363.

Y. Liu, T. Yamamura, N. Ohnishi, and N. Sugie, “Extraction of Character String Regions from a Scene Image”, IEICE Japan, D-II, Vol. J81, No. 4, 1998, pp. 641-650.
