INTERPRETING EXTREME LEARNING MACHINE AS AN

APPROXIMATION TO AN INFINITE NEURAL NETWORK

Elina Parviainen, Jaakko Riihim¨aki

Dept. of Biomedical Engineering and Computational Science (BECS)

Aalto University School of Science and Technology, Aalto, Finland

Yoan Miche, Amaury Lendasse

Dept. of Information and Computer Science (ICS)

Aalto University School of Science and Technology, Aalto, Finland

Keywords:

Extreme learning machine (ELM), Neural network kernel.

Abstract:

Extreme Learning Machine (ELM) is a neural network architecture in which hidden layer weights are ran-

domly chosen and output layer weights determined analytically. We interpret ELM as an approximation to

a network with infinite number of hidden units. The operation of the infinite network is captured by neural

network kernel (NNK). We compare ELM and NNK both as part of a kernel method and in neural network

context. Insights gained from this analysis lead us to strongly recommend model selection also on the variance

of ELM hidden layer weights, and not only on the number of hidden units, as is usually done with ELM. We

also discuss some properties of ELM, which may have been too strongly interpreted in previous works.

1 INTRODUCTION

Extreme Learning Machine (Huang et al., 2006)

(ELM) is a currently popular neural network architec-

ture based on random projections. It has one hidden

layer with random weights, and an output layer whose

weights are determined analytically. Both training

and prediction are fast compared with many other

nonlinear methods.

In this work we point out that ELM, although in-

troduced as a fast method for training a neural net-

work, is in some sense closer to a kernel method in its

operation. A fully trained neural network has learned

a mapping such that the weights contain information

about the training data. ELM uses a fixed mapping

from data to feature space. This is similar to a ker-

nel method, except that instead of some theoretically

derived kernel, the mapping ELM uses is random.

Therefore, the individual weights of the ELM hidden

layer have little meaning, and essential information

about the weights is captured by their variance.

This thought is met also in derivation of the neu-

ral network kernel (NNK) (Williams, 1998), which is

widely used in Gaussian process prediction. The net-

work in the derivation has infinite number of hidden

units, and when the weights are integrated out, the re-

sulting function is parameterized in terms of weight

variance.

We interpret ELM as an approximation to this in-

finite neural network. This leads us to study con-

nections between NNK and ELM from two opposite

points of view.

On the one hand, we can use ELM hidden layer

to compute a kernel, and use it in any kernel method.

This idea has been suggested for Support Vector Ma-

chines (SVM) in (Fr´enay and Verleysen, 2010), which

has been the main inspiration for our work. We try out

the same idea in Gaussian process classification.

On the other hand, we can use NNK to replace the

hidden layer computations in ELM. This is done by

first computing a similarity-based representation for

data points using NNK, and then deriving a possible

set of explicit feature space vectors by matrix decom-

position. This corresponds to using ELM with an in-

finite number of hidden units.

Our experiments show that the theoretically de-

rived NNK can replace ELM when ELM is used as

a kernel. NNK can also perform computations of

the ELM hidden layer, albeit at higher computational

cost. An infinite network performing equally well or

often better than ELM raises a question about mean-

ingfulness of choosing model complexity based on

65

Parviainen E., Riihimäki J., Miche Y. and Lendasse A..

INTERPRETING EXTREME LEARNING MACHINE AS AN APPROXIMATION TO AN INFINITE NEURAL NETWORK.

DOI: 10.5220/0003071100650073

In Proceedings of the International Conference on Knowledge Discovery and Information Retrieval (KDIR-2010), pages 65-73

ISBN: 978-989-8425-28-7

Copyright

c

2010 SCITEPRESS (Science and Technology Publications, Lda.)

hidden units only, as is traditionally done with ELM.

We argue, and support the argument by experiments,

that the variance of hidden layer weights is an im-

portant tuning parameter of ELM. Model selection of

weight variance should therefore be considered.

We first present in Section 2 some tools (ELM,

NNK, data sets) which we will need in subsequent

sections. Section 3 discusses similarities and differ-

ences between fully trained neural networks, ELM,

and kernel methods, and reports the experiments with

ELM kernel and computing ELM hidden layer results

using NNK. Results of the experiments and properties

of ELM are commented in length in Section 4.

2 METHODS

2.1 Extreme Learning Machine (ELM)

The following, including Algorithm 1, is an abridged

and slightly modified version of ELM introduction in

(Miche et al., 2010).

The ELM algorithm was originally proposed

in (Huang et al., 2006) and it makes use of the Sin-

gle Layer Feedforward Neural Network (SLFN). The

main concept behind the ELM lies in the random

choice of the SLFN hidden layer weights and biases.

The output weights are determined analytically, thus

the network is obtained with very few steps and with

low computational cost.

Consider a set of N distinct samples (x

i

,y

i

) with

x

i

∈ R

d

1

and y

i

∈ R

d

2

; then, a SLFN with H hidden

units is modeled as the following sum

H

∑

i=1

β

i

f(w

i

x

j

+ b

i

), j ∈ [1, N], (1)

with f being the activation function, w

i

the input

weights, b

i

the biases and β

i

the output weights.

In the case where the SLFN perfectly approxi-

mates the data, the errors between the estimated out-

puts

ˆ

y

i

and the actual outputs y

i

are zero and the rela-

tion is

H

∑

i=1

β

i

f(w

i

x

j

+ b

i

) = y

j

, j ∈ [1, N], (2)

which writes compactly as Hβ = Y, with

H =

f(w

1

x

1

+ b

1

) ··· f(w

H

x

1

+ b

H

)

.

.

.

.

.

.

.

.

.

f(w

1

x

N

+ b

1

) ··· f(w

H

x

N

+ b

H

)

,

(3)

and β = (β

T

1

...β

T

H

)

T

and Y = (y

T

1

...y

T

N

)

T

.

Theorem 2.1 of (Huang et al., 2006) states that

with randomly initialized input weights and biases for

the SLFN, and under the condition that the activation

function is infinitely differentiable, then the hidden

layer output matrix can be determined and will pro-

vide an approximation of the target values as good as

wished (non-zero training error).

The way to calculate the output weights β from the

knowledge of the hidden layer output matrix H and

target values, is proposed with the use of a Moore-

Penrose generalized inverse of the matrix H, denoted

as H

†

(Rao and Mitra, 1972). Overall, the ELM algo-

rithm is summarized as Algorithm 1.

Algorithm 1: ELM.

Given a training set (x

i

,y

i

),x

i

∈ R

d

1

,y

i

∈ R

d

2

, an ac-

tivation function f : R 7→ R and the number of hidden

nodes H.

1: - Randomly assign input weights w

i

and biases

b

i

, i ∈ [1, H];

2: - Calculate the hidden layer output matrix H;

3: - Calculate output weights matrix β = H

†

Y.

Number of hidden units is an important parameter

for ELM, and should be chosen with care. The se-

lection can be done for example by cross-validation,

information criteria or starting with a large number

and pruning the network (Miche et al., 2010).

2.2 Neural Network Kernel

Neural network kernel is derived in (Williams, 1998)

by letting the number of hidden units go to infinity. A

Gaussian prior is set to hidden layer weights, which

are then integrated out. The only parameters remain-

ing after the integration are variances for weights.

This leads to an analytical expression for expected co-

variance between two feature space vectors,

k

NN

(x

i

,x

j

) =

2

π

sin

−1

2˜x

T

i

Σ˜x

j

q

(1+ 2˜x

T

i

Σ˜x

i

)(1+ 2˜x

T

j

Σ˜x

j

)

.

(4)

Above, ˜x

i

= [1 x

i

] is an augmented input vector and

Σ is a diagonal matrix with variances of inputs. In

this work all variances are assumed equal, for closer

correspondence with ELM.

NNK also arises as a special case of a more gen-

eral arc-cosine kernel (Cho and Saul, 2009). NNK is

not to be confused with tanh-kernel tanh((x

i

·x

j

)+ b),

which is often also called MLP kernel (from Multi-

Layer Perceptron).

KDIR 2010 - International Conference on Knowledge Discovery and Information Retrieval

66

2.3 Data Sets and Experiments

We perform two experiments. In ”GP experiment”

ELM kernel is used in a Gaussian Process classi-

fier (Rasmussen and Williams, 2006). Results as

function of H are compared with results of neural

network kernel. In ”ELM experiment” ELM clas-

sification accuracy (as function of number of hid-

den units) is compared to accuracy got by replac-

ing ELM hidden layer with NNK. The latter variant

of ELM is from now on referred to as NNK-ELM.

The comparison is done for five different variances

(σ ∈ {0.1,0.325,0.55, 0.775, 1}).

The GP experiment is implemented by modifying

gpstuff

toolbox

1

. Expectation propagation (Minka,

2001) is used for Gaussian process inference. The

ELM experiment uses the authors’ matlab implemen-

tation.

We use six data sets from UCI machine learning

repository (Asuncion and Newman, 2007). They are

described in Table 1. For representative results, the

data sets were chosen to have different sample sizes

and different dimensionalities.

Table 1: Data sets used in the experiments.

name # samples # dims data types

Arcene

†

200 10000 cont.

US votes 435 16 bin.

WDBC 569 30 cont.

Pima 768 8 cont.

Tic Tac Toe 958 27 categ.

Internet ads 2359 1558 cont., bin.

†

(Guyon et al., 2004)

For ELM experiment the data is scaled to range

[−1,1]. Each data set is divided into 10 parts. Nine

parts are used for training and one for testing, repeat-

ing this 10 times. This variation from data is shown in

figures. ELM results have another source of variation,

the random weights. This is handled by repeating the

runs 10 times, each time drawing random weights,

and averaging over results. Maximum number of hid-

den units for ELM experiment is 250, to make sure to

cover the sensible operating range of ELM (up to N

hidden units) for all data sets.

In the GP experiment, we are more interested in

ELM behavior as the function of hidden units than the

prediction accuracy. We therefore only consider vari-

ation from random weights, drawing 30 repetitions of

random weights. The data is split into train and test

sets (50 % / 50 %). Zero-mean unit-variance normal-

ization is used for the data.

1

http://www.lce.hut.fi/research/mm/gpstuff/

ELM places few restrictions to the activation of

hidden units. In this work we use the error function

Erf(z) = 2/

√

π

R

z

0

exp−t

2

, since it is the sigmoid used

in the derivation of NNK. For the same reason we use

Gaussian distribution for weights, instead of the more

usual uniform. ELM only requires the distribution to

be continuous.

3 ANALYSIS OF ELM

Essential property of a fully trained neural network is

its ability to learn features on data. Features extracted

by the network should be good for predicting the tar-

get variable of a classification/regression task.

In a network with one hidden and one output layer,

the hidden layer learns the features, while the out-

put layer learns a linear mapping. We can think

of this as first non-linearly mapping the data into a

feature space and then performing a linear regres-

sion/classification in that space.

ELM has no feature learning ability. It projects the

input data into whatever feature space the randomly

chosen weights happen to specify, and learns a lin-

ear mapping in that space. Parameters affecting the

feature space representation of a data point are type

and number of neurons, and the variance of hidden

layer weights. Training data can affect these parame-

ters through model selection, but not directly through

any training procedure.

This is similar to what a support vector machine

does. A feature space representation for a data point

is derived, using a kernel function with a few param-

eters, which are typically chosen by some model se-

lection routine. Features are not learned from data,

but dictated by the kernel. Weights for linear classi-

fication or regression are then learned in the feature

space. The biggest difference is that where ELM ex-

plicitly generates the feature space vectors, in SVM or

another kernel method only similarities between fea-

ture space vectors are used.

3.1 ELM Kernel

Authors of (Fr´enay and Verleysen, 2010) propose us-

ing ELM hidden layer to form a kernel to be used in

SVM classification. They define ELM kernel function

as

k

ELM

(x

i

,x

j

) =

1

H

f(x

i

) · f(x

j

), (5)

that is, the data is fed trough the ELM hidden layer to

obtain the feature space vectors, and their covariance

is then computed and scaled by the number of hidden

units.

INTERPRETING EXTREME LEARNING MACHINE AS AN APPROXIMATION TO AN INFINITE NEURAL

NETWORK

67

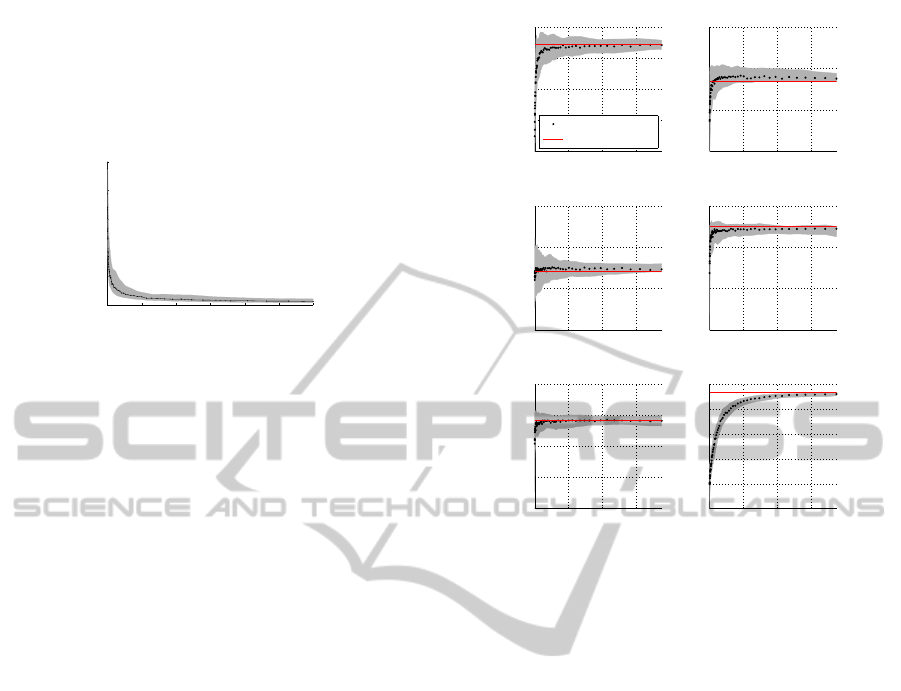

When the number of hidden units grows, this ker-

nel matrix approaches that given by NNK. Figure 1

shows the approach, measured by Frobenius norm, as

function of H. Especially for small H the ELM kernel

varies due to random weights, but it clearly converges

towards NNK.

0 1000 2000 3000 4000 5000 6000

0

20

40

60

80

100

#hidden

||K

ELM

−K

NNK

||

F

Figure 1: ELM kernel (K

ELM

) approaches neural network

kernel (K

NN

) in Frobenius norm when number of hidden

unit grows. Mean by black dots, 95% interval by shading.

Variation is caused by randomness in weights. WDBC data

set.

Results of GP experiment are shown in Fig-

ure 2. ELM kernel behavior in GP seems qualita-

tively similar to that observed in (Fr´enay and Verley-

sen, 2010) for SVM. The classification accuracy first

rises rapidly and then sets as a fixed level. Variation

due to random weights remains, but NNK result stays

inside the 95% interval of ELM.

3.2 NNK Replacing ELM Hidden Layer

3.2.1 Derivation

When using ELM, we only deal with vectorial data,

with data space vectors transformed into feature space

vectors by the hidden layer. Kernel methods rely on

pairwise data, where only similarities from any point

to all training points are considered. Kernel matrix

specifies the pairwise similarities. In order to use pair-

wise information from the NNK instead of ELM hid-

den layer, we must find a vectorial representation for

the data.

The idea of recovering points given their mutual

relationships is old (Young and Householder, 1938),

and is the basis of multidimensional scaling (Torg-

erson, 1952). Multidimensional scaling is used in

psychometry for handling results of pairwise compar-

isons, and more generally as a dimension reduction

method. When the data arises from real pairwise com-

parisons, like in psychometry, there is no guarantee

of structure of the similarity matrix. In our case, on

the contrary, the structure is known: NKK is derived

as covariance, and is therefore positive semidefinite

(PSD).

Any PSD matrix can be decomposed into a matrix

500 1000 1500

60

65

70

75

80

#hidden

correct %

TicTacToe

ELM−kernel/GP

NN−kernel/GP

500 1000 1500

85

90

95

100

#hidden

correct %

USvote

500 1000 1500

50

60

70

80

#hidden

correct %

Arsene

500 1000 1500

85

90

95

100

#hidden

correct %

WDBC

500 1000 1500

60

65

70

75

80

#hidden

correct %

Pima

500 1000 1500

50

60

70

80

90

100

#hidden

correct %

Internet Ad

Figure 2: GP classification accuracy when using ELM ker-

nel (black dots and shading) versus NKK accuracy (hori-

zontal line).

and its Hermitian conjugate

C = LL

H

. (6)

There are different methods for finding the factors

(Golub and Van Loan, 1996). Matlab

cholcov

im-

plements a method based on eigendecomposition. If

we take C in (6) to be output of the NNK function

(4), then L can be thought as one possible set of cor-

responding feature space vectors.

We use L to determine the output layer weights

the same way we used H in ELM,

β = L

†

Y. (7)

The factors L are unique only up to a unitary trans-

formation, but this is not a problem in ELM context,

as the linear fitting of output weights is able to adapt

to linear transformations.

With infinite number of hidden units, the feature

space is infinite-dimensional. Meanwhile, the data

we have available is finite, and the n data points can

span at most n-dimensional subspace. Thus the max-

imum size of L is n ×n; the number of columns can

be smaller if the data has linear dependencies.

If C is positive definite or close to it, a triangu-

lar L could be found using Cholesky decomposition,

leading to fast and stable matrix operations when find-

ing the output layer weights. As positive definiteness

KDIR 2010 - International Conference on Knowledge Discovery and Information Retrieval

68

cannot be guaranteed, we use the more general de-

composition for PSD matrices in all cases.

The one remaining problem is the mapping of test

points to the feature space. In ELM, the test data is

simply fed through the hidden layer. In our case, the

hidden layer does not physically exist, and we must

base the calculations on similarities fom test points to

training point, as given by NNK (4). This means that

NNK output for test data C

∗

is covariance matrix of

the form

C

∗

= LL

H

∗

. (8)

We already know the pseudoinverse of L. Therefore

L

∗

is recovered from

L

∗

= (L

†

C

∗

)

H

= (L

†

LL

H

∗

)

H

, (9)

and the predictions for test targets are computed as

Y

∗

= L

∗

β. (10)

3.2.2 ELM Experiment

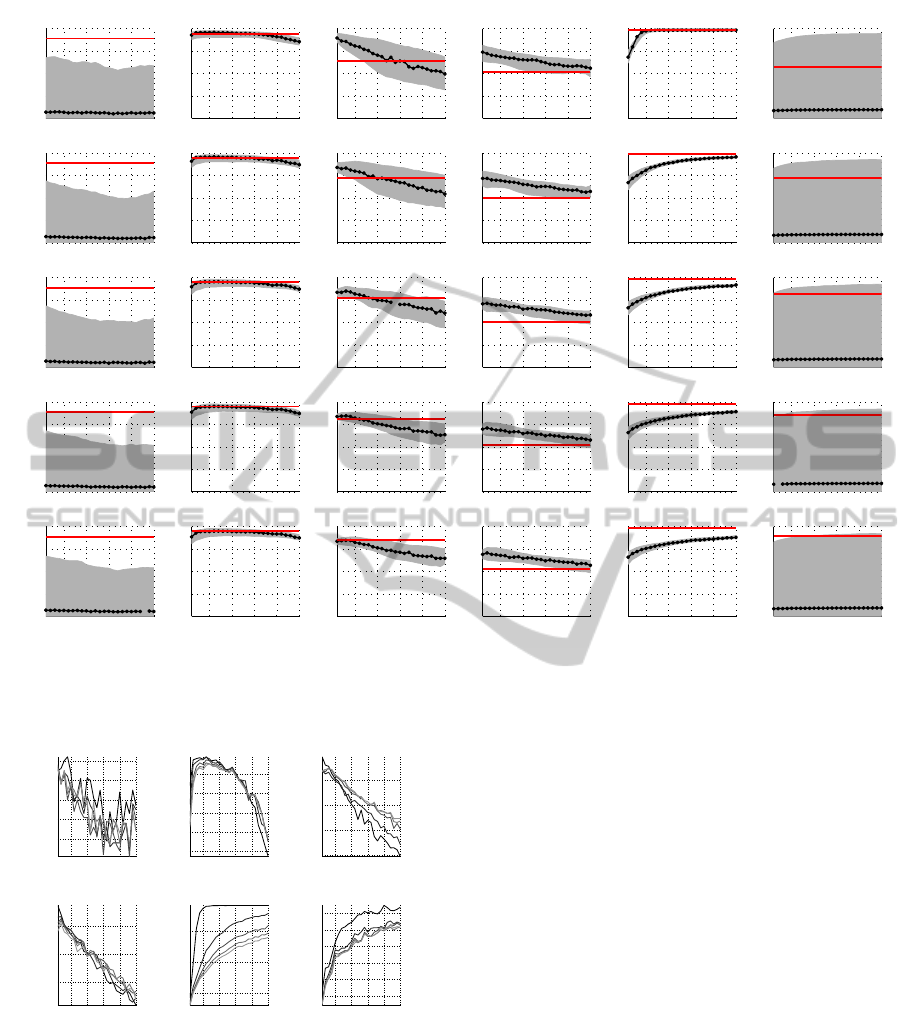

NNK-ELM results are shown in 3, as function of σ.

Results for two data sets are clearly affected by the

variance parameter, others are less sensitive.

0.1 0.3 0.6 0.8 1

0

25

50

75

100

σ

correct %

Arsene

0.1 0.3 0.6 0.8 1

0

25

50

75

100

σ

correct %

USvote

0.1 0.3 0.6 0.8 1

0

25

50

75

100

σ

correct %

WDBC

0.1 0.3 0.6 0.8 1

0

25

50

75

100

σ

correct %

Pima

0.1 0.3 0.6 0.8 1

0

25

50

75

100

σ

correct %

TicTacToe

0.1 0.3 0.6 0.8 1

0

25

50

75

100

σ

correct %

Internet Ad

Figure 3: NNK-ELM results, mean and 95 % interval due

to data variation.

Predictions given by ordinary ELM are shown in

in Figure 4, and mean values for NNK-ELM predic-

tions are included for comparison. We notice that

when the variance is properly chosen, using NNK

gives equal or better results than ELM for most data

sets. Pima data set is an exception, ELM has some

predictive power whereas NNK-ELM performs al-

most at level of guessing.

We also notice that the choice of variance has a

marked effect on two and some effect on other data

sets, both for ordinary ELM and the NNK variant. In

the following section we will look at variance effects

in in more detail and discuss the reasons for impor-

tance of variance.

3.3 Variance of Weights

Importance of variance, or more often the range used

for uniform distribution, is regarded important in

ELM works (e.g. (Miche et al., 2010)). However,

it is not seen as a model parameter, but simply a con-

stant which must be suitably fixed to guarantee that

the sigmoid operation neither remains linear nor too

strongly saturates to ±1.

Variance effects from Figure 4 are summarized in

Figures 5 and 6.

Figure 4 shows the mean predictions of ELM as

function of H. For TicTacToe and WDBC data sets

the predictions are clearly affected by the variance

parameter. For Internet ad data the overall effect of

both H and σ is very small. In that scale, the small-

est variance nonetheless gives results clearly different

than larger values. Results for other data sets are not

very sensitive to the variance values that were tried.

For TicTacToe and Internet ads smaller variance gives

better predictions, for WDBC the biggest one. Clearly

no fixed sigma can be used for all data sets.

Variance also affects the uncertainty of ELM pre-

dictions, as witnessed by Figure 6, where the width

of smoothed 95 % intervals of predictions is shown.

WDBC data set exhibit the strongest effect, followed

by Pima data. All data sets show some effect of vari-

ance. Number of hidden units interacts with effects

of σ, but no general pattern appears. Also direction

of the effect remains unspecified; for four data sets

smaller σ gives larger uncertainty, but the opposite is

true for the rest.

When thinking about the mechanism by which the

variance parameter affects the results, differences be-

tween data sets are to be expected. Variance affects

model complexity, and obviously different models fit

different data sets. Variance and distribution of the

data together determine the magnitude of values seen

by the activation function. The operating point of the

sigmoidal activation determines the flexibility of the

model.

When weights are small, the sigmoid produces

a nearly linear mapping. Large weights result in

a highly non-linear mapping. This is illustrated in

Figure 7. One-dimensional data points, spread over

range [-1,1] (the x-axis), are given random weights

drawn from zero-mean Gaussian distribution and then

fed through an error function sigmoid, repeating this

10000 times. Mean output and 95 % interval are de-

picted. On average, the sigmoid produces a zero re-

sponse, but the distribution of responses is determined

by the variance used. Small variance means mostly

small weights, and linear operation. Large variance

produces many large weights, which increase the pro-

INTERPRETING EXTREME LEARNING MACHINE AS AN APPROXIMATION TO AN INFINITE NEURAL

NETWORK

69

50 100 150 200 250

0

25

50

75

100

σ=0.1

Arsene

50 100 150 200 250

0

25

50

75

100

USvote

50 100 150 200 250

0

25

50

75

100

WDBC

50 100 150 200 250

0

25

50

75

100

Pima

50 100 150 200 250

0

25

50

75

100

TicTacToe

50 100 150 200 250

0

25

50

75

100

Internet Ad

50 100 150 200 250

0

25

50

75

100

σ=0.325

50 100 150 200 250

0

25

50

75

100

50 100 150 200 250

0

25

50

75

100

50 100 150 200 250

0

25

50

75

100

50 100 150 200 250

0

25

50

75

100

50 100 150 200 250

0

25

50

75

100

50 100 150 200 250

0

25

50

75

100

σ=0.55

50 100 150 200 250

0

25

50

75

100

50 100 150 200 250

0

25

50

75

100

50 100 150 200 250

0

25

50

75

100

50 100 150 200 250

0

25

50

75

100

50 100 150 200 250

0

25

50

75

100

50 100 150 200 250

0

25

50

75

100

σ=0.775

50 100 150 200 250

0

25

50

75

100

50 100 150 200 250

0

25

50

75

100

50 100 150 200 250

0

25

50

75

100

50 100 150 200 250

0

25

50

75

100

50 100 150 200 250

0

25

50

75

100

50 100 150 200 250

0

25

50

75

100

#hidden

σ=1

50 100 150 200 250

0

25

50

75

100

#hidden

50 100 150 200 250

0

25

50

75

100

#hidden

50 100 150 200 250

0

25

50

75

100

#hidden

50 100 150 200 250

0

25

50

75

100

#hidden

50 100 150 200 250

0

25

50

75

100

#hidden

Figure 4: ELM results (mean as black dots, 95 % interval as shading) for different values of σ. Mean of NNK results

(horizontal (red) line) are shown for comparison.

50100150200250

0.05

0.055

0.06

0.065

0.07

Arsene

#hidden

correct %, mean

50100150200250

0.86

0.88

0.9

0.92

0.94

USvote

#hidden

correct %, mean

50100150200250

0.5

0.6

0.7

0.8

WDBC

#hidden

correct %, mean

50100150200250

0.6

0.65

0.7

Pima

#hidden

correct %, mean

50100150200250

0.7

0.8

0.9

TicTacToe

#hidden

correct %, mean

50100150200250

0.084

0.086

0.088

0.09

0.092

0.094

Internet Ad

#hidden

correct %, mean

Figure 5: Effect of variance on mean of ELM predictions.

Darker shade indicates smaller variance.

portion of large responses by the network, allowing

nonlinear mappings.

Considering the effect of variance on complexity

of the model, we think that variance should undergo

model selection just as the number of hidden units

does.

4 DISCUSSION

4.1 On Properties of ELM

Authors of (Huang et al., 2006) promote ELM by

speed, dependence on a single tuning parameter,

small training error and good generalization perfor-

mance. These claims have often been repeated by

subsequent authors, but we have not come upon much

discussion of them. Here we present some comments

on these properties.

Training of a single ELM network is fast, provided

the number of hidden units is small. Speed of training

as the whole, however, depends also on the number of

training runs. Model selection may require consider-

able number of repetitions.

First factor to consider is the inherent randomness

of ELM results. If average performance of ELM is to

be assessed, any runs must be repeated several times,

adding to the computational burden.

Complexity of model selection is determined by

the number of tuning parameters, since all sensible

combinations should be considered.

KDIR 2010 - International Conference on Knowledge Discovery and Information Retrieval

70

50 100150200250

0.5

0.55

0.6

0.65

#hidden

correct %, 95%−width

Arsene

50 100150200250

0.06

0.08

0.1

0.12

#hidden

correct %, 95%−width

USvote

50 100150200250

0.1

0.2

0.3

#hidden

correct %, 95%−width

WDBC

50 100150200250

0.12

0.14

0.16

0.18

#hidden

correct %, 95%−width

Pima

50 100150200250

0.04

0.06

0.08

0.1

0.12

0.14

#hidden

correct %, 95%−width

TicTacToe

50 100150200250

0.84

0.86

0.88

0.9

0.92

0.94

#hidden

correct %, 95%−width

Internet Ad

Figure 6: Effect of variance on width of the 95 % interval of

ELM predictions. Darker shade indicates smaller variance.

−1 0 1

−1

0

1

data

erf output

σ = 0.1

−1 0 1

−1

0

1

data

erf output

σ = 0.325

−1 0 1

−1

0

1

data

erf output

σ = 0.55

−1 0 1

−1

0

1

data

erf output

σ = 0.775

−1 0 1

−1

0

1

data

erf output

σ = 1

Figure 7: Distributions of predictions of an error function

sigmoid for different σ.

First parameter is the number of hidden units. The

only theoretically motivated upper limit for the num-

ber of hidden units to try is N (which is enough for

zero training error). At that limit, computing pseu-

doinverse corresponds to ordinary inversion of an

N ×N matrix, with a complexity of O(N

3

). In prac-

tice, smaller upper limits are used.

Traditionally, somewhat arbitrary fixed values

have been used for weight variance, and model selec-

tion has only considered the number of hidden units.

Our results show that also the weight variance has a

noticeable effect on results, and should thus be con-

sidered a tuning parameter.

Generally, small training error and good general-

ization may be contradictory goals. ELM is proved

(Huang et al., 2006) to be able to reproduce the train-

ing data exactly if the number of hidden units equals

or exceeds the number of data points. This behavior,

though important in proving computational power of

ELM, is usually not desirable in modeling. A model

should generalize, not exactly memorize the training

data. This view is indirectly acknowledged in practi-

cal ELM work, where the number of hidden units is

much smaller than N. This may preventELM network

from overfitting to the training data, a factor usually

not discussed in ELM literature.

Generalization ability of ELM is attributed to the

fact that computing output layer weights by pseudoin-

verse achieves a minimum norm solution. Generaliza-

tion ability of a neural network is in (Bartlett, 1998)

shown to relate to small norm of weights. However,

Bartlett’s work considers the neural network as whole,

not only the output layer. Although ELM minimizes

the norm of output layer weights, norm of the hidden

layer weights depends on the variance parameter, and

does not change in ELM training.

In the hidden layer, generalization ability is re-

lated to the operating point of hidden unit activations,

discussed in Section 3.3. A model with small hidden

layer weights is nearly linear, and generalizes well. A

highly non-linear model, produced by large weights,

is more prone to overfitting. Therefore, conclusions

about generalization ability of ELM should not be

based on the output weights only.

4.2 ELM as a Kernel

Use of ELM as a kernel, at least in a Gaussian pro-

cess classifier, is likely to remain a curiosity. Clas-

sification performance seems to steadily increase as

the number of hidden units grows, and, when consid-

ering the variation caused by ELM randomness, the

performance does not exceed that of NNK. When an

easy-to-compute, theoretically derived NNK function

is available, we see no reason to favor a heuristical

kernel, computation of which requires generating and

storing randomnumbers and an explicitnonlinear fea-

ture space mapping.

4.3 NNK as ELM Hidden Layer

We introduced NNK-ELM as a way for studying the

effect of infinite hidden units in ELM, but it can also

find its use as a practical method.

Computational complexity of NNK-ELM corre-

sponds to that of N-hidden unit ELM. The matrix de-

composition required in NNK-ELM scales as O(N

3

).

ELM runs much faster than that, since the optimal

number of hidden units is usually much less than N.

However, the optimal value is usually found by model

selection, necessitating several ELM runs. If the se-

lection procedure also considers large ELM networks,

choosing ELM over NNK-ELM does not necessar-

ily save time. Prediction, on the other hand, is much

faster with ELM, if H ≪ N.

Furthermore, NNK-ELM has only one tuning pa-

rameter, the variance of hidden layer weights. Oppos-

ing the popular view, we argue that also ELM model

INTERPRETING EXTREME LEARNING MACHINE AS AN APPROXIMATION TO AN INFINITE NEURAL

NETWORK

71

selection should consider weight variance as parame-

ter. If the number of data points is reasonably small,

NNK-ELM can thus result in considerable time sav-

ings when doing model selection. A factor adding to

this is that, unlike ELM, NNK-ELM gives determin-

istic results, and only requires repetitions if variability

due to training data is considered.

NNK-ELM can also naturally deal with non-

standard data. NNK corresponds to an infinite net-

work with error function sigmoids in hidden units.

If a Gaussian kernel was used instead, the compu-

tation would imitate an infinite radial basis function

network. Dropping the neural network interpretation,

any positive semidefinite matrix can be used. This

leaves us with the idea of using a kernel for nonlin-

ear mapping, then returning to a vectorial represen-

tation of points, and applying a classical algorithm

(as opposed to an inner-product formulation of algo-

rithms needed for kernel methods). In the case of

NNK-ELM, the algorithm is a simple linear regres-

sion, but the same idea could be used with arbitrary al-

gorithms. This can serve as a way of applying classi-

cal, difficult-to-kernelize algorithms to non-standard

data (like graphs or strings), for which kernels are de-

fined.

4.4 Future Directions

When ELM is considered as an approximation of an

infinite network, it becomes obvious that the vari-

ance of hidden layer weights is more important than

the weights themselves. It should undergo rigorous

model selection, as any other parameter. Also lessons

already learned from other neural network architec-

tures, like the effect of weight variance on the oper-

ating point of sigmoids, should be kept in mind when

determining future directions for ELM development.

Questions about correct number and behavior of

hidden units in ELM remain open. If the hidden units

do not learn anything, what is their meaning in the

network? Do they have a role besides increasing the

variance of output?

If we fix the data x and draw weights w

i

(includ-

ing the bias) randomly and independently, then also

the hidden layer outputs a

i

= f(w

i

x) are independent

random variables. They are combined into model out-

put as b =

∑

H

i=1

β

i

a

i

.

Variance of b is related to number and variance

of a

i

. This is seen by remembering that, for in-

dependent random variables F and G, Var[F+ G] =

Var[F] + Var[G]. The more hidden units we use, the

larger the variance of the model output.

Training of the output layer has opposite effect on

variance. Output weights are not random, so vari-

ance of the model output b is related to that of a

i

by rule Var[cF] = c

2

Var[F] (where c is a constant).

That is, variance of b is formed as a weighted sum of

variances of a

i

. The weights β

i

’s are chosen to have

minimal norm. Although minimizing the norm does

not guarantee minimal variance, minimum norm esti-

mators partially minimize the variance as well (Rao,

1972). Therefore, choice of output weights tends to

cancel the variance-increasing effect of hidden units.

We have recognized the importance of variance,

yet the roles and interactions of weight variance, num-

ber of hidden units (increases variance) and determi-

nation of output weights (decreases variance) are not

clear, at least to the authors. If we are to understand

how and why ELM works, the role of variance needs

further study.

REFERENCES

Asuncion, A. and Newman, D. (2007). UCI machine learn-

ing repository.

Bartlett, P. L. (1998). The sample complexity of pattern

classification with neural networks: the size of the

weights is more important than the size of the net-

work. IEEE Transactions on Information Theory,

44(2):525–536.

Cho, Y. and Saul, L. K. (2009). Kernel methods for deep

learning. In Bengio, Y., Schuurmans, D., Lafferty, J.,

Williams, C., and Culotta, A., editors, Proc. of NIPS,

volume 22, pages 342–350.

Fr´enay, B. and Verleysen, M. (2010). Using SVMs with

randomised feature spaces: an extreme learning ap-

proach. In Proc. of ESANN, pages 315–320.

Golub, G. H. and Van Loan, C. F. (1996). Matrix computa-

tions. The Johns Hopkins University Press.

Guyon, I., Gunn, S. R., Ben-Hur, A., and Dror, G. (2004).

Result analysis of the nips 2003 feature selection chal-

lenge. In Proc. of NIPS.

Huang, G.-B., Zhu, Q.-Y., and Siew, C.-K. (2006). Extreme

learning machine: Theory and applications. Neuro-

computing, 70:489–501.

Miche, Y., Sorjamaa, A., Bas, P., Simula, O., Jutten, C., and

Lendasse, A. (2010). OP-ELM: Optimally pruned ex-

treme learning machine. IEEE Transactions on Neural

Networks, 21(1):158–162.

Minka, T. (2001). Expectation propagation for approximate

bayesian inference. In Proc. of UAI.

Rao, C. R. (1972). Estimation of variance and covariance

components in linear model. Journal of the American

Statistical Association, 67(337):112–115.

Rao, C. R. and Mitra, S. K. (1972). Generalized Inverse of

Matrices and Its Applications. Wiley.

Rasmussen, C. E. and Williams, C. K. I. (2006). Gaussian

processes for machine learning. MIT Press.

KDIR 2010 - International Conference on Knowledge Discovery and Information Retrieval

72

Torgerson, W. S. (1952). Multidimensional scaling: I. the-

ory and method. Psychometrika, 17(4):401–419.

Williams, C. K. I. (1998). Computation with infinite neural

networks. Neural Computation, 10:1203–1216.

Young, G. and Householder, A. S. (1938). Discussion of a

set of points in terms of their mutual distances. Psy-

chometrika, 3(1):19–22.

INTERPRETING EXTREME LEARNING MACHINE AS AN APPROXIMATION TO AN INFINITE NEURAL

NETWORK

73