USE OF COMPUTATIONAL INTELLIGENCE AND VISION

IN THE STUDY OF SELECTIVE ATTENTION

OF CONGENITAL BLIND CHILDREN

Kim Bins, Felipe Pulcherio, Maria M. D. Poyares, Eloisa Saboya

Neurolab, Instituto Benjamin Constant, Rio de Janeiro, Brazil

Carla Verônica M. Marques, Carlo Oliveira E. S. de Oliveira

Faculdade de Medicina, UFRJ, NCE - UFRJ, Rio de Janeiro, Brazil

Lidiane F. Silva

NCE, UFRJ, Rio de Janeiro, Brazil

Keywords: Congenital blindness, Neuropsychological tests, Selective attention, Computational intelligence, Orange

canvas.

Abstract: The Cognitive Assessment System (CAS) of Das and Naglieri was translated and adapted by the Laboratory of Neuropsychology at Instituto Benjamin Constant to study cognition in congenitally blind children and to understand the peculiarities of cognitive development in the absence of vision. We focus on the Expressive Attention subtest, presenting its adaptation and manual application for the visually impaired. This subtest assesses selective attention and may identify whether a child has difficulty in this cognitive process. The sample consisted of 64 congenitally blind students of the Benjamin Constant Specialized School, of whom 21 performed the test. Data obtained during the application were grouped and analyzed with the Orange Canvas artificial intelligence laboratory, which helped define the attention profiles of the sample. In the future, these profiles may support new pedagogical techniques for a better development of these children's cognition. We also present the automated adaptation being developed by the Group for Information Technology Applied to Education at the Electronic Computer Center of the Federal University of Rio de Janeiro, based on the "Geometrix" system. The proposal is that this software will evaluate the attention of visually impaired people more quickly and efficiently, be more faithful to the original subtest, and also perform the statistical analysis of the data, generating profiles.

1 INTRODUCTION

Currently, the study of cognition in congenitally blind children is little discussed in scientific research in Brazil. However, this area is very important for understanding how the cognition of the visually impaired may differ.

According to Seminério (1984), the human

species has two morphogenetic channels through

which human beings are capable of developing the

structured processes of their knowledge, which are:

the visual-motor channel and the phonetic-audio

channel. Each of these channels has an afferent-

perceptual way (vision and hearing) and an efferent-

motor way (motor action and motor production of

the phonemes of speech) that are interconnected and

communicate through feedback. From this theory, it is inferred that the visually impaired rely predominantly on the phonetic-audio channel and therefore have a cognition different from that of sighted people, as well as a different way of structuring their cognitive processes.

The education given to the visually impaired at all grade levels uses teaching techniques based on the cognition of sighted children. Thus, visually impaired children are not stimulated properly, which could affect their cognitive development.

Bins K., Pulcherio F., Poyares M., Saboya E., M. Marques C., Oliveira E. S. de Oliveira C. and Silva L.
USE OF COMPUTATIONAL INTELLIGENCE AND VISION IN THE STUDY OF SELECTIVE ATTENTION OF CONGENITAL BLIND CHILDREN.
DOI: 10.5220/0003128704930499
In Proceedings of the International Conference on Health Informatics (HEALTHINF-2011), pages 493-499
ISBN: 978-989-8425-34-8
Copyright © 2011 SCITEPRESS (Science and Technology Publications, Lda.)

The Laboratory of Neuropsychology at the Instituto Benjamin Constant translated and adapted the CAS (Cognitive Assessment System) battery of neuropsychological tests in order to study the cognition of the blind, understand these differences and, in the future, create new techniques for teaching them.

The Cognitive Assessment System (CAS) was developed by Das and Naglieri (1997) based on the PASS-Luria theory, which states that cognition can be measured through four processes: planning, attention, simultaneous processing, and successive processing. The CAS has several subtests designed to measure these cognitive processes and to relate them to conditions that may affect cognition, such as mental retardation, learning disabilities, Attention Deficit Hyperactivity Disorder (ADHD), planning problems, emotional problems and serious head injury, or to identify whether the child is gifted.

In this work we present the adaptation and application of the Expressive Attention subtest in order to assess the cognitive process of attention in congenitally blind children. This subtest assesses selective attention, i.e. "the ability to selectively focus on one stimulus while inhibiting conflicting responses to stimuli presented over time" (Das & Naglieri, 1997). Selective attention is likewise at work whenever we choose to attend to one stimulus over another (Cohen, 2003; Duncan, 1999). The subtest evaluates this process through the Stroop effect, which refers to the dominance that reading has over the naming of colours in literate people. According to Sternberg (2008), once reading becomes an automatic process it is no longer under our conscious control, which is why we find it difficult to stop reading the written word and concentrate on identifying the ink colour.

During the application of the subtest, notes were taken on the answer sheet, and the data obtained were analyzed statistically with the Orange Canvas intelligence laboratory. Orange is a machine learning and data mining suite built from a library of core objects and from routines (sets of programming instructions designed to perform a specific task); it implements functionality for which execution time is not critical. With this program, we clustered the data inherent to the adapted subtest together with the prognoses obtained from behavioural analysis of the children. This clustering helped us define the attention profiles of the sample.

In the manual adaptation of the Expressive Attention subtest, it was not possible to present the stimuli (the Braille and the textures) simultaneously. To adapt this subtest more faithfully, with simultaneous stimuli, the software EXAT (Expressive Attention) is being finalized by GINAPE/NCE-UFRJ; it should also make the application faster and more efficient. This software is based on the technology of "Geometrix", which was developed in Python (an interpreted language, so the code does not need to be compiled before it is executed) and is used to teach geometric concepts interactively to blind students. Geometrix captures through a webcam the finger movements of the blind student on a board with (X, Y) coordinates, on which the student traces figures by running a finger around rubber shapes. It shows on the computer screen what the student drew and also announces it with a synthesized voice.

The "EXAT" software will use these Geometrix principles adapted to the needs of the Expressive Attention subtest, namely interaction, recognition of colours by texture and immediate feedback to the child. We propose to automate the entire manual process of the Expressive Attention subtest for the visually impaired. When the blind child puts a finger on a texture, the system will recognize the texture through the webcam and announce, with a synthesized voice, the name of a conflicting texture. We believe this will give a more precise simultaneity of the conflicting stimuli in this subtest, which will help to assess the selective attention of these children.

2 OBJECTIVE

The objective of this work is to define the attention profiles of a sample of students at Instituto Benjamin Constant (congenitally blind children) using the Expressive Attention subtest of the Cognitive Assessment System (CAS) battery of neuropsychological tests. From the definition of these profiles and the description of their specific characteristics, we intend, in the future, to create neuropedagogical techniques that improve the learning of visually impaired children and make better use of their cognition.

The proposed solution is to use the Orange Canvas program to define the profiles of the students in the manually adapted subtest. In addition, the automation of this subtest is being completed in order to make it easier to use, to provide a more precise simultaneity of stimuli (more faithful to the original), and to perform the statistical analysis that defines the profiles without using other tools.

The intention of this article is to show the importance of studying cognition in the visually impaired and, likewise, to show how computer technology can help these studies: by defining prognoses with Orange Canvas and by making the subtest more convenient to apply once it is automated with the EXAT software. With this automation it will be possible to evaluate children who have not yet mastered the Braille system and then produce an immediate prognosis and guidance for specific interventions.

3 METHODS

3.1 Participants

The sample consisted of 64 congenitally blind children, but only 21 of them (10 male and 11 female) performed the subtest. They were aged between 8 and 13 years, in grades from kindergarten to the 6th grade of elementary school, and attended the Specialized School of Instituto Benjamin Constant in Rio de Janeiro, Brazil. Figure 1 is a conceptual map of the sample data. According to each child's school information sheet, they belong to the lower social class. Among the children who did not take the test, 18 were excluded because they could not read Braille, 20 because they were over the age established for application of the test, and five for non-attendance.

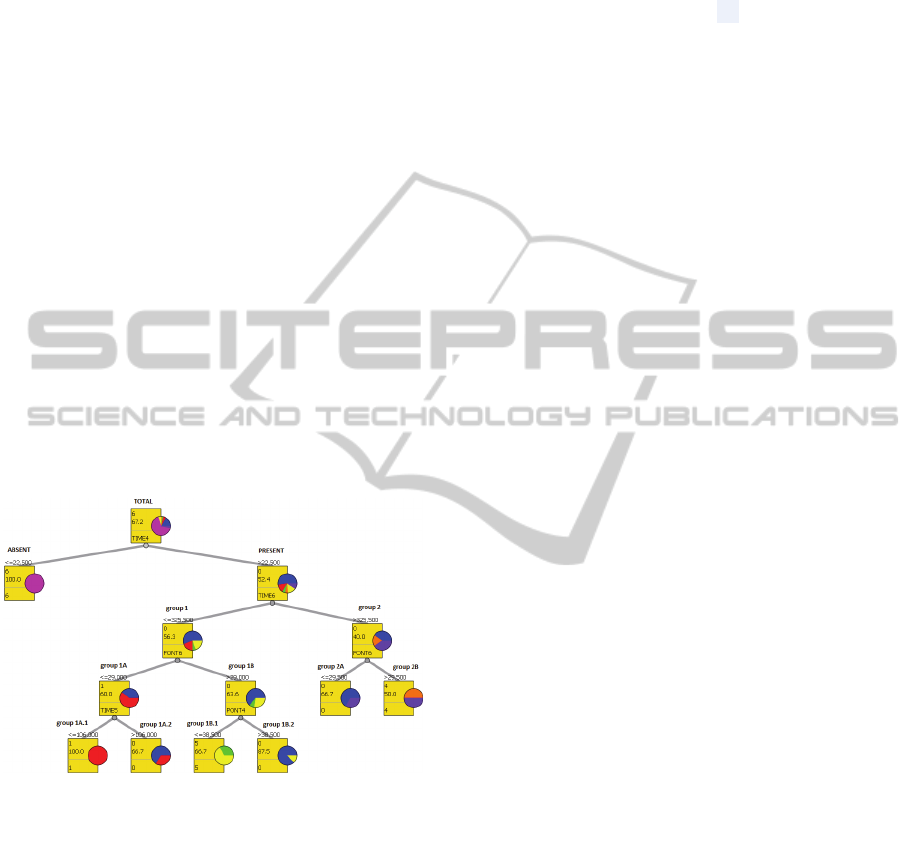

Figure 1: Sample Data.
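The participant counts above can be cross-checked with a few lines of Python. This is only a bookkeeping sketch: the numbers are those reported in this section, while the variable names are ours.

```python
# Participant counts as reported in Section 3.1.
TOTAL_SAMPLE = 64
TESTED = {"male": 10, "female": 11}
EXCLUDED = {
    "could not read Braille": 18,
    "over the age limit": 20,
    "non-attendance": 5,
}

tested_total = sum(TESTED.values())
excluded_total = sum(EXCLUDED.values())

# Tested and excluded children together should account for the whole sample.
assert tested_total + excluded_total == TOTAL_SAMPLE
print(f"tested={tested_total}, excluded={excluded_total}")
```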

3.2 Material

The Expressive Attention subtest for children aged

between 8 and 17 years old was translated into

Portuguese and adapted for blind children, changing

from visual stimuli to tactile stimuli.

The original subtest is a variation of the Stroop test and consists of three parts. The first part has two sheets of paper with the names of the colours blue, yellow, green and red repeated in no fixed order. The first sheet is a short example with only two lines of four colour names each, which lets the child practise the task before doing it. The second sheet has eight lines with five colour names each.

The second part has two sheets with rectangles painted blue, yellow, green and red, again repeated in no fixed order. The first sheet is the example, with two lines of four coloured rectangles each. The second sheet has eight lines with five rectangles each.

The third part has two sheets with the names of the colours blue, yellow, green and red written in coloured ink. However, the ink colour differs from the colour name written: for example, the word blue written in green ink. The first sheet is the example, with two lines of four words each. The second sheet has eight lines with four words each.

The first stage of adaptation was the translation into Portuguese. Then the colours, being visual stimuli, were replaced by textures: blue became SANDPAPER, yellow became FABRIC, red became LACE and green became FELT. The writing in ink was replaced by Braille.

In the first part of the test, the names of colours written in ink were replaced by texture names written in Braille on a board measuring 47.5 cm x 36 cm. The example board was slightly smaller (28 cm x 29 cm) because it had fewer words. The Braille is embossed on a material called thermoform (PVC film); this thermoform page lies over another sheet of paper on which the names of the textures are written in ink (to help the applicator score the test).

The second and third parts of the test, which each fit on one sheet in the original test, had to be divided into two boards measuring 59 cm x 33 cm. This adjustment was needed because the rectangles with the textures had to be large enough for the Braille writing to fit inside them. The textures of the second board were made with thermoform, and the thermoform rectangles were glued onto the paper board over the rectangles coloured in ink. Below each rectangle, the name of its texture is written to help the applicator score the test.

The adaptation of the third part is very similar to the second; the difference is that, within each thermoform texture rectangle, the name of another, conflicting texture is written in Braille. For example, a rectangle with the texture of lace has the word sandpaper written in Braille in the middle.
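The colour-to-texture substitution and the conflicting pairing of the third part can be illustrated with a minimal Python sketch. The mapping is taken from the text; the function `make_conflict_board`, its parameters and the fixed random seed are hypothetical names introduced only for illustration, and the sketch does not reproduce the actual item order of the boards.

```python
import random

# Colour-to-texture substitution reported in the text.
COLOUR_TO_TEXTURE = {
    "blue": "SANDPAPER",
    "yellow": "FABRIC",
    "red": "LACE",
    "green": "FELT",
}
TEXTURES = list(COLOUR_TO_TEXTURE.values())


def make_conflict_board(rows, cols, seed=0):
    """Return rows x cols (texture, braille_word) pairs with texture != word."""
    rng = random.Random(seed)  # fixed seed is an assumption, for reproducibility
    board = []
    for _ in range(rows):
        line = []
        for _ in range(cols):
            texture = rng.choice(TEXTURES)
            # The Braille word must always name a different texture.
            word = rng.choice([t for t in TEXTURES if t != texture])
            line.append((texture, word))
        board.append(line)
    return board


# Example sheet layout: two lines of four stimuli.
example = make_conflict_board(rows=2, cols=4)
for line in example:
    print(line)
```

Every generated pair is incongruent by construction, which is the property the third part relies on.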

After the adaptation, we ran a pilot test to check whether it was appropriate for the children. We found that the children were confusing two textures that were very similar: they mistook the lace for the fabric. We therefore changed the type of lace, remade the thermoform and reapplied the test. This second model was adequate and was adopted as the definitive one.

3.3 Procedures

The Expressive Attention subtest was applied to the children in individual booths at the Laboratory of Neuropsychology at Instituto Benjamin Constant. The applicator led the child to one of these booths, seated her on the chair and asked some questions, such as her name, age and school grade. Then the applicator explained, in a playful and detailed way, what the child was doing there and how she would perform the test. The child was told that it is a game and has to be done as quickly as possible.

Step 1: The initial board, named Example D (for children aged 8-17, the object of this study), is presented to the child. This board has two lines containing the names of the textures sandpaper, fabric, felt and lace in ink and in Braille (thermoform). The second line shows the child physically how the sequence on the stimulus boards works and contains the same words as the first line, but in a different order. For ages 8-17 the stimulus boards start at Item 4. This board has eight lines, each containing five stimulus words in Braille and in ink. The child should read the words from left to right to the end of each line, then move to the next line and so on until the end of the board, as quickly as possible. The applicator notes on the answer sheet whether the child read each word correctly and the time spent.

Step 2: The board of Example E, containing four textures that correspond to the four stimulus words, is presented so that the child can recognize them by touch. Then the boards of Item 5 are presented, with four lines each. The child must recognize each tactile stimulus and say its name in sequence from left to right, moving to the next line until the end of the board, in the shortest time possible. The applicator notes on the answer sheet whether the child recognized the textures correctly and the time spent.

Step 3: The board of Example F is presented. It is similar to that of Example E, except that the name of a conflicting texture is now written on each texture in Braille. Then the boards of Item 6 are presented, following the same pattern as the example but with four lines each. The child should read the Braille silently, then recognize the (conflicting) texture by touch and say only the name of the texture. The applicator takes the child's hand and accompanies her in reading the Braille, and then directs her hand to the texture. During the test, the applicator notes on the answer sheet whether the child says the correct name of the texture stimulus and the time spent on the activity.

The score and time of each item are recorded on the answer sheet. During application, the applicator also records observations, for example whether the child is distracted or tired.

3.4 Automation Proposal

Compared with the original test, the manual adaptation of the Expressive Attention subtest described above requires more time and more interventions by the researcher during application. The original sequence also requires an immediate response from the student, which does not happen in the adapted subtest. To solve this issue, we sought a tool that would make the application of the test faster, with results consistent with the objective of the original test, and turned to NCE/UFRJ (Electronic Computer Center, Federal University of Rio de Janeiro, Brazil) for a solution. We were referred to a Master's student who had developed the Geometrix system, which helps blind students acquire some geometric concepts using a webcam, a wooden board with X, Y coordinates in raised relief and figures made of rubberized material. This system recognizes the geometric shapes that the blind student outlines on the board and reports to the student the properties of the polygon created.

Based on this architecture, the idea arose of adapting this technology to the Expressive Attention subtest, preserving the procedures described above in Steps 1, 2 and 3. Technology intervenes only in the application of the test: the student is placed in an individual booth with a computer, a webcam and the board with textures (described in item 3). When the student touches a texture, the system we call "EXAT" (an allusion to the name of the original subtest) will capture the location of the student's finger through the webcam, identify the texture and announce the name of the texture (the adapted colour). The system will store these markings in the "EXAT" database and generate the profile results at the end of the application of the subtest. The student will be able to indicate the answer to each item through a gesture, which will also be interpreted by the computer vision system.

However, for the automated Step 3, a new stimulus board was made. As in the original subtest, this board holds all the stimuli together (the division into two boards is no longer necessary), since the rectangles with the textures do not need to be large now that they no longer carry the Braille writing. The board therefore has eight lines with five textured rectangles in each line. The textures are still made of thermoform material (PVC film), and a coloured rectangle is placed below each texture. In practice, the computer recognizes the colour when the child presses the rectangle and states the name of a conflicting texture.
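The recognition step described above can be sketched in Python as a nearest-colour lookup followed by the choice of a conflicting texture name. This is an illustrative assumption, not the EXAT implementation: the reference RGB values, the function names and the random choice of the conflicting label are all hypothetical, and webcam capture and speech synthesis are left out.

```python
import random

# Colour-to-texture substitution taken from the Material section.
COLOUR_TO_TEXTURE = {
    "blue": "SANDPAPER",
    "yellow": "FABRIC",
    "red": "LACE",
    "green": "FELT",
}

# Hypothetical reference RGB values; a real system would calibrate these
# against the printed rectangles under the lighting of the booth.
REFERENCE_RGB = {
    "blue": (0, 0, 255),
    "yellow": (255, 255, 0),
    "red": (255, 0, 0),
    "green": (0, 128, 0),
}


def classify_colour(rgb):
    """Return the reference colour name closest to an (r, g, b) sample."""
    def squared_distance(name):
        return sum((a - b) ** 2 for a, b in zip(rgb, REFERENCE_RGB[name]))
    return min(REFERENCE_RGB, key=squared_distance)


def conflicting_label(rgb, rng=random):
    """Return (touched texture, a different texture name to announce)."""
    touched = COLOUR_TO_TEXTURE[classify_colour(rgb)]
    others = [t for t in COLOUR_TO_TEXTURE.values() if t != touched]
    return touched, rng.choice(others)


# A reddish pixel sampled under the fingertip (made-up value).
touched, spoken = conflicting_label((200, 30, 40), random.Random(1))
print(touched, "->", spoken)  # the spoken name would go to a speech synthesizer
```

Keeping colour classification separate from label selection means the reference colours can be recalibrated without touching the stimulus logic.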

Therefore, with the automation of the Expressive Attention subtest, it is expected that the application will be faster, that the applicator will intervene only when really needed, that the interaction with the student will take place simultaneously, and that the results will be produced by the system at the end of the application, making the automated subtest as similar as possible to the original test.

Finally, we believe that this technology can become a tool that opens new horizons of research, contributing to new tests adapted very closely to those applied to sighted people. We emphasize that results from this system are not yet available: as reported earlier, it is being finalized by the trainees of NCE/UFRJ, with completion expected in August 2010.

3.4.1 Prototype

The EXAT software prototype, implemented on the basis of the details above, can be faithful to the "Expressive Attention" subtest, since it preserves the simultaneity of the stimuli.

Computer vision creates a new property for the thermoform: sound. It gives a label where there is none, translating the stimulus into an accessible language. Tracking the child's finger through the webcam thus allows a spoken label to be attached to virtual objects.

To start receiving an explanation of the task, the child has to press a key. The child can press the key as many times as needed to hear the explanation again. EXAT counts the number of times the explanation was given and calculates the reaction time of the child (from the first explanation until the test begins).

The software also calculates the transition time from the previous position to the current one (∆t). With these data, the prognosis can be classified more objectively and used as counterproof for previous prognoses that could not be re-evaluated with the manual adaptation, since it leaves no such records.
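The timing measures described for the prototype (number of explanations, reaction time and ∆t between positions) could be recorded along the following lines. This is a minimal sketch under our own assumptions: the class `SessionLog` and its method names are hypothetical and are not taken from EXAT, and timestamps are passed in explicitly (in a live session they might come from `time.monotonic()`).

```python
class SessionLog:
    """Records explanation requests and per-stimulus transition times."""

    def __init__(self):
        self.explanations = []  # timestamp of each explanation key press
        self.touches = []       # (timestamp, stimulus_index) for each touch
        self.test_start = None

    def explanation_requested(self, t):
        self.explanations.append(t)

    def test_started(self, t):
        self.test_start = t

    def touch(self, t, stimulus_index):
        self.touches.append((t, stimulus_index))

    @property
    def explanation_count(self):
        return len(self.explanations)

    @property
    def reaction_time(self):
        """Time from the first explanation until the test begins."""
        if not self.explanations or self.test_start is None:
            return None
        return self.test_start - self.explanations[0]

    def transition_times(self):
        """Delta t between each touch and the one before it."""
        times = [t for t, _ in self.touches]
        return [later - earlier for earlier, later in zip(times, times[1:])]


# Made-up timestamps in seconds, for illustration only.
log = SessionLog()
log.explanation_requested(0.0)
log.explanation_requested(5.0)
log.test_started(12.0)
log.touch(12.5, 0)
log.touch(14.0, 1)
log.touch(17.0, 2)
print(log.explanation_count, log.reaction_time, log.transition_times())
```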

4 RESULTS

The analysis consisted of grouping the raw scores of items 4, 5, 5.1, 6 and 6.1, the times of those items and the prognoses made by the researcher during the application of the subtest, with the help of the Orange Canvas program. These prognoses were categorized into the following levels, numbered for statistical purposes: standard (0), lack of concentration (1), fatigue (2), difficulty in understanding (3), difficulty in identifying the textures (4), impatience (5), did not attend (6).
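The numeric coding of the prognoses can be written down as a small Python enumeration. The levels and their numbers are those listed above; the sample observations are hypothetical and are not data from the study.

```python
from collections import Counter
from enum import IntEnum


class Prognosis(IntEnum):
    """Numeric levels listed in the text."""
    STANDARD = 0
    LACK_OF_CONCENTRATION = 1
    FATIGUE = 2
    DIFFICULTY_IN_UNDERSTANDING = 3
    DIFFICULTY_IDENTIFYING_TEXTURES = 4
    IMPATIENCE = 5
    DID_NOT_ATTEND = 6


# Hypothetical observations for four children (not data from the study).
observed = ["STANDARD", "IMPATIENCE", "STANDARD", "DID_NOT_ATTEND"]
codes = [int(Prognosis[name]) for name in observed]
counts = Counter(Prognosis(code).name for code in codes)
print(codes)   # [0, 5, 0, 6]
print(counts)
```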

Using the Orange Canvas program, we verified that the variables inherent to the subtest were correlated with the prognoses, which allowed us to check the accuracy of the prognoses. With the Classification Tree Graph tool, a diagram was drawn of the prognoses and their relation to all the aforementioned variables. Of the 64 participants in the sample, 43 were grouped together for not having taken the test, and the 21 who took the test formed a group that split into two subgroups, group 1 and group 2.

Group 1 contained 16 of the 21 participants: nine classified as standard, three as lack of concentration, one as fatigue and three as impatience, all with time of item 4 ≥ 22.500 and time of item 6 ≤ 325.500. This group divides into groups 1A and 1B. Group 1A had two participants classified as standard and three as lack of concentration, all with score of item 6 ≤ 29.000 in addition to the conditions of group 1. Group 1B had seven classified as standard, one as fatigue and three as impatience, all with score of item 6 ≥ 29.000 in addition to the conditions of group 1.

Group 1A divides into groups 1A.1 and 1A.2. Group 1A.1 had two participants classified as lack of concentration, with score of item 6 ≤ 29.000, time of item 4 ≥ 22.500, time of item 5 ≤ 106.000 and time of item 6 ≤ 325.500. Group 1A.2 had two classified as standard and one as lack of concentration, all with score of item 6 ≤ 29.000, time of item 4 ≥ 22.500, time of item 5 ≥ 106.000 and time of item 6 ≤ 325.500.

Group 1B also divides into two subgroups, 1B.1 and 1B.2. Group 1B.1 had one participant classified as fatigue and two as impatience, all with score of item 4 ≤ 38.500, score of item 6 ≥ 29.000, time of item 4 ≥ 22.500 and time of item 6 ≤ 325.500. Group 1B.2 had seven classified as standard and one as impatience, with score of item 4 ≥ 38.500, score of item 6 ≥ 29.000, time of item 4 ≥ 22.500 and time of item 6 ≤ 325.500.

Legend of the prognostic

Blue = standard (0)

Red = lack of concentration (1)

Green = fatigue (2)

Purple = difficulty of understanding (3)

Orange = difficulty in identifying the textures (4)

Yellow = impatience (5)

Pink = not done the test (6)

Figure 2: Classification Tree Graph.

Of the 21 participants, 16 were in group 1 and the other five were in group 2: two classified as standard, one as difficulty in identifying the textures and two as difficulty in understanding, all with time of item 4 ≥ 22.500 and time of item 6 ≥ 325.500. Group 2 also divides into two subgroups, 2A and 2B. Group 2A had two participants classified as standard and one as difficulty in understanding, all with score of item 6 ≤ 29.500, time of item 4 ≥ 22.500 and time of item 6 ≥ 325.500. Group 2B had one classified as difficulty in identifying the textures and one as difficulty in understanding, both with score of item 6 ≥ 29.500, time of item 4 ≥ 22.500 and time of item 6 ≥ 325.500.
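The splits reported in this section can be summarized as a small hand-written decision function. This is a reading aid under stated assumptions, not the tree exported by Orange: we read the printed thresholds as decimal values (for example 325.5 for the time of item 6 and 29.0 for the score of item 6), we assign boundary values to the "≤" branch because the text uses both "≤" and "≥", and the function and parameter names are ours. The condition time of item 4 ≥ 22.5 is shared by all tested children, so it is not used to tell the groups apart.

```python
def assign_group(score_item4, score_item6, time_item5, time_item6):
    """Return the leaf group for a child who took the test."""
    if time_item6 <= 325.5:          # group 1
        if score_item6 <= 29.0:      # group 1A, split on the time of item 5
            return "1A.1" if time_item5 <= 106.0 else "1A.2"
        # group 1B, split on the score of item 4
        return "1B.1" if score_item4 <= 38.5 else "1B.2"
    # group 2, split on the score of item 6
    return "2A" if score_item6 <= 29.5 else "2B"


# Hypothetical children (values invented to exercise each branch).
print(assign_group(score_item4=40, score_item6=35, time_item5=100, time_item6=200))
print(assign_group(score_item4=40, score_item6=25, time_item5=100, time_item6=400))
```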

5 DISCUSSION

• The standard prognosis was given by the applicator to children who showed no difficulty in understanding and performing the activity and no clear sign of distraction during the application of the test. In the analysis done with the Orange Canvas intelligence laboratory, these children were spread across the subgroups rather than clustered together, which means that the applicator was unable to establish a correct prognosis of standard attention.

• Lack of concentration was the prognosis given to children who were easily distracted by stimuli from the environment, such as sounds from the street or sounds made by other children. Children who wanted to talk with the applicator were also included in this prognosis. In the analysis made with Orange, these children were first placed in the same group (1A) and then divided into two subgroups by their score. Since they remained close together, the Orange analysis confirms the prognosis made by the applicator.

• Fatigue was the prognosis given to children who lay on the table during the application of the subtest and yawned a lot. Only one child received this prognosis, so the Orange analysis cannot be assessed for this outcome specifically. We suggest applying the test to a larger sample, so that more cases in this category may arise and the analysis can be reassessed. However, the prognoses of fatigue and impatience were grouped together in group 1B, which suggests that these two prognoses can be evaluated as the same kind of lack of attention.

• Impatience was the prognosis given by the applicator to children who kept asking to leave and to those who were watching the clock too much during application. Like the children with a prognosis of lack of concentration, those with a prognosis of impatience first stayed in the same group (1B) and were then subdivided into two other groups because of differences in score. The Orange analysis confirms the prognosis made by the applicator.

• Difficulty in understanding was the prognosis given to children who had difficulty understanding what should be done during the activity. Orange treated these students as similar, placing them in the same group (2), which confirms the prognosis made by the applicator.

• Difficulty in identifying the textures was the prognosis given to children who had trouble telling the textures apart, mistaking one for another or confusing their names. As with fatigue, only one child received this prognosis, which complicates our assessment of the Orange analysis for this specific prognosis. However, the children with the prognoses of difficulty in understanding and difficulty in identifying the textures were grouped together in group 2B with a high score. This indicates that the difficulties which the applicator predicted in fact appeared as increased attention from these students in performing the activity, leading to a higher score.

This finding, which originated from the computational intelligence analysis, was highly relevant to the experiment. An interesting aspect of the result is that the analysis not only confirms the classification of the profiles but also indicates a new interpretation of the profiles of difficulty in understanding and difficulty in identifying textures: what was originally indicated as a problem of understanding was later revealed by the analysis to be a peculiar learning process that produced good results for the participants.

The prognoses of lack of concentration, fatigue and impatience were separated from those of difficulty in understanding and difficulty in identifying the textures, with the former having lower scores; this corroborates the hypothesis presented here that difficulties in understanding and in identifying textures caused an increase in the attention of these students.

Therefore, the Orange Canvas intelligence laboratory proves to be an important tool for defining the attention profiles of visually impaired children, by grouping the data obtained from the children's responses in the subtest together with the prognoses inferred by the applicator. This success in defining attention profiles with Orange suggests that the automated adaptation we are developing could include this statistical analysis itself, with no need to pass the data obtained during application to another program.

6 CONCLUSIONS

The cognition of the visually impaired, although little studied in scientific research in Brazil, has proved to be a promising area. From the results obtained with the Orange Canvas analysis of the Expressive Attention subtest and from the automation of that subtest, we conclude that computer technology can bring many benefits to research in this area. The speed and convenience that this technology offers in gathering and processing the data mean that it may become a very useful tool for conducting such activities. Moreover, the contribution of information technology to the present study may facilitate other work, for example the development of new pedagogical techniques designed specifically for the cognition of blind children.

REFERENCES

Augmented Reality (AR). Available at http://www.realidadeaumentada.com.br/home/ (accessed June 17, 2010).

Camera Module Introduction. Available at http://www.pygame.org/docs/tut/camera/CameraIntro.html (accessed June 11, 2010).

Cohen, A., 2003. Selective Attention. In: L. Nadel (Ed.), Encyclopedia of cognitive science, Vol. 3, pp. 1033-1037. London, England: Nature Publishing Group.

Das, J. P. & Naglieri, J. A., 1997. Cognitive Assessment System: Interpretative Handbook. Itasca, Illinois: Riverside Publishing.

Data Mining Fruitful and Fun. Available at

http://www.ailab.si/orange/ (accessed May 21, 2010).

Duncan, J., 1999. Attention. In R. A. Wilson & F. C. Keil (Eds.), The MIT Encyclopedia of cognitive sciences (pp. 39-41). Cambridge, MA: MIT Press.

Ferreira, P A. P., 2009. Um projeto arquitetural para

sistemas neuropedagógicos integrados. Rio de Janeiro:

IM/NCE/UFRJ. Dissertação de mestrado.

Library for building Augmented Reality (AR) applications. Available at http://www.hitl.washington.edu/artoolkit/ (accessed June 4, 2010).

Official announcement of PythonBrazil[6]. Available at

http://www.python.org.br/wiki (accessed March 15,

2010).

Seminério, F. L. P., 1984. Infra-Estrutura da Cognição:

Fatores ou linguagens? Ed. Fundação Getúlio Vargas.

Rio de Janeiro, Cadernos do ISOP No4.

Silva, L. F., 2010. Geometrix : Ensinado conceitos

geométricos a deficientes visuais. Rio de Janeiro:

IM/NCE/UFRJ. Master’s thesis.

Sternberg, R. J., 2008. Psicologia Cognitiva. Ed. Artmed.

Porto Alegre, 4th Edition.
