USER VERIFICATION FROM WALKING ACTIVITY

First Steps towards a Personal Verification System

Pierluigi Casale, Oriol Pujol and Petia Radeva

Computer Vision Center, Bellaterra, Barcelona, Spain

Dept. of Applied Mathematics, University of Barcelona, Barcelona, Spain

Keywords:

Pervasive computing, Security and privacy, Embedded systems, Wearable devices, One-class classification.

Abstract:

In this work, first encouraging results in user verification by walking activity using a wearable device are reported. A discriminative machine learning pipeline is proposed for user verification. A general walking classifier based on AdaBoost is personalized by adding data related to the verified users. An ensemble of One-Class classifiers is then created for user verification. This novel technique proves to achieve very high performance in terms of both classification accuracy and computational cost. The results show that users can be verified with high confidence and with very high values of the performance metrics.

1 INTRODUCTION

Privacy in today's digital society is one of the most important and controversial topics. Consider, for instance, that your smartphone, containing all kinds of personal information, is stolen. If the system were able to verify its owner while it is being carried, it could automatically block itself or send an alert message upon detecting a non-verified user. In this context, the verification of authorized users using walking activity patterns is of great interest for security and privacy.

Recognizing physical activities such as walking is an emerging field of research. Recognizing all types of everyday activities might become a fundamental application of pervasive computing in the near future. Although the first works on activity recognition used audio and video streams (Clarkson and Pentland, 1999), many recent works base activity recognition on classifying sensory data from one or more accelerometers. Accelerometers have been widely adopted because of their miniaturization, their low power requirements and their capacity to provide data directly related to motion. Modern smartphones, such as iPhones or Android-based phones, have an integrated tri-axial accelerometer.

In (Mannini and Sabatini, 2010), the authors give a complete review of the state of the art of activity classification using data from one or more accelerometers. In their work, seven basic activities and the transitions between activities are classified from five biaxial accelerometers placed on different parts of the body, using a high-dimensional feature vector and Hidden Markov Model classifiers, achieving 98.4% accuracy. In (Lester et al., 2006), the authors summarize their experience in developing an automatic physical activity recognition system. In their work, the location of the sensor has a very small impact on the results. Additionally, the performance of the system improves as data from different users are used, and the best result is achieved by fusing information from accelerometers and microphones.

Although much effort has been put into activity recognition, user verification by means of accelerometer data has rarely been addressed. The closest work to user verification concerns user identification. There is a fundamental difference between identification and verification systems. While identification systems try to discriminate among different users, verification aims to check that data belong to the authorized user of the system without prior knowledge of data from intruders. Note that, if one tries to mimic a verification system using identification, one must model all possible non-authorized users, which is infeasible in general settings. In (Mäntyjärvi et al., 2005), user identification has been performed using correlation, frequency-domain and data-distribution statistics, achieving an error rate of 7%. In (Gafurov et al., 2006), a biometric user authentication system based on a person's gait is proposed. Applying histogram similarity and statistics about the walking cycle, the authors report an identification error rate of 5%. Recently, some efforts in user verification have also been made in (Derawi et al., 2010), where walking data were collected with the accelerometer of an Android-based mobile phone from 51 testers walking in an indoor corridor. To the best of our knowledge, that work is the first one using data collected with a real mobile phone.

Casale P., Pujol O. and Radeva P.
USER VERIFICATION FROM WALKING ACTIVITY - First Steps towards a Personal Verification System.
DOI: 10.5220/0003329901790185
In Proceedings of the 1st International Conference on Pervasive and Embedded Computing and Communication Systems (PECCS-2011), pages 179-185
ISBN: 978-989-8425-48-5
Copyright © 2011 SCITEPRESS (Science and Technology Publications, Lda.)

In our work, first encouraging results in user verification by walking activity are reported. In our lab, a wearable system that is easy to use and comfortable to carry has been developed. Motion, audio and photometric data of five basic everyday activities have been collected from ten volunteer testers. The activities performed are walking, climbing up/down stairs, standing, talking with people and working at the computer. Testers performed the activities wherever they wanted and for as long as they wanted. Developing a custom wearable system allows us to simulate the use of wearable devices that people use every day, such as mobile phones, with complete freedom to customize each software level, from the operating system to the application level. We propose a discriminative machine learning pipeline for user verification. Discriminative classifiers have proven to be extremely efficient and powerful tools, even surpassing the performance of generative machine learning techniques. Using this framework, a two-stage process is defined. In the first stage, a general walking classifier is trained on a baseline training set using an ensemble strategy based on AdaBoost (Freund and Schapire, 1999). The classifier is subsequently personalized by adding data of the verified users in order to improve walking detection for those users. Since AdaBoost is an incremental classifier, this process is extremely efficient: it only needs to add further weak classifiers to the original baseline classifier. Once the walking activity is detected for the specific user, we must verify whether it comes from an authorized user. From the discriminative point of view, user modeling without counterexamples can be done using the One-Class classification strategy (Tax, 2001). In One-Class classification, the boundary of a given dataset is found, and the confidence that data belong to that set depends on the distance to the boundary. Thus, in the second stage, a One-Class ensemble is created using as base classifier a convex hull on a reduced feature space. In this work, we show that this novel technique performs well in terms of both classification accuracy and computational cost. The results obtained show that users can be verified with high confidence, with very low false positive and false negative rates.

The layout of this paper is as follows. In the next section, we describe the wearable device developed and the data acquisition process. In Section 3, we describe the feature extraction process and, in Section 4, the classifiers used. In Section 5 we show the results obtained and finally, in Section 6, we discuss the results and conclude.

2 THE WEARABLE DEVICE

In this section, the wearable device and the data acquisition process are described. The wearable system, called BeaStreamer, is built around the Beagle Board (TI, 2008). The device has a small form factor and is comfortable to wear. Using BeaStreamer, data have been collected from ten testers performing five activities.

2.1 BeaStreamer

BeaStreamer is a wearable system designed for multi-sensor data acquisition and processing. The system acquires audio, video and motion data. It can easily be carried in one hand or in a small bag around the waist. The audio and video data streams are acquired using a standard low-cost webcam. Motion data are acquired using a Bluetooth tri-axial accelerometer. The core of the system is the Beagle Board, a low-power, low-cost single-board computer built around the OMAP3530 system-on-chip. The OMAP3530 includes an ARM Cortex-A8 CPU at 600 MHz, a TMS320C64x+ DSP for accelerated video and audio codecs, and an Imagination Technologies PowerVR SGX530 GPU that provides accelerated 2D and 3D rendering with OpenGL ES 2.0 support. The DC supply must be a regulated 5 V. The board uses 2 W of power. An AKAI external USB battery at 1700 mAh allows approximately 3 hours of autonomy for the fully operational system. An embedded Linux operating system has been compiled ad hoc for the system, and standard software interfaces such as Video4Linux2 and BlueZ can be used for data acquisition. A monitor and a keyboard can be connected directly to the board, so that it can be used as a standard personal computer. The system can also be accessed through a serial terminal. The GStreamer framework has been used for acquiring audio, video and Bluetooth motion data, which makes it easy to manage synchronization in the data acquisition process. The board can be programmed in C or Python.


2.2 Data Acquisition

The system works with video, audio and accelerometer data. It takes photos, grabs audio and receives data from the accelerometer via Bluetooth. Accelerometer data are sampled at 52 Hz with a range of ±4 g. All sensors are placed on the chest. Data have been collected from ten volunteers, three women and seven men aged between 27 and 35. Testers were free to perform activities in the environment they selected, going beyond the limitations of a laboratory setting. All the activities have been performed for at least 15 minutes. The activities performed are climbing up/down stairs, walking, talking with people, standing and working at the computer. For labeling, only the sequential order of the activities has been annotated. Every time an activity is performed, testers have to start the system, which, after booting, automatically starts the acquisition while the user is already performing the activity. Although audio and video have also been acquired, in this work only accelerometer data are taken into account.

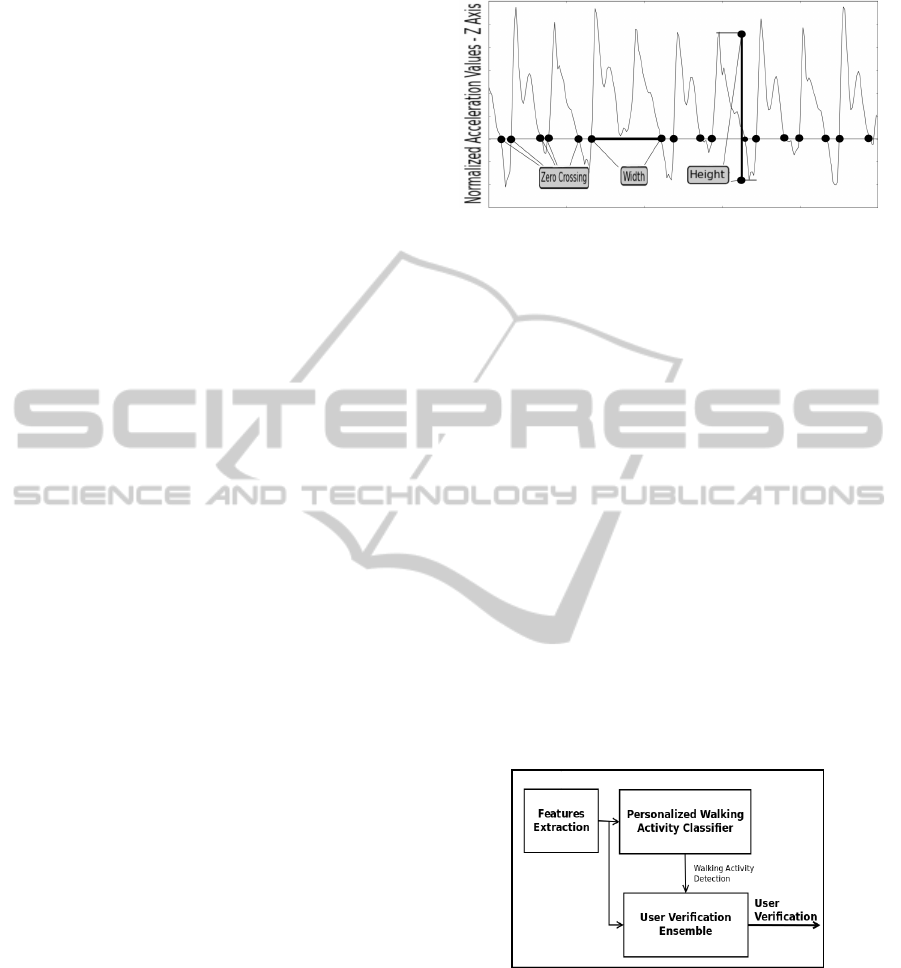

3 FEATURES EXTRACTION

Tri-axial accelerometers produce three acceleration time series, one for each motion axis. Before extracting features, a smoothing filter has been applied to the signal. Each acceleration time series has been windowed using a 2-second window. The following features have been computed: (1) mean value, (2) standard deviation, (3) skewness, (4) kurtosis, (5) mean value of the number of samples where the normalized waveform is positive, (6) difference between the maximum and the minimum value of the normalized waveform, and (7) number of zero-crossings of the normalized waveform. Once features 1 to 4 are computed, the acceleration data are normalized and features 5 to 7 are computed. In Figure 1, six seconds of acceleration related to walking activity on the Z axis are reported and the features extracted from the waveform are drawn. Feature 5 is denoted as width, Feature 6 as height and Feature 7 as zero-crossing. Finally, a 22-dimensional feature space is obtained.
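As an illustration of the per-window features, the following NumPy sketch computes features 1 to 7 for one axis and stacks them over the three axes. Several details are assumptions not given in the text: the smoothing filter is taken to be a 5-sample moving average, windows are non-overlapping, normalization is a z-score, kurtosis is excess kurtosis, and Feature 5 ("width") is read as the mean length of the runs of positive samples. The sketch yields 7 values per axis (21 for three axes); the extra dimension of the 22-dimensional space reported above is not described, so it is not reproduced here.

```python
import numpy as np

FS = 52         # accelerometer sampling rate in Hz (Section 2.2)
WIN = 2 * FS    # 2-second window -> 104 samples

def smooth(x, k=5):
    # Moving average; the actual smoothing filter is not specified (assumption).
    return np.convolve(x, np.ones(k) / k, mode="same")

def mean_positive_run(z):
    # Feature 5 ("width"), read here as the mean length of runs of positive samples.
    runs, n = [], 0
    for v in z:
        if v > 0:
            n += 1
        elif n:
            runs.append(n)
            n = 0
    if n:
        runs.append(n)
    return float(np.mean(runs)) if runs else 0.0

def axis_features(w):
    """Features 1-7 for one window of one axis, numbered as in Section 3."""
    mu, sd = w.mean(), w.std()
    c = w - mu
    m2, m3, m4 = (c ** 2).mean(), (c ** 3).mean(), (c ** 4).mean()
    skewness = m3 / m2 ** 1.5 if m2 > 0 else 0.0
    kurt = m4 / m2 ** 2 - 3.0 if m2 > 0 else 0.0   # excess kurtosis (assumption)
    z = c / sd if sd > 0 else c                      # z-score normalization (assumption)
    pos = z > 0
    width = mean_positive_run(z)                     # Feature 5
    height = z.max() - z.min()                       # Feature 6
    zero_crossings = int(np.count_nonzero(pos[1:] != pos[:-1]))  # Feature 7
    return [mu, sd, skewness, kurt, width, height, zero_crossings]

def extract_features(acc):
    """acc has shape (n_samples, 3); returns one row of 21 features per window."""
    smoothed = np.column_stack([smooth(acc[:, a]) for a in range(acc.shape[1])])
    rows = []
    for start in range(0, len(smoothed) - WIN + 1, WIN):
        win = smoothed[start:start + WIN]
        rows.append(sum((axis_features(win[:, a]) for a in range(win.shape[1])), []))
    return np.array(rows)
```

For instance, ten seconds of data (520 samples at 52 Hz) give five windows and therefore a 5 × 21 feature matrix.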

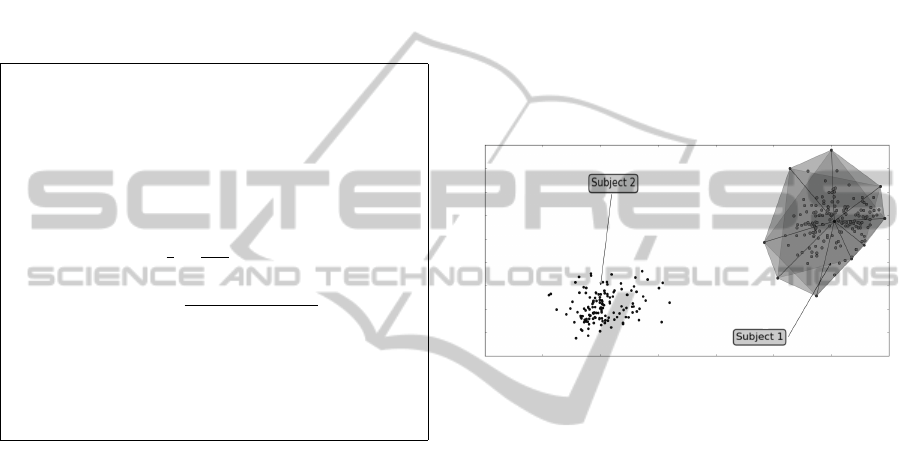

4 THE VERIFICATION PIPELINE

A two-stage pipeline is proposed for user verification. The overall user verification system is shown in Figure 2. The general walking activity classifier, based on AdaBoost, is trained using many walking activities from many persons. It receives as input the features extracted from accelerometer data and detects when walking activities occur. The general classifier is subsequently personalized by adding data of the specific users. Through a further training step, walking detection performance is considerably improved for the specific user. In this way, the personalized walking activity classifier is able to filter out non-walking activities and a large share of the walking activities of other users. The incremental training process is extremely simple and efficient, since AdaBoost just needs to add more weak classifiers to the original baseline classifier. When a walking activity is detected, the verification task is performed by the user verification ensemble. This classifier receives as input the features computed on the accelerometer data and checks whether the walking activity belongs to the verified user. User verification is performed by an ensemble of naive One-Class classifiers, each using a convex hull to define the region characteristic of the specific user in a reduced feature space.

Figure 1: Graphical description of Feature 5 (width), Feature 6 (height) and Feature 7 (zero-crossing) extracted from Motion Data.

Figure 2: Block Diagram of the User Verification System.
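The block diagram can be read as a composition of two stages. The sketch below is illustrative only: `verification_pipeline` is a hypothetical helper, `is_walking` stands for the personalized AdaBoost walking detector, `user_score` stands for the averaged output of the One-Class ensemble, and the 0.5 acceptance threshold is a hypothetical parameter that the paper does not give.

```python
from typing import Callable, Optional, Sequence

Features = Sequence[float]

def verification_pipeline(
    is_walking: Callable[[Features], bool],
    user_score: Callable[[Features], float],
    threshold: float = 0.5,   # hypothetical acceptance threshold
) -> Callable[[Features], Optional[bool]]:
    """Compose the two stages into one decision per feature window.

    Returns None when stage 1 rejects the window as non-walking; otherwise
    True (accepted as the authorized user) or False (rejected).
    """
    def decide(x: Features) -> Optional[bool]:
        if not is_walking(x):
            return None                      # stage 1: not a walking window
        return user_score(x) >= threshold    # stage 2: One-Class ensemble score
    return decide
```

Keeping the stages as separate callables mirrors the design in the text: the walking detector can be re-trained (personalized) without touching the verifier, and vice versa.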

4.1 Walking Classification

AdaBoost (Freund and Schapire, 1999) is an efficient algorithm for supervised learning. AdaBoost boosts the classification performance of a weak learner by combining a collection of weak classification functions into a stronger classifier. The algorithm combines the weak classifiers iteratively, taking into account a weight distribution on the training samples such that more weight is attributed to samples misclassified in previous iterations. The final strong classifier is a weighted combination of weak classifiers followed by a threshold. Table 1 shows the pseudocode for AdaBoost. The algorithm takes as input a training set $(x_1, y_1), \ldots, (x_m, y_m)$, where $x_k$ is an $N$-dimensional feature vector and $y_k$ are the class labels. After $T$ rounds of training, the $T$ weak classifiers $h_t$ and the ensemble weights $\alpha_t$ are used to assemble the final strong classifier.

Table 1: AdaBoost Algorithm.

- Given a training set $(x_1, y_1), \ldots, (x_m, y_m)$, with $x_k \in \mathbb{R}^N$, $y_k \in Y = \{-1, +1\}$;
- Initialize weights $D_1(k) = 1/m$, $k = 1, \ldots, m$;
- For $t = 1, \ldots, T$:
1. Train the weak learner using distribution $D_t$.
2. Get the weak hypothesis $h_t : X \to \{-1, +1\}$ with error $\varepsilon_t = \Pr_{k \sim D_t}[h_t(x_k) \neq y_k]$.
3. Choose $\alpha_t = \frac{1}{2} \ln\left(\frac{1 - \varepsilon_t}{\varepsilon_t}\right)$.
4. Update $D_{t+1}(k) = \frac{D_t(k) \exp(-\alpha_t y_k h_t(x_k))}{Z_t}$, where $Z_t$ is a normalization factor chosen so that $D_{t+1}$ will be a distribution.
- Output the final hypothesis $H(x) = \mathrm{sign}\left(\sum_{t=1}^{T} \alpha_t h_t(x)\right)$.

The AdaBoost training algorithm is incremental: after a number of training rounds on one dataset, further rounds can be run on another dataset by adding new weak classifiers. This characteristic is crucial in the personalization step of the proposed pipeline. For classifying walking activity, AdaBoost is trained using only features 5 to 7. In a second step, for each subject, the classifier is further trained using all the features of that subject's data. The weak classifiers used are decision stumps.
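The following NumPy sketch implements Table 1 with decision stumps as weak learners and models personalization by calling `boost` again on new data with an existing ensemble. How the sample weights are initialized in the personalization step is not stated in the paper; here they are set from the current ensemble margins, $D(k) \propto \exp(-y_k F(x_k))$ with $F(x) = \sum_t \alpha_t h_t(x)$, which reduces to the uniform $D_1$ of Table 1 when the ensemble is empty. This initialization and the `features` argument (used to restrict the baseline stage to a subset of columns) are assumptions of the sketch.

```python
import numpy as np

def stump_predict(X, f, thr, pol):
    # Decision stump on column f: +1 where pol * (x_f - thr) >= 0, else -1.
    return np.where(pol * (X[:, f] - thr) >= 0, 1, -1)

def fit_stump(X, y, D, features):
    # Exhaustive search for the stump with the lowest weighted error under D.
    best = (np.inf, None)
    for f in features:
        for thr in np.unique(X[:, f]):
            for pol in (1, -1):
                err = D[stump_predict(X, f, thr, pol) != y].sum()
                if err < best[0]:
                    best = (err, (f, thr, pol))
    return best

def decision_function(ensemble, X):
    # F(x) = sum_t alpha_t h_t(x)
    score = np.zeros(len(X))
    for alpha, f, thr, pol in ensemble:
        score += alpha * stump_predict(X, f, thr, pol)
    return score

def predict(ensemble, X):
    # H(x) = sign(F(x)), with ties mapped to +1.
    return np.where(decision_function(ensemble, X) >= 0, 1, -1)

def boost(X, y, T, ensemble=(), features=None):
    """Append T AdaBoost rounds trained on (X, y) to an existing ensemble."""
    ensemble = list(ensemble)
    features = range(X.shape[1]) if features is None else features
    # Weights implied by the current ensemble (assumption for personalization);
    # uniform when the ensemble is empty, as in Table 1.
    margin = -y * decision_function(ensemble, X)
    D = np.exp(margin - margin.max())
    D /= D.sum()
    for _ in range(T):
        err, (f, thr, pol) = fit_stump(X, y, D, features)
        err = min(max(err, 1e-10), 1 - 1e-10)      # keep log() finite
        alpha = 0.5 * np.log((1 - err) / err)       # step 3 of Table 1
        D *= np.exp(-alpha * y * stump_predict(X, f, thr, pol))  # step 4
        D /= D.sum()                                # normalization by Z_t
        ensemble.append((alpha, f, thr, pol))
    return ensemble
```

A baseline could then be built as `boost(X_all, y_all, T=50, features=cols_5_to_7)` (50 stumps as in Section 5.1; `cols_5_to_7` is a hypothetical list of column indices) and personalized as `boost(X_user, y_user, T=20, ensemble=baseline)`, where 20 extra rounds is an arbitrary illustrative choice. Because the new stumps are fitted only to the subject's data, they can degrade accuracy on other users, which matches the trade-off reported in Section 5.2.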

4.2 User Verification

Based on the intuition derived from visual feature analysis, a One-Class classifier ensemble has been trained using convex hulls generated on a reduced feature space. The underlying idea is shown in Figure 3. Data related to each user are localized in a specific region of the feature space. Training the One-Class classifier means building the convex hull, which defines the region of the space where the user's data lie. When a new point appears, if it is inside the convex hull, it represents a walking activity of the user. If the point lies outside the convex hull, it receives a confidence of belonging to the user that decreases with its distance from the border of the hull. The use of the convex hull is theoretically justified in (Bennett and Bredensteiner, 2000), whose authors show that finding the maximum margin between two sets is equivalent to finding the closest points of their convex hulls. Therefore, modelling the convex hull around the feature points is equivalent to using a (hard-margin) SVM classifier when the classes do not overlap. The computational complexity of building the convex hull is $O(n \log n)$, as reported in (Toussaint, 1985), where $n$ is the number of training points, and only the $m \ll n$ hull vertices need to be stored in memory. For these reasons, such an algorithm can easily run on devices with limited computational and memory resources. The One-Class classifier has been trained for each pairwise combination of features, thus obtaining an ensemble of One-Class classifiers. The final result is obtained by averaging the results of every single One-Class classifier. The algorithm for the convex hull One-Class classifier is reported in Table 2.

Figure 3: User verification using a convex hull as One-Class classifier.
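A pure-Python sketch of the ensemble of Table 2 follows. The hull is built with Andrew's monotone chain, one $O(n \log n)$ algorithm among those surveyed by Toussaint (1985); the paper does not say which algorithm it uses. Feature pairs are enumerated with `itertools.combinations`, which visits every pair $i < j$ exactly once, so an ensemble over $N$ features has $N(N-1)/2$ members. A point inside or on a hull scores 1, otherwise $e^{-d}$, where taking $d$ as the Euclidean distance to the nearest hull edge is an assumption of this sketch.

```python
import math
from itertools import combinations

def cross(o, a, b):
    # z-component of (a - o) x (b - o); > 0 means b lies left of the ray o -> a.
    return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])

def convex_hull(points):
    """Andrew's monotone chain, O(n log n); vertices in counter-clockwise order."""
    pts = sorted(set(points))
    if len(pts) <= 2:
        return pts
    lower, upper = [], []
    for p in pts:
        while len(lower) >= 2 and cross(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)
    for p in reversed(pts):
        while len(upper) >= 2 and cross(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)
    return lower[:-1] + upper[:-1]

def segment_distance(p, a, b):
    # Euclidean distance from point p to segment ab.
    dx, dy = b[0] - a[0], b[1] - a[1]
    L2 = dx * dx + dy * dy
    t = 0.0 if L2 == 0 else max(0.0, min(1.0, ((p[0] - a[0]) * dx + (p[1] - a[1]) * dy) / L2))
    return math.hypot(p[0] - (a[0] + t * dx), p[1] - (a[1] + t * dy))

def hull_score(hull, p):
    """1 inside (or on) the hull, exp(-d) outside, d = distance to the border."""
    edges = list(zip(hull, hull[1:] + hull[:1]))
    if len(hull) >= 3 and all(cross(a, b, p) >= 0 for a, b in edges):
        return 1.0
    return math.exp(-min(segment_distance(p, a, b) for a, b in edges))

def train(X):
    """One hull per pair of feature columns (i < j); X is a list of feature vectors."""
    n_features = len(X[0])
    return {(i, j): convex_hull([(x[i], x[j]) for x in X])
            for i, j in combinations(range(n_features), 2)}

def classify(hulls, x):
    """Mean score over all pairwise One-Class classifiers for one feature vector."""
    scores = [hull_score(h, (x[i], x[j])) for (i, j), h in hulls.items()]
    return sum(scores) / len(scores)
```

Only the hull vertices are kept after training, which is what makes the approach attractive for the memory budget of a wearable device. For example, if the training vectors are the eight corners of the unit cube, every pairwise hull is the unit square; the point (3.0, 0.5, 0.5) then lies 2 units outside two of the three hulls and scores (1 + 2e^{-2})/3.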

5 EXPERIMENTAL RESULTS

In order to validate walking activity classification accuracy and user verification performance, cross-validation has been used. Cross-validation is a technique for assessing how the results of a classification process generalize to an independent data set. A round of cross-validation is performed by partitioning the dataset into subsets, training the classifier on one subset and testing it on another. There exist many different cross-validation schemes. In this setting, a Leave-One-User-Out (LOUO) cross-validation scheme has been used. LOUO cross-validation uses a single user for testing purposes and the remaining users as training data. This is repeated such that each user in the dataset is used once as testing data. In the following subsections, all the results relative to general walking classification, personalized walking classification and user verification are reported.

Table 2: Ensemble One-Class Algorithm.

Train:
- Given a training set $X \in \mathbb{R}^{M \times N}$ ($M$ samples, $N$ features);
1. For $i = 0, \ldots, N-2$
2. For $j = i+1, \ldots, N-1$
3. Compute the convex hull $Ch[i, j]$ of the two-dimensional dataset $X_{new} = \{X[:, i], X[:, j]\}$
- Return $Ch$

Classify:
- Given a dataset $D \in \mathbb{R}^{M \times N}$;
- Initialize $Res[i, j] = 0$
1. For $i = 0, \ldots, N-2$
2. For $j = i+1, \ldots, N-1$
3. If $(D[i], D[j])$ is inside $Ch[i, j]$
4. $Res[i, j] = 1$
5. Else
6. $Res[i, j] = e^{-d_x}$, where $d_x$ is the distance of the point from the border of $Ch[i, j]$
- Return the mean of all results for each point

5.1 General Walking Classification Results

The first step of the system aims at detecting walking activity regardless of the user. Its performance serves as a reference for the improvements obtained through personalization. Classification performance on general walking classification has been evaluated with the LOUO scheme, using AdaBoost with 50 decision stumps trained only on features 5 to 7, which do not depend on the specific user. The overall classification performance is reported in Table 3.

Table 3: Classification Performances for General Walking Classifier.

Precision   Recall   Specificity   Sensitivity
95.4%       95.5%    98.4%         98.5%

5.2 Personalized Walking Classification Results

The results obtained from the general classifier are good, but further improvement can be achieved if the training set includes data from the actual authorized user. Since these data must be acquired anyway for the verification process, they can also be used to increase the performance of step 1. In this step, the classification performance for walking activity has been evaluated for every single subject. For each subject, the data have been separated into the data of that subject and the data of the rest of the persons. To evaluate the performance taking into account data from the authorized user, a five-fold cross-validation process is performed for each fold of LOUO cross-validation. This means that the classifier has been trained on 80% of the data of the rest of the persons and on 80% of the data of the specific subject. The resulting classifier has been tested on the remaining 20% of the data of both the rest and the specific subject. This process is repeated five times for each user and the results are averaged. The classification performance is reported in Figure 4. Adding further training to the classifier has the expected effect: the classification performance for general walking classification decreases, while the classification performance for the specific subject is considerably improved. The walking classifier works with very high performance only on walking data of the specific subject, heavily filtering out the walking activities of other subjects. For the majority of the subjects, the classification accuracy for walking activity is above 98%.
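One reading of the evaluation protocols of Sections 5.2 and 5.3 is sketched below with NumPy; `per_user_folds` is a hypothetical helper. For every user, that user's windows and everyone else's windows are shuffled and split into five folds. In the personalization setting, training uses four folds of both groups and testing uses the held-out fold of both; in the verification setting (`verification=True`), training uses only four folds of the authorized user, and testing uses the held-out fold plus all data of the other subjects. The shuffling, the seed and the separate splitting of the other subjects' data are assumptions, since the paper does not describe them.

```python
import numpy as np

def per_user_folds(user_ids, k=5, seed=0, verification=False):
    """Yield (user, fold, train_idx, test_idx) for every user and each of k folds."""
    user_ids = np.asarray(user_ids)
    rng = np.random.default_rng(seed)
    for u in np.unique(user_ids):
        own = np.array_split(rng.permutation(np.flatnonzero(user_ids == u)), k)
        rest = np.flatnonzero(user_ids != u)
        rest_folds = np.array_split(rng.permutation(rest), k)
        for f in range(k):
            own_train = np.concatenate([own[g] for g in range(k) if g != f])
            if verification:
                # Section 5.3: One-Class training sees only the authorized user.
                train, test = own_train, np.concatenate([own[f], rest])
            else:
                # Section 5.2: 80% of both groups for training, 20% for testing.
                rest_train = np.concatenate([rest_folds[g] for g in range(k) if g != f])
                train = np.concatenate([own_train, rest_train])
                test = np.concatenate([own[f], rest_folds[f]])
            yield u, f, np.sort(train), np.sort(test)
```

With ten windows per user, each personalization split trains on 8 of the user's windows and tests on the other 2, and every window of the user is tested exactly once across the five folds.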

Figure 4: Personalized Walking Classification Accuracy.

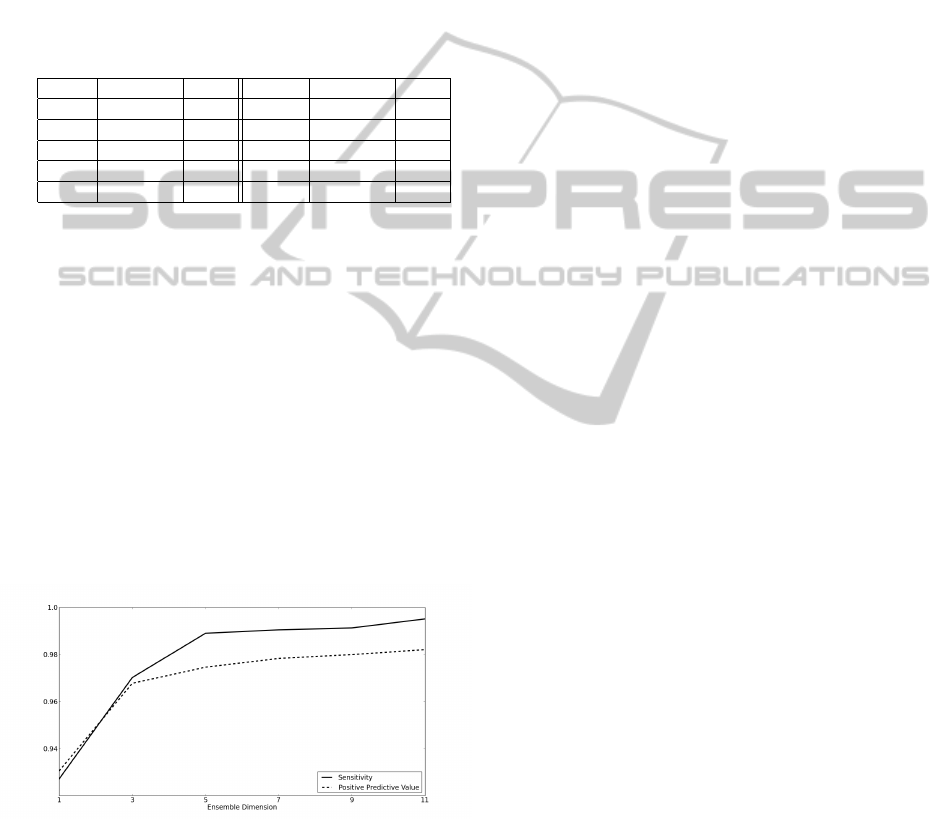

5.3 User Verification Results

The verification process must ensure two things. First, the system must not block an authorized user. This means that the number of false negatives should be as small as possible. This is measured by the Sensitivity, defined in Equation 1.

$$\text{Sensitivity} = \frac{\text{True Positives}}{\text{True Positives} + \text{False Negatives}} \tag{1}$$

On the other hand, the system has to avoid as many intrusions as possible. This is measured by the number of false positives and reflected in the Positive Predictive Value (PPV), defined in Equation 2.

$$\text{Positive Predictive Value} = \frac{\text{True Positives}}{\text{True Positives} + \text{False Positives}} \tag{2}$$
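Equations (1) and (2) can be computed directly from per-window decisions. In this small sketch (`sensitivity_ppv` is a hypothetical helper), a positive label means "window accepted as the authorized user", so a blocked owner counts as a false negative and an accepted intruder as a false positive; an undefined ratio is returned as NaN.

```python
def sensitivity_ppv(y_true, y_pred):
    """Sensitivity (Eq. 1) and PPV (Eq. 2); positive = authorized user accepted."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t and p)          # owner accepted
    fn = sum(1 for t, p in pairs if t and not p)      # owner blocked
    fp = sum(1 for t, p in pairs if not t and p)      # intruder accepted
    sensitivity = tp / (tp + fn) if tp + fn else float("nan")
    ppv = tp / (tp + fp) if tp + fp else float("nan")
    return sensitivity, ppv
```

For example, three owner windows of which one is blocked, plus two correctly rejected intruder windows, give a sensitivity of 2/3 and a PPV of 1.0.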


Both parameters are critical and measure the quality of the system. For verification purposes, a five-fold cross-validation process is performed for each fold of LOUO cross-validation. Again, an Ensemble One-Class classifier has been trained on 80% of the data of the authorized user and tested on the remaining 20% of that user's data and on all data from the rest of the subjects. This process is repeated five times for each user, using five different, non-overlapping data subsets for testing. The verification accuracy obtained is reported in Table 4.

Table 4: Performances of the Verification System.

User      Sensitivity   PPV
Subj 1    0.922         0.981
Subj 2    0.976         0.718
Subj 3    0.97          1.0
Subj 4    0.936         0.938
Subj 5    0.981         0.912
Subj 6    0.914         0.991
Subj 7    0.835         0.931
Subj 8    0.91          0.972
Subj 9    0.971         0.947
Subj 10   0.853         0.912

Both sensitivity and positive predictive value are high. However, these sensitivity values are not acceptable, since the system's mistakes on authorized users must be minimized. In order to improve this parameter, verification has been performed on subsequent temporal windows using an ensemble of Ensemble One-Class classifiers with a majority voting decision process. Figure 5 shows how verification accuracy varies with the size of the ensemble. Using larger ensembles, which is equivalent to using wider temporal windows, significantly improves both parameters, ensuring very high sensitivity and positive predictive value. Starting from an ensemble of size 3, all the user sensitivities are above 98%, and starting from an ensemble of size 5, all the positive predictive values are above 97%.
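The majority vote over subsequent windows can be sketched as follows (`majority_vote` is a hypothetical helper). The sketch assumes a sliding scheme that emits one decision per new window and a hypothetical acceptance threshold of 0.5 on the averaged One-Class score; the paper specifies neither. With the 2-second windows of Section 3, an ensemble of size n covers 2n seconds of walking if consecutive windows do not overlap, e.g. 10 seconds for n = 5.

```python
def majority_vote(scores, size, threshold=0.5):
    """Majority vote over `size` consecutive windows.

    scores: averaged Ensemble One-Class outputs in [0, 1], one per window.
    threshold: hypothetical per-window acceptance threshold (assumption).
    A tie (possible only for even sizes) counts as a rejection.
    """
    accept = [s >= threshold for s in scores]
    return [sum(accept[t - size + 1:t + 1]) > size // 2
            for t in range(size - 1, len(accept))]
```

With an odd ensemble size, a single spurious rejection of the owner is outvoted by its neighbours, which is the mechanism behind the sensitivity gains reported above.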

Figure 5: Sensitivity and PPV versus Ensemble Dimension.

6 DISCUSSION AND CONCLUSIONS

In this work, a user verification system based on walking activity, using a discriminative machine learning pipeline, has been proposed. Using a wearable system, motion data have been collected from 10 testers. Using these motion data, classification of walking activity and user verification have been performed.

The results related to walking classification demonstrate that walking activity can be separated from other activities and successfully classified. In particular, when the classifier is personalized for the specific subject, classification results are highly accurate and, for that user, the walking classifier performs very well.

User verification has been performed with high accuracy. In particular, experiments show that results can be significantly improved when temporal information is taken into account in the verification process. Nevertheless, some subjects still cannot be verified as desired. For instance, subject 10 and especially subject 7 have lower sensitivity than the other subjects. For subject 7, the worst case, the verification accuracy obtained using an ensemble of 11 Ensemble One-Class classifiers is still much lower than for the other subjects. Because of outliers, the convex hull does not have well-defined borders, and the verification performance for these subjects is affected. This issue requires further study.

From our point of view, using the convex hull as a One-Class classifier has been a very practical way of tackling the problem of user verification, yielding very good results, but more sophisticated techniques could be more suitable for this purpose. A One-Class SVM could better characterize the boundaries of the users' regions, also providing robustness to outliers, at the cost of increased processing time when specializing the system for an authorized user. Note that this process must be performed on the wearable system, and training a One-Class SVM is computationally expensive.

Finally, a more thorough validation of the system requires more testers and placing the sensor in different body locations. Placing the sensor on the chest is a realistic assumption, considering, for instance, that a mobile phone can be carried in a jacket pocket. However, for the development of a robust verification system, more locations need to be taken into account, such as trouser pockets or even the hands, which would provide a more interesting and challenging case study.

ACKNOWLEDGEMENTS

This work has been supported in part by projects TIN2009-14404-C02, La Marató de TV3 082131 and CONSOLIDER-INGENIO CSD 2007-00018.


REFERENCES

Bennett, K. P. and Bredensteiner, E. J. (2000). Duality and geometry in SVM classifiers. In 17th ICML, pages 57–64. Morgan Kaufmann.

Clarkson, B. and Pentland, A. (1999). Unsupervised clustering of ambulatory audio and video. In ICASSP '99, pages 3037–3040.

Derawi, M. O., Nickel, C., Bours, P., and Busch, C. (2010). Unobtrusive user-authentication on mobile phones using biometric gait recognition. In Sixth International Conference on Intelligent Information Hiding and Multimedia Signal Processing.

Freund, Y. and Schapire, R. E. (1999). A short introduction to boosting.

Gafurov, D., Helkala, K., and Søndrol, T. (2006). Biometric gait authentication using accelerometer sensor. Computers, 1(7).

Lester, J., Choudhury, T., and Borriello, G. (2006). A practical approach to recognizing physical activities. In Proc. of Pervasive, pages 1–16.

Mannini, A. and Sabatini, A. M. (2010). Machine learning methods for classifying human physical activity from on-body accelerometers. Sensors, 10(2):1154–1175.

Mäntyjärvi, J., Lindholm, M., Vildjiounaite, E., Mäkelä, S., and Ailisto, H. (2005). Identifying users of portable devices from gait pattern with accelerometers. In ICASSP.

Tax, D. (2001). One-class classification. PhD thesis, Delft University of Technology, Delft.

TI (2008). http://beagleboard.org.

Toussaint, G. T. (1985). A historical note on convex hull finding algorithms. PRL, 3(1):21–28.
