STATIC OPTIMIZATION OF DATA INTEGRATION PLANS IN GLOBAL INFORMATION SYSTEMS

Janusz R. Getta

School of Computer Science and Software Engineering, University of Wollongong, Wollongong, Australia

Keywords:

Data integration, Global information system, Multidatabase system, Online data integration, Integration plan, Static optimization.

Abstract:

Global information systems provide their users with a centralized and transparent view of many heterogeneous and distributed sources of data. The requests to access data at a central site are decomposed and processed at the remote sites, and the results are returned to the central site. A data integration component of the system processes data retrieved and transmitted from the remote sites according to previously prepared data integration plans.

This work addresses the problem of static optimization of data integration plans in a global information system. Static optimization means that a data integration plan is transformed into a more efficient form before it is used for data integration. We adopt an online approach to data integration in which the packets of data transmitted over a wide area network are integrated into the final result as soon as they arrive at a central site. We show how a data integration expression obtained from a user request can be transformed into a collection of data integration plans, one for each argument of the data integration expression. This work proposes a number of static optimization techniques that change the order of operations and eliminate materializations and constant arguments from data integration plans implemented as relational algebra expressions.

1 INTRODUCTION

Efficient integration of data retrieved and transmitted from remote sources is one of the central problems in the development of global information systems that provide users with a centralized and transparent view of many heterogeneous and distributed sources of data. A data integration component of a global information system processes data retrieved from the remote sites and transmitted to a central site. A typical architecture of a global information system decomposes user requests into requests related to the remote sources of data and submits these requests for processing at the remote sites. The results of processing at the remote sites are transmitted back to a central site and integrated with data already available there. The process of data integration follows a data integration plan, which is prepared when a user's request is decomposed into the requests related to the remote sources. A data integration plan determines the order in which the individual requests are issued and the way in which the results of these requests are combined into the final result. The individual requests can be issued according to an entirely sequential strategy, an entirely parallel strategy, or a mixed sequential and parallel strategy. According to an entirely sequential strategy, a request $q_i$ can be submitted for processing at a remote site only when the results of all requests $q_1, \ldots, q_{i-1}$ are available at a central site. An entirely sequential strategy is appropriate when the results received so far can be used to reduce the complexity of the remaining requests $q_i, \ldots, q_{i+k}$. According to an entirely parallel strategy, all requests $q_1, \ldots, q_i, \ldots, q_{i+k}$ are submitted simultaneously for parallel processing at the remote sites. An entirely parallel strategy is beneficial when the computational complexity and the amounts of data transmitted are more or less the same for all requests. According to a mixed sequential and parallel strategy, some requests are submitted sequentially while the others are submitted in parallel. Optimization of data integration plans is either static, when the plans are optimized before the stage of data integration, or dynamic, when the plans are changed during the processing of the requests.

The problem of static optimization of data integration plans can be formulated in the following way. We are given a global information system that integrates a number of remote and independent sources of data.


Getta J. R.

STATIC OPTIMIZATION OF DATA INTEGRATION PLANS IN GLOBAL INFORMATION SYSTEMS.

DOI: 10.5220/0003423901410150

In Proceedings of the 13th International Conference on Enterprise Information Systems (ICEIS-2011), pages 141-150

ISBN: 978-989-8425-53-9

Copyright © 2011 SCITEPRESS (Science and Technology Publications, Lda.)

Consider a user request $q$ to a global information system and its decomposition into the individual requests $q_1, \ldots, q_n$ simultaneously submitted for processing at the remote sites. Let the request $q$ be equivalent to an expression $E(q_1, \ldots, q_n)$ over the individual requests. If the remote sites return the results $r_1, \ldots, r_n$ in response to the individual requests $q_1, \ldots, q_n$, then the final result of the user request $q$ is equal to the result of the expression $E(r_1, \ldots, r_n)$. Static optimization of a data integration plan is then equivalent to the optimization of the expression $E(r_1, \ldots, r_n)$.

A naive and quite ineffective approach would be to postpone data integration until all the results $r_1, \ldots, r_n$ returned from the remote sites are available at a central site. A more effective solution is to consider an individual reply $r_i$ as a sequence of data packets $r_{i_1}, r_{i_2}, \ldots, r_{i_{k-1}}, r_{i_k}$ and to perform data integration each time a new packet of data is received at a central site. Such an approach to data integration is more efficient because there is no need to wait for the complete results before a data integration expression $E(r_1, \ldots, r_n)$ is evaluated according to a given order of operations. Instead, whenever a new packet of data is received at a central site it is immediately integrated into the intermediate result, no matter which partial result it comes from. Static optimization of a data integration plan then finds the best processing strategy for the sequences of packets of data $r_{i_1}, r_{i_2}, \ldots, r_{i_{k-1}}, r_{i_k}$ where $i = 1, \ldots, n$. Such an objective requires the transformation of the data integration expression $E(r_1, \ldots, r_i, \ldots, r_n)$ into individual data integration plans for the sequences of packets $r_{i_1}, r_{i_2}, \ldots, r_{i_{k-1}}, r_{i_k}$ where $i = 1, \ldots, n$.

A starting point for the optimization is a data integration expression $E(r_1, \ldots, r_i, \ldots, r_n)$ obtained from the decomposition of a user request to a global information system. Processing of the individual packets means that an expression $E(r_1, \ldots, r_i \oplus \delta_i, \ldots, r_n)$ must be recomputed each time a data packet $\delta_i$ is appended to an argument $r_i$. Of course, reprocessing of the entire data integration expression is too time consuming, and a better idea is to process the expression incrementally, i.e. to find how the previous result of the expression $E(r_1, \ldots, r_i, \ldots, r_n)$ must be changed after $\delta_i$ is appended to the argument $r_i$. A data integration expression is transformed into a set of data integration plans where each plan represents an integration procedure for the increments of one argument of the original expression. In our approach a data integration plan is a sequence of so-called id-operations on the increments or decrements of data containers and other fixed size containers. In order to reduce the size of the arguments, static optimization of data integration plans moves the unary operations towards the beginning of a plan. Additionally, the frequently updated materializations are eliminated from the plan, and constant arguments and subexpressions are replaced with pre-computed values.

The paper is organized in the following way. First, we overview the related work in the area of optimization of data integration in distributed global information systems. Next, we derive the incremental processing of modifications and we find a system of operations on modifications of data items for the system of operations included in the relational algebra. Transformation of data integration expressions into the sets of individual data integration plans is discussed in section 4, and it is followed by a presentation of static optimization of data integration plans in the next section. Finally, section 6 concludes the paper.

2 RELATED WORK

Optimization of data integration in global information systems can be traced back to optimization of query processing in multidatabase and federated database systems (Ozcan et al., 1997).

The external factors affecting the performance of query processing in multidatabase systems promote reactive query processing techniques. The early works on reactive query processing techniques are based on partitioning (Kabra and DeWitt, 1998) and dynamic modification of query processing plans (Getta, 2000). A dynamic modification technique finds a plan that is equivalent to the original one and that makes it possible to continue integration of the available data sets. Similar approaches dynamically change the order in which the join operations are executed depending on the arguments available at a central site. These techniques include query scrambling (Amsaleg et al., 1998), dynamic scheduling of operators (Urhan and Franklin, 2001), and Eddies (Avnur and Hellerstein, 2000).

Optimization of relational algebra operations used for data integration includes new versions of the join operation customised to online query processing, e.g. the pipelined join operator XJoin (Urhan and Franklin, 2000), ripple join (Haas and Hellerstein, 1999), double pipelined join (Ives et al., 1999), and hash-merge join (Mokbel et al., 2002).

A technique of redundant computations simultaneously processes a number of data integration plans, leaving the plan that provides the most advanced results (Antoshenkov and Ziauddin, 2000).

A concept of state modules described in (Raman et al., 2003) allows for concurrent processing of the tuples through the dynamic division of data integration tasks. The adaptive data partitioning technique (Ives et al., 2004) processes different partitions of the same argument using different data integration plans. The works on adaptive data partitioning (Ives et al., 2004) and optimization of data stream processing (Getta and Vossough, 2004) were the first attempts to use the associativity of the join operation to integrate separate partitions of the same arguments with different integration plans. An adaptive and online processing of data integration plans proposed in (Getta, 2005) and later on in (Getta, 2006) considers the sets of elementary operations for data integration and the best integration plan for recently transmitted data.

Many of the techniques developed for the efficient processing of data streams (Getta and Vossough, 2004) can be applied to data integration. The reviews of the most important data integration techniques proposed so far are included in (Gounaris et al., 2002).

3 INCREMENTAL PROCESSING OF MODIFICATIONS

Initially we consider a process of data integration separately from any particular model of data. We adopt a general view of a database where a set of generic unary and binary operations processes a collection of data containers. We do not consider any particular internal structure of data containers or any particular system of operations on data containers. Data containers may represent relational tables, XML documents, sets of persistent objects, files of records, etc.

3.1 Id-operations

Let $r_1, \ldots, r_k$ be data containers whose structure is consistent with a given model of data. A generic operation of the model is an operation $P$ whose arguments are the data containers $r$ and $s$ and whose result is another data container.

A modification of a data container $r$ is denoted by $\delta$ and is defined as a pair of disjoint data containers $\langle \delta^-, \delta^+ \rangle$ such that $r \cap \delta^- = \delta^-$ and $r \cap \delta^+ = \emptyset$.

A data integration operation that applies a modification $\delta$ to a data container $r$ is denoted by $r \oplus \delta$. For example, in the relational model the integration of a modification $\delta$ with a relational table $r$ is defined as the expression $(r - \delta^-) \cup \delta^+$.
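As an illustration only, the relational form of the integration operation can be sketched in Python with tables modelled as sets of hashable rows. The names `Modification` and `integrate` are hypothetical and do not come from the paper; the assertions simply restate the two conditions on $\delta^-$ and $\delta^+$ given above.

```python
from typing import NamedTuple


class Modification(NamedTuple):
    """Hypothetical container for a modification <delta^-, delta^+>."""
    minus: frozenset  # rows to be removed; must already be in r
    plus: frozenset   # rows to be added; must not yet be in r


def integrate(r: set, delta: Modification) -> set:
    """Compute r (+) delta = (r - delta^-) | delta^+ for set-based tables."""
    assert delta.minus <= r, "delta^- must be contained in r"
    assert not (delta.plus & r), "delta^+ must be disjoint from r"
    assert not (delta.minus & delta.plus), "delta^- and delta^+ must be disjoint"
    return (r - delta.minus) | delta.plus


r = {(1, "a"), (2, "b"), (3, "c")}
delta = Modification(minus=frozenset({(2, "b")}), plus=frozenset({(4, "d")}))
updated = integrate(r, delta)
```

Here `updated` holds the rows of `r` without `(2, "b")` and with `(4, "d")` added.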

Consider a generic operation $P(r,s)$ on the data containers $r$ and $s$. An incremental/decremental operation, later on called an id-operation, of an argument $r$ of a generic operation $P(r,s)$ is denoted by $\alpha_P(\delta,s)$ and is defined as the smallest modification $\delta_P$ that should be integrated with the result of $P(r,s)$ to obtain the result of $P(r \oplus \delta, s)$, i.e.

$$P(r,s) \oplus \alpha_P(\delta,s) = P(r \oplus \delta, s) \qquad (1)$$

An incremental/decremental operation of an argument $s$ of a generic operation $P(r,s)$ is denoted by $\beta_P(r,\delta)$ and is defined as the smallest modification $\delta_P$ that should be integrated with the result of $P(r,s)$ to obtain the result of $P(r, s \oplus \delta)$, i.e.

$$P(r,s) \oplus \beta_P(r,\delta) = P(r, s \oplus \delta) \qquad (2)$$

If a generic operation $P(r,s)$ is a component of an integration expression, then id-operations allow for faster re-computation of $P(r,s)$ when one of its arguments is integrated with data transmitted from an external data site. An ineffective approach would be to integrate the transmitted data with an argument and to re-compute the entire generic operation. It is represented by the right hand sides of the equations (1) and (2). A better idea is to apply an id-operation to the transmitted data and the other argument of the base operation to get a modification that can be integrated with the previous result of the generic operation. It is represented by the left hand sides of the equations (1) and (2). The application of id-operations speeds up data integration because it is possible to immediately process data received at a central site. Id-operations allow for the incremental processing of data integration expressions such that an increment of one of the arguments triggers the computation of a sequence of id-operations that return a modification, which should be applied to the final result of integration.

3.2 Relational Algebra based Id-operations

Let $x$ be a nonempty set of attribute names, later on called a schema, and let $dom(a)$ denote the domain of an attribute $a \in x$. A tuple $t$ defined over a schema $x$ is a full mapping $t : x \rightarrow \bigcup_{a \in x} dom(a)$ such that $\forall a \in x,\ t(a) \in dom(a)$. A relational table created on a schema $x$ is a set of tuples over the schema $x$.

Let $r$, $s$ be relational tables such that $schema(r) = x$ and $schema(s) = y$, and let $z \subseteq x$, $v \subseteq (x \cap y)$, and $v \neq \emptyset$. The symbols $\sigma_\phi$, $\pi_z$, $\bowtie_v$, $\sim_v$, $\ltimes_v$, $\cup$, $\cap$, $-$ denote the relational algebra operations of selection, projection, join, antijoin, semijoin, and the set algebra operations of union, intersection, and difference. All join operations are considered to be equijoin operations over a set of attributes $v$.

To find the analytical solutions of the equations (1) and (2) we assume that a data integration operation is computed in the relational model in the following way.

$$r \oplus \delta = (r - \delta^-) \cup \delta^+ \qquad (3)$$


Then, the id-operations $\alpha_P$ and $\beta_P$ can be decomposed into pairs of operations, each one acting on either the negative ($\delta^-$) or the positive ($\delta^+$) component of a modification $\delta$.

$$\alpha_P(\delta,s) = \langle \alpha_P^-(\delta^-,s),\ \alpha_P^+(\delta^+,s) \rangle \qquad (4)$$

$$\beta_P(r,\delta) = \langle \beta_P^-(r,\delta^-),\ \beta_P^+(r,\delta^+) \rangle \qquad (5)$$

If we separately consider the negative and positive components of a modification $\delta$ and we replace the data integration operation with its relational definition then we get the following equations.

$$P(r,s) - \alpha_P^-(\delta^-,s) = P(r - \delta^-, s) \qquad (6)$$

$$P(r,s) \cup \alpha_P^+(\delta^+,s) = P(r \cup \delta^+, s) \qquad (7)$$

$$P(r,s) - \beta_P^-(r,\delta^-) = P(r, s - \delta^-) \qquad (8)$$

$$P(r,s) \cup \beta_P^+(r,\delta^+) = P(r, s \cup \delta^+) \qquad (9)$$

To find the id-operations we solve the equations above for the generic operations of union ($\cup$), join ($\bowtie$), and antijoin ($\sim$), and we assume that the selection operation is always directly applied to the arguments of binary operations and that projection is applied only once, to the final result of query processing.

The analytical solutions of the equations (6) and (7) provide the following results.

$$\alpha_\cup(\delta,s) = \langle \delta^- - s,\ \delta^+ - s \rangle \qquad (10)$$

$$\beta_\cup(r,\delta) = \langle \delta^- - r,\ \delta^+ - r \rangle \qquad (11)$$

The id-operations for the generic operations of join and antijoin can be derived in the same way.

$$\alpha_{\bowtie}(\delta,s) = \langle \delta^- \bowtie_v s,\ \delta^+ \bowtie_v s \rangle \qquad (12)$$

$$\beta_{\bowtie}(r,\delta) = \langle \delta^- \bowtie_v r,\ \delta^+ \bowtie_v r \rangle \qquad (13)$$

$$\alpha_\sim(\delta,s) = \langle \delta^- \sim_v s,\ \delta^+ \sim_v s \rangle \qquad (14)$$

$$\beta_\sim(r,\delta) = \langle r \ltimes_v \delta^+,\ r \ltimes_v \delta^- \rangle \qquad (15)$$
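The left-argument id-operations (10), (12), and (14) can be checked against equation (1) on small tables. The following Python sketch is only an illustration under stated assumptions: rows are modelled as frozensets of (attribute, value) pairs, tables as sets of rows, and all helper names (`row`, `join`, `semijoin`, `antijoin`, `integrate`, `alpha_union`, `alpha_join`, `alpha_antijoin`) are hypothetical.

```python
def row(**attrs):
    """A tuple over a schema, stored as a hashable set of (attribute, value) pairs."""
    return frozenset(attrs.items())

def key(t, attrs):
    d = dict(t)
    return tuple(d[a] for a in attrs)

def join(a, b, on):
    """Equijoin of tables a and b over the attribute list `on`."""
    return {frozenset({**dict(x), **dict(y)}.items())
            for x in a for y in b if key(x, on) == key(y, on)}

def semijoin(a, b, on):
    keys = {key(y, on) for y in b}
    return {x for x in a if key(x, on) in keys}

def antijoin(a, b, on):
    return a - semijoin(a, b, on)

def integrate(r, delta):
    """r (+) delta = (r - delta^-) | delta^+, with delta given as (minus, plus)."""
    minus, plus = delta
    return (r - minus) | plus

def alpha_union(d, s):        # equation (10)
    return (d[0] - s, d[1] - s)

def alpha_join(d, s, v):      # equation (12)
    return (join(d[0], s, v), join(d[1], s, v))

def alpha_antijoin(d, s, v):  # equation (14)
    return (antijoin(d[0], s, v), antijoin(d[1], s, v))

v = ["b"]
r = {row(a=1, b=10), row(a=2, b=20), row(a=3, b=30)}
s = {row(b=10, c=100), row(b=30, c=300), row(b=40, c=400)}
delta = ({row(a=1, b=10)}, {row(a=4, b=40), row(a=5, b=50)})

# Left hand side of (1): integrate the id-operation with the previous result.
lhs_join = integrate(join(r, s, v), alpha_join(delta, s, v))
lhs_anti = integrate(antijoin(r, s, v), alpha_antijoin(delta, s, v))
# Right hand side of (1): update the argument and recompute from scratch.
rhs_join = join(integrate(r, delta), s, v)
rhs_anti = antijoin(integrate(r, delta), s, v)

# Union needs arguments over the same schema.
ru = {row(a=1), row(a=2)}
su = {row(a=2), row(a=3)}
du = ({row(a=2)}, {row(a=3), row(a=4)})
lhs_union = integrate(ru | su, alpha_union(du, su))
rhs_union = integrate(ru, du) | su
```

On this data both sides of equation (1) agree for all three operations, while the left hand sides only touch the modification and the unchanged argument.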

3.3 Application of Id-operations to Data Integration

Consider a data integration expression $E(r,s,t) = t \sim_v (r \bowtie_z s)$ where $r$, $s$, $t$ are the remote data sources, $v = schema(r) \cap schema(t)$, and $z = schema(r) \cap schema(s)$. Assume that a new increment $\delta_s = \langle \emptyset, \delta_s^+ \rangle$ of an argument $s$ has just been transmitted to a central site. We would like to re-compute the data integration expression $E(r, s \oplus \delta_s, t)$ immediately after the integration of the increment $\delta_s$ with the argument $s$.

To avoid re-computation of the entire data integration expression we first find a modification $\delta_{rs}$ that should be applied to the result of $r \bowtie_z s$ after the extension of the argument $s$ with $\delta_s$. Next, we find a modification $\delta_{rst}$ that should be applied to the result of $t \sim_v (r \bowtie_z s)$ after the modification $\delta_{rs}$ is applied to the result of $r \bowtie_z s$. From the equation (13) we get $\delta_{rs} = \langle \emptyset, \delta_s^+ \bowtie r \rangle$. Next, to find $\delta_{rst}$ we compute $\beta_\sim(t, \delta_{rs})$. From the equation (15) we get $\delta_{rst} = \langle t \ltimes_v \delta_{rs}^+,\ t \ltimes_v \emptyset \rangle$. Finally, $\delta_{rst} = \langle t \ltimes_v (\delta_s^+ \bowtie r),\ \emptyset \rangle$. Hence, in order to get the result of $E(r, s \oplus \delta_s, t)$ after the extension of $s$ with $\delta_s$ we have to compute $E(r,s,t) - (t \ltimes_v (\delta_s^+ \bowtie r))$.
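The derivation above can be replayed on small tables. The Python sketch below is illustrative only: the schemas $r(a,b)$, $s(b,c)$, $t(a,d)$, the sample rows, and the helper names are assumptions made for the example, so that $v = \{a\}$ and $z = \{b\}$.

```python
def row(**attrs):
    """A tuple over a schema, stored as a hashable set of (attribute, value) pairs."""
    return frozenset(attrs.items())

def key(t, attrs):
    d = dict(t)
    return tuple(d[a] for a in attrs)

def join(a, b, on):
    return {frozenset({**dict(x), **dict(y)}.items())
            for x in a for y in b if key(x, on) == key(y, on)}

def semijoin(a, b, on):
    keys = {key(y, on) for y in b}
    return {x for x in a if key(x, on) in keys}

def antijoin(a, b, on):
    return a - semijoin(a, b, on)

def E(r, s, t):
    """E(r,s,t) = t antijoin_v (r join_z s) with v = {a}, z = {b}."""
    return antijoin(t, join(r, s, ["b"]), ["a"])

r = {row(a=1, b=10), row(a=2, b=20), row(a=3, b=30)}
s = {row(b=10, c=100)}
t = {row(a=1, d="x"), row(a=2, d="y"), row(a=3, d="z"), row(a=4, d="w")}
delta_s_plus = {row(b=20, c=200)}   # increment of s that has just arrived

previous = E(r, s, t)
# Incremental step: E(r,s,t) - (t semijoin_v (delta_s^+ join r))
incremental = previous - semijoin(t, join(delta_s_plus, r, ["b"]), ["a"])
# Full re-computation, used here only as a reference result
recomputed = E(r, s | delta_s_plus, t)
```

The incremental step joins only the new packet with $r$ and never touches the old contents of $s$.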

Next, we consider the same data integration expression $t \sim_v (r \bowtie_z s)$ and a new increment $\delta_t$ of the remote data source $t$. Now, processing of the increment $\delta_t$ needs either materialization of the intermediate result of the subexpression $(r \bowtie_z s)$ or transformation of the data integration expression into an equivalent one with a left (right)-deep syntax tree and with the argument $t$ in the leftmost (rightmost) position of the tree. Materialization of intermediate results decreases the overall performance because when one of the arguments of a materialized subexpression is extended, the entire subexpression must be re-computed. On the other hand, it is not always possible to transform an integration expression into a left-deep or right-deep syntax tree such that a modification is located at the lowest leaf level of the tree.

If a materialization $m_{rs} = r \bowtie s$ is available then from the equation (14) we get $\delta_{rst} = \langle \emptyset, \delta_t^+ \sim_v m_{rs} \rangle$. Hence, in order to get the result of $E(r,s,t \oplus \delta_t)$ after the extension of $t$ we have to compute $E(r,s,t) \cup (\delta_t^+ \sim_v m_{rs})$.

If the materialization $m_{rs}$ is not available then an interesting option is to transform the expression $\delta_t^+ \sim_v (r \bowtie s)$ into $\delta_t^+ \sim_v ((r \ltimes \delta_t^+) \bowtie s)$. Such a transformation is correct because we do not need the entire result of $r \bowtie s$ to be computed; we only need the rows from $r$ that can be joined with $\delta_t^+$ over the attributes in $v$. The subexpression $r \ltimes \delta_t^+$ reduces the size of the argument $r$ before the join with $s$, and its computation can be done faster because $\delta_t^+$ is small.
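A small Python check of this rewriting is sketched next. It is an illustration under assumptions: the schemas $r(a,b)$, $s(b,c)$, $t(a,d)$ and all helper names are hypothetical, and the semijoin $r \ltimes \delta_t^+$ is taken over $v = \{a\}$.

```python
def row(**attrs):
    """A tuple over a schema, stored as a hashable set of (attribute, value) pairs."""
    return frozenset(attrs.items())

def key(t, attrs):
    d = dict(t)
    return tuple(d[a] for a in attrs)

def join(a, b, on):
    return {frozenset({**dict(x), **dict(y)}.items())
            for x in a for y in b if key(x, on) == key(y, on)}

def semijoin(a, b, on):
    keys = {key(y, on) for y in b}
    return {x for x in a if key(x, on) in keys}

def antijoin(a, b, on):
    return a - semijoin(a, b, on)

r = {row(a=1, b=10), row(a=2, b=20), row(a=3, b=30)}
s = {row(b=10, c=100), row(b=20, c=200)}
delta_t_plus = {row(a=1, d="p"), row(a=5, d="q")}

# Original form: the whole join r |x| s is computed first.
full_join = join(r, s, ["b"])
original = antijoin(delta_t_plus, full_join, ["a"])

# Rewritten form: r is first reduced to the rows that can match delta_t^+.
reduced_join = join(semijoin(r, delta_t_plus, ["a"]), s, ["b"])
rewritten = antijoin(delta_t_plus, reduced_join, ["a"])
```

Both forms return the same rows, but the rewritten form joins a smaller left argument with $s$.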

4 DATA INTEGRATION PLANS

In this section we introduce the concept of a data integration plan and we show how to transform a data integration expression into a set of data integration plans. Let $E(r_1, \ldots, r_n)$ be a data integration expression built over the generic operations and data containers $r_1, \ldots, r_n$. A syntax tree $T_e$ of an expression $E$ is a binary tree such that:

(i) for each instance of an argument $r_1, \ldots, r_n$ there is a leaf node labelled with the name of the argument,

(ii) for each subexpression $P(e', e'')$ of $E$, where $P$ is a generic operation and $e'$ and $e''$ are subexpressions of $E$, there is a node labelled with $P$ that has two subtrees $T_{e'}$ and $T_{e''}$.

A data integration plan is a sequence of assignment statements $s_1, \ldots, s_m$ where the right hand side of each statement is either an application of a modification to a data container ($m_j := m_j \oplus \delta_i$) or an application of a left or right id-operation ($\delta_j := \alpha_j(\delta_i, m_k)$). Consider an argument $r_i$ of a data integration expression $E$. An implementation of the data integration expression for the argument $r_i$ is constructed in the following steps.

1: Assign a unique number to each node of a syntax tree $T_e$.

2: Make an implementation $p_i$ empty.

3: Start from the leaf node of $T_e$ labelled with $r_i$.

4: While not in the root node of $T_e$, move to the ancestor node of the current node and execute a procedure moveToAncestor(i, j), where i is the identifier of the current node and j is the identifier of the ancestor node.

5: When in the root node i, append the statement $result := result \oplus \delta_i$;

A procedure moveToAncestor(i, j) consists of the following steps.

1: If i is a leaf node and it is the left descendant of a node j then append a statement that computes an id-operation $p_i$: $\delta_j := \alpha_{operation_j}(\delta_{r_i}, m_k)$; where $m_k$ is either a data container or a materialization in the right descendant of node j.

2: If i is a leaf node and it is the right descendant of a node j then append a statement that computes an id-operation $p_i$: $\delta_j := \beta_{operation_j}(m_k, \delta_{r_i})$; where $m_k$ is either a data container or a materialization in the left descendant of node j.

3: If i is not a leaf node of $T_e$ then append a statement $m_i := m_i \oplus \delta_i$; where $m_i$ is a materialization in a node i.

4: If i is not a leaf node and it is the left descendant of a node j then append a statement that computes an id-operation $\delta_j := \alpha_{operation_j}(\delta_i, m_k)$; where $m_k$ is either a data container or a materialization in the right descendant of node j.

5: If i is not a leaf node and it is the right descendant of a node j then append a statement that computes an id-operation $\delta_j := \beta_{operation_j}(m_k, \delta_i)$; where $m_k$ is either a data container or a materialization in the left descendant of node j.
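The construction steps and the moveToAncestor procedure above can be sketched in Python as a walk from a leaf to the root that emits plan statements as text. This is only one possible reading: the `Node` class, the function names, and the textual statement format (`d_` for $\delta$, `(+)` for $\oplus$) are hypothetical, and node numbers are assumed to be assigned when the tree is built.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Node:
    ident: int                      # unique node number (step 1)
    label: str                      # operation name or argument name
    left: Optional["Node"] = None
    right: Optional["Node"] = None

    @property
    def is_leaf(self) -> bool:
        return self.left is None and self.right is None


def operand(n: Node) -> str:
    """A leaf is read as a data container, an inner node as a materialization."""
    return n.label if n.is_leaf else f"m_{n.ident}"


def path_to(root: Node, name: str) -> Optional[List[Node]]:
    """Nodes from the root down to the leaf labelled `name`, or None."""
    if root.is_leaf:
        return [root] if root.label == name else None
    for child in (root.left, root.right):
        sub = path_to(child, name)
        if sub is not None:
            return [root] + sub
    return None


def integration_plan(root: Node, name: str) -> List[str]:
    path = path_to(root, name)
    if path is None:
        raise ValueError(f"no argument named {name!r}")
    plan: List[str] = []            # step 2: start with an empty plan
    delta = f"d_{name}"             # step 3: start from the leaf of the argument
    for idx in range(len(path) - 1, 0, -1):   # step 4: climb to the root
        node, parent = path[idx], path[idx - 1]
        if not node.is_leaf:        # moveToAncestor, step 3
            plan.append(f"m_{node.ident} := m_{node.ident} (+) {delta}")
        out = f"d_{parent.ident}"
        if node is parent.left:     # moveToAncestor, steps 1 and 4
            plan.append(f"{out} := alpha_{parent.label}({delta}, {operand(parent.right)})")
        else:                       # moveToAncestor, steps 2 and 5
            plan.append(f"{out} := beta_{parent.label}({operand(parent.left)}, {delta})")
        delta = out
    plan.append(f"result := result (+) {delta}")   # step 5
    return plan


# Syntax tree of t join (r antijoin s): join is node 1, t node 2,
# antijoin node 3, r node 4, s node 5.
tree = Node(1, "join", Node(2, "t"), Node(3, "antijoin", Node(4, "r"), Node(5, "s")))
plan_r = integration_plan(tree, "r")
plan_t = integration_plan(tree, "t")
```

For this tree, `plan_r` contains four statements (the id-operation of the antijoin, the update of the materialization in node 3, the id-operation of the join, and the update of the result), while `plan_t` contains only two.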

As a simple example consider a data integration expression $P(r,s,t) = t \bowtie_v (r \sim_w s)$ and its syntax tree with the numbered nodes given in Figure 1.

Figure 1: A syntax tree of an expression $t \bowtie_v (r \sim_w s)$.

Starting from node 4 we get a data integration plan for the modifications of the argument $r$:

$p_4$: $\delta_3 := \alpha_\sim(\delta_r, s)$;
$m_3 := m_3 \oplus \delta_3$;
$\delta_1 := \beta_{\bowtie}(t, \delta_3)$;
$result := result \oplus \delta_1$;

An equivalent relational data integration plan is obtained by the substitution of id-operations with the equivalent relational algebra expressions.

$p_4$: $\delta_3 := \delta_r \sim s$;
$m_3 := m_3 \oplus \delta_3$;
$\delta_1 := t \bowtie \delta_3$;
$result := result \oplus \delta_1$;

The relational algebra operations on the relational tables and modifications process both the negative and the positive components of the modifications. For example, $\delta_3 := \delta_r \sim s$; is equivalent to $\delta_3^- := \delta_r^- \sim s$; $\delta_3^+ := \delta_r^+ \sim s$;

The data integration plans for the arguments $s$ and $t$ are the following.

$p_5$: $\delta_3 := \beta_\sim(r, \delta_s)$;
$m_3 := m_3 \oplus \delta_3$;
$\delta_1 := \beta_{\bowtie}(t, \delta_3)$;
$result := result \oplus \delta_1$;

$p_2$: $\delta_1 := \alpha_{\bowtie}(\delta_t, m_3)$;
$result := result \oplus \delta_1$;

5 STATIC OPTIMIZATION OF DATA INTEGRATION PLANS

Static optimization of data integration plans means that the transformation of the plans is performed before the stage of data integration, while dynamic optimization of data integration plans changes the plans during the stage of data integration. In order to get more sound results we consider the language of data integration expressions to be the relational algebra and we consider data integration expressions built from the operations of join, antijoin, and union only. We also assume that the operation of selection is always processed together with an adjacent binary operation and that projection is computed at the very end of data integration.


5.1 Preliminary Optimizations

The preliminary optimizations are performed before the transformation of a data integration expression into data integration plans. They include the standard transformations of relational algebra expressions where the selections and projections are "pushed" down the syntax tree of a data integration expression and join operations are reordered such that the joins on the small arguments are performed first. Next, the selection operations are associated with the binary operations such that the rows that satisfy a selection condition are directly "piped" to the first stage of the computation of the binary operations. For example, if a join operation implemented as a hash-based join follows a selection, then a row that satisfies the selection condition is not saved in a temporary result of the selection and instead is hashed in the first stage of the computation of the hash-based join. The same technique is applied to the selection operations that cannot be "pushed" down below the binary operations. For example, $\sigma_{a>c}(r(ab) \bowtie_b s(bc))$ is computed by "piping" the rows obtained as the results of the binary operation $r(ab) \bowtie_b s(bc)$ directly to the selection operation. It is also possible to implement a selection operation as an additional comparison when the rows from the arguments are matched during the processing of a binary operation. In the example above, testing of the equality condition $r.b = s.b$ can be followed by testing of the condition $r.a > s.c$. After the preliminary stage of optimizations the data integration expressions are transformed into data integration plans.
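The "piping" of selections into a hash-based join can be illustrated with a short Python generator. This is a sketch under assumptions, not the implementation used in the paper: the function name `piped_hash_join` and its parameters `build_pred` (a selection applied while the hash table is built) and `residual` (a selection such as $a > c$ tested when rows are matched) are hypothetical, and rows are plain dictionaries.

```python
from collections import defaultdict


def piped_hash_join(r, s, key, build_pred=lambda row: True,
                    residual=lambda row: True):
    """Hash join of r and s on attribute `key` with selections piped in.

    Rows of s that satisfy `build_pred` are hashed directly, without a
    temporary selection result; `residual` is tested on each matched pair
    right after the equality test on `key`.
    """
    buckets = defaultdict(list)
    for row in s:                          # build stage
        if build_pred(row):
            buckets[row[key]].append(row)
    for left in r:                         # probe stage
        for right in buckets.get(left[key], ()):
            merged = {**left, **right}
            if residual(merged):           # e.g. r.a > s.c
                yield merged


r = [{"a": 5, "b": 1}, {"a": 1, "b": 1}, {"a": 9, "b": 2}]
s = [{"b": 1, "c": 3}, {"b": 2, "c": 10}]

# sigma_{a>c}(r(ab) join_b s(bc)) with the comparison done during matching
selected = list(piped_hash_join(r, s, "b", residual=lambda t: t["a"] > t["c"]))
# A selection on s (here c > 5) piped into the build stage of the join
pre_selected = list(piped_hash_join(r, s, "b", build_pred=lambda t: t["c"] > 5))
```

In both calls no intermediate table is stored for the selection; rows flow straight into or out of the join.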

5.2 Optimization through Reordering of Operations

The following example shows how further optimization of data integration plans can be achieved through reordering of the operations. Consider a fragment of a data integration plan $p_t$: $\delta_j := \delta_t \bowtie_v r$; $m_j := m_j \oplus \delta_j$; $\delta_k := \delta_j \sim_w s$; The following two observations lead to transformations that may have a positive impact on the performance of data integration plans. First, if a data container $r$ is significantly larger than a data container $s$ then it would be more efficient to start from the computations on the data container $s$ because the results would be smaller. Second, some of the data items in $\delta_j$ may not contribute to the results of $\delta_k := \delta_j \sim_w s$ and can be removed from $\delta_j$ before the computation of the antijoin operation. We compute an operation $\delta_i \div s$ to partition both $s$ and $\delta_j$ into pairs $\langle s^{(\delta_j+)}, s^{(\delta_j-)} \rangle$ and $\langle \delta_j^{(s+)}, \delta_j^{(s-)} \rangle$ such that only $s^{(\delta_j+)}$ and $\delta_j^{(s+)}$ have an impact on the result of $\delta_k := \delta_j \sim_w s$; The partitioning is performed such that $\delta_i^{(s+)} := \delta_i \ltimes s$, $\delta_i^{(s-)} := \delta_i \sim s$, $s^{(\delta_i+)} := s \ltimes \delta_i$, and $s^{(\delta_i-)} := s \sim \delta_i$. Then, we compute $\langle \delta_i^{(s+)}, \delta_i^{(s-)} \rangle \bowtie r$, and later on the result of $\delta_i^{(s-)} \bowtie r$ is directly passed to $\delta_k$ and is unioned with the result of $(\delta_i^{(s+)} \bowtie r) \sim s^{(\delta_i+)}$.

The complexity of the partitioning $\delta_i \div s$ is comparable with the complexity of an ordinary join operation. The complexity of the computation of $\langle \delta_i^{(s+)}, \delta_i^{(s-)} \rangle \bowtie r$ is the same as the complexity of $\delta_i \bowtie r$. It is expected that the additional computation of $\delta_i \div s$ will take less time than the difference between the computation of $(\delta_i \bowtie r) \sim s$ and the computation of $(\delta_i^{(s+)} \bowtie r) \sim s^{(\delta_i+)}$. The benefits depend on how far the partitioning reduces the sizes of $s^{(\delta_i+)}$ and $(\delta_i^{(s+)} \bowtie r)$.
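The partitioning can be tried out on small tables. The Python sketch below rests on assumptions that the text does not state explicitly: the modification has schema $(v, w_1)$, $r$ has schema $(v, w_2)$, $s$ has schema $(w_1, w_2)$, the antijoin is taken over $w = \{w_1, w_2\}$, and the partitioning $\delta \div s$ uses only the attribute $w_1$ shared by the modification and $s$. All helper names are hypothetical.

```python
def row(**attrs):
    """A tuple over a schema, stored as a hashable set of (attribute, value) pairs."""
    return frozenset(attrs.items())

def key(t, attrs):
    d = dict(t)
    return tuple(d[a] for a in attrs)

def join(a, b, on):
    return {frozenset({**dict(x), **dict(y)}.items())
            for x in a for y in b if key(x, on) == key(y, on)}

def semijoin(a, b, on):
    keys = {key(y, on) for y in b}
    return {x for x in a if key(x, on) in keys}

def antijoin(a, b, on):
    return a - semijoin(a, b, on)

delta = {row(v=1, w1="x"), row(v=2, w1="y"), row(v=3, w1="z")}
r = {row(v=1, w2=10), row(v=1, w2=11), row(v=2, w2=20), row(v=3, w2=30)}
s = {row(w1="x", w2=10), row(w1="y", w2=99), row(w1="q", w2=30)}

# Unoptimized fragment: (delta join_v r) antijoin_w s
baseline = antijoin(join(delta, r, ["v"]), s, ["w1", "w2"])

# Partitioning delta / s over the shared attribute w1
delta_s_plus = semijoin(delta, s, ["w1"])    # rows that may be removed by s
delta_s_minus = antijoin(delta, s, ["w1"])   # rows that can never match s
s_delta_plus = semijoin(s, delta, ["w1"])    # part of s that can matter

# Rows built from delta_s_minus bypass the antijoin entirely.
passed_directly = join(delta_s_minus, r, ["v"])
filtered = antijoin(join(delta_s_plus, r, ["v"]), s_delta_plus, ["w1", "w2"])
optimized = passed_directly | filtered
```

On this data the antijoin is evaluated against two rows of `s_delta_plus` instead of the three rows of `s`, and one joined row skips the antijoin altogether.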

5.3 Elimination of Materializations

An algorithm that transforms a syntax tree of a data integration expression into data integration plans creates references to so-called materializations. A materialization is a relational table that contains the intermediate results of processing of one of the subexpressions of a data integration plan. Materializations are needed when an online processing plan is created for an argument which is not at the bottom level of a syntax tree, or when a syntax tree is not a left-/right-deep syntax tree. Materializations require additional integration operations in online processing plans, and because of that the frequently performed integrations of partial results with materializations may consume a lot of additional time. To avoid this problem we look for ways to remove materializations from data integration plans.

Consider an integration expression $t \bowtie_w (r \bowtie_v s)$. An integration plan for an argument $t$, i.e. $p_t$: $\delta_{trs} := \delta_t \bowtie_w m_{rs}$; $result := result \oplus \delta_{trs}$; uses a materialization $m_{rs}$ which contains the intermediate results of the subexpression $r \bowtie_v s$. The plans for the arguments $r$ and $s$ integrate the intermediate results of the same subexpression with the materialization $m_{rs}$, for example $p_r$: $\delta_{rs} := \delta_r \bowtie_v s$; $m_{rs} := m_{rs} \oplus \delta_{rs}$; $\delta_{rst} := \delta_{rs} \bowtie t$; $result := result \oplus \delta_{rst}$; A simple solution to eliminate the materialization $m_{rs}$ is to apply the associativity of the join operation and to transform the expression into an expression which has a left-/right-deep syntax tree and in which the argument $t$ is located at one of the bottom leaf level nodes of the tree. To do so, we transform the integration expression into $(t \bowtie_w r) \bowtie_v s$ and we create a new integration plan $p'_t$: $result := result \oplus (\delta_t \bowtie r) \bowtie_v s$; Additionally, we eliminate the integration of partial results with the materialization $m_{rs}$ from the integration plans for $r$ and $s$.
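The effect of this rewriting can be checked on small tables. In the Python sketch below the schemas $t(w, e)$, $r(w, v)$, $s(v, f)$, the sample rows, and the helper names are assumptions made for the example only.

```python
def row(**attrs):
    """A tuple over a schema, stored as a hashable set of (attribute, value) pairs."""
    return frozenset(attrs.items())

def key(t, attrs):
    d = dict(t)
    return tuple(d[a] for a in attrs)

def join(a, b, on):
    return {frozenset({**dict(x), **dict(y)}.items())
            for x in a for y in b if key(x, on) == key(y, on)}

r = {row(w=1, v=7), row(w=2, v=8)}
s = {row(v=7, f="p"), row(v=8, f="q")}
delta_t = {row(w=1, e="n")}

# Plan p_t: needs the materialization m_rs = r join_v s to be kept up to date.
m_rs = join(r, s, ["v"])
with_materialization = join(delta_t, m_rs, ["w"])

# Plan p'_t: left-deep form (delta_t join_w r) join_v s, no materialization.
without_materialization = join(join(delta_t, r, ["w"]), s, ["v"])
```

Both plans yield the same increment of the result, but the second one starts from the small modification and never reads `m_rs`.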

The next example shows that associativity of the operations involved in an expression is not a necessary condition for the elimination of materialization. We consider a data integration expression $(r \sim_v s) \bowtie_w t$. The objective is to eliminate a materialization that contains the intermediate results of $r \sim_v s$ from an integration plan for the argument $t$, i.e. $p_t$: $\delta_{rst} := m_{rs} \bowtie_w \delta_t$; $\mathit{result} := \mathit{result} \oplus \delta_{rst}$. We transform the expression $m_{rs} \bowtie_w \delta_t$ into a form where the modification $\delta_t$ is located at the bottom level of a left-/right-deep syntax tree in the following way. First we substitute $m_{rs}$ with $r \sim_v s$. Next, we replace the argument $r$ with an equivalent expression $(r \ltimes_y \delta_t) + (r \sim_y \delta_t)$ where the operation $+$ denotes a concatenation of the disjoint results of the semijoin and antijoin operations. The distributivity of the concatenation operation over the antijoin and join operations allows us to transform the expression $(((r \ltimes_y \delta_t) + (r \sim_y \delta_t)) \sim_v s) \bowtie_w \delta_t$ into a concatenation of two subexpressions $((r \ltimes_y \delta_t) \sim_v s) \bowtie_w \delta_t + ((r \sim_y \delta_t) \sim_v s) \bowtie_w \delta_t$. The results of processing the subexpression $((r \sim_y \delta_t) \sim_v s) \bowtie_w \delta_t$ are always empty because the antijoin $r \sim_y \delta_t$ keeps only those rows of $r$ whose $y$-values do not occur in $\delta_t$, and such rows return no results when the join with $\delta_t$ over the same attributes is performed later on. We get an expression $((r \ltimes_y \delta_t) \sim_v s) \bowtie_w \delta_t$ equivalent to the expression $m_{rs} \bowtie_w \delta_t$ in the original integration plan $p_t$. Because the transformations above eliminated the materialization $m_{rs}$ from $p_t$, it is possible to eliminate it from the remaining plans $p_r$ and $p_s$. Hence, the data integration plans for the integration expression $(r \sim_v s) \bowtie_w t$ are as follows.

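$p_r$: $\mathit{result} := \mathit{result} \oplus (\delta_r \sim_v s) \bowtie_w t$;

$p_s$: $\mathit{result} := \mathit{result} \oplus (r \ltimes_v \delta_s) \bowtie_w t$;

$p_t$: $\mathit{result} := \mathit{result} \oplus (((r \ltimes_y \delta_t) \sim_v s) \bowtie_w \delta_t)$;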
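The equivalence behind the plan $p_t$ can be illustrated on a toy instance. This is a sketch under assumptions, not part of the original method: the helpers `join`, `semijoin` and `antijoin` are hypothetical list-of-dicts implementations, and the attribute names are chosen so that r and s share the attribute `x` (playing the role of $v$) while r and t share the attribute `y`.

```python
def join(left, right, on):
    """Nested-loop join of two relations (lists of dicts) on attributes `on`."""
    return [{**a, **b} for a in left for b in right
            if all(a[k] == b[k] for k in on)]

def semijoin(left, right, on):
    """Rows of `left` that have a partner in `right` on attributes `on`."""
    keys = {tuple(b[k] for k in on) for b in right}
    return [a for a in left if tuple(a[k] for k in on) in keys]

def antijoin(left, right, on):
    """Rows of `left` that have no partner in `right` on attributes `on`."""
    keys = {tuple(b[k] for k in on) for b in right}
    return [a for a in left if tuple(a[k] for k in on) not in keys]

# Assumed sample data: r(x, y), s(x, u) and one packet delta_t of t(y, d).
r = [{"x": 1, "y": 10}, {"x": 2, "y": 20}, {"x": 3, "y": 30}, {"x": 4, "y": 10}]
s = [{"x": 2, "u": "a"}, {"x": 4, "u": "b"}]
delta_t = [{"y": 10, "d": "p"}, {"y": 20, "d": "q"}]

# Original plan p_t: uses the materialization m_rs = r antijoin s.
m_rs = antijoin(r, s, ["x"])
original = join(m_rs, delta_t, ["y"])

# Branch kept by the transformation: ((r semijoin delta_t) antijoin s) join delta_t.
kept = join(antijoin(semijoin(r, delta_t, ["y"]), s, ["x"]), delta_t, ["y"])

# Branch dropped by the transformation: it can never contribute a row.
dropped = join(antijoin(antijoin(r, delta_t, ["y"]), s, ["x"]), delta_t, ["y"])
```

On this data `kept` equals `original` and `dropped` is empty, so the packet $\delta_t$ can be processed against $r$ and $s$ directly.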

Next, we discuss how to eliminate materialization in a more general case. Consider an argument $r$ whose integration plan uses a materialization. If $r$ has at least one common attribute with a modification $\delta_s$ of another argument $s$ of the integration expression then it is always possible to replace $r$ with $(r \ltimes_v \delta_s) + (r \sim_v \delta_s)$. Then it is possible to apply the distributivity of the concatenation operation and to eliminate one of the components of the expression later on, as in the example above. The problem is how to find when such a transformation is possible. Consider an implementation of an online processing plan where an operation $p_z(\delta(x), m(y))$ acts on a modification $\delta(x)$ and a materialization $m(y)$ such that $x \cap y = z$ and $z \neq \emptyset$. It is possible to eliminate the materialization $m(y)$ from the online processing plan when there exists an argument $s(v)$ of a subexpression of the materialization $m(y)$ (see Figure 2) such that:

Figure 2: Elimination of materialization $m(y)$.

(i) $v \cap x \neq \emptyset$ and

(ii) $(v \cap x) \subseteq z$.
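The two conditions can be tested mechanically on attribute sets. The sketch below is only an illustration: `substitution_candidates` is a hypothetical helper, condition (ii) is read as set inclusion (an assumption about the intended meaning), and the schemas are those of the expression $(r(xy) \sim_y s(yz)) \bowtie_y t(yz)$ used as an example later in this section.

```python
def substitution_candidates(x, y, arguments):
    """Return the names of arguments s(v) that meet conditions (i) and (ii).

    x: attributes of the modification delta(x)
    y: attributes of the materialization m(y)
    arguments: mapping from an argument name to its attribute set v
    """
    z = x & y
    result = []
    for name, v in arguments.items():
        common = v & x
        # (i) v and x share attributes; (ii) read here as: they all belong to z.
        if common and common <= z:
            result.append(name)
    return result

candidates = substitution_candidates(
    x={"y", "z"},                                   # delta_t(yz)
    y={"x", "y"},                                   # m_rs(xy)
    arguments={"r": {"x", "y"}, "s": {"y", "z"}},   # r(xy), s(yz)
)
```

Under this reading only $r(xy)$ qualifies, because the attributes that $s(yz)$ shares with $\delta_t(yz)$ include $z$, which is not among the attributes of the operation.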

Elimination of materialization $m(y)$ is performed by the substitution of $s(v)$ in a subexpression of the materialization with $(s(v) \ltimes_{v \cap x} \delta(x)) + (s(v) \sim_{v \cap x} \delta(x))$. Then the processing of a modification $\delta(x)$ triggers the computations along a path that leads from the processing of the semijoin and antijoin of $s(v)$ with $\delta(x)$ to the materialization $m(y)$. Unfortunately, this does not solve the problem from a performance point of view. The substitution of $s(v)$ with a concatenation of the semijoin and antijoin of $s(v)$ with a modification $\delta(x)$ still provides a complete $s(v)$ and requires the reprocessing of the entire materialization $m(y)$. In fact, when a modification $\delta(x)$ is small then only a fraction of the materialization $m(y)$ affects the result of $p_z(\delta(x), m(y))$. A solution is then to recompute only those components of the materialization that affect the result of the operation $p_z$. If it is possible to eliminate either the semijoin of $s(v)$ with $\delta(x)$ or the antijoin of $s(v)$ with $\delta(x)$ then only a subset of the argument $s(v)$ is involved in the processing. Next, we show a formal method that finds when a materialization can be removed and what transformations of the arguments of a relational implementation of a data integration plan are required to do so.

Let $T_e$ be a syntax tree of a relational algebra expression $e(r_1, \ldots, r_n)$ built over the operations of set difference, join, semijoin, and antijoin. Let a node $n_p$ in $T_e$ represent a binary operation $p_v(r(x), s(y))$ such that $v = x \cap y$. Labelling of $T_e$ is performed in the following way.

(i) The edge between a leaf node that represents an argument $r(x)$ and its parent node can be labeled with $z_r$ where $z \subseteq x$ and $z \neq \emptyset$.

(ii) If a node $n_p$ in $T_e$ represents an operation $p$ that produces a result $r(x)$ and a "child" edge of the node $n_p$ is labeled with one of the symbols $z$, $z-$, $-z$, $z\ast$ then the "parent" edge of $n_p$ can be labeled with the symbol located in the row indicated by the label of the "child" edge and the column indicated by the operation $p_v$ in Table 1.

Labelling of syntax tree is performed to discover the

types of coincidences between the z-values of one or

more arguments of relational algebra expression. The


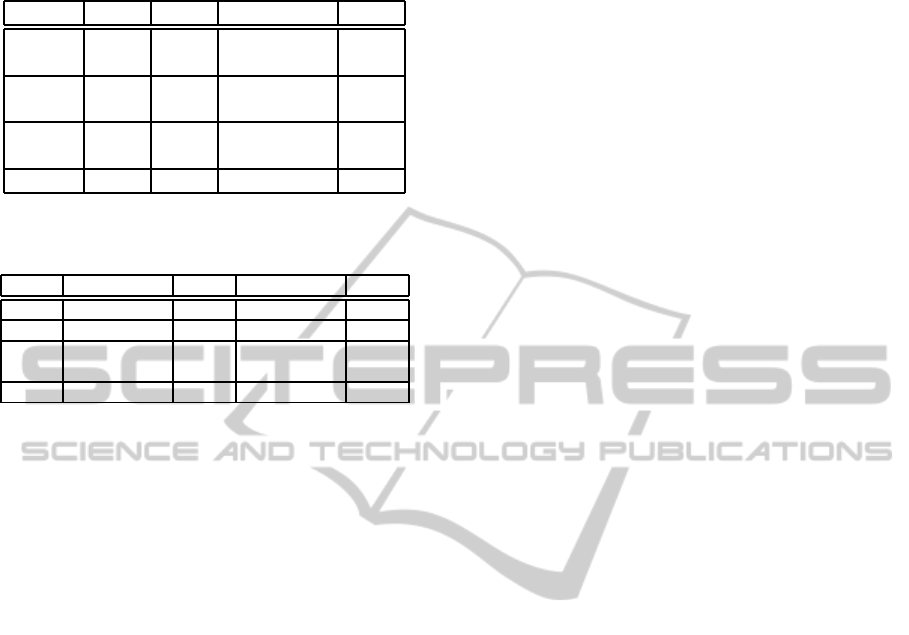

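Table 1: The labelling rules for syntax trees of relational algebra expressions.

| Label of "child" edge | $\bowtie_v$ | (left) $\sim_v$ | $\sim_v$ (right) | (left) $\ltimes_v$ | $\ltimes_v$ (right) | (left) $-$ | $-$ (right) |
|---|---|---|---|---|---|---|---|
| $z$ | $z-$ | $z-$ | $-(z \cap v)$ | $z-$ | $(z \cap v)-$ | $z-$ | $-z$ |
| $z-$ | $z-$ | $z-$ | $(z \cap v)\ast$ | $z-$ | $(z \cap v)-$ | $z-$ | $z\ast$ |
| $-z$ | $-z$ | $-z$ | $(z \cap v)\ast$ | $-z$ | $(z \cap v)\ast$ | $-z$ | $z\ast$ |
| $z\ast$ | $z\ast$ | $z\ast$ | $(z \cap v)\ast$ | $z\ast$ | $z\ast$ | $z\ast$ | $z\ast$ |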

Figure 3: A labelled syntax tree of online processing plan $p_t$: $\mathit{result} := \mathit{result} \oplus ((r(xy) \sim_y s(yz)) \bowtie_y \delta_t(yz))$.

coincidences and their types are needed to find out if it is possible to remove the materializations and whether their elimination is beneficial.

As an example, consider an integration expression $(r(xy) \sim_y s(yz)) \bowtie_y t(yz)$ and an integration plan $p_t$: $\mathit{result} := \mathit{result} \oplus (m_{rs}(xy) \bowtie_y \delta_t(yz))$; for processing the increments of the argument $t$. The materialization is computed as $m_{rs}(xy) = r(xy) \sim_y s(yz)$. A syntax tree of the plan with the materialization $m_{rs}$ replaced with $r(xy) \sim_y s(yz)$ is given in Figure 3. To eliminate the materialization we try to find the coincidences between the $y$-values of $r(xy)$ and $\delta_t(yz)$ and we perform the labelling of the syntax tree in the way described above. The "parent" edges of the nodes $r(xy)$, $s(yz)$, and $\delta_t(yz)$ obtain the labels $y_r$, $y_s$, and $y_t$. The left "child" edge of the root node obtains a label $y_r-$ indicated by the location in the first row and the second column of Table 1. Moreover, the same edge obtains a label $-y_s$ indicated by the location in the first row and the third column of Table 1. The final labelling of the syntax tree is given in Figure 3.

The interpretations of the labels are the following. A label $y_t$ attached to a "child" edge of the join operation at the root node of the tree indicates that all $y$-values of the argument $\delta_t(yz)$ are processed by the operation. A label $y_r-$ attached to a "child" edge of the same operation indicates that only a subset of the $y$-values of the argument $r(xy)$ and no other $y$-values are processed by the operation. A label $-y_s$ attached to the same edge indicates that none of the $y$-values in $s(yz)$ is included in the result of $r(xy) \sim_y s(yz)$. The above interpretation of the labels $y_r-$ and $y_t$ in the context of a join operation over a set of attributes $y$ means that $y$-values that do not occur in both arguments $r(xy)$ and $\delta_t(yz)$ have no impact on the result of the join operation. It means that $r(xy)$ can be replaced with $r(xy) \ltimes_y \delta_t(yz)$ and $\delta_t(yz)$ can be replaced with $\delta_t(yz) \ltimes_y r(xy)$ without changing the result of the expression. The interpretation of the labels $-y_s$ and $y_t$ in the context of the join operation allows for the elimination from $\delta_t(yz)$ of all $y$-values that are included in $s(yz)$, because these values have no impact on the join operation. It means that $\delta_t(yz) \ltimes_y r(xy)$ can be replaced with $(\delta_t(yz) \ltimes_y r(xy)) \sim_y s(yz)$ without changing the result of the expression. It is also possible to replace $s(yz)$ with $s(yz) \ltimes_y \delta_t(yz)$ because all $y$-values included in $s(yz)$ and not included in $\delta_t(yz)$ have no impact on the result of the join operation. However, the last modification is questionable from a performance point of view. It definitely speeds up the antijoin operation, but it also delays the join operation because the results of the antijoin operation are larger after the reduction of $s(yz)$.
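The labelling step of this example can be reproduced with a direct encoding of Table 1. The sketch below is an illustration only: the names `COLUMNS`, `TABLE_1` and `parent_label`, and the representation of a label as a pair (kind, attribute set), are assumptions introduced here, not notation from the paper.

```python
# Columns of Table 1: the operation at the parent node and, where it matters,
# whether the labelled "child" edge is its left or its right argument.
COLUMNS = ("join", "anti_left", "anti_right", "semi_left", "semi_right",
           "diff_left", "diff_right")

# Rows of Table 1. Each entry is (kind, restrict): `kind` is one of
# "z", "z-", "-z", "z*"; restrict=True stands for (z ∩ v) in the table.
TABLE_1 = {
    "z":  (("z-", False), ("z-", False), ("-z", True), ("z-", False),
           ("z-", True), ("z-", False), ("-z", False)),
    "z-": (("z-", False), ("z-", False), ("z*", True), ("z-", False),
           ("z-", True), ("z-", False), ("z*", False)),
    "-z": (("-z", False), ("-z", False), ("z*", True), ("-z", False),
           ("z*", True), ("-z", False), ("z*", False)),
    "z*": (("z*", False), ("z*", False), ("z*", True), ("z*", False),
           ("z*", False), ("z*", False), ("z*", False)),
}

def parent_label(kind, attrs, column, v):
    """Label of the "parent" edge for a child edge labelled (kind, attrs)."""
    new_kind, restrict = TABLE_1[kind][COLUMNS.index(column)]
    return new_kind, (attrs & v if restrict else attrs)

# The antijoin r(xy) ~_y s(yz) of the example: both leaf edges start as "y".
y = frozenset({"y"})
label_r = parent_label("z", y, "anti_left", v=y)    # r is the left argument
label_s = parent_label("z", y, "anti_right", v=y)   # s is the right argument
```

The two computed labels correspond to $y_r-$ and $-y_s$ on the left "child" edge of the root node, as described in the text.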

The labelling and the possible replacements of arguments are summarized in Tables 2 and 3. The interpretations of the tables are the following. Consider a relational algebra expression $e(r, r_1, \ldots, r_n, s)$ such that an operation $p_x$ is included in the root node of its syntax tree $T_e$. If the operation $p_x$ is either a join or a semijoin operation then the possible replacements of the arguments $r$ and $s$ are included in Table 2. If the operation $p_x$ is either an antijoin or a set difference then the possible replacements are included in Table 3. The replacements of the arguments $r$ and $s$ over a common set of attributes $z \subseteq x$ can be found after the labelling of both paths from the leaf nodes representing the arguments $r$ and $s$ towards the root node of $T_e$ labeled with $p_x$. The replacements of the arguments $r$ and $s$ are located at the intersection of the row labeled with the label of the left "child" edge and the column labeled with the label of the right "child" edge of the root node. For instance, consider a subtree of the arguments $s$ and $r$ such that an operation $\bowtie_x$ is in the root node of the subtree. If the left "child" edge of the root node is labeled with $-z_r$, and the right "child" edge of the root node is labeled with $z_s\ast$ then Table 2 indicates that it is possible to replace the contents of the argument $s$ with the expression $s \sim_z r$. A sample justification of the replacement included in Table 2 at the intersection of the row labeled with $-z_r$ and the column labeled with $z_s\ast$ is the following. Let $T_e$ be a syntax tree of a relational algebra expression $e(r, r_1, \ldots, r_n, s)$ built of the operations of join, semijoin, antijoin, and set difference, and such that the root node of the tree is labeled with either $\bowtie_x$ or $\ltimes_x$ and


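Table 2: The replacements of arguments in integration plans.

| $\bowtie_x$, $\ltimes_x$ | $z_s$ | $z_s-$ | $-z_s$ | $z_s\ast$ |
|---|---|---|---|---|
| $z_r$ | n/a | $r \ltimes_z s$, $s \ltimes_z r$ | $r \sim_z s$, $s \ltimes_z r$ | $s \ltimes_z r$ |
| $z_r-$ | $r \ltimes_z s$, $s \ltimes_z r$ | $r \ltimes_z s$, $s \ltimes_z r$ | $r \sim_z s$, $s \sim_z r$ | $s \ltimes_z r$ |
| $-z_r$ | $s \sim_z r$, $r \ltimes_z s$ | $s \sim_z r$, $r \sim_z s$ | either $s \sim_z r$ or $r \sim_z s$ | $s \sim_z r$ |
| $z_r\ast$ | $r \ltimes_z s$ | $r \ltimes_z s$ | $r \sim_z s$ | none |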

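Table 3: The replacements of arguments in integration plans.

| $\sim_x$, $-$ | $z_s$ | $z_s-$ | $-z_s$ | $z_s\ast$ |
|---|---|---|---|---|
| $z_r$ | n/a | $s \ltimes_z r$ | $s \ltimes_z r$ | $s \ltimes_z r$ |
| $z_r-$ | $s \ltimes_z r$ | $s \ltimes_z r$ | $s \ltimes_z r$ | $s \ltimes_z r$ |
| $-z_r$ | either $s \sim_z r$ or $r \sim_z s$ | $s \sim_z r$ | either $s \sim_z r$ or $r \sim_z s$ | $s \sim_z r$ |
| $z_r\ast$ | $r \sim_z s$ | $r \sim_z s$ | none | none |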

its left "child" edge is labeled with $-z_r$ and its right "child" edge is labeled with $z_s\ast$, $z \subseteq x$. Then, for any values of the arguments $r, r_1, \ldots, r_n, s$, we have $e(r, r_1, \ldots, r_n, s) = e(r, r_1, \ldots, r_n, (s \sim_z r))$. A label $-z_r$ attached to the left "child" edge of the operation $\bowtie_x$ or $\ltimes_x$ means that none of the $z$-values of the argument $r$ is included in the left argument of the join or semijoin. Then, these $z$-values can be removed from the argument $s$ because they will never participate in the join or semijoin operation. On the other hand, we cannot replace the argument $r$ because the label $z_s\ast$ means that some new $z$-values can be added to the original set of $z$-values in $s$.
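The justification above can be checked on a concrete expression. The following sketch rests on several assumptions: the expression $(a \sim_z r) \bowtie_z (b \sim_z (s \bowtie_z c))$ and the relations `a`, `b`, `c` are hypothetical, chosen so that, by Table 1, the left "child" edge of the root carries $-z_r$ and the right "child" edge carries $z_s\ast$; `join` and `antijoin` are illustrative list-of-dicts helpers.

```python
def join(left, right, on):
    """Nested-loop join of two relations (lists of dicts) on attributes `on`."""
    return [{**a, **b} for a in left for b in right
            if all(a[k] == b[k] for k in on)]

def antijoin(left, right, on):
    """Rows of `left` that have no partner in `right` on attributes `on`."""
    keys = {tuple(b[k] for k in on) for b in right}
    return [a for a in left if tuple(a[k] for k in on) not in keys]

# Assumed sample data; every relation has the attribute z plus one of its own.
a = [{"z": 1, "p": "a1"}, {"z": 2, "p": "a2"}, {"z": 3, "p": "a3"}]
r = [{"z": 2, "q": "r2"}, {"z": 5, "q": "r5"}]
b = [{"z": k, "u": f"b{k}"} for k in (1, 2, 3, 4)]
s = [{"z": 2, "w": "s2"}, {"z": 3, "w": "s3"}, {"z": 5, "w": "s5"}]
c = [{"z": 2, "k": "c2"}, {"z": 3, "k": "c3"}]

def right_branch(s_arg):
    """b ~_z (s join_z c): s reaches the root through a join and an antijoin."""
    return antijoin(b, join(s_arg, c, ["z"]), ["z"])

def e(s_arg):
    """(a ~_z r) join_z (b ~_z (s join_z c)) with s supplied as a parameter."""
    return join(antijoin(a, r, ["z"]), right_branch(s_arg), ["z"])

# Replacement suggested by Table 2 for the labels -z_r and z_s*.
reduced_s = antijoin(s, r, ["z"])
```

On this data `e(s)` and `e(reduced_s)` return the same rows although the right branch itself changes, which is the behaviour the replacement $s \sim_z r$ relies on.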

Let $e_m(r_1, \ldots, r_n)$ be an expression that defines a materialization $m$ in an integration plan for the increments $\delta_s$ of an argument $s$. Elimination of the materialization $m$ from the integration plan for $\delta_s$ is possible when some of the arguments $r_1, \ldots, r_n$ can be replaced with subexpressions involving $\delta_s$ such that the syntax tree of $e'_m(r_1, \ldots, r_n, \delta_s)$ does not contain a subexpression that does not involve $\delta_s$. In other words, we try to replace some of the arguments in the expression that defines a materialization such that the entire expression can be recomputed with the argument $\delta_s$ and no subexpression exists that does not involve $\delta_s$.
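This condition can be phrased as a simple check on a syntax tree. The sketch below is a hedged illustration: trees are nested tuples `(operation, left, right)` with argument names as leaves, the function names are hypothetical, and "subexpression" is taken to mean an operation node (an assumption, since a single stored argument is not a materialization).

```python
def involves(tree, name):
    """True when the leaf `name` occurs somewhere in `tree`."""
    if isinstance(tree, str):
        return tree == name
    _, left, right = tree
    return involves(left, name) or involves(right, name)

def needs_no_materialization(tree, name):
    """True when every operation node of `tree` has the leaf `name` below it."""
    if isinstance(tree, str):
        return True  # a leaf is an argument, not a computed subexpression
    _, left, right = tree
    return (involves(tree, name)
            and needs_no_materialization(left, name)
            and needs_no_materialization(right, name))

# (r ~ s) join delta_t: the antijoin does not involve delta_t.
original = ("join", ("antijoin", "r", "s"), "delta_t")

# ((r semijoin delta_t) ~ s) join delta_t: every operation involves delta_t.
rewritten = ("join",
             ("antijoin", ("semijoin", "r", "delta_t"), "s"),
             "delta_t")
```

For the antijoin example discussed earlier, the check fails for the original expression and succeeds once $r$ is replaced with $r \ltimes_y \delta_t$.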

An interesting problem is whether any materialization can be removed using the replacements described above. The analysis of Tables 2 and 3 and of the structural properties of relational implementations of integration plans reveals three cases when materializations cannot be removed through the replacements.

(1) The operation at the root node of the syntax tree of an online processing plan is a join operation and both its left and right "child" edges are labeled with $z_r\ast$ and $z_s\ast$ respectively, see the location in the lower right corner of Table 2.

(2) The operation at the root node of the syntax tree of an online processing plan is either a join operation or a semijoin operation, a materialization is the first argument of the operation and the right "child" edge is labeled with $z_s\ast$. This is because all replacements in the last column of Table 2 are applicable to the second argument of the operation, which is obtained from the processing of a modification and not from a materialization.

(3) The operation at the root node of the syntax tree of an online processing plan is either an antijoin operation or a difference operation, a materialization is the second argument of the operation and the left "child" edge of the node is labeled with $z_r\ast$. This is because all replacements in the last row of Table 3 are applicable to the first argument of the operation, which is obtained from the processing of a modification and not from a materialization.
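The three cases translate into a short decision function. This is a sketch for illustration only: the function name `blocked_by`, the string encodings of operations and label kinds, and the `materialization_side` parameter are assumptions introduced here.

```python
def blocked_by(root_op, left_label, right_label, materialization_side):
    """Return the number (1-3) of the case that prevents elimination, or None.

    root_op: "join", "semijoin", "antijoin" or "difference"
    left_label, right_label: label kinds "z", "z-", "-z" or "z*"
    materialization_side: "left" or "right" argument of the root operation
    """
    # Case (1): join with both "child" edges labelled with a star.
    if root_op == "join" and left_label == "z*" and right_label == "z*":
        return 1
    # Case (2): join or semijoin, materialization first, right edge starred.
    if (root_op in ("join", "semijoin")
            and materialization_side == "left" and right_label == "z*"):
        return 2
    # Case (3): antijoin or difference, materialization second, left edge starred.
    if (root_op in ("antijoin", "difference")
            and materialization_side == "right" and left_label == "z*"):
        return 3
    return None
```

For the plan of Figure 3, where the root is a join, the materialization is the left argument and the edges carry $y_r-$ and $y_t$, the function returns `None`, in line with the elimination shown earlier.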

6 SUMMARY, CONCLUSIONS, AND FUTURE WORK

This work addresses the problem of static optimization of data integration plans in global information systems. The users' requests submitted at a central site are decomposed into individual requests and simultaneously submitted for processing at the remote sites. We show how data integration plans for the increments of the individual arguments can be derived from a data integration expression and we propose a number of static optimization techniques for data integration plans implemented as relational algebra expressions.

The technique of immediate processing of data packets as they are received from the remote sites allows for better utilization of the data processing resources available at a central site. The continuous processing of small portions of data transmitted from the remote sites eliminates the idle time when a data integration system has to wait for the transmission of an entire argument. Decomposition of a data integration expression into individual plans allows for more precise optimization of data integration and it also allows for better scheduling of data processing on multiprocessor systems. Identification of coincidences between the arguments of a data integration expression leads to the elimination of materializations from data integration plans and to a reduction of the processing load when materializations change frequently.


A number of problems remain to be solved. Elimination of materialization from data integration plans depends on the parameters of transmission of the arguments, and one problem is how to predict these parameters at the static optimization phase. Another interesting problem is the identification of all materializations that can be eliminated at a given moment in time and the scheduling of the replacements in a process of online data integration. The other problems include the derivation of more sophisticated systems of id-operations from systems of binary operations different from the relational algebra, e.g. a system including aggregation operations, further investigation of the properties of data integration plans, and more advanced data integration algorithms where the application of a particular online plan depends on what increments of data are available at the moment.

REFERENCES

Amsaleg, L., Franklin, J., and Tomasic, A. (1998). Dynamic query operator scheduling for wide-area remote access. Journal of Distributed and Parallel Databases, 6:217–246.

Antoshenkov, G. and Ziauddin, M. (2000). Query processing and optimization in Oracle Rdb. VLDB Journal, 5(4):229–237.

Avnur, R. and Hellerstein, J. M. (2000). Eddies: Continuously adaptive query processing. In Proceedings of the 2000 ACM SIGMOD International Conference on Management of Data, pages 261–272.

Getta, J. R. (2000). Query scrambling in distributed multidatabase systems. In 11th Intl. Workshop on Database and Expert Systems Applications, DEXA 2000.

Getta, J. R. (2005). On adaptive and online data integration. In Intl. Workshop on Self-Managing Database Systems, 21st Intl. Conf. on Data Engineering, ICDE'05, pages 1212–1220.

Getta, J. R. (2006). Optimization of online data integration. In Seventh International Conference on Databases and Information Systems, pages 91–97.

Getta, J. R. and Vossough, E. (2004). Optimization of data stream processing. SIGMOD Record, 33(3):34–39.

Gounaris, A., Paton, N. W., Fernandes, A. A., and Sakellariou, R. (2002). Adaptive query processing: A survey. In Proceedings of 19th British National Conference on Databases, pages 11–25.

Haas, P. J. and Hellerstein, J. M. (1999). Ripple joins for online aggregation. In SIGMOD 1999, Proceedings ACM SIGMOD Intl. Conf. on Management of Data, pages 287–298.

Ives, Z. G., Florescu, D., Friedman, M., Levy, A. Y., and Weld, D. S. (1999). An adaptive query execution system for data integration. In Proceedings of the 1999 ACM SIGMOD International Conference on Management of Data, pages 299–310.

Ives, Z. G., Halevy, A. Y., and Weld, D. S. (2004). Adapting to source properties in processing data integration queries. In Proceedings of the 2004 ACM SIGMOD International Conference on Management of Data.

Kabra, N. and DeWitt, D. J. (1998). Efficient mid-query re-optimization of sub-optimal query execution plans. In Proceedings of the 1998 ACM SIGMOD International Conference on Management of Data.

Mokbel, M. F., Lu, M., and Aref, W. G. (2002). Hash-merge join: A non-blocking join algorithm for producing fast and early join results.

Ozcan, F., Nural, S., Koksal, P., Evrendilek, C., and Dogac, A. (1997). Dynamic query optimization in multidatabases. Bulletin of the Technical Committee on Data Engineering, 20:38–45.

Raman, V., Deshpande, A., and Hellerstein, J. M. (2003). Using state modules for adaptive query processing. In Proceedings of the 19th International Conference on Data Engineering, pages 353–.

Urhan, T. and Franklin, M. J. (2000). XJoin: A reactively-scheduled pipelined join operator. IEEE Data Engineering Bulletin, 23(2):27–33.

Urhan, T. and Franklin, M. J. (2001). Dynamic pipeline scheduling for improving interactive performance of online queries. In Proceedings of International Conference on Very Large Databases, VLDB 2001.
