A QUANTITATIVE EXAMINATION OF THE IMPACT

OF FEATURED ARTICLES IN WIKIPEDIA

Antonio J. Reinoso, Jesus M. Gonzalez-Barahona

GSyC/Libresoft, Universidad Rey Juan Carlos, Madrid, Spain

Roc´ıo Mu˜noz-Mansilla

Automatic Control and Computer Science Department, UNED, Madrid, Spain

Israel Herraiz

Department of Applied Mathematics and Computing, Technical University of Madrid, Madrid, Spain

Keywords:

Wikipedia, Featured articles, Usage patterns, Traffic characterization, Quantitative analysis.

Abstract:

This paper presents a quantitative examination of the impact of the presentation of featured articles as quality

content in the main page of several Wikipedia editions. Moreover, the paper also presents the analysis per-

formed to determine the number of visits received by the articles promoted to the featured status. We have

analyzed the visits not only in the month when articles awarded the promotion or were included in the main

page, but also in the previous and following ones. The main aim for this is to assess the attention attracted

by the featured content and the different dynamics exhibited by each community of users in respect to the

promotion process. The main results of this paper are twofold: it shows how to extract relevant information

related to the use of Wikipedia, which is an emerging research topic, and it analyzes whether the featured

articles mechanism achieve to attract more attention.

1 INTRODUCTION

Wikipedia continues to be an absolute success and

stands as the most relevant wiki-based platform. As a

free and on-line encyclopedia, it offers a rich collec-

tion of contents, provided in different media formats

and related to all the areas of knowledge. Undoubt-

edly, the Wikipedia phenomenon constitutes one of

the most remarkable milestones in the evolution of

encyclopedias. In addition, its supporting paradigm,

based in the application of collaborative and coopera-

tive efforts to the production of knowledge, has been

object of a great number of studies and examinations.

This significant relevance has made Wikipedia

to become a subject of increasing interest for the

research community. However, this research usu-

ally concerns quality and reliability aspects about the

Wikipedia contents (Priedhorsky et al., 2007; Olleros,

2008) or examines its growth or evolution (Suh et al.,

2009). By contrast, very few studies have been de-

voted to analyze how Wikipedia users visit and brow-

se the encyclopedia.

This paper presents an analysis aimed to deter-

mine the attention, measured in number of visits,

that featured articles attract when they are included

as quality content in the main pages of different

Wikipedia editions. Furthermore, we have also con-

sidered the differences in traffic to featured articles

during the discussion of their promotion in order to

assess if different communities exhibit different dy-

namics when looking for consensus.

The rest of the article is structured as follows: first

of all, we describe the data sources used in our anal-

ysis as well as the methodology followed to conduct

our work. After this, we present our results prior to

a proper discussion about them. Finally, we propose

some ideas for further work and present our conclu-

sions.

301

J. Reinoso A., M. Gonzalez-Barahona J., Muñoz-Mansilla R. and Herraiz I..

A QUANTITATIVE EXAMINATION OF THE IMPACT OF FEATURED ARTICLES IN WIKIPEDIA.

DOI: 10.5220/0003494903010304

In Proceedings of the 6th International Conference on Software and Database Technologies (ICSOFT-2011), pages 301-304

ISBN: 978-989-8425-76-8

Copyright

c

2011 SCITEPRESS (Science and Technology Publications, Lda.)

2 THE DATA SOURCES

Visits to Wikipedia are issued in the form of URLs

sent from users’ browsers. These URL’s are regis-

tered by the Wikimedia Foundation Squid servers in

the form of log lines after serving the requested con-

tent. Squid servers are a special kind of servers per-

forming web caching which are used by the Wikime-

dia Foundation as the first layer to manage the overall

traffic directed to all its projects. Part of the informa-

tion they register is sent to universities and research

centers interested in its study.

2.1 The Wikimedia Foundation Squid

Subsystem

As a part of their job, Squid systems do log informa-

tion about every request they serve whether the cor-

responding contents stem from their caches or, on the

contrary, are provided by the web servers placed be-

hind them. These log lines are sent in a UDP-packet

stream to our facilities where they are conveniently

stored.

The Wikimedia Foundation Squid servers use a

customized format for generating their log lines.

However, we do not receive all of the registered infor-

mation, basically in consideration to users’ privacy,

but just several fields of the log format. The most

important field we receive is the URL which consti-

tutes the submitted request. In addition, the date of

the request and a field indicating if it caused a write

operation to the database are also included.

2.2 Featured Articles

Featured articles are considered the best articles all

over the Wikipedia. In order to be promoted to this

status, the articles, first, have to be nominated and in-

cluded in an special page as candidates to featured

articles. Usually and prior to the their nomination,

future candidate articles pass through a peer revision

process in which reviewers make suggestions to im-

prove their quality.

Featured article have to meet a set of criteria apart

from the requirements demanded to every Wikipedia

article. These criteria cover from a clear and compre-

hensive writing of the article to a proper structure and

organization. Other aspects such us stability, neutral-

ity as well as length and citation robustness are also

considered.

In what our research is concerned, we analyze the

impact of featured articles in two very different ways.

First, we consider the influence of the promotion of

articles to the featured status in their number of visits.

Then, we also study the impact of the presentation of

a featured article as an example of high quality con-

tent in the main page of some editions of Wikipedia.

Regarding this, our main goal is to determine some

kind of pattern which can serve to model the traffic to

an article after being considered as featured.

3 METHODOLOGY OF THE

STUDY

The analysis presented here is based on a sample

of the Wikimedia Foundation Squid log lines corre-

sponding to two different periods, each consisting in

three months: Mars, April and May in one set, and

September, October and November, in the other one.

As we receive the 1% of all the traffic directed to the

Wikimedia Foundation projects, this results in more

than 8,200 million log lines to process for the consid-

ered months.

This analysis has focused just on the traffic di-

rected to the Wikipedia project and to ensure that the

study involved mature and highly active language edi-

tions, only the requests corresponding to the top-six

visited editions have been considered.

Once the log lines from the Wikimedia Founda-

tion Squid systems have been received in our facil-

ities, they become ready to be analyzed by the tool

developed for this aim: The WikiSquilter project.

The analysis consists on a characterization based on

a parsing process to extract the relevant elements of

information prior to a filtering one according to the

study directives. As a result of both processes, nec-

essary data to conduct a characterization are obtained

and stored in a relational database for further analysis.

Browsing the special pages of each Wikipedia edi-

tion devoted to its featured contents, we obtained the

featured articles promoted during April and October

2009. Moreover, we extracted the featured articles

appearing in the main page during the same months.

Then, we queried the database resulting from the pro-

cessing of the Squid log lines to look for the num-

ber of visits corresponding to those articles during the

aforementioned months as well as during the previous

and the following ones in the aim of finding out what

impact have the two featured mechanisms on the vis-

its that articles get. We did all the analysis shown here

using the GNU R statistical package (R Development

Core Team, 2009).

ICSOFT 2011 - 6th International Conference on Software and Data Technologies

302

Mar Apr May

Avg. num. of visits

0 200 400 600 800

Figure 1: Average number of visits for today’s featured ar-

ticles in the English Wikipedia during April 2009.

4 STATISTICAL ANALYSIS

Before starting to analyze the impact of the featured

articles, we characterized the volume and activity of

each one of the considered Wikipedias. This will

allow to cross correlate the results and conclusions

with the dimension of the population under study. In

this way, we put in decreasing order the considered

Wikipedias by size in number of articles and by num-

ber of received visits. As a result, we obtained that

both ordinations were different. It is interesting the

case of the Spanish Wikipedia, the smallest in size

but the second in number of visits.

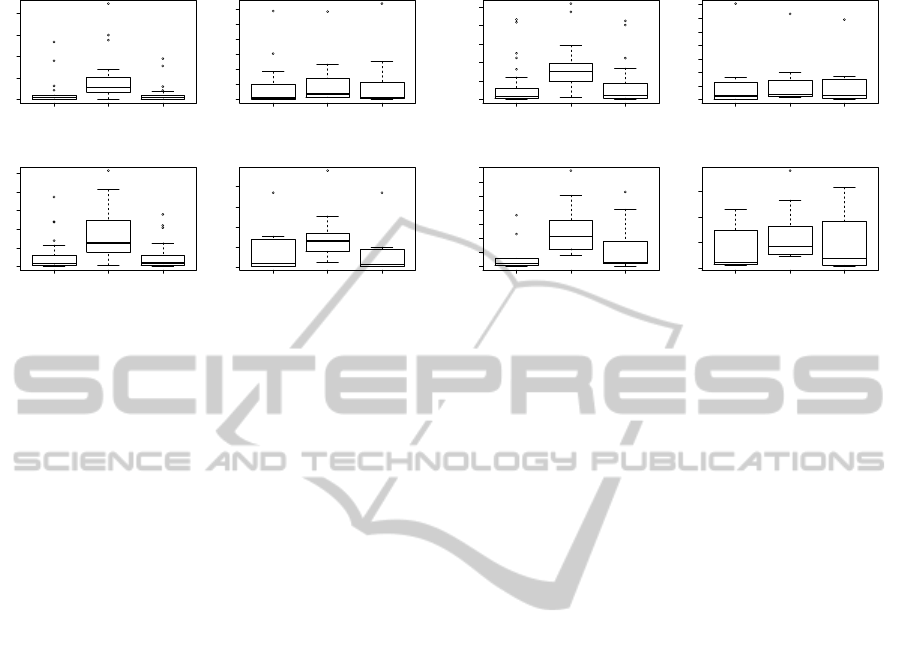

Figure 1 shows the average number of visits (or

mean) for the featured articles presented in the main

page of the English Wikipedia during April. At a first

glance, it seems clear that the so-called “today’s fea-

tured articles” attract a great amount of attention in

the month they are included in the main page, com-

pared with the previous and the following ones.

If we analyze now the same metric applied to the

articles just promoted to the featured status in April

and November, we obtain that those articles do not

receive always the highest number of visits in the

month they are promoted as today’s featured articles

did. This is probably due to the effect of the internal

mechanism for promotion that entails a reviewing, a

nomination and a consensus process. In this way, the

different dynamics exhibited by each community of

users in the promotion process are reflected in the vis-

its that the articles attract.

If we focus on the case of the English Wikipedia,

at a first glance, it seems that the level of visits during

April and October was higher than the corresponding

to the previous and following months, when visits re-

mained quite similar in number. It seems that, in both

periods, the bulk of visits correspond to the months

when articles are displayed in the main pages in all

the Wikipedias except the Spanish one that presents a

similar behavior in all the months.

To find out whether the differences in the median

values for all the samples are negligible or not, we

use a statistical test. Because the median values seem

to be highly skewed in the box, the first step is testing

whether the samples are extracted from a Normally

distributed population. Depending on the result, we

will choose a different statistical test to compare visits

in different months.

In this way, the table 1 shows the results of

the Normality test for the visits to the featured ar-

ticles displayed in the main page of the English

(EN) and , Spanish (ES), German (DE) and French

(FR) Wikipedias during the second considered set of

months. The value of the W column is the Shapiro-

Wilcox statistic, which indicates whether the sample

is normal if and only if the p value is lower than a

certain threshold (0.05 most often). Thus, after ta-

ble 1 only the month of September for the French

Wikipedia and the month of October for the English

Wikipedia present Normal distributions.

Table 1: Normality tests for featured articles displayed in

the main pages. Only the month of September for the

French Wikipedia and the month of October for the English

one seem to be Normal (p < 0.05). The rest of samples are

non-Normal.

Sept Oct Nov

Lang. W p W p W p

DE 0.94 0.64 0.96 0.85 0.91 0.33

EN 0.95 0.19 0.92 0.03 0.95 0.16

ES 0.94 0.63 0.91 0.30 0.97 0.85

FR 0.82 0.03 0.89 0.22 0.87 0.13

This non-normality of the samples implies that

we have to test the median rather than the mean val-

ues, because the mean is highly biased for this kind

of samples. Because of this issue, we decided to

use a Wilcoxon rank-sum test (also known as Mann-

Whitney-Wilcoxon test) to find out whether or not the

appearance of a featured article in the main page im-

plies a greater number of visits to those articles. This

test is not sensitive to the normality of the data.

Table 2: Results of the Wilcoxon rank-sum test for all the

samples. In the English Wikipedia, the month of October

gets more visits (p < 0.05). In the English and German

Wikipedias, the month of October receives more visits. In

the rest, the level of visits is similar. S: September, O: Oc-

tober, N: November.

S / O O / N S / N

Lang U p U p U p

DE 13 0.01 63 0.05 32 0.47

EN 140 0.00 645 0.00 337 0.37

ES 33 0.53 46.5 0.62 36 0.72

FR 25 0.19 52 0.34 38 0.86

A QUANTITATIVE EXAMINATION OF THE IMPACT OF FEATURED ARTICLES IN WIKIPEDIA

303

Mars Apr May

0 1000 2000 3000 4000

English

Visits

Mars Apr May

0 200 400 600 800 1200

Spanish

Visits

Mars Apr May

0 200 400 600 800 1000

German

Visits

Mars Apr May

0 20 40 60 80

French

Visits

Sept Oct Nov

0 500 1000 1500 2000 2500

English

Visits

Sept Oct Nov

0 500 1500 2500 3500

Spanish

Visits

Sept Oct Nov

0 100 300 500 700

German

Visits

Sept Oct Nov

0 100 200 300

French

Visits

Figure 2: Boxplot of the visits to featured articles included in the main pages of the considered Wikipedias.

Table 2 shows the results of the test. The column

labeled U shows the value of the statistic, and the col-

umn p shows the level of significance. These results

indicate that featured articles displayed in the main

pages attracted more visits during October only in the

case of the English Wikipedia.

When examining the promoted articles, none of

the central months attracted a number of visits signif-

icantly higher than the next ones. Again the expla-

nation may reside in the different way of conducting

when developing the promotion process.

4.1 Summary of Results

For the featured articles displayed in the main pages

of the four considered Wikipedias (by number of arti-

cles and by traffic), we have assessed whether their in-

clusion as example of featured content has an impact

on the visits that those articles get. After an statis-

tical analysis comparing the number of visits for the

months right before and right after the month when

the articles appear in the main page, our results in-

dicate that such an impact surely happens for the En-

glish Wikipedia. Interestingly, we found the same im-

pact in the German Wikipedia for the month of April.

On the other hand, our study shows how the different

dynamics involved in the articles’ promotion process

are reflected in very different patterns of visits to the

articles awarded with the featured status.

5 CONCLUSIONS AND FURTHER

WORK

Wikipedia, the largest wiki-based platform in the

world, is a source of information for millions of

people around the world. One of the resources of

Wikipedia to improve the users experience is the fea-

tured status. Best articles are awarded with this status

and they are included in the main pages as a sort of

recognition.

Our results indicate that we can observe that

increased attention only constant in the English

Wikipedia. The German Wikipedia also presents a

significant increase of the visits to their featured arti-

cles shown in the main page in one of the considered

periods. In the case of articles promoted to the fea-

tured status, our results show that there is not a com-

mon pattern of conducting in the promotion process.

This subject clearly deserves an in-depth examination

in the search for common features in all the processes.

REFERENCES

Olleros, F. (2008). Learning to trust the crowd: Some

lessons from wikipedia. In e-Technologies, 2008 In-

ternational MCETECH Conference on, pages 212 –

216.

Priedhorsky, R., Chen, J., Lam, S. T. K., Panciera, K., Ter-

veen, L., and Riedl, J. (2007). Creating, destroying,

and restoring value in wikipedia. In GROUP ’07: Pro-

ceedings of the 2007 international ACMconference on

Supporting group work, pages 259–268, New York,

NY, USA. ACM.

R Development Core Team (2009). R: A Language and

Environment for Statistical Computing. R Foundation

for Statistical Computing, Vienna, Austria. ISBN 3-

900051-07-0.

Suh, B., Convertino, G., Chi, E. H., and Pirolli, P.

(2009). The singularity is not near: slowing growth

of wikipedia. In WikiSym ’09: Proceedings of the 5th

International Symposium on Wikis and Open Collab-

oration, pages 1–10, New York, NY, USA. ACM.

ICSOFT 2011 - 6th International Conference on Software and Data Technologies

304