MOBILE CLOUD COMPUTING ARCHITECTURE FOR

UBIQUITOUS EMPOWERING OF PEOPLE WITH DISABILITIES

Carlos Fernandez-Llatas, Gema Iba˜nez, Pilar Sala, Salvatore F. Pileggi and Juan Carlos Naranjo

TSB-UPV, Universidad Polit´ecnica de Valencia, Valencia, Spain

Keywords:

Cloud Computing, e-Inclusion, Mobile environments, Assistive Tecnologies.

Abstract:

Information and Communication Technologies are more and more present in the modern society. The penetra-

tion of personal devices in worldwide citizens is daily increasing. In addition, the accessibility of those devices

is being more and more improved in order to profit the technology advances to help people with disabilities

in daily life. Nevertheless, the processing capabilities of those personal devices are not enough to cover the

need of intelligence for holistic assistive technologies. This addressesinnovative approaches in which remote

resources can be remotely accessed and consumed by mobile devices . In this paper, a cloud-based architecture

is presented. The CORE infrastructure provides an intelligent and pervasive environment composed of remote

services to assistive applications.

1 INTRODUCTION

For approximately 80 million of Europeans with a

disability, there are major obstacles that put activi-

ties such as travelling out of reach. To break down

the barriers that prevent persons with disabilities from

participating in society on an equal basis it is needed

the creation of smart and personalized inclusion, in-

volving Information and Communication Technolo-

gies (ICT) tools.

Due to their penetration, mobile phones are more

and more used as a tool for create innovativesolutions

within the area of assistive technologies. These de-

vices have increased exponentially their capacity and

process capability in few years. In this way, several

large scale human-centric ubiquitous computing and

smart space projects have been completed during the

last years, like PERSONA (045459, ), ASKIT (Con-

sortium, 2008a) and OASIS (Consortium, 2008b).

Furthermore, modern smart devices are not currently

limited to mobile phone considering the strong and

continuous convergence between mobility and com-

putation: the last generation of relatively low-cost

mobile devices (e.g. phones, tablets, PDAs) is pro-

vided with increased capabilities in terms of computa-

tion and data-storage as well as with a set of advanced

sensors.

To profit the potential of those devices, the ac-

cessibility in mobile phones is an interesting prob-

lem to solve. This allows the creation of more and

more intelligent applications that assists the citizen

with disabilities in mobile scenarios. Design for All

or Universal Design (Story et al., 1998) constitutes an

approach for building modern applications that need

to accommodate for heterogeneity in user character-

istics, devices and contexts of use. However, one

of the main difficulties encountered by developers is

the general lack of indication on how to instantiate

its principles. Universal Design does not necessarily

solve all accessibility problems, it does incorporate a

human factors (user-centred approach) to producing

products, so that they can be used by as many individ-

uals as possible regardless of age, abilities, skills, re-

quirements, situations, and preferences. Therefore, a

critical property of interactive artifacts becomes their

capability for intelligent adaptation and personaliza-

tion, their ability to communicate in a common open

area.

Although the increasing of capabilities of personal

devices, there is not enough in them for the current

processing needs of last generation assistive technolo-

gies. This is because the high level of personalization

and the high complexity of intelligent services that

are claimed by people with disabilities. To solve this

problem, it is needed to create processing environ-

ments that provide more intelligence to mobile per-

sonal use cases.

By a technological point of view, these environ-

ments haveto be designed according to a high-flexible

model that allows a fundamental dynamism respect to

377

Fernandez-Llatas C., Ibañez G., Sala P., F. Pileggi S. and Carlos Naranjo J..

MOBILE CLOUD COMPUTING ARCHITECTURE FOR UBIQUITOUS EMPOWERING OF PEOPLE WITH DISABILITIES.

DOI: 10.5220/0003607003770382

In Proceedings of the 6th International Conference on Software and Database Technologies (IWCCTA-2011), pages 377-382

ISBN: 978-989-8425-76-8

Copyright

c

2011 SCITEPRESS (Science and Technology Publications, Lda.)

concrete applications/services as well as to different

business models (Foster et al., 2008).

Virtual environments based on scalable service

models appear at the moment as a high competitive

solution that, under the assumption of always con-

nected devices and relatively high bandwidth, could

be the most realistic and effective approach in order

to enable complex services on mobile devices.

In this paper, an architecture aimed to provide a

mobile solution to the distribution of services needed

by assistive technologies based on the Cloud Comput-

ing paradigm is presented.

The paper is structured in three main parts: first

an overview at the most relevant aspects of assistive

technologies, than a brief analysis of main advantages

of cloud technologies and finally a ”big picture” of the

proposed infrastructure are proposed.

2 ASSISTIVE TECHNOLOGIES

ICTs are commonly used to empower impaired peo-

ple in their daily life. In this way, the concept of as-

sistive technologies is defined (S et al., 2009). As-

sistive technologies are solutions to provide disabled

people with assistive, adaptive and rehabilitative de-

vices. These framework promotes the independence

by enabling people to perform common tasks that are

not able to perform by themselves of had a great dif-

ficulty to accomplish them.

To achievethis, Assistive Technologies, must, first

of all, be able to gather all the information available

about the user that will be the projection of the user

on the system. This is the concept of context (Preuve-

neers, 2010). In this framework, context can be de-

fined as any information that can be used to explain

the situation that is relevant to the interaction between

the users and the application. In this approach, the

key is to automatically determine whether observed

behavioral cues share a common cause - for exam-

ple, whether the mouth movements and audio signals

complement to indicate an active known or unknown

speaker (how, who, where) and whether his or her fo-

cus of attention is another person or a computer (what,

why).

The Context data can be gathered not only from

the user directly but also from the ambient. Existing

localization techniques will be combined (fused) with

information coming from the vision sensors in order

to track a person inside an apartment or any other

equipped enviroment. A person in the line of sight

of a vision sensor is located with great precision: one

knows in which room he/she is, even in which part

of the room, given the angle of the camera. A Wire-

less Sensor Network localization algorithm can use

this information as a starting point for tracking some-

one in places out of the sight of any vision sensor.

When the subject enters the field of sight of a visual

device again, the information is dispatched and used

to correct an eventual error of the radio-based local-

ization algorithm. This scheme will follow a mobile

device-centred approach.

An assistive application must not only gather

the information of the context but also be aware of

them and react to specific situations. This is the

mission of Context-Awareness systems (Preuveneers,

2010). Context-awareness is a very important aspect

of the emerging pervasive and autonomic computing

paradigm. The efficient management of contextual

information requires detailed and thorough modeling

along with specific processing and inference capabil-

ities. Mobile nodes that know more about the user

context are able to function efficiently and transpar-

ently adapt to the current user situation. Data fu-

sion combines the information originating from dif-

ferent sources. It is one of the primary elements of

modern tracking techniques. Its objective is to maxi-

mize the useful informationand make it more reliable,

obtain more efficient data and information represen-

tation, and detect higher-order relationships between

different data types.

Interactive and affective behavior may involve and

modulate all human communicativesignals: facial ex-

pression, speech, vocal intonation, body posture and

gestures, hand gesticulation, non-linguistic vocal out-

bursts, such as laughter and sighs, and physiological

reactions, like heartbeat and clamminess. Sensing and

analysis of all these modalities have improved signif-

icantly in the recent years. Vision-based technologies

for facial features, head, hand and body tracking have

advanced significantly with sequential state estima-

tion approaches, as for example Kalman (Chui and

Chen, 1987) and particle filtering, which reduced the

sensitivity of the detection and tracking schemes to

occlusion, clutter, and changes in illumination.

Recent advent of non-intrusive sensors and wear-

able computers, which promise less invasive physi-

ological sensing, opened up possibilities for includ-

ing tactile modality into automatic analyzers of hu-

man behavior. However, virtually all technologies for

sensing and analysis of different human communica-

tive modalities and for detection and tracking of hu-

man behavioral cues have been trained and tested us-

ing audio and/or video recordingsof posed, controlled

displays. Hence, these technologies, like the ones de-

veloped in FP6 AMI and CHIL projects (explained

below), are, in principle, inapplicable for sensing,

tracking, and analysis of human behavioural cues oc-

ICSOFT 2011 - 6th International Conference on Software and Data Technologies

378

curring in spontaneous displays (as opposed to posed

displays) of human interactive and affective behavior.

More specifically, it will be developed a mutually in-

formed face detector,facial feature tracker, body parts

tracker, head pose estimator, and body pose estima-

tor, which can be used for processing subtle human

behavior typical for real-world scenarios.

Mobile phones are a suitable entry point for as-

sistive applications in mobile scenarios (EMB, 2010).

Mobile devices can be connected to Personal Area

Network (PAN) of the user as well as to the Local

Area Network (LAN) of the ambient in order to gather

the required data to fill the context. Nevertheless, the

execution of complex data fusion and reasoning tech-

niques are unaffordable by the limited processing ca-

pability of current mobile devices and must be per-

formed in external servers.

3 CLOUD COMPUTING

TECHNOLOGY

Cloud Computing (Miller, 2008) is a technological

solution aimed to provide remote computational so-

lutions (normally on demand) through computer net-

works.

Cloud technologies are more and more present in

real systems to provide them a more efficient way to

build scalable systems. Cloud resources can be dy-

namically changed. These resources are extremely

dynamic by the hardware point of view. Available

or assigned resources could be increased in order to

make the system able to deal with great amounts of

requests in certain moments and decreased on the op-

posite case.

This allows the systems to be more efficient

and scalable as well as assuring potential benefits

by power consumption point of view (green ap-

proach) (Baliga et al., 2011).

The economic impact of cloud approach to dis-

tributed systems is one of the key aspects for the ef-

fective realization of commercial infrastructures: re-

sources can be consumed on demand (the user only

pays for what he is really consuming) as well as ac-

cording to a great number of business models (Chang

et al., 2010).

Other advantage of cloud computing is its flexi-

bility respect to the information storage model and,

more in general, respect to the independence of appli-

cations from access devices. Cloud servers proposes

an interesting and competitive cost model based on

a scalable approach: they are maintained by IT ex-

perts and there are no need of IT personnel in enter-

prises to be constantly worried about constant server

updates and other computing issues, reducing the cost

of maintaining the system being, in this case, more

sustainable and more protected to hacker attacks.

In addition, the cloud is always available on inter-

net and people can access information wherever they

are.

In our problem, assistive technologies can need

a different bandwidth depending on the kind of ser-

vices. At the same time, an assistive platform re-

quires the efficient coordination and cooperation of

contextual services: as the service provider is the ba-

sic stakeholder, the infrastructure is the technologic

core in order to assure an adequate quality of experi-

ence.

4 MOBILE CLOUD ASSISTIVE

ENVIRONMENTS

According with the need of Assistive Technologies in

mobile environments, the data collected by the per-

sonal system must be processed to create intelligence

in the context-aware system to allow the applications

to react to the demands of users.

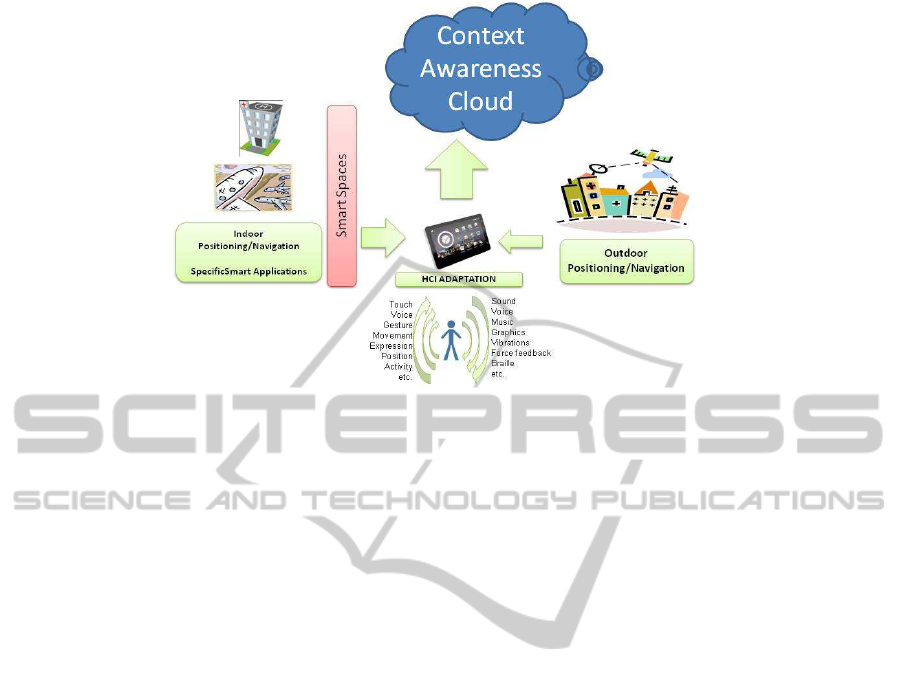

Figure 1 summarize the needs of mobile assis-

tive environments. The mobile device is in charge

of collect the data to populate the context as well as

provide the UI to offer the adequate contents to the

user. This is made through the Human Computer In-

terface(HCI). The HCI is also divided in two different

modules; the Input HCI and the Output HCI:

The Input HCI is in charge of collect the basic

data of the user context. This data represent the data

directly captured from the user via the basic sensors

installed on the mobile phone. The selection of those

sensors depends specifically on the characteristics of

the user. For example, speech recognition systems

to implements voice commands are suitable for blind

people but are not appropriate for people with speech

disorders. Examples of these kind of sensors that can

be found in existing mobile devices are: GPS, speech

recognition systems, microphone, Camera, compass,

etc.

The Output HCI is in charge to provide to user the

assistive information needed depending on the cur-

rent activity in which the user is involved. For exam-

ple, when a blind user is asking for some information

about timetables in an airport, the mobile phone can

use a text-to-speech system or a braille AT to provide

the required information in an individualized way. In

this case, deaf people must use different systems to

access that information.

To provide the proper information to the individ-

ual, a higher level intelligent processing is needed.

MOBILE CLOUD COMPUTING ARCHITECTURE FOR UBIQUITOUS EMPOWERING OF PEOPLE WITH

DISABILITIES

379

Figure 1: Context Awareness.

The basic data collected by the user is received by

context processors that infers high level data. This

high level data is dependent on the user profile and

the specific status and situation in which the user is

involved. In this way, the system should be able to

sense, interpret and react to changes in the environ-

ment a user is situated in. For that reason, a context

aware system has to deal with:

• Context Representation by adopting certain

knowledge representation model rich in seman-

tics,

• Context Sensing and Fusion by considering the

heterogeneous sources and their reliability on cap-

turing contextual data,

• Context Inference and Reasoning by adopting

inference engines capable for extracting further

knowledge (new context) from the sensed context,

and,

• Context Adaptation by adopting certain mech-

anism able to adjust specific system parameter

(e.g., presentation issues, learning rates) upon

user feedback.

In this case, the context processors collect in-

formation from heterogeneous context sources, such

as, the context-centred and user-centred sensors, user

profile and historical user actions. The combina-

tion of such sensor data (e.g., noise, level, lightness)

with spatial knowledge (e.g., location, proximity) and

temporal knowledge (e.g., history of events, current

time), leads to a detailed depiction of the environ-

ment, i.e., inferred context or current user situation.

The situation of a user indicates additional knowl-

edge derived from the environment of the user that

are conveniently and semantically tagged according

to the context representation model

In order to allow the systems to be proactive,

some rule-based inference schemes can be processed

as Event Condition Action rules. This allow to the

system to react to specific events and conditions via

starting adequate actions to support the user. For ex-

ample, fall detection event can suppose the execution

of an alarm action.

As it was mentioned previously, the mobile device

is not able to process information. Then, it is neces-

sary to distribute the execution. In Figure 2 it is shown

the processing distribution. HCI adaptation is per-

formed in the mobile phone. This is because the basic

data and the interaction with the user must be near

the user. Nevertheless, the context awareness system

should be allocated in the cloud. This is because the

context processors might need large amounts of pro-

cess capabilities (i.e., image processing). The use of

cloud computing paradigm give potentially unlimited

processing power and storage to the system. In addi-

tion, private clouds could be used in smart spaces to

provide specific smart applications depending on the

specific space (i. e. hospitals, airports, etc.)

The global evaluation of the architecture should be

approached according to a two side-perspective (ser-

vice/technical perspective and business perspective).

This analysis will be object of the following subsec-

tions.

4.1 A Technologic Perspective: Service

Model

An deeper analysis of the CORE infrastructure allows

ICSOFT 2011 - 6th International Conference on Software and Data Technologies

380

Figure 2: Context Awareness in Cloud.

to distinguish three different kind of services:

• Basic Service (third-part service): they are pro-

vided by service providers according to a perva-

sive approach. These services are not directly ac-

cessible by end-users but they are the ”bricks” to

build end-user services. Examples are the services

provided by smart spaces or any kind of intelligent

ambient.

• CORE Service: services provided by the platform

in order to coordinate basic services and/or to pro-

vide any kind of contextual matching and/or opti-

mization. In other words, they provide any kind

of required elaboration (e.g. contextualization) on

Basic Services that are so available as personal-

ized services for the end-user.

• End-user Service: as in the common mean.

As implicitly mentioned, the Basic Services pro-

vide a strong support for the mobility: the remote ex-

ecution of services enables complex services in smart

devices (Kovachev et al., 2010). The current tech-

nologies, able to support the various cloud solutions

(e.g. IaaS, Paas, SaaS), provide a strong and advanced

technologic environment that reflect a full service-

oriented view at distributed systems in which services

are available as virtual resources in the platform. A

further technologic point of interest is the ”appliance”

approach (Wikipedia, 2011) that can assure always

updated applications as well as no explicit service

configuration, no need of intrusive software, etc. The

key issue for the success ”in the real world” of this

class of infrastructure is the enterprise approach to

the service that allows realistic business models as ex-

plained in the following subsection.

4.2 A Business Perspective:

Stakeholders and Business Models

One of the key aspects of the proposed architecture

is its flexibility respect to the business model. First

of all, the platform was designed under realistic as-

sumptions for the great part of users: Always Con-

nected/Always Best Connected devices, (relatively)

high-capable network connection and last generation

mobile devices. The platform evidently takes ad-

vantage by general benefits provided by the Cloud

approach in terms of scalability, consumption, com-

petivity, etc. Furthermore, the benefits introduced

by cloud approach for mobile environments and the

availability of optimized smart and intelligent envi-

ronments assure a global, flexible and advanced tech-

nologic solution. The key business stakeholder is ev-

idently the service provider: multiple realistic busi-

ness scenarios and marketplaces for both governmen-

tal and private institutions are easy to be detected.

Governments and, more in general, public institutions

could find a competitive solution to assist disable peo-

ple in the everyday life. Private institutions and enter-

prises could find new publicity channels for disable

people as well as for their entourage (e.g. parents and

friends). This last aspect has to be evaluated consider-

ing common ethic rules (as well as other aspects such

us privacy need a clear policy). Most generally, the

services classification in function of the level of con-

fidence should be welcome.

5 CONCLUSIONS

The use of cloud-based solution can provide effective

MOBILE CLOUD COMPUTING ARCHITECTURE FOR UBIQUITOUS EMPOWERING OF PEOPLE WITH

DISABILITIES

381

solutions to the problem of mobile assistive technolo-

gies in which public spaces (e.g. airports, metro sta-

tions, museums, hotels etc.) and the services they pro-

vide are fully accessible by impaired people. At the

moment, there is a notable request of services able to

support (above all) visually impaired persons or peo-

ple with kinetic problems in order to enhance inclu-

sion, mobility and autonomy. However it is easy to

foresee a quick increasing of the availability of ser-

vices supporting any class of disability as soon as ef-

fective platforms will be available in a commercial

context.

These platforms, taking advance by a completely

distributed and scalable approach, will allow the de-

velopment of always more advanced smart applica-

tions that can provide disabled people with the same

opportunities that the rest of citizens to perform their

daily tasks in a context of economic sustainability.

REFERENCES

(2010). Information and Assistance bubbles to help elderly

people in public environments.

045459, V. F. P. I. P. Persona project. perceptive spaces

promoting independent aging.

Baliga, J., Ayre, R., Hinton, K., and Tucker, R. (2011).

Green cloud computing: Balancing energy in process-

ing, storage, and transport. Proceedingd of IEEE,

99:149–167.

Chang, V., Wills, G., and De Roure, D. (2010). A review

of cloud business models and sustainability. In Pro-

ceedings of the 2010 IEEE 3rd International Confer-

ence on Cloud Computing, CLOUD ’10, pages 43–50,

Washington, DC, USA. IEEE Computer Society.

Chui, C. K. and Chen, G. (1987). Kalman filtering with real-

time applications. Springer-Verlag New York, Inc.,

New York, NY, USA.

Consortium, A. (2003-2008a). Ask-it project: Ambient in-

telligence system of agents for knowledge-based and

integrated services. http://www.ask-it.org/.

Consortium, O. (2003-2008b). Oasis project: Open archi-

tecture for accessible services integration and stan-

dardisation.

Foster, I., Zhao, Y., Raicu, I., and Lu, S. (2008). Cloud

computing and grid computing 360-degree com-

pared. 2008 Grid Computing Environments Workshop,

abs/0901.0(5):1–10.

Kovachev, D., Renzel, D., Klamma, R., and Cao, Y. (2010).

Mobile community cloud computing: Emerges and

evolves. In Mobile Data Management’10, pages 393–

395.

Miller, M. (2008). Cloud Computing: Web-Based Applica-

tions That Change the Way You Work and Collaborate

Online. Que Publishing Company, 1 edition.

Preuveneers, D. (2010). Context-aware adaptation for Am-

bient Intelligence: Concepts, methods and applica-

tions. LAP Lambert Academic Publishing, Germany.

S, K., M, M., H, W. K., M, P., and R, H. (2009). On health

enabling and ambient-assistive technologies. what has

been achieved and where do we have to go? Methods

Inf Med, 48(1):29–37.

Story, M. F., Mueller, J. L., and Mace, R. L. (1998). THE

UNIVERSAL DESIGN FILE Designing for People of

All Ages and Abilities. The Center for Universal De-

sign.

Wikipedia (2011). Wikipedia. software appliance http://

en.wikipedia.org/wiki/software appliance.

ICSOFT 2011 - 6th International Conference on Software and Data Technologies

382