UTILIZING TERM PROXIMITY BASED FEATURES TO IMPROVE

TEXT DOCUMENT CLUSTERING

Shashank Paliwal and Vikram Pudi

Center for Data Engineering, International Institute of Information Technology Hyderabad, Hyderabad, India

Keywords:

Text document clustering, Document similarity, Term proximity, Term dependency, Feature weighting.

Abstract:

Measuring inter-document similarity is one of the most essential steps in text document clustering. Traditional

methods rely on representing text documents using the simple Bag-of-Words (BOW) model which assumes

that terms of a text document are independent of each other. Such single term analysis of the text completely

ignores the underlying (semantic) structure of a document. In the literature, sufficient efforts have been made

to enrich BOW representation using phrases and n-grams like bi-grams and tri-grams. These approaches take

into account dependency only between adjacent terms or a continuous sequence of terms. However, while

some of the dependencies exist between adjacent words, others are more distant. In this paper, we make an

effort to enrich traditional document vector by adding the notion of term-pair features. A Term-Pair feature

is a pair of two terms of the same document such that they may be adjacent to each other or distant. We

investigate the process of term-pair selection and propose a methodology to select potential term-pairs from

the given document. Utilizing term proximity between distant terms also allows some flexibility for two

documents to be similar if they are about similar topics but with varied writing styles. Experimental results

on standard web document data set show that the clustering performance is substantially improved by adding

term-pair features.

1 INTRODUCTION

With a large explosion in the amount of data found

on the web, it has become necessary to devise bet-

ter methods to classify data. A large part of this web

data (like blogs, webpages, tweets etc.) is in the form

of text. Text document clustering techniques play an

important role in the performance of information re-

trieval, search engines and text mining systems by

classifying text documents. The traditional clustering

techniques fail to provide satisfactory results for text

documents, primarily due to the fact that text data is

very high dimensional and contains a large number of

unique terms in a single document.

Text documents are often represented as a vector

where each term is associated with a weight. The

Vector Space Model (Salton et al., 1975) is a pop-

ular method that abstracts each document as a vec-

tor with weighted terms acting as features. Most

of the term extraction algorithms follow “Bag of

Words”(BOW) representation to identify document

terms. For the sake of simplicity the BOW model as-

sumes that words are independent of each other but

this assumption does not hold true for textual data.

Single term analysis is not sufficient to successfully

capture the underlying (semantic) structure of a text

document and ignores the semantic association be-

tween them. Proximity between terms is a very useful

information which if utilized, helps to go beyond the

Bag of Words representation. Vector based informa-

tion retrieval systems are still very common and some

of the most efficient in use.

Most of the work which has been done in the di-

rection of capturing term dependencies in a document

is through finding matching phrases between the doc-

uments. According to (Zamir and Etzioni, 1999),

a “phrase” is an ordered sequence of one or more

words. Phrases are less sensitive to noise when it

comes to calculating document similarity as the prob-

ability of finding matching phrases in non related doc-

uments is low (Hammouda and Kamel, 2004). But

as phrase is an ‘ordered sequence’, it is not flexible

enough to take different writing styles into account.

Two documents may be on same topic but due to var-

ied writing styles there may be very few matching

phrases or none in worst case scenario. In such cases,

phrase based approaches might not work or at best be

as good as a single term analysis algorithm.

537

Paliwal S. and Pudi V..

UTILIZING TERM PROXIMITY BASED FEATURES TO IMPROVE TEXT DOCUMENT CLUSTERING.

DOI: 10.5220/0003645805290536

In Proceedings of the International Conference on Knowledge Discovery and Information Retrieval (SSTM-2011), pages 529-536

ISBN: 978-989-8425-79-9

Copyright

c

2011 SCITEPRESS (Science and Technology Publications, Lda.)

Measuring term dependency through phrases or

n-grams includes dependency only between adjacent

terms. However, genuine term dependencies do not

exist only between adjacent words. They may also

occur between more distant words such as between

“powerful” and “computers” in powerful multipro-

cessor computers. This work is targeted in the di-

rection of capturing term dependencies between ad-

jacent as well as distant terms. Proximity could be

viewed as indirect measure of dependence between

terms. (Beeferman et al., 1997) shows that term de-

pendencies between terms are strongly influenced by

proximity between them. The intuition is that if two

words have some proximity between each other in

one document and similar proximity in the other doc-

ument, then a combined feature of these two words

when added to the original document vector should

contribute to similarity between these two documents.

We also suggest a feature generation process to limit

the number of pairs of words to be considered for in-

clusion as a feature. Cosine similarity is then used

to measure similarity between the two document vec-

tors and finally Group Hierarchical Agglomerative

Clustering (GHAC) algorithm is used to cluster docu-

ments.

To the best of our knowledge, no work has been

done so far to utilize term proximity between distant

terms for improved clustering of text documents. The

contribution of this paper is two folds. Firstly we in-

troduce a new kind of feature called Term-Pair fea-

ture. A Term-Pair feature consists of a pair of terms

which might be adjacent to each other as well as dis-

tant and is weighted on the basis of a term proximity

measure between the two terms. With the help of dif-

ferent weights, we show how clustering is improved

in a simple yet effective manner. Secondly, we also

discuss how from the large number of such possible

features, only the most important ones are selected

and remaining ones are discarded.

The rest of the paper is organized as follows. Sec-

tion 2 briefly describes the related work. Section 3

explains the notion of term proximity in a text docu-

ment. Section 4 describes our approach to the calcu-

lation of similarity between two documents. Section

5 and 6 describe experimental results and the conclu-

sion respectively.

2 RELATED WORK

Many Vector Space Document based clustering mod-

els make use of single term analysis only. To fur-

ther improve clustering of documents and rather than

treating a document as Bag Of Words, including

term dependency while calculating document similar-

ity has gained attention. Most of the work dealing

with term dependency or proximity in text document

clustering techniques includes phrases (Hammouda

and Kamel, 2004), (Chim and Deng, 2007), (Zamir

and Etzioni, 1999) or n-gram models. (Hammouda

and Kamel, 2004) does so by introducing a new doc-

ument representation model called the Document In-

dex Graph while (Chim and Deng, 2007), (Zamir and

Etzioni, 1999) do so with the use of Suffix Tree. In

(Bekkerman and Allan, 2003), the authors talk about

the usage of bi-grams to improve text classification.

(Ahlgren and Colliander, 2009), (Andrews and Fox,

2007) analyze the existing approaches for calculating

inter document similarity. In all of the above men-

tioned clustering techniques, semantic association be-

tween distant terms has been ignored or is limited to

words which are adjacent or a sequence of adjacent

words.

Most of the existing information retrieval mod-

els are primarily based on various term statistics. In

traditional models - from classic probabilistic models

(Croft and Harper, 1997), (Fuhr, 1992) through vector

space models (Salton et al., 1975) to statistical lan-

guage models (Lafferty and Zhai, 2001), (Ponte and

Croft, 1998) - these term statistics have been captured

directly in the ranking formula.The idea of including

term dependencies between distant words (distance

between term occurrences) in measurement of doc-

ument relevance has been explored in some of the

works by incorporating these dependency measures

in various models like in vector space models (Fagan,

1987) as well as probabilistic models (Song et al.,

2008). In literature efforts have been made to extend

the state-of-the-art probabilistic model BM25 to in-

clude term proximity in calculation of relevance of

document to a query (Song et al., 2008), (Rasolofo

and Savoy, 2003). (Hawking et al., 1996) makes use

of distance based relevance formulas to improvequal-

ity of retrieval. (Zhao and Yun, 2009) proposes a new

proximity based language model and studies the in-

tegration of term proximity information into the un-

igram language modeling. We aim to make use of

term proximity between distant words, in calculation

of similarity between text documents represented us-

ing vector space model.

3 BASIC IDEA

The basis of the work presented in this paper is mea-

sure of proximity among words which are common in

two documents. This in turn conveys that two doc-

uments will be considered similar if they have many

KDIR 2011 - International Conference on Knowledge Discovery and Information Retrieval

538

words in common and these words appear in ‘similar’

proximity of each other in both the documents.

3.1 Proposed Model

A Term-Pair feature is a feature whose weight is

a measure of proximity between the pair of terms.

These terms may be distant i.e. one appears after cer-

tain number of terms from other or be adjacent to each

other. Since it is unclear what is the best way to mea-

sure proximity, we use three different proximity mea-

sures while using the fourth proximity measure for

the purpose of normalization. All these measures are

independent of other relevance factors like Term Fre-

quency(TF) and Inverse Document Frequency(idf).

We chose term as a segmentation unit i.e. we mea-

sure the distance between two term occurrences based

on the number of terms between two occurrences af-

ter stop words removal. dist(t

i

,t

j

) refers to number of

terms which occur between terms t

i

and t

j

in a docu-

ment after stop words removal.

Let D be a document set with N number of docu-

ments:

d

n

= { t

1

,t

2

,t

3

.....t

m

}

Where d

n

is the n

th

document in corpus and t

i

is

i

th

term in document d

n

.

In this paper we use three kinds of proximity mea-

sures to compute term-pair weights for terms t

i

and t

j

:

1. dist

min

(t

i

,t

j

)

where dist

min

(t

i

,t

j

) is the minimum distance ex-

pressed in terms of number of words between terms t

i

and t

j

in a document d. Distance of 1 corresponds to

adjacent terms.

2. dist

avg min

(t

i

,t

j

)

where dist

avg min

(t

i

,t

j

) is the average of the short-

est distance between each occurrence of the least fre-

quent term and the nearest occurrence of the other

term.Suppose t

i

occurs less frequently than t

j

, then

d

avg min

(t

i

,t

j

) is the average of minimum distance be-

tween every occurrence of t

i

and nearest occurrence

of t

j

.

3. dist

avg

(t

i

,t

j

)

where dist

avg

(t

i

,t

j

) is the difference between aver-

age positions of all the occurrences of terms t

i

and t

j

(Cummins and O’Riordan, 2009).

Example 1. Let in a document, t

1

occurs at

{2,6,10} positions and t

2

occurs at {3,4} positions.

Then,

• dist

min

(t

1

,t

2

)=(3-2)=1.0

• dist

avg min

(t

1

,t

2

)=((3-2)+(6-4))/2)=1.5

• dist

avg

(t

1

,t

2

)=((2+6+10)/3)-((3+4)/2)=2.5

4 SIMILARITY COMPUTATION

BETWEEN DOCUMENTS

Computing similarity between two documents con-

sists of three steps which are as follows :

1. For every document we form a set of highly

ranked terms which are discriminative and help

distinguish the concerned document from other

documents.

2. We form an enriched document vector by adding

term proximity based features to traditional single

term document vectors.

3. We compute similarity between two documents

using cosine similarity measure.

4.1 Term-pair Feature Selection

In document retrieval framework, the most obvious

choices of term pairs for measuring proximity are

terms present in the query. However while calculating

similarity between two documents, out of the large

combinations of term pairs possible, only the most

important ones should be selected. Also, not only

strong dependencies are important but weak depen-

dencies can not be neglected as they too might con-

tribute to similarity or dissimilarity between the doc-

uments as per the case.

Due to including term dependencies between dis-

tant terms, the possible set of term pairs are

m

C

2

if

we assume a document d

i

to have m unique words.

To select only those combinations which are useful in

our calculation of similarity we first sort terms of a

document on the basis of tf-idf weights and then con-

sider only highly ranked words. If this set of highly

ranked words is denoted by HRTerms then for a pair

of words to be considered for inclusion as a feature

in document vector, at least one of the words must

belong to this set HRTerms.

If the set of words which are common in both the

documents is represented by CTerms then for a pair

of terms t

i

and t

j

to be considered as a feature if :

1. Both t

i

and t

j

belong to HRTerms, then (t

i

,t

j

) is a

feature (Algorithm 1).

or

1. t

i

and t

j

belong to CTerms and either t

i

or t

j

be-

longs to HRTerms, then (t

i

,t

j

) is a feature (Algo-

rithm 2).

4.1.1 Algorithm for Building First Term-pair

Feature Set FTPairs

Algorithm 1 first forms a set of highly ranked words

for a document d

i

consisting of all those terms

UTILIZING TERM PROXIMITY BASED FEATURES TO IMPROVE TEXT DOCUMENT CLUSTERING

539

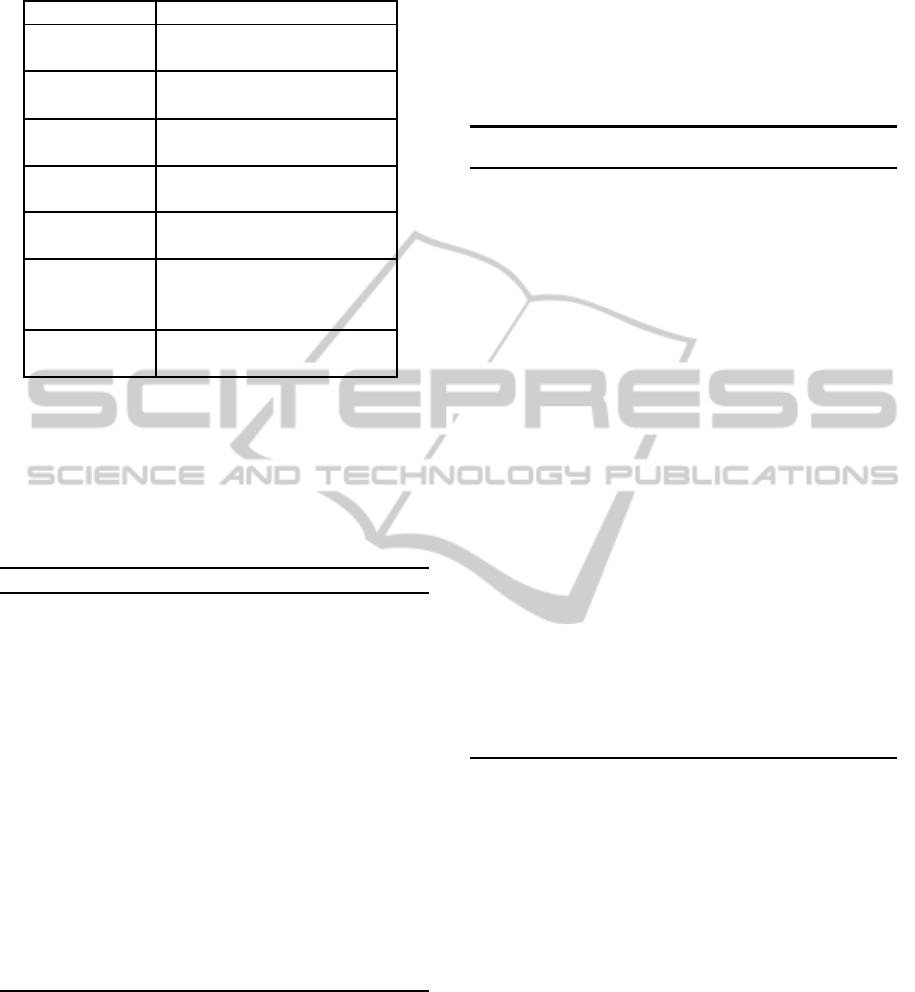

Table 1: Notations.

Term Description

D Set of Documents

in a data set

d

i

i

th

document

of set D

Dterms

i

Set of terms

belonging to d

i

HRterms

i

Set of Highly

Ranked terms for d

i

FT pairs

i

Set of First

Term-Pair features for d

i

SDT pairs

(i, j)

Set of Second dynamic

Term-Pair features for

d

i

when d

j

is encountered

CTerms

(i, j)

Set of terms which are

common between d

i

and d

j

which have a tf-idf weight higher than a certain user-

specified minimum threshold. Now, all the possible

combination of pairs of terms from HRTerms

i

are

then added to set FTPairs. If HRTerms

i

consists of

k terms then FTPairs will consist of

k

C

2

Term-Pair

Features.

Algorithm 1: Extracting First Term-Pair Features.

Input:Dterm

i

, Minimum Threshold

Output:HRTerms

i

and FTPairs

i

FTPairs

i

= {}

HRTerms

i

= {}

foreach term t ∈ Dterm

i

if (t f − id f weight(t) ≥ Minimum Threshold)

HRTerms

i

= HRTerms

i

∪ t

end if

end for

\\ Let HRTerms

i

obtained above be {t

1

,t

2

,t

3

....t

k

}

for (m = 1;m < k;m+ +)

for (n = m;n ≤ k;n+ +)

FTPairs

i

= FTPairs

i

∪ {<t

m

,t

n

>}

end for

end for

4.1.2 Algorithm for Building Second Dynamic

Term-pair Feature Set SDTpairs

Algorithm 2 extracts Term-Pair features dynamically

before computing the similarity between two docu-

ments d

i

and d

j

. These features are primarily based

on set of common words CTerms

(i, j)

between the two

documents. Although all the pair of terms from this

set are candidates for Term-Pair features, only those

pairs are finally added as features of whose at least

one term belongs to set of highly ranked terms for

respective documents. It is important to note that

SDTPairs

(i, j)

is different from SDTPairs

( j,i)

since set

HRterms is different for both the documents.

Algorithm 2: Extracting Second Dynamic Term-Pair Fea-

tures.

Input:Dterm

i

, Dterm

j

, HRTerms

i

, HRTerms

j

,

FTPairs

i

and FTPairs

j

Output:SDTPairs

(i, j)

and SDTPairs

(i, j)

SDTPairs

(i, j)

= {}

SDTPairs

( j,i)

= {}

CTerms

(i, j)

= {}

CTerms

(i, j)

= Dterms

i

∩ Dterms

j

\\ Let CTerms

(i, j)

obtained above be {t

1

,t

2

,....t

l

}

for (m = 1;m < l;m+ +)

for (n = m;n ≤ l;n+ +)

if (t

m

∈ HRTerms

i

)||(t

n

∈ HRTerms

i

)

if < t

m

,t

n

> /∈ FTPairs

i

SDTPairs

(i, j)

= SDTPairs

(i, j)

∪ {<t

m

,t

n

>}

end if

end if

if (t

m

∈ HRTerms

j

)||(t

n

∈ HRTerms

j

)

if < t

m

,t

n

> /∈ FTPairs

j

SDTPairs

( j,i)

= SDTPairs

( j,i)

∪ {<t

m

,t

n

>}

end if

end if

end for

end for

Example 2. Document 1= “Document clustering

techniques mostly rely on single term analysis of

text.”

Document 2 = “Traditional data mining tech-

niques do not work well on text document clustering.”

Considering that all of the words shown in bold

belong to the set of highly ranked words for both the

documents, term pairs that will be a part of document

vectors as term-pair feature for Document 1 as per

possible cases mentioned above are :

1. According to Algorithm 1: {T

1

,T

4

} ,{T

1

,T

5

}

,{T

1

,T

6

} ,{T

4

,T

5

} , {T

4

,T

6

} , {T

5

,T

6

} .

2. According to Algorithm 2: {T

1

,T

2

} , {T

1

,T

3

} ,

{T

1

,T

7

}.

where T

i

is the word with position i in Docu-

ment 1 (see Table 2) after stop word removal.And

{document,clustering,techniques,text} is the set of

terms which are common in both the documents.

KDIR 2011 - International Conference on Knowledge Discovery and Information Retrieval

540

Table 2: Words present in Document 1 and 2 and their corre-

sponding indices in respective documents after stop-words

removal.

Word Positions in Positions in

Document 1 Document 2

analysis 6 -

clustering 2 7

data - 2

document 1 6

mining - 3

single 4 -

techniques 3 4

term 5 -

text 7 5

traditional - 1

4.2 Enriching Original Document

Vector with Term-pair Feature

So the term pairs obtained from the above step along

with their respective term proximity weights (tpw) are

added as features to original document vector with

unigrams or single terms and their respective tf-idf

weights. These weights are shown in Table 3.

Table 3: Feature Weighting Schema. t f

t,d

is frequency of

term t in document d, N is number of documents in corpus

and x

t

is number of documents in which term t occurs.

Feature Weight

Single Term tf-idf :

log(1+ t f

t,d

) ∗ log(

N

x

t

)

Term Pair tpw

1

=

dist

min

(t

i

,t

j

)

SL

(based on Term Proximity) tpw

2

=

dist

avg min

(t

i

,t

j

)

SL

tpw

3

=

dist

avg

(t

i

,t

j

)

SL

We use “span length” to normalize the term-pair

weights. It is the number of terms present in the

document segment which covers all occurrences of

common set of words between two documents. The

word “span” has been used here in a similar sense

as used by (Tao and Zhai, 2007), (Hawking et al.,

1996). (Tao and Zhai, 2007) define two kind of prox-

imity mechanisms namely span-based approaches and

distance aggregation approaches. Span-based ap-

proaches measure proximity based on the length seg-

ment covering all the query terms and distance aggre-

gation approaches measure proximity by aggregating

pair-wise distances between query terms. Span-based

approaches do not consider the internal structure of

terms and calculate proximity only on the basis of text

spans. According to their definitions, dist

min

(t

i

,t

j

),

dist

avg min

(t

i

,t

j

), dist

avg

(t

i

,t

j

) belong to class of dis-

tance aggregation while span length is itself a prox-

imity measure belonging to class of span-based ap-

proaches. However, we treat span length simply as

a normalization factor and not a proximity measure.

The reason behind this treatment of span-length as a

simple normalization factor and not a proximity mea-

sure is the basic difference between query-document

similarity and document-document similarity. When

all the query terms appear in a small span of text, it

is reasonable to assume that such a document is more

relevant to query. However, query terms are generally

more closely related as compared to words common

between two documents. It would not be reasonable,

in our opinion to assume that when set of common

words between two documents appear in a small text

span then this contributes to similarity between two

documents. There might be very few words common

between two documents and thus these words can oc-

cur in a very small text span in one or both of the doc-

uments but this does not make those two documents

similar. Utilizing span-length as a normalization fac-

tor caters to above mentioned problem and helps to

normalize such proximities.

For Example 2, for document 1 span-length

1

is (7-

1)=6 since document is the first word and text is the

last word in Document 1 which are common between

the two documents. Similarly, for Document 2 span-

length

2

is (7-4)=3.

4.3 Similarity Computation

We use cosine similarity to measure similarity be-

tween enriched document vectors. Cosine similarity

between two document vectors

~

d

1

and

~

d

2

is calculated

as

Sim(

~

d

1

,

~

d

2

) =

~

d

1

.

~

d

2

|

~

d

1

||

~

d

2

|

where (.) indicates the vector dot product and

~

|d|

indicates the length of the vector

~

d .

5 EXPERIMENTAL RESULTS

AND DISCUSSION

We conducted our experiment on web dataset

3

con-

sisting of 314 web documents already classified into

10 classes.

1

Position of word “document” is 1 and position of “text”

is 7 in Document 1.

2

Position of word “techniques” is 4 and position of

“clustering” is 4 in Document 2.

3

http://pami.uwaterloo.ca/

˜

hammouda/webdata

UTILIZING TERM PROXIMITY BASED FEATURES TO IMPROVE TEXT DOCUMENT CLUSTERING

541

No kind of bound has been kept on maximum

number of hops between the pair of terms which com-

bine to form a term-pair feature as the terms which are

close to each other in one document but far away from

each other in other documents are the ones which con-

tribute to dissimilarity between the two documents

and it is important to keep them. Experimental results

also support this and unlike in relevance model, where

a limit is generally kept on the distance between two

terms in terms of number of words which occur be-

tween them.

To form the set HRTerms of highly ranked words,

we sort terms on the basis of their tf-idf weights.

These are the words which are discriminative and help

to distinguish a document from other unrelated docu-

ments. If the average of tf-idf weight of all the words

in a document is denoted by avg, then all the words

whose tf-idf weight is greater than (β × avg) belong

to set HRTerms. Here β is a user-defined value such

that 0 ≤ β ≤ 1. For our experiments, we use β as 0.7.

We use F-measure score to evaluate the quality of

clustering. F-measure combines precision and recall

by calculating their harmonic mean. Let there be a

class i and cluster j, then precision and recall of clus-

ter j with respect to class i are as follows:

Precision(i, j) =

n

ij

n

j

Recall(i, j) =

n

ij

n

i

where

• n

ij

is the number of documents belonging to class

i in cluster j.

• n

i

is number of documents belonging to class i.

• n

j

is the number of documents in cluster j.

Then F-score of class i is the maximum F-score it

has in any of the clusters :

F-score(i) =

2∗ Precision∗ Recall

Precision+ Recall

The overall F-score for clustering is the weighted av-

erage of F-score for each class i :

F

overall

=

∑

i

(n

i

∗ F(i))

∑

i

n

i

where n

i

is the number of documents belonging to

class i.

For clustering we use GHAC with complete link-

age with the help of a java based tool

4

. We chose tra-

ditional tf-idf weighting based single term approach

as our baseline approach. We obtained F-score of 0.77

with traditional single term document vector with tf-

idf weighting. We perform two different experiments

using different document vectors.

4

Link to download tool : http://www.cs.umb.edu/ smi-

marog/agnes/agnes.html

5.1 Experiment 1

We add term-pair features to original document vector

consisting of individual terms. It is important to note

we do not apply any kind of dimensionality reduction

on original document vector which consists of only

single term features since our aim is to investigate

whether adding term pair features to original docu-

ment vectors could improve clustering or not. The F-

scores using different term-pair weights are tabulated

in Table 4.

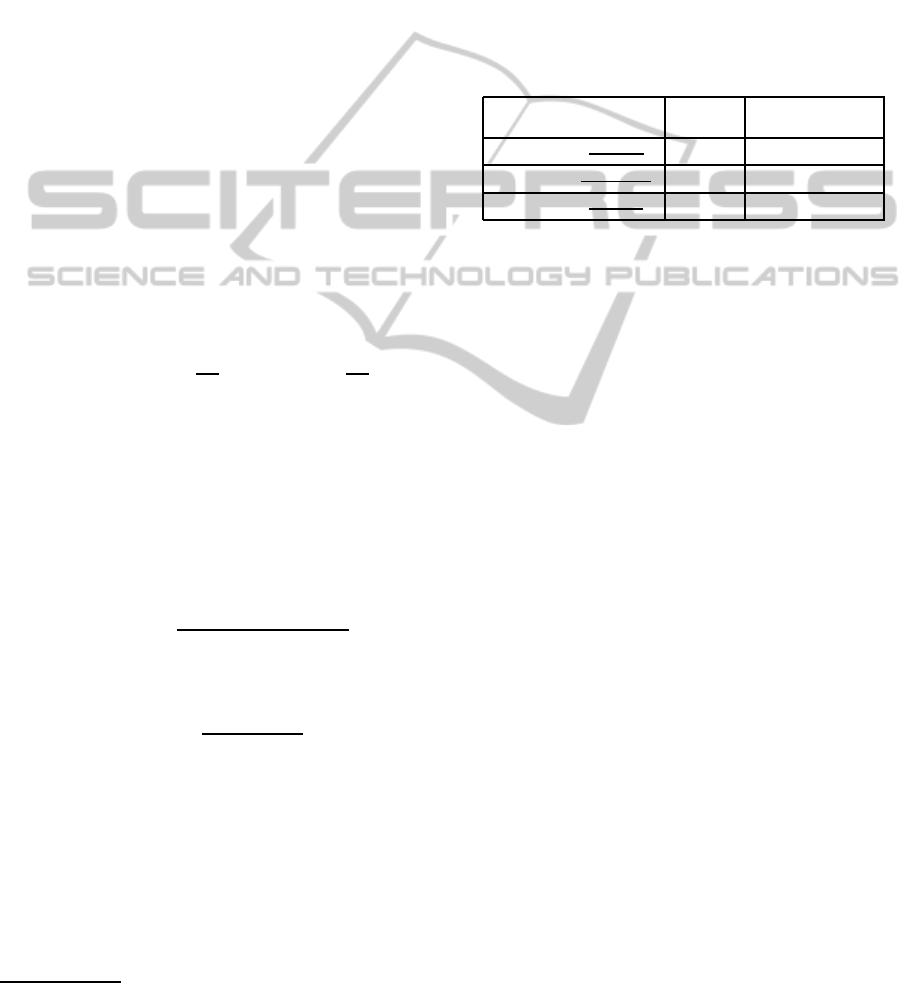

Table 4: Obtained F-score and percentage improvement

over baseline approach with different term pair weights.

Term Pair F-score % improvement

Weight over baseline

tpw

1

(t

i

,t

j

)=

d

min

(t

i

,t

j

)

SL

0.840 7.91

tpw

2

(t

i

,t

j

)=

d

min

a

vg

(t

i

,t

j

)

SL

0.81 4.08

tpw

3

(t

i

,t

j

)=

d

avg

(t

i

,t

j

)

SL

0.799 2.67

The results of this experiment agree with experi-

ments in relevance model where too proximity mea-

sure based on minimum pair distance generally also

performs well in relevance model as reported by

(Tao and Zhai, 2007) and (Cummins and O’Riordan,

2009).

5.2 Experiment 2

To further investigate the significance of Term-Pair

features, we combined term-pair features vector based

cosine similarity with traditional single term feature

vector based cosine similarity using weighted aver-

age of two similarities. If Sim

tp f

(d

1

,d

2

) represents

cosine similarity between document vectors consist-

ing of only term-pair features and Sim

t f−id f

(d

1

,d

2

)

represents cosine similarity between document con-

sisting of only traditional single term, tf-idf weighted

features, then combined similarity is given by equa-

tion (1) :

Sim(d

1

,d

2

)) =

α∗ Sim

tp f

(d

1

,d

2

) + (1− α) ∗ Sim

t f−id f

(d

1

,d

2

)

where α is the similarity bend factor and its value lies

in the interval [0,1].(Hammouda and Kamel, 2004)

We experimented with three values of α - 0.4,0.5

and 0.6 for each of the three Term-Pair weights. Fig-

ure 1 shows the results with respective curves. The

obtained F-Scores however, are mostly less than the

baseline score. For tpw

1

(t

i

,t

j

), F-scores improve as

value of α is increased from 0.4 to 0.6. Better results

were obtained with Experiment 1 which suggests that

Term-Pair features tend to combine well with single

term features as compared to combining similarity of

KDIR 2011 - International Conference on Knowledge Discovery and Information Retrieval

542

Figure 1: Variation of F-Scores for different Term-Pair weights with α. (a) For tpw

1

(t

i

,t

j

), (b) For tpw

2

(t

i

,t

j

) and (c) For

tpw

3

(t

i

,t

j

).

both the features.Thus, in other words a document is

better represented by a vector consisting of both fea-

tures and such a document vector helps in distinguish-

ing one document from other documents.

We also formed a document vector which con-

sisted only of Term-Pair features without any single

term features and measured similarity between these

document vectors. We can say for this experiment,

α is taken as one. With tpw

1

(t

i

,t

j

) we obtained a F-

Score of 0.73 . This F-score might be less than that

obtained with traditional tf-idf weighted vectors, but

still highlights the importance of Term-Pair features

and utilizing term dependency between distant terms

for clustering of documents could prove to be a new

dimension for text document representation and clus-

tering.

6 CONCLUSIONS

The presented approach might not provide best results

but are definitely promising. It is to be kept in mind

that the purpose of this paper is to determine whether

document clustering can be improved by adding sim-

ple term proximity based Term-Pair features. There

are many more possibilities such as to investigate ef-

fect models other than vector space model, to take dif-

ferent similarity measure, to apply different weight-

ing schemes for Term-Pair features. In the future, we

are working on developing a model which is suitable

and make full use of term proximity between distant

terms. Based on the results obtained, it is our intuition

that if such a simple approach can improve the clus-

tering then a more complex and complete approach

can prove to be very useful and produce much better

clustering.

REFERENCES

Ahlgren, P. and Colliander, C. (2009). Document-document

similarity approaches and science mapping: Experi-

mental comparison of five approaches. Journal of In-

formetrics, 3(1):49–63.

Andrews, N. O. and Fox, E. A. (2007). Recent Devel-

opments in Document Clustering. Technical report,

Computer Science, Virginia Tech.

Beeferman, D., Berger, A., and Lafferty, J. (1997). A model

of lexical attraction and repulsion. In Proceedings of

the eighth conference on European chapter of the As-

sociation for Computational Linguistics, EACL ’97,

pages 373–380, Stroudsburg, PA, USA. Association

for Computational Linguistics.

Bekkerman, R. and Allan, J. (2003). Using bigrams in text

categorization.

Chim, H. and Deng, X. (2007). A new suffix tree similarity

measure for document clustering. In Proceedings of

the 16th international conference on World Wide Web,

WWW ’07, pages 121–130, New York, NY, USA.

ACM.

Croft, W. B. and Harper, D. J. (1997). Using probabilistic

models of document retrieval without relevance infor-

mation, pages 339–344. Morgan Kaufmann Publish-

ers Inc., San Francisco, CA, USA.

Cummins, R. and O’Riordan, C. (2009). Learning in a pair-

wise term-term proximity framework for information

retrieval. In Proceedings of the 32nd international

ACM SIGIR conference on Research and development

in information retrieval, SIGIR ’09, pages 251–258,

New York, NY, USA. ACM.

Fagan, J. (1987). Automatic phrase indexing for document

retrieval. In Proceedings of the 10th annual interna-

tional ACM SIGIR conference on Research and de-

velopment in information retrieval, SIGIR ’87, pages

91–101, New York, NY, USA. ACM.

Fuhr, N. (1992). Probabilistic models in information re-

trieval. The Computer Journal, 35:243–255.

Hammouda, K. M. and Kamel, M. S. (2004). Efficient

phrase-based document indexing for web document

clustering. IEEE Trans. on Knowl. and Data Eng.,

16:1279–1296.

Hawking, D., Hawking, D., Thistlewaite, P., and Thistle-

waite, P. (1996). Relevance weighting using distance

UTILIZING TERM PROXIMITY BASED FEATURES TO IMPROVE TEXT DOCUMENT CLUSTERING

543

between term occurrences. Technical report, The Aus-

tralian National University.

Lafferty, J. and Zhai, C. (2001). Document language mod-

els, query models, and risk minimization for informa-

tion retrieval. In Proceedings of the 24th annual inter-

national ACM SIGIR conference on Research and de-

velopment in information retrieval, SIGIR ’01, pages

111–119, New York, NY, USA. ACM.

Ponte, J. M. and Croft, W. B. (1998). A language model-

ing approach to information retrieval. In Proceedings

of the 21st annual international ACM SIGIR confer-

ence on Research and development in information re-

trieval, SIGIR ’98, pages 275–281, New York, NY,

USA. ACM.

Rasolofo, Y. and Savoy, J. (2003). Term proximity scor-

ing for keyword-based retrieval systems. In Pro-

ceedings of the 25th European conference on IR re-

search, ECIR’03, pages 207–218, Berlin, Heidelberg.

Springer-Verlag.

Salton, G., Wong, A., and Yang, C. S. (1975). A vector

space model for automatic indexing. Commun. ACM,

18:613–620.

Song, R., Taylor, M. J., Wen, J.-R., Hon, H.-W., and Yu,

Y. (2008). Viewing term proximity from a different

perspective. In Proceedings of the IR research, 30th

European conference on Advances in information re-

trieval, ECIR’08, pages 346–357, Berlin, Heidelberg.

Springer-Verlag.

Tao, T. and Zhai, C. (2007). An exploration of proxim-

ity measures in information retrieval. In Proceedings

of the 30th annual international ACM SIGIR confer-

ence on Research and development in information re-

trieval, SIGIR ’07, pages 295–302, New York, NY,

USA. ACM.

Zamir, O. and Etzioni, O. (1999). Grouper: A dynamic clus-

tering interface to web search results. In Proceedings

of the eighth international conference on World Wide

Web, pages 1361–1374.

Zhao, J. and Yun, Y. (2009). A proximity language model

for information retrieval. In Proceedings of the 32nd

international ACM SIGIR conference on Research

and development in information retrieval, SIGIR ’09,

pages 291–298, New York, NY, USA. ACM.

KDIR 2011 - International Conference on Knowledge Discovery and Information Retrieval

544