A FORWARD-BACKWARD ALGORITHM FOR STOCHASTIC

CONTROL PROBLEMS

Using the Stochastic Maximum Principle as an Alternative to Dynamic

Programming

Stephan E. Ludwig

1

, Justin A. Sirignano

2

, Ruojun Huang

3

and George Papanicolaou

4

1

Department of Mathematics, Heidelberg University, INF 288, Heidelberg, Germany

2

Department of Management Science and Engineering, Stanford University, Stanford, U.S.A.

3

Department of Statistics, Stanford University, Stanford, U.S.A.

4

Department of Mathematics, Stanford University, 380 Sloan Hall, Stanford, U.S.A.

Keywords:

Optimal stochastic control, Stochastic maximum principle, Forward-Backward stochastic differential equa-

tions.

Abstract:

An algorithm for solving continuous-time stochastic optimal control problems is presented. The numeri-

cal scheme is based on the stochastic maximum principle (SMP) as an alternative to the widely studied dy-

namic programming principle (DDP). By using the SMP, (Peng, 1990) obtained a system of coupled forward-

backward stochastic differential equations (FBSDE) with an external optimality condition. We extend the

numerical scheme of (Delarue and Menozzi, 2006) by a Newton-Raphson method to solve the FBSDE system

and the optimality condition simultaneously. As far as the authors are aware, this is the first fully explicit

numerical scheme for the solution of optimal control problems through the solution of the corresponding

extended FBSDE system. We discuss possible numerical advantages to the DDP approach and consider an

optimal investment-consumption problem as an example.

1 INTRODUCTION

We consider continuous-time stochastic control prob-

lems where the state variable is a controlled stochastic

process of Markovian type and the objective function

depends on the state and on the control. These type

of problems typically appear in mathematical finance

and economics. The most common method to solve

these problems is the dynamic programming princi-

ple (DPP), which leads to the well-known Hamilton-

Jacobi-Bellman (HJB) equation. Various numerical

schemes take advantage of the DDP’s discrete version

by performing a backward algorithms or directly solv-

ing the HJB partial differential equation using a finite

difference scheme.

In this paper, we consider an alternative approach

to the problem based on the stochastic maximum

principle (SMP), which leads to a system of cou-

pled forward-backward stochastic differential equa-

tions (FBSDE) plus an external optimality condition.

This was first studied by (Peng, 1990). It is well

known that a quasilinear PDE has a FBSDE repre-

sentation, which is an extension of the well-known

Feynman-Kac formula. However, the FBSDE repre-

sentation cannot be directly applied to the HJB equa-

tion unless the optimal control is known as an explicit

function. Instead, by using the SMP, we obtain a cou-

pled FBSDE system for the adjoint equations. The

coupling arises through the additional optimality con-

dition only.

In addition to reviewing briefly the connection be-

tween stochastic control problems and FBSDE sys-

tems, the main objective of this paper is to present a

complete numerical algorithm by obtaining approx-

imate solutions to a certain class of optimal control

problems. We need to use advanced numerical meth-

ods for the FBSDE because of the coupling which

arises from dependence of the state process on the

controls and is therefore connected to the controlled

objective function. Therefore, we take advantage of

an existing numerical scheme for coupled FBSDEs,

initially proposed by (Delarue and Menozzi, 2006)

and extend it to satisfy the optimality condition.

The paper’s outline is as follows. After the prob-

83

E. Ludwig S., A. Sirignano J., Huang R. and Papanicolaou G..

A FORWARD-BACKWARD ALGORITHM FOR STOCHASTIC CONTROL PROBLEMS - Using the Stochastic Maximum Principle as an Alternative to

Dynamic Programming.

DOI: 10.5220/0003885900830089

In Proceedings of the 1st International Conference on Operations Research and Enterprise Systems (ICORES-2012), pages 83-89

ISBN: 978-989-8425-97-3

Copyright

c

2012 SCITEPRESS (Science and Technology Publications, Lda.)

lem definition in section 2, we briefly derivethe corre-

sponding FBSDE representation for the adjoint equa-

tions and state a verification theorem in section 3. In

section 4 we discretize the time-continuous problem

and provide the numerical scheme using a Markov

chain approximation and a Newton-Raphson method

for the optimization. A brief comparison of the com-

putational costs between our method and the stan-

dard dynamic programming approach together with

first results from an application on an investment-

consumption problem are presented in section 5. The

paper concludes with some outlook to further devel-

opment.

2 PROBLEM STATEMENT

Throughout the paper we assume a given probability

space (Ω, F, P), endowed with a d-dimensional Brow-

nian motion (W

t

)

t≥0

, whose natural filtration is de-

noted by {F

t

}

t≥0

.

Consider the following problem. The dynamics of

a controlled diffusion process X

t

, which represents the

state of our system, are given by:

dX

t

= b(X

t

, π

t

)dt+σ(X

t

, π

t

)dW

t

, X

0

= x∈R

n

, (1)

and the goal is to maximize a given objective function

with finite time horizon [0, T] over admissible con-

trols

˜

π = {π

t

}

t∈[0,T]

∈ A:

J(t, x,

˜

π) := E

˜

π

t

Z

T

t

f(s, X

s

, π

s

)ds+ g(X

T

)

X

t

= x

.

(2)

Here, A is the set of all progressively F

t

-measurable

controls which takes its values π

t

in a compact set

A ⊂R

r

. If it exists, we will denote the optimal control

by:

˜

π

∗

:= argmax

˜

π∈A

J(t, x,

˜

π), ∀(t, x) ∈ [0, T] ×R

n

, (3)

and the value function by:

v(t, x) := sup

˜

π

∈A

J(t, x,

˜

π), ∀(t, x) ∈ [0, T] ×R

n

.

(4)

2.1 General Conditions

For each fixed π ∈ A, we ensure the existence of a

unique solution to the controlled forward SDE (1) by

the following assumptions:

• b(·, π), σ(·, π) are uniformly Lipschitz continuous

with respect to x:

∀π ∈ A, d|b(x

1

, π) −b(x

2

, π)|+ |σ(x

1

, π) −σ(x

2

, π)|

≤C|x

1

−x

2

|,

(5)

• b(·, π), σ(·, π) satisfy a linear growth condition

with respect to x:

∀π ∈ A, |b(x, π)|+ |σ(x, π)| ≤C(1+ |x|).

(6)

To ensure the boundedness of the objective function

(2) we further assume:

• f(t,·,π),g(·) satisfy a quadratic growth condition:

∀t ∈ [0, T], ∀π ∈ A,

|g(x)|+ |f(t, x, π)| ≤C(1+ |x|

2

), ∀x ∈R

n

.

(7)

Both proofscan be found in (Pham, 2009) chapter 3.2.

For further proceedings we assume also that:

• b, σ, f, g are twice continuously differentiable

with respect to x and π:

∀t ∈ [0, T], (b, σ, f, g)(t, ·, ·) ∈C

1,2

(R

n

, A),

(8)

• f, g are uniformly concave with respect to x and

π.

To be explicit, we use a Markov Chain approxima-

tion in section 4.1. In order to calculate the Brown-

ian increments for this Markov chain approximation

in (36), σ needs to be invertible. This also means that

d = n. Otherwise, we can use Quantization methods

or Monte Carlo simulations to calculate expectations

instead. This would make no difference to the general

scheme.

3 THE STOCHASTIC MAXIMUM

PRINCIPLE

3.1 Derivation of the FBSDE

Following (Pham, 2009), let us suppose there exists

a unique solution v ∈ C

1,3

([0, T) ×R

n

) ∩C

0

([0, T] ×

R

n

) to (4) and an optimal control

˜

π

∗

∈ A described in

(3) with associated controlled diffusion

ˆ

X

t

satisfying

(1).

The adjoint equations can be derived in two basic

steps, namely 1) derive the HJB equation at the opti-

mal control with respect to x:

∂

x

∂

t

v(t,

ˆ

X

t

) + G(t,

ˆ

X

t

, π

∗

t

, ∇

x

v(t,

ˆ

X

t

), ∇

2

x

v(t,

ˆ

X

t

))

= 0,

(9)

where G : [0, T] ×R

n

×A ×R

n

×R

n×n

→ R is given

by:

G(t,x,π, p, M) := b(x, π)p+

1

2

tr(σσ

′

(x, π)M)

+ f(t, x, π).

(10)

ICORES 2012 - 1st International Conference on Operations Research and Enterprise Systems

84

2) Apply Ito’s formula to ∇

x

v(t,

ˆ

X

t

) and plug in the

above relation (9). After a few calculations, the ad-

joint equations come out as:

−d ∇

x

v(t,

ˆ

X

t

) =

∇

x

H(t,

ˆ

X

t

, π

∗

t

, ∇

x

v(t,

ˆ

X

t

), ∇

2

x

v(t,

ˆ

X

t

)σ(

ˆ

X

t

, π

∗

t

))dt

−∇

2

x

v(t,

ˆ

X

t

)σ(

ˆ

X

t

, π

∗

t

)dW

t

,

∇

x

v(T,

ˆ

X

T

) = ∇

x

g(

ˆ

X

t

),

where the so called Hamiltonian H : [0, T] ×R

n

×A×

R

n

×R

n×d

→ R is defined by:

H(t, x, π, y, z) := b(x, π)y+ tr[σ

′

(x, π)z] + f(t, x, π).

(11)

Furthermore, since G is continuously differen-

tiable with respect to π, we get:

0 = ∂

π

G(t,

ˆ

X

t

, π

∗

t

, ∇

x

v(t,

ˆ

X

t

), ∇

2

x

v(t,

ˆ

X

t

))

= ∂

π

H(t,

ˆ

X

t

, π

∗

t

, ∇

x

v(t,

ˆ

X

t

), ∇

2

x

v(t,

ˆ

X

t

)σ(x, π)).

Assuming concavity of H with respect to π, H must

attain a maximum at π

∗

t

.

Let us summarize the above results. Under the

above assumptions and H being concave with respect

to π, the triple:

(

ˆ

X

t

,

ˆ

Y

t

,

ˆ

Z

t

) :=

ˆ

X

t

, ∇

x

v(t,

ˆ

X

t

), ∇

2

x

v(t,

ˆ

X

t

)σ(

ˆ

X

t

, π

∗

t

)

is the unique solution to the coupled FBSDE system:

X

t

= x+

R

t

0

b(X

s

, π

∗

s

)ds+

R

t

0

σ(X

s

, π

∗

s

)dW

s

,

Y

t

= ∇

x

g(X

T

) +

R

T

t

∇

x

H(s, X

s

, π

∗

s

,Y

s

, Z

s

)ds

−

R

T

t

Z

s

dW

s

,

(12)

such that the following optimality condition holds:

π

∗

t

= argmax

π

t

∈A

H(t,

ˆ

X

t

, π

t

,

ˆ

Y

t

,

ˆ

Z

t

). (13)

3.2 Verification Theorem

Theorem 1. Suppose that there exists a unique so-

lution v ∈ C

1,3

([0, T] ×R

n

, R) of the value function

(4). Let (

ˆ

X,

ˆ

Y

t

,

ˆ

Z

t

) and the control

˜

π

∗

:= {π

∗

t

}

t∈[0,T]

be

associated solutions to the FBSDE system (12) such

that the optimality condition (13) holds. Additionally

assume that g(·) and H(t, ˙,·,

ˆ

Y

t

,

ˆ

Z

t

) are uniformly con-

cave in (x, π). Then:

•

˜

π

∗

is the optimal control of the stochastic control

problem (4) and

ˆ

X

t

is the solution of the associated

controlled state process (1),

• (

ˆ

Y

t

,

ˆ

Z

t

) =

∇

x

v(t,

ˆ

X

t

), ∇

2

x

v(t,

ˆ

X

t

)σ(

ˆ

X

t

, π

∗

t

)

Proof. The proof of the first statement is given in

(Pham, 2009) Theorem 6.5.4.

For the second step we define a function u(t,

ˆ

X

t

)

via its first derivative ∇

x

u(t,

ˆ

X

t

) :=

ˆ

Y

t

and its terminal

value u(T,

ˆ

X

T

) := g(

ˆ

X

T

) and apply Ito’s formula to

∇

x

u. Comparing the diffusion term with the backward

SDE for

ˆ

Y

t

we get

ˆ

Z

t

= ∇

2

xx

u(t,

ˆ

X

t

)σ(

ˆ

X

t

, π

∗

t

). Compar-

ing the drift terms we get a third order PDE which

is exactly the derivative of the HJB equation with re-

spect to x. Since the solution of this PDE is unique,

the verification is completed by using the verification

theorem for the HJB equation.

The concavity of H is an important condition for

the connection of the optimal control problem and the

optimality condition for the FBSDE. This condition

mainly specifies the problem class for applications.

Recall that we already assumed f, g to be concave in

section 2.1.

4 A NUMERICAL SCHEME FOR

SOLVING THE FBSDE

Let us state the complete (coupled) FBSDE problem

one more time:

X

t

= X

0

+

R

t

0

b(X

s

, π

∗

s

)ds+

R

t

0

σ(X

s

, π

∗

s

)dW

s

,

Y

t

= Y

T

+

R

T

t

∇

x

H(s, X

s

, π

∗

s

,Y

s

, Z

s

)ds−

R

T

t

Z

s

dW

s

,

π

∗

t

= argmax

π

t

∈A

H(t, X

t

, π

t

,Y

t

, Z

t

),

(14)

where X

0

= x and Y

T

= ∇

x

g(X

T

).

4.1 The Discrete Problem

Following (Kushner and Dupuis, 1992), let us define

a fixed, scalar approximation parameter h > 0. In the

following, the superscript h denotes the dependency

on this approximation parameter. Let ∆t

h

k

> 0, for k =

0, ..., N −1, N < ∞, discretize the time interval [0, T]

by defining:

t

0

:= 0, t

1

:= ∆t

h

0

, t

k

:=

k−1

∑

i=0

∆t

h

i

, t

N

:= T.

Suppose that ∆t

h

k

→ 0, as h →0. Let:

C

k

:=

(ξ

j

)

j∈I

k

, I

k

⊂ N

⊂ R

n

, ∀k = 0..N, (15)

be a spatial grid satisfying C

j

⊂ C

i

, for all

j < i. Furthermore, we denote the set of ad-

missible, piecewise constant controls by A

h

=

{

˜

π ∈ A |π

t

is constant over [t

k

,t

k+1

), ∀k ≤N −1}.

To calculate the propagation of the state process

from time t

k

to t

k+1

, we choose the Markov chain

approximation method here. As mentioned above,

we can use different methods like Quantization. The

Markov chain is defined by its transition probabilities:

∀k = 0, ..., N −1, ∀ξ

j

∈ C

k

, ∀ξ

l

∈ C

k+1

,

P(ξ

j

, ξ

l

|π

k

(ξ

j

)) = p

jl

k

(π

k

(ξ

j

)).

(16)

A FORWARD-BACKWARD ALGORITHM FOR STOCHASTIC CONTROL PROBLEMS - Using the Stochastic

Maximum Principle as an Alternative to Dynamic Programming

85

We denote ∆ξ

k

= ξ

k+1

−ξ

k

. The discrete Markov

chain approximation ξ

k

converges to the real state

process (1) as h → 0, if the following local consis-

tency conditions hold:

E

k

∆ξ

k

= b(ξ

k

, π

k

)∆t

h

k

(ξ

k

, π

k

) + o(∆t

h

k

(ξ

k

, π

k

)),

Var

k

∆ξ

h

k

= [σσ

′

](ξ

k

, π

k

)∆t

h

k

(ξ

k

, π

k

) + o(∆t

h

k

(ξ

k

, π

k

)),

sup

k,ω

|∆ξ

k

| → 0, as h → 0,

(17)

where E

k

is the conditioned expectation given all in-

formation up to time k and Var

k

is the variance accord-

ingly. For methods to derive proper transition proba-

bilities see (Kushner and Dupuis, 1992).

For any control

˜

π

h

∈ A

h

, we define the following

approximation of the objective function (2):

J

h

(t

k

, x,

˜

π

h

) =

E

˜

π

h

k

∑

N−1

i=k

f(t

i

, ξ

i

, π

h

i

)∆t

h

i

+ g(ξ

N

)

ξ

k

= x

,

(18)

and the approximation of the value function (4) by:

V

h

k

(x) = max

˜

π

h

∈A

h

J

h

(t

k

, x,

˜

π

h

). (19)

4.2 A Controlled Forward-Backward

Algorithm

Starting from final time T going backwards, let us

suppose we have already calculated the approxima-

tions Y

h

k+1

(·), Z

h

k+1

(·) for (41). Now, let us use these

functions as natural predictors for the still unknown

Y

h

k

(·) and Z

h

k

(·) respectively. Then ∀ξ

j

∈ C

k

we cal-

culate:

π

∗

t

k

(ξ

j

) = argmax

π∈A

H(t

k

, ξ

j

, π,Y

h

k+1

(ξ

j

), Z

h

k+1

(ξ

j

)).

(20)

For notational simplicity we denote π

∗

t

k

(ξ

j

) by π

j∗

k

. If

we think of the control π

∗

k

in (41) as being a function

of t, X

t

,Y

t

, Z

t

:

π

∗

k

(t, X

t

,Y

t

, Z

t

) = argmax

π∈A

H(t

k

, X

t

, π,Y

t

, Z

t

), (21)

we can replace the control variable by this function in

the FBSDE system (41) and receive a fully coupled

FBSDE system without control.

To solve the coupled FBSDE system we make

use of existing numerical schemes. In particular, we

choose the algorithm for coupled FBSDE systems

proposed by (Delarue and Menozzi, 2006) using the

pre-calculated controls π

j∗

k

(20). The algorithm then

reads as follows:

V

h

k

(ξ

j

) = f(t

k

, ξ

j

, π

j∗

k

)∆t

h

k

+

∑

ξ

l

∈C

k+1

p

jl

k

(π

j∗

k

)V

h

k+1

(ξ

l

),

(22)

Y

h

k

(ξ

j

) = ∇

x

H(t

k

, ξ

j

, π

j∗

k

,Y

h

k+1

(ξ

j

), Z

h

k+1

(ξ

j

))∆t

h

k

+

∑

ξ

l

∈C

k+1

p

jl

k

(π

j∗

k

)Y

h

k+1

(ξ

l

),

(23)

Z

h

k

(ξ

j

) =

1

∆t

h

k

∑

ξ

l

∈C

k+1

p

jl

k

(π

j∗

k

)Y

h

k+1

(ξ

l

)∆W

jl

k

, (24)

where ∆W

jl

k

is calculated via the Euler relationship:

∆W

jl

k

= σ

−1

(ξ

j

, π

j∗

k

)(ξ

l

−ξ

j

−b(ξ

j

, π

j∗

k

)∆t

h

k

). (25)

The main contribution in our paper is the explicit

pre-calculation of π

∗

via (20). This is the essential

step in our scheme to solve an optimal control prob-

lem through a FBSDE representation. The signifi-

cance is that the optimization is performed externally

from the backward calculations for V

t

,Y

t

, Z

t

in (22),

(23), (24) and does not include the calculation of ex-

pectations. This will be outlined more precisely in the

following sections.

4.3 Optimization

At first appearance, the difference of the above

method to dynamic programming is that in the for-

mer method the optimization does not have to be per-

formed over an expectation operator. Instead, opti-

mization is performed over a known explicit function

in (20) where H is given by:

b(ξ

j

, π)Y

j

k+1

+ tr[σ

′

(ξ

j

, π)Z

j

k+1

] + f(t

k

, ξ

j

, π), (26)

where Y

j

k+1

:= Y

h

k+1

(ξ

j

). To be explicit, we consider

the Newton method in a line search algorithm, see

(Nocedal and Wright, 2006) for details. For fixed

points in space and time k, j we guess a starting point

π

0

∈ A and perform the following iteration:

π

i+1

= π

i

+ p

i

, for i = 1, .., m. (27)

A careful reader might notice that the step length is

1 here, which is enough in Newton’s method to hold

the Wolf conditions. In the exact Newton method, the

search directions p

i

∈R

r

are calculated by solving the

linear system:

∇

2

π

H(π

i

)p

i

= ∇

π

H(π

i

). (28)

Since H is smooth enough and the Hessian ∇

2

π

H(π

i

) is

positive definite - see above assumptions - the method

converges to a local minimum and the rate of conver-

gence of {π

i

} is quadratic. For a proof see (Nocedal

and Wright, 2006), Theorem 3.2 and Theorem 3.5.

In detail, to solve the optimization in (20) for one

point (t

k

, ξ

j

) we denote:

H(π) := −H(t

k

, ξ

j

, π,Y

h

k+1

(ξ

j

), Z

h

k+1

(ξ

j

)) =

−b(ξ

j

, π)Y

j

k+1

−tr[σ

′

(ξ

j

, π)Z

j

k+1

] − f(t

k

, ξ

j

, π),

(29)

and calculate:

H

π

= −b

π

Y

h

k+1

−

∑

i, j

σ

ij

π

Z

ij

k+1

− f

π

,

ICORES 2012 - 1st International Conference on Operations Research and Enterprise Systems

86

H

ππ

= −b

ππ

Y

h

k+1

−

∑

i, j

σ

ij

ππ

Z

ij

k+1

− f

ππ

,

where we denote the gradients for all functions g =

b, σ, f, F by g

π

= ∇

π

g(π) and the Hessians by g

ππ

accordingly. Note that b

π

, b

ππ

, σ

ij

π

, σ

ij

ππ

, f

π

, f

ππ

are

known continuous functions, given by the problem

definition. Then we solve the system:

H

ππ

(π

i

)p

i

= H

π

(π

i

),

and repeat this procedure until the error is smaller

than a certain specified ε

h

. If b, σ, f are too complex

to derive the first or second derivatives by hand, one

can use methods of ’automatic differentiation’ to cal-

culate them. An introduction into these methods can

be found for example in (Nocedal and Wright, 2006).

4.4 Full Algorithm

Recall that the the space grid at time point k is

indexed by I

k

in (15) and G is defined by (10).

Therefore, the full algorithm reads as follows:

∀j ∈ I

N

,

π

j∗

N

= argmax

π∈A

G(T, ξ

j

, π, ∇

x

g(ξ

j

), ∇

2

xx

g(ξ

j

)), (30)

V

h

N

(ξ

j

) = g(ξ

j

), Y

h

N

= ∇

x

g(ξ

j

),

Z

h

N

= ∇

2

g(ξ

j

)σ(ξ

j

, π

j∗

N

).

(31)

∀k = N −1..0,

∀j ∈ I

k

,

set π

j0

k

= π

j∗

k−1

. For i = 1..i

max

solve:

H

ππ

(π

ji

k

)p

i

= H

π

(π

ji

k

),

π

j,i+1

k

= π

ji

k

+ p

i

,

(32)

until ||p

i

|| < cε

h

. Set π

j∗

k

= π

j,i+1

k

.

V

h

k

(ξ

j

) = f(t

k

, ξ

j

, π

j∗

k

)∆t

h

k

+

∑

ξ

l

∈C

k+1

p

jl

k

(π

j∗

k

)V

h

k+1

(ξ

l

),

(33)

Y

h

k

(ξ

j

) = ∇

x

H(t

k

, ξ

j

, π

j∗

k

,Y

h

k+1

(ξ

j

), Z

h

k+1

(ξ

j

))∆t

h

k

+

∑

ξ

l

∈C

k+1

p

jl

k

(π

j∗

k

)Y

h

k+1

(ξ

l

),

(34)

Z

h

k

(ξ

j

) =

1

∆t

h

k

∑

ξ

l

∈C

k+1

p

jl

k

(π

j∗

k

)Y

h

k+1

(ξ

l

)∆W

jl

k

, (35)

where ∆W

jl

k

is calculated via the Euler relationship:

∆W

jl

k

= σ

−1

(ξ

j

, π

j∗

k

)(ξ

l

−ξ

j

−b(ξ

j

, π

j∗

k

)∆t

h

k

). (36)

4.5 Known Convergence Results

For a specific subclass of stochastic control problems,

the convergence of the proposed FBSDE scheme can

be rigorously proved. Suppose that only the drift is

controlled, i.e. σ(x, ·) = σ(x). We also drop the time-

dependence in the coefficients. Furthermore, suppose

that

• σσ

′

is positive definite,

• ∇

x

H(x, π

∗

(x, y, z), y, z) and ∇

x

g(x) are Lipschitz

continuous in x,

• ∇

x

g ∈ C

2+α

(R

r

), for α > 0, and its norm in

bounded.

Note that H = by+ tr[σ

′

z] + f, is linear and Lipschitz

continuous in y, z by definition.

Also remember that we have written the optimal

control variable π

∗

as a function of the state variables

x, y, z. Then, according to (Delarue and Menozzi,

2006), the numerical FBSDE scheme converges. We

remark that a general proof of convergence for con-

trolled diffusion processes, i.e. σ(x, π), would be

analogous to proving the existence and uniqueness of

a fully nonlinear PDE. Such a proof is beyond the

scope of this paper.

5 APPLICATIONS

5.1 An Investment-consumption Model

As an example, let us consider an investment-

consumption model with convex transaction costs.

Convex transaction costs preserve the problem from

the usual bang-bang control, see (Davis and Norman,

1990). It is a reasonable assumption in certain mar-

kets, e.g. scarce commodities.

Let A

t

∈ R denote the portfolio owner’s mone-

tary amount of assets and let α

t

, β

t

> 0 denote his in-

vestment and deinvestment rate at time t respectively.

Let the convex functions f

β

, f

α

∈C

2

(R, R) determine

the transaction costs, respectively. Furthermore, let

B

t

∈ R denote the portfolio owner’s bank account,

which pays a deterministic interest rate r ≥ 0. Let

the dynamics of the states be given by:

dA

t

= (µA

t

+ α

t

−β

t

)dt + σA

t

dW

t

, A

0

= a

0

,

dB

t

= (rB

t

−c

t

−α

t

+ β

t

)dt

−( f

α

(α

t

) + f

β

(β

t

))dt, B

0

= b

0

,

(37)

where µ, σ > 0. W

t

denotes a standard Brownian mo-

tion and c

t

≥ 0 denotes the portfolio owner’s con-

sumption at time t.

The goal of the risk averse portfolio owner is to

A FORWARD-BACKWARD ALGORITHM FOR STOCHASTIC CONTROL PROBLEMS - Using the Stochastic

Maximum Principle as an Alternative to Dynamic Programming

87

maximize his expected, concave utility u ∈C

2

(R, R)

from consumption over a given time horizon T:

J(t, a

0

, b

0

, α, β, c) =

E

0

h

R

T

0

e

−δt

u(c

t

)dt + e

−δT

u(A

T

+ B

T

)

a

0

, b

0

i

,

(38)

v(t, a, b) = max

(α,β,c)∈A

J(, a, b, α, β, c), (39)

where A is the set of all F

t

-measurable control strate-

gies, δ ≥ 0 denotes the owner’s impatience to con-

sume and E

0

denotes the expectation operator with

information set at time zero.

Using this problem setup, the Hamiltonian

H(t, x, y, z, α

t

, β

t

, c

t

) in (11) takes the following form:

H = [α

t

−β

t

+ µA

t

]y

A

+

+

rB

t

−c

t

−α

t

+ β

t

−( f

α

(α

t

) + f

β

(β

t

))

y

B

+(σA

t

)z

AA

+ e

−δt

u(c

t

).

(40)

For the example calculations below, we choose log-

utility and quadratic transaction costs:

u(x) = ln(x),

f

α

(a) = ca

2

, f

β

(b) = cb

2

, for c > 0.

Then, the adjoint equations become:

−dY

A

t

=

µY

A

t

+ σZ

AA

t

dt −Z

AA

t

dW

t

, Y

A

T

=

e

−δT

A

T

+B

T

−dY

B

t

= rY

B

t

dt, Y

B

T

=

e

−δT

A

T

+B

T

.

(41)

There are similar problems that have a strictly con-

cave Hamiltonian with respect to the controls. One

example is when the trading activities α, β influence

the asset drift µ(α, β), which is called feedback con-

trol.

5.2 Numerical Results

To produce the results below, we implement the nu-

merical algorithm with Matlab using parallel process-

ing on an eight core machine.

We implemented the forward-backward (FB) al-

gorithm of section 4.4 in two ways: using a

Markov chain approximation and using a Quantiza-

tion method. Since the latter method attained bet-

ter results, we present the results of this method

here. Therefore, let ∆W

i

, i = 1, ..., L denote the pre-

calculated Brownian increments and let p

i

denote

their probabilities. For simplicity, we used a log-

scaled, time-constant grid C. Then, for a fixed time

point t

k

, we calculated for each grid point (A

j

k

, B

j

k

) ∈C

its propagation A

j,i

k+1

= A

j

k

+ ∆A

j,i

t

, for each i = 1...L,

where:

∆A

i

t

= (µA

t

+ α

t

−β

t

)∆t + σA

t

p

i

√

∆t∆W

i

. (42)

In order to evaluate the function V,Y

A

,Y

B

, Z

AA

at

the points (A

j,i

k+1

, B

j

k+1

) we used a linear interpola-

tion/extrapolation.

In the Figures below, we used M

2

= |I| = 100

2

space points, N = 100 time steps, L = 20 quantiza-

tion points, C ∈ [0.2, 2.2] × [0.2, 2.2] as space grid

and [t

0

, T] = [0, 2] as time interval. Moreover, we set

µ = 5%, r = 3%, δ = 2%, σ = 0.2 and c = 0.1%.

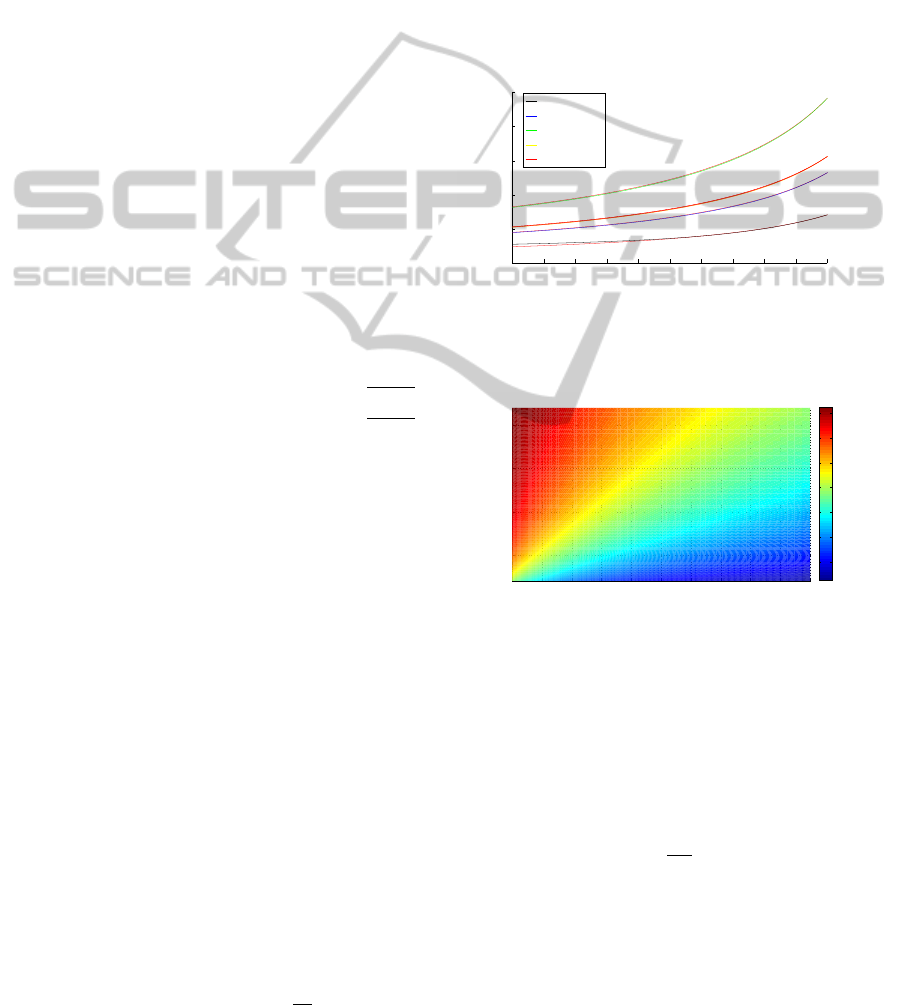

Figure 1 shows the consumption c

t

for five differ-

ent space points (A, B) over time. Figure 2 shows the

surface of the combined control α

∗

−β

∗

at time step

k = 98. The average calculation time for one time step

was 2.6 seconds.

0 0.2 0.4 0.6 0.8 1 1.2 1.4 1.6 1.8 2

0

0.5

1

1.5

2

2.5

time

consumption

(A,B)= (0.35,0.35)

(A,B)= (0.66,0.66)

(A,B)= (1.2,1.2)

(A,B)= (1.2,0.35)

(A,B)= (0.35,1.2)

Figure 1: Optimal consumption c

∗

t

for five different space

points, using the FB algorithm. Plots of the optimal con-

sumption of the original Merton problem are added in red

color.

0.2 0.4 0.6 0.8 1 1.2 1.4 1.6 1.8 2 2.2

0.5

1

1.5

2

A

B

−0.3

−0.2

−0.1

0

0.1

0.2

0.3

Figure 2: Optimal control α

∗

−β

∗

at time t

k

= 1.96 using

the FB algorithm.

The consumption in this problem is close to the

consumption of the original Merton problem without

transaction costs. A slight irregularity can be seen

for (A, B) = (0.35, 0.35) for small t. This shows the

truncation error that propagates inside the space grid

while going backward in time. The selected point is

affected first since it is close to the lower space grid

boundary (0.2,0.2). Since

µ−r

σ

2

= 0.5, the optimal con-

trols α

∗

, β

∗

should be zero whenever A = B, as in the

original Merton problem.

To compare the results with the dynamic pro-

gramming (DP) approach, we used the Matlab op-

timization toolbox. We obtained the best results

with the provided Sequential Quadratic Program-

ming (SQP) method, which is based on a line search

ICORES 2012 - 1st International Conference on Operations Research and Enterprise Systems

88

quasi-Newton method. Details can be found at

http://www.mathworks.com/help/toolbox/optim/. We

needed to set L = 40 and used a spline interpolation

method to get reasonable results at all.

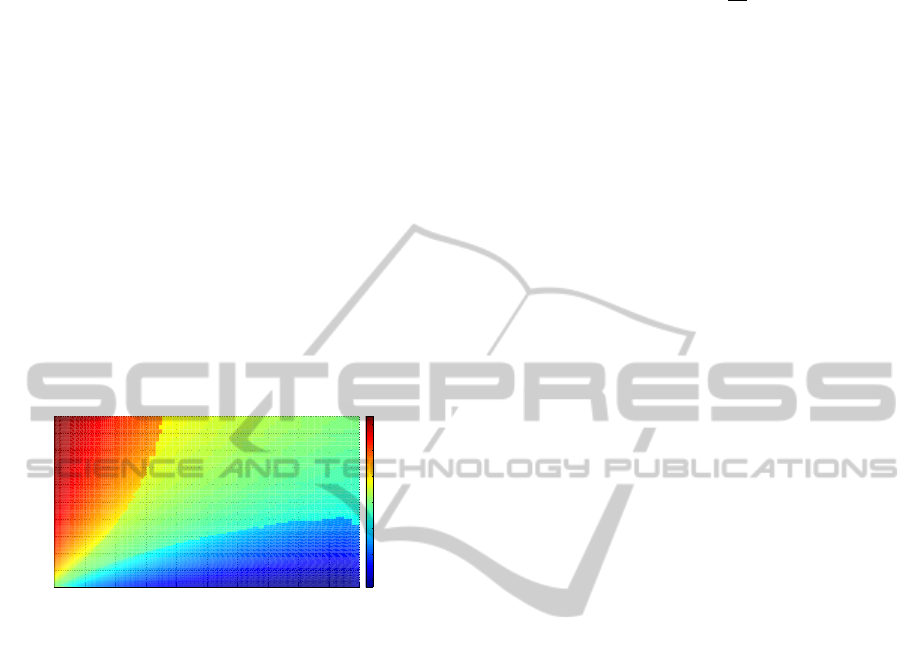

Figure 2 show the surface of the combined, opti-

mal control α

∗

−β

∗

at time step k = 98. The calcula-

tion time for one time step was 450 seconds.

In this example, our FB algorithm is 170 times

faster than the DP method. This is due to 1) the

smaller amount of function evaluations and 2) the dif-

ferent interpolation method needed.

Moreover, the α

∗

−β

∗

surface of the FB method

in Figure 2 is smooth, while the surface of the DP

method in Figure 3 already has become rough at time

step k = 98, indicating instability. One reason may be

that in the DP method, the optimization is performed

over the highly nonlinear value function V. In our

FB algorithm, the optimization step depends on the

functions Y

t

and Z

t

only.

0.2 0.4 0.6 0.8 1 1.2 1.4 1.6 1.8 2 2.2

0.2

0.4

0.6

0.8

1

1.2

1.4

1.6

1.8

2

2.2

A

B

−0.3

−0.2

−0.1

0

0.1

0.2

0.3

Figure 3: Optimal control α

∗

−β

∗

at time t

k

= 1.96 using

the DP method.

5.3 Comparison of Computational Cost

We briefly compare our FB algorithm with the stan-

dard dynamic programming approach. Let M

n

= |I

l

|;

that is, M is the number of grid points in each dimen-

sion of space where we have n dimensions. Also,

let L be the number of calculated transition proba-

bilities (or, alternatively, the number of quantization

points/simulations if we use a Quantization/Monte

Carlo method). N is the number of time-steps and

let m be the number of iterations for the Newton-

Raphson method to reach a given level of accuracy

ε

h

. Assuming that for a given level of accuracy,

the same time grid and space grid may be used for

both algorithms, the computational cost for the dy-

namic programming approach is NM

n

Lm(1 + 2r

2

)

while the computational cost for the FB algorithm is

NM

n

[L(1+ n+ nd) + mr]. Assuming a large number

of simulations L are needed for each evaluation, the

FB algorithm is superior if:

2mr

2

> n(d + 1) + 1. (43)

It is clear that the FB algorithm has a significantly

lower computational cost if a nontrivialnumber of op-

timization iterations are required. For example, in one

dimension the FB algorithm has

3

2m

the computational

cost of the dynamic programming approach. The ad-

vantage of the FB algorithm is that we do not need

to optimize over the entire value function, which re-

quires one to recalculate the expectation in the value

function for at least m times. This is very computa-

tionally expensive. Instead, one only has to optimize

the Hamiltonian, which is a much simpler procedure.

6 CONCLUSIONS

We have proposed a complete numerical algorithm to

solve optimal control problems through the associated

FBSDE system. By complete we mean that the algo-

rithm explicitly includes the optimization step. Our

numerical approach is an alternative to standard dy-

namic programming methods. A comparison of com-

putational cost between the dynamic programming

method and the FBSDE method illustrate the advan-

tages of the FBSDE approach.

We included results of a numerical example that

commonly appears in finance and economics. These

results confirm the advantage in accuracy and compu-

tational efficiency of the FB algorithm compared to

the dynamic programming method for certain prob-

lem classes.

A next step would be to analyze the convergence

speed and the convergenceerror in theory and practice

in detail.

REFERENCES

Davis, M. and Norman, A. (1990). Portfolio selection with

transaction costs. In Mathematics of Operation Re-

search, Vol. 15, No. 4. The lnstitute of Management

Sciences/Operations Research Society of America.

Delarue, F. and Menozzi, S. (2006). A forward-backward

stochastic algorithm for quasi-linear pdes. In The An-

nals of Applied Probability, Vol. 16, No. 1, 140184.

Universite Paris.

Kushner, H. and Dupuis, P. (1992). Numerical Methods

for Stochastic Control Problems in Continuous Time.

Springer, London, 1st edition.

Nocedal, J. and Wright, S. (2006). Numerical Optimization.

Springer Series in Operation Research and Financial

Engineering, London, 2nd edition.

Peng, S. (1990). A general stochastic maximum principle

for optimal control problems. In Journal of Control

and Optimization, Vol. 28, No. 4, pp.966-979. SIAM.

Pham, H. (2009). Continuous-time stochastic control and

optimization with financial applications. In Stochas-

tic Modeling and Applied Probability 61. Springer-

Verlag Berlin Heidelberg.

A FORWARD-BACKWARD ALGORITHM FOR STOCHASTIC CONTROL PROBLEMS - Using the Stochastic

Maximum Principle as an Alternative to Dynamic Programming

89