Comparison of Neural Networks for Prediction of Sleep Apnea

Yashar Maali and Adel Al-Jumaily

University of Technology, Sydney (UTS), Faculty of Engineering and IT, Ultimo, Australia

Keywords: Sleep Apnea, Neural Networks, Prediction.

Abstract: Sleep apnea (SA) is the most important and common component of sleep disorders, with several short-term and long-term side effects on health. There are several studies on automated SA detection, but relatively little work has been done on SA prediction. This paper discusses the application of artificial neural networks (ANNs) to predict sleep apnea. Three types of neural networks were investigated: Elman, cascade-forward and feed-forward back propagation. We assessed the performance of the models using the Receiver Operating Characteristic (ROC) curve, particularly the area under the ROC curve (AUC), and statistically compared the cross-validated estimates of the AUC of the different models. Based on the obtained results, the cascade-forward model generally performs best, with an average AUC of around 80%.

1 INTRODUCTION

Sleep Apnea (SA) is one of the most common types of sleep disorders, with around 3% prevalence in industrialized countries (Young et al., 1993). SA is characterized by a repeated and temporary cessation or reduction of breathing during sleep (Guilleminault et al., 1978). Clinically, apnea is defined as the total or near-total absence of airflow. This reduction is considered significant once the amplitude of the breathing signal has declined by at least around 75% with respect to normal respiration for a period of 10 seconds or longer. A hypopnea is an event of lower intensity; it is defined as a reduction in baseline signal amplitude of around 30–50%, also lasting 10 seconds or longer in adults (Flemons et al., 1999). Sleep apnea can also be categorized into three types: obstructive, central and mixed. SA has several short-term and long-term side effects (Chokroverty et al., 2009). Short-term effects include impaired attention and concentration, reduced quality of life, increased absenteeism with reduced productivity, and an increased likelihood of accidents at work, at home or on the road. Long-term consequences of sleep deprivation include increased morbidity and mortality from automobile accidents, coronary artery disease, heart failure, high blood pressure, obesity, type 2 diabetes mellitus, stroke and memory impairment, as well as depression. The long-term consequences, however, remain an open question (Chokroverty, 2010).
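The amplitude-based definitions above can be made concrete with a small scoring sketch. It assumes a precomputed breathing-amplitude envelope sampled at `fs` Hz and a known normal-breathing `baseline`; the function name and parameters are hypothetical, and treating every reduction between 30% and 75% as a hypopnea is our simplification of the approximate ranges quoted above, not a clinical scoring rule.

```python
from typing import List, Tuple


def label_breathing_events(
    amplitude: List[float],
    baseline: float,
    fs: float,
    apnea_drop: float = 0.75,     # "at least around 75%" reduction
    hypopnea_drop: float = 0.30,  # lower bound of the "around 30-50%" range
    min_duration_s: float = 10.0,
) -> List[Tuple[str, int, int]]:
    """Return (label, start, end) sample-index runs scored as apnea or hypopnea.

    `amplitude` is assumed to be a precomputed breathing-amplitude envelope;
    any drop between `hypopnea_drop` and `apnea_drop` is treated as hypopnea
    (an assumption, since the text only gives approximate ranges).
    """
    min_len = int(round(min_duration_s * fs))

    def category(a: float) -> str:
        drop = 1.0 - a / baseline  # fractional reduction w.r.t. normal breathing
        if drop >= apnea_drop:
            return "apnea"
        if drop >= hypopnea_drop:
            return "hypopnea"
        return "normal"

    events = []
    start = 0
    while start < len(amplitude):
        label = category(amplitude[start])
        end = start
        # Extend the run while consecutive samples fall in the same category
        while end < len(amplitude) and category(amplitude[end]) == label:
            end += 1
        # Keep only abnormal runs that last at least min_duration_s
        if label != "normal" and end - start >= min_len:
            events.append((label, start, end))
        start = end
    return events
```

For example, with `fs=1.0` and `baseline=1.0`, a 12-sample run at 20% of baseline is returned as an apnea, while a 5-sample run at 10% of baseline is ignored because it is shorter than 10 seconds.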

Unfortunately, as many patients are asymptomatic, sleep apnea may go undiagnosed for years (Kryger et al., 1996; Ball et al., 1997). Usually it is the patient's spouse, roommates or family members who report the apnea periods alternating with arousals and accompanied by loud snoring (Stradling and Crosby, 1990; Hoffstein, 2000). Symptomatic patients with SA are usually assessed by sleep medicine specialists and diagnosed through an overnight sleep study in a sleep clinic. SA is diagnosed by manual analysis of a polysomnographic record, obtained with an integrated device that records the EEG, EMG, EOG, ECG and oxygen saturation (SpO2) (Penzel et al., 2002). The polysomnogram also contains recordings of airflow through the mouth and nose, the thoracic and abdominal effort signals (Kryger, 1992), and the position of the body during sleep. Conventional scoring of the polysomnographic recording is labour-intensive and time-consuming (Kirby et al., 1999; Sharma et al., 2004). Therefore, much effort has gone into developing systems that score the records automatically (Cabrero-Canosa et al., 2003; de Chazal et al., 2003; Cabrero-Canosa et al., 2004). In our previous works, several automated algorithms have been proposed for this purpose, such as a fuzzy rule-based system (Maali and Al-Jumaily, 2011), a genetic SVM (Maali and Al-Jumaily, 2011) and a PSO-SVM (Yashar and Adel, 2012).

Although prediction has been applied in many different areas, there are few studies on sleep apnea prediction. One of the earliest works was published by Dagum and Galper (Dagum and Galper, 1995), who developed a time-series prediction method based on belief network models and then applied it to sleep apnea. They used a multivariate data set containing 34,000 recordings, sampled at 2 Hz, of heart rate (HR), chest volume (CV), blood oxygen concentration (SaO2) and sleep state, taken from the 1991 time-series competition of the Santa Fe Institute. According to this study, predictions of the CV signal are more biased than those of HR and SaO2, because of the rapid and erratic oscillations of the CV series.

Maali Y. and Al-Jumaily A.

Comparison of Neural Networks for Prediction of Sleep Apnea.

DOI: 10.5220/0004701400600064

In Proceedings of the International Congress on Neurotechnology, Electronics and Informatics (NEUROTECHNIX-2013), pages 60-64

ISBN: 978-989-8565-80-8

Copyright © 2013 SCITEPRESS (Science and Technology Publications, Lda.)

Another pioneering work on sleep apnea prediction is the paper by Bock and Gough (Bock and Gough, 1998). This study used heart rate, respiration force and blood oxygen saturation (SaO2) signals sampled at 4.75 Hz, collected from a chronic apnea patient. They used the simple recurrent network (SRN) proposed by Elman (Elman, 1991). Each of the three time-series variables (heart rate, breathing and blood oxygenation) was used as an input for network training and testing, and each variable was fed to its own node in the input layer; the network had 18 nodes in the hidden layer.

One of the most recent papers in this area is the work of Waxman, Graupe and Carley in 2010 (Waxman et al., 2010). They predicted apnea 30 to 120 seconds in advance using the Large Memory Storage And Retrieval (LAMSTAR) neural network (Graupe and Kordylewski, 1998). LAMSTAR is a supervised neural network that can process large amounts of data and also provides detailed information about its decision-making process. The input signals for this algorithm are EEG, heart rate variability (HRV), nasal pressure, oronasal temperature, submental EMG and electrooculography (EOG). Notably, LAMSTAR can determine the most important input signal in the prediction process. In the preprocessing phase, the data were segmented into 30, 60, 90 and 120 second segments and normalized, and a separate LAMSTAR network was trained for each segment length. The results show that the best prediction is obtained for the next 30 seconds and that performance is lower for longer lead times; however, most predictions up to 60 seconds into the future are correct. Prediction of non-REM (NREM) events is also generally better than prediction of REM events. For example, for apnea prediction using 30-second segments and a 30-second lead time during NREM sleep, the sensitivity was 80.6 ± 5.6%, the specificity was 72.78 ± 6.6%, the positive predictive value (PPV) was 75.16 ± 3.6%, and the negative predictive value (NPV) was 79.4 ± 3.6%. REM apnea prediction demonstrated a sensitivity of 69.36 ± 10.5%, a specificity of 67.46 ± 10.9%, a PPV of 67.4 ± 5.6%, and an NPV of 68.8 ± 5.8%. The analyses also showed that the most important signal for predicting apnea in the next 30 seconds is the submental EMG, and that the RMS value of the first wavelet level is the most important feature, whereas for 60-second prediction the nasal pressure is the most important signal.
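The four figures of merit quoted for LAMSTAR follow directly from the counts of a confusion matrix. The sketch below (hypothetical function name; any counts passed to it in an example are made up) shows the standard definitions of sensitivity, specificity, PPV and NPV.

```python
def predictive_metrics(tp: int, fp: int, tn: int, fn: int) -> dict:
    """Sensitivity, specificity, PPV and NPV from confusion-matrix counts."""

    def ratio(num: int, den: int) -> float:
        # Undefined metrics (empty denominator) are reported as NaN
        return num / den if den else float("nan")

    return {
        "sensitivity": ratio(tp, tp + fn),  # fraction of apneas that were predicted
        "specificity": ratio(tn, tn + fp),  # fraction of non-apneas correctly rejected
        "ppv": ratio(tp, tp + fp),          # predicted apneas that actually occurred
        "npv": ratio(tn, tn + fn),          # predicted non-apneas that were correct
    }
```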

This paper discusses the development of supervised artificial neural networks, namely Elman, cascade-forward and feed-forward back-propagation networks, to predict sleep apnea. In the rest of this paper, the three NNs are first introduced. Then the issues related to network design and training, especially how to avoid overfitting, are addressed. The use of the AUC as the performance measure of the models, and the statistical comparison of the overall performance of the models by means of cross-validation, are outlined. The results and conclusions are presented at the end of the paper.

2 PRELIMINARIES

2.1 Elman Neural Networks

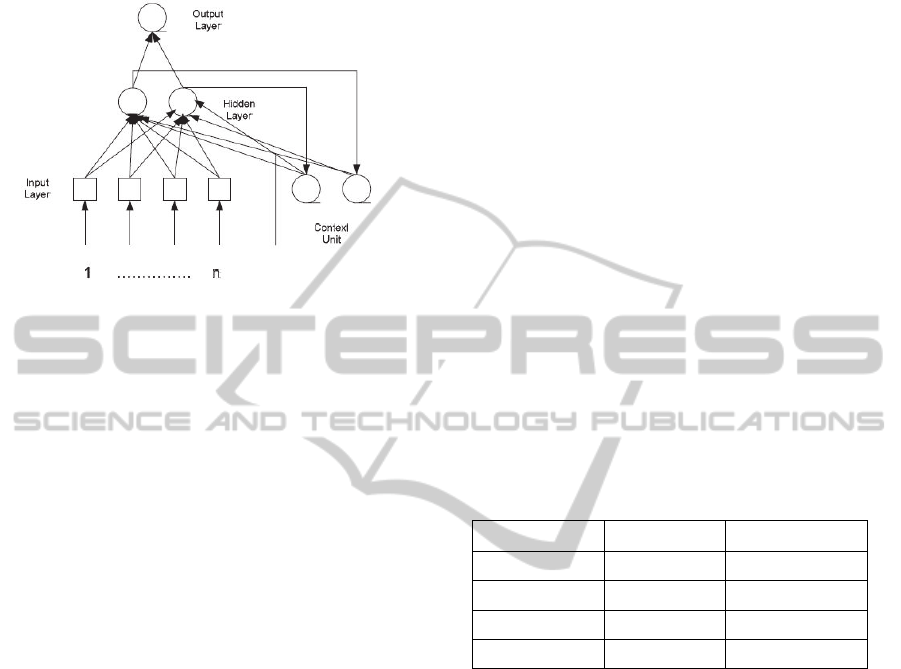

The Elman neural network is a globally feed-forward, locally recurrent network model proposed by Elman (Li et al., 2009). It uses a set of context nodes to store its internal state, which gives it dynamic characteristics that static neural networks, such as the back-propagation (BP) neural network and radial-basis function networks, lack. The structure of an Elman neural network is illustrated in Figure 1.

The Elman network consists of four layers: an input layer, a hidden layer, a context layer and an output layer, with adjustable weights connecting every two adjacent layers. In general, the Elman neural network can be considered a special type of feed-forward neural network with additional memory neurons and local feedback. The distinct 'local connections' of the context nodes make the network's output sensitive not only to the current input data but also to historical input data, which is useful in time-series prediction. The training algorithm for the Elman neural network is similar to the back-propagation learning algorithm, as both are based on the gradient descent principle. However, the role that the context weights and the initial context node outputs play in the error back-propagation procedure must be taken into account when deriving this learning algorithm. Due to its dynamical properties, the Elman neural network has found numerous applications in areas such as time-series prediction, system identification and adaptive control (Gao and Ovaska, 2002).

Figure 1: Structure of an Elman neural network model.
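To illustrate how the context layer gives the Elman network memory, here is a minimal forward pass in plain Python. It is a sketch under our own assumptions: untrained weights supplied by the caller, tanh hidden units, a logistic output unit and a zero initial context; training (back-propagation through the context weights) is not shown, and all names are hypothetical.

```python
import math
from typing import List, Sequence

Matrix = List[List[float]]


def matvec(w: Matrix, v: Sequence[float]) -> List[float]:
    """Multiply a weight matrix (list of rows) by a vector."""
    return [sum(wij * vj for wij, vj in zip(row, v)) for row in w]


def elman_forward(
    inputs: Sequence[Sequence[float]],
    w_in: Matrix,   # input -> hidden weights
    w_ctx: Matrix,  # context -> hidden weights
    b_h: Sequence[float],
    w_out: Matrix,  # hidden -> output weights
    b_out: Sequence[float],
) -> List[List[float]]:
    """Run an Elman network over a sequence and return one output per step."""
    context = [0.0] * len(b_h)  # initial context-node outputs
    outputs = []
    for x in inputs:
        # Hidden units see the current input and the previous hidden state
        pre = [a + c + b for a, c, b in zip(matvec(w_in, x), matvec(w_ctx, context), b_h)]
        hidden = [math.tanh(p) for p in pre]
        # Logistic output unit, e.g. a score for an upcoming apnea
        logits = [o + b for o, b in zip(matvec(w_out, hidden), b_out)]
        outputs.append([1.0 / (1.0 + math.exp(-z)) for z in logits])
        context = hidden  # context nodes copy the hidden layer for the next step
    return outputs
```

Feeding the sequence `[[1.0], [0.0]]` with a nonzero context weight gives a second output above 0.5 even though the second input is zero, because the first input is still held in the context nodes; setting `w_ctx` to zero removes that memory and the second output becomes exactly 0.5.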

2.2 Cascade-forward Neural Network Models

Cascade-forward models are similar to feed-forward networks, but include a weight connection from the input to each layer and from each layer to all successive layers. While two-layer feed-forward networks can potentially learn virtually any input-output relationship, feed-forward networks with more layers might learn complex relationships more quickly. For example, a three-layer cascade-forward network has connections from layer 1 to layer 2, layer 2 to layer 3, and layer 1 to layer 3. The three-layer network also has connections from the input to all three layers. These additional connections might improve the speed at which the network learns the desired relationship. The cascade-forward model is similar to the feed-forward back-propagation neural network in using the back-propagation algorithm to update its weights, but its main characteristic is that each layer of neurons is connected to all previous layers of neurons. In this sense it is a feed-forward network with more than one hidden layer. Multiple layers of neurons with nonlinear transfer functions allow the network to learn more complex nonlinear relationships between input and output vectors (Abdennour, 2006).
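The extra connections of a cascade-forward network are easiest to see in a forward pass: each layer is fed the concatenation of the network input and the outputs of all earlier layers. The sketch below is a plain-Python illustration with hypothetical names and hand-picked weights; it is not the implementation used in the experiments, and training by back-propagation is omitted.

```python
import math
from typing import Callable, List, Sequence, Tuple

# (weight rows, biases, activation) for one layer
Layer = Tuple[List[List[float]], List[float], Callable[[float], float]]


def cascade_forward(x: Sequence[float], layers: List[Layer]) -> List[float]:
    """Forward pass of a cascade-forward network.

    Each layer receives the network input *and* the outputs of every earlier
    layer, so layer k's weight rows have len(x) + (sizes of layers 0..k-1) entries.
    """
    seen = list(x)  # input followed by the outputs of all previous layers
    out: List[float] = []
    for weights, biases, act in layers:
        for row in weights:
            if len(row) != len(seen):
                raise ValueError(f"expected {len(seen)} weights per unit, got {len(row)}")
        out = [act(sum(w * s for w, s in zip(row, seen)) + b) for row, b in zip(weights, biases)]
        seen = seen + out  # later layers are connected to this layer as well
    return out


# Example: 2 inputs -> 1 tanh hidden unit -> 1 linear output unit.
# The output row has 3 weights: two direct input connections plus the hidden unit.
hidden: Layer = ([[0.5, -0.5]], [0.0], math.tanh)
output: Layer = ([[1.0, 0.0, 2.0]], [0.1], lambda v: v)
print(cascade_forward([1.0, 2.0], [hidden, output]))
```

In a plain feed-forward network the output row would have only one weight (for the hidden unit); the two extra input weights are exactly the cascade connections described above.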

3 PROPOSED APPROACH

In this paper, three input signals are used, namely airflow and the abdominal and thoracic movement signals, since our previous work found them to be the most important signals for SA studies (Maali and Al-Jumaily, 2012). In the present work, data were collected at Concord Hospital in Sydney from 5 patients whose events had been annotated by an expert. We extracted data segments of 30, 60, 90 and 120 seconds, and investigated lead times of 30, 60, 90 and 120 seconds. The AUC is used as the performance measure throughout this paper.
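The segment duration and lead time define how training samples are built. The text does not spell out the labelling rule, so the sketch below assumes non-overlapping input segments and labels a segment positive when an annotated apnea overlaps the interval of length `lead_s` that starts where the segment ends; event times are in seconds, and the function name is hypothetical.

```python
from typing import List, Sequence, Tuple


def make_prediction_windows(
    n_samples: int,
    fs: float,
    segment_s: float,
    lead_s: float,
    events_s: Sequence[Tuple[float, float]],
) -> List[Tuple[int, int, int]]:
    """Return (start, end, label) sample indices for each input segment.

    label is 1 if any annotated apnea (start_s, end_s) overlaps the
    prediction horizon [segment end, segment end + lead_s), else 0.
    """
    seg = int(round(segment_s * fs))
    lead = int(round(lead_s * fs))
    windows = []
    # Non-overlapping segments; stop early enough that the horizon fits in the record
    for start in range(0, n_samples - seg - lead + 1, seg):
        end = start + seg
        h0, h1 = end / fs, (end + lead) / fs  # horizon in seconds
        label = int(any(e0 < h1 and e1 > h0 for e0, e1 in events_s))
        windows.append((start, end, label))
    return windows
```

With 300 one-second samples, 30-second segments, a 30-second lead time and a single event from 70 s to 85 s, only the segment covering 30 to 60 s is labelled positive, since its horizon (60 to 90 s) contains the event.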

3.1 Feature Generation

Each signal from each segment was normalized by dividing it by its mean value, and a discrete wavelet transform was then applied. For each window, a variety of features were extracted from the nasal airflow, abdominal and thoracic movement signals. Features are generated from the wavelet packet coefficients and from the original signals: a Daubechies wavelet packet of order 4 with 7 levels is used, and different statistical measures are computed from the coefficients and from the original signal. These features form the inputs of the NNs. The full list of features is given in Table 1.
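The normalization and wavelet packet steps can be sketched as follows. To keep the example self-contained, it swaps in the Haar wavelet (db1) for the order-4 Daubechies wavelet used in this work; with PyWavelets installed, `pywt.WaveletPacket(x, 'db4', maxlevel=7)` would be the closer match. Function names are hypothetical, and we assume the statistics are computed on the terminal nodes of the tree, which the text does not state explicitly.

```python
import math
from typing import Dict, List, Sequence, Tuple


def normalize_by_mean(signal: Sequence[float]) -> List[float]:
    """Divide a segment by its mean value, as described in the text."""
    m = sum(signal) / len(signal)
    if m == 0:
        raise ValueError("segment mean is zero; mean normalization is undefined")
    return [s / m for s in signal]


def haar_split(x: Sequence[float]) -> Tuple[List[float], List[float]]:
    """One orthonormal Haar analysis step: (approximation, detail)."""
    x = list(x)
    if len(x) % 2:  # pad odd-length inputs by repeating the last sample
        x.append(x[-1])
    r = 1.0 / math.sqrt(2.0)
    approx = [(x[i] + x[i + 1]) * r for i in range(0, len(x), 2)]
    detail = [(x[i] - x[i + 1]) * r for i in range(0, len(x), 2)]
    return approx, detail


def wavelet_packet(signal: Sequence[float], levels: int) -> Dict[str, List[float]]:
    """Full wavelet packet tree: unlike the plain DWT, both the approximation
    and the detail branches are split again at every level. Returns the
    2**levels terminal nodes keyed by their path, e.g. 'ad' = approx, then detail."""
    nodes = {"": list(signal)}
    for _ in range(levels):
        nxt = {}
        for path, coeffs in nodes.items():
            a, d = haar_split(coeffs)
            nxt[path + "a"] = a
            nxt[path + "d"] = d
        nodes = nxt
    return nodes
```

Because the Haar step is orthonormal, the total energy of the terminal nodes equals that of the input (for even-length inputs), which is a quick sanity check on any replacement wavelet code.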

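Table 1: List of statistical features; x denotes the wavelet coefficients.

| Computed on x or abs(x) | Computed on x^2 |
|---|---|
| skewness(x) | skewness(x^2) |
| kurtosis(x) | kurtosis(x^2) |
| var(x) | var(x^2) |
| std(x) | std(x^2) |
| mad(x) | mad(x^2) |
| mean(abs(x)) | mean(x^2) |
| geomean(abs(x)) | geomean(x^2) |
| | log(mean(x^2)) |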

More details about these statistical measures are given in the Appendix.
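The 15 statistics in Table 1 can be computed from any coefficient vector x. In this sketch, skewness and kurtosis use biased (population) moments as in the Appendix formulas, var and std use the n-1 normalization of Python's `statistics` module (an assumption, since the text does not specify it), and the dictionary keys reuse the Table 1 feature names; the function names are hypothetical.

```python
import math
import statistics
from typing import Dict, Sequence


def _central_moment(x: Sequence[float], k: int) -> float:
    mu = sum(x) / len(x)
    return sum((v - mu) ** k for v in x) / len(x)


def skewness(x: Sequence[float]) -> float:
    m2 = _central_moment(x, 2)
    return _central_moment(x, 3) / m2 ** 1.5 if m2 > 0 else 0.0  # 0 for constant x


def kurtosis(x: Sequence[float]) -> float:
    m2 = _central_moment(x, 2)
    return _central_moment(x, 4) / m2 ** 2 if m2 > 0 else 0.0  # 0 for constant x


def mad(x: Sequence[float]) -> float:
    """Mean absolute deviation around the mean."""
    mu = sum(x) / len(x)
    return sum(abs(v - mu) for v in x) / len(x)


def geomean(x: Sequence[float]) -> float:
    # Intended for non-negative inputs; a single zero makes the product zero
    if any(v == 0 for v in x):
        return 0.0
    return math.exp(sum(math.log(v) for v in x) / len(x))


def table1_features(x: Sequence[float]) -> Dict[str, float]:
    """The 15 statistics of Table 1 for one coefficient vector x (len >= 2)."""
    x2 = [v * v for v in x]
    ax = [abs(v) for v in x]
    mean_x2 = sum(x2) / len(x2)
    return {
        "logmeanx^2": math.log(mean_x2) if mean_x2 > 0 else float("-inf"),
        "kurtosisx^2": kurtosis(x2),
        "geomeanabsx": geomean(ax),
        "stdx^2": statistics.stdev(x2),
        "varx^2": statistics.variance(x2),
        "madx": mad(x),
        "skewnessx^2": skewness(x2),
        "meanabsx": sum(ax) / len(ax),
        "meanx^2": mean_x2,
        "skewnessx": skewness(x),
        "kurtosisx": kurtosis(x),
        "varx": statistics.variance(x),
        "geomeanx^2": geomean(x2),
        "madx^2": mad(x2),
        "stdx": statistics.stdev(x),
    }
```

Applying `table1_features` to every node of the wavelet packet tree and to the normalized original signal, then concatenating the results, would give one input vector per segment.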

3.2 Early Stopping

Standard neural network architectures, such as the fully connected multilayer perceptron, are almost always prone to overfitting. While the network seems to get better and better (the error on the training set decreases), at some point during training it actually begins to get worse again (the error on unseen examples increases).

There are basically two ways to fight overfitting: reducing the number of dimensions of the parameter space or reducing the effective size of each dimension. The corresponding NN techniques for reducing the size of each parameter dimension are regularization, such as weight decay, and early stopping (Prechelt, 1998). Early stopping is widely used because it is simple to understand and implement and has been reported to be superior to regularization methods in many cases. It can be used either interactively, i.e. based on human judgment, or automatically, i.e. based on some formal stopping criterion. In this paper, an automatic stopping criterion based on the increase of the validation error is used.
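The stopping rule can be sketched as a patience criterion applied to a recorded validation-error curve: training stops once the validation error has failed to improve for `patience` consecutive epochs, and the weights from the best epoch are kept. The default patience of 6 and the function name are illustrative assumptions; Prechelt (1998) compares several alternative criteria of this kind.

```python
from typing import Sequence, Tuple


def early_stopping_epoch(val_errors: Sequence[float], patience: int = 6) -> Tuple[int, int]:
    """Return (best_epoch, stop_epoch), both 0-based.

    Training stops at the first epoch that is `patience` epochs past the best
    validation error seen so far; if that never happens, it runs to the end.
    """
    best_epoch, best_err = 0, val_errors[0]
    for epoch, err in enumerate(val_errors):
        if err < best_err:
            best_epoch, best_err = epoch, err  # new best: reset the patience window
        elif epoch - best_epoch >= patience:
            return best_epoch, epoch  # no improvement for `patience` epochs
    return best_epoch, len(val_errors) - 1
```

For the curve `[0.50, 0.40, 0.35, 0.36, 0.37, 0.38, 0.39]` with `patience=3`, the function returns `(2, 5)`: epoch 2 has the lowest validation error, and training stops three epochs later.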

4 RESULTS

The results for predicting apnea in the immediately following segment, with the segment duration varied between 30, 60, 90 and 120 seconds, were computed for each of the three NNs. For each experiment, 10-fold cross-validation was performed, and the mean and standard deviation of the resulting AUCs are shown in Tables 2, 3 and 4. An ANOVA test on these data shows that the average AUCs obtained with different segment durations differ significantly, and paired t-tests show that performance is generally best for 30-second segments and decreases as the segment duration increases. Performance also improves as the lead time increases.
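The AUC used in Tables 2 to 4 can be computed without building the ROC curve explicitly, because it equals the probability that a randomly chosen apnea segment receives a higher score than a randomly chosen non-apnea segment (the Mann-Whitney formulation). The sketch below uses that identity and then reports the mean and standard deviation over runs, in percent, as in the tables; the function names are hypothetical.

```python
from statistics import mean, stdev
from typing import Sequence, Tuple


def auc(scores: Sequence[float], labels: Sequence[int]) -> float:
    """ROC AUC as the probability that a random apnea segment scores higher
    than a random non-apnea segment (ties count one half)."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative sample")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


def summarize_runs(run_aucs: Sequence[float]) -> Tuple[float, float]:
    """Mean and sample standard deviation of per-run AUCs, in percent."""
    pct = [100.0 * a for a in run_aucs]
    return mean(pct), stdev(pct)
```

The pairwise loop costs O(n_pos × n_neg) comparisons, which is fine for a few hundred segments; a rank-based formula scales better.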

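Table 2: AUC (%) of apnea prediction by the cascade-forward model (mean ± standard deviation; rows: segment duration, columns: lead time).

| Segment (s) | Lead time 30 s | Lead time 60 s | Lead time 90 s | Lead time 120 s |
|---|---|---|---|---|
| 30 | 71.05 ± 2.42 | 75.29 ± 3.79 | 78.15 ± 5.75 | 82.22 ± 3.93 |
| 60 | 67.71 ± 1.93 | 72.55 ± 7.29 | 77.68 ± 5.92 | 79.66 ± 5.79 |
| 90 | 66.29 ± 4.43 | 71.85 ± 8.17 | 80.47 ± 4.54 | 81.05 ± 4.97 |
| 120 | 63.40 ± 1.54 | 73.44 ± 6.26 | 78.19 ± 7.72 | 77.52 ± 7.18 |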

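Table 3: AUC (%) of apnea prediction by the feed-forward model (mean ± standard deviation; rows: segment duration, columns: lead time).

| Segment (s) | Lead time 30 s | Lead time 60 s | Lead time 90 s | Lead time 120 s |
|---|---|---|---|---|
| 30 | 68.12 ± 2.43 | 74.88 ± 5.77 | 73.61 ± 5.76 | 82.31 ± 7.19 |
| 60 | 65.56 ± 2.72 | 72.90 ± 7.48 | 71.71 ± 6.23 | 80.45 ± 6.50 |
| 90 | 65.95 ± 4.43 | 69.31 ± 5.20 | 73.08 ± 8.67 | 72.90 ± 7.53 |
| 120 | 61.12 ± 1.47 | 67.12 ± 7.83 | 76.39 ± 8.38 | 79.72 ± 6.52 |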

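Table 4: AUC (%) of apnea prediction by the Elman model (mean ± standard deviation; rows: segment duration, columns: lead time).

| Segment (s) | Lead time 30 s | Lead time 60 s | Lead time 90 s | Lead time 120 s |
|---|---|---|---|---|
| 30 | 69.32 ± 2.24 | 74.88 ± 5.77 | 73.61 ± 5.76 | 78.31 ± 7.19 |
| 60 | 67.59 ± 2.53 | 77.64 ± 7.34 | 78.49 ± 4.81 | 79.16 ± 6.43 |
| 90 | 64.87 ± 1.68 | 77.02 ± 5.90 | 77.62 ± 6.85 | 79.99 ± 5.94 |
| 120 | 65.57 ± 2.28 | 72.88 ± 7.14 | 76.46 ± 8.31 | 79.83 ± 5.19 |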

An ANOVA test also shows that the performances of the three models are not the same. In general, the cascade-forward model gives better predictions, but not in all of the experiments, which suggests that each model may predict some types of samples better than the other models. Therefore, an ensemble of neural networks may be helpful and should be considered (Li et al., 2009).
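The model comparisons rely on an ANOVA test and paired t-tests over the per-run AUCs. The sketch below computes only the test statistics (one-way F and paired t) with their degrees of freedom; the exact ANOVA design used here is not stated, so a one-way layout with one group per model, and pairing runs that share the same data split, are our assumptions, and the function names are hypothetical. P-values additionally require the F and t distributions, which `scipy.stats.f_oneway` and `scipy.stats.ttest_rel` provide.

```python
import math
from statistics import mean, stdev
from typing import List, Sequence, Tuple


def one_way_anova_f(groups: List[Sequence[float]]) -> Tuple[float, int, int]:
    """F statistic and (between, within) degrees of freedom for k groups."""
    n_total = sum(len(g) for g in groups)
    grand = sum(sum(g) for g in groups) / n_total
    means = [mean(g) for g in groups]
    # Variation of group means around the grand mean vs. variation inside groups
    ss_between = sum(len(g) * (m - grand) ** 2 for g, m in zip(groups, means))
    ss_within = sum(sum((x - m) ** 2 for x in g) for g, m in zip(groups, means))
    df_between, df_within = len(groups) - 1, n_total - len(groups)
    return (ss_between / df_between) / (ss_within / df_within), df_between, df_within


def paired_t(a: Sequence[float], b: Sequence[float]) -> Tuple[float, int]:
    """Paired t statistic and degrees of freedom for matched runs a[i], b[i]."""
    d = [x - y for x, y in zip(a, b)]
    return mean(d) / (stdev(d) / math.sqrt(len(d))), len(d) - 1
```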

5 CONCLUSIONS

In this study, we present the first step of an ongoing investigation into the prediction of individual sleep apnea events with different artificial neural networks. Experimental results for the Elman, cascade-forward and feed-forward back-propagation neural networks show that, in general, the cascade-forward NN predicts sleep apnea events better, but this advantage does not hold for all samples; investigating an ensemble of these NNs is left for future work. This study also shows that increasing the lead time improves performance in most cases.

REFERENCES

Abdennour, A. (2006). "Evaluation of neural network architectures for MPEG-4 video traffic prediction." IEEE Transactions on Broadcasting 52(2): 184-192.

Ball, E. M., R. D. Simon, et al. (1997). "Diagnosis and treatment of sleep apnea within the community - The Walla Walla project." Archives of Internal Medicine 157(4): 419-424.

Bock, J. and D. A. Gough (1998). "Toward prediction of physiological state signals in sleep apnea." IEEE Transactions on Biomedical Engineering 45(11): 1332-1341.

Cabrero-Canosa, M., M. Castro-Pereiro, et al. (2003). "An intelligent system for the detection and interpretation of sleep apneas." Expert Systems with Applications 24(4): 335-349.

Cabrero-Canosa, M., E. Hernandez-Pereira, et al. (2004). "Intelligent diagnosis of sleep apnea syndrome." IEEE Engineering in Medicine and Biology Magazine 23(2): 72-81.

Chokroverty, S. (2010). "Overview of sleep & sleep disorders." Indian Journal of Medical Research 131(2): 126-140.

Chokroverty, S., C. Sudhansu, et al. (2009). Sleep deprivation and sleepiness. Sleep Disorders Medicine (Third Edition). Philadelphia, W.B. Saunders: 22-28.

Dagum, P. and A. Galper (1995). "Time-series prediction using belief network models." International Journal of Human-Computer Studies 42(6): 617-632.

de Chazal, P., C. Heneghan, et al. (2003). "Automated processing of the single-lead electrocardiogram for the detection of obstructive sleep apnoea." IEEE Transactions on Biomedical Engineering 50(6): 686-696.

Elman, J. L. (1991). "Distributed representations, simple recurrent networks, and grammatical structure." Machine Learning 7(2-3): 195-225.

Flemons, W. W., D. Buysse, et al. (1999). "Sleep-related breathing disorders in adults: Recommendations for syndrome definition and measurement techniques in clinical research." Sleep 22(5): 667-689.

Gao, X. Z. and S. J. Ovaska (2002). "Genetic Algorithm training of Elman neural network in motor fault detection." Neural Computing & Applications 11(1): 37-44.

Graupe, D. and H. Kordylewski (1998). "A large memory storage and retrieval neural network for adaptive retrieval and diagnosis." International Journal of Software Engineering and Knowledge Engineering 8(1): 115-138.

Guilleminault, C., J. van den Hoed, et al. (1978). "Overview of the sleep apnea syndromes." In: C. Guilleminault and W. C. Dement (Eds.), Sleep Apnea Syndromes. New York, Alan R. Liss: 1-12.

Hoffstein, V. (2000). Snoring. Principles and Practice of Sleep Medicine. M. H. Kryger, T. Roth and W. C. Dement. Philadelphia, PA, W.B. Saunders: 813-826.

Kirby, S. D., W. Danter, et al. (1999). "Neural network prediction of obstructive sleep apnea from clinical criteria." Chest 116(2): 409-415.

Kryger, M. H. (1992). "Management of obstructive sleep apnea." Clinics in Chest Medicine 13(3): 481-492.

Kryger, M. H., L. Roos, et al. (1996). "Utilization of health care services in patients with severe obstructive sleep apnea." Sleep 19(9): S111-S116.

Li, Y., D. F. Wang, et al. (2009). A Dynamic Selective Neural Network Ensemble Method for Fault Diagnosis of Steam Turbine.

Maali, Y. and A. Al-Jumaily (2011). Automated detecting sleep apnea syndrome: A novel system based on genetic SVM. 11th International Conference on Hybrid Intelligent Systems (HIS), 2011.

Maali, Y. and A. Al-Jumaily (2011). "Genetic Fuzzy Approach for detecting Sleep Apnea/Hypopnea Syndrome." 2011 3rd International Conference on Machine Learning and Computing (ICMLC 2011).

Maali, Y. and A. Al-Jumaily (2012). Signal selection for sleep apnea classification. Proceedings of the 25th Australasian Joint Conference on Advances in Artificial Intelligence. Sydney, Australia, Springer-Verlag: 661-671.

Penzel, T., J. McNames, et al. (2002). "Systematic comparison of different algorithms for apnoea detection based on electrocardiogram recordings." Medical & Biological Engineering & Computing 40(4): 402-407.

Prechelt, L. (1998). "Automatic early stopping using cross validation: quantifying the criteria." Neural Networks 11(4): 761-767.

Sharma, S. K., S. Kurian, et al. (2004). "A stepped approach for prediction of obstructive sleep apnea in overtly asymptomatic obese subjects: a hospital based study." Sleep Medicine 5(4): 351-357.

Stradling, J. R. and J. H. Crosby (1990). "Relation between systemic hypertension and sleep hypoxemia or snoring: analysis in 748 men drawn from general practice." British Medical Journal 300(6717): 75-78.

Waxman, J. A., D. Graupe, et al. (2010). "Automated Prediction of Apnea and Hypopnea, Using a LAMSTAR Artificial Neural Network." American Journal of Respiratory and Critical Care Medicine 181(7): 727-733.

Yashar, M. and A.-J. Adel (2012). A Novel Partially Connected Cooperative Parallel PSO-SVM Algorithm Study Based on Sleep Apnea Detection. Accepted in IEEE Congress on Evolutionary Computation. Brisbane, Australia.

Young, T., M. Palta, et al. (1993). "The occurrence of sleep-disordered breathing among middle-aged adults." New England Journal of Medicine 328(17): 1230-1235.

APPENDIX

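Mean, variance (VAR) and standard deviation (STD) are common and well-known statistical measures, so only the other statistical features used in this study are reviewed here. In the formulas below, μ and σ are the mean and standard deviation of x, E denotes the expected value (the sample average), and n is the number of samples.

Kurtosis: The kurtosis of a distribution measures how outlier-prone the distribution is, and is defined as follows:

$$k = \frac{E\left[(x-\mu)^4\right]}{\sigma^4}$$

Geomean: The geomean is the geometric mean, computed as follows:

$$\mathrm{geomean}(x) = \left(\prod_{i=1}^{n} x_i\right)^{1/n}$$

Skewness: Skewness is a measure of the asymmetry of the data around the sample mean, and is defined as follows:

$$s = \frac{E\left[(x-\mu)^3\right]}{\sigma^3}$$

Mad: mad is the mean absolute deviation of the sample:

$$\mathrm{mad}(x) = E\left[\,\lvert x-\mu \rvert\,\right]$$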
