Hidden Conditional Random Fields for Action Recognition

Lifang Chen

1,2

, Nico van der Aa

1,2

, Robby T. Tan

1

, Remco C. Veltkamp

1

1

Department of Information and Computing Sciences, Utrecht University, Utrecht, The Netherlands

2

Noldus InnovationWorks, Noldus Information Technology, Wageningen, The Neterlands

Keywords:

Action Recognition, (Max-Margin) Hidden Conditional Random Fields, Part Labels Method.

Abstract:

In the field of action recognition, the design of features has been explored extensively, but the choice of

action classification methods is limited. Commonly used classification methods like k-Nearest Neighbors and

Support Vector Machines assume conditional independency between features. In contrast, Hidden Conditional

Random Fields (HCRFs) include the spatial or temporal dependencies of features to be better suited for rich,

overlapping features. In this paper, we investigate the performance of HCRF and Max-Margin HCRF and their

baseline versions, the root model and Multi-class SVM, respectively, for action recognition on the Weizmann

dataset. We introduce the Part Labels method, which uses explicitly the part labels learned by HCRF as a new

set of local features. We show that only modelling spatial structures in 2D space is not sufficient to justify the

additional complexity of HCRF, MMHCRF or the Part Labels method for action recognition.

1 INTRODUCTION

Action recognition is split into feature selection and

classificiation. Having extracted features, assigning

action labels to videos becomes a classification prob-

lem. Next to conventional classifiers like k-Nearest

Neighbor (Blank et al., 2005) and Support Vector Ma-

chines (SVM) (Jhuang et al., 2007), more complex

models have been introduced for action classification,

which are either generative or discriminative.

Generative approaches model a joint probability

distribution over both the features and their part la-

bels, implying the need of a prior model over the fea-

tures. To model this prior tractably, generative ap-

proaches assume features are conditionally indepen-

dent of their labels. A typical example is the Hidden

Markov Model using hidden states to represent differ-

ent phases in an action (Yamato et al., 1992).

Discriminative approaches do not need to model

the prior on features, since they directly model a con-

ditional distribution over action classes from the fea-

tures. Therefore, the independence assumption is re-

laxed. Conditional Random Fields (CRFs) (Kumar

and Hebert, 2003) is such a discriminative approach.

However, CRF requires fully labelled data where

each observation node has an intermediate level la-

bel, like ”hands up” or ”put down leg”. Since most

available datasets do not provide this intermediate la-

belling, Quattoni et al. (Quattoni et al., 2004) propose

the HCRF model, which extends CRF to incorporate

these intermediate part labels as hidden variables. The

assignments of these hidden variables are learned dur-

ing training, not required in the dataset. HCRF was

originally proposed for object recognition. Wang and

Mori (Wang and Mori, 2011) extended HCRF to ac-

tion recognition by modelling the spatial dependen-

cies of patches within a frame as they model a human

action as a constellation of parts conditioned on im-

age features. They improved the classification perfor-

mance by combining the flexibility of local represen-

tation and the large-scale global representation under

the unified framework of HCRF.

Max-margin methods set separating hyperplanes

such that the margin between the correct label and

all others is maximized, ensuring the score of the

correct label is much higher than the incorrect ones.

Felzenszwalb et al. (Felzenszwalb et al., 2008) pro-

pose the Latent Support Vector Machine (LSVM),

which learns a discriminative model with structured

hidden (or latent) variables similar to HCRF with a

max-margin approach. LSVM is a binary classifier

which does not directly handle multi-class classifi-

cation. Crammer and Singer (Crammer and Singer,

2002) introduce the multi-class SVM which extends

the binary SVM to support multi-class classification.

Similarly, Wang and Mori (Wang and Mori, 2011)

proposed MMHCRF to extend LSVM to directly han-

dle multi-class classification.

240

Chen L., van der Aa N., T. Tan R. and C. Veltkamp R..

Hidden Conditional Random Fields for Action Recognition.

DOI: 10.5220/0004652902400247

In Proceedings of the 9th International Conference on Computer Vision Theory and Applications (VISAPP-2014), pages 240-247

ISBN: 978-989-758-004-8

Copyright

c

2014 SCITEPRESS (Science and Technology Publications, Lda.)

Our work is based on HCRF and MMHCRF. Both

methods model the spatial structure of an image by

structured hidden variables. However, HCRF learns

the model parameter with a maximum likelihood ap-

proach, while MMHCRF adopts a max-margin ap-

proach. We propose a new method that combines the

advantages of both HCRF and MMHCRF, leading to

more accurate classification results.

2 CLASSIFICATION METHODS

To compare and analyze the value of HCRF and

MMHCRF, the theory behind these methods is briefly

explained, including their baseline method where the

hidden labels are removed.

2.1 Hidden Conditional Random Fields

To classify a frame I in a video sequence, let x

be the feature extracted from I, and y be its ac-

tion label. Denote Y as the set of possible ac-

tion classes. Assume I contains a set of patches

{I

1

, I

2

, . . . , I

m

}, and its corresponding features can be

written as x = {x

0

, x

1

, . . . , x

m

}. x

0

is the global feature

vector which is extracted from the whole frame, and

x

i

(i = 1, . . . , m) is the local feature vector extracted

from patch I

i

. Our training set consists of labelled

frames (x

t

, y

t

) for t = 1, . . . , T.

Assume we can assign each patch I

i

with a hidden

part label h

i

from a finite set of possible part labels H ,

each frame I has a vector of hidden part labels h =

{h

1

, h

2

, . . . , h

m

}. A hidden part label represents the

motion pattern of a body part, such as move forward

for the head. As the values of h are learned during

training, they are the hidden variables of the model.

The hidden part labels can depend on each other.

For example, in the case of walking, head and torso

might tend to move forward. Assuming an undi-

rected graph structure G = (V, E) for each frame,

h

i

(i = 1, 2, . . . , m) are the vertices V and the depen-

dence between h

j

and h

k

is an edge ( j, k) ∈ E. In-

tuitively, G models the conditional dependencies be-

tween the hidden part labels. The structure of G is

assumed to be a tree (Quattoni et al., 2007). Note that

the graph structure can be different from image to im-

age. Figure 1 shows the graphical model.

Given the feature x, part labels h, and class la-

bel y, we can define a potential function θ

⊺

·Φ(x, h, y)

which is parametrized by the model parameter θ:

θ

⊺

· Φ(x, h, y) =

∑

j∈V

α

⊺

· φ(x

j

, h

j

) +

∑

j∈V

β

⊺

· ϕ(y, h

j

)

+

∑

( j,k)∈E

γ

⊺

· ψ(y, h

j

, h

k

) + η

⊺

· ω(y, x

0

), (1)

ℎ

!

ℎ

"

#

$

ℎ

$

#

"

#

!

#

%

class label

hidden part labels

image

&(∙)

*(∙)

*(∙)

+(∙)

Figure 1: The undirected graph model. The circles and

squares correspond to variables and factors, respectively.

where α, β, γ and η are the components of θ, i.e.

θ = {α, β, γ, η}. Φ is linear with respect to θ. φ(·),

ϕ(·), ψ(·) and ω(·) are functions defining the fea-

tures of the model. The unary potential α

⊺

· φ(x

j

, h

j

)

models how likely patch x

j

is assigned with part la-

bel h

j

, while the unary potential β

⊺

· ϕ(y, h

j

) mea-

sures how likely an image with class label y con-

tains a patch with part label h

j

. The pairwise po-

tential γ

⊺

· ψ(y, h

j

, h

k

) measures how likely an image

with class label y contains a pair of part labels h

j

and h

k

, where ( j, k) ∈ E. Finally, the root potential

η

⊺

· ω(y, x

0

) measures the compatibility of class label

y and the global feature of the whole image.

Given the potential function θ

⊺

· Φ(x, h, y), the

conditional probabilistic model is given as

P(y, h|x, θ) =

exp(θ

⊺

· Φ(x, h, y))

∑

y

′

∈Y

∑

h

exp

θ

⊺

· Φ(x, h, y

′

)

. (2)

Its denominator is a normalization term which sums

over all possible class labels y

′

∈ Y and all possible

combinations of h. When the feature of an image x

and model parameter θ are known, the probability of

this image having class label y is the summation of

conditional probabilities P(y, h|x, θ) over all possible

assignments of part labels h:

P(y|x, θ) =

∑

h

P(y, h|x, θ) (3)

The joint conditional probability P(y|x, θ) is maxi-

mized for all training samples. The objective function

used for training parameters θ is defined as:

L(θ) =

∑

t

logP(y

t

|x

t

, θ) −

1

2σ

2

||θ||

2

. (4)

The first term in Eq.(4) is the conditional log-

likelihood on the training images. The second term

penalizes large values of θ. The optimal θ is learned

by maximizing the objective function in Eq.(4). The

optimal θ

∗

cannot be computed analytically; instead

we need to employ iterative gradient-based optimiza-

tion methods such as limited-memory BFGS (Byrd

HiddenConditionalRandomFieldsforActionRecognition

241

et al., 1994) to search for the optimal θ. Similarly

as with other hidden state models like HMMs, adding

hidden states h makes the objective function L(θ) not

convex (Quattoni et al., 2004). Therefore, this method

cannot guarantee a global optimal point.

2.2 Root Model

The baseline model of HCRF, called the root model,

only uses the global feature x

0

to train the root filter η

and does not include the hidden part labels. We only

use the last part of the potential function in Eq.(1) for

modelling. The probability of class label y given the

global feature x

0

and root filter parameter η is:

P

root

(y|x

0

;η) =

exp(η

⊺

· ω(y, x

0

))

∑

y

′

∈Y

exp(η

⊺

· ω(y

′

, x

0

))

. (5)

The η

∗

that optimizes this probability is computed

analogously as θ

∗

for the HCRF.

Besides being a simplified version of HCRF, the

root model can also be used to initialize the root fil-

ter η of HCRF. Since the objective function of HCRF

is not convex, a good starting point of the model pa-

rameters lead to a good local optimum. The trained

model parameter of the root model, called root filter,

can be a good estimation of the root filter η in the

HCRF model. The other parameters α, β and γ are

initialized randomly.

2.3 Max-Margin HCRF

Instead of using maximum likelihood, the Max-

Margin Hidden Conditional Random Fields

(MMHCRF) uses a max-margin criterion to set the

model parameter to maximize the margins between

the correct label and the other labels. MMHCRF

uses the potential function and its parametrization

in Eq.(1) as HCRF does. For a training image, its

feature vector and action label pair (x, y) are scored

by the potential function with the best assignment of

hidden variables:

f

θ

(x, y) = max

h

θ

⊺

· Φ(x, h, y). (6)

Given the training samples (x

1

, y

1

), . . . , (x

T

, y

T

), we

want to find θ that maximizes the margin between

the score of the correct label and the score of other

labels. Similar to multi-class SVM (Crammer and

Singer, 2002), this training process can be formulated

as an optimization problem:

min

θ,ξ

1

2

||θ||

2

+C

T

∑

t=1

ξ

t

(7)

s.t. max

h

θ

⊺

· Φ(x

t

, h, y) − max

h

′

θ

⊺

· Φ(x

t

, h

′

, y

t

)

6 ξ

t

− δ(y, y

t

), ∀t, ∀y,

where δ(y, y

t

) is 1 if y 6= y

t

and 0 otherwise. Intu-

itively, we want to find θ whose L

2

-norm is as small

as possible, and satisfies the constraints that the score

for the correct label y

t

is at least one larger than the

scores of the other labels for each training sample. ξ

t

is the slack variable for the t-th training image to han-

dle the soft margin when data is not fully linearly sep-

arable, and C controls the trade-off between the slack

variable penalty and the size of the margin.

Note that the constraints of Eq.(7) are not con-

vex. Therefore, this method is not guaranteed to reach

the global optimum. Using a coordinate descent al-

gorithm similar to (Felzenszwalb et al., 2008), a lo-

cal optimum of Eq.(7) can be computed by iterating

through these two steps:

1. Holding θ, ξ fixed, optimize the hidden part labels

h

′

for the training example (x

t

, y

t

):

h

t,y

t

= argmax

h

′

θ

⊺

· Φ(x

t

, h

′

, y

t

). (8)

2. Holding h

t,y

t

fixed, optimize θ, ξ by solving this

optimization problem:

min

θ,ξ

1

2

||θ||

2

+C

T

∑

t=1

ξ

t

(9)

s.t. max

h

θ

⊺

· Φ(x

t

, h, y) − θ

⊺

· Φ(x

t

, h

t,y

t

, y

t

)

6 ξ

t

− δ(y, y

t

), ∀t, ∀y.

These two steps are repeated until convergence.

During testing, for every new image x, we first

calculate the optimal h for every possible class label

y: h

y

= argmax

h

θ

⊺

· Φ(x, h, y). Next, we calculate

the score of each class label and pick the label with

the highest score: y

∗

= argmax

y

θ

⊺

· Φ(x, h

y

, y).

2.4 Multi-class SVM

In a similar way as the root model is the base-

line model for HCRF, we can derive a root model

for MMHCRF, which only uses the root potential

η

⊺

· ω(y, x

0

) as its potential function and trains the

model parameter with a max-margin approach. Set-

ting f

θ

(x, y) = η

⊺

· ω(y, x

0

), we obtain:

min

θ,ξ

1

2

||θ||

2

+C

T

∑

t=1

ξ

t

(10)

s.t. η

⊺

· ω(y, x

t,0

) − η

⊺

· ω(y

t

, x

t,0

) 6 ξ

t

− 1, ∀t, ∀y 6= y

t

ξ

t

≥ 0, ∀t.

This quadratic program is the standard multi-class

SVM (Crammer and Singer, 2002).

VISAPP2014-InternationalConferenceonComputerVisionTheoryandApplications

242

3 PART LABEL METHOD

Inspired by the concept of bag-of-words (Niebles and

Fei-Fei, 2007), we introduce the Part Labels method

to find the best part label assignment for each im-

age using the model parameter trained by HCRF. The

main idea behind this method is that if the model pa-

rameter is well trained, its learned part labels are de-

scriptive enough for the image, and thus can improve

the performance compared to the root model.

3.1 Model Formulation

When the model parameter θ is trained by the HCRF

model, ideally, the trained θ should give the correct

assignments of part labels higher probabilities, and

the incorrect ones lower probabilities. Therefore, we

could safely use this θ to find the assignment of part

labels with the highest probability h

∗

= argmax

h

θ

⊺

·

Φ(x, h, y) as the correct part labels. Our method is dif-

ferent from MMHCRF since it only adopts the maxi-

mization approach to pick the best assignment of part

labels once, after the HCRF training process. To ob-

tain the best assignment of part labels h

∗

for each

training sample, we can use the decoding process of

Belief Propagation (Yedidia et al., 2003).

The vector of part labels could be considered as

a refined set of local features for the images, like the

”words” for the bag-of-words approach. For example,

the part label for the patch on the head describes the

movement pattern of the head. It is an abstraction of

the patch features. We use these part labels as the lo-

cal features of this image and combine them with the

global feature vector by concatenation: x

′

= (h

∗

, x

0

).

Next, we train the new feature vector x

′

in a sim-

ilar way with the root model. For a training image

(x

′

, y), we define its potential function:

η

′

⊺

· ω

y, x

′

=

∑

a∈Y

η

′

⊺

a

· 1

{y=a}

· x

′

, (11)

where η

′

is the model parameter, ω(·) is the feature

function, η

′

a

measures the compatibility between fea-

ture x

′

and class label y = a. η

′

is the concatenation

of η

′

a

for all a ∈ Y . The length of vector η

′

is |Y ||x

′

|.

Using the potential function defined above, we

could define the probability or likelihood of class la-

bel y given the feature vector x

′

:

P(y|x

′

;η

′

) =

exp

η

′

⊺

· ω

y, x

′

∑

y

′

∈Y

exp

η

′

⊺

· ω

y

′

, x

′

. (12)

The objective function for the set of all training

samples can be formulated as the summation of log-

likelihood of all samples:

L

η

′

=

T

∑

t=1

L

t

η

′

=

T

∑

t=1

logP

y

t

|x

′

t

;η

′

. (13)

The training process can be formulated as an opti-

mization problem to find the optimal η

′

∗

that gives the

maximum of the objective function. We use gradient

ascend to search for the optimal η

′

∗

.

3.2 Testing

Given a test image x, we cannot calculate its

part labels directly because its class label is un-

known. Instead, we calculate the part labels for

each class label to obtain a set of |Y | part labels

{h

(1)

, h

(2)

, . . . , h

(|Y |)

}, where each part label vector

h

(k)

is obtained by finding patches using class la-

bel y = k. Then, we concatenate them with global

feature x

0

to form a new set of feature vectors

{x

′

(1)

, x

′

(2)

, . . . , x

′

(|Y |)

}. We can calculate the proba-

bilities of all possible assignments of the part labels

using η

′

and classify it by the class label that gives

the maximum probabilities.

3.3 Analysis

This method uses the learned part labels as a new

set of features and sends them to the training pro-

cess again. It uses the abstract information contained

in the part labels explicitly. The model parameter is

learned with a method similar to the root filter learn-

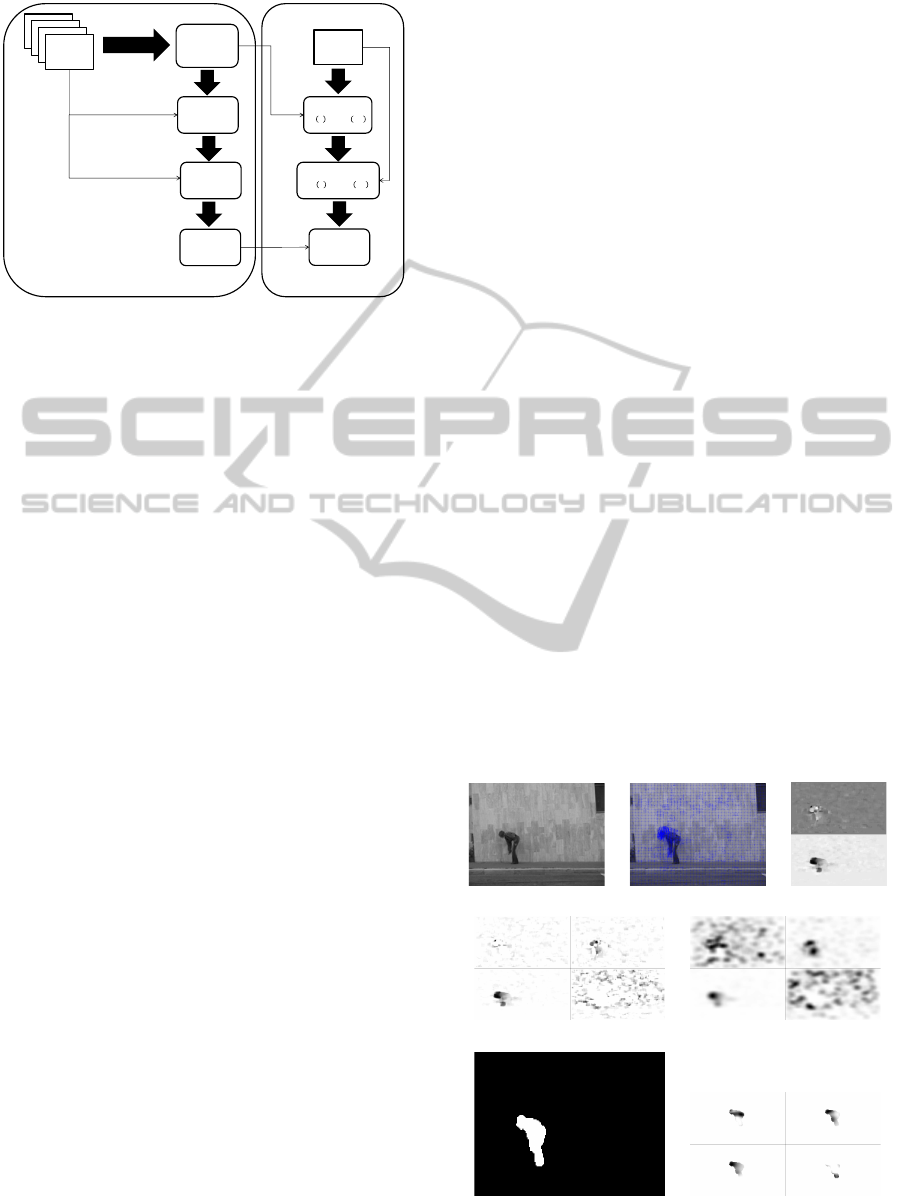

ing method. Figure 2 shows the flowchart of the train-

ing and testing process. The output of the training

process are two model parameters θ and η

′

, which are

learned using HCRF and gradient ascent respectively.

This method is similar to the bag-of-words ap-

proach, since the part labels can be considered as

words. But the way they assign part labels and words

is different. This method uses the model trained by

HCRF to find the part labels, while bag-of-words first

computes a word vocabulary and assigns words to

patches by calculating the Euclidean distance. An-

other difference is that this method combines both

global and local features together. The global features

contain rich overall information for classification, and

local part labels provide a higher level of abstraction

from local patch features.

The Part Labels method can be considered as a

hybrid of the root model and the HCRF model. It

uses the part labels learned by HCRF and trains them

using the root model. Compared with the root model,

it uses more information than the global feature alone,

and compared with the HCRF model, it has an extra

maximization step.

HiddenConditionalRandomFieldsforActionRecognition

243

Model

parameter

Train

images

HCRF

Training

Part labels

∗

Belief

Propagation

Feature

vector "

#

Concatenation

Model

parameter

$

#

Gradient

Ascent

Test

image

Part labels

(

%

, … ,

|&|

)

Belief

Propagation

Feature vectors

("

#

%

, … , "

# |&|

)

Concatenation

Class label

'

Probability

calculation

Training

Testing

Figure 2: Flow chart of the Part Labels method.

4 EXPERIMENTATION

We test the performance of HCRF, MMHCRF, Root

Model, Multiclass SVM and the Part Labels method

on the popular publicly available Weizmann dataset

(Blank et al., 2005). It contains 83 video sequences

at 180 × 144 pixel resolution and 25 frames per sec-

ond. The sequences contain nine different people,

each performing nine different natural actions: bend,

jumping jack (or shortly ”jack”), jump forward on

two legs (or ”jump”), jump in place on two legs (or

”pjump”), run, gallop sideways (or ”side”), walk,

wave one hand (or ”wave1”), wave two hands (or

”wave2”). The dataset is captured under laboratory

settings with fixed background and camera location.

We choose the videos of five subjects as the train-

ing set, and the videos performed by the other four

subjects as the testing set. All frames in the training

set are randomly shuffled so that the training process

converges faster. During testing, we classify frame-

by-frame in a video (per-frame classification). We can

obtain the action label for the whole video by majority

voting of its frame labels (per-video classification).

We calculate the motion features of these video se-

quences in the way similar to what has been proposed

in (Efros et al., 2003). This feature is based on pixel-

wise optical flow to capture the motion information

invariantto appearances (see Figure 3(b)). The optical

flow vector F is split into two vectors corresponding

to the horizontal and vertical components of the op-

tical flow: F

x

and F

y

(see Figure 3(c)). F

x

and F

y

are

further split into four non-negativechannels: F

+

x

, F

−

x

,

F

+

y

and F

−

y

, so that F

x

= F

+

x

− F

−

x

and F

y

= F

+

y

− F

−

y

(see Figure 3(d)). To capture only the essential posi-

tion information, each channel is blurred with a Gaus-

sian kernel and normalized to obtain Fb

+

x

, Fb

−

x

, Fb

+

y

and Fb

−

y

(see Figure 3(e)). The foreground figure is

extracted using the mask provided in the dataset (see

Figure 3(f)). Next, move the salient region of the per-

son to the center of the view to obtain the final motion

features (see Figure 3(g)). This last step is different

from the original feature in (Efros et al., 2003), which

requires to track and stabilize the video first and com-

pute the optical flow next. With this adjustment we

avoid that the correspondence of pixels gets lost due

to tracking and stabilizing the person first.

The obtained motion feature vector is the global

feature of a frame. We find the local patches on

this frame from this global feature vector using the

root model. The concatenation of the four channels

Fb

+

x

, Fb

−

x

, Fb

+

y

, Fb

−

y

within the salient region is the

motion feature of this patch. To describe the loca-

tion of a patch, we divide the image into a grid of

w× h bins. The bin where the patch is located is set

to 1, all other bins are set to 0. This length w × h

vector is the location feature of this patch. The mo-

tion feature vector and location feature vector are con-

catenated as the feature vector of a patch. The tree

structure among the local patches are built by run-

ning a minimum spanning tree algorithm over these

patches, using the distances between patches as edge

weights. The resulting tree structure can be different

from frame to frame.

4.1 Root Model Evaluation

The root model only uses the global feature to train

the root model parameter η. Since the root model

does not contain hidden part labels, it does not need to

solve the inference problem for parameter estimation.

This makes this method very efficient. In addition, it

(a) Original image (b) Optical flow (c) F

x

and F

y

(d) F

+

x

,F

−

x

,F

+

y

, F

−

y

(e) Fb

+

x

,Fb

−

x

,Fb

+

y

, Fb

−

y

(f) Foreground mask (g) Motion features

Figure 3: Calculation of motion features.

VISAPP2014-InternationalConferenceonComputerVisionTheoryandApplications

244

bend

jack jump

pjump

run side walk

wave1

wave2

bend

0.9502

0.0000

0.0000

0.0000

0.0000

0.0083

0.0000

0.0415

0.0000

jack

0.0199

0.8458

0.0025

0.0771

0.0000

0.0000

0.0000

0.0025

0.0522

jump

0.1090

0.0128

0.7756

0.0000

0.0000

0.0897

0.0128

0.0000

0.0000

pjump

0.0051

0.2335

0.0051

0.7513

0.0000

0.0051

0.0000

0.0000

0.0000

run

0.0000

0.0058

0.0000

0.0000

0.8480

0.0526

0.0643

0.0000

0.0292

side

0.0000

0.0056

0.0056

0.0000

0.0167

0.9667

0.0056

0.0000

0.0000

walk

0.0000

0.0000

0.0318

0.0000

0.0058

0.1012

0.8613

0.0000

0.0000

wave1

0.1545

0.0091

0.0000

0.0000

0.0000

0.0000

0.0000

0.8318

0.0045

wave2

0.0080

0.0040

0.0000

0.0000

0.0161

0.0000

0.0000

0.0321

0.9398

(a) Per-frame classification

bend

jack jump

pjump

run side walk

wave1

wave2

bend

1 0 0 0 0 0 0 0 0

jack

0 1 0 0 0 0 0 0 0

jump

0 0

1 0 0 0 0 0 0

pjump

0 0.25 0 0.75 0 0 0 0 0

run

0 0 0 0

1 0 0 0 0

side

0 0 0 0 0

1 0 0 0

walk

0 0 0 0 0 0

1 0 0

wave1

0.25 0 0 0 0 0 0 0.75 0

wave2

0 0 0 0 0 0 0 0 1

(b) Per-video classification

Figure 4: Confusion matrices for the root model.

gives a global optimal solution because its objective

function is convex.

Figure 4 gives the confusion matrices of the per-

frame and per-video classification on our feature.

For most actions the classification result is good.

One ”wave1” video is misclassified ”bend” and one

”pjump” video as ”jack”. The first error is caused

by the angle between arm and body is similar for

”wave1” to the angle between upper body and lower

body in ”bend”. The second error is due to moving the

person to the center of the view, the information about

whole body movement in vertical direction is ignored,

causing the body torso movements of ”pjump” and

”jack” to be similar to each other. The root filter η

for ”pjump” shows that the whole body moves up,

while η for ”jack” shows limbs waving around and

the torso moves up. After applying the feature on η

for ”jack”, the movement of limbs is eliminated and

only the torso movement remains, making it hard to

distinguish ”pjump” from ”jack”.

The root model does not explicitly include tempo-

ral information, since it only uses the time informa-

tion between two consecutive frames contained in the

optical flow feature. As a result, movement patterns

of all frames overtime in an action are stacked. Move-

ment patterns of arms and legs are projected onto the

root filter. A new image is classified as the action

whose movement pattern overlaps most. This char-

acteristic of the root model causes confusion if two

actions have similar frames and causes the root model

to prefer actions with more variations to actions with

less variations.

Overall, the root model is efficient and powerful

with 0.8659 accuracy on per-frame classification and

0.9474 accuracy on per-video classification.

pjump wave1 wave2

pjump wave1 wave2

(a) Bend (b) Pjump

(c) Walk (d) Wave1

Figure 5: Learned part labels on the Weizmann dataset.

4.2 HCRF Evaluation

The HCRF model is evaluated with the root filter η

initialized using the root filter learned in the previous

root model. The parameter settings in these experi-

ments are kept the same with (Wang and Mori, 2011).

The size of possible part labels H = 10. The num-

ber of salient patches on each frame is set to 10. The

size of each patch is 5 × 5. The other parameters not

specified in (Wang and Mori, 2011) are experimen-

tally tuned. The grid division of each frame is set

to 10 × 4. The model parameters α, β and γ are ini-

tialized randomly using a Gaussian distribution with

mean 0 and standard deviation 0.01. All these param-

eters are tuned specifically for the Weizmann dataset.

Figure 5 is a visualization of the learned part la-

bels. The patches are labeled with their most likely

parts. From this visualization we can make observa-

tions about the meaning of the part labels. For exam-

ple, the part label No.1 in yellow seems to represent

the pattern ”moving down” which occurs in ”bend”.

The part label No.8 in purple seems to present ”mov-

ing up” which happens most in ”pjump”. The part la-

bel No.6 in blue seems to represent ”rotating” which

could happen in ”walk” and ”wave”.

Figure 6 shows the confusion matrix of per-frame

HCRF classification results. If we compare the clas-

sification results of the root model and the HCRF

model (see Table 1) and their confusion matrix, sur-

prisingly, their outputs are not significantly different

Table 1: Comparison of the root model with HCRF.

root model HCRF

Per-frame 0.8659 0.8737

Per-video 0.9474 0.9474

HiddenConditionalRandomFieldsforActionRecognition

245

bend

jack jump

pjump

run side walk

wave1

wave2

bend

0.9378

0.0083

0.0124

0.0000

0.0000

0.0000

0.0000

0.0415

0.0000

jack

0.0000

0.8930

0.0000

0.0796

0.0000

0.0000

0.0000

0.0025

0.0249

jump

0.0705

0.0385

0.8141

0.0000

0.0000

0.0577

0.0128

0.0000

0.0064

pjump

0.0051

0.2487

0.0000

0.7411

0.0000

0.0051

0.0000

0.0000

0.0000

run

0.0000

0.0585

0.0000

0.0000

0.8187

0.0409

0.0643

0.0058

0.0117

side

0.0000

0.0167

0.0167

0.0000

0.0111

0.9500

0.0056

0.0000

0.0000

walk

0.0000

0.0087

0.0202

0.0000

0.0116

0.0636

0.8960

0.0000

0.0000

wave1

0.1682

0.0000

0.0000

0.0000

0.0045

0.0000

0.0000

0.8227

0.0045

wave2

0.0080

0.0000

0.0000

0.0000

0.0482

0.0000

0.0000

0.0241

0.9197

Figure 6: Per frame confusion matrix of HCRF model.

from each other. This implies the root filter has domi-

nated the HCRF model and lowered the contributions

of the other parts. One possible reason of this result

is that the global feature and local patch features are

the same type of feature. The local patch feature is

simply part of the global feature. Therefore, the dis-

criminative power of the global feature and the local

patch feature is overlapping with each other. Another

reason is that the local patch features in 2D space are

not informative enough for action recognition, since

the temporal structure of an action is not taken into

account. Although this type of features work well on

recognition tasks in the 2D domain, like object recog-

nition, it is not sufficient for challenging tasks like

action recognition. In Table 2 the classification re-

sults of previous work are shown which only use local

patch features in 2D space. Their performance is not

satisfactory, regardless of their classification results.

Overall, the performance of the HCRF model is

comparable to the root model.

Table 2: Classification results of works using only 2D patch

features on Weizmann dataset.

Method

Classification

result (%)

(Scovanner et al., 2007) 30.4

(Niebles and Fei-Fei, 2007) 55.0

4.3 MMHCRF Evaluation

We have implemented the MMHCRF model for the

Weizmann dataset. Unfortunately, we are not able to

get a satisfactory result. To prove that the failure is

not caused by the dataset itself or the max-margin ap-

proach, we evaluated the Weizmann dataset on a sim-

pler model which only has the root potential and trains

its model parameter with a max-margin approach.

Figure 7 shows the confusion matrix of the per-

frame multi-class SVM classification results on the

Weizmann dataset. The overall accuracy is 0.8867

for per-frame classification and 0.9737 for per-video

classification. If we compare this model with the root

model, we can see that the Multi-class SVM slightly

outperforms the root model. This experiment proves

pjump wave1 wave2

bend

jack jump

pjump

run side walk

wave1

wave2

bend

0.9544

0.0041

0.0000

0.0041

0.0000

0.0083

0.0000

0.0290

0.0000

jack

0.0050

0.8383

0.0000

0.1294

0.0000

0.0000

0.0000

0.0025

0.0249

jump

0.0769

0.0000

0.8654

0.0000

0.0064

0.0513

0.0000

0.0000

0.0000

pjump

0.0000

0.1726

0.0000

0.8223

0.0000

0.0051

0.0000

0.0000

0.0000

run

0.0000

0.0351

0.0058

0.0000

0.8304

0.0468

0.0702

0.0000

0.0117

side

0.0000

0.0056

0.0056

0.0000

0.0000

0.9833

0.0056

0.0000

0.0000

walk

0.0000

0.0058

0.0029

0.0000

0.0087

0.0751

0.9075

0.0000

0.0000

wave1

0.1455

0.0045

0.0000

0.0091

0.0000

0.0000

0.0000

0.8364

0.0045

wave2

0.0161

0.0201

0.0040

0.0080

0.0000

0.0000

0.0000

0.0040

0.9478

Figure 7: Per frame confusion matrix of multi-class SVM.

the strength of the max-margin approach, because the

Multi-class SVM trains its model parameter with a

max-margin approach and it proves that it is not the

dataset why MMHCRF fails.

MMHCRF trains the model parameter in a similar

way as the multi-class SVM, but since MMHCRF in-

troduces the hidden part labels, the optimization prob-

lem becomes not convex and only a local optimum

can be obtained. Different ways of model parameter

initialization lead to different local optimal solutions.

Hence, the performance of MMHCRF is heavily de-

pendent on its model parameter initialization.

Additionally, MMHCRF is very sensitive to its

trade-off parameter C, which controls the trade off

between margin size and training error. The bigger

C, the less tolerable the system is to the training error.

Finally, the computational complexity of

MMHCRF is much higher compared to HCRF,

because it needs to solve both inference problem

and a quadratic program for every training sample,

whereas HCRF only needs to do the inference.

4.4 Part Labels Evaluation

Our novel Part Labels method utilizes the model pa-

rameter trained by HCRF to find the most likely part

labels for each frame, which are concatenated with the

global feature for training. Table 3 shows the compar-

ison of the root model, HCRF, multi-class SVM and

this Part Labels method. Figure 8 showsthe confusion

matrices of the per-frame and per-video classification

results of the Part Labels method.

The per-frame classification results of these four

models are not really significantly different from each

other, most likely since they essentially use the same

information. The part labels are learned from the local

Table 3: Comparison of the root model, HCRF, multi-class

SVM and Part Labels.

root

model

HCRF

multi-

class

SVM

Part

Labels

Per-frame 0.8659 0.8737 0.8867 0.8705

Per-video 0.9474 0.9474 0.9737 0.9737

VISAPP2014-InternationalConferenceonComputerVisionTheoryandApplications

246

bend

jack jump

pjump

run side walk

wave1

wave2

bend

0.9046

0.0124

0.0124

0.0041

0.0000

0.0000

0.0000

0.0415

0.0249

jack

0.0075

0.9030

0.0000

0.0746

0.0000

0.0025

0.0000

0.0025

0.0100

jump

0.0449

0.0064

0.8269

0.0000

0.0256

0.0833

0.0128

0.0000

0.0000

pjump

0.0000

0.2487

0.0000

0.7360

0.0000

0.0000

0.0000

0.0051

0.0102

run

0.0000

0.0000

0.0000

0.0000

0.8363

0.0643

0.0994

0.0000

0.0000

side

0.0000

0.0111

0.0167

0.0000

0.0056

0.9611

0.0056

0.0000

0.0000

walk

0.0000

0.0000

0.0318

0.0000

0.0116

0.0925

0.8642

0.0000

0.0000

wave1

0.1045

0.0000

0.0000

0.0000

0.0000

0.0000

0.0000

0.8318

0.0636

wave2

0.0080

0.0000

0.0000

0.0000

0.0000

0.0000

0.0000

0.0723

0.9197

(a) Per-frame classification

bend

jack jump

pjump

run side walk

wave1

wave2

bend

1 0 0 0 0 0 0 0 0

jack

0

1 0 0 0 0 0 0 0

jump

0 0 1 0 0 0 0 0 0

pjump

0 0.25 0 0.75 0 0 0 0 0

run

0 0 0 0

1 0 0 0 0

side

0 0 0 0 0

1 0 0 0

walk

0 0 0 0 0 0 1 0 0

wave1

0 0 0 0 0 0 0 1 0

wave2

0 0 0 0 0 0 0 0 1

(b) Per-video classification

Figure 8: Confusion matrices of the Part Labels method.

patches which are included in the global feature.

The performance of the Part Labels method is still

slightly better than the performance of the root model.

This is because the Part Labels method use the part

labels in addition to the global feature. In the con-

fusion matrix of per-video Part Labels classification,

”wave1” is not misclassified as ”bend”. Even though

the global features of these two actions are similar,

their part labels are different, as we can see from Fig-

ure 5. Using this information in the Part Labels model

helps to distinguish them from each other.

5 CONCLUDING REMARKS

This paper introduces a new method for action recog-

nition called the Part Labels method which finds the

best assignment of part labels for each image using

the model parameters trained by HCRF. By analysing

the root model, HCRF, Multi-class SVM, MMHCRF

and the newly proposed Part Labels method on a

benchmark dataset for human actions, we noticed that

the performance of simpler models (the root model

and the multi-class SVM) is comparable to the more

complex models (HCRF and Part Labels). This is be-

cause both HCRF and Part Labels only model the spa-

tial structure, and neglects the temporal structure over

frames. For challenging tasks such as action recogni-

tion, the spatial structure changes over time and be-

comes too complex to model.

A natural extension of our work is to include the

temporal information. This could be done by includ-

ing the temporal information in spatio-temporal fea-

tures or by directly modelling the temporal structure

among frames.

ACKNOWLEDGEMENTS

This work was supported by the EU FP7 Marie Curie

Network iCareNet under grant number 264738 and

the Dutch national program COMMIT.

REFERENCES

Blank, M., Gorelick, L., Shechtman, E., Irani, M., and

Basri, R. (2005). Actions as space-time shapes. In

ICCV’05.

Byrd, R., Nocedal, J., and Schnabel, R. (1994). Represen-

tations of quasi-newton matrices and their use in lim-

ited memory methods. Mathematical Programming,

63:129–156.

Crammer, K. and Singer, Y. (2002). On the algorithmic

implementation of multiclass kernel-based vector ma-

chines. The Journal of Machine Learning Research,

2:265–292.

Efros, A., Berg, A., Mori, G., and Malik, J. (2003). Recog-

nizing action at a distance. In ICCV’03.

Felzenszwalb, P., McAllester, D., and Ramanan, D. (2008).

A discriminatively trained, multiscale, deformable

part model. In CVPR’08.

Jhuang, H., Serre, T., Wolf, L., and Poggio, T. (2007). A

biologically inspired system for action recognition. In

ICCV’07.

Kumar, S. and Hebert, M. (2003). Discriminative random

fields: A discriminative framework for contextual in-

teraction in classification. In ICCV’03.

Niebles, J. and Fei-Fei, L. (2007). A hierarchical model of

shape and appearance for human action classification.

In CVPR’07.

Quattoni, A., Collins, M., and Darrell, T. (2004). Con-

ditional random fields for object recognition. In

NIPS’04.

Quattoni, A., Wang, S., Morency, L.-P., Collinsl, M., and

Darrell, T. (2007). Hidden conditional random fields.

IEEE Transactions on Pattern Analysis and Machine

Intelligence, 29(10):1848–1852.

Scovanner, P., Ali, S., and Shah, M. (2007). A 3-

dimensional SIFT descriptor and its application to ac-

tion recognition. In Proc. of the 15th international

conference on Multimedia.

Wang, Y. and Mori, G. (2011). Hidden part models for

human action recognition: Probabilistic versus max-

margin. IEEE Transactions on Pattern Analysis and

Machine Intelligence, 33(7):1310–1323.

Yamato, J., Ohya, J., and Ishii, K. (1992). Recognizing

human action in time-sequential images using hidden

markov model. In CVPR’92.

Yedidia, J., Freeman, W., and Weiss, Y. (2003). Understand-

ing belief propagation and its generalizations. In Ex-

ploring artificial intelligence in the new millennium,

pages 239–269.

HiddenConditionalRandomFieldsforActionRecognition

247