Feature Extraction for Human Motion Indexing of Acted Dance Performances

Andreas Aristidou and Yiorgos Chrysanthou

Department of Computer Science, University of Cyprus, 75 Kallipoleos Street, 1678, Nicosia, Cyprus

Keywords:

Motion Capture, Laban Movement Analysis, Motion Indexing, Emotions.

Abstract:

There has been an increasing use of pre-recorded motion capture data for animating virtual characters and synthesising different actions; it is therefore necessary to establish an effective method for indexing, classifying

and retrieving motion. In this paper, we propose a method that can automatically extract motion qualities from

dance performances, in terms of Laban Movement Analysis (LMA), for motion analysis and indexing purposes. The main objective of this study is to analyse the motion information of different dance performances,

using the LMA components, and extract those features that are indicative of certain emotions or actions. LMA

encodes motions using four components, Body, Effort, Shape and Space, which represent a wide array of

structural, geometric, and dynamic features of human motion. A deeper analysis of how these features change across different movements is presented, investigating the correlations between the performers’ acted emotional state and its characteristics, thus indicating the importance and the effect of each feature for the classification

of the motion. Understanding the quality of the movement helps to apprehend the intentions of the performer,

providing a representative search space for indexing motions.

1 INTRODUCTION

Motion analysis and classification is of high interest

in a variety of major areas including robotics, com-

puter animation, psychology as well as the film and

computer game industries. The increasing availability

of large motion databases (CMU, 2003; UTA, 2011;

UCY, 2012), in addition to the motion re-targeting

(Gleicher, 1998; Hecker et al., 2008) and motion syn-

thesis (Kovar et al., 2002; Arikan et al., 2003) ad-

vancements, have contributed to the sharp increase in

use of pre-recorded motion for animating virtual hu-

man characters, thus making motion indexing an es-

sential key for easy motion composition.

Motion analysis consists of understanding differ-

ent types of human actions, such as basic human ac-

tions (e.g. walking, running, or jumping) in addition

to stylistic variations in motion caused by the actor’s

emotion, expression, gender, age etc. An important

role in the description and categorisation of move-

ments is played by the emotion, the expression and

the effort of each movement, in addition to the purpose of the movement, reflecting its nuance. The nuance¹ of a movement, along with the concentration and the energy needed to carry out the action, represents the intangible characteristics and can describe the intentions of the performer; it is the additional information that the human eye and brain use to assess and index a movement. Based on the principles of movement observation science (Moore and Yamamoto, 1988), we aim to extract the so-called nuance of motion and use it for motion indexing and classification purposes.

¹ “The details of movement style in which essence or meaning is encapsulated in the proper execution of the steps”, Muriel Topaz, 1986 (in Dunlop’s book Dance Words).

The movement of the human body is complex

and it is not possible to completely describe the hu-

man movement language if rough simplifications in

motion description are used or if motion has not

been properly indexed from the outset. Laban Movement Analysis (LMA) (Maletić, 1987) is a multidisciplinary system which incorporates contributions from

anatomy, kinesiology, and psychology and which

draws on Rudolf Laban’s theories to describe, inter-

pret and document human movements; it is one of the

most widely used systems of human movement analy-

sis and has been extensively used to describe and doc-

ument dance and choreographies over the last century.

Aristidou A. and Chrysanthou Y. Feature Extraction for Human Motion Indexing of Acted Dance Performances. DOI: 10.5220/0004662502770287. In Proceedings of the 9th International Conference on Computer Graphics Theory and Applications (GRAPP-2014), pages 277-287. ISBN: 978-989-758-002-4. Copyright © 2014 SCITEPRESS (Science and Technology Publications, Lda.)

Consequently, we propose an efficient method that can automatically extract motion qualities, in terms of LMA entities, for motion analysis and indexing purposes; each movement is associated with a qualitative and quantitative description that may help to search for any correlations between different performances

or actions. The main objective and novelty of this

study is to analyse the motion information of differ-

ent dance performances, using the LMA components,

to extract those features that are indicative of certain

emotions and explore how they change with regard to the performer’s emotion, as referred to in emotion research (Russell, 1980). To get the users involved in a more active manner, we used acted dance

data of different contemporary scenarios since the

performers try to express their feelings through the

dance and their movement vocabulary; the perform-

ers put more emphasis on movements since it is the

only way of channelling their emotions to the pub-

lic. It is important to note that this paper does not

intend to document the emotions of a dance or its performer (which are subjective), but to extract those features that characterise the performer’s movement and are indicative of the movement quality. An analysis of

how these features change on movements with differ-

ent feelings is presented, investigating the correlations

between the performers’ acting emotional state and its

characteristics; the outcomes of this work can be used

as an alternative or complement to the standard meth-

ods for motion synthesis and classification. Results

demonstrate the importance of each of the proposed

features and their effect in the classification of mo-

tion. Understanding movement quality helps to ap-

prehend the intentions of the performer, providing a

valuable criterion for motion indexing.

2 RELATED WORK

Over the last decade, a large number of different ap-

proaches have been developed for human figure an-

imation and motion synthesis, where the characters

behave autonomously through learning and percep-

tion (Arikan et al., 2003; Fang and Pollard, 2003).

Most papers in the literature synthesise new move-

ments to enrich the motion databases by combining

different motion parts and reusing existing data. They

segment the human skeleton into the upper and lower

body or into smaller kinematic chains and classify

motions using simple vocabularies (such as walk, run,

kick, box, etc.), (Kovar and Gleicher, 2004; Ikemoto

and Forsyth, 2004), while other works designed vo-

cabularies based on a specific subject (e.g. kickbox-

ing, dancing etc.), (Kwon et al., 2008; Chan et al.,

2011). (Müller et al., 2005) proposed a content-based

retrieval method to compute a small set of geometric

properties which are used for motion similarity pur-

poses. Various techniques have been proposed for

spatial indexing of motion data (Keogh et al., 2004;

Krüger et al., 2010); (Barbič et al., 2004) and (Liu

et al., 2005) applied Principal Component Analysis

(PCA) to reduce the representation of human motion

for motion retrieval, whereas (Chao et al., 2012) used

a set of orthonormal spherical harmonic functions. Recently, (Deng et al., 2009) and (Wu et al., 2009) clustered motion data on hierarchically structured body

segments for indexing and retrieval purposes. Never-

theless, most of the aforementioned approaches have

been based on primary human actions, such as body

posture and pose changes, regardless of the actor’s

style, emotion and intentions; the quality of the move-

ment and the required effort have been neglected.

Motion indexing, classification and recognition draw high interest in a variety of disciplines and

has been studied in-depth by the computer animation

community. Some papers consider the actor’s style

and emotion; still, rough simplifications in simulation

and notation of movement are used, ignoring experi-

ences collected in dance notation over the last cen-

tury. For instance, (Troje, 2009) has applied PCA on

human walking clips to extract the lower-dimensional

representations of various emotional states. (Shapiro

et al., 2006) and (Min et al., 2010) used style com-

ponents to separate and synthesise different motions.

Recently, (Cimen et al., 2013) analysed human emo-

tions using posture, dynamic and frequency based

features, aiming to classify the movements of the

character in terms of their affective state. How-

ever, most of these works overlooked the experiences

gained in motion analysis and movement observation

over the last century, such as described in LMA.

The idea of using a choreography notation, kinesi-

ology theory or movement analysis to classify the hu-

man motion and segregate humanlike skeletons into

different kinematic chains is relatively new. In order

to achieve a satisfying simulation of the complex human body language, a description of human motion that is as simple as possible but as complex as necessary is required, and LMA (Maletić, 1987) fulfils these demands. The relationship between gesture and posture has been studied in movement theory (Lamb,

1965) and psychology (Nann Winter et al., 1989). The

posture is defined as a movement that is consistent

throughout the whole body, while gesture as a move-

ment of a particular body part or parts (Lamb, 1965).

In that manner, (Luo and Neff, 2012) have recently

presented a perceptual study of the relationship be-

tween posture and gesture for virtual characters, en-

abling a wider range of expressive body motion vari-

ations. (Chi et al., 2000) presented the EMOTE sys-

tem, for motion parameterisation and expression, that

synthesises gesture based on the Effort and Shape

qualities derived from LMA. In addition, different ap-


proaches for extracting the LMA components have

been proposed. (Zhao and Badler, 2005) designed a

neural network for gesture animation that maps from

extracted motion features to motion qualities in terms

of the LMA Effort factors. (Hartmann et al., 2006)

quantify the expressive content of gesture based on

a review of the psychology literature, whereas (Tor-

resani et al., 2006) used LMA for learning motion

styles. Lately, (Wakayama et al., 2010) and (Oka-

jima et al., 2012) demonstrated the use of a subset

of LMA features for motion retrieval, while (Kapadia

et al., 2013) proposed a method for searching motions

in large databases. (Alaoui et al., 2013) have recently

developed the Chiseling Bodies, an interactive aug-

mented dance performance, that extracts movement

qualities (energy, kick, jump/drop, verticality/height and stillness) and returns visual feedback.

In this paper, we analyse meaningful expressive

features inspired from LMA that can be used to cap-

ture the emotional state of the dancer and evaluate

their influence in motion; we focus on a set of features

that includes the Body, Effort, Shape and Space² components, with a view to assess the significance of each

feature in motion classification and synthesis. This

work differs from the literature since it studies how features that have been considered in movement analysis

vary with respect to the emotion of the performer; it

aims to find similarities and differences in order to

achieve a smooth composition or a discrete classifica-

tion in animation.

3 DATA ACQUISITION

In this study, we used motion capture data recorded with an 8-camera PhaseSpace Impulse X2 motion capture system (with a capture rate of 960Hz). The performer wears a special outfit (mocap suit) that can

be observed from the cameras surrounding the site

where the character moves. The data were then used

for skeletal reconstruction, thus capturing the motion.

It is important to note, however, that many different factors may affect the characteristics of a dance performance: the music rhythm, the song lyrics, the performer’s personality and idiosyncrasies, experience, emotional charge, and many others. The emotional

and intangible characteristics of human behaviour and

motion are subjective and may depend on, in addition

to the dancer’s skill and experience, momentary feel-

ings, the external environment etc. Some of the most

important factors that affect the quality of the motion

during the capturing procedure are:

• The mocap suit has markers attached on every limb, giving the performer a feeling of restricted or reduced motion. Solution: Allow 5-10 minutes of warm-up to familiarise the user with the outfit.

• The size of the laboratory restricts the movements of the performer to a limited space; in addition, the laboratory environment reduces the user’s intimacy with the area, thus limiting his creativity. Solution: Dances can be captured in environments which are familiar to the dancer, such as dance schools, thereby reducing the potential influence of external factors.

² LMA key terms are capitalised in order to be distinguished from their common English language usage.

Five different actors performed in our laboratory,

each of them acting six different emotional states. The

actors are professional dancers, one male and four females, whose ages range between 20 and 35 years. The dancers were asked to perform an emotional

state for 90 - 120 seconds, together with music of their

choice; each actor had the required time to prepare the

scenario and get ready for the performance. It is im-

portant to note that the performers do not know what

the assessment criteria are.

In this project we have used the BVH (Biovision Hierarchical Data) format; it consists of two parts: the first section details the hierarchy and initial pose of the skeleton, and the second (the motion section) describes the channel data for each frame. The BVH format maps all the performers to a normalised character with standard height and body shape.
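As an illustration only, the two-part layout described above can be read with a few lines of Python. This is a minimal sketch and not the implementation used in this study; the function name `split_bvh` and the tiny one-joint `SAMPLE` clip are hypothetical, and the sketch assumes the usual `MOTION`, `Frames:` and `Frame Time:` header lines of a BVH file.

```python
def split_bvh(text):
    """Split BVH text into (hierarchy lines, frame time, per-frame channel values)."""
    lines = [ln.strip() for ln in text.splitlines() if ln.strip()]
    motion_at = lines.index("MOTION")          # boundary between the two parts
    hierarchy = lines[:motion_at]              # skeleton hierarchy and initial pose
    n_frames = int(lines[motion_at + 1].split(":")[1])
    frame_time = float(lines[motion_at + 2].split(":")[1])
    frames = [[float(v) for v in ln.split()] for ln in lines[motion_at + 3:]]
    if len(frames) != n_frames:
        raise ValueError("frame count does not match the MOTION header")
    return hierarchy, frame_time, frames


# Hypothetical one-joint clip, used only to exercise the sketch.
SAMPLE = """HIERARCHY
ROOT Hips
{
  OFFSET 0.0 0.0 0.0
  CHANNELS 3 Xposition Yposition Zposition
  End Site
  {
    OFFSET 0.0 10.0 0.0
  }
}
MOTION
Frames: 2
Frame Time: 0.033333
0.0 90.0 0.0
1.0 91.0 0.0
"""

hierarchy, frame_time, frames = split_bvh(SAMPLE)
```

Each entry of `frames` holds the channel values of one frame, in the order in which the channels are declared in the hierarchy section.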

4 MOTION STUDY ANALYSIS

Laban Movement Analysis (LMA) offers a clear doc-

umentation of the human motion and it is divided

into four main categories: Body, Effort, Shape and

Space. In this section, we present the LMA components and the representative features that capture the motion properties, allowing users to characterise complex motions and feelings.

4.1 Body Component

Body describes the structural and physical character-

istics of the human body in motion. This compo-

nent is responsible for describing which body parts

are moving, which parts are connected, which parts

are influenced by others, what is the sequence of the

movement between the body parts, and general state-

ments about body organisation. The Body component

helps to address the orchestration of the body parts

FeatureExtractionforHumanMotionIndexingofActedDancePerformances

279

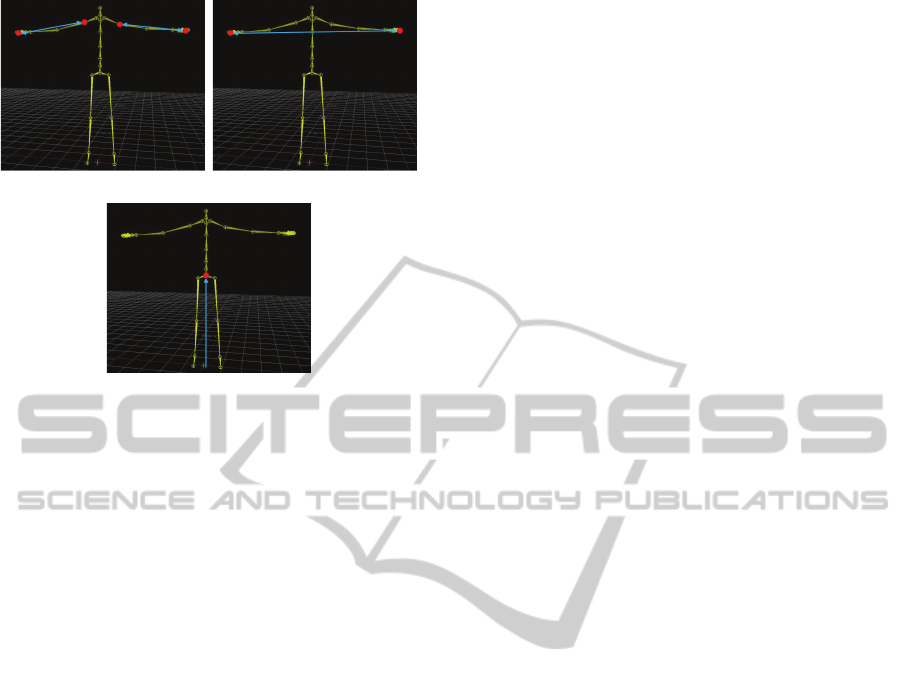

(a) (b)

(c)

Figure 1: (a) The hands-shoulder displacement, (b) the left

hand - right hand displacement, (c) the distance between

ground and the root.

and identify the starting point of the movement. In

order to express the body connectivity and find the re-

lation between body parts, we propose the following

features:

• Displacement and Orientations: Different dis-

placements have been tried such as hand - head,

foot - hip etc., but the results were not indicative

of any emotion, thus cannot be used for motion

indexing. Hands - shoulder and right hand - left

hand displacements, as shown in Figure 1(a) and

1(b) respectively, appear to be much more useful

for extracting the intention of the performer.

• Hip height can be calculated as the distance be-

tween the root joint and the ground, as shown in

Figure 1(c). This feature is particularly useful for

specifying whether the performer kneels, jumps in

the air or falls to the ground.

Other features were studied to extract the Body component, such as the centre of mass, the centroid and the balance, but the results showed that they offer no additional information for separating motions.
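The Body features listed above reduce to a few distances between joint positions. The following is a minimal sketch, assuming that joint positions are available as (x, y, z) tuples in centimetres with the y axis pointing up and the ground at y = 0; the joint names and the function `body_features` are hypothetical and are not taken from the original implementation.

```python
import math


def body_features(joints, ground_y=0.0):
    """Compute displacement and hip-height features from one pose.

    joints: dict mapping a joint name to an (x, y, z) position, y pointing up.
    """
    left, right = joints["left_hand"], joints["right_hand"]
    return {
        "hand_to_hand": math.dist(left, right),
        "left_hand_to_shoulder": math.dist(left, joints["left_shoulder"]),
        "right_hand_to_shoulder": math.dist(right, joints["right_shoulder"]),
        # Hip height: vertical distance between the root joint and the ground.
        "hip_height": joints["root"][1] - ground_y,
    }


# Hypothetical standing pose with the arms lowered.
pose = {
    "root": (0.0, 90.0, 0.0),
    "left_shoulder": (-30.0, 140.0, 0.0),
    "right_shoulder": (30.0, 140.0, 0.0),
    "left_hand": (-30.0, 100.0, 0.0),
    "right_hand": (30.0, 100.0, 0.0),
}
features = body_features(pose)
```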

4.2 Effort Component

Effort describes the intention and the dynamic qual-

ity of the movement, the texture, the feeling tone and

how the energy is being used on each motion. For example, giving someone a glass of water differs from pushing him in terms of the intention of the movement, even though the actual movement is an extension of the arm in both cases. Effort in

LMA comprises four subcategories - each having two

polarities - named Effort factors:

1. Space addresses the quality of active attention to the surroundings (the “where”). It has two polarities: Direct (attention is on a single point in space, focused and specific) and Indirect (active attention is given to more than one thing at once; multi-focused and flexible attention, all-around awareness).

2. Weight is a sensing factor: it senses the physical mass and its relationship with gravity, and is related to the impact of the movement (the “what”). The two polarities of Weight are Strong (bold, forceful, determined intention) and Light (delicate, sensitive, easy intention).

3. Time is the inner attitude of the body toward time, not the duration of the movement (the “when”). The Time polarities are Sudden (a sense of quick, urgent, staccato, unexpected, isolated, surprising) and Sustained (a quality of stretching the time; legato, leisurely, continuous, lingering).

4. Flow is the continuity of the movement, the baseline of “goingness”. It is the key factor in the way the movement is expressed, because it is related to feelings and progression (the “how”). The Flow polarities are Bound (controlled movement; careful and restrained, contained and inward) and Free (released movement; outpouring and fluid, going with the flow).

Effort changes are generally related to changes of mood or emotion and are essential for expressivity. The Effort factors can be derived as follows:

Space Feature. Eye focus is a very important factor for understanding the intentions of the performer. Thus, we can extract the intention of the character by studying the attitude and the orientation of the body in relation to the direction of the motion. If the character is moving in the same direction as the head orientation, then the movement is classified as Direct, whereas if the orientation of the head does not coincide with the direction of the motion, then the movement is classified as Indirect. A good approximation of the angle formed between the head and the direction of the movement is given as $\Theta = \theta_1 + \theta_2 + \theta_3$, which combines the angles formed at various key points of the body: the angle between the head and the upper body, $\theta_1$, the angle between the upper and the lower body, $\theta_2$, and the angle between the lower body and the direction of the movement, $\theta_3$. If the direction of the movement is similar (or close to similar, $\Theta \approx 0^\circ$) to the orientation of the head, then the movement is classified as Direct, whereas in any other case it is classified as Indirect.
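The Direct/Indirect rule above can be sketched as follows. This is an illustrative example under stated assumptions, not the implementation used in this study: orientations are reduced to horizontal headings in degrees, the three angles are taken as signed differences between consecutive headings, and the 20-degree `tolerance` standing in for "close to zero" is a hypothetical value.

```python
def signed_angle(a_deg, b_deg):
    """Smallest signed angle from heading a to heading b, in (-180, 180]."""
    d = (b_deg - a_deg) % 360.0
    return d - 360.0 if d > 180.0 else d


def space_factor(head, upper, lower, direction, tolerance=20.0):
    """Classify a movement as Direct or Indirect from four headings (degrees)."""
    theta1 = signed_angle(head, upper)       # head vs upper body
    theta2 = signed_angle(upper, lower)      # upper vs lower body
    theta3 = signed_angle(lower, direction)  # lower body vs movement direction
    total = theta1 + theta2 + theta3         # Theta = theta1 + theta2 + theta3
    label = "Direct" if abs(total) <= tolerance else "Indirect"
    return label, total


label, total = space_factor(head=10.0, upper=5.0, lower=0.0, direction=355.0)
```

With signed angles the sum collapses to the angle between the head heading and the movement direction, which is why the sum can serve as an approximation of that angle.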

Weight Feature. The Weight factor is a sensing factor; it can be estimated by calculating the deceleration of the motion and how it varies over time: peaks in deceleration indicate a movement with Strong Weight, whereas the absence of peaks (e.g. smooth and fluid motion) indicates a movement with Light Weight. It is important to note that the Weight factor is velocity independent.
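One possible way to detect deceleration peaks is sketched below. It is only an illustration: the peak definition (a local maximum of the deceleration above a threshold), the `threshold` value and the function names are assumptions introduced here, not details given in this paper.

```python
def deceleration_peaks(speeds, dt, threshold):
    """Count local maxima of the deceleration that exceed `threshold`."""
    acc = [(b - a) / dt for a, b in zip(speeds, speeds[1:])]
    dec = [max(0.0, -a) for a in acc]  # keep only the slowing-down part
    peaks = 0
    for i in range(1, len(dec) - 1):
        if dec[i] > threshold and dec[i] >= dec[i - 1] and dec[i] > dec[i + 1]:
            peaks += 1
    return peaks


def weight_factor(speeds, dt=1.0 / 30.0, threshold=100.0):
    """Label a speed series (cm/s) as Strong or Light Weight."""
    return "Strong" if deceleration_peaks(speeds, dt, threshold) > 0 else "Light"


abrupt = [50.0, 50.0, 50.0, 10.0, 10.0, 10.0]   # sudden stop
smooth = [50.0, 49.0, 48.0, 47.0, 46.0, 45.0]   # gentle slow-down
labels = (weight_factor(abrupt), weight_factor(smooth))
```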

Time Feature. The Time factor can be extracted

using the velocity and acceleration features. The velocity of the performer’s movement can be estimated by calculating the distance covered by the root joint over a time period (10-frame windows; note that the data are recorded at 30 frames per second). In addition, the average velocity of both hands is calculated,

thus adding an extra parameter in movement classifi-

cation. Using this feature, we can distinguish move-

ments where the performer is standing but his feelings

are mainly expressed by the hands.
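The velocity estimate described above can be sketched as follows, using the 10-frame windows and the 30 frames per second stated in the text. The function names and the choice of non-overlapping windows are assumptions made for this illustration.

```python
import math


def window_speeds(positions, window=10, fps=30.0):
    """Average speed of one joint over consecutive, non-overlapping windows.

    positions: list of (x, y, z) tuples, one per frame.
    Returns one speed per window, in position units per second.
    """
    speeds = []
    for start in range(0, len(positions) - window, window):
        path = sum(math.dist(positions[i], positions[i + 1])
                   for i in range(start, start + window))
        speeds.append(path * fps / window)
    return speeds


def mean_hand_speeds(left, right, window=10, fps=30.0):
    """Average of the left-hand and right-hand speeds, window by window."""
    return [(a + b) / 2.0
            for a, b in zip(window_speeds(left, window, fps),
                            window_speeds(right, window, fps))]


# Hypothetical root trajectory: 1 cm per frame along x, 21 frames.
root = [(float(i), 90.0, 0.0) for i in range(21)]
root_speeds = window_speeds(root)
```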

Flow Feature. A direct way to extract the Flow of each movement is jerk. Jerk is the rate of change of acceleration or force, and it is calculated by taking the derivative of the acceleration of the root joint with respect to time. Bound motion has large discontinuities with high jerk, whereas Free motion has small changes in acceleration.
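As a rough illustration, jerk can be approximated with finite differences. The sketch below assumes a scalar speed series for the root joint sampled at a fixed time step; the function names are hypothetical, and a real pipeline would probably smooth the data first, since repeated differencing amplifies noise.

```python
def finite_difference(values, dt):
    """Forward difference of a uniformly sampled series."""
    return [(b - a) / dt for a, b in zip(values, values[1:])]


def jerk(speeds, dt):
    """Derivative of the acceleration, where acceleration is d(speed)/dt."""
    acceleration = finite_difference(speeds, dt)
    return finite_difference(acceleration, dt)


def peak_jerk(speeds, dt):
    """Largest absolute jerk in the series (0.0 if the series is too short)."""
    return max((abs(j) for j in jerk(speeds, dt)), default=0.0)


example = jerk([0.0, 1.0, 4.0, 9.0, 16.0], dt=1.0)
```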

4.3 Shape Component

While the Body component primarily develops body

and body/space connections, Shape analyses the way

the body changes shape during movement. There

are several subcategories in Shape, such as mode or

quality, which describe static shapes that the body

takes, the relation of the body to itself, the relations

of the body with the environment, the way the body

is changing toward some point in space, and the way

the torso can change in shape to support movements

in the rest of the body.

The Shape of the body at any given time can be captured using the volume of the performer’s skeleton. The volume is given by calculating the convex hull of the bounding box defined by the five end-effector joints (head, left and right hand, left and right foot), as presented in Figure 2(a). The area within the bounding box was also calculated, but the results do not differ significantly from the volume results; volume is preferred as it gives more distinct values for the separation and classification of the performer’s emotions.
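A minimal sketch of the volume feature is given below. It assumes an axis-aligned bounding box around the five end-effector positions (for such a box the convex hull is the box itself), which may differ from the exact construction used in this study; the function name and the sample pose are hypothetical.

```python
def bounding_box_volume(points):
    """Volume of the axis-aligned box enclosing a set of (x, y, z) points."""
    xs, ys, zs = zip(*points)
    return (max(xs) - min(xs)) * (max(ys) - min(ys)) * (max(zs) - min(zs))


# Hypothetical pose in metres: head, left hand, right hand, left foot, right foot.
end_effectors = [
    (0.0, 1.7, 0.0),
    (-0.8, 1.0, 0.2),
    (0.8, 1.0, -0.2),
    (-0.2, 0.0, 0.1),
    (0.2, 0.0, -0.1),
]
volume = bounding_box_volume(end_effectors)
```

Opening the arms and legs widens the box along every axis, so the value grows quickly with expansive poses.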

In addition, the torso height can be estimated as the distance between the head and the root joint, as shown in Figure 2(b). This feature indicates whether the performer is crouching, meaning bending his torso. Please note that this feature does not take into account whether the legs are bent, but only whether the torso is kept straight or not.

Figure 2: (a) The bounding box, (b) the distance between hip and head (torso), (c) the different levels of the body where hands can be located.

The Shape component can also be identified using an algorithm that determines whether the hands of the performer are moving at the upper level of the body, the middle level or the low level (see Figure 2(c)). Any hand movement with a trajectory above the performer’s head is classified as upper level. When the movement is carried out in the space between the head and the midpoint between the head and the root, it is considered middle level, whereas movements lower than the midpoint are classified as low level. The same algorithm applies even if the performer is crouching, kneeling or jumping.
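The level rule above can be written directly as a comparison of heights. The sketch below is illustrative; it assumes that only the vertical coordinate of the hand, the head and the root is needed, and the function name `hand_level` is hypothetical.

```python
def hand_level(hand_y, head_y, root_y):
    """Classify a hand height as 'upper', 'middle' or 'low'.

    The thresholds follow the current head and root heights, so the same
    rule applies when the performer crouches, kneels or jumps.
    """
    midpoint = (head_y + root_y) / 2.0
    if hand_y > head_y:
        return "upper"
    if hand_y >= midpoint:
        return "middle"
    return "low"


# Hypothetical standing pose: head at 160 cm, root at 90 cm.
levels = [hand_level(y, head_y=160.0, root_y=90.0) for y in (170.0, 140.0, 100.0)]
```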

4.4 Space Component

Space describes the movement in relation to the

environment, spatial patterns, pathways, levels, and

lines of spatial tension. It articulates the relationship

between the human body and the three-dimensional

space. Laban classified principles for the move-

ment orientation based on the body kinesphere (the

space within reach of the body, mover’s own personal

movement sphere) and body dynamosphere (the space

where the body’s actions take place, the general space

which is an important part of personal style).

In order to measure the Space factor, we used two different features: (a) the total distance covered over a time period, for which we evaluated three different durations of 30, 15 and 5 seconds, and (b) the area covered over the same time period. The area feature is expected to quantify the relationship of the performer’s feelings with the environment, and whether his movements take advantage of all the allowable space. Figure 3 shows an example of the relation between the performer and the environment, where the trajectory of the performer was projected on the ground for two cases: when asked to act (a) a happy feeling, and (b) fear. In the case of happiness he moved all over the space, thus covering a large area, whereas in the case of fear he limited his movements to a small section of the allowable space.

Figure 3: The trajectory of the performer in the allowable space after 15 seconds. The trajectory of the performer acting happy is shown in red, and the trajectory when acting scared in blue.
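Both Space features can be computed from the root trajectory projected on the ground. The sketch below is an illustration under an explicit assumption: the paper does not state how the covered area is measured, so the area of the convex hull of the projected trajectory is used here as one plausible choice. All function names are hypothetical.

```python
import math


def path_length(points):
    """Total distance covered along a ground trajectory of (x, z) tuples."""
    return sum(math.dist(a, b) for a, b in zip(points, points[1:]))


def convex_hull(points):
    """Convex hull (monotone chain), counter-clockwise, without repeated points."""
    pts = sorted(set(points))
    if len(pts) < 3:
        return pts

    def cross(o, a, b):
        return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])

    def half(seq):
        chain = []
        for p in seq:
            while len(chain) >= 2 and cross(chain[-2], chain[-1], p) <= 0:
                chain.pop()
            chain.append(p)
        return chain[:-1]

    return half(pts) + half(reversed(pts))


def covered_area(points):
    """Area of the convex hull of the trajectory (shoelace formula)."""
    hull = convex_hull(points)
    twice = sum(x1 * y2 - x2 * y1
                for (x1, y1), (x2, y2) in zip(hull, hull[1:] + hull[:1]))
    return abs(twice) / 2.0


# Hypothetical trajectory in metres.
trajectory = [(0.0, 0.0), (4.0, 0.0), (4.0, 3.0), (0.0, 3.0), (2.0, 1.0)]
distance, area = path_length(trajectory), covered_area(trajectory)
```

A grid-occupancy count would be an alternative area measure that does not reward a single long excursion as strongly as the convex hull does.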

5 RESULTS

In this section, the experimental results are presented

and analysed; our method takes as input raw motion

data in BVH format and extracts meaningful features

to provide a compact and representative space for in-

dexing. The proposed features are evaluated based on

their ability to extract the qualitative and quantitative

characteristics of each emotion, how they vary in dif-

ferent emotional states, as well as their importance for

valuable motion indexing.

Our datasets comprise BVH files from acted

contemporary dance performances of five different

dancers. It is important to recall that BVH skeletons

are by default normalised, thus skeleton and joint dis-

tances, such as arm span and other displacements, are

calculated under the same conditions. The duration of the motion clips ranges between 90 and 120 seconds; we used windows of different sizes (usually 300-frame windows with a 15-frame step) to compute the proposed LMA features and measure the observations, resulting in 200 observations for each clip (1000 observations for each feeling). Figure 4 shows two different snapshots from our video clips, where actors/dancers perform different contemporary dance scenarios. Six representative feelings or emotional situations (happiness, sadness, curiosity, nervousness, activeness and fear) have been studied for evaluation and comparison purposes.

Figure 4: Snapshots of contemporary dance performances at our laboratory.
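The windowing scheme can be illustrated with a short sketch. Assuming 30 frames per second, a clip of 110 seconds has 3300 frames, and 300-frame windows with a 15-frame step give (3300 - 300)/15 + 1 = 201 windows, which is in line with the roughly 200 observations per clip mentioned above. The function names, and the choice of mean and standard deviation as the per-window summary, are assumptions made for this example.

```python
from statistics import mean, pstdev


def sliding_windows(n_frames, size=300, step=15):
    """Return the (start, end) frame indices of every full window."""
    return [(s, s + size) for s in range(0, n_frames - size + 1, step)]


def window_observations(values, size=300, step=15):
    """One (mean, standard deviation) observation per window of a per-frame feature."""
    return [(mean(values[a:b]), pstdev(values[a:b]))
            for a, b in sliding_windows(len(values), size, step)]


n_windows = len(sliding_windows(3300))  # 110 s at an assumed 30 frames per second
```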

5.1 Body Features

Displacement and Orientations. Studying the features of displacement and orientation, we were able to understand some of the motion qualities and distinguish different feelings. The distance between hands and hips varies significantly across feelings; for instance, the average distance when the performer was acting happiness or an active behaviour was relatively large (53cm), with a wide spread of values (standard deviation over 21cm). On the other hand, the feelings of sadness, nervousness and fear had an average distance close to 38cm and a standard deviation of up to 16cm, with values tending to be concentrated in the range 28cm-32cm. Curiosity has an average distance close to 46cm and a relatively small deviation; the distance rarely exceeded 80cm, which is a valuable criterion for distinguishing it from other emotions. Studying the distance between the two hands, we noticed that for happiness, curiosity and activeness the performer chose to make movements with a large distance between the right and left hand, which in some cases reaches up to 140cm. The average value is close to 68cm, while the standard deviation is 32cm. The cases of sadness and fear have a much smaller average distance between hands (42cm), and large distances appear rarely. Finally, when the performer impersonated the feeling of nervousness, the average distance was marginally larger than in the case of sadness, but smaller than for happiness.

Hip Height. Looking at the distance between the

skeleton root and the ground, the feeling of happiness

has values greater than 90cm (the initial distance in

BVH files when the character is in standing pose is

90cm), indicating that the performer was jumping. In

the same way, the distance histogram for active be-

GRAPP2014-InternationalConferenceonComputerGraphicsTheoryandApplications

282

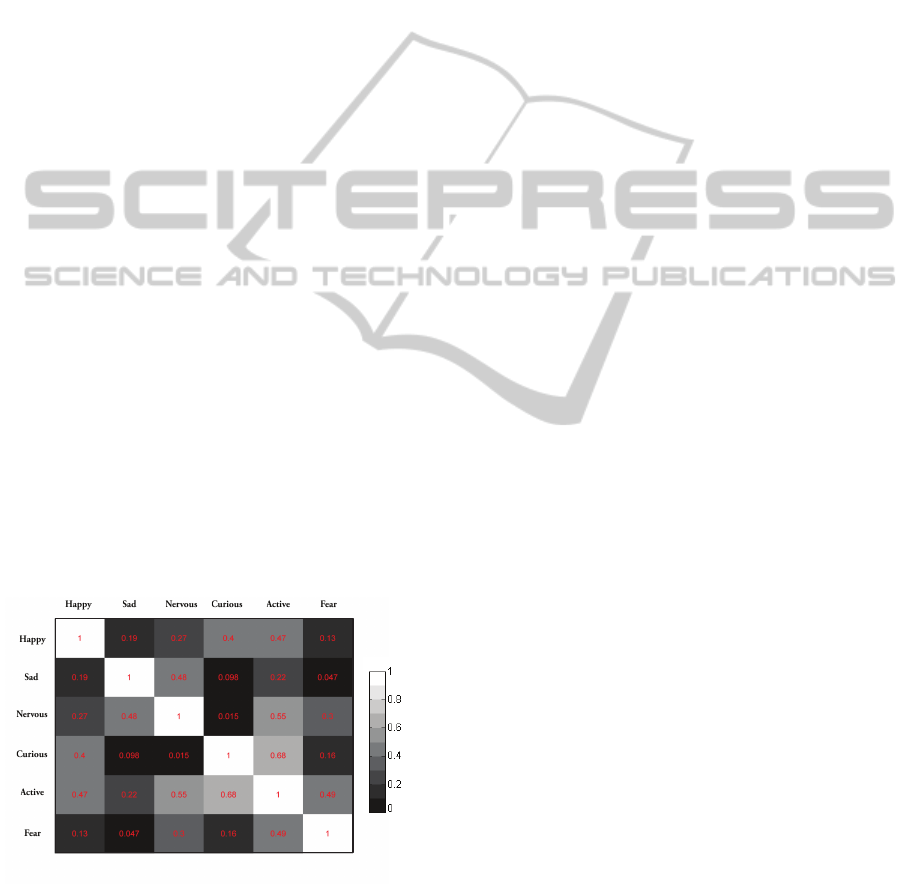

Figure 5: The correlation matrix showing the relation be-

tween different emotions with regards to the body features.

haviours resembles the histogram of the happy feel-

ing. In contrast, when the performer asked to act a sad

feeling, the distance get values only between 60cm to

90cm, which implies that the performer never jumped.

The feeling of nervousness can be distinguished from

other feelings since the distance is mainly distributed

between the values 85cm and 90cm, having the small-

est standard deviation (3cm). A clear observation per-

ceived for the curiosity feeling is that there were cases

whereas the distance had very low values (close to

30cm), with large distance distribution (standard de-

viation near to 13.5cm). Lastly, the feeling of fear

was impersonated with kneeling or even sitting on to

the ground (probably to protect the body, leaving a

smaller body area unprotected), driving to the lowest

value for distance (24cm) and the largest standard de-

viation (17 cm).

In order to assess the significance of the proposed

body features, a correlation matrix is introduced to

present the association between the different emotional states. The correlation matrix reports Pearson’s linear correlation coefficient, normalised to take values between 0 and 1 (0 - no correlation, 1 - high correlation). Figure 5 gives the correlation between the emotions with regard to the body features; the matrix displays the average correlation over all body features. Clearly, most of the emotions have small correlation coefficients, meaning that they are easy to distinguish; as expected, happiness and fear are correlated, as well as fear and active behaviour.
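A correlation matrix of this kind can be sketched as follows. Two points are assumptions introduced for the example and are not specified in the text: each emotion is represented by one numeric series per feature (for instance histogram bin counts), and the coefficient is mapped to the range 0 to 1 by taking its absolute value. The function names and the sample data are hypothetical.

```python
import math


def pearson(x, y):
    """Pearson's linear correlation coefficient of two equal-length series.

    Both series must have non-zero variance.
    """
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def correlation_matrix(series_by_emotion):
    """Pairwise |r| between emotions, as a nested dict of values in [0, 1]."""
    names = list(series_by_emotion)
    return {a: {b: abs(pearson(series_by_emotion[a], series_by_emotion[b]))
                for b in names}
            for a in names}


# Hypothetical hip-height histograms (bin counts) for two emotions.
histograms = {
    "happiness": [1.0, 2.0, 6.0, 9.0],
    "sadness": [8.0, 7.0, 3.0, 1.0],
}
matrix = correlation_matrix(histograms)
```

Averaging such matrices over all Body features would give one summary matrix per LMA component.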

5.2 Effort Features

Head Orientation and Direction of Movement. The head orientation proved to be a valuable factor for understanding the Effort component; for instance, happiness, nervousness and activeness can be expressed as Direct movements, since the performer was mostly moving in the same direction as the head. On the other hand, looking at curiosity, we notice that movements are mainly Indirect, since the performer’s direction was independent of the head orientation (the performer moved around the “target” to observe). The head during sadness moved somewhat uncontrollably, and thus does not provide a notable criterion for separation. Similarly, fear had large variations in head orientation, probably because the performer was checking the area to protect himself.

Body Velocity. Studying clips with a happy state, we observed that the velocity of the character is relatively high (average speed 72cm/s), while values close to 90cm/s appeared several times. The maximum value for velocity is 165cm/s and the standard deviation is 37.5cm/s. This is consistent with the feeling of happiness, since being happy most of the time means playful, energetic behaviour. Curiosity and active states behave similarly to happiness, with maximum speed 67.8cm/s and 78.9cm/s, respectively. In contrast, the average speed of the character when impersonating a sad state was significantly smaller (33cm/s), with a standard deviation close to 24cm/s, while the speed never exceeded 105cm/s. The cases of nervousness and fear have average velocities of 45cm/s and 57cm/s, respectively, but they differ in standard deviation; being scared means a larger variation (39cm/s) compared to nervousness (28cm/s).

Body Acceleration. All emotional states have acceleration histograms of the same shape, which follows a Gaussian distribution. However, each emotion has a different standard deviation; curiosity, activeness and fear have the largest spread (4.5cm/s²) in motion acceleration, where the largest acceleration for these feelings reaches up to 16.5cm/s², meaning that moves were mainly Sudden. During the feeling of happiness, even if movements are mainly fast, the acceleration is not very high, with a maximum value of 12cm/s². In contrast, sadness produces Sustained movements with a small spread in acceleration, where the highest value is lower than 9cm/s².

Hands Velocity. The hands velocity feature behaves similarly to the body velocity feature; however, it is useful to study because some feelings are expressed mainly with the hands rather than the whole body, which strengthens the separation criteria between different emotions. Analysing the velocity of each hand individually does not provide any additional information, so it is ignored.

Jerk. Jerk is a feature that measures the flow of a movement. As expected, the happy feeling and the active behaviour have high average values for jerk, indicating that movements are mainly bound (the maximum value is 5.1 cm/s³). On the other hand, sadness is mostly represented by free movements. Curiosity, nervousness and fear seem to behave similarly, with a maximum value close to 3.6 cm/s³.
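Acceleration and jerk are the second and third time derivatives of position. The sketch below approximates them with repeated forward differences; the differencing scheme, the function names and the absence of any smoothing are assumptions made for illustration, and real capture data would normally be filtered first because differencing amplifies noise.

```python
import math


def finite_difference(samples, dt):
    """Forward difference of a sequence of equal-length vectors."""
    return [
        tuple((b - a) / dt for a, b in zip(p, q))
        for p, q in zip(samples, samples[1:])
    ]


def derivative_magnitudes(positions, fps, order):
    """Magnitude of the order-th time derivative of a trajectory.

    order=1 gives speed, order=2 acceleration, order=3 jerk.
    Each differencing step shortens the series by one sample.
    """
    dt = 1.0 / fps
    series = [tuple(p) for p in positions]
    for _ in range(order):
        series = finite_difference(series, dt)
    return [math.hypot(*v) for v in series]


# Toy check: x(t) = t^3 sampled once per second has a constant third
# difference of 6, so the estimated jerk is 6 at every sample.
cubic = [(t ** 3, 0.0, 0.0) for t in range(5)]
jerk = derivative_magnitudes(cubic, fps=1, order=3)
```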

Volume. Volume is one of the most decisive features for understanding motion. Looking at the results, we observe that the performer, in order to demonstrate happiness, tries to increase the body volume by opening and stretching the arms and legs. Generally speaking, the feeling of happiness is intentional; the performer, trying to convey his emotions to others, attains the largest average volume (0.63 m³), which in some cases reaches up to 2.4 m³. Similarly, when showing curiosity by investigating a subject or the place, he tends to increase the volume by stretching the body for better observation (to come closer to the object), with a maximum volume of 2.3 m³ (average 0.63 m³). During active behaviour, the performer’s movement shows energy and action; thus, the volume can take different values, from large to small: the average volume is 0.45 m³, while the maximum is 1.65 m³. In contrast, the performer chose a smaller volume (average 0.23 m³) for the feeling of sadness, probably because he does not want to attract others’ attention; the movements are more gathered together, and the value never exceeds 1.1 m³. Similarly, when the performer acts nervous, the volume remains low (average 0.34 m³), with a maximum value close to 1.3 m³. Finally, when expressing fear the performer tries not to leave any part of the body unprotected or uncovered, resulting in an average volume close to 0.25 m³ and a maximum of 1 m³.
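One simple way to obtain a per-frame body volume is the axis-aligned bounding box of the joint positions. This is a sketch under that assumption only; this section does not restate how the volume is defined, and the function names are hypothetical.

```python
def bounding_box_volume(joints):
    """Volume of the axis-aligned box enclosing all joints of one frame.

    joints: list of (x, y, z) joint positions in metres.
    Returns the volume in cubic metres.
    """
    xs, ys, zs = zip(*joints)
    return (max(xs) - min(xs)) * (max(ys) - min(ys)) * (max(zs) - min(zs))


def volume_summary(frames):
    """Average and maximum bounding-box volume over a clip."""
    volumes = [bounding_box_volume(joints) for joints in frames]
    return sum(volumes) / len(volumes), max(volumes)


# Toy clip (made-up joints): a compact pose followed by a stretched pose.
compact = [(0.0, 0.0, 0.0), (0.5, 1.0, 0.5)]
stretched = [(0.0, 0.0, 0.0), (1.0, 2.0, 0.5)]
average, maximum = volume_summary([compact, stretched])
```

Stretching the arms or legs enlarges the box along at least one axis, which is why an open posture yields a larger value than a gathered one.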

Figure 6 shows the relation between the six emotional states with regard to effort. It is evident that the effort features are a valuable factor for understanding movements: they capture the quality of a movement and are useful for separating actions.

Figure 6: The correlation matrix showing the relation between different emotions with regard to the effort features.

5.3 Shape and Space Features

Hands Level. LMA suggests that hand movement and position can provide reliable cues for understanding the performer’s intention and the quality of his movement; thus, this feature plays an important role in extracting the performer’s emotional state. It is important to note that this feature differs from the body volume, which may increase even when there is a forward extension of the hands. The proposed feature helps to identify the level at which the hands are located, i.e. whether they are moving over the head or forward. Looking at the data, happiness and curiosity have similar volume shapes; however, when the performer was acting happiness, the hands appeared several times in the upper level, in contrast to curiosity, where the hands rarely moved there. Another clear observation concerns fear, where the hands were mainly located at the middle level, probably protecting the head. Similarly, in the case of nervousness or sadness, the hands almost never appeared in the upper level. In addition, combining this feature with others, such as the body volume and the inter-hands distance, gives us additional information about the structure and the quality of the motion.
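A hands-level histogram of the kind discussed above could be built by comparing the hand height with reference joints in each frame. The sketch below is illustrative only: the three level names, the use of the head and root heights as boundaries and the dictionary keys are assumptions, not definitions taken from this paper.

```python
from collections import Counter

LEVELS = ("upper", "middle", "low")


def hand_level(hand_y, head_y, root_y):
    """Classify a hand by its height relative to the head and the root."""
    if hand_y > head_y:
        return "upper"   # hand raised above the head
    if hand_y >= root_y:
        return "middle"  # between the root (pelvis) and the head
    return "low"


def level_histogram(frames):
    """Fraction of frames the hand spends at each level.

    frames: list of dicts with hypothetical keys 'hand_y', 'head_y'
    and 'root_y', all heights in the same unit.
    """
    counts = Counter(
        hand_level(f["hand_y"], f["head_y"], f["root_y"]) for f in frames
    )
    total = sum(counts.values())
    if total == 0:
        return {level: 0.0 for level in LEVELS}
    return {level: counts[level] / total for level in LEVELS}


# Toy clip (made-up heights in cm): one raised frame, two middle, one low.
clip = [
    {"hand_y": 180, "head_y": 170, "root_y": 100},
    {"hand_y": 150, "head_y": 170, "root_y": 100},
    {"hand_y": 120, "head_y": 170, "root_y": 100},
    {"hand_y": 80, "head_y": 170, "root_y": 100},
]
histogram = level_histogram(clip)
```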

Space and Total Distance Covered in a 30 Second Window. While acting the emotion of happiness, the performer moved 21.5 m on average; the covered area is large, suggesting that the performer, in an attempt to externalise his feelings, moved across almost all the available space. Activeness was impersonated with behaviour similar to happiness. In contrast, in the case of sadness or fear, the total distance covered is much lower, almost half that of happiness (10.5 m), with the performer pausing several times. The area covered is small, which implies that the character did not move across the available space but only in a few areas; probably the performer did not want to express his feelings or just wanted to protect himself. In the clips of nervousness, the most important observation is that the character never stopped moving, keeping an almost constant speed and therefore a steady increase in the distance covered (the total distance covered is 13.5 m). The covered area is larger than in the case of sadness but smaller than for happiness, since the performer moves over the same trajectories or repeats the same actions. Lastly, in curiosity clips we observe that the average distance covered is not very large (17 m), whereas the area covered is large. This indicates that the performer moved around an object to observe it better and in more detail.
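The difference between distance covered and area covered can be made concrete with a short sketch. Here the distance is the path length of the root projected on the floor, and the area is approximated by counting the grid cells the root visits; the grid approximation, the cell size and the function name are assumptions made for the example.

```python
import math


def distance_and_area(floor_positions, cell_size=0.5):
    """Path length and visited floor area for one time window.

    floor_positions: list of (x, z) root positions in metres.
    cell_size: side of a square grid cell in metres (hypothetical value).
    Returns (distance in m, area in square metres).
    """
    distance = sum(
        math.dist(p, q) for p, q in zip(floor_positions, floor_positions[1:])
    )
    # Each distinct cell the root falls in counts once, however often
    # the performer returns to it.
    cells = {
        (math.floor(x / cell_size), math.floor(z / cell_size))
        for x, z in floor_positions
    }
    return distance, len(cells) * cell_size ** 2


# Walking around a square visits four cells.
loop = [(0, 0), (1, 0), (1, 1), (0, 1), (0, 0)]
loop_distance, loop_area = distance_and_area(loop, cell_size=1.0)

# Pacing back and forth accumulates distance but visits only two cells.
pacing = [(0, 0), (1, 0), (0, 0), (1, 0)]
pacing_distance, pacing_area = distance_and_area(pacing, cell_size=1.0)
```

The pacing example mirrors the nervousness clips: the distance keeps growing while the visited area stays small because the same trajectory is repeated.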

Torso Height. The distance between the root and the head is also an important factor for the qualitative analysis of the movement, able to separate different emotions. Happiness and nervousness were expressed with no major changes in torso shape; the body remained in an upright position (62 cm). Curiosity does not differ from happiness: although the performer was usually bending the knees, the body remained stretched continuously. Conversely, when the character depicted the sad feeling, there was a large variation in the distance, with a minimum value of 50 cm. Oddly, when the performer behaved actively, the distance distribution resembles the case of sadness; the character performed a wide range of movements, including head and body bending. Fear propels the performer to keep the body in an upright position, mostly because of the need for self-protection, with an average value close to that of happiness and nervousness.

Figure 7: The correlation matrix showing the relation between the emotions based on the shape and space features.

Figure 7 presents the correlation matrix between emotions based on the shape and space features. Since only a few features are available to distinguish the nature of the feelings, some pairs are highly correlated, such as curiosity-nervousness or happiness-sadness. Nevertheless, these features add a valuable criterion for understanding the nuance of the movements and are able to separate most of the other emotional states.

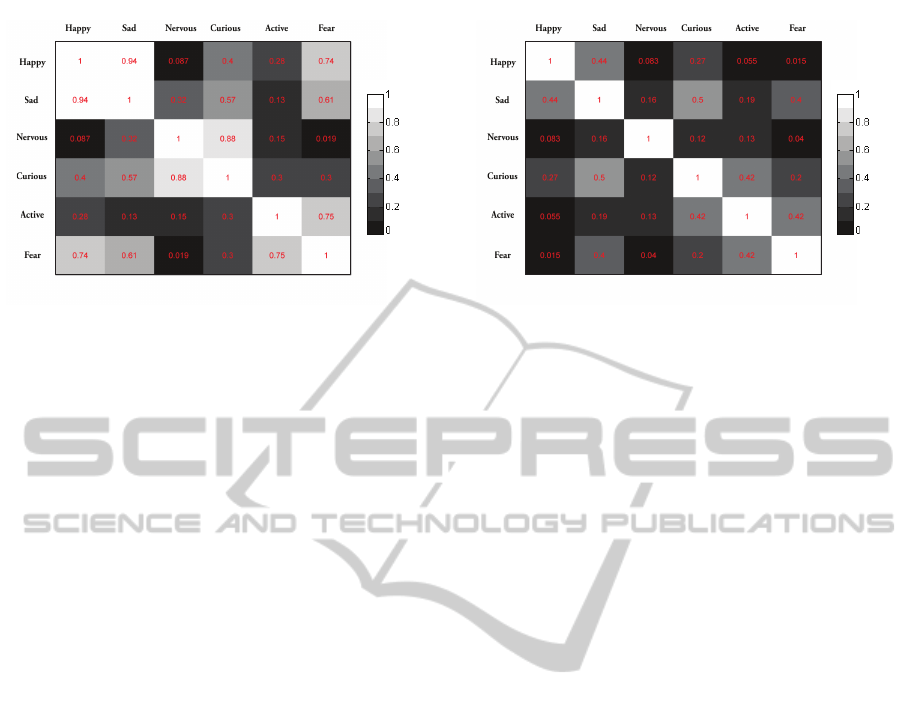

5.4 All Features

Finally, the correlation between the emotional states has been tested using all the features discussed in this paper. A matrix showing the normalised Pearson’s linear correlation coefficients is illustrated in Figure 8. It is evident that the aforementioned features offer a distinct manner of separating the emotional states. The proposed features were able to identify the differences in movement quality and structure, based on the LMA components; no correlation coefficient exceeds 0.5, which indicates that they offer reliable distinguishing conditions for classifying movements.
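For reference, a correlation matrix of this kind can be computed by treating each emotional state as a feature vector and evaluating Pearson’s coefficient for every pair. The sketch below uses the standard Pearson formula; the feature vectors are toy values, not data from this study, and how the paper normalises the coefficients is not reproduced here.

```python
import math


def pearson(a, b):
    """Pearson's linear correlation coefficient of two equal-length vectors."""
    if len(a) != len(b) or len(a) < 2:
        raise ValueError("vectors must have the same length (at least 2)")
    mean_a = sum(a) / len(a)
    mean_b = sum(b) / len(b)
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    norm_a = math.sqrt(sum((x - mean_a) ** 2 for x in a))
    norm_b = math.sqrt(sum((y - mean_b) ** 2 for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        raise ValueError("correlation is undefined for a constant vector")
    return cov / (norm_a * norm_b)


def correlation_matrix(features):
    """Pairwise Pearson coefficients between named feature vectors."""
    names = list(features)
    return {
        a: {b: pearson(features[a], features[b]) for b in names}
        for a in names
    }


# Toy feature vectors (made-up numbers, one entry per feature).
toy = {
    "state_a": [1.0, 2.0, 3.0],
    "state_b": [2.0, 4.0, 6.0],
    "state_c": [3.0, 2.0, 1.0],
}
matrix = correlation_matrix(toy)
```

Because features such as speed and volume live on very different scales, each feature would typically be standardised across clips before the vectors are compared; that preprocessing step is an assumption and is omitted from the sketch.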

The results confirm the effectiveness of the proposed features in capturing the LMA components, thus extracting the quality and identifying the diversity of each movement. By extracting and studying the qualitative and quantitative characteristics of the movement, we can gain a deeper understanding of the performer’s emotions and intentions, showing that the emotional state of the character affects the quality of the motion.

Figure 8: The correlation matrix showing the relation between different emotions using all features.

6 CONCLUSIONS

We have proposed a method that can automatically extract motion qualities from dance performances, in terms of Laban Movement Analysis, for motion analysis and indexing purposes. We believe that this paper contributes to the understanding of human behaviour and actions from an entirely different perspective than those currently used in computer animation; it can be used as an alternative or complement to the standard methods of measuring similarity in animation.

In summary, in this work we studied which features are able to extract the LMA components in a mathematical and analytical way, aiming to capture the nuance of the movement. We used acted dance data with different emotional states and studied how the proposed features changed when the performer was acting different feelings. The results confirm that the aforementioned features are indicative of the LMA components, implying their importance in motion indexing and classification; the proposed features succeed in characterising each of the movements, forming a valuable criterion for separating the performer’s emotions. In addition, we investigated the correlations between the performer’s acted emotional state and the qualitative and quantitative characteristics of motion. Experiments show that the proposed LMA features and the emotional state of the performer are highly correlated, supporting the efficiency of our approach. A limitation of the proposed methodology is that a subset of the features requires the use of a short time window, resulting in delays in the extraction of the user’s emotions.

Future work will focus on the study of more emotional states for a better understanding of the quality of human movements and the intentions of the performer. In addition, more performances from different actors will be captured for better evaluation of the results; some captures will take place at dance schools to reduce the potential influence of the laboratory environment. We are also planning to study how gender, age, weight and height affect emotion expression and recognition, and whether these factors can be correlated with motion and emotional state. Furthermore, we will study the performance of the classifier in relation to the size of the window used for segmenting motion clips, as well as the weight of each feature’s influence on the classification of movements. In addition, the results of this paper will be used to establish a similarity function that measures the correlation between different actions. In contrast to existing techniques, we intend to compare movements based not only on the position, posture or rotation of the limbs, but also on the qualitative and quantitative characteristics of the motion, such as the effort and the purpose with which it has been executed. Finally, the motion graphs (Zhao and Safonova, 2009) that indicate possible future action paths will be enriched, beyond whether one movement is well matched to another, with the qualitative and quantitative characteristics of the action.

ACKNOWLEDGEMENTS

This project (DIDAKTOR/0311/73) is co-financed by the European Regional Development Fund and the Republic of Cyprus through the Research Promotion Foundation. The authors would also like to thank Mrs Anna Charalambous for her valuable help in explaining LMA, as well as all the dancers who performed at our department.

REFERENCES

Alaoui, S. F., Jacquemin, C., and Bevilacqua, F. (2013).

Chiseling bodies: an augmented dance performance.

In Proceedings of ACM SIGCHI Conference on Hu-

man Factors in Computing Systems, Paris, France.

ACM.

Arikan, O., Forsyth, D. A., and O’Brien, J. F. (2003).

Motion synthesis from annotations. ACM Trans. of

Graphics, 22(3):402–408.

Barbič, J., Safonova, A., Pan, J.-Y., Faloutsos, C., Hodgins,

J. K., and Pollard, N. S. (2004). Segmenting motion

capture data into distinct behaviors. In Proceedings of

Graphics Interface, GI ’04, pages 185–194.

Chan, J. C. P., Leung, H., Tang, J. K. T., and Komura, T.

(2011). A virtual reality dance training system using

motion capture technology. IEEE Trans. on Learning

Technologies, 4(2):187–195.

Chao, M.-W., Lin, C.-H., Assa, J., and Lee, T.-Y. (2012).

Human motion retrieval from hand-drawn sketch.

IEEE Trans. on Visualization and Computer Graph-

ics, 18(5):729–740.

Chi, D., Costa, M., Zhao, L., and Badler, N. (2000). The

emote model for effort and shape. In Proceedings of

SIGGRAPH ’00, pages 173–182, NY, USA. ACM.

Cimen, G., Ilhan, H., Capin, T., and Gurcay, H. (2013).

Classification of human motion based on affective

state descriptors. Computer Animation and Virtual

Worlds, 24(3-4):355–363.

CMU (2003). Carnegie Mellon University: MoCap

Database. http://mocap.cs.cmu.edu/.

Deng, Z., Gu, Q., and Li, Q. (2009). Perceptually consistent

example-based human motion retrieval. In Proceed-

ings of I3D ’09, pages 191–198, NY, USA. ACM.

Fang, A. C. and Pollard, N. S. (2003). Efficient synthe-

sis of physically valid human motion. ACM Trans. of

Graphics, 22(3):417–426.

Gleicher, M. (1998). Retargetting motion to new characters.

In Proceedings of SIGGRAPH ’98, pages 33–42, NY,

USA. ACM.

Hartmann, B., Mancini, M., and Pelachaud, C. (2006). Im-

plementing expressive gesture synthesis for embod-

ied conversational agents. In Proceedings of GW’05,

pages 188–199. Springer-Verlag.

Hecker, C., Raabe, B., Enslow, R. W., DeWeese, J., May-

nard, J., and van Prooijen, K. (2008). Real-time

motion retargeting to highly varied user-created morphologies. ACM Trans. of Graphics, 27(3):1–27.

Ikemoto, L. and Forsyth, D. A. (2004). Enriching a motion

collection by transplanting limbs. In Proceedings of

SCA ’04, pages 99–108, Switzerland.

Kapadia, M., Chiang, I.-k., Thomas, T., Badler, N. I., and

Kider, Jr., J. T. (2013). Efficient motion retrieval in

large motion databases. In Proceedings of I3D ’13,

pages 19–28, NY, USA. ACM.

Keogh, E., Palpanas, T., Zordan, V. B., Gunopulos, D.,

and Cardle, M. (2004). Indexing large human-motion

databases. In Proceedings of VLDB, pages 780–791.

Kovar, L. and Gleicher, M. (2004). Automated extraction

and parameterization of motions in large data sets.

ACM Trans. of Graphics, 23(3):559–568.

Kovar, L., Gleicher, M., and Pighin, F. (2002). Motion

graphs. ACM Trans. of Graphics, 21(3):473–482.

Krüger, B., Tautges, J., Weber, A., and Zinke, A. (2010).

Fast local and global similarity searches in large mo-

tion capture databases. In Proceedings of SCA ’10,

pages 1–10, Switzerland. Eurographics Association.

Kwon, T., Cho, Y.-S., Park, S. I., and Shin, S. Y.

(2008). Two-character motion analysis and synthesis.

IEEE Trans. on Visualization and Computer Graph-

ics, 14(3):707–720.

Lamb, W. (1965). Posture & gesture: an introduction to the

study of physical behaviour. G. Duckworth, London.

Liu, G., Zhang, J., Wang, W., and McMillan, L. (2005).

A system for analyzing and indexing human-motion

databases. In SIGMOD ’05, pages 924–926.

Luo, P. and Neff, M. (2012). A perceptual study of the

relationship between posture and gesture for virtual

characters. In Motion in Games, pages 254–265.

Maletić, V. (1987). Body, Space, Expression: The Development of Rudolf Laban’s Movement and Dance Concepts. Approaches to semiotics. De Gruyter Mouton.

Min, J., Liu, H., and Chai, J. (2010). Synthesis and editing

of personalized stylistic human motion. In Proceed-

ings of I3D’10, pages 39–46, NY, USA. ACM.

Moore, C.-L. and Yamamoto, K. (1988). Beyond Words:

Movement Observation and Analysis. Number v. 2.

Gordon and Breach Science Publishers.

Müller, M., Röder, T., and Clausen, M. (2005). Efficient

content-based retrieval of motion capture data. ACM

Trans. of Graphics, 24(3):677–685.

Nann Winter, D., Widell, C., Truitt, G., and George-Falvy,

J. (1989). Empirical studies of posture-gesture merg-

ers. Journal of Nonverbal Behavior, 13(4):207–223.

Okajima, S., Wakayama, Y., and Okada, Y. (2012). Human

motion retrieval system based on LMA features using

interactive evolutionary computation method. In In-

nov. in Intelligent Machines, pages 117–130.

Russell, J. A. (1980). A circumplex model of affect. Journal

of Personality and Social Psychology, 39:1161–1178.

Shapiro, A., Cao, Y., and Faloutsos, P. (2006). Style compo-

nents. In Proceedings of GI’06, pages 33–39, Canada.

Torresani, L., Hackney, P., and Bregler, C. (2006). Learning

motion style synthesis from perceptual observations.

In Proceedings of NIPS’06, pages 1393–1400.

Troje, N. F. (2009). Decomposing biological motion: A

framework for analysis and synthesis of motion gait

patterns. Journal of Motion, 2(5):371–387.

UCY (2012). University of Cyprus: Dance MoCap

Database. http://dancedb.cs.ucy.ac.cy/.

UTA (2011). University of Texas-Arlington: Human Motion Database. http://smile.uta.edu/hmd/.

Wakayama, Y., Okajima, S., Takano, S., and Okada, Y.

(2010). IEC-based motion retrieval system using Laban movement analysis. In Proceedings of KES’10,

pages 251–260. Springer-Verlag.

Wu, S., Wang, Z., and Xia, S. (2009). Indexing and retrieval

of human motion data by a hierarchical tree. In Pro-

ceedings of VRST, pages 207–214, NY, USA. ACM.

Zhao, L. and Badler, N. I. (2005). Acquiring and validating

motion qualities from live limb gestures. Graphical

Models, 67(1):1–16.

Zhao, L. and Safonova, A. (2009). Achieving good

connectivity in motion graphs. Graphical Models,

71(4):139–152.
