A Physiological Evaluation of Immersive Experience of a View Control Method using Eyelid EMG

Masaki Omata¹, Satoshi Kagoshima² and Yasunari Suzuki²

¹ Interdisciplinary Graduate School of Medicine and Engineering, University of Yamanashi, Takeda, Kofu, Japan

² Department of Computer Science and Media Engineering, University of Yamanashi, Takeda, Kofu, Japan

Keywords:

Physiological Evaluation, Immersive Impression, Eyelid Movement, Electromyogram, Virtual Environment.

Abstract:

This paper reports that the number of blood-volume pulses (BVP) and the level of skin conductance (SC) increased more, reflecting a stronger immersive impression, with a view control method using eyelid electromyography in a virtual environment (VE) than with a mouse control method. We have developed the view control method and the visual feedback associated with electromyography (EMG) signals of movements of the user’s eyelids. The method provides a user with more immersive experiences in a virtual environment because of the strong relationship between eyelid movement and visual feedback. This paper reports a physiological evaluation experiment that compares the method with a common mouse input method by measuring physiological data related to the subjects’ fear of an open high place in a virtual environment. Based on the results, we find that the eyelid-movement input method improves the user’s immersive impression significantly more than the mouse input method.

1 INTRODUCTION

In recent years, devices have been developed to provide a user with a more immersive impression in a virtual environment (VE) (Nagahara et al., 2005), (Meehan et al., 2002). In this research field, visual displays have been developed as visually immersive devices, such as a head-mounted display that covers the user’s view and peripheral vision, a wall-sized screen that covers the user’s whole body, and a 3D display that gives a user a sense of depth. In addition, interaction techniques for virtual environments have been designed (Touyama et al., 2006), (Ries et al., 2008), (Asai et al., 2002), (Manders et al., 2008), (Steinicke et al., 2009), (Miyashita et al., 2008), (Haffegee et al., 2007), (Kikuya et al., 2011). In particular, it is important to provide a user with embodied interaction techniques in a virtual environment that resemble natural body motion in the real world. Therefore, several gestural interaction techniques and several walk-through environments have been developed. By using these interaction techniques and displays, users can interact more naturally with a virtual environment in which the visual feedback corresponds to the users’ motor skills.

However, these existing embodied interaction techniques have shortcomings in the correspondence between motor skills and visual feedback. For instance, people usually narrow their eyes to look carefully at an important point. Conversely, people usually open their eyes wide to get a wide view of the surrounding area. Existing virtual reality systems do not incorporate such basic movements of looking. They usually provide users with control devices such as a mouse, a joystick, or a location sensor to control the user’s view. Because there is no real-world-like relationship (such as that between eye movement and view) between the device operations and the visual feedback, the device operations may make the user aware of the gap between the real world, in which the user controls the device, and the virtual world, which the user sees, and may reduce the user’s immersive impression.

We have therefore developed a view control method and visual feedback associated with electromyographic (EMG) signals of movements of the user’s eyelids (TheAuthors, 2011). The method provides users with more immersive experiences in a virtual environment because of the strong relationship between the eyelid movements and the visual feedback. Moreover, the visual feedback enhances a user’s visual functions in a virtual world, such as zoom-in/out and see-through, by following the relationship between the motor skills of seeing and views in the real world. We think that the method can be used as a natural and direct view control system in a three-dimensional virtual environment.

Omata M., Kagoshima S. and Suzuki Y. A Physiological Evaluation of Immersive Experience of a View Control Method using Eyelid EMG. DOI: 10.5220/0004719102240231. In Proceedings of the International Conference on Physiological Computing Systems (PhyCS-2014), pages 224-231. ISBN: 978-989-758-006-2. Copyright © 2014 SCITEPRESS (Science and Technology Publications, Lda.)

Another research issue in VE interaction is how to evaluate a user’s immersive experience. A questionnaire, such as a Likert scale or the semantic differential method, is a conventional evaluation technique, but it is neither continuous nor objective. Additionally, it is difficult to measure and evaluate sensation through performance measures such as task completion time and error rate. We therefore conducted a physiological evaluation test to compare the immersive impression of the eyelid-movement input method with that of a mouse input method.

This paper describes related work, a summary of the system development, the physiological evaluation test of the users’ immersive impression, and an improvement of the algorithm for recognizing EMG signals of the user’s eyelid movements.

2 RELATED WORK

Asai et al. developed a vision-based interface based on body position for viewpoint control in an immersive projection display by tracking the 3D positions of the user’s arms and head using image processing without attached devices (Asai et al., 2002). They evaluated the utility of the interface by comparing it with a joystick. The results indicate that the performance of the interface is comparable to that of the joystick in terms of viewpoint control, but that the interface enhances the sensation of speed. Manders et al. presented a method for interacting with 3D objects in an immersive 3D virtual environment with a head-mounted display (HMD) by tracking the user’s hand gestures with a stereo camera (Manders et al., 2008). The system allows the user to manipulate a 3D object with five degrees of freedom using a series of intuitive hand gestures. Steinicke et al. developed a VR-based user interface for presence-enhancing gameplay with which players can explore the game environment in the most natural way, i.e., by real walking (Steinicke et al., 2009). While the player walked through the virtual game environment wearing an HMD, they analyzed the usage of transitional environments via which the player could enter a virtual world. The results of the psychophysical experiments showed that players can be guided on a circular arc with a radius of 22.03 m while they believe themselves to be walking straight. Oshita proposed a motion-capture-based control framework for third-person view virtual environments with a large screen (Oshita, 2006). The framework can generate seamless transitions between user-controlled motion and system-generated reactive motions.

Because these systems use the user’s body motions to control the view in a virtual environment, there are a few fundamental problems. One problem is that view control by body motion is coarse. Another problem is that the device input makes the user feel the seam between the real and virtual worlds. Therefore, some systems that adopt eye movement for view control have been developed.

Miyashita et al. proposed an electrooculography (EOG)-based gaze interface that was implemented by mounting EOG sensors on an HMD with a head tracker, and proposed a gaze estimation method on the HMD screen (Miyashita et al., 2008). The accuracy of gaze estimation was experimentally determined to be 68.9 %. The system avoids the problem that an HMD obstructs eye-tracker devices. Haffegee et al. focused on methods and algorithms that take the output of an eye tracker and convert it into a virtual-world gaze vector in an immersive VE by using an eye camera and a head tracker (Haffegee et al., 2007).

These systems mainly use the user’s line of sight. However, people use their eye movements not only to control their line of sight but also for several other aspects of seeing, such as focus, gaze, interest, and affect. For instance, people usually tighten their eyelids to look carefully. People also widen their eyes to look at someone with interest or in surprise. Additionally, because a person with x-ray vision in a science fiction movie tightens his/her eyelids to look through objects, we proposed that it is possible to enhance the user’s visual functions in a virtual environment by tracking eye movements in detail.

3 A VIEW CONTROL METHOD USING EMG OF EYELIDS

We proposed a view control method associated with movements of the user’s eyelids to enhance the user’s visual functions in a virtual environment (TheAuthors, 2011). The basic method deals with three types of eyelid movements: staring, neutral, and widely-opening. The neutral state is a state of looking at something naturally without straining or opening the eyes widely (see Figure 1a). The staring state is a state of looking carefully while drawing the eyebrows together (see Figure 1b). The widely-opening state is a state of looking at the whole scene while raising the eyebrows (see Figure 1c). Figure 2 shows the state transition diagram of the three states.

By using the states, we designed two types of view control techniques in a virtual environment: the zoom in/out control technique and the see-through/annotation control technique. By using the zoom in/out technique, a user can see a distant object in a zoomed-in view by looking at it while drawing the eyebrows together. Moreover, the user can see objects that are outside the user’s neutral view in a zoomed-out view by looking at the center of the view while raising the eyebrows. The zoomed view returns to the normal view when the user returns the eyebrows to their neutral position and relaxes the palpebral muscles.

Figure 1: Three states of eyelid movements: (a) neutral, (b) staring, and (c) widely-opening.

Figure 2: State-transition diagram of the three eyelid states.

On the other hand, by using the see-through/annotation technique, a user can look inside an object, such as at the engine of a car, by looking at it while drawing the eyebrows together. Moreover, the user can read annotation text, such as the specifications of a car displayed around it, by looking at it while raising the eyebrows. The view returns to the initial view when the user returns the eyebrows to their neutral position and relaxes the palpebral muscles.

We think that the system improves the user’s immersive experience because of the relationship between the actual eye movements and the visual feedback. This advantage addresses the problem that a user’s immersive impression is reduced by the tenuous relationship between visual feedback and the movement of conventional input devices, such as a mouse, a joystick, or a location sensor.

Additionally, the system could allow a user to control the view in multiple steps by adjusting the strength of his/her eyelid muscle contraction. In other words, the more strongly the user draws his/her eyebrows together, the larger the zoom and the higher the transparency.

3.1 Implementation

We use a Z800 3D Visor (eMagin Co.) as a head-mounted display (HMD), a ProComp Infiniti (Thought Technology Ltd.) as a biofeedback device, two MyoScan sensors (Thought Technology Ltd.) as pre-amplified surface electromyography (EMG) sensors, and a PC to implement an eyelid-movement recognizer and a 3D virtual environment. The specifications of the HMD are as follows: a 105-inch virtual screen at a distance of 3.6 m, a 40-degree angle of view, head tracking over 360 degrees horizontally and 60 degrees vertically, and a resolution of 800 × 600 pixels. The biofeedback device and the EMG sensors are used to record the EMG signals on a user’s facial surface and to send the data to the PC via USB. The recording frequency is 20 Hz, and the recorded signals are raw voltage values. The PC is used to process the EMG data and to render the scene of a virtual environment with Microsoft Visual C++ and OpenGL. The three-dimensional view of the scene is controlled with the 6-degrees-of-freedom data of the head-tracking HMD.

Figure 3 shows the HMD and the electrodes of the EMG sensors attached to a user’s face. An EMG sensor consists of three electrodes: positive, negative, and reference. We use EMG sensors to detect eyelid movements because it is difficult to detect them by image processing with a camera, as in the study of Valstar et al. (Valstar et al., 2006), owing to the narrow space between the user’s face and the HMD (Figure 3c).

Figure 3: State of wearing the HMD and attaching the EMG

sensors in our proposed system.

3.2 Measuring EMG and Detecting Eyelid Movements

EMG is a technique for detecting and amplifying tiny electrical impulses that are generated by muscle fibers when they contract. An EMG sensor can record the signals from all the muscle fibers within the recording area of the sensor contact. Some research studies use EMG to develop human interfaces. Manabe et al. proposed the use of EMG of facial muscles to control an electric-powered wheelchair (Manabe et al., 2009). Agustin et al. presented a low-cost gaze-pointing and EMG-clicking device, which a user employs with an EMG headband on his/her forehead (Agustin et al., 2009). Clark et al. used EMG of a user’s arm movement to control a robotic arm (Jr. et al., 2010). Also, Costanza et al. presented a formal evaluation of subtle motionless gestures based on EMG signals from the upper arm for interaction in a mobile context (Costanza et al., 2007), and Gibert et al. described a light expression recognition system based on 8 facial EMG sensors placed on specific muscles, which is able to discriminate 6 expressions to enhance human-computer interaction (Gibert et al., 2009). It is practical to use EMG data to enable a user to interact intuitively with a computer through body movements.

Our system measures the EMG data between the

eyebrows of a user and between the upper and lower

right side of a user’s right eye to detect three types of

eyelid movements: staring, neutral, and wide open-

ing (Figure 1). This means that the system measures

the EMG signals of the frontalis muscle to recognize a

wide opening state, and the EMG signals of the corru-

gator muscle to recognize a staring state. This is based

on our preliminary experiment (TheAuthors, 2011).

3.3 Calibration and Recognition

Typically, the values of the electric potential of the

EMG signals are positive and negative. Therefore,

our system converts all values into absolute values for

simple statistical processing for calibration and recog-

nition.

Our system calibrates the thresholds of the EMG data to recognize the three types of eyelid movements for an individual user before the user uses the view control method. After a user puts the EMG sensors on his/her face, the system records the EMG data five times, for 20 frames each, at the two electrode sites while the user maintains each of the eyelid movements. Next, the system calculates the average and the standard deviation (SD) of the recorded EMG data for each eyelid movement state of the user. Based on the calculations, the threshold of the neutral state is the sum of the average and the SD of that state. The threshold of the staring state is the average minus the SD of that state, and the threshold of the wide opening state is likewise the average minus the SD of that state.
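The threshold rules above can be sketched as follows. This is a minimal illustration, not the authors' implementation: the function names, the dictionary keys, and the use of the population standard deviation are assumptions, since the paper does not state which SD estimator is used.

```python
from statistics import mean, pstdev


def rectified_stats(samples):
    """Return (average, SD) of the absolute values of raw EMG samples."""
    rectified = [abs(v) for v in samples]
    # Assumption: population SD; the paper does not name the estimator.
    return mean(rectified), pstdev(rectified)


def calibrate(neutral, staring, wide_opening):
    """Derive one threshold per eyelid state from calibration recordings.

    Each argument is a list of raw EMG voltages recorded while the user
    holds the corresponding state (hypothetical input layout).
    """
    n_avg, n_sd = rectified_stats(neutral)
    s_avg, s_sd = rectified_stats(staring)
    w_avg, w_sd = rectified_stats(wide_opening)
    return {
        "neutral": n_avg + n_sd,       # average + SD
        "staring": s_avg - s_sd,       # average - SD
        "wide_opening": w_avg - w_sd,  # average - SD
    }
```

Taking the absolute value first mirrors the rectification step described at the start of this section, so positive and negative voltages do not cancel in the average.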

While a user uses the eyelid view control method, the system continuously records the EMG data over four frames at the two electrode sites, calculates two averages and two SDs from the data, and compares the averages and the SDs with the thresholds from the calibration data in order to recognize the eyelid movements.
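The paper does not spell out the exact comparison rule, so the following is only a sketch under stated assumptions: it uses the window averages alone (not the SDs), checks the staring threshold on the corrugator channel first, then the wide-opening threshold on the frontalis channel, and otherwise reports the neutral state. The function name, argument names, and dictionary keys are hypothetical.

```python
from statistics import mean


def recognize(corrugator_frames, frontalis_frames, thresholds):
    """Classify one short window of raw EMG frames from the two sites.

    thresholds is a dict with "staring" and "wide_opening" entries,
    e.g. as produced by a per-user calibration step (assumed layout).
    """
    # Rectify each channel and average it over the window.
    corrugator_level = mean([abs(v) for v in corrugator_frames])
    frontalis_level = mean([abs(v) for v in frontalis_frames])

    # Assumed decision order: staring takes precedence over wide opening.
    if corrugator_level >= thresholds["staring"]:
        return "staring"
    if frontalis_level >= thresholds["wide_opening"]:
        return "wide_opening"
    return "neutral"
```

At the 20 Hz recording frequency given in Section 3.1, a four-frame window corresponds to 0.2 s of signal per decision.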

4 PHYSIOLOGICAL EVALUATION

We conducted a physiological evaluation experiment to compare the visually immersive experience of the eyelid-movement input method with that of a mouse input method as a conventional, common input method. We measured the skin conductance (SC) of a subject’s hand and the blood-volume pulse (BVP) on a subject’s finger and analyzed them. The reason is that physiological data continuously and objectively reflect the emotions of users (subjects) who are experiencing an immersive virtual environment.

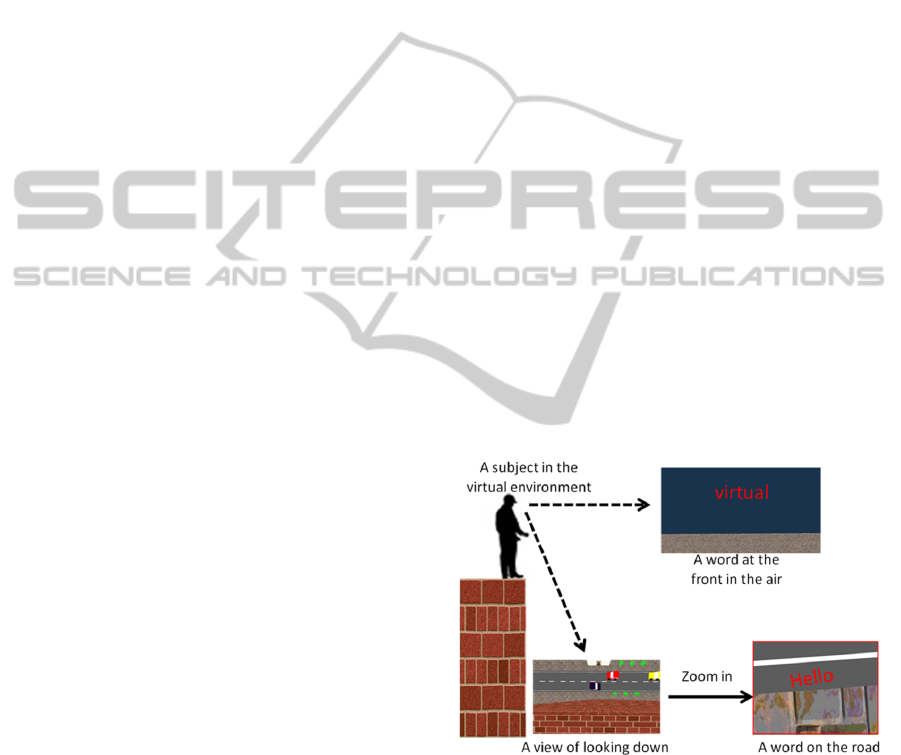

4.1 Experimental Task

The experimental task is to read aloud words displayed in the air in front of the subject and on the road beneath the subject’s feet, as seen from the edge of the top of a tall building in a 3D virtual environment. The subjects perform the task by using our system or a mouse while wearing the HMD for a first-person view with head tracking. Figure 4 illustrates the task. A subject stands at the edge of the top of a tall building in a virtual environment. First, the subject looks down at the road by bending his/her neck, zooms in on a word displayed on the road, and reads it aloud (for example, Figure 4 shows “Hello”). We set the font size of the word small enough that the subjects cannot read it without zooming in. Next, the subject raises his/her head and reads aloud a word displayed in the air in front of him/her (for example, Figure 4 shows “virtual”). After that, the subject reads aloud another word on the road, again by zooming in. The subject repeats the task for five words.

Figure 4: Schematic representation of the experimental

task.

We set the task of reading words from a great height because this allowed us to induce in the subjects a fear of an open high place in the 3D virtual environment, and we hypothesized that the more immersed a subject felt, the more he/she would experience a fear of the place. To make the experience more immersive, we asked the subjects to stand at the edge of a board that was approximately 4 cm thick (Figure 5).


Figure 5: State of standing on a board to provide the subject

with the feeling of standing on the edge of a building.

4.2 Procedure

We covered the HMD with a thick black cloth to block natural light from entering between the HMD and the subject’s face, because light from the real world decreases the subject’s feeling of immersion in a virtual world.

All subjects performed the task once using each method (within-subjects design): the eyelid-movement input method and a mouse input method. For the mouse input method, a subject held a mouse against his/her thigh while standing on the board. The left button was used to zoom in and the right button was used to zoom out. To avoid an order effect between the two methods, half of the subjects performed the task with eyelid movements first and the other half performed the task with mouse clicking first.

The subjects practiced each method for approximately 1 min before they started to perform the task with that method. During practice, the subjects checked the head-tracking function of the HMD and how to zoom in and out while standing on the ground in the virtual environment, not on the top of a building. The subjects performed the experimental task for approximately 2 min per method. We recorded the subject’s physiological data during this time in order to examine the differences in the physiological data, which reflect a heightened sense of fear of the high place, between the eyelid-movement input method and the mouse input method. We assumed these differences to reflect the differences in immersive feelings between the input methods.

After completing the tasks, the subjects answered

a questionnaire consisting of five-point scale pair

comparisons about the differences they perceived be-

tween the input methods. Table 1 shows the question-

naire.

4.3 Measurement of Physiological Data

Table 1: Questionnaire for user’s experience.

| Question | Index |
|---|---|
| Q1: For which did you feel more immersive impression? | 1: mouse to 5: eyelid movement |
| Q2: For which did you become more aware? | 1: mouse to 5: eyelid movement |
| Q3: Which was more intuitive? | 1: mouse to 5: eyelid movement |
| Q4: Which was easier to control as intended? | 1: mouse to 5: eyelid movement |
| Q5: For which did you feel more tired? | 1: mouse to 5: eyelid movement |
| Q6: Which do you like to use? | 1: mouse to 5: eyelid movement |

We analyzed how deeply the subjects were immersed in the virtual environment when using each of the methods by measuring the skin conductance (SC) of a subject’s hand and the blood-volume pulse (BVP) on a subject’s finger (Figure 6) with a ProComp Infiniti encoder. We measured these physiological data because they are known to increase with the degree of tension caused by the fear of a high place (Meehan et al., 2002) and are used to estimate arousal and valence levels of emotions (Soleymani et al., 2008), (Lin et al., 2005). We recorded the SC and the BVP of a subject during practice and during the experimental tasks, and calculated the differences between the averages of the data during practice and during the tasks. We hypothesized that the difference in the averages between the practice and the experimental task would be larger with the eyelid-movement input method than with the mouse input method, because the subjects would experience the fear of a high place to a larger extent with the eyelid-movement method than with the mouse method. We think that the high degree of embodiment of the eyelid movements makes the experience feel more immersive to the user.
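The dependent measure described above, the difference between the task average and the practice average, can be written as a short sketch. The function name and the sample values in the usage note are hypothetical and are not data from the experiment.

```python
from statistics import mean


def average_difference(practice, task):
    """Difference between the task-phase and practice-phase averages.

    practice and task are lists of samples of one physiological signal
    (for example SC) recorded from one subject with one input method.
    A positive result means the signal was higher during the task.
    """
    return mean(task) - mean(practice)
```

For example, `average_difference([2.0, 2.0, 2.0], [3.0, 4.0, 5.0])` yields a difference of 2.0 for that illustrative subject; one such value per subject and per input method would then be compared between methods.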

Figure 6: State of attaching an SC sensor and a BVP sensor.

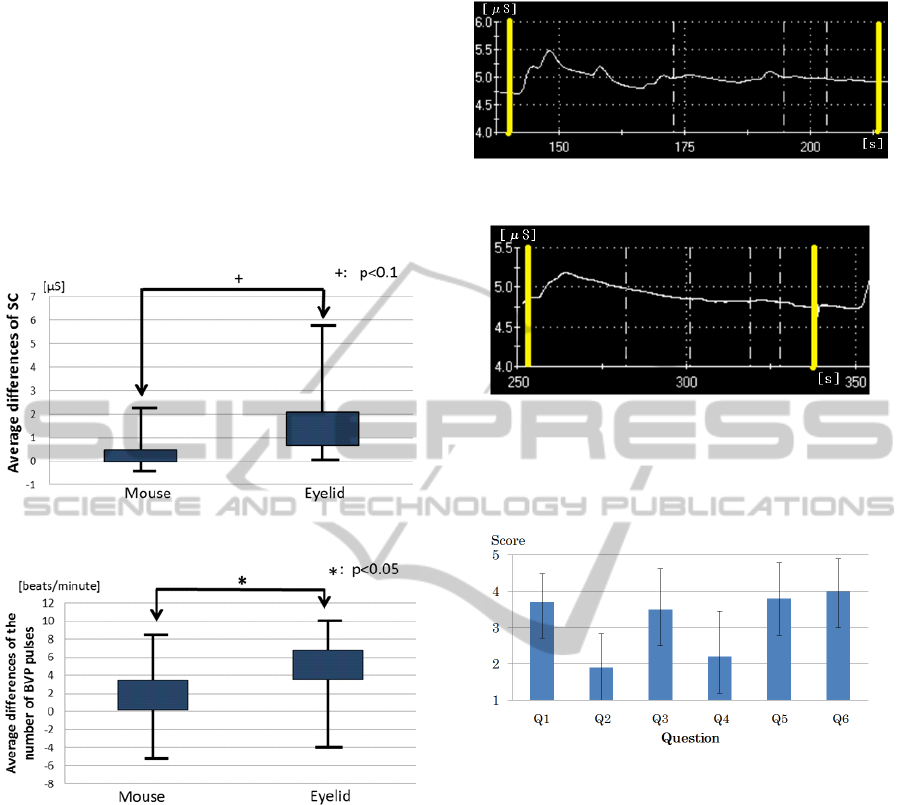

4.4 Results

Ten subjects (men aged between 21 and 25 years) participated in the experiment. Figure 7 shows a box plot of the average differences in SC between the practice (on the ground) and the experiment (at the top of the building) for each of the input methods (mouse input and eyelid movement), and Figure 8 shows a box plot of the average differences in the number of BVP pulses between the practice and the experiment for each of the input methods. On the basis of the results, we observe a significant difference in SC between the input methods (ANOVA, F = 5.130, df = 1/9, p < 0.1). Therefore, we find that the SC with the eyelid-movement input method is significantly higher than that with the mouse input method. In addition, there is a significant difference in the BVP between the input methods (ANOVA, F = 3.943, df = 1/9, p < 0.05). Therefore, we also find that the number of BVP pulses with the eyelid-movement input method is significantly higher than that with the mouse input method.
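The reported degrees of freedom (1 and 9) are consistent with a one-way repeated-measures ANOVA over two conditions and ten subjects, although the paper does not name the exact procedure. With only two conditions, that F statistic equals the square of the paired t statistic, which the following sketch computes. The function name is an assumption, and the values in the usage note are hypothetical, not the experimental data.

```python
from statistics import mean, variance


def repeated_measures_f(condition_a, condition_b):
    """F statistic for two within-subject conditions.

    condition_a[i] and condition_b[i] must come from the same subject.
    Returns (F, (df_effect, df_error)).
    """
    if len(condition_a) != len(condition_b):
        raise ValueError("both conditions need one value per subject")
    diffs = [a - b for a, b in zip(condition_a, condition_b)]
    n = len(diffs)
    if n < 2:
        raise ValueError("at least two subjects are required")
    spread = variance(diffs)  # unbiased variance of per-subject differences
    if spread == 0:
        raise ValueError("F is undefined when all differences are equal")
    # For two conditions, F = t^2 = n * mean(d)^2 / var(d).
    f_value = n * mean(diffs) ** 2 / spread
    return f_value, (1, n - 1)
```

For the illustrative inputs `[2, 3, 4, 5]` and `[1, 1, 2, 2]`, the per-subject differences are 1, 2, 2, 3, giving F = 24 with 1 and 3 degrees of freedom.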

Figure 7: Average differences of SC of the input methods.

Figure 8: Average differences of the number of BVP pulses

of the input methods.

As observed from the typical SC signals for both methods (Figures 9 and 10), the SC values of most of the subjects increased soon after the subject saw the view from the great height. Then, the values gradually decreased. However, with the eyelid-movement input method, there were typically some waves in the SC signals (Figure 9). The solid vertical lines in Figure 9 and Figure 10 show the start and finish of the task.

Figure 11 shows the averages and the standard deviations of the answers to the questionnaire (Table 1). From the results, we find that the subjects considered the eyelid-movement input method to be more immersive than the mouse input method (Q1). They also considered the eyelid-movement input method to be intuitive (Q3) because they were only marginally aware of the boundary between the real and virtual worlds (Q2), and they would like to use it again (Q6). However, they observed that it was not as controllable as intended (Q4), and they felt tired from using it (Q5).

Figure 9: Typical SC signals in eyelid-movement input method.

Figure 10: Typical SC signals in mouse input method.

Figure 11: Average and SD scores of the answers.

4.5 Discussion

Based on the results, our proposed eyelid-movement input method improves the users’ immersive experience more effectively than the mouse input method. We find that the eyelid-movement input method significantly heightens the fear that accompanies the users’ immersive experience, as shown by the increases in SC and in the number of BVP pulses. Additionally, the differences in the physiological data between the eyelid-movement input method and the mouse input method show the same tendency as the results of the questionnaire. We think that the physiological data show not only the differences but also the time-series variation in the subjects’ sense of fear. Therefore, we consider that the waves in the SC signal with the eyelid-movement input method (Figure 9) show noticeable changes in the sense of fear during the physical and intuitive operation. We also consider that this is because the eyelid-movement input is associated with the act of looking at something, whereas the mouse input is not associated with any such act. Therefore, the subjects performed the task intuitively, without being aware of the boundary between the real and virtual worlds, and immersed themselves in the virtual world.

On the other hand, based on the results of the questionnaire, the eyelid-movement input sometimes did not perform as the users intended, unlike the mouse input, and imposed a physical load on the subjects. We consider that this is because the system failed to recognize some eyelid movements, whereas it recognized all the mouse clicks correctly. In fact, some subjects experienced cognitive loads because of recognition errors between the zoom-in and zoom-out movements, and some subjects experienced physical loads because a user of the eyelid-movement input method was required to maintain a state, such as holding the eyelids wide open. In addition, the average time to complete the task was 83 s with the mouse input, which was shorter than the 160 s with the eyelid-movement input. For these reasons, we have improved the recognition algorithm to recognize each eyelid movement more accurately, and we have enhanced the system to recognize not only the three types of eyelid movements but also multiple degrees of eyelid state based on the present three states.

5 IMPROVEMENT

We improved the recognition algorithm by adopting an algorithm that removes outliers from the EMG data, because there had been a lot of variation in the EMG data. We use the Smirnov-Grubbs test, which detects outliers (p < 0.05) that do not fit a normal distribution of the recorded EMG data, removes them, and repeats the detection and removal until no further outlier is detected. Expression (1) shows the test statistic of the Smirnov-Grubbs test.

$$T = \frac{|X_{\max} - \bar{X}|}{\sqrt{U}} \qquad (1)$$

where $\bar{X}$ is the mean of the data, $X_{\max}$ is the value farthest from $\bar{X}$, $U$ is the dispersion (variance) of the data, and $T$ is the test statistic. If $T$ exceeds the critical value for the chosen significance level, $X_{\max}$ is judged to be an outlier.
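The repeated detect-and-remove loop can be sketched as below. This is an illustration under assumptions rather than the authors' code: the dispersion U is taken to be the unbiased sample variance, and the critical value is supplied by the caller as a function of the current sample size (for example, looked up in a published Grubbs table for the 5 % level), because the paper does not list the values it used. The names `remove_outliers` and `critical_value` are hypothetical.

```python
from statistics import mean, variance


def remove_outliers(samples, critical_value):
    """Iteratively drop the most extreme value while it tests as an outlier.

    samples: rectified EMG values.
    critical_value: callable mapping the current sample size n to the
        critical value of the test statistic (caller-supplied assumption).
    """
    data = list(samples)
    while len(data) >= 3:  # the test needs at least three values
        center = mean(data)
        spread = variance(data)  # assumption: U is the unbiased variance
        if spread == 0:
            break  # all values identical, nothing can be an outlier
        suspect = max(data, key=lambda v: abs(v - center))
        t_stat = abs(suspect - center) / spread ** 0.5
        if t_stat <= critical_value(len(data)):
            break  # most extreme value is not an outlier, so stop
        data.remove(suspect)
    return data
```

Passing the critical value as a callable keeps the sketch independent of any particular table or statistics library; the thresholds would then be computed from the cleaned data rather than the raw recordings.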

We conducted an experiment to verify the recognition rate of the improved system. Ten subjects (eight men and two women, aged between 21 and 22 years) participated in the experiment. They performed each of the three types of eyelid movements ten times in a random order (within-subjects design) with both the conventional system and the improved system.

Table 2: Recognition rates of the three states of the conventional system (%).

| | Neutral | Staring | Widely-Opening |
|---|---|---|---|
| Average | 82.0 | 78.0 | 84.0 |
| SD | 14.0 | 24.4 | 20.0 |

Table 3: Recognition rates of the three states of the improved system (%).

| | Neutral | Staring | Widely-Opening |
|---|---|---|---|
| Average | 90.0 | 90.0 | 92.0 |
| SD | 7.7 | 8.9 | 8.7 |

Table 2 and Table 3 show the recognition rates of each movement for each system. The average recognition rate of the conventional system is approximately 80 % and that of the improved system is approximately 90 %. Additionally, the standard deviation for the improved system is smaller than that for the conventional system. We therefore find that the improved system is more accurate than the conventional system.

Moreover, we increased the number of recognized eyelid-movement states from three to five: strongly staring, softly staring, neutral, softly opening, and widely-opening (see Figure 12). We verified the recognition rates of the five movements in the same way as in the experiment described above; Table 4 shows the recognition rates of the five types (within-subjects design) for ten subjects (eight men and two women, aged between 21 and 22 years). The rates for softly staring and softly opening are lower than the other rates.

Figure 12: Five states of eyelid movements: (a) strongly staring, (b) softly staring, (c) neutral, (d) softly opening, and (e) widely-opening.

Table 4: Recognition rates of the five states of the improved system (%).

| | Neutral | Strongly-staring | Softly-staring | Widely-Opening | Softly-opening |
|---|---|---|---|---|---|
| Average | 93.0 | 91.0 | 81.0 | 94.0 | 74.0 |
| SD | 6.4 | 7.0 | 9.4 | 6.6 | 6.6 |

6 CONCLUSIONS

We conducted an experiment to compare our proposed method with the mouse input method by measuring physiological data related to the subjects’ fear of an open high place in the virtual environment. On the basis of the results, we find that the eyelid-movement input method improves the user’s immersive impression significantly more than the mouse input method. Therefore, we conclude that it is important to integrate the input method into the immersive virtual environment, because the user can then use it intuitively and does not have to be concerned about the seam between the real and the virtual worlds. We also conclude that physiological data are useful for continuously and objectively evaluating the usability of an input method.

For future work, we plan to further improve

the user’s immersive experience by applying real-

world embodiment movements and emotions esti-

mated from a user’s physiological data, such as EMG,

SC, BVP, brain waves and cerebral blood flow.

REFERENCES

Agustin, J. S., Hansen, J. P., Hansen, D. W., and Skovsgaard, H. H. T. (2009). Low-cost gaze pointing and EMG clicking. CHI Extended Abstracts 2009, pages 3247–3252.

Asai, K., Osawa, N., Sugimoto, Y., and Tanaka, Y. (2002).

Viewpoint motion control by body position in im-

mersive projection display. Proc. of SAC2002, pages

1074–1079.

Costanza, E., Inverso, S. A., Allen, R., and Maes, P. (2007).

Intimate interfaces in action: assessing the usability

and subtlety of emg-based motionless gestures. Proc.

of CHI 2007, pages 819–828.

Gibert, G., Pruzinec, M., Schultz, T., and Stevens, C.

(2009). Enhancement of human computer interac-

tion with facial electromyographic sensors. Proc. of

OZCHI 2009, pages 421–424.

Haffegee, A., Alexandrov, V. N., and Barrow, R. (2007).

Eye tracking and gaze vector calculation within im-

mersive virtual environments. Proc. of VRSTACM,

pages 225–226.

Jr., F. C., Nguyen, D., Guerra-Filho, G., and Huber, M.

(2010). Identification of static and dynamic muscle

activation patterns for intuitive human/computer inter-

faces. Proc. of PETRA 2010.

Kikuya, K., Hara, S., Shinzato, Y., Ijichi, K., Abe, H., Mat-

suno, T., Shikata, K., and Ohshima, T. (2011). Hyak-

ki men: Development of a mixed reality attraction

with gestural user interface. INTERACTION 2011,

2011(3):469–472.

Lin, T., Hu, W., Omata, M., and Imamiya, A. (2005). Do

physiological data relate to traditional usability in-

dexes? Proc. of OZCHI ’05.

Manabe, T., Tamura, H., and Tanno, K. (2009). The control

experiments of the electric wheelchair using s-emg of

facial muscles. Proc. FIT2009, pages 541–542.

Manders, C., Farbiz, F., Tang, K. Y., Yuan, M., Chong, B.,

and Chua, G. G. (2008). Interacting with 3d objects

in a virtual environment using an intuitive gesture sys-

tem. Proc. of VRCAI08.

Meehan, M., Insko, B., Whitton, M., and Brooks, F. P.

(2002). Physiological measures of presence in stress-

ful virtual environments. ACM Trans. Graph. In SIG-

GRAPH ’02, 21(3):645–652.

Miyashita, H., Hayashi, M., and ichi Okada, K. (2008).

Implementation of eog-based gaze estimation in hmd

with head-tracker. Proc. of ICAT2008, pages 20–27.

Nagahara, H., Yagi, Y., and Yachida, M. (2005). A

wide-field-of-view catadioptrical head-mounted dis-

play. Electronics and Communications in Japan, J88-

D-II(1):95–104.

Oshita, M. (2006). Motion-capture-based avatar control

framework in third-person view virtual environments.

Proc. of ACE ’06.

Ries, B., Interrante, V., Kaeding, M., and Anderson, L.

(2008). The effect of self-embodiment on distance

perception in immersive virtual environments. Proc.

of VRST 2008, 15(5):167–170.

Soleymani, M., Chanel, G., Kierkels, J. J. M., and Pun,

T. (2008). Affective ranking of movie scenes using

physiological signals and content analysis. Proc. of

MSACM, pages 32–39.

Steinicke, F., Bruder, G., Hinrichs, K. H., and Steed, A.

(2009). Presence-enhancing real walking user inter-

face for first-person video games. Proc. of ACM SIG-

GRAPH Symposium on Video Games, pages 111–118.

TheAuthors (2011). *****. *****.

Touyama, H., Hirota, K., and Hirose, M. (2006). Imple-

mentation of electromyogram interface in cabin im-

mersive multiscreen display. Proc. of IEEE Virtual

Reality 2006, pages 273–276.

Valstar, M., Pantic, M., Ambadar, Z., and Cohn, J. F. (2006).

Spontaneous vs. posed facial behavior: Automatic

analysis of brow actions. Proc. of ICMI ’06, pages

162–170.
