Effects of Stereoscopy on a Human-Computer Interface for Network Centric Operations

Alessandro Zocco¹, Davide Cannone¹ and Lucio Tommaso De Paolis²

¹ Product Innovation & Advanced EW Solutions, Elettronica S.p.A., Via Tiburtina Valeria Km 13.700, Rome, Italy

² Department of Engineering for Innovation, University of Salento, Lecce, Italy

Keywords: Stereoscopic Vision, Human Computer Interface, Network Centric Operations, Command and Control System.

Abstract: Network Centric Warfare relies on a network of geographically distributed forces that increases the content, quality and timeliness of the information exchanged and builds up a shared situational awareness. When this flow is displayed to an operator, there is a risk of reaching a state of information overload. To avoid this situation, new ways to conceive the interface between human and computer must be evaluated. This paper proposes an experimental stereoscopic 3D synthetic environment aimed at improving the understanding of modern battle spaces. This facility is part of the LOKI Project, a Command and Control system for Electronic Warfare developed by Elettronica S.p.A. We discuss the technical details of the system and describe a preliminary usability study. The results of this first evaluation are positive and encourage continuing research into Human-Computer Interaction for military applications.

1 INTRODUCTION

Network Centric Warfare (NCW) (U.S. Navy, 1995; Braulinger, 2005), and consequently Network Centric Operations (NCO), rely on a network of geographically distributed forces. The network, directly connected to the platforms by means of sensing, commanding, controlling and engaging systems, increases the content, quality and timeliness of the information exchanged between nodes, enhancing situational awareness. In this context it is more appropriate to speak of shared situational awareness, because all the network elements have access to the same up-to-date information.

Figure 1 shows an example of a Network Centric scenario. Two platforms (e.g., ships) can each sense a limited area, so each has only a limited awareness of its own proximity (a). Each platform sends the data it collects (e.g., electromagnetic tracks), depicted in (a) as dotted arrows starting from the ships, to a specific platform, known as Command and Control (C2), whose task is to fuse the data, manually or automatically. The C2 then sends the fused data, depicted in (b) as solid arrows starting from the C2, back to the platforms, which thereby share the same enhanced situational awareness (b). This process is repeated continuously during the military operation.

Figure 1: Example of a Network Centric scenario. In (a), each naval platform collects local data and sends them to the C2 platform. In (b), the C2 platform shares the fused data with the naval platforms, building up their shared situational awareness.
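The exchange illustrated in Figure 1 can be sketched in a few lines of Java. This is only an illustration under stated assumptions, not the LOKI implementation: the `Track` and `C2Node` classes are hypothetical, and the fusion rule (averaging the positions reported for the same emitter identifier) is a deliberately simple stand-in for a real data fusion process.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

/** Hypothetical track report: one platform's estimate of an emitter position. */
final class Track {
    final String emitterId;
    final double x;
    final double y;

    Track(String emitterId, double x, double y) {
        this.emitterId = emitterId;
        this.x = x;
        this.y = y;
    }
}

/** Hypothetical C2 node: collects local tracks and produces one fused picture. */
final class C2Node {
    private final List<Track> received = new ArrayList<>();

    /** Step (a): a platform sends its locally sensed tracks to the C2. */
    void report(List<Track> localTracks) {
        received.addAll(localTracks);
    }

    /** Step (b): fuse the reports per emitter (assumed rule: plain averaging). */
    Map<String, Track> fuse() {
        Map<String, double[]> sums = new LinkedHashMap<>(); // {sumX, sumY, count}
        for (Track t : received) {
            double[] s = sums.computeIfAbsent(t.emitterId, k -> new double[3]);
            s[0] += t.x;
            s[1] += t.y;
            s[2] += 1;
        }
        Map<String, Track> fused = new LinkedHashMap<>();
        for (Map.Entry<String, double[]> e : sums.entrySet()) {
            double[] s = e.getValue();
            fused.put(e.getKey(), new Track(e.getKey(), s[0] / s[2], s[1] / s[2]));
        }
        return fused;
    }
}
```

Every platform that receives the map returned by `fuse()` holds the same picture, which is the sense in which the situational awareness is shared.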

As described in the example, the C2 holds an important role in these networks; it is the system devoted to the decision-making process for the operational aspects of warfare. Such systems are operated by commanders by means of a Human Computer Interface (HCI), both to access the information gained by the other platforms, which act like sensors in the network, and to make decisions (e.g., sharing the commander’s perception of the situation, manifesting command decisions) (Alberts, Garstka and Stein, 1999).

Zocco A., Cannone D. and Tommaso De Paolis L. Effects of Stereoscopy on a Human-Computer Interface for Network Centric Operations. DOI: 10.5220/0004850902490255. In Proceedings of the 9th International Conference on Computer Vision Theory and Applications (VISAPP-2014), pages 249-255. ISBN: 978-989-758-009-3. Copyright © 2014 SCITEPRESS (Science and Technology Publications, Lda.)

As the number of commanded platforms increases, a C2 operator can easily reach a state of information overload, in which the information flow rate exceeds the operator’s processing rate; this can produce a wrong mental model of the mission scenario and, consequently, wrong decisions that could lead to catastrophic situations (Shanker and Richtel, 2011).

Thus, the HCI becomes a key factor when

developing the architecture of a C2.

The focus of this paper is on the display issues of an HCI and on how it can be improved in order to reduce information overload and enhance the usability of information. In particular, we evaluate the usability of an immersive synthetic environment for understanding an NCW scenario.

This research is part of the LOKI Project, a Command and Control (C2) system for Electronic Warfare (EW) developed by Elettronica S.p.A. (ELT). Although this work focuses on warfare, we believe that any time-critical network operations system operated by a human (e.g., the HCI of a network Intrusion Detection System) can benefit from this research (Cox, Eick and He, 1996).

2 RELATED WORKS

Gaining a detailed understanding of the modern

battle space is essential for the success of any

military operation.

In these applications, the main function of a human-computer interface is to display the current situation and the relevant information and intentions to the operator (e.g., location of own forces, reconnoitered opponent troops and facilities, commands and orders from superiors, platforms’ status); this information is generally displayed on scaled maps showing the regional properties of the mission area.

Several research groups have focused their

activities on the design and development of new

display paradigms and technologies for advanced

information visualization.

Dragon (Julier, et al., 1999) was one of the first research projects to formalize the requirements of systems that need to visualize a huge amount of information on tactical maps in real time. It realized a real-time situational awareness virtual environment for battlefield visualization, with an architecture composed of interaction devices, display platforms and information sources.

Other solutions have been proposed by Pettersson, Spak and Seipel (2004) and by Alexander, Renkewitz and Conradi (2006). In the former, the visualization environment is based on the projection of four independent stereoscopic image pairs at full resolution onto a custom-designed optical screen; this system suffers from apparent crosstalk between stereo image pairs. The latter presents some examples of Augmented Reality and Virtual Reality technologies, showing their benefits and flaws, together with experimental results on visibility and interactivity performance.

Kapler and Wright (2005) have developed a novel visualization technique for displaying and tracking events, objects and activities within a combined temporal and geospatial display. The events are represented within an X, Y, T coordinate space, in which the X-Y plane shows flat geographic space and the T-axis represents time into the future and the past. This technique is not adequate for an immersive 3D virtual environment: because one axis describes the evolution over time, the spatial representation is constrained to a flat surface, and altitude, which is important information in avionic scenarios, cannot be displayed. However, it is worth noting that separating geographical from logical information (e.g., health of a platform) can enhance the usability of the system.

3 STEREOSCOPIC VISION

Stereoscopic vision can improve the understanding of a modern battle space by providing depth perception and enhancing the level of realism and the sense of presence.

Different technologies have been developed for

generating 3D stereoscopic visualization. Some of

these are related to entertainment such as cinema

(Lipton, 2007) and video games (Mahoney,

Oikonomou and Wilson, 2011), as well as to other

serious/work-related applications such as medical

interventions and telerobotics (Dey, et al., 2002;

Livatino, et al., 2010).

Stereoscopic visualization, or simply stereo, can be active or passive (Cyganek and Siebert, 2009). In short, passive stereo is a solution in which the light reaching the left and right eyes is filtered differently. The separation can be obtained in various ways; the best known is colour separation, used in cinemas in the 1950s. Nowadays, virtual reality applications mostly use linear or circular polarization. Circular polarization gives the viewer more freedom than linear polarization to move the head in relation to the image, avoiding effects that may degrade the stereo perception.

Passive stereo only needs a pair of low-cost LCD projectors and a pair of light cardboard glasses, both with built-in polarization filters, and a good reflecting screen that ensures that the light beam is not reflected omnidirectionally but straight back to the viewer.

Active stereo requires glasses synchronized with the projectors, so that the right and left lenses are blacked out alternately at the same rate as the images projected on the screen for the left and right eyes. Active stereo is generally more expensive than passive stereo because it requires a 120 Hz image frequency, for which ordinary projectors are usually not built.
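The 120 Hz requirement follows from the frame-sequential scheme: left and right images alternate, so each eye receives every second frame. The short Java sketch below works through that arithmetic; the class and method names are illustrative, and the even/odd frame assignment is an assumption made for the example.

```java
/** Illustrative helper for frame-sequential (active) stereo timing. */
final class ActiveStereoTiming {

    /** Each eye sees every second frame, so it gets half of the display rate. */
    static double perEyeRateHz(double displayRateHz) {
        return displayRateHz / 2.0;
    }

    /** Duration of a single displayed frame in milliseconds (upper bound on shutter-open time). */
    static double frameDurationMillis(double displayRateHz) {
        return 1000.0 / displayRateHz;
    }

    /** Eye served by a given frame index, assuming even frames carry the left image. */
    static String eyeForFrame(long frameIndex) {
        return (frameIndex % 2 == 0) ? "LEFT" : "RIGHT";
    }
}
```

At a 120 Hz display rate each eye is therefore refreshed 60 times per second, which is why a conventional 60 Hz projector would leave each eye with only 30 images per second.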

The stereoscopic visualization artificially

reproduces the mechanisms that govern the

binocular vision and it is closer to the way we

naturally see the world (Drascic, 1991).

Stereoscopy leads to several improvements: comprehension and appreciation of presented visual inputs, perception of structure in visually complex scenes, spatial localization, motion judgment, concentration on different depth planes and perception of material surfaces.

However, stereo vision can be hard to get right at the first attempt, because the hardware can cause crosstalk, misalignment and image distortion (due to lenses, displays or projectors); all of these can cause eye strain, double image perception, depth distortion and look-around distortion (typical of head-tracked displays). These drawbacks have prevented a wide adoption of stereoscopic visualization (Sexton and Surman, 1999).

4 PROPOSED INVESTIGATION

The main goal of the research presented in this paper

is the design and implementation of a visualization

system for NCW scenarios (e.g., displaying symbols

and logical information on tactical maps) by creating

a stereoscopic 3D synthetic environment aimed at a

total immersion of the operator. This facility is part

of the LOKI Project, a C2 system for Electronic

Warfare.

4.1 High-level Architecture of LOKI

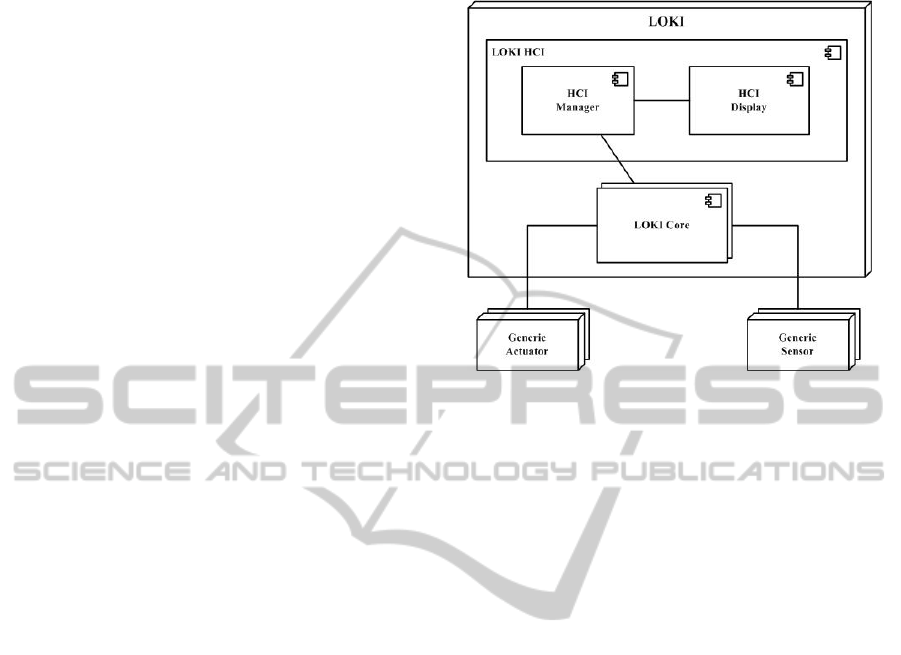

Figure 2 shows the high-level architectural view of

the LOKI system.

Figure 2: LOKI architecture in the large.

The LOKI Core component continuously executes an advanced multi-sensor data fusion process on the data retrieved from cooperating systems. Once these data are properly fused, the system is capable of inferring important new information, such as a better localization of emitters and a countermeasures strategy. This information is transferred to the LOKI HCI using a communication middleware based on the Data Distribution Service (DDS) paradigm (OMG, 2007).

The HCI Manager component provides a persistence mechanism to decouple the presentation layer from the core application logic. It is responsible for the communication with the core (i.e., receiving input data from the core and sending operator commands to the core) and for the translation of received data into a model understandable by the presentation layer.
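The translation role described above can be sketched as follows. Everything in this example is hypothetical: the message fields, the view-model fields, the threat scale and the colour mapping are assumptions chosen for illustration, and the DDS transport is left out entirely.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Locale;

/** Hypothetical message as it might arrive from the core (all fields are assumptions). */
final class CoreEmitterMessage {
    final String id;
    final double latitudeDeg;
    final double longitudeDeg;
    final double altitudeM;
    final int threatLevel; // assumed scale: 0 = none, 1 = medium, 2 = high

    CoreEmitterMessage(String id, double latitudeDeg, double longitudeDeg,
                       double altitudeM, int threatLevel) {
        this.id = id;
        this.latitudeDeg = latitudeDeg;
        this.longitudeDeg = longitudeDeg;
        this.altitudeM = altitudeM;
        this.threatLevel = threatLevel;
    }
}

/** Hypothetical presentation model: only what the display needs in order to draw. */
final class PlatformViewModel {
    final String label;
    final double latitudeDeg;
    final double longitudeDeg;
    final boolean airborne;   // would decide whether an altitude curtain is drawn
    final String alertColour;

    PlatformViewModel(String label, double latitudeDeg, double longitudeDeg,
                      boolean airborne, String alertColour) {
        this.label = label;
        this.latitudeDeg = latitudeDeg;
        this.longitudeDeg = longitudeDeg;
        this.airborne = airborne;
        this.alertColour = alertColour;
    }
}

/** Sketch of the manager's two directions: data towards the display, commands towards the core. */
final class HciManagerSketch {
    private final List<String> outgoingCommands = new ArrayList<>();

    /** Core data is translated into a model the presentation layer understands. */
    PlatformViewModel toViewModel(CoreEmitterMessage m) {
        String colour = m.threatLevel >= 2 ? "RED" : (m.threatLevel == 1 ? "YELLOW" : "GREEN");
        String label = String.format(Locale.ROOT, "%s (%.0f m)", m.id, m.altitudeM);
        return new PlatformViewModel(label, m.latitudeDeg, m.longitudeDeg,
                m.altitudeM > 0.0, colour);
    }

    /** Operator commands are queued for delivery to the core. */
    void sendCommand(String command) {
        outgoingCommands.add(command);
    }

    List<String> pendingCommands() {
        return new ArrayList<>(outgoingCommands);
    }
}
```

Keeping the two model classes separate is what allows the display code to change without touching the core message format, and vice versa.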

The HCI Display component contains the

elements that implement and display the User

Interface (UI) and manage user interaction. It

provides a high definition view of a realistic

geographic environment. Platforms are positioned

on the scene according to their geographic

coordinates and are represented according to the

Common Warfighting Symbology MIL-STD-2525C

standard (Department of Defense, 2008).

4.2 Design Choices for HCI

The HCI has been designed with high modularity by applying UI Design Patterns (UIDP). Using these patterns helps to ensure that key human factors concepts are quickly and correctly implemented within the code of advanced visual user interfaces (Feng, Liu and Wan, 2006). In addition, the structural patterns Decorator and Composite were used: the former allows properties (e.g., borders around a window) or behaviours (e.g., scrolling) to be added dynamically to any component of the interface; the latter allows interfaces to be composed as tree structures that represent part-whole hierarchies, so that single objects and compositions can be handled uniformly (Gamma, et al., 2005).
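A minimal Java sketch of how the two structural patterns fit together is given below. The class names (`UiComponent`, `TextLabel`, `GroupPanel`, `BorderDecorator`) are hypothetical and rendering is reduced to building a string; the actual LOKI classes are not described in the paper.

```java
import java.util.ArrayList;
import java.util.List;

/** Common interface shared by every element of the (hypothetical) interface tree. */
interface UiComponent {
    String render();
}

/** Leaf: a single widget. */
final class TextLabel implements UiComponent {
    private final String text;

    TextLabel(String text) {
        this.text = text;
    }

    @Override
    public String render() {
        return text;
    }
}

/** Composite: a container is itself a component, so trees nest uniformly. */
final class GroupPanel implements UiComponent {
    private final List<UiComponent> children = new ArrayList<>();

    GroupPanel add(UiComponent child) {
        children.add(child);
        return this;
    }

    @Override
    public String render() {
        StringBuilder sb = new StringBuilder("{");
        for (int i = 0; i < children.size(); i++) {
            if (i > 0) {
                sb.append(", ");
            }
            sb.append(children.get(i).render());
        }
        return sb.append("}").toString();
    }
}

/** Decorator: wraps any component and adds a border without changing its class. */
final class BorderDecorator implements UiComponent {
    private final UiComponent inner;

    BorderDecorator(UiComponent inner) {
        this.inner = inner;
    }

    @Override
    public String render() {
        return "[" + inner.render() + "]";
    }
}
```

Because all three classes implement the same interface, a border can be applied to a single label or to a whole panel, and client code that calls `render()` does not need to know which of the two it is handling.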

The software has been developed in Java, using an OpenGL binding to talk to the OpenGL runtime installed on the underlying operating system.

Figure 3: Visualization of EW entities with their

geographic location.

Figure 3 shows a sample of the interface, which is built in two layers, as inspired by the research of Kapler and Wright (2005). A 3D terrain map, in the bottom part of the screen, shows both the features of the selected terrain and the geographic data of the elements of the scenario (e.g., real position, past track); for elements that are not on the ground, a transparent curtain indicates their altitude. A parallel layer displayed above the map hosts the so-called “logical view” of the scenario and represents other relevant non-geographic information (e.g., health status, lethality); it can also be used to visualize connections between the elements and to show elements that are outside the area that the operator is currently viewing in the geographic layer below. The geographical reference is maintained through connections between the two layers, laid out by a force-based algorithm that avoids as many line crossings as possible. This separation, together with the use of colours to show different alert levels, lets the operator focus on geographic locations without overloading the terrain with symbols and text.
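The paper does not detail the force algorithm, so the following Java sketch shows only one plausible reading of it, a simple force-directed placement. It assumes that each logical-layer node is pulled by a spring towards the point directly above its geographic anchor (which keeps connectors short and close to vertical, so they rarely cross) and is pushed away from any other node closer than a minimum distance. The constants and the class name are assumptions chosen for the example.

```java
/** Sketch of a force-directed placement for logical-layer nodes (assumed force rules). */
final class ForceLayoutSketch {
    static final double SPRING = 0.1;    // pull towards the point above the anchor
    static final double REPULSION = 1.0; // push between nodes closer than minDist
    static final double STEP = 0.2;      // integration step, small enough for a few nodes

    /** anchors[i] = {x, y} of the geographic position; returns the relaxed {x, y} per node. */
    static double[][] relax(double[][] anchors, double minDist, int iterations) {
        int n = anchors.length;
        double[][] pos = new double[n][2];
        for (int i = 0; i < n; i++) {
            pos[i][0] = anchors[i][0];
            pos[i][1] = anchors[i][1];
        }
        for (int it = 0; it < iterations; it++) {
            double[][] force = new double[n][2];
            for (int i = 0; i < n; i++) {
                // Spring: keeps the node close to its geographic reference.
                force[i][0] += SPRING * (anchors[i][0] - pos[i][0]);
                force[i][1] += SPRING * (anchors[i][1] - pos[i][1]);
                for (int j = i + 1; j < n; j++) {
                    double dx = pos[j][0] - pos[i][0];
                    double dy = pos[j][1] - pos[i][1];
                    double dist = Math.hypot(dx, dy);
                    if (dist >= minDist) {
                        continue; // far enough apart, no repulsion
                    }
                    // Coincident nodes get an arbitrary direction so they can separate.
                    double ux = dist > 0 ? dx / dist : 1.0;
                    double uy = dist > 0 ? dy / dist : 0.0;
                    double push = REPULSION * (minDist - dist);
                    force[i][0] -= push * ux;
                    force[i][1] -= push * uy;
                    force[j][0] += push * ux;
                    force[j][1] += push * uy;
                }
            }
            for (int i = 0; i < n; i++) {
                pos[i][0] += STEP * force[i][0];
                pos[i][1] += STEP * force[i][1];
            }
        }
        return pos;
    }
}
```

With these rules, isolated nodes stay exactly above their anchors, while crowded nodes spread out until spring and repulsion balance. A production layout would also need an explicit crossing count and a stability check on the step size.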

4.3 Hardware Setup

The stereo vision setup includes:

- a PC equipped with an Nvidia Quadro graphics card;

- a Digital Light Processing (DLP) projector with a WUXGA (1920x1200 px) resolution and a brightness of 7000 ANSI lumens;

- special eyewear comprising two infrared-controlled Liquid Crystal Display (LCD) light shutters working in synchronization with the projector (Figure 4).

Figure 4: Active 3D display system.

When the projector displays the left eye image, the right eye shutter of the active stereo eyewear is closed, and vice versa. The projector refreshes fast enough (greater than 120 Hz) that the viewer does not perceive flicker between alternate frames. We chose an active stereo system because more light is projected to each eye and therefore the 3D image appears brighter. We adjusted the stereo parameters (i.e., separation and convergence) in order to obtain a negative screen parallax, so that, when the stereo pair is viewed through the shutter glasses, the 3D objects of the scene appear in front of the screen (Figure 5).

Figure 5: Binocular fusion of the stereo pairs.
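The sign convention for screen parallax can be made concrete with the standard similar-triangles geometry. In the Java sketch below, the eye separation, the distance of the zero-parallax (convergence) plane and the object distance are generic symbols introduced for illustration; they are not the values used in the LOKI setup, and the numbers in the usage note are arbitrary.

```java
/** Illustrative screen-parallax geometry; parameter names are assumptions, not LOKI settings. */
final class ScreenParallax {

    /**
     * Horizontal parallax on the screen for a point at objectDistance from the viewer,
     * with the zero-parallax plane (the screen) at screenDistance.
     * All arguments use the same length unit.
     */
    static double parallax(double eyeSeparation, double screenDistance, double objectDistance) {
        if (objectDistance <= 0.0) {
            throw new IllegalArgumentException("objectDistance must be positive");
        }
        return eyeSeparation * (objectDistance - screenDistance) / objectDistance;
    }

    /** Negative parallax means the point is perceived in front of the screen. */
    static boolean appearsInFrontOfScreen(double eyeSeparation, double screenDistance,
                                          double objectDistance) {
        return parallax(eyeSeparation, screenDistance, objectDistance) < 0.0;
    }
}
```

For example, with an eye separation of 0.065 m and a screen 3 m away, a point rendered 1.5 m from the viewer has a parallax equal to minus the eye separation and is perceived in front of the screen, a point at 3 m has zero parallax, and a point at 6 m has a positive parallax of half the eye separation.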

4.4 Evaluation Setup

The preliminary evaluation study took place at the facilities of ELT in Rome and involved 12 users. The case study required around half an hour per participant.

To evaluate the proposal, we developed a

realistic scenario based on a coastal sea surveillance

task. The user supervises, using both mono and

stereo visualization, a relatively big area of sea, the

Strait of Sicily, where there is a large volume of

traffic, generated by different types of vessels. The

operator is able to see the trajectory generated by

each track and intelligence regarding each vessel.

The simulator in which the scenario is developed and executed integrates Commercial-Off-The-Shelf (COTS) products with proprietary software and is based on the principles of distributed and live simulation (Sindico, et al., 2012).

The following qualitative data were collected

using questionnaires and interviews: the realism of

the visual feedback, the sense of presence, the depth

impression and the user’s viewing comfort.

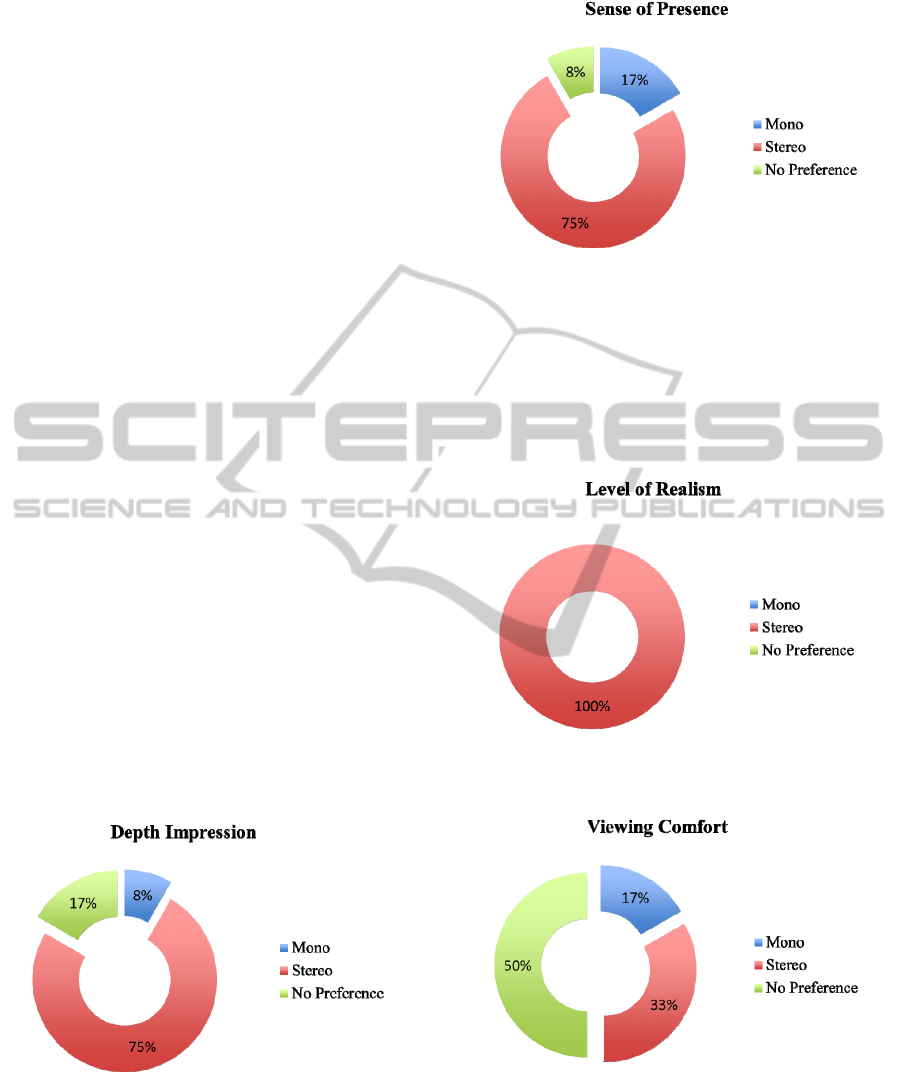

4.5 Results

Most of the participants had no doubt that the depth impression (see Figure 6) and the sense of presence (see Figure 7) are higher with stereo visualization. In complex electronic warfare scenarios, where monocular depth cues are ambiguous, stereo viewing enhances spatial judgments: it makes it possible to distinguish very closely spaced icons on the screen (representing platforms with installed active emitters).

Figure 6: Preferences about depth impression by

participants of the preliminary evaluation study.

Figure 7: Preferences about sense of presence by participants of the preliminary evaluation study.

All users found that stereo visualization provides more realism than mono viewing (see Figure 8). The image resulting from the fusion of the stereoscopic pair is very clear and natural looking because surface properties such as luster, scintillation, and sheen are different in luminance and colour between the left and right retinal images. This allows the viewer to perceive the differences between successive frames (e.g., platforms’ positions) at a glance.

Figure 8: Preferences about level of realism by

participants of the preliminary evaluation study.

Figure 9: Preferences about viewing comfort by

participants of the preliminary evaluation study.

There is no significant difference in viewing comfort between stereo and mono visualization (see Figure 9). This result, obtained by reducing the amount of parallax within each stereo pair, contradicts the general assumption that stereo viewing causes problems such as visual fatigue and headache.

5 CONCLUSIONS AND FUTURE

WORK

In the design and development of the C2 systems for

NCO, a key element is the HCI. Bad assumptions

may lead to bad design choices; those in turn may

lead the operator to an information overload state.

To avoid this situation, new ways to conceive HCI

must be explored.

In this paper we evaluated the use of a

stereoscopic 3D synthetic environment, aiming at a

total immersion of the operator.

Preliminary evaluation shows the relevant role

played by stereo visualization and its advantages in

terms of sense of depth, presence and realistic

viewing perception. The results presented are not

authoritative in terms of metrics; however, they

represent the initial experimentation phase of

continuing research into user interface measurement

for military purposes.

The lack of a comparative evaluation with respect to other works specifically addressing NCW is due to the complexity of this domain. Livatino et al. (2010) obtained similar results in mobile robot teleguide based on video images. Even though the application domains are different, the similarity of the results suggests that we are heading in the right direction and that more investigation into HCI for military applications is needed.

In the near future, we plan to perform a formal user study aimed at improving and extending the previous evaluation. We have designed a test plan according to recommendations gathered from the literature.

The test procedure will start with a brief presentation of the project and of the purpose of the evaluation study. Then a visual attention test will be performed to classify the participants' level of selective visual attention. Each user will be asked to understand a complex NCW scenario within an interactive test, during which quantitative data (e.g., errors made while estimating distances, number and percentage of tasks completed correctly) will be recorded. The last step consists of the completion of pre-designed questionnaires to acquire qualitative data on the users' experience with stereovision technology.
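The quantitative measures mentioned above are straightforward to compute. The Java sketch below shows two of them, the mean absolute distance-estimation error and the percentage of correctly completed tasks; the class name and the choice of the mean absolute error as the error summary are assumptions, since the test plan itself is not detailed here.

```java
/** Illustrative computation of two quantitative measures for one participant. */
final class TrialMetrics {

    /** Mean absolute difference between estimated and actual distances. */
    static double meanAbsoluteError(double[] estimated, double[] actual) {
        if (estimated.length != actual.length || estimated.length == 0) {
            throw new IllegalArgumentException("arrays must be non-empty and of equal length");
        }
        double sum = 0.0;
        for (int i = 0; i < estimated.length; i++) {
            sum += Math.abs(estimated[i] - actual[i]);
        }
        return sum / estimated.length;
    }

    /** Percentage of tasks flagged as completed correctly. */
    static double percentCorrect(boolean[] taskCorrect) {
        if (taskCorrect.length == 0) {
            throw new IllegalArgumentException("at least one task is required");
        }
        int correct = 0;
        for (boolean ok : taskCorrect) {
            if (ok) {
                correct++;
            }
        }
        return 100.0 * correct / taskCorrect.length;
    }
}
```

Computing both measures separately for the mono and stereo conditions would give the per-participant pairs that a later statistical comparison needs.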

We will evaluate the viewing comfort by subjecting participants to an intensive use of the system (about 3 hours). We will pay special attention to counterbalancing the tasks and their sequence throughout the user study, in order to avoid fatigue and learning effects. This will require the participants to perform the tests according to a precise schedule.
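One simple way to build such a schedule is to rotate the list of conditions by the participant index, so that every condition occupies every position equally often when the number of participants is a multiple of the number of conditions. The Java sketch below illustrates this rotation scheme; it is an assumption about how counterbalancing could be done, not the schedule actually planned, and the condition names are placeholders.

```java
import java.util.ArrayList;
import java.util.List;

/** Illustrative counterbalancing by rotation of the condition list. */
final class Counterbalancing {

    /**
     * Order of conditions for one participant: the list rotated by the participant index.
     * participantIndex is expected to be zero-based and non-negative.
     */
    static List<String> orderFor(int participantIndex, List<String> conditions) {
        if (participantIndex < 0 || conditions.isEmpty()) {
            throw new IllegalArgumentException("index must be non-negative and conditions non-empty");
        }
        int n = conditions.size();
        List<String> order = new ArrayList<>(n);
        for (int k = 0; k < n; k++) {
            order.add(conditions.get((participantIndex + k) % n));
        }
        return order;
    }
}
```

With the two conditions of this study, mono and stereo, the rotation simply alternates which one comes first, so a group of 12 participants would split into six who start with mono and six who start with stereo.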

The collected evaluation measures will be

analyzed through inferential and descriptive

statistics and the results will be graphically

represented by means of diagrams.

We expect that formal test results will clearly confirm the benefits of stereoscopic vision in the understanding of an NCW scenario.

ACKNOWLEDGEMENTS

We would like to thank our colleague Andrea Sindico, who supported us in integrating this research with the LOKI project.

REFERENCES

Alberts, D. S., Garstka, J. J. and Stein, F. P., 1999. Network Centric Warfare: Developing and Leveraging Information Superiority. 2nd ed. United States: CCRP Publication Series.

Alexander, T., Renkewitz, H. and Conradi, J., 2006. Applicability of Virtual Environments as C4ISR Displays. United States of America, [online]. Available at: http://www.dtic.mil/cgi-bin/GetTRDoc?AD=ADA473296 (Accessed on 14 October 2013).

Braulinger, T. K., 2005. Network Centric Warfare Implementation and Assessment. Master’s Thesis. U.S. Army Command and General Staff College.

Cox, K. C., Eick, S. G. and He, T., 1996. 3D Geographic Network Displays. ACM Sigmod Record, 25(4), pp.50-54.

Cyganek, B. and Siebert, J. P., 2009. An Introduction to 3D Computer Vision Techniques and Algorithms. Great Britain: John Wiley & Sons, Ltd.

Department of Defense, 2008. MIL-STD-2525C: Common Warfighting Symbology. United States of America, [online]. Available at: http://www.mapsymbs.com/ms2525c.pdf (Accessed on 14 October 2013).

Dey, D., Gobbi, D. G., Slomka, P. J., Surry, K. J. M. and Peters, T. M., 2002. Automatic Fusion of Freehand Endoscopic Brain Images to Three-Dimensional Surfaces: Creating Stereoscopic Panoramas. IEEE Transactions on Medical Imaging, 21(1), pp.23-30.

Drascic, D., 1991. Skill Acquisition and Task Performance in Teleoperation using Monoscopic and Stereoscopic Video Remote Viewing. Human Factors and Ergonomics Society Annual Meeting, 35(19), pp.1367-1371.

Feng, S., Liu, M. and Wan, J., 2006. An agilely adaptive User Interface based on Design Pattern. In: IEEE, 6th International Conference on Intelligent Systems Design and Applications, Jinan, China, 16-18 October 2006. Available on: IEEE Xplore Digital Library (Accessed on 5 March 2013).

Gamma, E., Helm, R., Johnson, R. and Vlissides, J., 2005. Design Patterns: Elements of Reusable Object-Oriented Software. Massachusetts (USA): Addison-Wesley.

Julier, S., King, R., Colbert, B., Durbin, J. and Rosenblum, L., 1999. The Software Architecture of a Real-Time Battlefield Visualization Virtual Environment. In: IEEE, Proceedings of Virtual Reality. Houston, Texas, USA, 13-17 March 1999. Available on: IEEE Xplore Digital Library (Accessed on 26 July 2012).

Kapler, T. and Wright, W., 2005. Geotime Information Visualization. Information Visualization, 4(2), pp.136-146.

Lipton, L., 2007. The Last Great Innovation: The Stereoscopic Cinema. SMPTE Motion Imaging Journal, 116(11-12), pp.518-523.

Livatino, S., Muscato, G., Sessa, S. and Neri, V., 2010. Depth-enhanced mobile robot teleguide based on laser images. Mechatronics, 20(7), pp.739-750.

Mahoney, N., Oikonomou, A. and Wilson, D., 2011. Stereoscopic 3D in Video Games: A Review of Current Design Practices and Challenges. In: IEEE, 16th International Conference on Computer Games. Louisville, KY, USA, 27-30 July 2011. Available on: IEEE Xplore Digital Library (Accessed on 26 July 2012).

OMG, 2007. DDS, http://portals.omg.org/dds/ (Accessed on 08 April 2013).

Pettersson, L. W., Spak, U. and Seipel, S., 2004. Collaborative 3D Visualizations of Geo-Spatial Information for Command and Control. In: Proceedings of SIGRAD, Gävle, Sweden, 24-25 November 2004. Available on: LiU Electronic Press (Accessed on 26 July 2012).

Sexton, I. and Surman, P., 1999. Stereoscopic and Autostereoscopic Display Systems. IEEE Signal Processing Magazine, 16(3), pp.85-89.

Shanker, T. and Richtel, M., 2011. In New Military, Data Overload can be Deadly. The New York Times, [online] 17 January 2011. Available at: http://www.umsl.edu/~sauterv/DSS4BI/links/17brain.pdf (Accessed on 14 October 2013).

Sindico, A., Cannone, D., Tortora, S., Italiano, G. F. and Naldi, M., 2012. Distributed Simulation of Electronic Warfare Command and Control Scenarios. In: Proceedings of Symposium on Navy Operational Research and Logistics (SPOLM). Rio de Janeiro, Brazil, 23-24 August 2012.

U.S. Navy, 1995. Copernicus… Forward C4I for the 21st Century. United States: Office of Chief of Naval Operations (OPNAV). Available at: http://www.navy.mil/navydata/policy/coperfwd.txt (Accessed on 14 October 2013).
