The Elevate Framework for Assessment and Certification Design for Vocational Training in European ICT SMEs

Iraklis Paraskakis¹ and Thanos Hatziapostolou²

¹South East European Research Center (SEERC), International Faculty of the University of Sheffield, City College, 24 Proxenou Koromila, 54622, Thessaloniki, Greece

²Department of Computer Science, International Faculty of the University of Sheffield, City College, Leontos Sofou, 54632 Thessaloniki, Greece

Keywords: Vocational e-Training, Competence-based Education, e-Assessment, Web2.0.

Abstract: This paper discusses vocational training and e-Training within the context of IT SMEs, focusing on the process of assessment and certification. More specifically, current trends in the assessment and certification of IT skills are discussed, as revealed by a study of related work. In addition, issues in traditional assessment and e-Assessment are presented. A new approach, “Assessment 2.0”, which exploits the characteristics of contemporary trainees, is also proposed. Finally, after an examination of relevant research projects and current European certification programs, the ELEVATE project approach to certification and assessment is introduced with an example. More precisely, the proposed methodology for defining competence-based learning is put forward.

1 INTRODUCTION

The continuing Information and Communication Technology (ICT)-driven evolution of products and processes will change jobs and social structures. So will the need for a low-carbon economy and population ageing. Education and training, including vocational education and training (VET), must adapt accordingly (COM 296, 2010). The Europe 2020 Strategy puts a strong emphasis on education and training to promote “smart, sustainable and inclusive growth” and to reinforce the attractiveness of VET.

Actions to improve VET help to provide the skills, knowledge and competences needed in the labor market. As such, they are an essential part of the EU's ‘Education and Training 2020’ work programme. Encouraging learners to take part in VET in different countries is also a priority of EU actions. It provides individuals with increased opportunities and experiences, and it enhances efficiency and innovation.

Assessments are the foundation of effective instructional practices and of return-on-investment studies. Research such as that by Glahn (2008) shows that assessment allows not only the expression but also the comparison of knowledge and competences among groups of learners. Moreover, the power and consequences of assessment have become far more significant with the advent of content management systems (CMS) and learning management systems (LMS), which foster communication and dissemination.

At its most basic level, assessment is the process of generating evidence of trainee learning and then making a judgment about that evidence (Elliott, 2008). Current assessment practice provides evidence in the form of examination scripts, essays and other artefacts. Furthermore, data from assessments helps drive the development of solid content and advances instructional practices.

‘Assessment 1.0’ can be thought of as assessment practice from the beginning of the 20th century until today. Throughout this period, assessment exhibited the following characteristics:

- paper-based,
- classroom-based,
- formalised (in terms of organisation and administration),
- synchronised (in terms of time and place), and
- controlled (in terms of contents and marking).

According to the Europe 2020 Strategy, there appears to be a need for greater flexibility in three respects: how learning outcomes and competences are acquired, how they are assessed and how they lead to qualifications. In formal educational settings, for example, this is achieved through specially trained staff and the application of fine-grained assessment methods. These methods are not limited to strict formal testing. They can also include observing learners during their course, stimulating group work, apprenticeship, and analyzing a learner’s contributions to discussions and his or her problem-solving approaches.

Paraskakis I. and Hatziapostolou T.

The Elevate Framework for Assessment and Certification Design for Vocational Training in European ICT SMEs.

DOI: 10.5220/0004858800570066

In Proceedings of the 6th International Conference on Computer Supported Education (CSEDU-2014), pages 57-66

ISBN: 978-989-758-021-5

Copyright © 2014 SCITEPRESS (Science and Technology Publications, Lda.)

A more up-to-date form of assessment, which involves the use of computers in the assessment process, has emerged in the last decade (Elliott, 2008). ‘E-Assessment’ also embraces ‘e-testing’ (a form of on-screen testing of knowledge) and ‘e-portfolios’ (a digital repository of assessment evidence normally used to assess practical skills). This approach to assessment runs parallel to the adoption of Software as a Service (SaaS).

SaaS is expected to be a major part of the way we work and learn in the future (Marks, 2008). The advantages of SaaS include:

a) lower maintenance and functionality costs,
b) no need for software licenses or hardware upgrades,
c) increased mobility, since documents are accessible anywhere through the Internet, and
d) safe remote storage of documents.

According to Walker et al. (2004), there are six key components for the successful delivery of e-Assessment: central support, quality software, quality hardware, clear policies and procedures, integration within the learning system, and staff education. Considering the advantages of SaaS, the burden of implementing e-Assessment for an SME could therefore be limited to integration with an existing learning system and staff education.

Firms generally praise online training (Strother, 2002) as a cost-effective, convenient, and productive way to deliver corporate education. However, studies such as that by Hamburg et al. (2008) show that fewer than 25% of staff in SMEs (small and medium-sized enterprises) participate in vocational training courses. The same studies show that fewer than 60% of employers provide any type of training for their staff. This is mainly because many SMEs have neither the knowledge nor the resources to develop and implement sustainable training strategies for their own organization, based on new media and knowledge processes. Such issues are analyzed in a following section, together with proposed solutions.

One of the key qualities of formal education is that it makes learning processes communicable, so that assessment results (e.g., certificates) can serve as proof of the acquired knowledge and competences. This paper focuses on the certification of IT skills in SMEs. It presents the rationale behind the certification approach selected for the ELEVATE project members.

In the rest of the paper, we examine related research on assessment in vocational training, focusing on the assessment of IT skills in SMEs. The paper describes current practices, as well as issues pertaining to traditional and electronic assessment. Next, we examine an update to current assessment practices that utilizes Internet technologies and, more specifically, Web2.0. This is referred to as Assessment 2.0. Following this, the paper presents the assessment and certification approach of the ELEVATE project and describes an example of its application. Finally, the last section discusses conclusions and future work.

2 ISSUES ON ASSESSMENT AND CERTIFICATION IN SMEs

Information technology proficiency among citizens is a key factor in the dynamics of Information Society development and further economic growth. In the following sections we examine specific issues which hinder the processes of assessment and certification in SMEs, and discuss related projects and initiatives.

2.1 Traditional and e-Assessment Issues

In traditional types of assessment (Assessment 1.0), examination formats cover a wide range:

- 45- to 120-minute restricted-response tests (i.e., multiple-choice, identification, one best correct answer, 2/3/4 correct answers);
- restricted-response adaptive modes, which stop the testing process at the point on the adaptive curve at which a passing score can be predicted at a 95 percent confidence level;
- constructed response (e.g., drag and drop); and
- “essays”, simulations, scenarios and case studies involving performance benchmarks.
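The restricted-response adaptive mode described above ends the test once a pass or fail decision is statistically clear. Below is a minimal sketch of such a stopping rule. It assumes the decision rests on the proportion of correct answers and a 95% Wilson score interval. Operational adaptive tests usually estimate ability with item response theory instead, so this is a simplified stand-in, not the procedure used by any particular testing firm. The pass mark of 0.7 and the minimum of 10 items are hypothetical parameters.

```python
import math
from typing import Tuple

Z_95 = 1.96  # two-sided standard normal quantile for a 95% confidence level


def wilson_interval(correct: int, answered: int, z: float = Z_95) -> Tuple[float, float]:
    """Wilson score confidence interval for the true proportion of correct answers."""
    if answered == 0:
        return 0.0, 1.0  # no evidence yet: the proportion could be anything
    p = correct / answered
    denom = 1 + z * z / answered
    centre = (p + z * z / (2 * answered)) / denom
    half = z * math.sqrt(p * (1 - p) / answered + z * z / (4 * answered ** 2)) / denom
    return max(0.0, centre - half), min(1.0, centre + half)


def stop_decision(correct: int, answered: int,
                  pass_mark: float = 0.7,      # hypothetical pass mark
                  min_items: int = 10) -> str:  # hypothetical minimum test length
    """Return 'pass', 'fail' or 'continue' for an adaptive restricted-response test."""
    if answered < min_items:
        return "continue"
    low, high = wilson_interval(correct, answered)
    if low >= pass_mark:   # the whole 95% interval lies above the pass mark
        return "pass"
    if high < pass_mark:   # the whole 95% interval lies below the pass mark
        return "fail"
    return "continue"      # still ambiguous: administer another item


if __name__ == "__main__":
    for correct, answered in [(9, 10), (20, 20), (14, 20), (2, 20)]:
        print(correct, answered, stop_decision(correct, answered))
```

The interval narrows as more items are answered. A borderline candidate therefore keeps receiving items, while a clearly strong or weak one is stopped early.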

Traditional assessment is bureaucratic in nature, expensive to run and does not scale well (Elliott, 2008). It is also inflexible and organised around annual examination ‘diets’. Moreover, some educationalists claim that the current assessment system encourages surface learning and “teaching to the test”: instead of instilling genuine problem-solving skills, it fosters memorisation.

The traditional assessment approach concentrates mainly on testing basic skills, supposedly acquired mainly through drill-and-practice experiences. Such an assessment system is often referred to as a test culture (Sluijsmans, 2002). In contrast, performance-oriented assessment, such as the ELEVATE project’s competence-based approach, aims to measure not only the correctness of a response but also the thought processes involved in arriving at it.

Employers in SMEs complain that, in spite of rising achievement (DfES, 2007), young people are not gaining the skills needed in the modern workplace, such as problem solving, collaboration, innovation and creativity. Vocational education and training must equip young learners with skills directly relevant to evolving labor markets, such as e-skills. It must also equip them with highly developed key competences, such as the digital and media literacy needed to achieve digital competence (COM 296, 2010).

In addition, trainers complain about the rising burden of time spent carrying out and marking assessments, which reduces the time available for “real teaching”. These criticisms are not confined to paper-based assessment. E-testing has been criticised for crudely imitating traditional assessment. These criticisms of e-Assessment mirror those made of virtual learning environments (VLEs): that they simply seek to mimic traditional classroom practice.

Trainees perceive both paper-based and computer-based assessments as something external to them, something over which they have no control, something that is ‘done’ to them. The assessment instrument itself is considered contrived, just a hurdle to be jumped, not part of their learning. Or, worse, it is perceived as the sole purpose of their learning, with all their efforts going into passing the test rather than acquiring new knowledge and skills.

Assessment 1.0 is also intensely individualistic: assessment activities are done alone, competition is encouraged, and any form of collaboration is prohibited. Furthermore, the use of e-Assessment systems might hold back progress in assessment. They can constrain practice to traditional (paper-based) assessment and to the limited forms of computer-based assessment these systems make possible.

2.2 Issues on the Certification of IT Skills

The most basic principle of IT industry certification is that content counts. Given the global reach of IT certification, some examinations are available in languages other than English, but that depends on the subject matter.

Certification tests in the IT world are constantly being retired and replaced to reflect the current state of vendor products and industry knowledge (Adelman, 2000). Testing firms do not award the formal certifications. Rather, they report examination results to the vendors and industry associations that issue the certification documents. Both vendors and industry associations may have requirements for certification beyond the examination itself.

Increasingly, too, we find “cross-vendor recognized” examinations. This development underscores the rationalizing trends in the industry, along with the competition for trained labour. Microsoft, for example, waives its networking examination requirement for those who are already certified by Novell, Banyan or Sun as network engineers, specialists or administrators. These cross-vendor recognized examinations are a prelude to the adoption of industry-wide certification standards and accreditation.

2.2.1 Related Projects

During its research, the ELEVATE project identified some highly relevant projects that utilize competence-based training. In the following paragraphs, these projects are examined with respect to their assessment and certification approaches:

- PROLIX: Studying the PROLIX research findings gave the ELEVATE project some important insight into competence-based training. However, there does not seem to be enough information on how these competences are assessed. Specific types of assessment are defined, but too little information is available for the ELEVATE partners to analyze, evaluate, adapt and perhaps integrate specific methodologies.

- TenCompetence: The TenCompetence project has yielded some interesting results, especially in the form of pedagogical tools that aid Learning Design based on the IMS-LD standard. Yet TenCompetence does not seem to focus on the certification of competences. The validation of competences appears to be carried out outside the system, by specific organizations, and the user simply submits this certification as evidence of acquired competences. In other words, the certification or assessment process is not integrated within the TenCompetence system. Therefore, the TenCompetence system can mostly be utilized as a learning design tool for the trainer and an e-portfolio mechanism for the trainee.

- The Leonardo da Vinci Programme: As part of the Lifelong Learning Programme, it funds a wide range of actions in vocational training. These range from opportunities for individuals to improve their work-related skills through placements abroad to co-operation projects between training organisations in different countries. With regard to certification, mobility certificates are given to organisations that meet two conditions. They must have shown particular quality in carrying out Leonardo da Vinci mobility projects (knowledge, experience and resources), and they must have developed an internationalisation strategy. Therefore, this Programme does not support the certification of individuals, which the ELEVATE SME certification requirements call for. However, a number of initiatives are under development to enhance the transparency, recognition and quality of competences and qualifications, facilitating the mobility of learners and workers. These include the European Qualifications Framework (EQF), Europass, the European Credit System for VET (ECVET), and the European Quality Assurance Reference Framework for VET (EQAVET). Of these initiatives, only EQF and Europass are relevant here; they are examined in the following section.

2.2.2 European Certification Programs

During research, four European initiatives relevant to certification have been identified: EITCI, ECQA, EQF, and Europass. These initiatives are presented in more detail below.

- Europass is an EU initiative to increase the transparency of qualifications and the mobility of citizens in Europe. It aims to be a Lifelong Learning Portfolio: a set of documents describing all learning achievements, official qualifications, work results, skills and competences acquired over time, along with the related documentation. It is therefore not an approach to certification but a form of e-portfolio. However, if the ELEVATE user profile follows the specific standards and templates set by Europass, it can be adapted and exported to Europass.

- The European Information Technologies Certification Institute (EITCI, http://www.eitci.org/) was established as a not-for-profit European non-governmental organization. It is dedicated to counteracting digital exclusion in society by undertaking research and development of IT certification methodologies and standards. The EITCI Institute currently runs two IT certification programs: a) the European IT Certification Course (EITCC) program, and b) the European IT Certification Academy (EITCA) program. Both programs are accessible over the Internet and are targeted at individuals and institutions, enabling formal documentation of information technology competences. EITCC and EITCA examinations need not be taken at a physical location, thanks to a specially designed, fully on-line certification procedure that preserves the high quality of the certificates. This is an important factor in overcoming individual barriers and ensuring that IT certification services are accessible to everyone. Furthermore, within the framework of the EITC Program, the Institute currently supports the following IT Professional Certification Paths. Each is recognized globally as formal proof of applicable knowledge, qualifications and expertise in its domain:
  - EITC-I: Internet Technologies, covering the Internet and eCommerce technologies domain;
  - EITC-S: Security Technologies, covering the IT security technologies domain;
  - EITC-M: Information Management, covering the Information Technologies management domain;
  - EITC-D: Software Development, covering modern software development and engineering.

  Although EITC covers a number of certification paths, these are too general to be applicable to specific SME needs. In other words, SME certification types need not conform to a European standard, but they should be able to adapt to and merge with one. This can be achieved through the ECQA, which is presented next.

- The ECQA (European Certification & Qualification Association, http://www.ecqa.org/) is the result of a number of EU-supported initiatives over the last ten years. In these initiatives, different educational developments within the European Union Lifelong Learning Program decided to follow a joint process for the certification of persons in industry. Through the ECQA it becomes possible to attend courses for a specific profession in one country and take a Europe-wide agreed test at the end of the course. An organisation or consortium that wants to propose a new European profession for ECQA certification must apply the guidelines for ECQA-certified job roles. It must then submit a job role proposal to the ECQA board members. Criteria for the certification of EU job roles include:
  - a) the job role descriptions must comply with the European standards for skills descriptions; each skill unit team describes the skill unit, learning elements and performance criteria following these standards,
  - b) a pool of test questions must be provided using a specific standard description format, so that the test questions can be entered into the exam portals,
  - c) the pool of test questions must contain at least 5 different test questions per performance criterion, so that a test per participant can be generated generically,
  - d) to be accepted as a profession with a cross-regional and cross-national European scope, the job role must additionally satisfy European dimension criteria,
  - e) to be accepted as a profession with impact at a European level, there must be a partnership agreement (e.g., consortium agreements, exploitation agreements, qualification boards) which assures that the profession and its skills card will be maintained for a number of years (a minimum of three), and
  - f) to establish an independent and computer-controlled test and certification centre, all professions must use the European skills portals to administer a test pool and run all exams through it, with an independent objective board controlling the test servers and procedures.
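Criterion c) is what makes it possible to generate a separate test for each participant. Below is a minimal sketch that validates a question pool and draws a randomised test from it. It assumes the pool is held as a mapping from performance-criterion codes to question identifiers. The codes `PC1` and `PC2`, the function names and the default of one question per criterion are hypothetical illustrations, not part of the ECQA specification or its exam portals.

```python
import random
from typing import Dict, List, Optional, Tuple

MIN_QUESTIONS_PER_CRITERION = 5  # ECQA criterion c)


def undersized_criteria(pool: Dict[str, List[str]]) -> List[str]:
    """Return the performance criteria whose question pool is too small."""
    return [code for code, questions in pool.items()
            if len(questions) < MIN_QUESTIONS_PER_CRITERION]


def generate_test(pool: Dict[str, List[str]], per_criterion: int = 1,
                  seed: Optional[int] = None) -> List[Tuple[str, str]]:
    """Draw a per-participant test covering every performance criterion."""
    short = undersized_criteria(pool)
    if short:
        raise ValueError(f"fewer than {MIN_QUESTIONS_PER_CRITERION} questions for: {short}")
    rng = random.Random(seed)  # a seed makes a participant's test reproducible
    test = []
    for code, questions in pool.items():
        # sampling without replacement: no question repeats within one test
        for question in rng.sample(questions, per_criterion):
            test.append((code, question))
    return test


if __name__ == "__main__":
    pool = {
        "PC1": [f"PC1-Q{i}" for i in range(1, 6)],
        "PC2": [f"PC2-Q{i}" for i in range(1, 8)],
    }
    print(generate_test(pool, seed=42))
```

With at least five candidates per criterion, two participants drawing one question each are likely to receive different tests, yet every test still covers all performance criteria.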

The ELEVATE certification approach is based on the ECQA. The ECQA corresponds to the Bologna principles and the European Qualifications Framework (EQF). The EQF acts as a translation device that makes national qualifications more readable across Europe, promoting workers' and learners' mobility between countries and facilitating their lifelong learning.

However, in order for the ELEVATE system to be in accordance with the ECQA specifications, the assessment and certification process should be carefully designed to meet specific criteria, as described in the next section.

3 THE ELEVATE PROJECT APPROACH

Based on the bibliographical research and analytical study presented in the previous sections, we derived the fundamental characteristics of the envisaged certification and assessment methodology of the ELEVATE educational system.

3.1 ELEVATE Project Assessment Approach

Educational innovations, such as problem-based and competence-based education, are more likely to succeed if they include new forms of assessment, in which assessment and learning are strongly interconnected in the course materials. Trainers in SMEs, however, usually lack the expert knowledge of pedagogues or instructional designers required to facilitate this process.

For this reason, the ELEVATE system incorporates a four-step methodology that allows the design of courses in which instruction and assessment are completely aligned:

1. Define the purpose of the performance assessment.
2. Choose the assessment task. Issues that must be taken into account are time constraints, availability of resources, and how much data is necessary to make an informed decision about the quality of a trainee’s performance. The literature distinguishes between two types of performance-based assessment activities that can be implemented: informal and formal.
3. Define performance criteria.
4. Create assessment forms. In these forms, trainers determine at what level of proficiency a trainee is able to perform a task or display knowledge of a concept.
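The four steps could be captured in a single record, with step 4 realised as a rubric that maps a criterion score to a proficiency level. This is a minimal sketch, not the ELEVATE implementation. The 0-to-1 score scale, the level names and their thresholds, and the example criteria are hypothetical choices made for illustration; the methodology itself does not prescribe them.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

VALID_KINDS = ("formal", "informal")


@dataclass
class PerformanceAssessment:
    purpose: str          # step 1: purpose of the performance assessment
    task: str             # step 2: chosen assessment task
    task_kind: str        # step 2: "formal" or "informal"
    criteria: List[str]   # step 3: performance criteria
    # step 4: assessment form as (level name, minimum score), ordered low -> high
    levels: List[Tuple[str, float]] = field(default_factory=lambda: [
        ("novice", 0.0), ("competent", 0.5), ("proficient", 0.8)])

    def __post_init__(self) -> None:
        if self.task_kind not in VALID_KINDS:
            raise ValueError(f"task_kind must be one of {VALID_KINDS}")
        minimums = [minimum for _, minimum in self.levels]
        if minimums != sorted(minimums):
            raise ValueError("levels must be ordered by increasing minimum score")

    def rate(self, scores: Dict[str, float]) -> Dict[str, str]:
        """Assign each criterion the highest level whose minimum its score reaches."""
        ratings = {}
        for criterion in self.criteria:
            score = scores.get(criterion, 0.0)  # unassessed criteria count as 0
            level = self.levels[0][0]
            for name, minimum in self.levels:
                if score >= minimum:
                    level = name
            ratings[criterion] = level
        return ratings


if __name__ == "__main__":
    form = PerformanceAssessment(
        purpose="certify campaign planning",
        task="plan a marketing campaign in the CRM",
        task_kind="formal",
        criteria=["target group", "budget", "schedule"],
    )
    print(form.rate({"target group": 0.9, "budget": 0.6}))
```

Keeping the levels as ordered data lets a trainer without instructional-design expertise adjust the rubric without touching the rating logic.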

The steps stated above define the components necessary for complying with the ECQA requirements. The approach could be further extended by including informal methods of assessment, used as evaluation factors for designated performance criteria. Such informal methods of evaluation could utilize Web2.0 tools, for example:

- writing blogs,
- participating in the creation or editing of a wiki article,
- searching for external sources relevant to the studied topic,
- uploading documents/videos of the training experience, or
- participating in online social activities such as forums, chat rooms, or social network groups.

Assessment 2.0 is an approach proposed by Elliott (2008) as an update to traditional assessment, better suited to the characteristics of contemporary learners. Trainees already use Web2.0 services as part of their everyday lives. As a result, education and training approaches are becoming disconnected from trainees' everyday experience. The classroom is a drab, technology-free zone that bears little relation to the increasingly technological reality of people’s lives outside it. Furthermore, an Assessment 2.0 approach allows trainees to take more control of their own learning and to become more reflective. This was also identified as the 2014 vision of the JISC (Joint Information Systems Committee). The JISC funded an e-Assessment Roadmap that reviewed current policies and practice relating to e-Assessment across the UK (Whitelock, 2007).

Unfortunately, few examples of assessment using Web2.0 tools are yet available in the literature. In the meantime, however, researchers have evaluated how advances in Web and Web 2.0 tools address the new assessment agenda. Whitelock (2010) presents four examples of summative assessment. They illustrate effective ways in which web technologies support innovative practices that display a number of Elliott’s key characteristics. In many of the cases examined, tried-and-tested pedagogical strategies were enhanced. The cases also illustrate significant learning gains with the introduction of these technologies.

Assessment 2.0 poses challenges for trainers, who are often the epitome of the digital immigrant. Not only might they lack the IT skills needed to understand Web 2.0 services, but they may also lack the knowledge and experience required to appraise trainees’ work produced using these tools. Trainers also lack the rubrics required to assess Web 2.0 skills.

For the reasons stated above, the ELEVATE project approach uses Assessment 2.0 only within the context of informal assessment. In other words, trainees are not evaluated on their participation in social network activities or on their skills with Web2.0 tools. For ELEVATE, Assessment 2.0 is an optional activity that supplements regular training.

3.2 ELEVATE Project Certification Approach

The pedagogic approach to vocational training adopted by the ELEVATE project is a competency-based development strategy. To implement this approach efficiently, the ELEVATE project introduces the Competency Graph as the domain knowledge model. The domain expert develops the competency graph through a system component. The interface of that component should therefore support the design and implementation of all the features the suggested approach to assessment and certification requires.

As mentioned before, the ELEVATE certification approach is based on the ECQA, which corresponds to the Bologna principles and the European Qualifications Framework (EQF). Therefore, the main goal for the certification component of the system is to be compatible with the ECQA certification template and specifications.

To achieve this, the system needs the following features to be designed and implemented:

- The domain expert must be able to define certifications for specific job roles or professions.
- For each job role, the domain expert must be able to designate the specific competencies that are required for certification.
- For each competency, the domain expert must be able to define multiple performance criteria (i.e., skills exhibited by the trainee which serve as evidence of competency acquisition).
- For each performance criterion, the domain expert must be able to define at least five (5) evaluation questions based on the principles set by the ECQA template. The expert must also define a factor representing the percentage of success that qualifies for a pass grade.
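The four required features suggest a nested data model: a job role holds competencies, each competency holds performance criteria, and each criterion holds questions and a pass factor. The sketch below is minimal and makes one assumption the text does not spell out: a trainee is certified only when every performance criterion of every required competency meets its pass factor. The class and field names are hypothetical, and the competency name is borrowed from the example in Section 3.3 for illustration.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

MIN_QUESTIONS = 5  # at least five evaluation questions per performance criterion


@dataclass
class PerformanceCriterion:
    code: str
    questions: List[str]
    pass_factor: float  # fraction of correct answers that qualifies for a pass

    def __post_init__(self) -> None:
        if len(self.questions) < MIN_QUESTIONS:
            raise ValueError(f"{self.code}: needs at least {MIN_QUESTIONS} questions")
        if not 0.0 < self.pass_factor <= 1.0:
            raise ValueError(f"{self.code}: pass_factor must lie in (0, 1]")

    def passed(self, correct: int, answered: int) -> bool:
        return answered > 0 and correct / answered >= self.pass_factor


@dataclass
class Competency:
    name: str
    criteria: List[PerformanceCriterion]


@dataclass
class JobRole:
    acronym: str
    title: str
    competencies: List[Competency] = field(default_factory=list)

    def is_certified(self, results: Dict[str, Tuple[int, int]]) -> bool:
        """results maps a criterion code to (correct answers, questions answered)."""
        return all(
            pc.passed(*results.get(pc.code, (0, 0)))  # unattempted criteria fail
            for competency in self.competencies
            for pc in competency.criteria
        )


if __name__ == "__main__":
    questions = [f"q{i}" for i in range(1, 6)]
    role = JobRole("MM-SP", "genesisWorld Sales Marketing Module", [
        Competency("Marketing Campaign Planning", [
            PerformanceCriterion("PC1", questions, 0.6),
            PerformanceCriterion("PC2", questions, 0.8),
        ]),
    ])
    print(role.is_certified({"PC1": (3, 5), "PC2": (4, 5)}))
```

The validation in `__post_init__` enforces the five-question minimum at the moment the domain expert defines a criterion, rather than at exam time.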

In addition, the ELEVATE user profile should follow the specific standards and templates set by Europass, so that it can be adapted and exported to the Europass e-portfolio. This would require the following fields in the user profile:

- personal information (name, birth date, nationality, contact, etc.),
- desired employment (this could be the suggested job role defined for the ECQA certification),
- work experience (set arbitrarily by the user),
- education and training (set arbitrarily by the user), and
- personal skills and competences (information set arbitrarily by the user as well as competences acquired through the ELEVATE system, categorised into team work, mediating skills, intercultural skills, computer skills, etc.).
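The profile fields listed above could be modelled and grouped for export as follows. This is a minimal sketch. The section keys in `to_export_dict` are hypothetical names chosen for illustration; they do not reproduce the official Europass schema, so a real exporter would still have to map them to it. Merging user-entered skills with system-acquired competences per category is likewise an assumed design choice.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class UserProfile:
    name: str
    birth_date: str
    nationality: str
    contact: str
    desired_employment: str = ""  # e.g. the ECQA job role suggested by ELEVATE
    work_experience: List[str] = field(default_factory=list)     # set by the user
    education_training: List[str] = field(default_factory=list)  # set by the user
    self_declared_skills: Dict[str, List[str]] = field(default_factory=dict)
    acquired_competences: Dict[str, List[str]] = field(default_factory=dict)  # from ELEVATE

    def merged_skills(self) -> Dict[str, List[str]]:
        """Merge user-entered and system-acquired skills per category, without duplicates."""
        merged: Dict[str, List[str]] = {}
        for source in (self.self_declared_skills, self.acquired_competences):
            for category, items in source.items():
                bucket = merged.setdefault(category, [])
                for item in items:
                    if item not in bucket:
                        bucket.append(item)
        return merged

    def to_export_dict(self) -> dict:
        """Group the fields into Europass-like sections (hypothetical key names)."""
        return {
            "personal_information": {
                "name": self.name,
                "birth_date": self.birth_date,
                "nationality": self.nationality,
                "contact": self.contact,
            },
            "desired_employment": self.desired_employment,
            "work_experience": list(self.work_experience),
            "education_and_training": list(self.education_training),
            "personal_skills_and_competences": self.merged_skills(),
        }
```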

3.3 Designing the ELEVATE Project Assessment and Certification Approach

CAS is a software company based in Germany and

CSEDU2014-6thInternationalConferenceonComputerSupportedEducation

62

one of the three SME participants of the ELEVATE

project. CAS develops Customer Relationship

Management (CRM) solutions and provides face to

face training of its developed genesisWorld software

to customers and partners.

Based on the CAS genesisWorld Marketing

Module e-Training analysis, which resulted in the

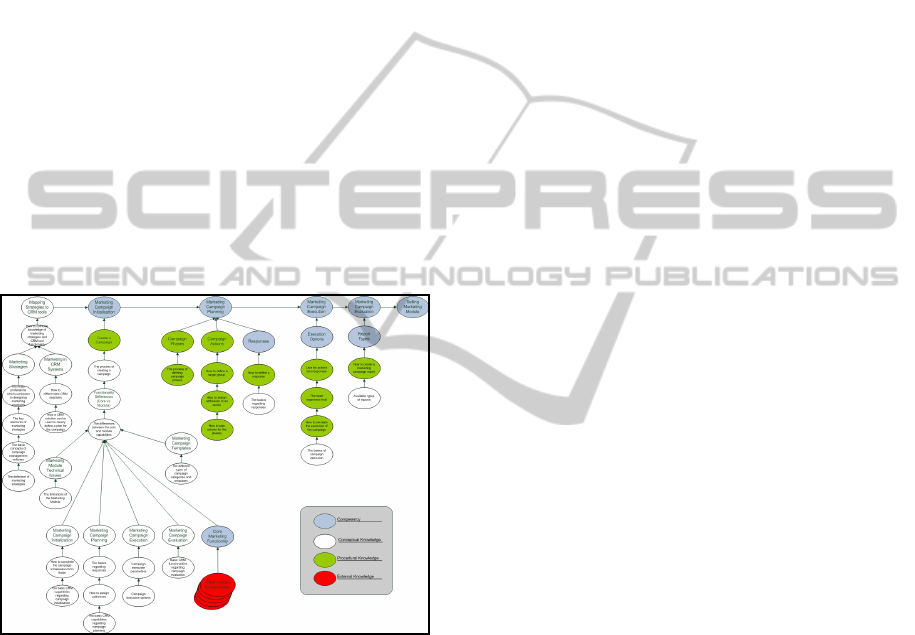

competency graph depicted below (Figure 1), we will

provide an example of the suggested ELEVATE

approach to assessment design and certification

implementation. The design of the training and

evaluation approach will follow a specific step-by-

step methodology, conforming to the templates and

principles set by the ECQA association. The process,

realized by the domain expert, is as follows:

1. Define the job role (i.e., skill unit)

2. Define the participating elements (i.e.,

competencies)

3. Define the performance criteria (i.e., learning

objects)

4. Define the test questions (i.e., formal assessment)

5. Define the informal assessment method

Figure 1: Competency Graph for Marketing Module

Training.

3.3.1 Define the Job Role (i.e., Skill Unit, Certificate)

By adopting the ECQA rules and complying with the set specifications, the ELEVATE project could propose a job role in coordination with the participating SMEs, defined on the basis of European standards for skills descriptions. Example job roles could include CRM Salesperson, CRM Developer, CRM Administrator, etc. In essence, the job role defined by the trainer here corresponds to the certification awarded after successful completion of the course contents.

Therefore, the first task of the trainer / domain expert is to define the job role that will be taught to the trainees. The properties of the job role that must be defined include:

- Skill Unit MM-SP: Marketing Module Sales Person (genesisWorld Sales Marketing Module)
- Skill Unit Acronym: MM-SP
- Skill Unit Title: genesisWorld Sales Marketing Module
- Skill Unit Description: This unit consists of five (5) elements: Marketing Campaign Initialisation, Marketing Campaign Planning, Marketing Campaign Execution, and Marketing Campaign Evaluation.

3.3.2 Define the Participating Elements (i.e., Competences)

Next, the tutor / domain expert has to define the required competences, which the trainee must acquire to be eligible for a specific job role or certification.

The CAS genesisWorld Marketing Module e-Training analysis resulted in the competency graph depicted in Figure 1. The goal competency (i.e., the most general concept the trainee will learn) is the competency on the far right (i.e., Selling Marketing Module).

A competency consists of learning objects, which can refer to either procedural or conceptual knowledge. A competency that contains only procedural learning objects is coloured green and is referred to as procedural knowledge. A competency that contains only conceptual learning objects is coloured white and is referred to as conceptual knowledge. A competency that contains both types of learning objects is coloured light purple.

Finally, knowledge which is essential for acquiring the competency but which is not included in this part of the training is referred to as external and is coloured red.
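The colour coding of the competency graph follows directly from the kinds of learning objects a competency contains. Below is a minimal sketch of that rule, plus a helper that finds the goal competency. The helper assumes that edges point from a prerequisite competency to the competency that builds on it. Under that assumption, the goal is the node with no outgoing edge, matching its far-right position in the figure. The edges in the usage example are hypothetical; only the competency names come from this section.

```python
from typing import Iterable, Set, Tuple

# learning-object kinds contained in a competency -> (knowledge type, colour)
COLOURS = {
    frozenset({"procedural"}): ("procedural", "green"),
    frozenset({"conceptual"}): ("conceptual", "white"),
    frozenset({"procedural", "conceptual"}): ("mixed", "light purple"),
}


def classify(lo_kinds: Iterable[str], external: bool = False) -> Tuple[str, str]:
    """Return (knowledge type, colour) for a competency node."""
    if external:  # essential knowledge not covered in this part of the training
        return "external", "red"
    key = frozenset(lo_kinds)
    if key not in COLOURS:
        raise ValueError(f"unexpected learning-object kinds: {sorted(key)}")
    return COLOURS[key]


def goal_competencies(edges: Iterable[Tuple[str, str]]) -> Set[str]:
    """Competencies that are not a prerequisite of any other competency."""
    edges = list(edges)
    prerequisites = {src for src, _ in edges}
    nodes = prerequisites | {dst for _, dst in edges}
    return nodes - prerequisites


if __name__ == "__main__":
    edges = [  # hypothetical prerequisite -> dependent edges
        ("Marketing Campaign Initialisation", "Marketing Campaign Planning"),
        ("Marketing Campaign Planning", "Selling Marketing Module"),
        ("Marketing Campaign Execution", "Selling Marketing Module"),
    ]
    print(goal_competencies(edges))
    print(classify(["procedural", "conceptual"]))
```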

3.3.3 Define the Performance Criteria (i.e., Learning Objects)

The Learning Objects, as used by the ELEVATE

project are distinguished into categories based on the

offered degree of interaction:

Passive: The Learning Objects are presented to

the trainee in the form of a lecture, without any

kind of interaction between the trainee and the

system (e.g., text manuals, video tutorials etc.).

Interactive: These Learning Objects are designed for the trainee to interact with the training system, usually in order to diagnose the trainee's knowledge state.

Passive learning objects can offer different degrees of scaffolding support to the trainee. These depend on the tactic of the training approach, which can be: a) implicit, or b) explicit.

The explicit approach (e.g., definition, example)

is the simplest and most direct approach. In other

words, no cognitive effort or reasoning capability is

required on the part of the trainee, other than to

memorise the information presented.

On the other hand, an implicit approach to learning (e.g., analogy, example, discovery learning) requires some form of deductive reasoning on the part of the trainee. For example, in the implicit approach of discovery learning, a chapter in a book is given to the trainee, who has to mine the chapter for the necessary information.

Therefore, in this step the tutor defines the learning objects for each of the required competencies. The properties of each learning object that must be clearly outlined include: learning goal, identifier, type, source and presentation method. For example:

Learning Goal: Trainee learns the key elements of marketing strategies.

Identifier: MM-SP-MCI-MS-LO3a

Type: Passive | Implicit | Discovery Learning

Source: Book: “Customer Relationship Management” – pages 418-20

Presentation Method: Text-based document
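A learning object definition such as the one above could be stored as a record, with the three-part Type field split into interaction category, tactic and training approach. The names `LearningObject` and `parse_type`, and the assumption that the Type field is always written as `Interaction | Tactic | Approach`, are hypothetical; the accepted values for the first two parts are those introduced in this section.

```python
from dataclasses import dataclass
from typing import Tuple

INTERACTIONS = {"Passive", "Interactive"}
TACTICS = {"Implicit", "Explicit"}


def parse_type(type_field: str) -> Tuple[str, str, str]:
    """Split 'Interaction | Tactic | Approach' into its parts and validate them."""
    parts = [part.strip() for part in type_field.split("|")]
    if len(parts) != 3:
        raise ValueError(f"expected three '|'-separated parts: {type_field!r}")
    interaction, tactic, approach = parts
    if interaction not in INTERACTIONS or tactic not in TACTICS:
        raise ValueError(f"unknown interaction or tactic: {type_field!r}")
    return interaction, tactic, approach


@dataclass(frozen=True)
class LearningObject:
    learning_goal: str
    identifier: str
    interaction: str   # "Passive" or "Interactive"
    tactic: str        # "Implicit" or "Explicit"
    approach: str      # e.g. "Discovery Learning"
    source: str
    presentation: str


interaction, tactic, approach = parse_type("Passive | Implicit | Discovery Learning")
lo3a = LearningObject(
    learning_goal="Trainee learns the key elements of marketing strategies.",
    identifier="MM-SP-MCI-MS-LO3a",
    interaction=interaction,
    tactic=tactic,
    approach=approach,
    source='Book: "Customer Relationship Management", pages 418-20',
    presentation="Text-based document",
)
```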

3.3.4 Define Formal and Informal Assessment

After the training for each competency, a short evaluation is carried out based on the learning objects presented and the knowledge acquired. Two types of evaluation can be defined: a) formal, and b) informal. Formal evaluation is based on traditional assessment methods such as multiple choice questions, true/false statements and open-ended questions. If the trainee fails the formal evaluation, the same learning object is presented again through a different training approach.

On the other hand, informal assessment is based on contemporary communication and collaboration tools such as wikis, social networks, forums, and document/video repositories. For example:

Search the internet for real-life examples of marketing strategies and post links to them on your personal blog.

Define a detailed example of a fictional

marketing strategy and attach it to the Examples

section of the relevant wiki.

The completion of informal assessment activities is

optional for the trainees, since their performance is

not strictly evaluated. However, such activities

enable trainees to participate in relevant communities

and contribute to the development of additional

training material for future generations of trainees.

3.4 Applying the ELEVATE Project Assessment and Certification Approach

The training begins with the trainee selecting a specific target competency that he or she wishes to acquire. Next, the ELEVATE system calculates an optimal learning path towards that goal (based on the defined competence graph of the domain knowledge) and presents the appropriate learning object to the trainee. If the trainee has not defined any training approach preferences, the default sequence is applied. The default sequence progresses from the most implicit approach to the most explicit one (definition). For example, a sequence can present the analogy, example, discovery learning and definition approaches, in that order.
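The paper does not specify the criterion by which the learning path is optimal. As a stand-in, the sketch below computes only a prerequisite-respecting order (a depth-first topological sort restricted to the competences the target depends on), skipping competences the trainee already holds as well as external ones. The graph is assumed to map each competency to its prerequisites; apart from "Selling Marketing Module", the competency names are invented for illustration and do not reproduce Figure 1.

```python
from typing import AbstractSet, Dict, List, Set


def learning_path(
    prereqs: Dict[str, List[str]],
    target: str,
    acquired: AbstractSet[str] = frozenset(),
    external: AbstractSet[str] = frozenset(),
) -> List[str]:
    """Return the competencies to study for `target`, prerequisites first.

    Depth-first post-order walk over the prerequisite graph; competencies the
    trainee already holds, or that are external to this training, are skipped.
    """
    path: List[str] = []
    placed: Set[str] = set()
    on_stack: Set[str] = set()

    def visit(comp: str) -> None:
        if comp in placed or comp in acquired or comp in external:
            return
        if comp in on_stack:
            raise ValueError(f"cycle in competence graph at {comp!r}")
        on_stack.add(comp)
        for prereq in prereqs.get(comp, []):
            visit(prereq)
        on_stack.remove(comp)
        placed.add(comp)
        path.append(comp)  # appended only after all of its prerequisites

    visit(target)
    return path


# Hypothetical graph: only "Selling Marketing Module" comes from the text
graph = {
    "Selling Marketing Module": ["Campaign Planning", "Campaign Evaluation"],
    "Campaign Planning": ["Marketing Strategies"],
    "Campaign Evaluation": ["Campaign Planning"],
    "Marketing Strategies": ["CRM Basics"],
}
print(learning_path(graph, "Selling Marketing Module", external={"CRM Basics"}))
```

A depth-first traversal is a convenient choice here because it produces a valid ordering in time linear in the size of the graph and detects cycles, which would make a competence graph unusable; any genuine optimisation criterion would require extra information (e.g. weights) that the text does not describe.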

After studying a training approach, the trainee receives feedback and must choose between two alternatives. The trainee can examine the same learning object again, albeit with a different, more explicit training approach. Alternatively, if the trainee feels confident enough, he or she can proceed to the assessment stage.
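One way to model the choice offered after feedback is sketched below, under the assumption that "a different, more explicit" approach means the next unstudied approach in the default sequence (or in the trainee's own preference order, if one was defined). The function names are hypothetical, and falling back to assessment when no unstudied approach remains is an assumption not stated in the text.

```python
from typing import Optional, Sequence, Tuple

# Default order taken from the text: most implicit first, definition (most explicit) last
DEFAULT_SEQUENCE = ("analogy", "example", "discovery learning", "definition")


def next_approach(
    studied: Sequence[str], preferences: Optional[Sequence[str]] = None
) -> Optional[str]:
    """Return the first approach, in preferred or default order, not yet studied."""
    order = preferences if preferences else DEFAULT_SEQUENCE
    for approach in order:
        if approach not in studied:
            return approach
    return None  # every approach for this learning object has been studied


def after_feedback(
    wants_another_approach: bool,
    studied: Sequence[str],
    preferences: Optional[Sequence[str]] = None,
) -> Tuple[str, Optional[str]]:
    """Resolve the two-way choice offered after feedback: re-study or be assessed."""
    if wants_another_approach:
        approach = next_approach(studied, preferences)
        if approach is not None:
            return ("study", approach)
    # The trainee feels confident, or no unstudied approach remains (assumed fallback)
    return ("assess", None)
```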

If the trainee chooses to be evaluated, a set of formal assessment questions is presented. In order to acquire the studied competence, the trainee must answer at least 75% of the questions correctly. If the trainee passes the assessment, the system presents some optional informal assessment activities in which he or she can participate. The trainee can then proceed to the next required competence along the predefined learning path.
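The 75% pass rule can be checked with exact rational arithmetic, so that a score sitting exactly on the boundary (for instance 6 out of 8) counts as a pass without any dependence on floating-point rounding. The names `PASS_MARK`, `passed` and `wrong_questions` are assumptions; `wrong_questions` merely illustrates how the incorrectly answered questions, which also influence the choice of the next training approach, might be collected. How ELEVATE actually uses them is not described.

```python
from fractions import Fraction
from typing import List, Sequence

PASS_MARK = Fraction(3, 4)  # at least 75% of the questions answered correctly


def passed(answers: Sequence[bool]) -> bool:
    """True if the share of correct answers reaches the 75% pass mark."""
    if not answers:
        raise ValueError("no assessment questions were presented")
    # sum() over booleans counts the correct answers
    return Fraction(sum(answers), len(answers)) >= PASS_MARK


def wrong_questions(answers: Sequence[bool]) -> List[int]:
    """Indices of the questions answered incorrectly."""
    return [i for i, correct in enumerate(answers) if not correct]
```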

If the trainee fails the evaluation, he or she is presented with the same learning object through a different training approach. The next training approach is selected based on the default sequence (or the trainee's preferences) and on the questions that were answered incorrectly. If the trainee has studied the learning object through all of its training approaches and still fails the evaluation, a new methodology is attempted.

For each round of training-assessment-failure, the system presents the trainee with the default sequence of training approaches. The difference is that in each successive round, the most implicit training approach of the previous round is omitted.

CSEDU 2014 - 6th International Conference on Computer Supported Education


This method leads to a gradually increasing level of

explicitness in the learning object training

approaches. If the trainee continues to fail, even with

the most explicit approach, the system restarts.
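Read together, the last two paragraphs describe a nested loop: within a round the approaches are tried in order, each new round drops the most implicit approach of the previous one, and once the most explicit approach alone has failed the whole schedule restarts. The sketch below encodes that reading. The callback `attempt` is a hypothetical stand-in for studying with an approach and then taking the formal assessment, and `max_restarts` is an assumed cap, since the text does not say how often the system restarts.

```python
from typing import Callable, Iterator, List, Optional, Sequence


def rounds(sequence: Sequence[str]) -> Iterator[List[str]]:
    """Approach sequences for successive training-assessment-failure rounds.

    Each round drops the most implicit approach of the previous round, so the
    last round contains only the most explicit approach.
    """
    for start in range(len(sequence)):
        yield list(sequence[start:])


def train_until_pass(
    sequence: Sequence[str],
    attempt: Callable[[str], bool],
    max_restarts: int = 1,
) -> Optional[str]:
    """Present approaches round by round until `attempt` reports a pass.

    Returns the approach that led to a pass, or None if the trainee still
    fails after `max_restarts` restarts of the whole schedule.
    """
    for _ in range(max_restarts + 1):  # initial schedule plus each restart
        for round_sequence in rounds(sequence):
            for approach in round_sequence:
                if attempt(approach):
                    return approach
    return None
```

With a four-approach sequence such as analogy, example, discovery learning and definition, one full schedule offers 4 + 3 + 2 + 1 = 10 study-and-assessment attempts before a restart.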

Finally, when the trainee completes the learning path and acquires all the associated competences along the way, the system checks whether any of those competences are among the requirements of a certification. If all the required competences have been attained, the system awards the certification. Otherwise, the system informs the trainee of his or her progress towards certification and suggests competences which should be acquired next.
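The final certification check amounts to set operations over competences. In this sketch the certification requirements are assumed to be given as a mapping from certification name to the set of required competences; the names `certification_status` and `CertificationStatus`, and the competence sets in the example, are hypothetical.

```python
from typing import AbstractSet, Dict, List, NamedTuple


class CertificationStatus(NamedTuple):
    awarded: bool
    held: int             # required competences the trainee already has
    required: int         # total competences the certification requires
    suggested: List[str]  # competences still to acquire, sorted alphabetically


def certification_status(
    acquired: AbstractSet[str],
    certifications: Dict[str, AbstractSet[str]],
) -> Dict[str, CertificationStatus]:
    """Report every certification that the acquired competences contribute to."""
    report: Dict[str, CertificationStatus] = {}
    for name, required in certifications.items():
        held = required & acquired
        if not held:
            continue  # none of the trainee's competences count towards it
        missing = sorted(required - acquired)
        report[name] = CertificationStatus(
            awarded=not missing,
            held=len(held),
            required=len(required),
            suggested=missing,
        )
    return report


# Hypothetical requirements; "MM-SP" stands for the job role defined earlier
requirements = {
    "MM-SP": {"Marketing Strategies", "Campaign Planning",
              "Campaign Evaluation", "Selling Marketing Module"},
    "Other certification": {"Reporting"},
}
status = certification_status({"Marketing Strategies", "Campaign Planning"}, requirements)
print(status["MM-SP"])
```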

4 CONCLUSIONS & FUTURE WORK

This paper discussed vocational training and e-Training within the context of IT SMEs, focusing on the process of assessment and certification by examining relevant work, research and projects. Next, we proposed a methodology to aid SMEs in designing their training, evaluation and certification approach, which conforms to the templates and principles set by the ECQA association and the Europass standard.

Currently, a prototype of the ELEVATE e-Training system is being tested and evaluated at the three participating SMEs. Based on the evaluators' comments and suggestions, we will ascertain the effectiveness and applicability of the proposed methodology and, if necessary, augment the e-Training system with the desired functionality.

REFERENCES

Adelman, C., 2000. A parallel postsecondary universe:

The certification system in information technology.

Education Publications Center, US Dept. of Education,

ISBN: 0-16-050512-7.

COM 296, 2010. Communication From the Commission to the European Parliament, the Council, the European Economic and Social Committee and the Committee of the Regions. Brussels, 9.6.2010 final, http://eurlex.europa.eu/LexUriServ/LexUriServ.do?uri=COM:2010:0296:FIN:EN:PDF.

DfES, 2007. Johnson Welcomes Rising Achievement. Retrieved 22 April 2007. http://www.dfes.gov.uk/pns/DisplayPN.cgi?pn_id=2006_0119.

Elliott, B., 2008. Assessment 2.0, Proceedings of the Open

Workshop of TenCompetence, Empowering Learners

for Lifelong Competence Development: Pedagogical,

Organisational and Technological Issues, Madrid –

España, April 10-11, 2008.

Eraut, M., 1997. Developing Professional Knowledge and Competence. The Falmer Press, London.

Glahn, C., 2008. Supporting reflection in informal learning. Presented at the ECTEL Doctoral Consortium '08, 16 September 2008, Maastricht, The Netherlands.

Hamburg, I., Engert, St., Anke, P., & Marin, M., 2008.

Improving e-Learning 2.0-based training strategies of

SMEs through communities of practice. Proceedings

of the Seventh IASTED International Conference,

Web-based Education, March 17-19, 2008 Innsbruck,

Austria.

Holtham, C., Rich, M., 2008. Developing the architecture

of a large-scale informal e-Learning network,

Proceedings of the Open Workshop of

TenCompetence, Empowering Learners for Lifelong

Competence Development: Pedagogical,

Organisational and Technological Issues, Madrid –

España, April 10-11, 2008.

LPC, 1995. Beroep in beweging. Beroepsprofiel leraar primair onderwijs [Profession in action. Vocational training profile for the primary school teacher]. Utrecht: Forum Vitaal Leraarschap.

Marks, G., 2008. Beware the Hype for Software as a Service, http://www.businessweek.com/technology/content/jul2008/tc20080723_506811_page_2.htm.

Schön, D. A., 1983. The Reflective Practitioner: How Professionals Think in Action. Maurice Temple Smith, London.

Sluijsmans, D. M. A., 2002. Student involvement in assessment. The training of peer assessment skills. Unpublished doctoral dissertation, Open University of the Netherlands, The Netherlands, http://dspace.ou.nl/handle/1820/1034.

Strother, J., 2002. An Assessment of the Effectiveness of

e-Learning in Corporate Training Programs,

International Review of Research in Open and

Distance Learning, ISSN: 1492-3831, Vol. 3, No. 1

(April, 2002).

Walker, D., Adamson, M., Parsons, R., 2004. Staff

Education – Learning about Online Assessment,

Online. In: Danson, M., ed., Proceedings of 8th

International CAA Conference. Loughborough,

University of Loughborough.

Whitelock, D., 2007. Computer Assisted Formative Assessment: Supporting Students to become more Reflective Learners. Proceedings of the 8th International Conference on Computer Based Learning in Science, CBLIS 2007, 30 June – 6 July 2007. (eds.) Constantinou, C.P., Zacharia, Z.C. and Papaevripidou, M. pp.492-503 ISBN 978-9963-671-06-9.

Whitelock, D., 2010. Activating Assessment for Learning: are we on the way with Web 2.0? In M.J.W. Lee & C. McLoughlin (Eds.) Web 2.0-Based E-Learning: Applying Social Informatics for Tertiary Teaching. IGI Global.


Whitelock, D., Brasher, A., 2006. Developing a roadmap

for e-Assessment: Which way now? Paper published

in the Proceedings of the 10th CAA International

Computer Assisted Assessment Conference, 4/5 July

2006, edited by Myles Danson, ISBN 0-9539572-501.
