Supporting Distant Human Collaboration under Tangible Environments

A Normative Multiagent Approach

Fabien Badeig (1,2) and Catherine Garbay (1)

(1) Laboratoire d’Informatique de Grenoble (LIG), AMA team, Université Joseph Fourier / CNRS, Grenoble, France

(2) Fayol Institute, ISCOD team, Ecole Nationale Supérieure des Mines de Saint-Etienne, Saint-Etienne, France

Keywords:

Human Collaboration, Tangible Interaction, Multiagent Design, Normative System, Trace-based System.

Abstract:

The purpose of this paper is to present a new approach to support distant human collaboration in tangible environments. Our aim is not to build and transmit across the distant tables an accurate and complete description of the human activity. Rather, our choice is to restrict communication to the possibilities offered by the tangible tables (tangible object moves and virtual feedback). In this context, we propose to focus on the elicitation and sharing of the norms and conventions that frame human activity, a core issue to sustain proper collaboration. We promote in this perspective the design of a normative multiagent system, whose goal is to emulate the influence of these norms on distant cooperation, thus bringing mutual awareness to the human partners. The role of such a system is (i) to represent these potentially heterogeneous and evolving systems of norms in a declarative and distributed way, (ii) to filter the interpretation and communication of human activity according to these norms, and (iii) to build informed virtual feedback providing information about the conformity of action with respect to the conventions. An application to the RISK game is presented to exemplify the proposed approach.

1 INTRODUCTION

The purpose of this paper is to present a new approach to support distant human collaboration in a tangible environment called TangiSense (Lepreux et al., 2011). The TangiSense table may be seen as a magnetic retina that can detect and locate tangible objects equipped with RFID tags. Its spatial and temporal resolution is compatible with real-time interaction. The table surface is further equipped with a liquid-crystal display (LCD) allowing virtual display. Each table is connected to a computer that manages tangible object tracking and virtual feedback. Virtual display through the LCD surface may provide immersion in simulated environments but also, and more importantly, confirm that tangible objects have actually been detected and provide feedback about their positions and moves to distant users. Assessing the conformity of moves with respect to the rules governing the collaborative activity is a further role that is core to the present proposal.

The system to be designed must support a collective and constructive approach to problem solving, as opposed to a competitive one. This implies the development of mutually consistent decision-making processes in which users share resources and knowledge. However, our aim is not to build and transmit across the distant tables an accurate and complete description of the human activity. Rather, our choice is to restrict communication to the possibilities offered by the tangible tables (tangible object moves and virtual feedback). For collaboration to fully benefit from the specificities of tangible interaction, the design should also preserve the spontaneous, opportunistic character of human activity. In this context, we propose to focus on the elicitation and sharing of the norms and conventions that frame human activity, a core issue to sustain proper collaboration. We promote in this perspective the design of a normative multiagent system, whose goal is to emulate the influence of these norms on distant cooperation, thus bringing mutual awareness to the human partners. The role of such a system is (i) to represent these potentially heterogeneous and evolving systems of norms in a declarative and distributed way, (ii) to filter the interpretation and communication of human activity according to these norms, and (iii) to build informed virtual feedback providing information about the conformity of action with respect to the conventions. To exemplify the proposed approach, we describe an application to the RISK game. The RISK


Badeig F. and Garbay C.

Supporting Distant Human Collaboration under Tangible Environments - A Normative Multiagent Approach.

DOI: 10.5220/0004916102490254

In Proceedings of the 6th International Conference on Agents and Artificial Intelligence (ICAART-2014), pages 249-254

ISBN: 978-989-758-016-1

Copyright © 2014 SCITEPRESS (Science and Technology Publications, Lda.)

Figure 1: The TangiSense table equipped for the RISK game with tangible objects and virtual images displaying the ground map and tangible object moves.

game is a strategic board game where players fight to win territories. At the start, each player is given an army (cannons, soldiers, cavalrymen) and a set of territories from a political map of the Earth. Each player, in turn, attacks the other players. To this end, the player must first designate two territories: one of their own, which supports the attacking armies, and one from the board, which is attacked. The attacking and attacked players then roll the dice to determine who loses and who wins the round. A sample view of the game, as played on the TangiSense table, is provided in Figure 1. This game leaves some autonomy to the players (which army to select, which territories to attack). However, they have to remember and follow the rules governing each move and proceed according to a well-defined gameplay (in this case, a turn-taking protocol). Support for following these rules is provided by the collaborative support system described in the following sections.

2 STATE OF THE ART

2.1 Collaborative Support Systems

One major challenge when designing collaborative support systems is to preserve the spontaneity and fluidity of human activity while ensuring the consistency and proper coordination of action (Pape and Graham, 2010). Informal and opportunistic working styles should indeed be promoted (Gutwin et al., 2008); at the same time, the role of the system is to support the building of a common vision, or so-called “mutual awareness” (Kraut et al., 2003). Physical co-presence provides multiple resources for awareness and conversational grounding; these resources have to be complemented in the case of distant communication. Tangible interaction occupies a specific niche in this respect, since tangible objects may be seen as full-fledged resources for situating action (Shaer and Hornecker, 2010). Communication is then grounded in the objects of the working space, and some of these objects may be designed to support action coordination and elicitation. Visual information then becomes a conversational resource that helps maintain mutual awareness (Kraut et al., 2003). Beyond conversation, perceiving the other’s activity may be approached from the viewpoint of the other’s social embodiment, that is, by considering the constraints and rules that shape individual activity (Erickson and Kellogg, 2003). These issues were discussed in more depth in a previous paper (Garbay et al., 2012). There, we proposed in particular the introduction of tangigets, tangible objects aimed at supporting distant coordination, and “norms”, declarative rules aimed at representing social laws and conventions and governing the processing of tangible object moves.

Managing human activities in distributed environments requires the adoption of complex, emergent and adaptive system designs, where flexibility, reconfigurability and responsiveness play crucial roles (Millot and Mandiau, 1995). Various architecture models have been proposed in this respect. As noted in (Kolski et al., 2009), these models have been largely inspired by interactive system architectures. Among these, CoPAC, PAC* and CLOVER (Laurillau and Nigay, 2002) distinguish between production, communication and coordination spaces. This distinction is of interest to our work, since there is a need to (i) ensure the follow-up of distant object moves, (ii) ensure the follow-up of the state of collaboration and (iii) provide feedback about these moves.

2.2 Normative Multiagent Systems

The goal of normative multiagent systems is to model cooperation and coordination from a social perspective. In such systems, norms drive agents toward “proper and acceptable behavior” and define “a principle of right action binding upon the members of a group” (Boella et al., 2007). These norms are usually represented as production rules of the form: “whenever ⟨context⟩ if ⟨state⟩ then ⟨agent⟩ is ⟨deontic operator⟩ to do ⟨action⟩” (Boella et al., 2007). Specific to this style of programming is the fact that agents autonomously commit to obeying the norms, in a way specified by the deontic operator. Any agent may however decide not to follow a norm, which may result in penalties. Normative agent architectures are very often implemented on top of the belief, desire, and intention (BDI) paradigm, with norms seen as external incentives for action (Dignum et al., 2002). Norms are triggered by a dedicated engine and result in agent notifications. Another specificity of this modeling is that norms may evolve along the course of action. This

ICAART2014-InternationalConferenceonAgentsandArtificialIntelligence


may prove necessary in large, open organizations, where subgroups often exhibit different and sometimes conflicting sets of norms. Agents in such organizations may need to join and leave the system freely, or to move from one group to another: mechanisms must then be provided so that they can recognize and acquire local sets of norms (Hollander and Wu, 2011). (Boella et al., 2007) distinguishes between two complementary dynamics: that of the social rules, and that of the environment or physical laws, which may evolve in response to changing circumstances.

Such designs have already been promoted in the field of CSCW. (Rong and Liu, 2006) propose a distinction between Local agents (individual partners), Super agents (monitoring local agent actions and providing feedback so that local norms may evolve), Interactive agents (creating connections between agents based on their abilities) and Cooperation agents (monitoring agent organizations). This work is based on the EDA agent model (Filipe, 2000), in which several types of norms with different semiotic levels are distinguished (perceptual, cognitive, behavioral and evaluative). In a previous paper (Badeig et al., 2012), we proposed an approach centered on the notion of norm awareness, with situated agents sharing a common multidimensional trace reflecting conformity to the norms.
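To make the production-rule form quoted above more concrete, the following Python sketch encodes a norm as a (context, state, agent, deontic operator, action) record and checks agent behavior against it after the fact. It is an illustration under assumptions, not an implementation taken from any of the cited works: the class and function names, the three operators, the fixed penalty and the RISK-flavored action names are all hypothetical.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Callable, Dict, List, Tuple


class Deontic(Enum):
    OBLIGED = "obliged"
    PERMITTED = "permitted"
    FORBIDDEN = "forbidden"


@dataclass
class Norm:
    # whenever <context> if <state> then <agent> is <operator> to do <action>
    context: str
    state: Callable[[Dict], bool]
    agent: str
    operator: Deontic
    action: str
    penalty: int = 1  # hypothetical cost attached to a violation


def applicable(norms: List[Norm], context: str, world: Dict) -> List[Norm]:
    """Norms whose context matches and whose state condition holds."""
    return [n for n in norms if n.context == context and n.state(world)]


def violations(norms: List[Norm], context: str, world: Dict,
               done: Dict[str, List[str]]) -> List[Tuple[str, str, int]]:
    """Compare what each agent did with the applicable norms.

    Agents remain autonomous: nothing prevents an action; deviations are
    only detected afterwards and mapped to a penalty.
    """
    found = []
    for n in applicable(norms, context, world):
        acted = n.action in done.get(n.agent, [])
        if (n.operator is Deontic.OBLIGED and not acted) or \
           (n.operator is Deontic.FORBIDDEN and acted):
            found.append((n.agent, n.action, n.penalty))
    return found


# Hypothetical example: during red's turn, red must designate territories
# and blue must not move armies.
example_norms = [
    Norm("attack_turn", lambda w: w["turn"] == "red", "red",
         Deontic.OBLIGED, "designate_territories"),
    Norm("attack_turn", lambda w: w["turn"] == "red", "blue",
         Deontic.FORBIDDEN, "move_army"),
]
print(violations(example_norms, "attack_turn", {"turn": "red"},
                 {"blue": ["move_army"]}))
# [('red', 'designate_territories', 1), ('blue', 'move_army', 1)]
```

In this sketch, permissions are recorded but never yield a violation; only unmet obligations and performed prohibited actions are reported.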

3 PROPOSITION

3.1 Proposed View

Our approach to distant collaboration support is cen-

tered on the notion of norm awareness: core to hu-

man collaborative work is the fact that people may

not share the rules and conventions their frame their

activity, be it because they belong to different orga-

nization, or because they behave as individual peo-

ple, and shows for example a tendency to prioritize

some rules over others. We promote a multi-agent de-

sign, to cope with the distributed nature of the tangi-

ble surfaces, with the potential complexity of the task

to be handled and with the potential heterogeneity of

the human organizations in front. We further model

collaboration as a process coupling production, com-

munication and coordination spaces, according to the

CLOVER approach for groupware design (Laurillau

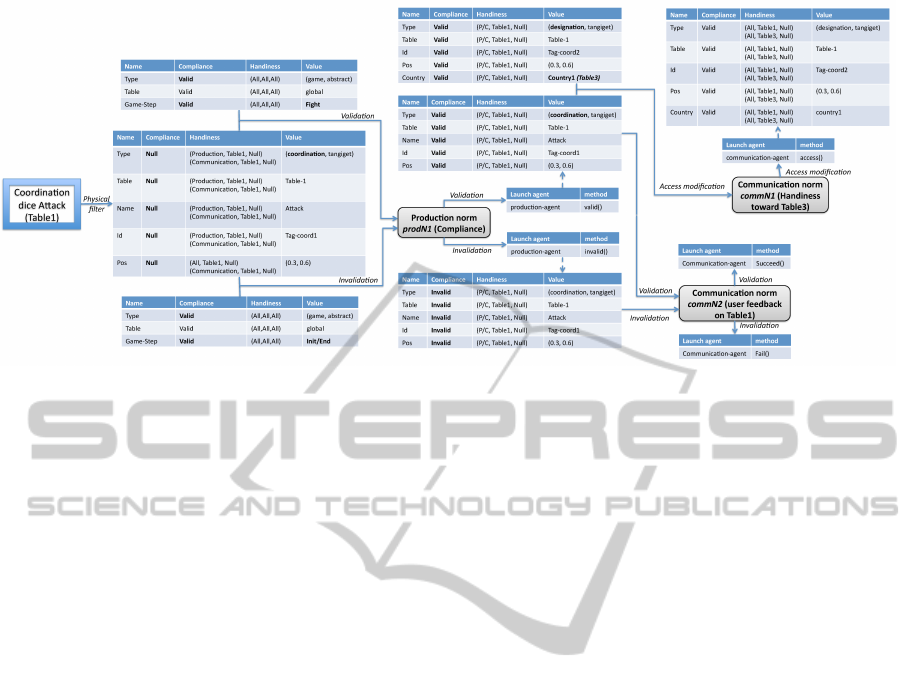

and Nigay, 2002). As illustrated in Figure 2, each

space is populated with agents and norms of different

types: events from the tangible surface are processed

within the production space, virtual feedback are op-

erated from the communication space and norms are

evolved within the coordination space. The agents

Figure 2: Functional view showing the various types of

agents, filters and traces.

are situated within a multidimensional trace reflect-

ing the evolution of human activity and its confor-

mity to the norms under consideration. In a dual per-

spective, human activity is situated in the space of the

tangible surface. Virtual feedback is provided to re-

flect distant activity as well as compliance of a tangi-

ble object move to the norm. This feedback may be

considered as incentive for the co-evolution and im-

proved coordination of actions among collaborating

partners. The role of the norms, defined as condition-

action rules, is to regulate the system’s activity in each

of the production, communication and coordination

spaces, by checking the conformance of the activity

and shaping agent processing in a context sensitive

way. Coordination agents are provided with the abili-

ties to update the set of norms, to account for contex-

tual specificities (major collaboration steps or critical

events detection, for example). Human and artificial

agents are responsible for the activity dynamics while

norms are responsible for the regulation of this dy-

namics. Traces evolve jointly under the asynchronous

and concurrent action of human and artificial agents.

3.2 Formal Definition of the System Components

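The system architecture involves four working spaces, namely the environment, the trace space $\mathit{space}_t$, the agent space $\mathit{space}_a$ and the norm space $\mathit{space}_n$:

$$\mathit{system} = \langle \mathit{environment}, \mathit{space}_t, \mathit{space}_a, \mathit{space}_n \rangle \tag{1}$$

$$\mathit{environment} = \{\mathit{tang}_i, \mathit{virt}_j \mid \forall i, j\} \tag{2}$$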

The environment is a physical space made of tangible and virtual objects. It is open to human actors, subject to certain constraints and rights. The management

SupportingDistantHumanCollaborationunderTangibleEnvironments-ANormativeMultiagentApproach


by the system of these objects gives rise to numerical traces, which constitute a numerical space. This space is open to artificial actors (the agents), subject to other constraints and rights. These constraints and rights depend on the organizational specification of the system, in which each agent plays different roles depending on its type (typically, updating the current normative policy for coordination agents) and belongs to different groups (we typically consider that the agents working on a table constitute a group). The state of the environment at a given time is specified by a subset of the trace space. Core to our design is the management of the rights and constraints over physical and numerical information processing. To account for this specificity of our approach, we model a trace as a tuple of (property, value) pairs, with properties typed to register their compliance and handiness:

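$$\mathit{space}_t = \{\mathit{trace}_i \mid \forall i\} \tag{3}$$

$$\mathit{trace} = \{(p, v)\} \quad \text{where} \quad p = \langle \mathit{name} : \mathit{compliance} \in \{\mathit{valid}, \mathit{invalid}\} : \mathit{handiness} \in \{(\mathit{type}, \mathit{group}, \mathit{role})\} \rangle \tag{4}$$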
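One possible reading of Equations (3) and (4) as a data structure is sketched below in Python. It assumes that each property carries a single handiness tuple, that None plays the role of Null (no restriction on that component), and that a trace records the table it was created on; the names Property, Trace, put and readable_by, as well as the role names used in the example, are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, Optional, Tuple

# handiness = (type, group, role); None stands for Null, i.e. "no restriction"
Handiness = Tuple[Optional[str], Optional[str], Optional[str]]


@dataclass
class Property:
    value: Any
    compliance: str = "invalid"  # "valid" or "invalid" with respect to the norms
    handiness: Handiness = (None, None, None)


@dataclass
class Trace:
    table: str  # hypothetical field: id of the table where the trace was created
    props: Dict[str, Property] = field(default_factory=dict)

    def put(self, name: str, value: Any) -> None:
        # New properties start non-compliant and restricted to the local
        # table group, i.e. handiness (Null, <idTable>, Null).
        self.props[name] = Property(value, "invalid", (None, self.table, None))

    def readable_by(self, name: str, agent_type: str, group: str, role: str) -> bool:
        # Every non-Null component of the handiness tuple must match the agent.
        allowed = self.props[name].handiness
        wanted = (agent_type, group, role)
        return all(a is None or a == w for a, w in zip(allowed, wanted))


t = Trace("Table1")
t.put("name", "attack")
print(t.readable_by("name", "production", "Table1", "synchro"))     # True
print(t.readable_by("name", "communication", "Table3", "display"))  # False
# Once validated, the property can be opened to every group.
t.props["name"].compliance = "valid"
t.props["name"].handiness = (None, None, None)
print(t.readable_by("name", "communication", "Table3", "display"))  # True
```

Encoding Null as a wildcard keeps the access check to a single component-wise comparison, which matches the idea that handiness only restricts the components it actually specifies.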

The compliance of a property may be typed as valid (or invalid) to express the fact that the given property is compliant (or not) with the norms at hand. Various access rights may in addition be specified through the handiness field. The tuple (type, group, role) in this field restricts access to property p to agents of a given type (production, communication or coordination), group and role in the system. Newly created traces are defined as non-compliant, with access restricted to the local group, i.e., (Null, <idTable>, Null).

We distinguish between three types of agents: production, communication and coordination. Communication agents ensure the communication between the human and artificial actors that constitute the socio-technical system at hand: they follow up incoming events from the tangible surface and update the numerical trace accordingly; conversely, they exploit the numerical trace properties, depending on their compliance and handiness values, to build virtual feedback for human actors. The role of production agents is to build an understanding of the moves at hand, accounting for the various constraints surrounding human and agent activity, and to enrich the trace properties accordingly. Coordination agents manage the norms under which human and artificial agent activity has to take place. More precisely, their role is to update the system of norms to account for potential changes in the state of activity and the stage of collaboration. The agent space is expressed as follows:

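$$\mathit{space}_a = \{\mathit{prod}_{ag_i}, \mathit{comm}_{ag_j}, \mathit{coor}_{ag_k} \mid \forall i, j, k\} \tag{5}$$

$$Ag = \langle \mathit{id}, (\mathit{group}, \mathit{role})^{+}, \mathit{behaviors}, \mathit{norms} \rangle \tag{6}$$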

with id a unique identifier, $(\mathit{group}, \mathit{role})^{+}$ one or more pairs giving the role of the agent in a specific group of the system, behaviors a list of concurrent agent abilities, and norms the set of norms that the agent has to follow. Agents subscribe to norms depending on their group, role, type, abilities and current context. We distinguish between three kinds of norms. Communication norms are dedicated to the formalization of human-to-human, human-to-agent and agent-to-agent access rights. Production norms are dedicated to the formalization of domain- and task-dependent constraints. Coordination norms are dedicated to the formalization of the overall evolution of task requirements. The role of these norms is to launch the agents in a situated way, with respect to these constraints. The space of norms is expressed as follows:

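$$\mathit{space}_n = \{\mathit{prod}_{N_i}, \mathit{comm}_{N_j}, \mathit{coor}_{N_k} \mid \forall i, j, k\} \tag{7}$$

$$N = \langle \mathit{id}, \mathit{context}, \mathit{group}, \mathit{role}, \mathit{object} \rangle \tag{8}$$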

in which context represents an overall evaluation condition (current processing or activity stage, current system state or actors’ situation), group and role designate the group and the role of the agents concerned by this norm, and object is a complex field, typically written as launch(conditions, actions), characterizing the conditional action attached to the norm (the launching of an agent behavior).
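Equations (5) to (8) can be sketched in the same spirit. In the Python code below, an agent keeps only the norms addressed to one of its (group, role) pairs and naming a behavior it has, and it launches that behavior when the norm's context is current and its conditions hold on the traces. These subscription and triggering rules are simplifying assumptions (the agent type is recorded but not used for filtering), and every identifier, including the norm n1, the role checker and the behavior mark_valid, is hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Set, Tuple

Traces = List[Dict[str, object]]  # simplified trace space: one property dict per trace


@dataclass
class Norm:
    id: str
    context: str                          # e.g. "step=attack"
    group: str
    role: str
    conditions: Callable[[Traces], bool]  # first argument of launch(conditions, actions)
    action: str                           # name of the behavior to launch


@dataclass
class Agent:
    id: str
    kind: str                             # "production", "communication" or "coordination"
    memberships: Set[Tuple[str, str]]     # the (group, role)+ field
    behaviors: Dict[str, Callable[[Traces], None]]
    norms: List[Norm] = field(default_factory=list)

    def subscribe(self, candidates: List[Norm]) -> None:
        # Keep the norms addressed to one of the agent's (group, role) pairs
        # and whose action the agent is actually able to perform.
        self.norms = [n for n in candidates
                      if (n.group, n.role) in self.memberships
                      and n.action in self.behaviors]

    def step(self, context: str, traces: Traces) -> List[str]:
        """Launch each behavior whose norm is active in this context and whose conditions hold."""
        launched = []
        for n in self.norms:
            if n.context == context and n.conditions(traces):
                self.behaviors[n.action](traces)
                launched.append(n.action)
        return launched


def mark_valid(traces: Traces) -> None:
    # Hypothetical production behavior: declare every trace compliant.
    for t in traces:
        t["compliance"] = "valid"


norm = Norm("n1", "step=attack", "Table1", "checker",
            lambda ts: any(t.get("type") == "designation" for t in ts),
            "mark_valid")
agent = Agent("ag1", "production", {("Table1", "checker")}, {"mark_valid": mark_valid})
agent.subscribe([norm])
traces = [{"type": "designation", "compliance": "invalid"}]
print(agent.step("step=attack", traces), traces)
# ['mark_valid'] [{'type': 'designation', 'compliance': 'valid'}]
```

Keeping behaviors as named callables lets the object field of a norm refer to a behavior by name, so norms can be replaced at run time without touching the agent's code.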

4 RISK APPLICATION

The purpose of this short example is to illustrate the expressiveness of the proposed approach and some of its coordination mechanisms. The system operates according to the following information flow: (1) early detection of a tangible object move by communication norms operating at the infrastructure level, with creation or update of the corresponding local trace; (2) triggering of the coordination norms: update of the current set of norms (when necessary); (3) triggering of the production norms: computation of further trace properties; (4) triggering of the communication norms: feedback to local and distant human actors. When the game starts, a default norm policy is activated to handle the game initialization process. The attacking state is reached as soon as the corresponding coordination tangiget is placed on a table. A designation tangiget may then be handled. A new norm policy must be applied to deal with this state. A coordination norm, called $\mathit{Norm}_{attack}$, detects this context and


Figure 3: Compliance and Handiness process in a specific phase of the RISK game.

launches a coordination agent (role synchro) to update

the norm policy for the attacking and attacked players:

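$$\mathit{Norm}_{attack} = \langle \mathit{id}, [\mathit{step} = \mathit{fight}], \mathit{synchro}, \mathit{launch}(\mathit{cond}, \mathit{manage}_{normpolicy}) \rangle \quad \text{with} \tag{9}$$

$$
\begin{aligned}
\mathit{cond} = {} & [\mathit{trace.type}(?t1) = \mathit{coordination}] \\
& \wedge [\mathit{trace.table}(?t1) = ?\mathit{tab1}] \\
& \wedge [\mathit{trace.name}(?t1) = \mathit{attack}] \\
& \wedge [\mathit{trace.type}(?t2) = \mathit{designation}] \\
& \wedge [\mathit{trace.table}(?t2) = ?\mathit{tab1}] \\
& \wedge [\mathit{trace.country}(?t2) = ?c]
\end{aligned}
\tag{10}
$$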
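The condition of Equation (10) is a conjunctive pattern whose variables (?t1, ?t2, ?tab1, ?c) must be bound consistently across clauses. A naive evaluator simply enumerates ordered pairs of traces, as in the Python sketch below. Representing traces as flat dictionaries with type, name, table and country keys is an assumption, the function names are hypothetical, and a real rule engine would rely on a more efficient, incremental matching algorithm.

```python
from itertools import permutations
from typing import Dict, List, Optional

Trace = Dict[str, str]


def match_attack(traces: List[Trace]) -> Optional[Dict[str, str]]:
    """Return bindings for ?tab1 and ?c satisfying cond in Equation (10), or None."""
    for t1, t2 in permutations(traces, 2):
        tab1 = t1.get("table")
        if (t1.get("type") == "coordination"
                and t1.get("name") == "attack"
                and tab1 is not None
                and t2.get("type") == "designation"
                and t2.get("table") == tab1          # ?tab1 is shared by ?t1 and ?t2
                and t2.get("country") is not None):  # binds ?c
            return {"?tab1": tab1, "?c": t2["country"]}
    return None


def norm_attack(step: str, traces: List[Trace]) -> Optional[str]:
    """Context [step = fight] plus cond: name of the behavior to launch, if any."""
    if step == "fight" and match_attack(traces) is not None:
        return "manage_normpolicy"
    return None


traces = [
    {"type": "coordination", "name": "attack", "table": "Table1"},
    {"type": "designation", "table": "Table1", "country": "Brazil"},
]
print(match_attack(traces))          # {'?tab1': 'Table1', '?c': 'Brazil'}
print(norm_attack("fight", traces))  # manage_normpolicy
```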

At the end of an attack, each player rolls a die to determine the winner and the loser for this phase. A production norm, called $\mathit{Norm}_{dice\_result}$, follows up the dice roll results, determines the winner and the loser, and launches an agent (role $\mathit{dice\_result}$) whose task is to update the traces accordingly:

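$$\mathit{Norm}_{dice\_result} = \langle \mathit{id}, [\mathit{step} = \mathit{fight}], \mathit{dice\_result}, \mathit{launch}(\mathit{cond}, \mathit{win}) \rangle \quad \text{with} \tag{11}$$

$$
\begin{aligned}
\mathit{cond} = {} & [\mathit{trace.type}(?t1) = \mathit{dice}] \\
& \wedge [\mathit{trace.value}(?t1) = ?v1] \\
& \wedge [\mathit{trace.table}(?t1) = ?x] \\
& \wedge [\mathit{trace.type}(?t2) = \mathit{dice}] \\
& \wedge [\mathit{trace.value}(?t2) = ?v2] \\
& \wedge [\mathit{trace.table}(?t2) \neq ?x] \wedge [?v1 > ?v2]
\end{aligned}
\tag{12}
$$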
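Equation (12) can be evaluated the same way. The Python sketch below assumes one dice trace per table with an integer value and returns the winning and losing tables as soon as a strictly higher roll is found on one table; with equal rolls, neither ordering satisfies ?v1 > ?v2, so no binding is produced. The function and key names are hypothetical.

```python
from itertools import permutations
from typing import Dict, List, Optional, Tuple


def match_dice(traces: List[Dict]) -> Optional[Tuple[str, str]]:
    """Return (winner_table, loser_table) if cond in Equation (12) holds, else None."""
    dice = [t for t in traces if t.get("type") == "dice"]
    for t1, t2 in permutations(dice, 2):
        # ?x is the table of ?t1; ?t2 must come from another table, and ?v1 > ?v2.
        if t1["table"] != t2["table"] and t1["value"] > t2["value"]:
            return t1["table"], t2["table"]
    return None


rolls = [
    {"type": "dice", "table": "Table1", "value": 5},
    {"type": "dice", "table": "Table3", "value": 2},
    {"type": "designation", "table": "Table1", "country": "Brazil"},
]
print(match_dice(rolls))  # ('Table1', 'Table3')
```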

Figures 3 and 4 further depict the evolution of the trace, depending on the mutual action of agents and norms, during the attacking phase. Handling the coordination tangigets on Table 1 and then designating an attacked territory belonging to Table 3 modifies the trace, which triggers the successive management of its compliance and handiness properties through the production and communication norms prodN1 and commN1. This update triggers a communication norm, commN2, which results in virtual feedback to the local user operating on Table 1. A new stage of the game (the fighting stage) must now be entered, which means that some norms have to be updated for the attacking and attacked players on Table 1 and Table 3. This process is divided into two steps, as illustrated by Figure 4. The first step is the modification of the global trace of the game (reachable by all agents) by a coordination agent working at the global group level. This coordination agent specifies the new norm policy in the policy field of the global trace; it is launched by the coordination norm coordGlobal. The second step is the modification of the norms for the attacking and attacked players, on Table 1 and Table 3 respectively. This is performed by the coordination norm coordT1 (resp. coordT3), whose role is to launch a coordination agent on the attacking (resp. attacked) table to update the local norm policy as specified in the policy field of the global game trace.
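The two-step policy update described above can be sketched as follows. The Python code assumes that the policy field of the global trace maps each table name to a list of norm identifiers; the norm identifiers, the extra Table2, and the function names coord_global and coord_local (standing for the agents launched by coordGlobal and by coordT1/coordT3) are hypothetical.

```python
from typing import Dict, List


class GlobalTrace:
    """Game trace reachable by all agents; 'policy' maps a table to norm ids (assumed layout)."""

    def __init__(self) -> None:
        self.policy: Dict[str, List[str]] = {}


class Table:
    def __init__(self, name: str, norms: List[str]) -> None:
        self.name = name
        self.norms = list(norms)  # local norm policy currently in force


def coord_global(gtrace: GlobalTrace, attacker: Table, defender: Table) -> None:
    # Step 1 (agent launched by coordGlobal): write the fighting-stage policy
    # into the policy field of the global trace. Norm ids are hypothetical.
    gtrace.policy = {
        attacker.name: ["roll_dice", "dice_result"],
        defender.name: ["roll_dice"],
    }


def coord_local(gtrace: GlobalTrace, table: Table) -> None:
    # Step 2 (agents launched by coordT1 / coordT3): copy the policy entry
    # for this table into its local norms; tables without an entry keep theirs.
    if table.name in gtrace.policy:
        table.norms = list(gtrace.policy[table.name])


g = GlobalTrace()
t1, t2, t3 = Table("Table1", ["designate"]), Table("Table2", ["wait"]), Table("Table3", ["wait"])
coord_global(g, t1, t3)
for table in (t1, t2, t3):
    coord_local(g, table)
print(t1.norms, t2.norms, t3.norms)
# ['roll_dice', 'dice_result'] ['wait'] ['roll_dice']
```

Because each local agent copies the list, later local changes to a table's norms do not alter the global policy, which keeps the global trace as the single reference for the current stage.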

5 CONCLUSIONS

In this paper, we presented a new architecture based on normative multiagent systems to support collaborative work over distant tangible surfaces. The purpose of our design, illustrated on a simple scenario from the RISK game, is to support the representation and sharing of the systems of norms that frame human collaboration. To this end, we have proposed an architecture inspired by the CLOVER approach to groupware design and involving production, communication and coordination agents. These agents are designed to share a common multidimensional trace reflecting the activity of human as well as artificial actors. Their role, under the control of dedicated norms, is to ensure the compliance, handiness and proper coordination of activity. These properties are reflected (i) in the numerical trace and (ii) over the physical surface by means of virtual display, to ensure mutual awareness between the worlds of human and artificial actors. Further work would involve considering more complex scenarios, i.e., more complex activities and styles of human organization.

Figure 4: Norm dynamics in a specific phase of the RISK game.

ACKNOWLEDGEMENTS

This work was done while F. Badeig was a member of the LIG/AMA team and was supported by the Agence Nationale de la Recherche under grant IMAGIT (ANR-10-CORD-0017).

REFERENCES

Badeig, F., Garbay, C., Valls, V., and Caelen, J. (2012). Normative approach for socio-physical computing - an application to distributed tangible interaction. In ICAART, pages 309–312.

Boella, G., van der Torre, L. V. N., and Verhagen, H. (2007). Introduction to normative multiagent systems. In Normative Multi-agent Systems.

Dignum, V., Meyer, J.-J., and Weigand, H. (2002). Towards an organizational model for agent societies using contracts. In AAMAS, pages 694–695.

Erickson, T. and Kellogg, W. (2003). Social translucence: Using minimalist visualizations of social activity to support collective interaction. In Höök, K., Benyon, D., and Munroe, A., editors, Designing Information Spaces: The Social Navigation Approach, pages 17–41.

Filipe, J. (2000). An organizational semiotics model for multi-agent systems design (short paper). In EKAW, pages 449–456.

Garbay, C., Badeig, F., and Caelen, J. (2012). Normative multi-agent approach to support collaborative work in distributed tangible environments. In CSCW (Companion), pages 83–86.

Gutwin, C., Greenberg, S., Blum, R., Dyck, J., Tee, K., and McEwan, G. (2008). Supporting informal collaboration in shared-workspace groupware. Journal of Universal Computer Science, 14(9):1411–1434.

Hollander, C. D. and Wu, A. S. (2011). The current state of normative agent-based systems. Journal of Artificial Societies and Social Simulation, 14(2):6.

Kolski, C., Forbrig, P., David, B., Girard, P., Tran, C. D., and Ezzedine, H. (2009). Agent-based architecture for interactive system design: Current approaches, perspectives and evaluation. In HCI, pages 624–633.

Kraut, R. E., Fussell, R., and Siegel, J. (2003). Visual information as a conversational resource in collaborative physical tasks. In HCI, pages 13–49.

Laurillau, Y. and Nigay, L. (2002). Clover architecture for groupware. In CSCW, pages 236–245.

Lepreux, S., Kubicki, S., Kolski, C., and Caelen, J. (2011). Distributed interactive surfaces using tangible and virtual objects. In Workshop Distributed User Interfaces, pages 65–68.

Millot, P. and Mandiau, R. (1995). Man-machine cooperative organizations: formal and pragmatic implementation methods. In Hoc, J.-M., Cacciabue, P. C., and Hollnagel, E., editors, Expertise and technology, pages 213–228. L. Erlbaum Associates Inc.

Pape, J. and Graham, T. (2010). Coordination policies for tabletop gaming. In Graphics Interface, pages 24–25.

Rong, W. and Liu, K. (2006). A multi-agent architecture for CSCW systems: from organizational semiotics perspective. In PRIMA, pages 766–772.

Shaer, O. and Hornecker, E. (2010). Tangible user interfaces: Past, present and future directions. In HCI, pages 1–138.
