Detection of Semantic Relationships between Terms with a New

Statistical Method

Nesrine Ksentini, Mohamed Tmar and Fa¨ıez Gargouri

MIRACL: Multimedia, InfoRmation Systems and Advanced Computing Laboratory

University of Sfax, Higher Institute of Computer Science and Multimedia of Sfax, Sfax, Tunisia

Keywords:

Semantic Relatedness, Least Square Method, Information Retrieval, Query Expansion.

Abstract:

Semantic relatedness between terms plays an important role in many applications, such as information re-

trieval, in order to disambiguate document content. This latter is generally studied among pairs of terms and is

usually presented in a non-linear way. This paper presents a new statistical method for detecting relationships

between terms called Least Square Mehod which defines these relations linear and between a set of terms.

The evaluation of the proposed method has led to optimal results with low error rate and meaningful relation-

ships. Experimental results show that the use of these relationships in query expansion process improves the

retrieval results.

1 INTRODUCTION

With the increasing volume of textual data on the in-

ternet, effective access to semantic information be-

comes an important problem in information retrieval

and other related domains such as natural language

processing, Text Entailment and Information Extrac-

tion.

Measuring similarity and relatedness between

terms in the corpus becomes decisive in order to im-

prove search results (Agirre et al., 2009). Earlier ap-

proaches that have been investigating the latter idea

can be classiffied into two main categories: those

based on pre-available knowledge (ontology such as

wordnet, thesauri, etc) (Agirre et al., 2010) and those

inducing statistical methods (Sahami and Heilman,

2006),(Ruiz-Casado et al., 2005).

WordNet is a lexical database developed by lin-

guists in the Cognitive Science Laboratory at Prince-

ton University (Hearst, 1998). Its purpose is to iden-

tify, classify and relate in various ways the semantic

and lexical content of the English language. WordNet

versions for other languages exist, but the English ver-

sion, however, is the most comprehensive to date.

Information in wordnet ;such as nouns, adjectives,

verbs and adverbs; is grouped into synonyms sets

called synsets. Each group expresses a distinct con-

cept and it is interlinked with lexical and conceptual-

semantic relations such as meronymy, hypernymy,

causality, etc.

We represent WordNet as a graph G = (V, E) as

follows: graph nodes represent WordNet concepts

(synsets) and dictionary words; undirected edges rep-

resent relations among synsets; and directed edges

represent relations between dictionary words and

synsets associated to them. Given a pair of words

and a graph of related concepts, wordnet computes in

the first time the personalized PageRank over Word-

Net for each word, giving a probability distribution

over WordNet synsets. Then, it compares how similar

these two probability distributions are by presenting

them as vectors and computing the cosine between the

vectors (Agirre et al., 2009).

For the second category, many previous studies

used search engine collect co-occurrence between

terms. In (Turney, 2001), author calculate the Point-

wise Mutual Information (PMI) indicator of syn-

onymy between terms by using the number of re-

turned results by a web search engine.

In (Sahami and Heilman, 2006), the authors pro-

posed a new method for calculating semantic similar-

ity. They collected snippets from the returned results

by a search engine and presented each of them as a

vector. The semantic similarity is calculated as the

inner product between the centroids of the vectors.

Another method to calculate the similarity of two

words was presented by (Ruiz-Casado et al., 2005)

it collected snippets containing the first word from a

Web search engine, extracted a context around it, re-

placed it with the second word and checked if context

340

Ksentini N., Tmar M. and Gargouri F..

Detection of Semantic Relationships between Terms with a New Statistical Method.

DOI: 10.5220/0004960403400343

In Proceedings of the 10th International Conference on Web Information Systems and Technologies (WEBIST-2014), pages 340-343

ISBN: 978-989-758-024-6

Copyright

c

2014 SCITEPRESS (Science and Technology Publications, Lda.)

is modified in the Web.

However, all these methods measure relatedness

between terms in pairs and cannot express them in a

linear way. In this paper, we propose a new method

which defines linear relations between a set of terms

in a corpus based on their weights.

The paper is organized as follows, section 2 is de-

voted to detailing the proposed method followed by

the evaluation in section 3. Finally, section 4 draws

the conclusions and outlines future works.

2 PROPOSED METHOD

Our method is based on the extraction of relation-

ships between terms (t

1

,t

2

, ··· ,t

n

) in a corpus of doc-

uments. Indeed, we try to find a linear relationship

that may possibly exist between them with the fol-

lowing form:

t

i

= f(t

1

,t

2

, ··· ,t

i−1

,t

i+1

, ··· ,t

n

) (1)

Least square method (Abdi., 2007), (Miller, 2006) is a

frequently used method for solving this kind of prob-

lems in an approximateway. It requires some calculus

and linear algebra.

In fact, this method seeks to highlight the connection

being able to exist between an explained variable (y)

and explanatory variables (x). It is a procedure to find

the best fit line (y = ax+ b) to the data given that the

pairs (x

i

, y

i

) are observed for i ∈ 1, ·· · , n.

The goal of this method is to find values of a and

b that minimize the associated error (Err).

Err =

n

∑

i=1

(y

i

− (ax

i

+ b))

2

(2)

Using a matrix form for the n pairs (x

i

, y

i

):

A = (X

T

× X)

−1

× X

T

×Y (3)

where A represents vector of values (a

1

, a

2

, ··· , a

n

)

and X represents the coordinate matrix of n pairs.

In our case, let term (t

i

) the explained vari-

able and the remaining terms of the corpus

(t

1

,t

2

, ··· ,t

i−1

,t

i+1

, ··· ,t

n

) the explanatory variables.

We are interesting in the linear models; the relation

between these variables is done by the following:

t

i

≈ α

1

t

1

+ α

2

t

2

+ · ··+ α

i−1

t

i−1

+ α

i+1

t

i+1

+·· · + α

n

t

n

+ ε =

∑

i−1

j=1

(α

j

t

j

) +

∑

n

j=i+1

(α

j

t

j

) + ε

(4)

Where α are real coefficients of the model and present

the weights of relationships between terms and ε rep-

resents the associated error of the relation.

We are looking for a model which enables us to

obtain an exact solution for this problem.

Therefore, we proceed to calculate this relation for

each document in the collection and define after that

the final relationship between these terms in the whole

collection. For that, m measurements are made for

the explained and the explanatory variables to calcu-

late the appropriate α

1

, α

2

, ··· , α

n

with m represent

the number of documents in the collection.

t

1

i

≈ α

1

.t

1

1

+ α

2

.t

1

2

+ · ··+ α

n

.t

1

n

t

2

i

≈ α

1

.t

2

1

+ α

2

.t

2

2

+ · ··+ α

n

.t

2

n

.

.

.

t

m

i

≈ α

1

.t

m

1

+ α

2

.t

m

2

+ · ··+ α

n

.t

m

n

(5)

Where t

j

i

is the Tf-Idf weight of term i in document j.

By using the matrix notations the system becomes:

t

1

i

t

2

i

.

.

.

t

m

i

|

{z }

t

i

≈

t

1

1

t

1

2

··· t

1

n

t

2

1

t

2

2

··· t

2

n

.

.

.

.

.

.

.

.

.

.

.

.

t

m

1

t

m

2

··· t

m

n

|

{z }

X

×

α

1

α

2

.

.

.

α

n

|

{z }

A

(6)

where X is a TF-IDF (Term Frequency-Inverse Doc-

ument Frequency) matrix whose rows represent the

documents and columns represent the indexing terms

(lemmas).

Thus, we seek A = (α

1

, ··· , α

n

) such as X ×A is more

near possible to t

i

. Rather than solving this system of

equations exactly, least square method tries to reduce

the sum of the squares of the residuals. Indeed, it tries

to obtain a low associated error (Err) for each relation.

We notice that the concept of distance appears.

We expect that d(X × A,t

i

)is minimal , which is writ-

ten:

min || X × A − t

i

|| (7)

To determine the vector A for each term in a corpus,

we applied the least square method on the matrix X

for each one.

∀i = 1, . . . , n.

A

i

= (X

iT

× X

i

)

−1

× X

T

[i, .] × t

i

(8)

Where X

i

is obtained by removing the row of the

term

i

in matrix X and n is the number of terms in a

corpus.

X

T

[i, .] represents the transpose of the line weight of

term

i

in all documents.

3 EXPERIMENTS

In this paper, we use our method to improve informa-

DetectionofSemanticRelationshipsbetweenTermswithaNewStatisticalMethod

341

tion retrieval performance, mainly, by detecting rela-

tionships between terms in a corpus of documents.

We focus on the application of the least square

method on a corpus of textual data in order to achieve

expressive semantic relationships between terms.

In order to check the validity and the performance

of our method, an experimental procedure was set up.

The evaluation is then based on a comparison of

the list of documents retrieved by a developed infor-

mation retrieval system and the documents deemed

relevant.

To evaluate within a framework of real exam-

ples, we have resorted to a textual database, of

1400 documents, called Cranfield collection (Ahram,

2008)(Sanderson, 2010). This collection of tests in-

cludes a set of documents, a set of queries and the list

of relevant documents in the collection for each query.

For each document of the collection, we proceed

a handling and an analysis in order to lead it to lem-

mas which will be the index terms. Once the doc-

uments are each presented with a bag of words, we

have reached by a set of 4300 terms in the whole col-

lection. Hence, matrix X is sized 1400 ∗ 4300. After

that, we applied on it the least square method for each

term in order to determine the vector A for each one.

The obtained values A

i

indicate the relationship be-

tween term

i

and the remaining terms in the corpus.

We obtain another square matrix T with 4300 lines

expressing the semantic relationships between terms

as follows:

∀i ∈ 1, 2, .. . ,4300,∀ j ∈ 1, 2, .. . ,4300

term

i

=

∑

(T[i, j].term

j

) (9)

Example of obtained semantic relationships:

Term airborn = 0.279083 action + 0.222742 air-

forc + 0.221645 alon + 0.259213 analogu + 0.278371

assum + 0.275861 attempt + 0.210211 behaviour +

0.317462 cantilev + 0.215479 carrier + 0.277437

centr + 0.216453 chapman + 0.22567 character +

0.23094 conecylind + 0.347057 connect + 0.239277

contact + 0.225988 contrari + 0.217225 depth +

0.283544 drawn + 0.204302 eighth + 0.26399 ellip-

soid + 0.312026 fact + 0.252312 ferri + 0.211903

glauert + 0.230067 grasshof + 0.223152 histori +

0.28336 hovercraft + 0.380206 inch + 0.238555 in-

elast + 0.205513 intermedi + 0.275635 interpret +

0.235573 interv + 0.216454 ioniz + 0.319457 meksyn

+ 0.200089 motion + 0.223062movement + 0.233753

multicellular + 0.376881 multipli + 0.436183 nautic +

0.219787 orific + 0.414204 probabl + 0.214005 pro-

pos + 0.305503 question+ 0.204316 read + 0.222911

reciproc + 0.256728 reson + 0.237344 review +

0.202781 spanwis + 0.351152 telemet + 0.226465ter-

min + 0.212812 toroid+ 0.339988 tunnel + 0.25228

uniform + 0.233854 upper + 0.20262 vapor.

We notice that obtained relationships between

terms are meaningful. Indeed, related terms in a re-

lation talk about the same context, for example the

relationship between the lemma airbon and the other

lemmas (airborn, airforc, conecylind, action, tunnel

. .. ) talks about the airborne aircraft carrier subject.

To test these relationships, we calculate for each one

the error rate (Err):

Err(term

i

) =

∑

1400

j=1

(X[ j, i] − (

∑

i−1

k=1

(X[ j, k] × T[i, k])

+

∑

4300

q=i+1

(X[ j, q] × T[i, q])))

2

(10)

The obtained values are all closed to zero, for exam-

ple the error rate of the relationship between term (ac-

count) and the remaining of terms is 1.5∗10

−7

and for

the term (capillari) is 5, 23∗ 10

−11

.

To check if obtained relations improve informa-

tion retrieval results, we have implemented a vector

space information retrieval system which test queries

proposed by the Cranfield Collection.

The aim of this kind of system is to retrieve docu-

ments that are relevant to the user queries. To achieve

this aim, the system attributes a value to each candi-

date document; then, it rank documents in the reverse

order of this value. This value is called the Retrieval

Status Value (RSV) (Imafouo and Tannier, 2005) and

calculated with four measures (cosines, dice, jaccard

and overlap).

Our system presents two kinds of evaluation;

firstly, it calculates the similarity (RSV) of a docu-

ment vector to a query vector. Then, it calculates the

similarity of a document vector to an expanded query

vector. The expansion is based on the relevant docu-

ments retrieved by the first model (Wasilewski, 2011)

and the relationships obtained by least square method.

Indeed, if a term of a collection is very related

with a term of query (α >= 0.5) and appears in a the

relevant returned documents, we add it to a query.

Mean Average Precision (MAP) is used to calculate

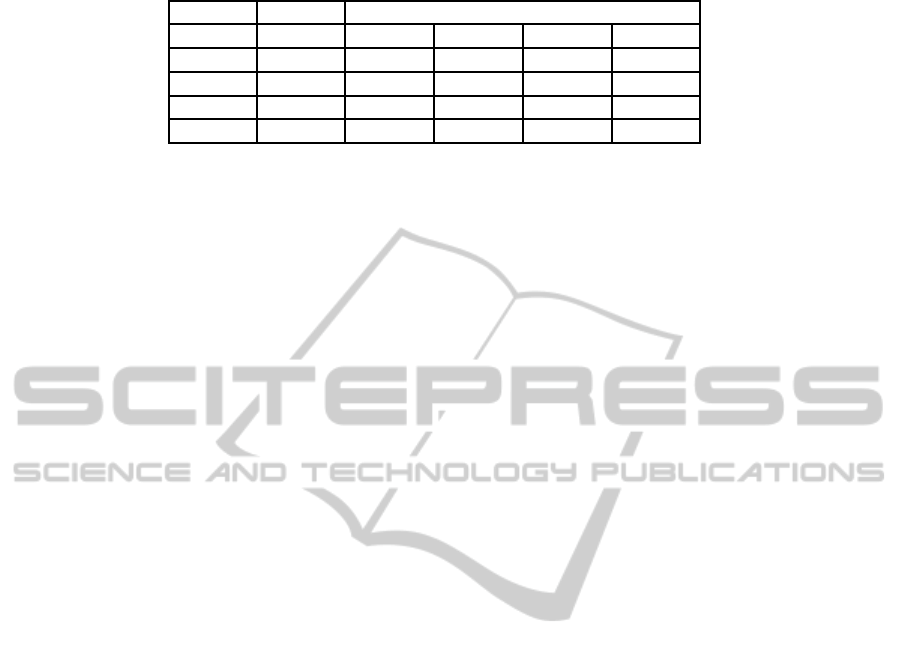

precision of each evalution. Table1 shows the ob-

tained results.

We notice from this evaluation, that relationships

obtained by least square method are meaningful and

can provide improvements in the information retrieval

process. Indeed, the MAP values are increasing when

these relations are used in information retrieval sys-

tem. For example, our method improves information

retrieval results using cosinus measure when α > 0.6

with MAP = 0.21826 compared to the basic VSM

model (MAP = 0.20858).

Compare our results with other works, we note

WEBIST2014-InternationalConferenceonWebInformationSystemsandTechnologies

342

Table 1: Variation of MAP values.

VSM VSM with expanded query

α > 0.8 α > 0.7 α > 0.6 α > 0.5

Cosinus 0.20858 0.20654 0.21273 0.21826 0.21822

Dice 0.20943 0.20969 0.21529 0.21728 0.22060

Jaccard 0.20943 0.21043 0.21455 0.21341 0.20642

Overlap 0.12404 0.12073 0.12366 0.12311 0.12237

that this new statistical method (least square) im-

proves search results. In (Ahram, 2008), experimen-

tal results from cranfield documents collection gave

an average precision of 0.1384 which is less than that

found in our work (0.21826 with cosinus measure,

0.22060 with dice measure).

4 SUMMARY AND FUTURE

WORKS

We present in this paper a new method for detect-

ing semantic relationships between terms. The pro-

posed method (least square) defines meaningful rela-

tionships in a linear way and between a set of terms

using weights of each one which represent the distri-

bution of terms in the corpus.

These relationships give a low error rate. Indeed, they

are used in the query expansion process for improving

information retrieval results.

As future works, firstly, we will intend to participate

in the competition TREC to evaluate our method on

a large test collection. Secondly, we will look for

how to use these relations in the process of weighting

terms and the definition of terms-documents matrix to

improve information retrieval results. Finally, we also

will investigate these relations to induce the notion of

context in the indexing process.

REFERENCES

Abdi., H. (2007). The method of least squares.

Agirre, E., Alfonseca, E., Hall, K., Kravalova, J., Pas¸ca,

M., and Soroa, A. (2009). A study on similar-

ity and relatedness using distributional and wordnet-

based approaches. In Proceedings of Human Lan-

guage Technologies: The 2009 Annual Conference of

the North American Chapter of the Association for

Computational Linguistics, NAACL ’09, pages 19–

27, Stroudsburg, PA, USA. Association for Compu-

tational Linguistics.

Agirre, E., Cuadros, M., Rigau, G., and Soroa, A. (2010).

Exploring knowledge bases for similarity. In Chair),

N. C. C., Choukri, K., Maegaard, B., Mariani, J.,

Odijk, J., Piperidis, S., Rosner, M., and Tapias,

D., editors, Proceedings of the Seventh International

Conference on Language Resources and Evaluation

(LREC’10), Valletta, Malta. European Language Re-

sources Association (ELRA).

Ahram, T. Z. (2008). Information retrieval performance

enhancement using the average standard estimator

and the multi-criteria decision weighted set of perfor-

mance measures. PhD thesis, University of Central

Florida Orlando, Florida.

Hearst, M. (1998). WordNet: An electronic lexical database

and some of its applications. In Fellbaum, C., edi-

tor, Automated Discovery of WordNet Relations. MIT

Press.

Imafouo, A. and Tannier, X. (2005). Retrieval status val-

ues in information retrieval evaluation. In String Pro-

cessing and Information Retrieval, pages 224–227.

Springer.

Miller, S. J. (2006). The method of least squares.

Ruiz-Casado, M., Alfonseca, E., and Castells, P. (2005).

Using context-window overlapping in synonym dis-

covery and ontology extension. In International Con-

ference on Recent Advances in Natural Language Pro-

cessing (RANLP 2005), Borovets, Bulgaria.

Sahami, M. and Heilman, T. D. (2006). A web-based ker-

nel function for measuring the similarity of short text

snippets. In Proceedings of the 15th international con-

ference on World Wide Web, WWW ’06, pages 377–

386, New York, NY, USA. ACM.

Sanderson, M. (2010). Test collection based evaluation of

information retrieval systems. Now Publishers Inc.

Turney, P. D. (2001). Mining the web for synonyms: Pmi-ir

versus lsa on toefl. In Proceedings of the 12th Euro-

pean Conference on Machine Learning, EMCL ’01,

pages 491–502, London, UK, UK. Springer-Verlag.

Wasilewski, P. (2011). Query expansion by semantic mod-

eling of information needs.

DetectionofSemanticRelationshipsbetweenTermswithaNewStatisticalMethod

343