Model-driven Privacy Assessment in the Smart Grid

Fabian Knirsch¹, Dominik Engel¹, Cristian Neureiter¹, Marc Frincu² and Viktor Prasanna²

¹Josef Ressel Center for User-Centric Smart Grid Privacy, Security and Control, Salzburg University of Applied Sciences, Urstein Sued 1, A–5412 Puch/Salzburg, Austria

²Ming-Hsieh Department of Electrical Engineering, University of Southern California, Los Angeles, U.S.A.

Keywords:

Smart Grid, Privacy, Model-based, Assessment.

Abstract:

In a smart grid, data and information are transported, transmitted, stored, and processed, and various stakeholders have to cooperate effectively. Furthermore, personal data is the key to many smart grid applications and, therefore, privacy impacts have to be taken into account. For an effective smart grid, well-integrated solutions are crucial, and to achieve a high degree of customer acceptance, privacy should already be considered at design time of the system. To assist system engineers in the early design phase, frameworks for the automated privacy evaluation of use cases are important. For evaluation, use cases for services and software architectures need to be formally captured in a standardized and commonly understood manner. In order to ensure this common understanding for all kinds of stakeholders, reference models have recently been developed. In this paper we present a model-driven approach for the automated assessment of such services and software architectures in the smart grid that builds on the standardized reference models. The focus of the qualitative and quantitative evaluation is on privacy. For evaluation, the framework draws on use cases from the University of Southern California microgrid.

1 INTRODUCTION

In a smart grid a number of stakeholders (actors) have to cooperate effectively. Interoperability has to be assured on many layers, ranging from high-level business cases to low-level network communication. Data and information are sent from one actor to another in order to ensure effective communication. Furthermore, the exchange of vast amounts of data is crucial for many smart grid applications, such as demand response (DR) or electric vehicle charging (Cavoukian et al., 2010), (Langer et al., 2013). However, this data is also related to individuals, and privacy issues are an emerging concern (McDaniel and McLaughlin, 2009), (Simmhan et al., 2011a). In particular, the combination of data, e.g., meter values and preferences for DR, can give rise to serious privacy threats such as the prediction of personal habits. In system engineering, privacy is a cross-cutting concern that has to be taken into account throughout the entire development life-cycle, which is also referred to as privacy by design (Cavoukian et al., 2010).

Model-driven privacy assessment is especially useful when applied in software engineering. In (Boehm, 2006), the author thoroughly investigates the phases in software engineering and the expected costs for error correction and change requests. Costs double with every phase, and once an application or a service is delivered, adding cross-cutting concerns such as privacy after the fact incurs enormous costs. As a result, privacy should preferably be assessed at design time, in the early phases of the software engineering process.

Therefore, a framework is needed (i) to model the system, including high-level use cases and concrete components and communication flows; and (ii) to assess the system's privacy impact using expert knowledge from the domain. Related work in the domain of automated assessments in the smart grid mainly focuses on security aspects and is not primarily concerned with privacy or with modeling in adherence to reference architectures.

In this paper we address these issues and present an approach for the model-driven assessment of privacy for smart grid applications. The framework proposed in this paper is designed to assist system engineers in evaluating use cases in the smart grid in an early design phase. For evaluation, only meta-information is used and no concrete data is needed. We use Data Flow Graphs (DFG) to formally define use cases according to a standardized smart grid reference architecture. The assessment is based on an ontology-driven approach that takes into account expert knowledge from various domains, including customer views on privacy as well as system engineering concerns. The output is a set of threats and a quantitative analysis of risks, i.e., a number indicating the strength of each threat. To evaluate the system we draw on insights from the University of Southern California microgrid. The primary contributions of this paper are (i) the use of DFGs to model use cases in the smart grid; (ii) the use of DFGs for a quantitative privacy assessment; and (iii) the use of an ontology-driven approach to capture domain knowledge.

Knirsch F., Engel D., Neureiter C., Frincu M. and Prasanna V.
Model-driven Privacy Assessment in the Smart Grid.
DOI: 10.5220/0005229601730181
In Proceedings of the 1st International Conference on Information Systems Security and Privacy (ICISSP-2015), pages 173-181
ISBN: 978-989-758-081-9
Copyright © 2015 SCITEPRESS (Science and Technology Publications, Lda.)

The remainder of this paper is structured as follows: In Section 2, related work in the area of smart grid reference architectures, privacy evaluation and automated assessment tools is presented. In Section 3, the architecture of the proposed framework and its components are described. This includes the concept of DFGs for modeling use cases in the smart grid, the principal design of the ontology and the mapping of data flow graphs to the ontology, the methodology for defining threat patterns and, finally, how these patterns are matched to use cases. The framework is evaluated with a set of representative use cases in Section 4. Section 5 summarizes this paper and gives an outlook on further work in this area.

2 RELATED WORK

In this section, related work in the fields of smart grid reference architectures, privacy evaluation and assessment, as well as automated assessment tools is presented. Privacy and security are often used interchangeably. For the purpose of this paper, a privacy issue refers to data that is accessed legally but not used for the intended purpose. A security issue, by contrast, involves the illegal acquisition of data. In both cases, the well-established and widely understood terminology from security assessment is used, i.e., threat, attacker, vulnerability and countermeasure.

2.1 Reference Models

Stakeholders in the smart grid come from historically different areas, including electrical engineering, computer science and economics. To ensure interoperability and to foster a common understanding, standardization organizations are rolling out reference models and roadmaps. In the US, the NIST Framework and Roadmap for Smart Grid Interoperability Standards (National Institute of Standards and Technology, 2012) and, in the EU, the Smart Grid Reference Architecture (CEN, Cenelec and ETSI, 2012b) were published. The European Smart Grid Architecture Model (SGAM) is based on the NIST Framework, but extends the model to better meet European requirements, such as distributed energy resources. In this paper we investigate use cases from the US. In particular, we focus on use cases from the University of Southern California microgrid and discuss a typical DR use case in detail. Investigations have, however, shown that for the purpose of this project all use cases from the US can be directly mapped to the European SGAM without loss of information. We therefore propose the utilization of the SGAM for two reasons: (i) the SGAM builds on the NIST model and allows capturing use cases from both the US and the EU; and (ii) (Dänekas et al., 2014) present the SGAM Toolbox, a framework for modeling use cases based on the SGAM, so that formally modeled use cases serve as the input for the evaluation.

2.2 Privacy

Privacy (and security) issues in the smart grid are addressed by standards in the US (National Institute of Standards and Technology, 2010) and the EU (CEN, Cenelec and ETSI, 2012a). Privacy, in particular, has no clear definition. According to a thorough analysis in (Wicker and Schrader, 2011), privacy can be defined as an individual's right to control personal information. This is defined more formally by (Barker et al., 2009) in a four-dimensional privacy taxonomy. The dimensions are purpose, visibility, granularity and retention. The purpose dimension refers to the intended use of data, i.e., what personal information is released for. The purpose ranges from single, a specific use only, to any. Visibility refers to who has permitted access; the range is from owner to all/world. Granularity describes to what extent information is detailed. Finally, the retention dimension is the period for which data is stored. In any case, privacy is assured if all these dimensions are communicated clearly and fully disclosed to data owners and compliance with the principles is enforced. Hence, data is collected and processed for the intended purpose only, and the degree of visibility, granularity and retention is kept at the necessary minimum.
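To make the taxonomy concrete, the following Python sketch treats each dimension as an ordered scale and flags the dimensions on which the actual handling of data goes beyond what was disclosed to the data owner. Only the endpoints of the purpose and visibility scales (single/any, owner/all-world) come from the text above; the intermediate levels, the granularity level names and the representation of retention in days are assumptions for illustration.

```python
from dataclasses import dataclass
from enum import IntEnum


# Higher values are less privacy-preserving. Only the endpoints of Purpose and
# Visibility are taken from the text; all other level names are assumptions.
class Purpose(IntEnum):
    SINGLE = 0
    REUSE_SAME = 1  # assumed intermediate level
    ANY = 2


class Visibility(IntEnum):
    OWNER = 0
    HOUSE = 1        # assumed intermediate level
    THIRD_PARTY = 2  # assumed intermediate level
    ALL_WORLD = 3


class Granularity(IntEnum):  # assumed level names
    EXISTENTIAL = 0
    PARTIAL = 1
    SPECIFIC = 2


@dataclass(frozen=True)
class PrivacyPoint:
    """A point in the four-dimensional taxonomy; retention in days is an assumption."""
    purpose: Purpose
    visibility: Visibility
    granularity: Granularity
    retention_days: int


def exceeded_dimensions(disclosed: PrivacyPoint, actual: PrivacyPoint) -> list[str]:
    """Return the dimensions on which actual data handling exceeds what was disclosed."""
    dims = ("purpose", "visibility", "granularity", "retention_days")
    return [d for d in dims if getattr(actual, d) > getattr(disclosed, d)]


disclosed = PrivacyPoint(Purpose.SINGLE, Visibility.HOUSE, Granularity.PARTIAL, 30)
actual = PrivacyPoint(Purpose.SINGLE, Visibility.THIRD_PARTY, Granularity.PARTIAL, 365)
print(exceeded_dimensions(disclosed, actual))  # ['visibility', 'retention_days']
```

An empty result corresponds to the case described above, in which every dimension stays within what was disclosed to the data owner.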

2.3 Assessment Tools

To measure the degree to which systems adhere to privacy requirements, approaches exist for automated qualitative assessments (resulting in statements of possible privacy impacts due to privacy-critical actions or relationships) and quantitative assessments (resulting in a numeric value that determines the risk of privacy impacts).

In (Ahmed et al., 2007), the authors present an approach towards ontology-based risk assessment. The authors propose three ontologies: the user environment ontology, capturing where users are working, i.e., software and hardware; the project ontology, capturing concepts of project management, i.e., work packages and tasks; and the attack ontology, capturing possible attacks, e.g., non-authorized data access, virus distribution or spam emails. For a risk assessment, attacks (defined in the attack ontology) are matched with information available from the other ontologies. For a quantitative assessment, the annual loss expectancy is calculated by combining a set of harmful outcomes and the expected impact of each outcome with the frequency of that outcome. The approach presented by Ahmed et al. is designed for security issues and does not explicitly cover privacy assessments.
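The annual loss expectancy is commonly computed as the sum, over all harmful outcomes, of the impact of an outcome multiplied by its expected annual frequency. The sketch below assumes exactly that form, which may differ in detail from the formulation of Ahmed et al.; the outcome names and numbers are made up for illustration.

```python
def annual_loss_expectancy(outcomes: dict[str, tuple[float, float]]) -> float:
    """Sum of impact x expected yearly frequency over all harmful outcomes."""
    return sum(impact * frequency for impact, frequency in outcomes.values())


# Hypothetical outcomes: (impact in currency units, expected occurrences per year)
outcomes = {
    "non-authorized data access": (8000.0, 0.25),
    "virus distribution": (500.0, 4.0),
}
print(annual_loss_expectancy(outcomes))  # 4000.0
```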

In (Kost et al., 2011) and (Kost and Freytag, 2012), an ontology-driven approach for privacy evaluation is presented. The aim of these papers is to integrate privacy in the design process. High-level privacy statements are matched to system specifications and implementation details. The proposed privacy by design process includes the following phases: identification of high-level privacy requirements, translation of abstract privacy requirements to formal privacy descriptions, realization of the requirements and modeling of the system, and analysis of the system by matching formal privacy requirements to the formal system model. Contrary to our work, this approach is not focused on use cases in the smart grid and therefore does not model systems based on a standardized reference architecture.

A workflow-oriented security assessment is presented in (Chen et al., 2013). This approach is not based on ontologies but on argument graphs. The presented framework uses the security goal, the workflow and system description, the attacker model and evidence as input. This information is aggregated in a discriminative set of argument graphs, each taking into account additional input. Nodes in the graph are aggregated using Boolean expressions, and the output is a quantitative assessment of the system. Instead of focusing on workflow analysis using graphs, we model systems as a whole in adherence to the standardized reference architecture, using an ontology-driven approach to integrate expert knowledge.

A considerably broader approach for an assessment tool that incorporates both the balancing of privacy requirements and operational capabilities is presented in (Knirsch et al., 2015). This work presents a graph-based approach that allows the modeling of systems with respect to the operational requirements of certain nodes (e.g., metering at a certain frequency) and the impact of privacy restrictions on subsequent nodes. The authors further present an optimal balancing algorithm, i.e., one that determines to what extent restrictions gained from privacy-enhancing technologies and the necessary operational requirements can be combined. However, this requires sufficient information on how privacy is impacted by certain use cases, which is provided by this work.

3 ARCHITECTURE

This section is dedicated to an architectural overview

as well as a detailed discussion of the components.

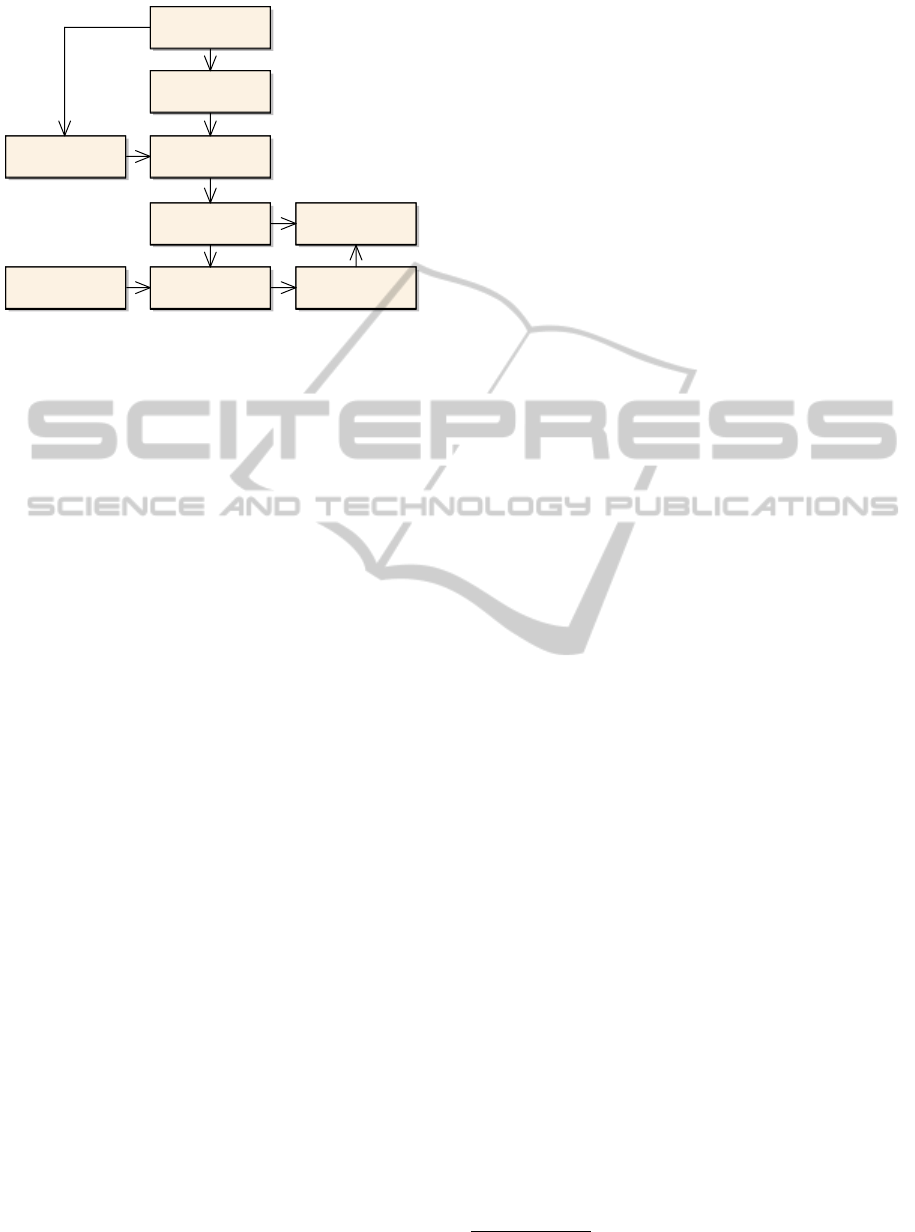

Figure 1 shows the principal components of the proposed architecture, including input and output. For a privacy assessment, the framework accepts two inputs: a use case $UC$ modeled as a DFG in adherence to the SGAM, and a set of threat patterns $T$. In order to qualitatively analyze this input, the use case is mapped to individuals, i.e., instances of classes, of an ontology (sometimes referred to as the assertion box, ABox (Shearer et al., 2008)). The corresponding class model (sometimes referred to as the terminological box, TBox (Shearer et al., 2008)) is based on the SGAM. This qualitative analysis provides explicit and implicit information about the elements of the DFG: actors, components, information objects and their interrelations. The results of the qualitative assessment are the input for the subsequent quantitative analysis. The output of that analysis is finally a class $c$ from a set of classes $C$ to which the use case is assigned. A threat pattern $t \in T$ is used to describe potential threats, and a class $c$ represents a subset of threats $T^*$. A class $c$ describes how threat patterns and the qualitative results are combined, which is presented as a threat matrix in the output. Note that the term threat matrix is borrowed from security analysis and that the output is not a matrix in the mathematical sense. A threat matrix contrasts a set of threats with the risk for each of these threats. Formally, the classifier is defined as

$$\text{assign } UC \text{ to } c_i \quad \text{if } t \in T^*_i, \qquad \forall t \in T,\ 1 \le i \le |C|.$$

A threat exploits a set of vulnerabilities and is mitigated by a set of countermeasures. Each threat pattern can be evaluated on its own, or multiple patterns can be combined into classes of threats. A vulnerability is any kind of privacy impact for any kind of stakeholder or actor. Threats are evaluated using the attack vector model, which is adapted from security analysis and defined in detail later in this paper. In general, an attack is feasible if (i) an attacker, (ii) a privacy asset, and (iii) the resources to perform the attack are given. Hence, a receiver or collector of privacy-critical data items is potentially able to access these assets and to use them in a way that does not correspond to the original purpose. This is formally represented as $\langle \text{data access}, \text{privacy asset}, \text{attack resources} \rangle$.

Figure 1: Architecture overview showing input, output, components and principal information flows of the framework (inputs: Data Flow Graph (DFG) and Threat Patterns; components: Smart Grid Architecture Model (SGAM), Ontology TBox, Ontology ABox, Qualitative Analysis, Pattern Matching, Quantitative Analysis; output: Threat Matrix).

3.1 Data Flow Graphs

In order to qualitatively and quantitatively assess the privacy impact of a use case, a formalization is crucial. In this section we introduce the concept of Data Flow Graphs (DFG) for the smart grid, based on a model-driven design approach originally presented in (Dänekas et al., 2014) and (Neureiter et al., 2013). DFGs formally capture all aspects of use cases in the smart grid in adherence to the SGAM. They contain high-level business cases as well as detailed views of a system's characteristics such as encryption and protocols. DFGs are a powerful tool as they allow both easy modeling and full adherence to the reference architecture. Furthermore, the graph models the relationships between actors as well as the transported information objects (IO). Nodes in a graph represent business actors, system actors or components, and edges represent data flows annotated with IOs. In accordance with the standard (CEN, Cenelec and ETSI, 2012b), DFGs consist of the following five layers:

1. Business Layer. In a DFG this layer is a high-level description of the business case. Business actors, their common business goal and their business requirements are modeled.

2. Function Layer. The function layer details the business case by mapping business actors to system actors and by dividing the high-level business goals into use cases and steps.

3. Information Layer. This layer describes information flows in detail. System actors communicate with each other through IOs. IOs are characterized by describing information attributes on a meta-level. IOs are among the key data used for classification and are discussed in greater detail below.

4. Communication Layer. The communication layer is a more detailed view on communication, taking into account network and protocol specifications.

5. Component Layer. In a DFG this layer contains concrete components. Therefore, system actors are mapped to components and devices.

Each layer is a directed graph. Both nodes and edges can have attributes. The semantics, however, vary. For instance, whereas attributed edges in the business layer describe a business case, in the information layer concrete meta-data of communication flows are captured. Even though they are implicitly covered in the model presented above, for automated evaluation we introduce two additional layers: between the business and function layers we include the Business Actor to System Actor Mapping, and between the communication and component layers the System Actor to Component Mapping. This allows capturing the complexity of use cases on different levels while still maintaining the cross-layer relationship between high-level business actors and their representation as components. These layers are directed graphs as well, with edges indicating the mapping. The mapping defines a one-to-many relationship from business actors to system actors and from system actors to components. In the European Smart Grid Reference Architecture, the SGAM Methodology suggests an approach for mapping use cases to the reference model. DFGs build on this methodology, focusing on actors and their interrelation. An implementation for modeling DFGs in UML is available as the SGAM Toolbox¹. Data Flow Graphs contain explicit information (what is modeled) and implicit information (what can be concluded). Conclusions are drawn using ontology reasoning.
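A minimal Python sketch of this layered structure follows. It stores each layer as a list of attributed directed edges and keeps the two additional mapping layers as one-to-many dictionaries, so that the components realizing a business actor can be found by following both mappings. The class and method names and the component names are hypothetical; this is not the data model of the SGAM Toolbox.

```python
from collections import defaultdict


class DataFlowGraph:
    """Hypothetical multi-layer DFG: attributed directed edges per layer plus two mappings."""

    def __init__(self) -> None:
        # layer name -> list of (source, target, attributes)
        self.layers: dict[str, list[tuple[str, str, dict]]] = defaultdict(list)
        # one-to-many mappings between layers
        self.business_to_system: dict[str, set[str]] = defaultdict(set)
        self.system_to_component: dict[str, set[str]] = defaultdict(set)

    def add_edge(self, layer: str, source: str, target: str, **attributes) -> None:
        self.layers[layer].append((source, target, attributes))

    def map_business_actor(self, business_actor: str, *system_actors: str) -> None:
        self.business_to_system[business_actor].update(system_actors)

    def map_system_actor(self, system_actor: str, *components: str) -> None:
        self.system_to_component[system_actor].update(components)

    def components_of(self, business_actor: str) -> set[str]:
        """Follow both mappings to collect every component realizing a business actor."""
        return {
            component
            for system_actor in self.business_to_system.get(business_actor, set())
            for component in self.system_to_component.get(system_actor, set())
        }


dfg = DataFlowGraph()
dfg.map_business_actor("User", "SmartMeter", "Device", "Portal")
dfg.map_system_actor("SmartMeter", "MeterHardware")  # hypothetical component
dfg.map_system_actor("Portal", "WebServer")          # hypothetical component
dfg.add_edge("information", "Portal", "DRRepository", io="DR preferences", encrypted=True)
print(sorted(dfg.components_of("User")))  # ['MeterHardware', 'WebServer']
```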

3.2 Ontology Design

The ontology-driven approach for classification has been chosen for two main reasons: (i) ontologies are powerful for capturing domain knowledge explicitly; and (ii) through logical reasoning (Shearer et al., 2008), ontologies are a source of implicit knowledge. The power of ontologies to formally capture knowledge and to draw conclusions is discussed in (Guarino et al., 2009). The power of reasoning for gaining additional, implicit knowledge can easily be outlined with two examples. In a DFG, information objects may be sent from an actor A to an actor B and from there to another actor C. This is explicitly modeled in the DFG. A reasoner in an appropriate ontology, however, may directly conclude the transitivity, hence that actor A in fact sends information to actor C. Another example is concerned with compositions of data. An information object $I_1$ may contain sensitive data, and it may be used by an actor D to compose another information object $I_2$ that is sent to a collecting actor E. This is not explicitly modeled in the DFG, but the reasoner can conclude that E receives an information object of type sensitive data, since $I_2$ is a composition of $I_1$.

The ontology we propose here is designed to capture all aspects of a DFG. The ontology is modeled in OWL² and class expressions are stated in Manchester Syntax³. Accordingly, all components available for modeling DFGs are represented either directly or as an abstraction in the ontology (referred to as the TBox). The DFG is represented in the ontology as a set of individuals (referred to as the ABox). Figure 2 depicts the principal classes and relationships of the ontology and therefore the most relevant concepts for mapping a DFG to the ontology. This view shows the main classes and relationships for illustration purposes only; our current ontology comprises more than 60 classes, data properties and object properties. Crucial concepts represented directly include which actor sends or receives which data and IO, and how these IOs are composed. Furthermore, a set of pre-classifiers is defined to determine implicit knowledge.

¹ http://www.en-trust.at/downloads/sgam-toolbox/
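The two reasoning examples in this section can be imitated outside an ontology with a naive fixpoint computation. The Python sketch below is only a stand-in for what an OWL reasoner would infer from a transitive property and from the composition of information objects; unlike OWL it works under a closed-world assumption, and the function names are hypothetical.

```python
def transitive_closure(edges: set[tuple[str, str]]) -> set[tuple[str, str]]:
    """Add (a, c) whenever (a, b) and (b, c) are present, until nothing changes."""
    closure = set(edges)
    changed = True
    while changed:
        changed = False
        for a, b in list(closure):
            for b2, c in list(closure):
                if b == b2 and (a, c) not in closure:
                    closure.add((a, c))
                    changed = True
    return closure


def sensitive_objects(sensitive: set[str], composed_of: dict[str, list[str]]) -> set[str]:
    """An IO is sensitive if marked so, or composed (directly or indirectly) of a sensitive IO."""
    result = set(sensitive)
    changed = True
    while changed:
        changed = False
        for io, parts in composed_of.items():
            if io not in result and result.intersection(parts):
                result.add(io)
                changed = True
    return result


# Example 1: A sends to B and B sends to C, so A (indirectly) sends to C.
print(("A", "C") in transitive_closure({("A", "B"), ("B", "C")}))  # True
# Example 2: D composes I2 from sensitive I1, so actor E, receiving I2, receives sensitive data.
print(sorted(sensitive_objects({"I1"}, {"I2": ["I1"]})))  # ['I1', 'I2']
```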

These classifiers are OWL classes defined by an equivalent class expression in Manchester Syntax. For instance, to determine whether some aggregation consists of direct personal data, the following expression is used:

Data and isAggregationOf some DirectPersonalData

To determine the multiplicity of the sending actor and whether the data is a composition sent by many such actors, more elaborate expressions can be phrased; the parentheses restrict the multiplicity to the sending actor rather than to the data:

Data and isSentBy some (Actor and Multiplicity value "n") and isCompositionOfMany some Data
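For readers without an OWL toolchain at hand, the first pre-classifier can be approximated by a direct check over asserted facts. The Python sketch below is a closed-world simplification of the class expression Data and isAggregationOf some DirectPersonalData; the triple and type representation and the individual names are assumptions, and a real reasoner would additionally use subclass axioms and open-world semantics.

```python
def aggregations_of_direct_personal_data(
    triples: set[tuple[str, str, str]], types: dict[str, set[str]]
) -> set[str]:
    """Individuals typed Data that are an aggregation of some DirectPersonalData individual."""
    return {
        subject
        for subject, predicate, obj in triples
        if predicate == "isAggregationOf"
        and "Data" in types.get(subject, set())
        and "DirectPersonalData" in types.get(obj, set())
    }


# Hypothetical individuals
triples = {
    ("DailyLoadProfile", "isAggregationOf", "MeterReading"),
    ("CampusLoad", "isAggregationOf", "FeederMeasurement"),
}
types = {
    "DailyLoadProfile": {"Data"},
    "MeterReading": {"Data", "DirectPersonalData"},
    "CampusLoad": {"Data"},
    "FeederMeasurement": {"Data"},
}
print(aggregations_of_direct_personal_data(triples, types))  # {'DailyLoadProfile'}
```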

3.3 Threat Patterns

In this paper we evaluate the privacy impact on customers; thus, we identified the following list of typical high-level threats based on literature reviews (Cavoukian et al., 2010), (Langer et al., 2013), (Simmhan et al., 2011a). These threats have been modified in order to be more representative of the use cases from the University of Southern California microgrid that are investigated in this paper. Subsequently, the IOs that may cause these threats are determined.

² http://www.w3.org/TR/owl-features/
³ http://www.w3.org/TR/owl2-manchester-syntax/

Figure 2: Principal components of the ontology, showing a subset of the relationships between actor and data (classes Data, Actor, Event, Series and BusinessCase; relationships sends/isSentBy, receives/isReceivedBy, isAggregationOf, isCompositionOf, hasBusinessCase and isSubjectTo).

Customer Presence at Home. This privacy concern is discussed in (Cavoukian et al., 2010). To potentially determine a person's presence at home, some device in the customer premises is needed. This device collects data at a frequency high enough to allow conclusions to be drawn on the energy usage of specific devices. Furthermore, data collected from that device needs to be sent to another actor (e.g., a utility). At the utility, an individual or a system needs to have access to the data in an appropriate resolution. Since we always assume that data is accessed legally, we do not focus on unauthorized data access. Additionally, the total delay of the data transmission is relevant. If data is collected and transmitted in almost real time, presence at home can be determined immediately. If data is available only with a delay, the analysis of past events and predictions might be possible. If this information is published, an attacker might exploit this vulnerability in order to break into the house.
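The conditions listed for this threat can be read as a checklist. The Python sketch below encodes them for a single metering data flow; the field names, and in particular the two thresholds for a sampling interval that is fine enough and for a delay that counts as almost real time, are hypothetical values chosen for illustration and are not taken from the literature cited above.

```python
from dataclasses import dataclass


@dataclass
class MeteringFlow:
    """Hypothetical description of one metering data flow."""
    device_in_customer_premises: bool
    sampling_interval_s: float   # time between two readings
    sent_to_other_actor: bool
    receiver_has_full_resolution: bool
    transmission_delay_s: float


def presence_threat(flow: MeteringFlow,
                    max_interval_s: float = 60.0,     # hypothetical threshold
                    realtime_delay_s: float = 300.0   # hypothetical threshold
                    ) -> str:
    """Return 'none', 'historical' (past events, predictions) or 'realtime'."""
    preconditions = (
        flow.device_in_customer_premises
        and flow.sampling_interval_s <= max_interval_s
        and flow.sent_to_other_actor
        and flow.receiver_has_full_resolution
    )
    if not preconditions:
        return "none"
    return "realtime" if flow.transmission_delay_s <= realtime_delay_s else "historical"


print(presence_threat(MeteringFlow(True, 1.0, True, True, 2.0)))      # realtime
print(presence_threat(MeteringFlow(True, 1.0, True, True, 86400.0)))  # historical
print(presence_threat(MeteringFlow(True, 900.0, True, True, 2.0)))    # none
```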

Tracking Customer Position. This threat is especially relevant for electric vehicle charging. Assuming the customer identifies themselves to the charging station, at least the location, a timestamp and the amount of energy consumed will be recorded for billing. Depending on the design of the infrastructure, either only little information is sent to the operator or a very detailed profile of the customer is maintained. Here, the multiplicity of the actors is crucial, as is the fact that different actors have access to the same data. Attacks for this threat are described in (Langer et al., 2013), e.g., using information for targeted ads, for tracking movements to certain places or for inferring income based on recharges.
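A short Python sketch shows why identified charging records suffice for this threat: grouping the billing records by customer and ordering them by time already yields a movement trace. The record layout and values are hypothetical; the text above only states that at least location, timestamp and energy amount are recorded.

```python
from collections import defaultdict
from typing import NamedTuple


class ChargingRecord(NamedTuple):
    """Hypothetical billing record kept by a charging station operator."""
    customer_id: str
    station_location: str
    timestamp: int        # e.g., seconds since the epoch
    energy_kwh: float


def movement_traces(records: list[ChargingRecord]) -> dict[str, list[str]]:
    """Order each customer's charging locations by time."""
    traces: dict[str, list[str]] = defaultdict(list)
    for record in sorted(records, key=lambda r: r.timestamp):
        traces[record.customer_id].append(record.station_location)
    return dict(traces)


records = [
    ChargingRecord("c1", "Campus garage", 1_700_003_600, 12.5),
    ChargingRecord("c1", "Shopping mall", 1_700_000_000, 7.0),
    ChargingRecord("c2", "Campus garage", 1_700_001_800, 20.0),
]
print(movement_traces(records))
# {'c1': ['Shopping mall', 'Campus garage'], 'c2': ['Campus garage']}
```

The more actors hold such records under the same customer identifier, the longer the traces that can be joined, which is why the multiplicity of actors matters for this threat.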

3.4 Pattern Matching

Actual classification is done in the pattern matching process. For each actor in the DFG and in the ontology, respectively, the attack vector is determined, i.e., which resources an actor has access to and what the effort is. If a feasible match is found, it is considered a threat. Whether an actor has access to a certain IO can be retrieved directly from the ontology. This is done by evaluating actor and data object properties and by incorporating information from the pre-classifiers. Furthermore, relationships on the business layer and data properties such as encryption are taken into account. The following discriminative set of classifiers is used to determine potential threats. First, for each information object, the data provider and the data collector are determined (according to the terminology defined in (Barker et al., 2009)) and it is assessed who has access to the data. This yields a list of three-tuples of the form $\langle \text{information object (IO)}, \text{data provider (DP)}, \text{data collector (DC)} \rangle$. Then it is determined whether an information object contains sensitive or direct personal data (according to the terminology defined in (The European Parliament and the Council, 1995)). This yields another three-tuple of the form $\langle \text{information object (IO)}, \text{sensitive (S)}, \text{direct personal (DP)} \rangle$. Finally, it is determined whether the attacker has actual data access, yielding one more three-tuple of the form $\langle \text{information object (IO)}, \text{data collector (DC)}, \text{access (A)} \rangle$. Data access depends on the relationship of actors and on data resolution, retention and encryption. Matching these tuples to each other results in the components of the attack vector: recalling $\langle \text{data access}, \text{privacy asset}, \text{attack resources} \rangle$, this yields $\langle \langle IO, DP, DC \rangle, \langle IO, S, DP \rangle, \langle IO, DC, A \rangle \rangle$. An exemplary attack vector for a DR use case in which DR preferences are sent to the utility is $\langle \langle \text{DR preferences}, \text{customer}, \text{utility} \rangle, \langle \text{DR preferences}, \text{false}, \text{false} \rangle, \langle \text{DR preferences}, \text{utility}, \text{true} \rangle \rangle$.

This already provides a thorough qualitative analysis. It is possible to determine which actor can potentially threaten the privacy of another actor, and even to conclude how and where this might happen. For a quantitative assessment, however, the risk for a particular threat is calculated. While a qualitative assessment is useful in supporting detailed system design decisions and evaluation, a quantitative value is much more expressive for a very first outline of the overall system characteristics. Further, providing a numeric value for the system's privacy impact makes it easy to compare and contrast proposed designs.
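The matching of the three tuple lists can be sketched in Python as a join on the information object (and, for the access tuples, also on the data collector). The type and function names are hypothetical; the example reproduces the DR preferences attack vector given above. How the feasibility of a vector is then judged is left to the pattern conditions and is not modeled here.

```python
from typing import NamedTuple


class DataAccess(NamedTuple):       # <IO, DP, DC>
    io: str
    data_provider: str
    data_collector: str


class PrivacyAsset(NamedTuple):     # <IO, S, DP>
    io: str
    sensitive: bool
    direct_personal: bool


class AttackResources(NamedTuple):  # <IO, DC, A>
    io: str
    data_collector: str
    access: bool


def attack_vectors(accesses, assets, resources):
    """Join the three tuple lists into <data access, privacy asset, attack resources>."""
    asset_by_io = {a.io: a for a in assets}
    resources_by_key = {(r.io, r.data_collector): r for r in resources}
    vectors = []
    for access in accesses:
        asset = asset_by_io.get(access.io)
        resource = resources_by_key.get((access.io, access.data_collector))
        if asset is not None and resource is not None:
            vectors.append((access, asset, resource))
    return vectors


vectors = attack_vectors(
    [DataAccess("DR preferences", "customer", "utility")],
    [PrivacyAsset("DR preferences", False, False)],
    [AttackResources("DR preferences", "utility", True)],
)
print(len(vectors), vectors[0][2].access)  # 1 True
```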

Risk is calculated as the product of the probability of occurrence (PO) and the expected loss (EL). For the set $T^*$, a number of patterns $t_{v,1}, \ldots, t_{v,N}$ and $t_{c,1}, \ldots, t_{c,M}$ is defined for vulnerabilities and countermeasures, respectively. A pattern therefore contains a set of conditions for vulnerabilities $t_{v,i}$ and countermeasures $t_{c,i}$. Conditions are SPARQL ASK queries⁴ that return true or false depending on whether the pattern applies. For brevity, $t'_v$ denotes the number of vulnerabilities that apply, $t'_c$ the number of countermeasures that apply, and $t_v$ and $t_c$ denote the total number of vulnerabilities and countermeasures, respectively. In this paper we propose the following approach for determining values for the probability of occurrence $PO(t'_v, t'_c)$ and the expected loss $EL(t'_v, t'_c)$.

$PO(t'_v, t'_c)$ is determined by defining a plane that satisfies the following conditions:

$$PO(t'_v = t_v,\ t'_c = 0) = 1, \qquad PO(t'_v = 0,\ t'_c = t_c) = 0, \qquad PO(t'_v = 0,\ t'_c = 0) = \tfrac{1}{2}.$$
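Writing the plane with three unknown coefficients and substituting the three conditions fixes those coefficients (this requires $t_v > 0$ and $t_c > 0$):

$$
\begin{aligned}
PO(t'_v, t'_c) &= a\,t'_v + b\,t'_c + d, \\
PO(0, 0) = \tfrac{1}{2} &\;\Rightarrow\; d = \tfrac{1}{2}, \\
PO(t_v, 0) = 1 &\;\Rightarrow\; a\,t_v + \tfrac{1}{2} = 1 \;\Rightarrow\; a = \frac{1}{2t_v}, \\
PO(0, t_c) = 0 &\;\Rightarrow\; b\,t_c + \tfrac{1}{2} = 0 \;\Rightarrow\; b = -\frac{1}{2t_c}.
\end{aligned}
$$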

This yields

$$PO(t'_v, t'_c) = \frac{1}{2}\left(\frac{t'_v}{t_v} - \frac{t'_c}{t_c} + 1\right).$$

A linear model is chosen due to its simplicity and might be extended by more complex approaches in the future. A condition of type vulnerability increases $EL(t'_v, t'_c)$; a condition of type countermeasure decreases $EL(t'_v, t'_c)$. The value of $EL(t'_v, t'_c)$ is defined in the pattern. Risk $R$ is finally defined by

$$R = PO(t'_v, t'_c)\, EL(t'_v, t'_c).$$
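The following Python sketch computes $PO$ and $R$ for one pattern. How the individual EL values of the applying conditions combine into $EL(t'_v, t'_c)$ is not spelled out above; summing them is an assumption made here, suggested by the per-condition EL values in the example pattern of this section. Returning a fraction of 0 for an empty vulnerability or countermeasure list is likewise an assumption, since the formula is undefined in that case.

```python
def probability_of_occurrence(applied_v: int, applied_c: int,
                              total_v: int, total_c: int) -> float:
    """Linear plane PO = 1/2 * (t'_v / t_v - t'_c / t_c + 1)."""
    v = applied_v / total_v if total_v else 0.0  # assumption for t_v = 0
    c = applied_c / total_c if total_c else 0.0  # assumption for t_c = 0
    return 0.5 * (v - c + 1.0)


def risk(vulnerabilities: list[tuple[bool, float]],
         countermeasures: list[tuple[bool, float]]) -> float:
    """Conditions are (applies, EL value); EL is assumed to be the sum over applying conditions."""
    po = probability_of_occurrence(
        sum(applies for applies, _ in vulnerabilities),
        sum(applies for applies, _ in countermeasures),
        len(vulnerabilities),
        len(countermeasures),
    )
    el = sum(value for applies, value in vulnerabilities + countermeasures if applies)
    return po * el


# Customer presence at home: the vulnerability applies (EL 4), the countermeasure does not (EL -6).
print(risk([(True, 4.0)], [(False, -6.0)]))  # 4.0
```

If both conditions applied, this sketch would give $PO = \tfrac{1}{2}$ and $EL = -2$, i.e., a risk of $-1$; the text does not say how such negative values are treated, so clamping at zero or a different aggregation of EL may be preferable.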

To feed in the results gained from the qualitative analysis, certain variables in the query can be bound to instances. For example, consider the following fragment of a query (where usc denotes the namespace prefix for actors and IOs in the University of Southern California microgrid), which determines whether some information object is sent by some business actor:

$io usc:isSentBy ?systemactor .
?systemactor usc:isRealizationOf ?businessactor .
?businessactor a usc:BusinessActor

It is now possible to bind the variable $io to a concrete value as determined in the qualitative assessment, e.g., $io ← InformationObject.CustomerName. This allows assessing a particular impact on a particular information object or component/actor based on the previously calculated attack vectors.
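To illustrate what binding $io means, the Python sketch below evaluates a basic graph pattern as an ASK query over a small in-memory set of triples, with optional pre-bound variables. It is a hypothetical stand-in for a SPARQL engine: it supports only plain triple patterns (no FILTER, no datatypes), the usc: prefix is dropped, and the triples are made up for illustration.

```python
def is_variable(term: str) -> bool:
    return term.startswith(("?", "$"))


def ask(triples, patterns, bindings=None) -> bool:
    """True if some assignment of the variables satisfies all triple patterns."""
    bindings = dict(bindings or {})
    if not patterns:
        return True
    first, rest = patterns[0], patterns[1:]
    for triple in triples:
        candidate = dict(bindings)
        matched = True
        for term, value in zip(first, triple):
            if is_variable(term):
                name = term[1:]  # ?x and $x denote the same variable, as in SPARQL
                if candidate.setdefault(name, value) != value:
                    matched = False
                    break
            elif term != value:
                matched = False
                break
        if matched and ask(triples, rest, candidate):
            return True
    return False


triples = {
    ("CustomerName", "isSentBy", "Portal"),
    ("Portal", "isRealizationOf", "User"),
    ("User", "a", "BusinessActor"),
}
query = [
    ("$io", "isSentBy", "?systemactor"),
    ("?systemactor", "isRealizationOf", "?businessactor"),
    ("?businessactor", "a", "BusinessActor"),
]
print(ask(triples, query, {"io": "CustomerName"}))  # True
print(ask(triples, query, {"io": "MeterReading"}))  # False
```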

We developed generic patterns for typical threats, such as the ones mentioned above. The framework is, however, not limited to this set of patterns and allows the definition of an arbitrary number of additional patterns to meet the individual needs of the application scenario. The output of the framework is a threat matrix contrasting the results of the qualitative analysis with those of the quantitative risk assessment. For a UC, a threat matrix contains the attack vector and the assigned risk for the determined class c.

⁴ http://www.w3.org/TR/sparql11-query/

For illustrative purposes, the following listing shows an example pattern for customer presence at home. This includes the vulnerability device in customer premises (assigned an exemplary EL of 4) and the countermeasure aggregation of data from multiple customers (assigned an exemplary EL of -6).

<Pattern name="customer presence at home">
  <Vulnerability name="device in customer premises">
    <EL>4</EL>
    <Condition>
      ?device x:isRealizationOf $ba .
      $ba a x:BusinessActor .
      ?device x:Zone "Customer Premises"^^xsd:string
    </Condition>
  </Vulnerability>
  <Countermeasure name="aggregation of data from multiple customers">
    <EL>-6</EL>
    <Condition>
      $io x:manyAreAggregatedBy ?io2 .
      ?io2 x:isReceivedBy ?ba1 .
      $io x:isReceivedBy ?ba2
      FILTER (?ba1 != ?ba2)
    </Condition>
  </Countermeasure>
</Pattern>
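A pattern file in this format can be read with Python's standard xml.etree.ElementTree module. The sketch below extracts each vulnerability and countermeasure together with its EL value and wraps its condition into a SPARQL ASK query string. The resulting queries would still need PREFIX declarations for x: and xsd:, which the listing does not give, so they are omitted here; the function name is hypothetical.

```python
import xml.etree.ElementTree as ET

PATTERN_XML = """
<Pattern name="customer presence at home">
  <Vulnerability name="device in customer premises">
    <EL>4</EL>
    <Condition>
      ?device x:isRealizationOf $ba .
      $ba a x:BusinessActor .
      ?device x:Zone "Customer Premises"^^xsd:string
    </Condition>
  </Vulnerability>
  <Countermeasure name="aggregation of data from multiple customers">
    <EL>-6</EL>
    <Condition>
      $io x:manyAreAggregatedBy ?io2 .
      ?io2 x:isReceivedBy ?ba1 .
      $io x:isReceivedBy ?ba2
      FILTER (?ba1 != ?ba2)
    </Condition>
  </Countermeasure>
</Pattern>
"""


def load_pattern(xml_text: str):
    """Return the pattern name and a list of its conditions as dictionaries."""
    root = ET.fromstring(xml_text.strip())
    conditions = []
    for kind in ("Vulnerability", "Countermeasure"):
        for element in root.findall(kind):
            graph_pattern = " ".join(element.findtext("Condition", "").split())
            conditions.append({
                "kind": kind,
                "name": element.get("name"),
                "el": float(element.findtext("EL")),
                # PREFIX declarations for x: and xsd: are not part of the listing
                "ask": "ASK { " + graph_pattern + " }",
            })
    return root.get("name"), conditions


name, conditions = load_pattern(PATTERN_XML)
for condition in conditions:
    print(condition["kind"], condition["name"], condition["el"])
```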

4 EVALUATION

For evaluating the framework new, previously unused

use cases are applied. The set of threat patterns and

their impact on privacy is based on the aforemen-

tioned literature reviews. We are therefore using a

representative set of use cases describing typical ap-

plications in the smart grid. This includes, but is not

limited to, smart metering, electric vehicle charging

and DR. In this section a real-life use case from the

University of Southern California microgrid is eval-

uated as an example. This use case has been chosen

as it is (i) simple enough to verify results based on

literature reviews; and (ii) complex enough to have

an interesting combination of actors and information

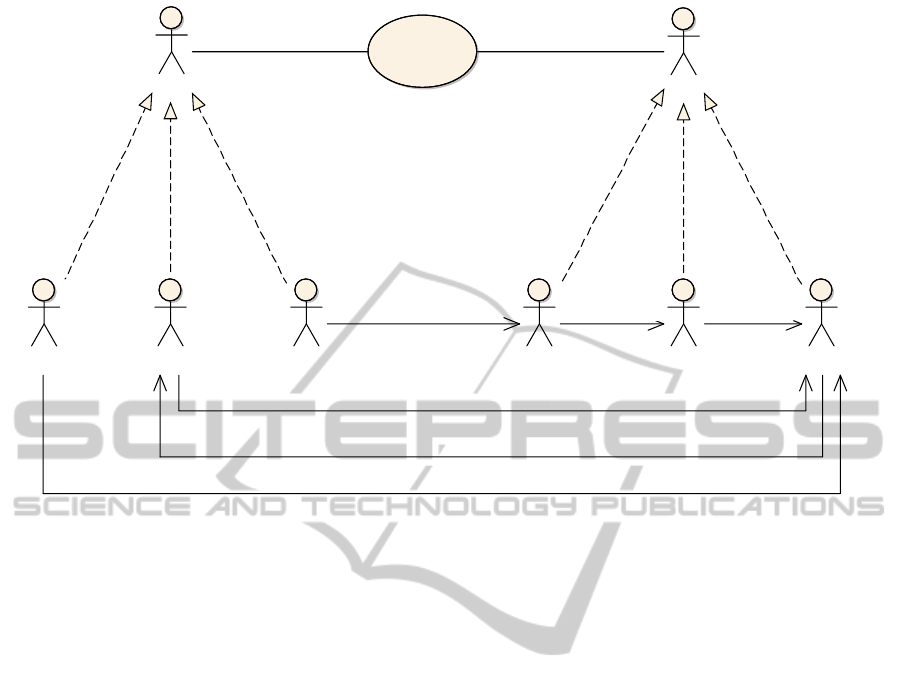

flows. We are focusing on a DR scenario similar to

the one described in (Simmhan et al., 2011b). This

scenario is outlined in Figure 3. A customer inter-

ested in DR creates an online profile stating on which

DR actions the customer is interested to participate

(e.g., turning down air condition). When the utilities

want to curtail load with DR, a customer whose pro-

file fits the current requirements is sent a text message

to, e.g., turn down the air condition. This message is

acknowledged by the customer and the utility further

reads the meter values to track actual power reduc-

tion. Besides the data flows mentioned, this further

involves the storing of the profile and the past behav-

ior of the customer for a more accurate prediction.

For modeling this use case as a DFG, the following

actors and IOs are identified. Evaluation is performed

with a prototypical implementation that uses DFGs

and threat patterns as an input and produces a threat

matrix as an output.

4.1 Data Flow Graph

Actors. Business actors are the user and the utility. The user is mapped to the system actors smart meter, device and portal. DR requests are sent to the user device (e.g., a cell phone), and the user's DR preferences are set in the portal (e.g., a web service). The smart meter is used to measure actual curtailment. The utility is mapped to a DR repository containing each user's preferences and past behavior, a prediction unit predicting DR requests based on these preferences, and a control unit metering user feedback and actual curtailment.

Information Objects. Cross-domain/zone information flows include user preferences sent to the utility, DR requests sent from the utility to the user, and both the user's acknowledge/decline response and the meter values sent back to the utility. Information flows within the utility's premises run from the DR repository to the prediction unit and from the control unit to the DR repository. Given the threat patterns introduced in Section 3, we use our framework to determine the privacy impact of this use case, which yields the following results.
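As an illustration, the DFG described above can be written down as plain data: each system actor is mapped to the business actor it realizes, and each information flow names a sender and a receiver. This is a minimal sketch, not the prototype's input format. The IO names PastBehavior and ControlFeedback are hypothetical, and the control unit as the sender of DR requests is an assumption, since the text only states that requests come from the utility. A flow between system actors of different business actors is used here as a simple proxy for the cross-domain/zone distinction, which reproduces the split given in the text.

```python
from dataclasses import dataclass

# System actors mapped to the business actor they realize (Section 4.1).
REALIZES = {
    "SmartMeter": "Customer",
    "Device": "Customer",
    "Portal": "Customer",
    "DRRepository": "Utility",
    "PredictionUnit": "Utility",
    "ControlUnit": "Utility",
}


@dataclass(frozen=True)
class Flow:
    io: str        # information object carried by the flow
    sender: str    # sending system actor
    receiver: str  # receiving system actor


# PastBehavior and ControlFeedback are hypothetical IO names;
# ControlUnit as the sender of DRRequest is an assumption.
FLOWS = [
    Flow("DRPreferences", "Portal", "DRRepository"),
    Flow("DRRequest", "ControlUnit", "Device"),
    Flow("AcknowledgeDecline", "Device", "ControlUnit"),
    Flow("CurtailmentMetering", "SmartMeter", "ControlUnit"),
    Flow("PastBehavior", "DRRepository", "PredictionUnit"),
    Flow("ControlFeedback", "ControlUnit", "DRRepository"),
]


def crosses_business_actors(flow: Flow) -> bool:
    """True if sender and receiver realize different business actors."""
    return REALIZES[flow.sender] != REALIZES[flow.receiver]


cross = [f.io for f in FLOWS if crosses_business_actors(f)]
internal = [f.io for f in FLOWS if not crosses_business_actors(f)]
print("cross-domain/zone:", cross)
print("utility-internal:", internal)
```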

Customer Presence at Home. The qualitative analysis shows that information about both past customer behavior and customer data is brought together in the DR repository of the utility, i.e., direct personal data is composed with a detailed history of a person's actions. Furthermore, the customer's acknowledge/decline response and the measured curtailment reveal whether a customer (i) responded to the DR request; and (ii) actually participated in DR; both are indications of presence at home. For this threat we identified four vulnerabilities (device in customer premises, collecting data at a certain frequency, receiver has access to data, data retention is unlimited) and one countermeasure (aggregation of data from multiple customers), resulting in a PO of 0.9, an EL of 11.5 and a risk value of 10.35.

Tracking Customer Position. In our case, this threat might apply in two different scenarios. First, the threat is immediate if the acknowledge/decline response to DR requests contains the customer's position (e.g., if sent by a cell phone or other mobile device). This reveals not only the customer's past and present position but also whether the customer is able to remotely control devices in his or her premises. Second, the threat applies when the customer is represented by an additional component, an electric vehicle charging station. Assuming that DR requests are also sent with respect to charging behavior, the amount of energy the customer is willing to curtail via DR might make it possible to estimate the consumption of the electric vehicle and, subsequently, the distance traveled. For this threat we identified two vulnerabilities (composition of location and timestamp, different actors have access to the same data) and one countermeasure (aggregation of data from multiple customers), resulting in a PO of 0.66, an EL of 5 and a risk value of 3.33.

Figure 3: Outline of the DR use case that is discussed for evaluation. (Diagram of the Demand Response use case: business actors Customer and Utility; system actors SmartMeter, Device, Portal, DRRepository, PredictionUnit and ControlUnit; information flows including DRRequest, DRPreferences, Acknowledge/Decline and CurtailmentMetering.)

The model-driven assessment of the DR use case has shown that the risk of tracking customer position is low compared to the risk of determining customer presence at home. This result stems from the fact that several vulnerabilities with high expected loss values apply to the latter, namely a device in the customer premises, data collected at a certain frequency, receiver access to data and unlimited data retention.
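The reported values are consistent with the risk being the product of PO and EL: 0.9 × 11.5 = 10.35, and 2/3 × 5 ≈ 3.33, which suggests that the printed PO of 0.66 stands for 2/3. The following minimal sketch assumes this product rule, which is an inference from the numbers rather than a formula stated in this section, and arranges the two results as a small threat matrix listing each threat's attack vector (matched vulnerabilities and countermeasures) and its risk, ranked from highest to lowest.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ThreatRow:
    threat: str
    vulnerabilities: tuple  # attack vector: matched vulnerabilities
    countermeasures: tuple  # attack vector: matched countermeasures
    po: float               # PO as reported in Section 4
    el: float               # expected loss (EL) as reported in Section 4

    @property
    def risk(self) -> float:
        # Assumption: risk = PO * EL, inferred from the reported values.
        return self.po * self.el


MATRIX = [
    ThreatRow(
        "tracking customer position",
        ("composition of location and timestamp",
         "different actors have access to the same data"),
        ("aggregation of data from multiple customers",),
        2 / 3,  # printed as 0.66 in the text
        5.0,
    ),
    ThreatRow(
        "customer presence at home",
        ("device in customer premises",
         "collecting data at a certain frequency",
         "receiver has access to data",
         "data retention is unlimited"),
        ("aggregation of data from multiple customers",),
        0.9,
        11.5,
    ),
]

# Rank threats by risk, highest first.
ranked = sorted(MATRIX, key=lambda r: r.risk, reverse=True)
for row in ranked:
    print(f"{row.threat:28s} vulns={len(row.vulnerabilities)} "
          f"counter={len(row.countermeasures)} PO={row.po:.3f} "
          f"EL={row.el:4.1f} risk={row.risk:.2f}")
```

Ranking by risk makes the comparison above explicit: presence at home dominates because four vulnerabilities with high expected loss apply to it.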

5 CONCLUSION AND FUTURE WORK

In this paper we introduced a framework for model-driven privacy assessment in the smart grid. The framework builds on an ontology-driven approach, matching threat patterns to use cases that are modeled in adherence to standardized reference architectures. The approach presented here builds on meta-information and high-level data flows. It has been shown how this framework can be utilized to successfully assess the privacy impact of use cases at early design time. Exemplary threats and exemplary use cases draw on insights from the University of Southern California microgrid. Future work will include an evaluation of the system's ability to generalize to arbitrary kinds of threats in the smart grid. Furthermore, the system will be extended to serve as a policy decision point for system developers and customers in a smart grid IT infrastructure.

ACKNOWLEDGEMENT

The financial support of the Josef Ressel Center by the Austrian Federal Ministry of Economy, Family and Youth and the Austrian National Foundation for Research, Technology and Development is gratefully acknowledged. Funding by the Austrian Marshall Plan Foundation is gratefully acknowledged. The authors would like to thank Norbert Egger for his contribution to the prototypical implementation.

This material is based upon work supported by the United States Department of Energy under Award Number DE-OE0000192, and the Los Angeles Department of Water and Power (LA DWP). The views and opinions of the authors expressed herein do not


necessarily state or reflect those of the United States

Government or any agency thereof, the LA DWP, nor

any of their employees.

REFERENCES

Ahmed, M., Anjomshoaa, A., Nguyen, T., and Tjoa, A. (2007). Towards an ontology-based risk assessment in collaborative environment using the SemanticLIFE. In Proceedings of the Second International Conference on Availability, Reliability and Security, ARES '07, pages 400–407, Washington, DC, USA. IEEE Computer Society.

Barker, K., Askari, M., Banerjee, M., Ghazinour, K., Mackas, B., Majedi, M., Pun, S., and Williams, A. (2009). A data privacy taxonomy. In Proceedings of the 26th British National Conference on Databases: Dataspace: The Final Frontier, BNCOD 26, pages 42–54, Berlin, Heidelberg. Springer.

Boehm, B. (2006). A view of 20th and 21st century software engineering. In Proceedings of the 28th International Conference on Software Engineering, ICSE 2006, pages 12–29, New York, NY, USA. ACM.

Cavoukian, A., Polonetsky, J., and Wolf, C. (2010). SmartPrivacy for the smart grid: embedding privacy into the design of electricity conservation. Identity in the Information Society, 3(2):275–294.

CEN, Cenelec and ETSI (2012a). Smart Grid Information Security. Technical report, CEN/Cenelec/ETSI Smart Grid Coordination Group Std.

CEN, Cenelec and ETSI (2012b). Smart Grid Reference Architecture. Technical report, CEN/Cenelec/ETSI Smart Grid Coordination Group Std.

Chen, B., Kalbarczyk, Z., Nicol, D., Sanders, W., Tan, R., Temple, W., Tippenhauer, N., Vu, A., and Yau, D. (2013). Go with the flow: Toward workflow-oriented security assessment. In Proceedings of New Security Paradigm Workshop (NSPW), Banff, Canada.

Dänekas, C., Neureiter, C., Rohjans, S., Uslar, M., and Engel, D. (2014). Towards a model-driven-architecture process for smart grid projects. In Benghozi, P.-J., Krob, D., Lonjon, A., and Panetto, H., editors, Digital Enterprise Design & Management, volume 261 of Advances in Intelligent Systems and Computing, pages 47–58. Springer International Publishing.

Guarino, N., Oberle, D., and Staab, S. (2009). What Is an Ontology? Handbook on Ontologies – International Handbooks on Information Systems. Springer, 2nd edition.

Knirsch, F., Engel, D., Frincu, M., and Prasanna, V. (2015). Model-based assessment for balancing privacy requirements and operational capabilities in the smart grid. In Proceedings of the 6th Conference on Innovative Smart Grid Technologies (ISGT2015). To appear.

Kost, M. and Freytag, J.-C. (2012). Privacy analysis using ontologies. In CODASPY '12 Proceedings of the Second ACM Conference on Data and Application Security and Privacy, pages 205–216, San Antonio, Texas, USA. ACM.

Kost, M., Freytag, J.-C., Kargl, F., and Kung, A. (2011). Privacy verification using ontologies. In Proceedings of the 2011 Sixth International Conference on Availability, Reliability and Security, ARES '11, pages 627–632, Washington, DC, USA. IEEE Computer Society.

Langer, L., Skopik, F., Kienesberger, G., and Li, Q. (2013). Privacy issues of smart e-mobility. In Industrial Electronics Society, IECON 2013 - 39th Annual Conference of the IEEE, pages 6682–6687.

McDaniel, P. and McLaughlin, S. (2009). Security and privacy challenges in the smart grid. Security & Privacy, IEEE, 7(3):75–77.

National Institute of Standards and Technology (2010). Guidelines for smart grid cyber security: Vol. 2, privacy and the smart grid. Technical report, The Smart Grid Interoperability Panel – Cyber Security Working Group.

National Institute of Standards and Technology (2012). NIST Framework and Roadmap for Smart Grid Interoperability Standards, Release 2.0. Technical Report NIST Special Publication 1108R2, National Institute of Standards and Technology.

Neureiter, C., Eibl, G., Veichtlbauer, A., and Engel, D. (2013). Towards a framework for engineering smart-grid-specific privacy requirements. In Proc. IEEE IECON 2013, Special Session on Energy Informatics, Vienna, Austria. IEEE.

Shearer, R., Motik, B., and Horrocks, I. (2008). HermiT: A highly-efficient OWL reasoner. In Dolbear, C., Ruttenberg, A., and Sattler, U., editors, OWLED, volume 432 of CEUR Workshop Proceedings. CEUR-WS.org.

Simmhan, Y., Kumbhare, A., Cao, B., and Prasanna, V. (2011a). An analysis of security and privacy issues in smart grid software architectures on clouds. In IEEE International Conference on Cloud Computing (CLOUD), 2011, pages 582–589. IEEE.

Simmhan, Y., Zhou, Q., and Prasanna, V. (2011b). Semantic information integration for smart grid applications. In Kim, J. H. and Lee, M. J., editors, Green IT: Technologies and Applications, pages 361–380. Springer, Berlin Heidelberg, Germany.

The European Parliament and the Council (1995). Official Journal L 281, 23/11/1995 P. 0031 - 0050 – Directive 95/46/EC of the European Parliament and of the Council of 24 October 1995. Online.

Wicker, S. and Schrader, D. (2011). Privacy-aware design principles for information networks. Proceedings of the IEEE, 99(2):330–350.
