Investigating User Response to a Hybrid Sketch Based Interface for

Creating 3D Virtual Models in an Immersive Environment

Alexandra Bonnici¹, Johann Habakuk Israel², Anne Marie Muscat¹, Daniel Camilleri¹, Kenneth Camilleri¹ and Uwe Rothenburg³

¹ Department of Systems and Control Engineering, Faculty of Engineering, University of Malta, Msida, Malta

² Berliner Technische Kunsthochschule, University of Applied Sciences, Berlin, Germany

³ Division Virtual Product Creation Model-based Engineering, Fraunhofer Institute for Production Systems and Design Technology IPK, Pascalstrasse 8-9, 10587 Berlin, Germany

Keywords:

Sketch-based Interfaces, Mixed Sketching Environments, Usability Study.

Abstract:

Computer modelling of 2D drawings is becoming increasingly popular in modern design as can be witnessed

in the shift of modern computer modelling applications from software requiring specialised training to ones

targeted for the general consumer market. Despite this, traditional sketching is still prevalent in design, particularly in the early design stages. Thus, research trends in computer-aided modelling focus on the development of sketch-based interfaces that are as natural as possible. In this paper, we present a hybrid sketch-based interface which allows the user to draw sketches using offline as well as online sketching modalities, displaying the 3D models in an immersive setup, thus linking the object interaction possible through

immersive modelling to the flexibility allowed by paper-based sketching. The interface was evaluated in a

user study which shows that such a hybrid system can be considered as having pragmatic and hedonic value.

1 INTRODUCTION

Computer modelling of 2D drawings is becoming

increasingly popular in modern design (Cook and

Agah, 2009) and this can be observed in the shift

in computer modelling applications from software

such as AutoCAD (AutoDesk Inc, 2014) and CA-

TIA (Dassault Systems, 2014) among others, targeted

for engineers and architects to others such as Sketch-

Up (Trimble Navigation Limited, 2013) among oth-

ers, which target the general consumer market. De-

spite the fact that commercial computer modelling

interfaces are becoming more user-friendly, they are

primarily based on window, icon, menu and pointer

(WIMP) interfaces which contrast with the ease and

flexibility with which pen and paper sketches can be

created (Cruz and Velho, 2010; Olsen et al., 2011).

Thus, paper-based sketches are still popularly used by

designers to sketch initial ideas. Although not necessarily accurate, sketches allow the designer to start depicting ideas and build on them, creating flat, 2D representations of the designer's initial ideas.

Thus, pen and paper sketching has an important

role in the design process, allowing the artist to exter-

nalise thought concepts quickly and efficiently (Cook

and Agah, 2009; Schweikardt and Gross, 2000). In

addition, since human observers can understand 2D

drawings as abstractions of the 3D world, artists can

use sketches as effective communication tools (Cruz

and Velho, 2010). This is particularly useful in com-

mercial design, allowing the artist to present the client

with initial designs before the final construction be-

gins (Cook and Agah, 2009). In modern design how-

ever, the computer modelling software provides for

enhanced graphics, such as virtual walk-through and

dynamic interaction, which augment the level of com-

munication between the artist and client (Schweikardt

and Gross, 2000), such that computer models of the

initial designs also have an important role in the de-

sign process. Therefore, the initial design stage will

typically involve quick pen and paper sketches which

are then re-drawn, sometimes by dedicated artists,

with computer modelling software (Eissen and Steur,

2007; Olsen et al., 2009).

The research trend in computer-based modelling

focuses on bridging the gap between pen and pa-

per sketching and the WIMP interfaces by creating

sketch-based interfaces (SBIs) that are as natural as


Bonnici A., Israel J., Muscat A., Camilleri D., Camilleri K. and Rothenburg U..

Investigating User Response to a Hybrid Sketch Based Interface for Creating 3D Virtual Models in an Immersive Environment.

DOI: 10.5220/0005320504700477

In Proceedings of the 10th International Conference on Computer Graphics Theory and Applications (GRAPP-2015), pages 470-477

ISBN: 978-989-758-087-1

Copyright © 2015 SCITEPRESS (Science and Technology Publications, Lda.)

possible (Lai and Zakaria, 2010), thus bringing together the flexibility of pen-and-paper sketching with computer-based modelling.

In this paper, we build on the paper-based SBI

and immersive modelling environments described in

(Bartolo et al., 2008) and (Israel et al., 2013) respec-

tively to create a new SBI that combines 2D sketch-

ing with immersive 3D modelling. This interface dif-

fers from others described in the literature in that 2D

sketching can be performed online within the immer-

sive environment and in an offline environment, such

that 3D models can be projected in the immersive en-

vironment from the user pen-and-paper sketches, thus

creating a hybrid SBI that accepts online and offline

sketching as input. We also report the results of a

user study performed using both sketching modalities, hence observing the users' perception of the new interface.

The rest of this paper is organised as follows: Sec-

tion 2 presents the related work; Section 3 presents

our proposed sketch-based interface; the methodol-

ogy employed for the user evaluation is presented in

Section 4, with results discussed in Section 5, while

Section 6 concludes the paper.

2 RELATED WORK

Sketch based interfaces generally incorporate ges-

tures and sketching to allow the user to create 3D

models from drawings. Gestures, which can be cre-

ated using tools and instruments like pens, can range

from simple editing commands such as the deletion of

strokes, to more complex, 3D modelling commands

such as extrusion and lofting commands (Zeleznik

et al., 2006; Fonseca et al., 2002). To help the user

visualise the effect of the gesture, it is common prac-

tice for SBIs to temporarily visualise the gesture trace

as lines or strokes. Gestures therefore facilitate the

interpretation of the sketch, but require that the user

has a good knowledge of the gestures and their actions. Thus, sketch-based interfaces must strike a balance between sketching freedom and the use of gestures which aid the interpretation of the sketch.

One such interface is CHATEAUX (Igarashi et al.,

1997) which allows the artist to sketch in 3D, pro-

viding thumbnails with different possibilities with

which a sequence of strokes can be completed. While

such a suggestive interface can help speed up the

modelling process, it is somewhat intrusive, limit-

ing the design exploration to the suggested mod-

els provided by the interface. Less intrusive inter-

faces which also provide more drawing flexibility

are attained through blob-like inflations of 2D con-

tours, such as TEDDY (Igarashi et al., 1999) and

SHAPESHOP (Schmidt et al., 2006) among others.

These allow the designer to create blob-like models from the contours. By creating models from sketched contours, these interfaces provide for a natural drawing style; however, the inflations used for the 3D modelling limit the applicability of these interfaces to blob-like models. To amend this,

additional sketched gestures in the 3D space are re-

quired to mold the model into the desired shape. Such

gestures could range from simple inflation or defla-

tion of the blob-like model to more complex defor-

mation tools that are loosely modelled on deforma-

tions that are used to form clay sculptures, with DIG-

ITAL CLAY (Schweikardt and Gross, 2000) and FI-

BREMESH (Nealen et al., 2007) providing examples

of such interfaces.

These sketching modalities can be extended to in-

troduce fully immersive drawing (Perkunder et al.,

2010), (Israel et al., 2013), whereby a rendering system and an optical tracking system are used to allow the user

to sketch and interact with 3D objects in a virtual en-

vironment within a five-sided CAVE. Freehand draw-

ing and modelling are carried out using three tangi-

ble interfaces, namely a stylus to draw virtual ink in

the virtual environment, a pair of pliers which allow

the user to group, reposition and release virtual ob-

jects in the CAVE and a Bezier-tool which allows the

user to extrude a Bezier curve in 3D space, follow-

ing the movement of a two-handed tool (Israel et al.,

2009). With this system, users are not restricted to any

particular gestures or sketching language and there-

fore, after overcoming the missing physical sketch-

ing medium, users are allowed greater sketching free-

dom than other interfaces mentioned earlier. More-

over, it has been shown that designers are able to learn

the necessary interaction techniques to interact with

the immersive environment, albeit with a rather steep

learning curve (Wiese et al., 2010).

These interfaces model the 3D geometries incre-

mentally, building the 3D shape as the user sketches

and makes use of gestures. Sketching must therefore

be carried out in an online fashion and, in the par-

ticular case of Israel et al., within the immersive en-

vironment, thus precluding the use of pen-and-paper

sketching. In contrast, Bartolo et al. describe a

sketching interface which infers the 3D geometry of

the sketch in an offline manner, allowing the user to

sketch with real ink on real paper, as well as with dig-

ital ink on graphic tablets. Using this SBI, the user’s

sketch is expected to contain two components namely,

the sketched longitudinal profile of the object, which

defines the object shape, and annotations, which aug-

ment the sketch with additional information about the


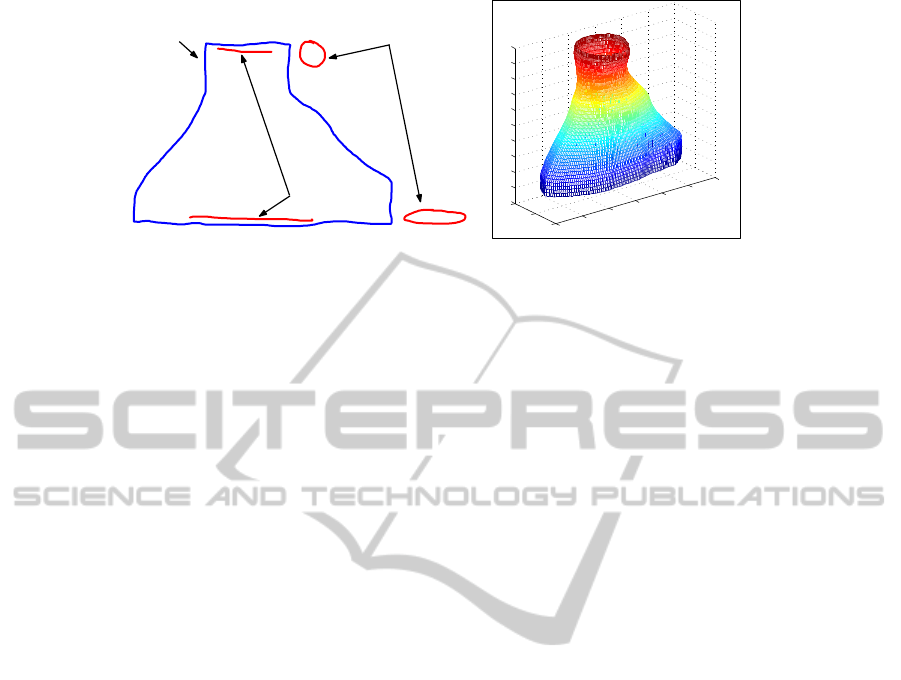

[Figure 1 panels: (a) annotated sketch with the labels "Longitudinal sketch", "Cross-sectional profiles" and "Plane lines"; (b) plot of the resulting 3D model]

Figure 1: (a) Example of a sketch drawn using the sketching language of (Bartolo et al., 2008). (b) The resulting 3D model.

3D geometry of the object. The annotations can be

further divided into plane lines and cross-sectional

profiles as shown in Figure 1. Cross-sectional pro-

files are used to define the cross-sectional shape of

the object while plane lines are used to indicate the

place on the sketch where the cross-sectional profiles

should be applied. The user is required to use differ-

ent colours for the sketch and annotations, allowing

the interpretation algorithms to demarcate the sketch

from the annotations. Although the cross-sectional

profiles define the 3D shape of the object at the plane

on which they reside, to create the full 3D model, the

object’s cross-sectional shape at intermediary planes

is required. The cross-sectional shape of the object

at these intermediary planes is determined by linearly

interpolating the shape of the cross-sectional profiles,

while the size and number of intermediary profiles re-

quired can be determined from the shape of the longi-

tudinal sketch (Bartolo et al., 2008).
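The interpolation step described above can be illustrated with a minimal sketch. It assumes, purely for illustration, that both annotated cross-sectional profiles have been resampled to the same number of points in corresponding order, that the intermediary planes are evenly spaced, and that the width of the longitudinal sketch at each plane is supplied as a relative scale factor. The function names are hypothetical and are not taken from (Bartolo et al., 2008).

```python
from typing import List, Tuple

Point = Tuple[float, float]


def interpolate_profile(lower: List[Point], upper: List[Point], t: float) -> List[Point]:
    """Linearly blend two cross-sectional profiles; t=0 gives lower, t=1 gives upper."""
    if len(lower) != len(upper):
        raise ValueError("profiles must be resampled to the same number of points")
    return [
        ((1.0 - t) * xl + t * xu, (1.0 - t) * yl + t * yu)
        for (xl, yl), (xu, yu) in zip(lower, upper)
    ]


def intermediary_profiles(
    lower: List[Point], upper: List[Point], scales: List[float]
) -> List[List[Point]]:
    """Build one profile per intermediary plane.

    scales[i] is the (assumed) relative width of the longitudinal sketch at
    plane i; the planes are spaced evenly between the two annotated profiles.
    """
    n = len(scales)
    result = []
    for i, s in enumerate(scales, start=1):
        t = i / (n + 1)  # fractional position between the annotated planes
        blended = interpolate_profile(lower, upper, t)
        result.append([(s * x, s * y) for x, y in blended])
    return result


# A unit square blending into a square twice its size, with one midway plane.
square = [(-0.5, -0.5), (0.5, -0.5), (0.5, 0.5), (-0.5, 0.5)]
big_square = [(2 * x, 2 * y) for x, y in square]
mid = intermediary_profiles(square, big_square, scales=[1.0])[0]
```

In this toy case the midway profile is a square with half-width 0.75, halfway between the two annotated shapes. A real implementation would also need to establish point correspondence between profiles of different shapes, which is not addressed here.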

Although this SBI allows the user to obtain 3D

models from offline sketches, the SBI does not of-

fer support for further interaction with the 3D model,

such that, if any part of the object needs modifica-

tion, the user must either redraw the sketch or port

the 3D model to some other SBI. In the latter case,

the user must engage with the object using the different sketching rules of the second interface. Ideally, a user would have an SBI that allows for both offline and online sketching modalities, providing for consistency between the two modalities.

3 A HYBRID SKETCH BASED

INTERFACE

In this work, we build upon the offline SBI of (Bartolo

et al., 2008) and the immersive modelling of (Israel

et al., 2013) to create a preliminary hybrid SBI that

allows for offline and online sketching modalities, us-

ing a common sketching language as the sketch in-

put while allowing for a seamless interaction with the

completed 3D model.

3.1 Objects That Can Be Modelled

Using this preliminary SBI, the user will be able to create 3D models of objects that have a single axis; however, the object does not need to be symmetric about this axis. The interface assumes that the topmost and bottommost cross-sections are flat, while the bottommost cross-section must be drawn such that it is in a horizontal position.

3.2 Offline Sketching Modality

Using this modality, the user sketches the object using the prescribed sketching language, with either real pen-and-paper or a graphics tablet as the sketching medium, and then scans or saves the sketch as an image for processing. The 3D geometry of the object is inferred from the sketch and can be shown as a static 3D model on the computer monitor or on the immersive screen used in the online sketching modality.

The sketching language required for the sketch is

similar to that described in (Bartolo et al., 2008); the

user is required to sketch the longitudinal profile of

the object in one colour and provide annotations in

a different colour. However, we simplify the anno-

tations required by retaining only the cross-sectional

profiles and using the centre of moment of the cross-

sectional shape to determine the location of the plane

which bears this cross-sectional shape, thus rendering

the plane lines redundant.
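As a rough illustration of how a plane can be located without plane lines, the sketch below computes the area centroid of a closed cross-sectional profile with the shoelace formula and uses its vertical coordinate as the plane position. Reading "centre of moment" as the area centroid is an assumption made here, as are the function names and the image-coordinate convention.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def polygon_centroid(profile: List[Point]) -> Point:
    """Area centroid of a closed, non-self-intersecting profile (shoelace formula)."""
    twice_area = 0.0
    cx = 0.0
    cy = 0.0
    n = len(profile)
    for i in range(n):
        x0, y0 = profile[i]
        x1, y1 = profile[(i + 1) % n]  # wrap around to close the contour
        cross = x0 * y1 - x1 * y0
        twice_area += cross
        cx += (x0 + x1) * cross
        cy += (y0 + y1) * cross
    if twice_area == 0.0:
        raise ValueError("degenerate profile with zero area")
    # Centroid = sum / (6 * area), and 6 * area equals 3 * twice_area.
    return cx / (3.0 * twice_area), cy / (3.0 * twice_area)


def plane_height(profile: List[Point]) -> float:
    """Vertical image coordinate at which the profile's plane is placed (assumed)."""
    return polygon_centroid(profile)[1]


# A rectangular cross-sectional profile drawn around the image row y = 120.
rect = [(40.0, 110.0), (80.0, 110.0), (80.0, 130.0), (40.0, 130.0)]
centre = polygon_centroid(rect)
```

For this rectangle the centroid is (60, 120), so the associated plane would be placed at row 120 of the sketch.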

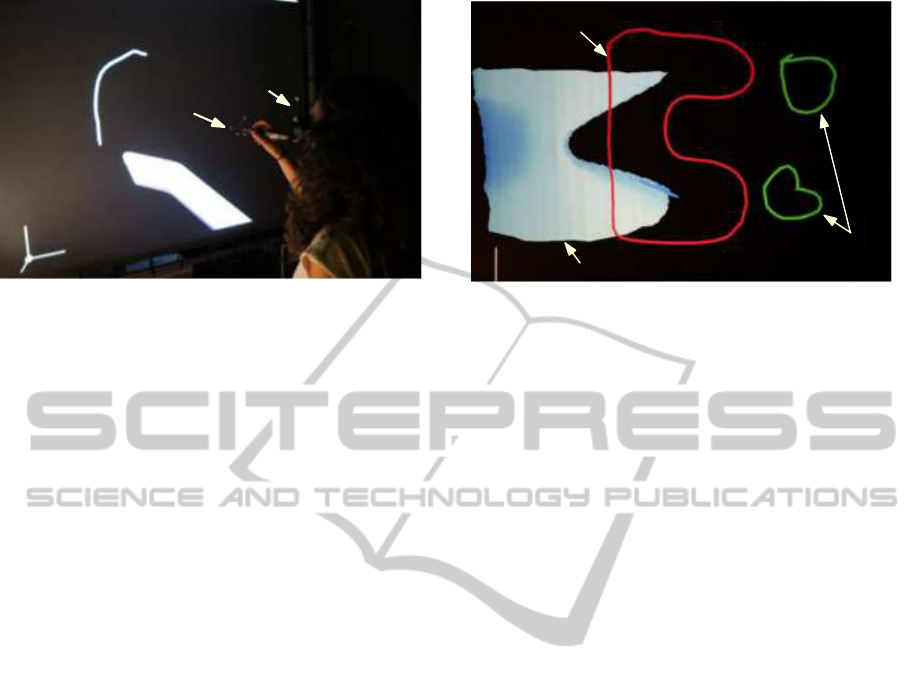

3.3 Online Sketching Modality

The online sketching modality adapts the offline

sketching language to an immersive environment. As

shown in Figure 2, the setup consists of an immersive


[Figure 2 labels: immersive screen, stylus pen, head tracker]

Figure 2: Sketching in the immersive setup. The user is seen here drawing the longitudinal profile using the stylus pen. Once finished, the sketched longitudinal profile turns red, showing that it has been correctly recognized.

screen together with a head tracking device. This al-

lows the user to observe the complete 3D model from

different angles. In this setup, we use two of the tangi-

ble interfaces described in (Israel et al., 2013), namely

the stylus which allows the user to sketch in virtual

ink and the pliers tool which allows the user to grab

and move the 3D object.

Since the sketch is being drawn in an online man-

ner, and the nature of the sketching language requires

that the user draws the longitudinal profile first in or-

der to obtain a reference against which the annotated

cross-sectional profiles are sketched, the sketch interpretation can use the temporal information to distinguish between the longitudinal profile and the annotated cross-sectional profiles. Thus, using the online modality, the user is not required to use different pen colours for the longitudinal profile and the cross-sectional profiles. However, colours are

introduced by the interface as a form of feedback,

changing the colour of the longitudinal profile from

green to red, providing visual feedback to the user,

indicating that the sketched strokes have been inter-

preted correctly by the system. The pen colour then

switches automatically to the default green, allowing

the user to sketch the cross sectional profiles, such

that the completed sketch will consist of a red longi-

tudinal profile and green cross sectional profiles.
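The use of drawing order in place of pen colour can be sketched as a small piece of bookkeeping. The example assumes, for simplicity, that each profile is drawn as a single stroke and that recognition always succeeds; the class and field names are hypothetical and do not describe the actual implementation.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

Point = Tuple[float, float, float]


@dataclass
class OnlineSketch:
    """Labels strokes by drawing order and records the feedback colour of each."""

    strokes: List[Dict] = field(default_factory=list)

    def add_stroke(self, points: List[Point]) -> Dict:
        # The first completed stroke is taken as the longitudinal profile;
        # every later stroke is treated as a cross-sectional profile.
        if not self.strokes:
            role, colour = "longitudinal", "red"  # red signals recognition
        else:
            role, colour = "cross-section", "green"  # default pen colour
        stroke = {"role": role, "colour": colour, "points": points}
        self.strokes.append(stroke)
        return stroke


sketch = OnlineSketch()
first = sketch.add_stroke([(0.0, 0.0, 0.0), (0.0, 1.0, 0.0)])
second = sketch.add_stroke([(0.1, 0.0, 0.0), (0.0, 0.0, 0.1)])
```

Here `first` is labelled as the red longitudinal profile and `second` as a green cross-sectional profile, matching the colouring of the completed sketch described above.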

4 USER EVALUATION

The success of an SBI depends on whether users are

willing to engage with the SBI and for this, the SBI

must be appealing to the user in terms of usability

and functionality. In this case, the user must find mo-

tivation and practical use for both the online sketch-

ing modality as well as the offline sketching modal-

[Figure 3 labels: longitudinal sketch, cross-sectional profiles, 3D model]

Figure 3: The complete sketch and corresponding 3D screen. After drawing the sketch, the 3D virtual model is displayed in blue. This can then be rotated as needed by the user using the pliers tool.

ity for the SBI to be accepted as a hybrid SBI. The

user evaluation therefore seeks to understand if both

sketching modalities are accepted by the user, and

in cases where an immersive system is unavailable,

whether users would also be satisfied by using the of-

fline sketching modality, with the possibility of dis-

playing and interacting with their results in the im-

mersive environment at some later stage.

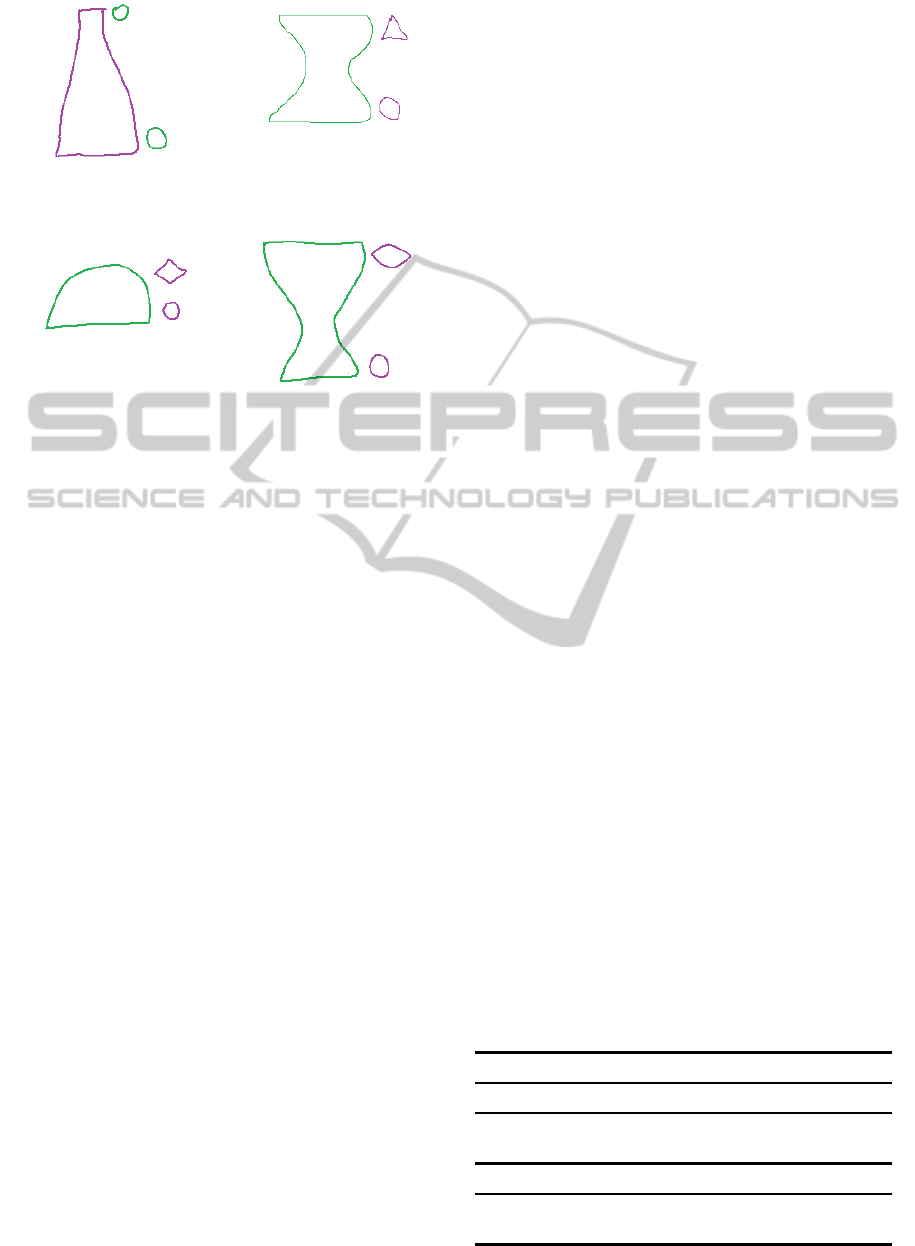

To this end, we asked eight test subjects to try

the SBI. These test subjects were presented with four

different sketches, shown in Figure 4, which had to

be copied in order to obtain a 3D model from each

sketch. The sketches were drawn twice, once using

the online sketching modality and once using the of-

fline sketching modality, resulting in a total of eight

sketching tasks for each user. The subjects included

two females and six males whose age ranged between

21 and 36. Five of the subjects are engineers, two are

computer scientists and one is a human factors expert. In order to ensure that the order of presentation does not affect the outcome of the user evaluation,

four subjects were presented with the offline sketch-

ing modality first, followed by the immersive sketch-

ing modality, while the remaining four subjects were

presented with the immersive modality followed by

the offline modality. For practical reasons, in the of-

fline sketching modality, subjects were given a Genius

G-Pen 450 drawing tablet (Genius G-Pen, 2007) in

lieu of traditional pen-and-paper. The resulting sketch

was then saved as an image and processed, with the fi-

nal 3D model being displayed on the same immersive

screen used for the online sketching modality. Be-

fore drawing the actual sketches, the users were given

time to familiarize themselves with the sketching in-

terfaces and after completing the sketching tasks in

each modality, subjects were asked to fill in a ques-

tionnaire about their experience and the usability of


[Figure 4 panels: Sketch 1, Sketch 2, Sketch 3, Sketch 4]

Figure 4: The annotated sketches presented to the users to

copy. These sketches test the 3D model generation with

different longitudinal profiles and different cross-sectional

profiles.

the system. The time during which the users were en-

gaged in sketching was also recorded.

4.1 The Questionnaire

In order to determine how the users respond to

the SBI, we made use of the AttrakDiff questionnaire (Hassenzahl, 2008), which consists of a

number of 7-point items with bipolar verbal anchors.

This provides a semantic differential scale which is a

rating scale that is able to indicate the attitude of the

user towards the interactive system in use. It is set up in a

way that allows us to evaluate not only the pragmatic

functional quality of the system, but also the hedonic

aspects of the system, providing measures for the user

stimulation, identification with the system and its at-

traction (Hassenzahl, 2008).

The pragmatic quality (PQ) refers to the useful-

ness and usability of the system and can be measured

by asking the user to scale the system in terms of it

being human-centric or computer-centric; simple or

complicated; and confusing or clear amongst others.

The hedonic quality of stimulation (HQS) relates to

the personal need to develop oneself and gain new

skills and knowledge. This is measured by asking

the user to rank the system on a scale of original to

typical; standard to creative. The identification qual-

ity (HQI) refers to the user’s identification with the

system, giving an indication of how well the system

communicates important personal values to the user.

The user identification can be measured by ranking

the system on a scale of professional to amateurish;

cheap to valuable among others. The attraction qual-

ity (ATTR) of the system will give an indication of

whether the users had an overall pleasing interaction

with the system. This can be measured by asking the

user to rank the system on a scale of likeable to unlikeable; and ugly to beautiful (Hassenzahl, 2008).

The questions posed in the questionnaire therefore

provide an insight into the overall user experience of

the system and give an indication of whether a user

would likely engage with the system again. In or-

der to be considered useful and desirable to users,

the proposed hybrid sketch-based interface must have

an above-average ranking in the pragmatic, hedonic and attractive qualities for both the offline and the online sketching modality, implying that users would find both modalities useful and practical.
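To make the scoring concrete, the following minimal sketch averages one participant's 7-point item ratings per dimension and checks them against the scale midpoint of 4. The ratings are invented for illustration, the items are assumed to be oriented so that 7 is the favourable anchor, and taking the overall hedonic quality (HQ) as the mean of HQS and HQI is an assumption of this sketch.

```python
from statistics import mean
from typing import Dict, List


def dimension_scores(ratings: Dict[str, List[int]]) -> Dict[str, float]:
    """Average the 7-point item ratings of one participant per dimension."""
    for dim, items in ratings.items():
        if any(not 1 <= r <= 7 for r in items):
            raise ValueError(f"ratings for {dim} must lie between 1 and 7")
    scores = {dim: mean(items) for dim, items in ratings.items()}
    # Overall hedonic quality taken as the mean of its two sub-dimensions.
    scores["HQ"] = (scores["HQS"] + scores["HQI"]) / 2
    return scores


def above_midpoint(scores: Dict[str, float], midpoint: float = 4.0) -> bool:
    """True when every dimension is rated above the scale midpoint."""
    return all(value > midpoint for value in scores.values())


# Hypothetical ratings for one participant and one sketching modality.
example = {
    "PQ": [5, 4, 6],
    "HQS": [6, 6, 5, 7],
    "HQI": [4, 5, 6],
    "ATTR": [6, 5],
}
scores = dimension_scores(example)
accepted = above_midpoint(scores)
```

Averaging such per-participant scores over all subjects would give one mean and standard deviation per dimension and modality.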

5 RESULTS AND DISCUSSION

Table 1 gives the mean and standard deviation of the

user responses for the pragmatic, hedonic and attrac-

tive qualities of the system. Since the questionnaire

made use of a 7-point scale, whose midpoint is 4, the results in Table 1 show that the mean user response to both sketch modalities is above average on every dimension, indicating that the users responded well to both sketch modalities.

The average results shown in Table 1 show that

the test subjects gave a higher ranking to the hedonic

qualities of both sketching modalities, indicating that

the subjects could identify with and engage well with

both sketching modalities while being able to achieve

the set goals with both sketching modalities. The

lower pragmatic values can be due to the somewhat

restricted set of objects that can be modelled with the

system as well as the limited interaction that can be

Table 1: Average user responses µ to the questionnaire results for the pragmatic qualities (PQ), hedonic qualities of identification (HQI) and stimulation (HQS) and the overall hedonic quality (HQ) and attractiveness (ATTR) of the two sketching modalities, giving also the standard deviation (σ) of the user responses.

| Sketching modality | Statistic | PQ | HQS | HQI | HQ | ATTR |
|---|---|---|---|---|---|---|
| Online | µ | 4.77 | 5.29 | 4.98 | 5.13 | 5.50 |
| Online | σ | 1.02 | 0.47 | 0.71 | 0.45 | 0.5 |
| Offline | µ | 4.18 | 4.39 | 4.41 | 4.40 | 4.68 |
| Offline | σ | 1.44 | 1.52 | 1.14 | 1.03 | 1.57 |


performed within the immersive environment in this system. Increasing the range of interactions could expand the range of objects that can

be modelled and hence increase the usability and use-

fulness of the system.

Table 1 also shows that the test subjects ranked the two sketching modalities differently on the dimensions posed by the questionnaire.

Some differences in the user responses are to a certain

extent expected and are due to the different nature of

the sketching modalities. For example, when using

the online sketching modality, the 3D model could

be displayed instantaneously and the user could interact directly with the 3D model, whereas the offline sketching modality required that the generated

3D models were manually passed to the immersive

setup via a USB drive, incurring a delay between the

completion of the sketch and the display of the 3D

model in the immersive environment. Moreover, the

lab environment could have made the practical aspect

of the offline sketching paradigm, namely that the de-

sign concepts can be created when away from the im-

mersive setup while retaining the ability to display

and later manipulate these models in the immersive

environment, difficult to communicate to the test sub-

jects. Thus, the online sketching modality can be per-

ceived as more practical and less cumbersome than

the offline sketching modality.

The different sketching modalities could also af-

fect the hedonic qualities of the two systems. For ex-

ample, drawing on a graphics tablet is similar to draw-

ing on paper, such that the offline sketching modality

may appear to be more identifiable than stimulating,

while sketching in virtual ink, which has the added difficulty of there being no physical drawing medium, may appear to be more challenging than stimulating.

Overall, the above-average responses obtained for both sketching modalities indicate that the users found the online and offline sketching modalities to be somewhat interchangeable. The results show that

there is a tendency for users to give a higher rank-

ing to the immersive system. For this reason, a one-

way ANOVA was performed on the user responses to

each of the pragmatic, hedonic and attractive qualities

of the two sketching modalities in order to determine

whether the difference observed is significant. Table 2

gives the result of this test, which shows that there is no statistically significant difference between the mean user responses to the two sketching modalities. Although the

greatest difference is observed in the overall hedonic

qualities, the ANOVA shows that there is no statistically significant difference between the mean user responses to

questions on the stimulation and identification hedo-

nic qualities of the two sketching modalities. Thus,

although there are some differences between the user

Table 2: Results of the ANOVA at the 95% confidence level, of the user responses on the pragmatic, hedonic and attractive aspects of the two sketching modalities.

| Quality | F | p-value |
|---|---|---|
| PQ | 0.894 | 0.360 |
| HQ | 3.392 | 0.087 |
| HQS | 2.533 | 0.134 |
| HQI | 1.441 | 0.250 |
| ATTR | 1.983 | 0.181 |

responses in the questionnaire, the subjects in this

evaluation do not show a significant preference to ei-

ther sketching modality.
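As a worked check, the F statistic of a one-way ANOVA with equally sized groups can be recomputed from the summary statistics in Table 1. The sketch below assumes eight responses per modality and that σ denotes the sample standard deviation; the function name is illustrative. The critical value of F(1, 14) at the 5% level, 4.60, is taken from standard tables.

```python
from typing import Sequence


def one_way_anova_f(means: Sequence[float], stds: Sequence[float], n: int) -> float:
    """F statistic for groups of equal size n, from group means and sample SDs."""
    k = len(means)
    grand_mean = sum(means) / k
    # Between-group mean square: spread of the group means around the grand mean.
    ms_between = n * sum((m - grand_mean) ** 2 for m in means) / (k - 1)
    # Within-group mean square: pooled variance (valid for equal group sizes).
    ms_within = sum(s ** 2 for s in stds) / k
    return ms_between / ms_within


# Pragmatic quality (PQ) from Table 1: online vs offline, assuming n = 8 each.
f_pq = one_way_anova_f(means=[4.77, 4.18], stds=[1.02, 1.44], n=8)

# Critical value of F(1, 14) at the 5% significance level, from standard tables.
F_CRITICAL = 4.60
significant = f_pq > F_CRITICAL
print(round(f_pq, 3), significant)  # prints: 0.894 False
```

Under these assumptions the computed value agrees with the PQ entry in Table 2. It falls well below the critical value, in line with the conclusion that the difference in pragmatic quality is not significant.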

The recorded times taken by the users to complete the four drawing tasks in both sketching modalities are given in Figure 5(a). This shows that the users in

general required more time to complete the sketches

in the offline sketching modality, with all median

times being larger for the offline sketching modality

than for the online sketching modality. However, one

may note that there is higher variability in the time

spent during the offline sketching modality than the

online sketching modality, particularly for sketches

three and four. This is an indication that the time

spent in the offline sketching modality is more user

dependent than the online sketching modality. This

can in fact be observed in the average time each user

spent while sketching in online and offline modes as

shown in Figure 5(b). From this, one may note that while participants 4, 6, 7, and 8 show very little difference in the time spent sketching, participants 2, 3, and 5 spent considerably more time on the offline

sketching modality. This is mainly due to the differ-

ences in the offline and online nature of sketching.

When drawing on the graphics tablet, the user was at

liberty to modify the sketch, removing any unwanted

parts, modifying others or even redrawing parts of the

sketch, as one would typically do when drawing us-

ing pen-and-paper. In the online environment how-

ever, we adopted the pen-computer interaction typi-

cally used in the absence of icons, that is, the ink is

interpreted upon pen release, such that the system interprets the digital ink once, as soon as it has been drawn, without offering the option to adjust any part of the sketch. Thus, any users wanting to make modifications while engaged in online sketching were not able to do so with this system.

6 CONCLUSION

In conclusion, this user study showed that this system

has both the pragmatic and hedonic qualities which

could be further developed into a fully fledged, hy-


[Figure 5 panels: (a) "Time to complete each sketch", time (s) against sketch number (1 to 4) for the offline and online sketching modalities; (b) "Time each user spent on sketching tasks", time (s) against user (2 to 8), showing the mean time for the online modality, the mean time for the offline modality and the one standard deviation range]

Figure 5: (a) Time spent by the users to complete each sketch. (b) The average time and corresponding standard deviation error

that each individual user spent on all four sketching tasks in the online and offline modalities. Note that time measurements

were available for all but the first user participating in the evaluation study and that even numbered participants started with

the online sketching modality followed by the offline sketching modality while odd numbered participants approached the

tasks in reverse order.

brid sketching interface. By providing the user with

more scope for interaction with the sketched objects,

the possible geometries that can be created using this

hybrid interface can be extended beyond the scope of

this user study, increasing the utility and applicability

of the SBI. Furthermore, by automating the transfer of

the paper-based sketches into the immersive environ-

ment, less effort is required by the user to obtain the

3D models when sketching in the offline modality, al-

lowing the user to take advantage of an input modality

with which the user can already identify.

The results obtained from this user study are en-

couraging and show that it is possible to integrate of-

fline and online sketching modalities, while retain-

ing a system that has pragmatic and hedonic qualities.

Moreover, this study shows that users can be given the

flexibility to choose their preferred sketching modal-

ity without reducing the quality of the generated 3D

models.

ACKNOWLEDGEMENTS

This research was done in collaboration with the

Fraunhofer Institute for Production Systems and De-

sign Technology Berlin. It was supported by VI-

SIONAIR, a project funded by the European Com-

mission under grant agreement 262044.

REFERENCES

AutoDesk Inc (2014). Autocad. http://www.autodesk.com/

products/autocad/overview. Last Accessed: 08-09-

2014.

Bartolo, A., Farrugia, P., Camilleri, K., and Borg, J. (2008).

A profile-driven sketching interface for pen-and-paper

sketches. In VL/HCC Workshop: Sketch Tools for Di-

agramming.

Cook, M. and Agah, A. (2009). A survey of sketch-based

3-d modeling techniques. Interacting with computers,

21:201–211.

Cruz, L. and Velho, L. (2010). A sketch on sketch-based in-

terfaces and modeling. In Graphics, Patterns and Im-

ages Tutorials (SIBGRAPI-T), 2010 23rd SIBGRAPI

Conference on, pages 22–33.

Dassault Systems (2014). Catia. http://www.3ds.com/

products-services/catia/. Last Accessed: 09-12-2014.

Eissen, K. and Steur, R. (2007). Sketching. Drawing Tech-

niques for Product Designers. BIS Publishers.

Fonseca, M. J., Pimentel, C., and Jorge, J. A. (2002). Cali:

An online scribble recognizer for calligraphic inter-

faces. In Sketch Understanding, Papers from the 2002

AAAI Spring Symposium. Citeseer.

Genius G-Pen (2007). Australia products review and rating

website. http://www.reviewproduct.com.au/ genius-g-

pen-450-graphics-pad/. Last Accessed: 10-12-2014.

Hassenzahl, M. (2008). The interplay of beauty, goodness,

and usability in interactive products. Hum.-Comput.

Interact., 19(4):319–349.

Igarashi, T., Matsuoka, S., Kawachiya, S., and Tanaka, H.

(1997). Interactive beautification: a technique for

rapid geometric design. In UIST ’97: Proceedings

of the 10th annual ACM symposium on User interface

software and technology, pages 105–114, New York,

NY, USA. ACM.

Igarashi, T., Matsuoka, S., and Tanaka, H. (1999). Teddy:

A sketching interface for 3d freeform design. In Pro-

ceedings of the 26th Annual Conference on Computer

Graphics and Interactive Techniques, SIGGRAPH


’99, pages 409–416, New York, NY, USA. ACM

Press/Addison-Wesley Publishing Co.

Israel, J., Wiese, E., Mateescu, M., Zöllner, C., and Stark, R. (2009). Investigating three-dimensional sketching for early conceptual design: results from expert discussions and user studies. Computers & Graphics, 33(4):462–473.

Israel, J. H., Mauderli, L., and Greslin, L. (2013). Mastering

digital materiality in immersive modelling. In Pro-

ceedings of the International Symposium on Sketch-

Based Interfaces and Modeling, SBIM ’13, pages 15–

22, New York, NY, USA. ACM.

Lai, C.-Y. and Zakaria, N. (2010). As sketchy as possible:

Application programming interface (api) for sketch-

based user interface. In Information Technology (IT-

Sim), 2010 International Symposium in, volume 1,

pages 1–6.

Nealen, A., Igarashi, T., Sorkine, O., and Alexa, M.

(2007). Fibermesh: Designing freeform surfaces with

3d curves. ACM Trans. Graph., 26(3).

Olsen, L., Samavati, F., and Jorge, J. (2011). Naturasketch:

Modeling from images and natural sketches. Com-

puter Graphics and Applications, IEEE, 31(6):24–34.

Olsen, L., Samavati, F. F., Sousa, M. C., and Jorge, J. A.

(2009). Sketch-based modeling: A survey. Computers

& Graphics, 33(1):85 – 103.

Perkunder, H., Israel, J. H., and Alexa, M. (2010). Shape

modeling with sketched feature lines in immersive 3d

environments. In Do, E. Y.-L. and Alexa, M., editors, ACM SIGGRAPH/Eurographics Symposium on Sketch-Based Interfaces and Modeling SBIM10, pages 127–134.

Schmidt, R., Wyvill, B., Sousa, M. C., and Jorge, J. A.

(2006). Shapeshop: Sketch-based solid modeling with

blobtrees. In ACM SIGGRAPH 2006 Courses, SIG-

GRAPH ’06, New York, NY, USA. ACM.

Schweikardt, E. and Gross, M. D. (2000). Digital clay: de-

riving digital models from freehand sketches. Automa-

tion in Construction, 9(1):107 – 115.

Trimble Navigation Limited (2013). Sketchup. http://

www.sketchup.com/. Last Accessed: 08-09-2014.

Wiese, E., Israel, J. H., Meyer, A., and Bongartz, S.

(2010). Investigating the learnability of immersive

free-hand sketching. In Proceedings of the Seventh

Sketch-Based Interfaces and Modeling Symposium,

SBIM ’10, pages 135–142, Aire-la-Ville, Switzerland. Eurographics Association.

Zeleznik, R. C., Herndon, K. P., and Hughes, J. F. (2006).

Sketch: An interface for sketching 3d scenes. In

ACM SIGGRAPH 2006 Courses, SIGGRAPH ’06,

New York, NY, USA. ACM.
