Virtual Immersive Environments for Underwater Archaeological

Exploration

Massimo Magrini, Maria Antonietta Pascali, Marco Reggiannini,

Ovidio Salvetti and Marco Tampucci

SILab, Institute of Information Science and Technologies - CNR, Via G. Moruzzi 1, 56124, Pisa, Italy

Keywords: Underwater Cultural Heritage, Image-based Modelling and 3D Reconstruction, Underwater Optical and

Acoustic Data Processing, Virtual Environment.

Abstract: In this paper we describe a system designed for the virtual exploration of underwater archaeological sites. It is under
development in the ARROWS project (ending in August 2015, funded by the European Commission), along with
other advanced technologies and tools for mapping, diagnosing, cleaning, and securing underwater and coastal
archaeological sites. The main objective is to ease the management of the heterogeneous set of data

available for each underwater archaeological site (archival and historical data, 3D measurements, images,

videos, sonograms, georeference, texture and shape of artefacts, others). All the data will be represented in a

3D interactive and informative scene, making the archaeological site accessible to experts (for research

purposes, e.g. classification of artefacts by template matching) and to the general public (for dissemination of

the underwater cultural heritage).

1 INTRODUCTION

Approximately 72% of the Earth's surface is
covered by marine water. The oceans represent a rich
source of discovery and knowledge in several fields,
from ecology to archaeology. Despite its huge
extent and its relevance to climate
trends and underwater biology, the seabed
remains largely unexplored. This is

mostly due to the extreme conditions of the
underwater environment, which prevent the majority
of people from taking part in direct
exploration. Indeed, the survey of these locations
typically requires large amounts of funding and
expertise, and may be a serious threat to the explorer's
safety. Current computer technologies make it possible to
overcome these limitations and can extend the

information enclosed in the marine environment to a

wider community. In this scenario, our activity has

been focused on the development of a system with the

purpose of creating virtual scenes as a photorealistic

and informative representation of the underwater

sites. Through the virtualization of marine

environments, a generic user may explore a simulated

scene, realistically replicating the underwater survey

experience. The virtual scene allows the

archaeologists to perform offline analysis of wrecks

and provides the public with a tool to visit the

archaeological site, without risk of damage to the site or
danger to the visitor.

The system presented in this paper has been
developed within the framework of ARROWS, an EU-FP7 project
devoted to the development of low-cost technologies
for underwater archaeological site detection and
preservation. In more detail, ARROWS
focuses on the realization of tools for underwater site
mapping, diagnosis and cleaning. To this end, a
team of heterogeneous Autonomous Underwater
Vehicles (AUVs) is currently being developed. These
vehicles sense the environment by means of suitable
underwater sensors such as digital cameras, side scan
sonar or multibeam echo-sounders. The virtual

representation of the marine seabed is based on the

data captured by the AUVs during the project

missions, data that are exploited both for the detection
of interesting sites and for the 3D reconstruction
and virtual environment implementation. The

resulting executable procedure is expected to be

appealing to the generic user: the virtual diving in

underwater scenarios features a wide set of possible

choices in terms of exploration actions, recreating in

a realistic way the survey and the discovery of

relevant archaeological sites. The following sections

Magrini M., Pascali M., Reggiannini M., Salvetti O. and Tampucci M.
Virtual Immersive Environments for Underwater Archaeological Exploration.
DOI: 10.5220/0005461700530057
In Proceedings of the 5th International Workshop on Image Mining. Theory and Applications (IMTA-5-2015), pages 53-57
ISBN: 978-989-758-094-9
Copyright © 2015 SCITEPRESS (Science and Technology Publications, Lda.)

of the paper contain a description of the procedure

setup for the active scenario realization and the data

employed to enrich the underwater scene. Finally, a

case study will be presented, where the described

method will be applied to the representation of an

underwater archaeological site, based on a data

capture campaign performed in a realistic underwater

scenario.

2 THE SYSTEM, METHODS AND

TECHNOLOGIES

A system designed for the research and dissemination

in underwater archaeology must fulfil many

requirements: it must offer an accurate reproduction

of the site and of the objects lying in it; it must let the

users explore the scene at their own level of

education, with an interaction as natural as possible,

and possibly allow some actions (e.g. exporting 3D

models). An example of a virtual reality tool devoted

to the visualization of the underwater environment

and to the simulation of underwater robotics is

UWSim (M. Prats, 2012). Our system is a more
sophisticated tool; indeed, it meets the need for an

easy but not simplistic visualization of the data

collected and available about an underwater

archaeological site.

The major features of the system, as a tool for

dissemination and research in the underwater cultural

heritage, are listed below:

⋅ Different usage by user type

⋅ Interactive, Informative and Immersive

⋅ Accurate virtual reconstruction.

To fulfil the
requirements and purposes described above, the
system has been designed and developed exploiting
advanced technologies and enriched with a
set of dedicated functionalities. Indeed, the system
adapts to the needs of the user,
providing different functionalities for different
usage approaches. By distinguishing two
kinds of users and, along with them, two
approaches, the system has been developed as a
technical tool for specialized users and as a powerful
dissemination tool for the public.

The system provides a set of scenes that can be freely

explored by the user. These scenes are the result of a
processing pipeline dedicated to 3D
reconstruction, starting from raw data acquired
by sensor-equipped Autonomous Underwater
Vehicles during dedicated campaigns.

The user can interact with the scene objects and
access additional data concerning them. These data
are:

⋅ videos captured during the ARROWS

missions or already available from
pre-existing resources;

⋅ raw data captured by different sensors

(sonograms, etc.);

⋅ the complete reconstructed 3D mesh of the

objects, displayed separately from the scene

and available for observations from multiple

points of view;

⋅ any supplementary information.

Moreover, the system is connected to a database
that manages historical information about the objects
represented in the scenes. These data describe
the object’s dimensions, material
and history (information of interest to
archaeologists).

The system also provides a set of functionalities
for measuring the model. This tool can be very
useful to obtain further information about the
discovered artefacts and, as in the case of jars, to
classify their type and/or define their function (e.g.
to distinguish between funerary, wine and food jars).
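As an illustration of the kind of measurement mentioned above, the following Python sketch estimates the height and maximum diameter of a jar from the vertices of its reconstructed mesh. It is not the measurement tool implemented in the system: the function names are hypothetical, and the sketch assumes that the jar's symmetry axis is aligned with z and passes through the centre of the bounding box.

```python
import math


def bounding_box(vertices):
    """Return (min_corner, max_corner) for a list of (x, y, z) vertices."""
    xs, ys, zs = zip(*vertices)
    return (min(xs), min(ys), min(zs)), (max(xs), max(ys), max(zs))


def jar_measurements(vertices):
    """Estimate height and maximum diameter of a jar standing along z.

    Assumption: the symmetry axis is parallel to z and passes through
    the centre of the bounding box in the x-y plane.
    """
    (x0, y0, z0), (x1, y1, z1) = bounding_box(vertices)
    cx, cy = (x0 + x1) / 2.0, (y0 + y1) / 2.0
    # Largest horizontal distance of any vertex from the assumed axis.
    max_radius = max(math.hypot(x - cx, y - cy) for x, y, _ in vertices)
    return {"height": z1 - z0, "max_diameter": 2.0 * max_radius}


def point_distance(p, q):
    """Euclidean distance between two picked points on the model."""
    return math.dist(p, q)
```

Ratios such as height over maximum diameter could then serve as simple descriptors when comparing a finding against known jar types; which descriptors are actually discriminative is a matter for the archaeologist.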

2.1 From Data to the 3D Model:

The Pipeline

As mentioned before, data about the underwater

environment are acquired by AUVs during dedicated
missions. The AUVs are equipped with several sensors
(such as optical cameras, side scan sonar, etc.) whose
data contribute to the reconstruction of the scene. The

processing pipeline in charge of 3D reconstruction is

described in the following.

Once the mission is completed, the data are

downloaded from the AUVs' internal memories. The
downloaded data are then processed with algorithms
devoted to the detection of artefacts (D. Moroni 2013
and D. Moroni 2013). System operators can select the
most interesting scenes in order to perform further
accurate analysis and to reconstruct from them the
artefact 3D models. The sequences are analysed
frame by frame. The system automatically calibrates
the video frames, balances the colours and corrects
other aberrations that occurred during the acquisition phase
(R. Prados, 2014). Exploiting advanced

photogrammetry algorithms and tools, the system

correlates each extracted frame with the others and

generates a point cloud (stored as a .ply file, a
standard file format for point clouds as well as meshes,
which can also hold RGB information for every point) (O.

Pizarro, 2004 and T. Nicosevici, 2013). From this

point cloud, the system builds an optimized mesh
(created by the triangulation of the Poisson surface
that best fits the point cloud), using dedicated
software such as MeshLab (Meshlab) and/or Blender

(Blender). Finally the mesh is exploited to obtain the

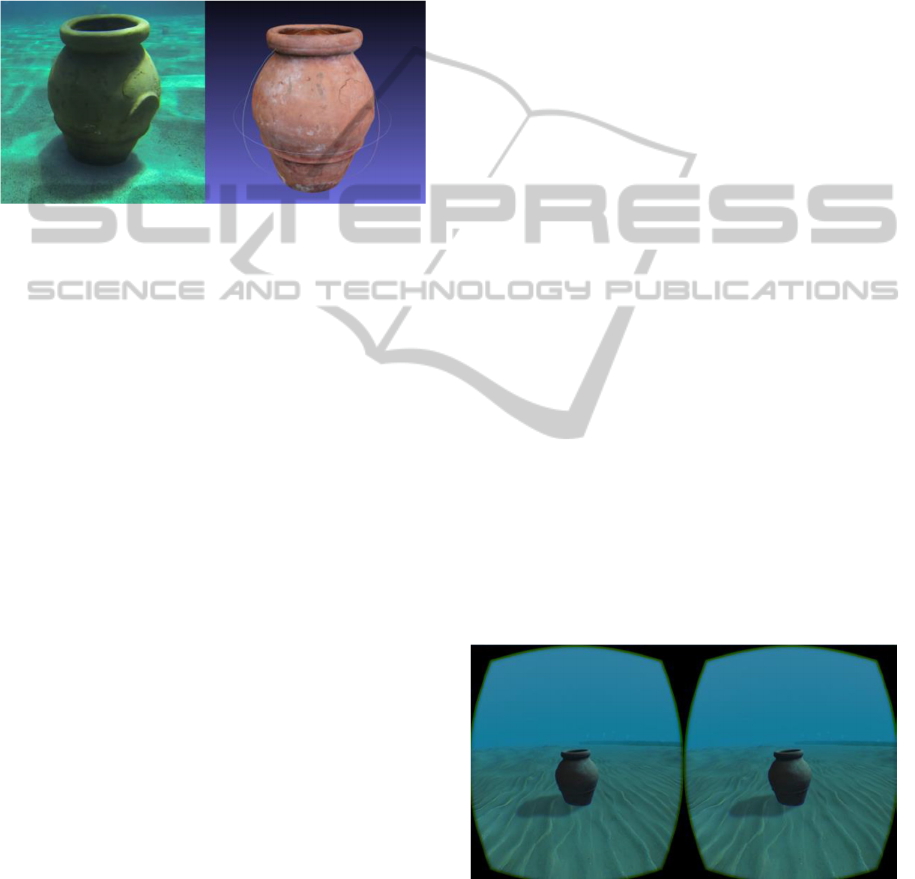

detailed 3D model of the object. Figure 1 shows the

original Jar acquired and, on the right, the final output

of the processing pipeline.

Figure 1: Reconstruction of a jar starting from a video

capture.
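To make the intermediate point cloud format concrete, the following Python sketch writes and reads back a coloured point cloud in the ASCII variant of the PLY format. It only illustrates the file layout; the function names are hypothetical, and in practice the file would be produced by the photogrammetry tools and may well be stored in binary PLY instead.

```python
def write_ascii_ply(path, points):
    """Write (x, y, z, r, g, b) tuples as an ASCII PLY point cloud."""
    header = [
        "ply",
        "format ascii 1.0",
        f"element vertex {len(points)}",
        "property float x",
        "property float y",
        "property float z",
        "property uchar red",
        "property uchar green",
        "property uchar blue",
        "end_header",
    ]
    with open(path, "w", encoding="ascii") as f:
        f.write("\n".join(header) + "\n")
        for x, y, z, r, g, b in points:
            f.write(f"{x} {y} {z} {int(r)} {int(g)} {int(b)}\n")


def read_ascii_ply(path):
    """Read back a file written by write_ascii_ply (this layout only)."""
    with open(path, "r", encoding="ascii") as f:
        lines = [line.strip() for line in f]
    end = lines.index("end_header")
    # The vertex count is the third token of the "element vertex N" line.
    count = next(
        int(line.split()[2])
        for line in lines[:end]
        if line.startswith("element vertex")
    )
    points = []
    for line in lines[end + 1 : end + 1 + count]:
        x, y, z, r, g, b = line.split()
        points.append((float(x), float(y), float(z), int(r), int(g), int(b)))
    return points
```

The reader is deliberately minimal: it handles only the property layout written above, not the full PLY specification (faces, binary encodings, other property types).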

Intermediate results of the data processing pipeline
are also made available for further analysis,
such as the processing outputs of images that
are considered highly significant or
particularly representative of an object, as well as
video excerpts to be directly displayed in 3D
modality.

As a further example, the rectified and properly

filtered optical data can be considered another

intermediate result, computationally quite simple, of

remarkable importance in terms of completeness of

the overall archaeological documentation process.

These intermediate results turn out to be
particularly useful for the historical experts, who
require an early, but at the same time “correct”,
visualization of the captured data, in order to review
and, if necessary, correct the automated tagging
performed by the higher-level object detection
algorithms.
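As an example of how simple such a filtering step can be, the sketch below applies a gray-world white balance to a list of RGB pixels. The text does not state which colour balancing method the system uses, so gray-world is an assumption chosen here only because it is a common, computationally light way to reduce the blue-green cast of underwater images; the function name is hypothetical.

```python
def gray_world_balance(pixels):
    """Apply gray-world white balance to (r, g, b) pixels in 0..255.

    Each channel is scaled so that its mean matches the mean of the
    three channel means (the "gray" level of the image).
    """
    if not pixels:
        return []
    n = len(pixels)
    means = [sum(p[c] for p in pixels) / n for c in range(3)]
    gray = sum(means) / 3.0
    # Per-channel gain; a channel that is entirely zero is left untouched.
    gains = [gray / m if m > 0 else 1.0 for m in means]
    return [
        tuple(min(255, max(0, round(p[c] * gains[c]))) for c in range(3))
        for p in pixels
    ]
```

For a frame dominated by blue, the red channel receives a gain above one and the blue channel a gain below one, which brings the three channel means together.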

2.2 Scene Representation and Fruition

In order to realize an immersive and freely navigable

environment, the generated models have to be placed

into a complex 3D scene. The development of a
rendering system for real-time navigation of a 3D
scene has been simplified by the use of
graphics engines. Graphics engines are software suites
that provide a set of high- and low-level
functionalities, including support for the
transformations and event management typical of
3D navigation: viewpoint change, zoom, object
collisions. The system implementation is based on the

Unity game engine (Unity) since it provides optimal

functionalities for an easy setup of the scene and

advanced management of the interaction with the

scene itself and the included objects.

Aiming at providing a useful tool for technicians and
archaeologists interested in marine exploration,
the scene also has to be as faithful as possible to the
real explored underwater area. Thus, the global
terrain (seafloor) is reconstructed mainly from
bathymetric sensor data, while the objects of interest,
in order to support reliable analysis and to make
the environment more immersive and captivating, are
reconstructed from optical data using
photogrammetry techniques (R. Campos, 2015 and P.
Drap, 2011).
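A minimal Python sketch of the terrain step is given below: scattered depth soundings are averaged into a regular grid, and the grid is rescaled to heights between 0 and 1, the form in which many game engines accept terrain data. The function names, the cell averaging and the convention that depth is positive downwards are assumptions made for illustration, not a description of the actual ARROWS processing.

```python
def grid_soundings(soundings, cell_size):
    """Average scattered (x, y, depth) soundings into a regular grid.

    Returns a list of rows; cells without any sounding hold None.
    """
    xs = [s[0] for s in soundings]
    ys = [s[1] for s in soundings]
    x0, y0 = min(xs), min(ys)
    cols = int((max(xs) - x0) // cell_size) + 1
    rows = int((max(ys) - y0) // cell_size) + 1
    sums = [[0.0] * cols for _ in range(rows)]
    counts = [[0] * cols for _ in range(rows)]
    for x, y, depth in soundings:
        c = int((x - x0) // cell_size)
        r = int((y - y0) // cell_size)
        sums[r][c] += depth
        counts[r][c] += 1
    return [
        [sums[r][c] / counts[r][c] if counts[r][c] else None for c in range(cols)]
        for r in range(rows)
    ]


def normalise_heights(grid):
    """Map depths to 0..1 heights: deepest cell -> 0, shallowest -> 1."""
    values = [d for row in grid for d in row if d is not None]
    shallow, deep = min(values), max(values)
    span = deep - shallow
    return [
        [None if d is None else ((deep - d) / span if span else 0.0) for d in row]
        for row in grid
    ]
```

Empty cells are left as None on purpose; how to fill them (interpolation, nearest neighbour) depends on the sonar coverage and is outside the scope of this sketch.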

3D scene navigation is a fertile field for the
study of the newest interactive technologies,
generally related to videogames. Thanks to Unity's
compatibility with advanced gestural
interfaces and visors, the system integrates
support for Kinect (Kinect), Leap Motion
(Leap Motion) and Oculus Rift (Oculus Rift). Kinect
and Leap Motion are in charge of recognizing the user's
gestures and translating them into actual camera
movements and scene interaction. Oculus Rift is a
stereoscopic visor for virtual reality that is able to
track head movements and translate them
into camera rotation inside the 3D scene.

The system exploits Kinect or Leap Motion to move
the user inside the scene and to manage the interaction
with the 3D models, and exploits Oculus Rift as a
stereoscopic visor and for camera rotation. This
optional setup makes the system more immersive and
engaging and, at the same time, provides a simple and
effective way to explore the scene and interact with
the placed objects (Figure 2).

Figure 2: A jar placed into the scene viewed through Oculus

Rift.
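The division of roles described above (the visor rotates the camera, the gesture sensor moves it) can be sketched in a few lines of Python. The code does not use any Unity, Kinect, Leap Motion or Oculus API: the head orientation is assumed to arrive as yaw and pitch angles, the gesture as a scalar speed, and the axis convention (y up, yaw 0 looking along +z) is likewise an assumption.

```python
import math


def forward_vector(yaw_deg, pitch_deg):
    """Unit view direction for a given head yaw and pitch.

    Assumed convention: y is up, yaw 0 looks along +z,
    positive yaw turns towards +x, positive pitch looks up.
    """
    yaw = math.radians(yaw_deg)
    pitch = math.radians(pitch_deg)
    return (
        math.cos(pitch) * math.sin(yaw),
        math.sin(pitch),
        math.cos(pitch) * math.cos(yaw),
    )


def move_camera(position, yaw_deg, pitch_deg, gesture_speed, dt):
    """Advance the camera along its view direction.

    gesture_speed is a hypothetical scalar (metres per second) derived
    from the recognized gesture; dt is the frame time in seconds.
    """
    fx, fy, fz = forward_vector(yaw_deg, pitch_deg)
    step = gesture_speed * dt
    return (
        position[0] + fx * step,
        position[1] + fy * step,
        position[2] + fz * step,
    )
```

Keeping rotation and translation driven by separate devices means the user can look around freely while standing still, or keep swimming forward while turning the head.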

As described before, the system provides a set of
dedicated functionalities that allow the user to obtain further
information or perform analysis and

measurements on the 3D models placed into the

scene. A dedicated interface placed inside the scene

allows the access and the usage of these

functionalities. This kind of interface is available for
each 3D model and can be viewed and accessed

directly during the exploration of the scene (see

Figure 3).

Figure 3: A reconstructed jar with the interactive dedicated

interface displayed.

The system can be accessed both on a dedicated

station equipped with gestural devices and Oculus

Rift and via Web browser.

3 A USE CASE: A JAR LYING ON

A SANDY SEABED

In this section, we describe a generic use case
generated from an acquisition campaign
performed in a controlled realistic scenario. The
campaign focused on the acquisition of a
jar placed on the seabed.

Figure 4: The main menu of the scene navigation system.

Figure 4 shows the main menu of the system, which is
displayed when the user accesses the virtual
environment. The main menu contains a button for each
represented scene and a button to quit the
system. The background scene is static and built
from acquired data. From the main menu, the user can
access all the reconstructed scenes.

By accessing the desired scene, the user gains
navigation control. From then on, the user can
freely explore the scene and interact with the objects

placed in it. For instance, Figure 3 shows the interface

through which it is possible to obtain further

information about the jar. In this case, the user can view
the stream of the video acquired during the campaign,
view the jar extracted from the scene and without
any light effects (Figure 5), and/or obtain archive
information.

Figure 5: 3D model of the jar extracted from the scene

context and without light effects.

The 3D model is observable from multiple points of view
and can be rotated without any restriction.
Furthermore, the light effects used to increase the
immersiveness of the scene are turned off in this case, in order to
give a more reliable view of the 3D model.

The archive information on the jar is obtained by
querying a database dedicated to managing historical
data about the objects recognized during the
acquisition campaigns, along with the sensor data
collected during these campaigns.
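The kind of query involved can be sketched with Python's built-in sqlite3 module. The paper does not describe the database engine or its schema, so the two tables, their columns and the function names below are purely hypothetical and serve only to show how archive data and sensor records could be retrieved together for one object.

```python
import sqlite3


def create_archive(conn):
    """Create a hypothetical two-table schema for artefacts and sensor data."""
    conn.execute(
        """CREATE TABLE artefact (
               id INTEGER PRIMARY KEY,
               name TEXT NOT NULL,
               material TEXT,
               height_m REAL,
               period TEXT)"""
    )
    conn.execute(
        """CREATE TABLE sensor_record (
               id INTEGER PRIMARY KEY,
               artefact_id INTEGER NOT NULL REFERENCES artefact(id),
               kind TEXT NOT NULL,
               uri TEXT NOT NULL)"""
    )


def archive_info(conn, artefact_id):
    """Return archive data and linked sensor records, or None if unknown."""
    row = conn.execute(
        "SELECT name, material, height_m, period FROM artefact WHERE id = ?",
        (artefact_id,),
    ).fetchone()
    if row is None:
        return None
    records = conn.execute(
        "SELECT kind, uri FROM sensor_record WHERE artefact_id = ? ORDER BY id",
        (artefact_id,),
    ).fetchall()
    return {
        "name": row[0],
        "material": row[1],
        "height_m": row[2],
        "period": row[3],
        "records": records,
    }
```

Calling `archive_info(conn, some_id)` from the in-scene interface would return everything needed to fill the information panel of the selected object in a single step.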

4 DISCUSSION AND

CONCLUSIONS

Our system turns out to meet all the requirements
indicated in Section 2. Indeed, it lets the user access
both the whole scene and the raw or processed data
about the findings in it, at different levels of detail
(depending on the user’s access rights).

The system lets the user freely explore the virtual
scene in a natural way (through the integration of
interaction devices like Kinect or Oculus Rift),
interact with objects in the scene and access all data
linked to them (raw data like images or videos, 3D

measurements, geotagging, 3D models, textures,

historical or archival data, links to the web).

Generally, each acquisition campaign at sea is a
high-cost, high-risk operation for the people working in
the mission. The accurate virtual reconstruction offers
scholars and the public (not trained for real diving) the
possibility of frequent (and safe) visits to the desired
site. Hence the archaeologist is provided with a tool
to perform indirect measurements and to formulate a
historical interpretation of the findings.

This system represents a first step towards a Google
Street View of the Seas for archaeological sites.
OCEANS Street View already exists and
offers safe and easy access to the seas, but our
approach is quite different, being more specific in
content (underwater archaeology) and purpose
(dissemination and research), and aiming at a more
immersive and interactive experience.

ACKNOWLEDGEMENTS

The activity described in this paper has been

supported by the ARROWS project. The project has

received funding from the European Union’s Seventh

Framework Programme for research, technological

development and demonstration under grant
agreement no. 308724.

The authors would like to thank Dr. Pamela Gambogi,

Executive Archaeologist and Coordinator of the

Underwater Operational Team (N.O.S) of the

Tuscany Archaeological Superintendence.

REFERENCES

R. Campos, R. Garcia, P. Alliez, M. Yvinec. "A Surface

Reconstruction Method for In-Detail Underwater 3D

Optical Mapping". The International Journal of

Robotics Research, vol. 34, no. 1, pp. 64-89, January 2015.

eISSN: 1741-3176, ISSN: 0278-3649. DOI:

10.1177/0278364914546536.

P. Drap, D. Merad, J. Boï, W. Boubguira, A. Mahiddine, B.

Chemisky, E. Seguin, F. Alcala, and O. Bianchimani,

"ROV-3D, 3D Underwater Survey Combining Optical

and Acoustic Sensor", in Proc. VAST, 2011, pp. 177-184.

D. Moroni, M.A. Pascali, M. Reggiannini, O. Salvetti.
"Curve recognition for underwater wrecks and
handmade artefacts". In: IMTA-4 - 4th International
Workshop on Image Mining. Theory and Applications
(Barcelona, 23rd February 2013). Proceedings, pp.
14-21. SCITEPRESS, 2013.

D. Moroni, M.A. Pascali, M. Reggiannini, O. Salvetti.
"Underwater scene understanding by optical and
acoustic data integration". In: Proceedings of Meetings
on Acoustics (POMA), vol. 17, article n. 070085.
Acoustical Society of America through the American
Institute of Physics, 2013.

T. Nicosevici and R. Garcia. "Efficient 3D Scene Modeling

and Mosaicing", Springer, 2013. ISBN 978-3-642-36417-4
(Book).

O. Pizarro. "Large scale structure from motion for
autonomous underwater vehicle surveys". PhD Thesis.
Massachusetts Institute of Technology, 2004.

R. Prados, R. Garcia, L. Neumann. "Image Blending

Techniques and their Application in Underwater

Mosaicing", Springer, 2014. ISBN 978-3-319-05557-2

(Book).

M. Prats, J. Perez, J.J. Fernandez, P.J. Sanz "An open

source tool for simulation and supervision of

underwater intervention missions", 2012 IEEE/RSJ

International Conference on Intelligent Robots and

Systems (IROS), pp. 2577-2582, 7-12 Oct. 2012.

Blender - link available at the address

http://www.blender.org/

Kinect V2 - link available at the address
http://www.microsoft.com/en-us/kinectforwindows/develop/

Leap Motion - link available at the address

https://www.leapmotion.com/

Meshlab - link available at the address

http://meshlab.sourceforge.net/

OCEANS Street View - link available at the address
https://www.google.com/maps/views/streetview/oceans?gl=us

Oculus Rift - link available at the address

https://www.oculus.com/

Unity - link available at the address http://unity3d.com/
