Opinion Extraction from Editorial Articles based on Context

Information

Yoshimi Suzuki and Fumiyo Fukumoto

Graduate Faculty of Interdisciplinary Research, University of Yamanashi, 4-3-11 Takeda, Kofu, Japan

Keywords:

Opinion Extraction, Editorial Articles.

Abstract:

Opinion extraction supports various tasks such as sentiment analysis in user reviews for recommendations

and editorial summarization. In this paper, we address the problem of opinion extraction from newspaper

editorials. To extract author’s opinion, we used context information addition to the features within a single

sentence only. Context information are a location of the target sentence, and its preceding, and succeeding

sentences. We defined the opinion extraction task as a sequence labeling problem, using conditional random

fields (CRF). We used Japanese newspaper editorials in the experiments, and used multiple combination of

features of CRF to reveal which features are effective for opinion extraction. The experimental results show

the effectiveness of the method, especially, predicate expression, location and previous sentence are effective

for opinion extraction.

1 INTRODUCTION

Opinion extraction supports various tasks such as sen-

timent analysis in user reviews for recommendations,

document classification, and editorial summarization.

Much of the previous work on automatic opinion ex-

traction focused on sentiment or subjectivity classifi-

cation at sentence level. However, it is not sufficient

to find opinion by using features within a single sen-

tence only. For instance, in the news documents, al-

though the features of authors’ opinions are often ex-

pressed in predicates of a sentence, it is unusual to

find only one sentence containing opinion as well as

factual information (Wiebe et al., 2005).

In this paper, we focused on editorials of Japanese

newspaper, and present a method to extract authors’

opinions.

We employed conditional random fields (CRF)

(Lafferty et al., 2001) to use context information.

Context information indicates a location of the tar-

get sentence, and its preceding, and succeeding sen-

tences. In the experiments, we used multiple combi-

nation of features of CRF to reveal which features are

effective for opinion extraction. We used Japanese

newspaper editorials in the experiments. However,

the features used our method are very simple. There-

fore, our method can be applied easily to different lan-

guages given documents.

2 RELATED WORK

The analysis of opinions and emotions in language

is a practical problem as well as the process of large-

scale heterogeneous data since the World-Wide Web

is widely used. Wiebe et al. (Wiebe et al., 2005) pre-

sented annotation scheme that identifies key compo-

nents and properties of opinions and emotions in lan-

guage. They described annotation of opinion, emo-

tion, sentiment, speculations, evaluations and other

private states.

Apart from the corpus annotation, there are many

attempts on the automatic identification of opinions

(Hu and Liu, 2004; Kim and Hovy, 2004; Kobayashi

et al., 2004; Ku et al., 2006; Burfoot and Baldwin,

2009; Mihalcea and Pulman, 2007; Wicaksono and

Myaeng, 2013). The earliest work on opinion mining

is the work on Hu et al. (Hu and Liu, 2004). They

proposed a method of summarization by using opin-

ion extraction from customer reviews on the web. The

method consists of three steps: (i) mining product

features, (ii) identifying opinion sentences, and (iii)

summarizing the results. They reported that the ex-

perimental results using reviews of a number of prod-

ucts sold online demonstrated the effectiveness of the

techniques. Kim et al. focused on English words

and sentences. They proposed a template-based ap-

proach, a sentiment classifier by using thesauri (Kim

and Hovy, 2004). Kobayashi et al. (Kobayashi et al.,

Suzuki, Y. and Fukumoto, F..

Opinion Extraction from Editorial Articles based on Context Information.

In Proceedings of the 7th International Joint Conference on Knowledge Discovery, Knowledge Engineering and Knowledge Management (IC3K 2015) - Volume 2: KEOD, pages 375-380

ISBN: 978-989-758-158-8

Copyright

c

2015 by SCITEPRESS – Science and Technology Publications, Lda. All r ights reserved

375

2004) proposed a semi-automatic method to extract

evaluative expressions. They used review sites on the

Web for car and game domains, and extracted par-

ticular cooccurrence patterns of evaluated subject, fo-

cused attribute and value expressions. Balahur et al.

(Balahur et al., 2009) proposed a method for opinion

mining from quotations in newspaper articles.

Several researchers have investigated the use of

statistics and machine learning techniques. Ku et al.

(Ku et al., 2006) proposed a method for opinion ex-

traction of news and blog by using Support Vector

Machines (SVMs). Similar to Ku et al., Burfoot et

al. (Burfoot and Baldwin, 2009) proposed a method

for extracting satirical articles from newswire using

SVMs. Mihalcea et al. (Mihalcea and Pulman, 2007)

proposed a method using Naive Bayes and SVMs

for extracting humour text. However, they used fea-

tures within a sentence and ignore the relationships

between sentences. Wilson (Wilson, 2008) classified

sentences of news documents into 6 attitude types,

i.e. ’Sentiment’, ’Agreement’, ’Arguing’, ’Inten-

tion’, ’Speculation’ and ’Other ’Attitude’. They used

four types of machine learning, rule learning (Co-

hen, 1996), boosting, support vector machines, and

k-nearest neighbor. Wicaksono et al. (Wicaksono and

Myaeng, 2013) proposed a method to extract advice-

revealing and their context sentences from Web forms

based on Conditional random fields (CRFs) (Lafferty

et al., 2001). They compared their Multiple Lin-

ear CRFs (ML-CRF) and 2 dimensional CRFs Plus

(2D-CRF+) with traditional machine learning models

for advice-revealing sentences, and showed that ML-

CRF is the best approach among other models studied

in their paper. Similar to Wicaksono et al. method,

we used CRFs to extract opinion expressions from

editorial news. The difference is that we examined

the effect of multiple combination of features, while

they investigated the effect of machine learning tech-

niques.

3 OPINION EXPRESSION IN

EDITORIAL ARTICLES

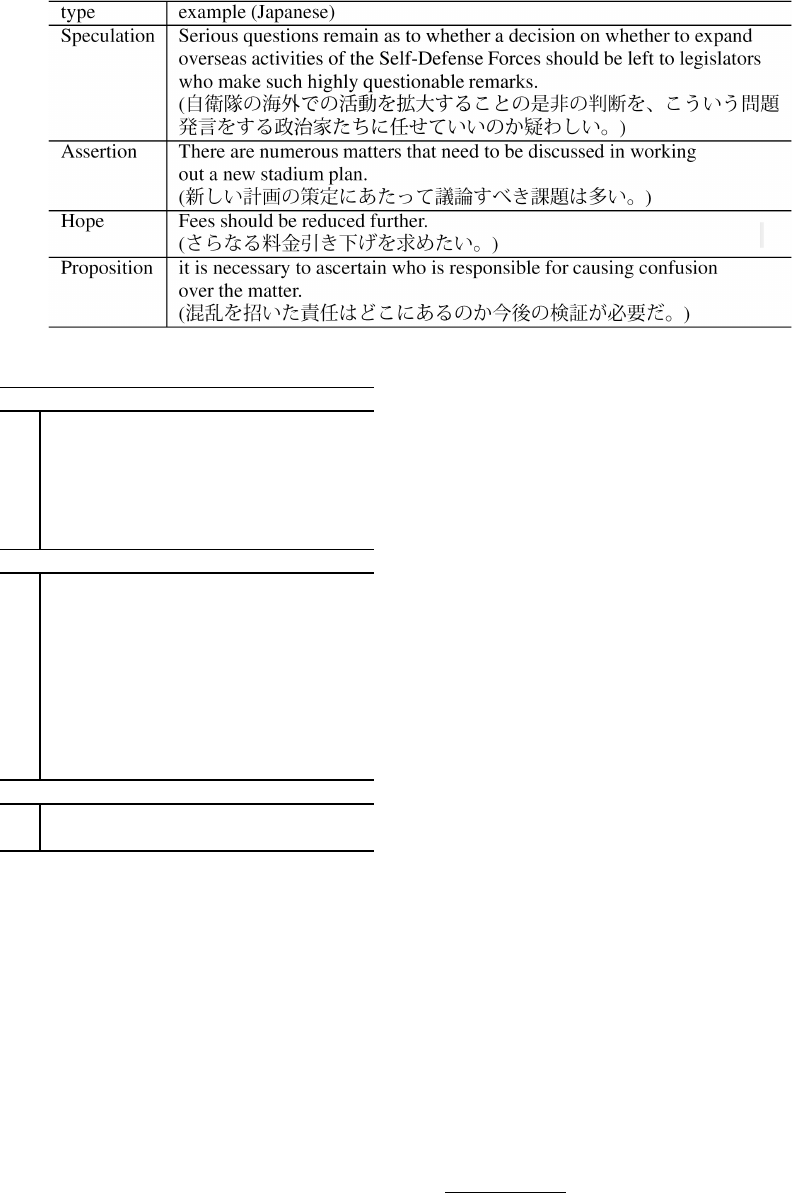

We classified authors’ opinion into 4 types, i.e. ’spec-

ulation’, ’hope’, ’proposition’ and ’assertion’. Figure

1 shows 4 types of opinion on two-dimensional sur-

face.

Table 1 shows an example of opinion sentence as-

signed to each type.

Typical expression, such as “utagawasii (Seropis

qiestopms remain)” in speculation type, and “kadai-

ha ooi (be numerous matters)” in assertion type. The

opinion defined in Figure 1 are the intersection among

Figure 1: Four types of opinion in editorial articles.

surface expression, location, and positional relation

between other opinion sentences. Most of the opin-

ions have typical expression. We assigned each sen-

tence illustrated in Table 1 to one of the four types

by using surface expression, location, and positional

relation of its preceding and succeeding sentences.

4 FEATURES FOR OPINION

EXTRACTION

The feature we defined for opinion extraction are cat-

egorized into three as shown in Table 2. From the

newspaper editorial analysis, we used the following

seven features to extract opinion. Predicate including

verbs, adjective and adverbs is an important feature

to extract opinion expression. It is often the case that

the sentence of the last part of the editorial articles in-

cludes an opinion. Let us take a look from Japanese

newspaper editorials.

5 CONDITIONAL RANDOM

FIELD (CRF)

Based on the extracted features, we identified opin-

ion expression by using CRFs. CRFs is a well known

technique for solving sequence labeling problems.

CRFs are discriminative models and can deal with

many correlated features in the inputs. CRFs have a

single exponential model for the joint probability of

the entire paths given the input sentence. Given a se-

quence X = (x

1

, x

2

, ···, x

n

), where n is the number of

sentences in the inputs, the goal is to find the sequence

of hidden labels Y = (y

1

, y

2

, ···, y

n

). The sequence of

hidden labels are obtained by a conditional distribu-

tion function given by:

p(yx) =

1

Z

x

(

∏

n−1

i=1

f(y

i

, y

i+1

, x, i)), (1)

KEOD 2015 - 7th International Conference on Knowledge Engineering and Ontology Development

376

Table 1: Examples of opinion sentences.

Table 2: Features for opinion extraction.

Syntactic Feature

1. predicate expression

PE (I would like to expect :kitai shitai)

2.

root form of predicate

RP (hope :kitai suru)

3.

subject

Subj (we, government)

Location

1. sentence location in article

LOC

partition number is 5.

2. sentence location in paragraph

LIP

partition number is 3.

3.

location of paragraph in article

LOP

partition number is 5.

Previous Sentence

1. opinion type of preceding sentence

OTP

where Z

x

is a normalization factor, and f indicates an

arbitrary feature function over i-th sequence. We used

CRFs to extract opinion expressions.

6 EXPERIMENTS

6.1 Experimental Setup

We selected editorial articles of Japanese newspaper

(Mainichi Shimbun newspaper written in Japanese)

for opinion extraction. We used one year (2011)

Mainichi Japanese Newspaper corpus for training and

test data. Table 3 shows the number of editorial arti-

cles, sentences and each opinion type in the editorial

articles.

We used 12-fold cross validation. More precisely,

we divided editorial articles into twelve months

shown in Table 3. We used eleven folds to train the

classifier, and the remaining fold to test the classifier.

The process is repeated 12 times, and we obtained the

average classification accuracy over 12 folds. We ap-

plied CaboCha (Kudo and Matsumoto, 2002) for mor-

phological analysis, and CRF++

1

. We used feature

sets described in Sec4.

6.2 Results

We examined which feature combination is effective

for opinion extraction. We thus conducted an experi-

ment using combination of seven features. The results

are shown in Table4. In Table 4, P, R, S, C, I, O and

T illustrate PE, RP, Subj, LOC, LIP, LOP and OTP of

the list in Sec 4 , respectively. In the columns of P, R,

S, C, I, O and T, “1” means that the feature is used for

opinion extraction, while “0” means that the feature

is not used for opinion extraction.

We can see from Table 4 that the best result was

when we use “PE”,“RP”, “Subj” and “LOP”, and the

F-score was 0.71. These results indicate that the com-

bination of these three features are especially effec-

tive for opinion extraction. Table 5 refers to the result

of opinion type classification when we used the best

results shown in Table 4.

7 DISCUSSION

We can see from Table 4 that the results using only

predicate expression achieved 0.83 precision, while

1

CRF++ : http://crfpp.sourceforge.net/

Opinion Extraction from Editorial Articles based on Context Information

377

Table 3: Number of editorials and sentences.

Month # of editorials # of sentences S A H P O

Jan. 52 1,437 75 97 46 20 1,199

Feb. 52 1,282 65 89 27 25 1,077

Mar.

57 1,612 24 101 55 46 1,386

Apr. 56 1,579 27 155 48 47 1,302

May

59 1,476 17 151 49 34 1,225

Jun. 57 1,397 12 171 47 35 1,132

Jul. 60 1,433 21 148 39 20 1,205

Aug.

53 1,507 53 129 38 31 1,256

Sep. 55 1,388 46 165 44 31 1,102

Oct.

60 1,418 41 151 40 20 1,166

Nov. 57 1,370 57 162 36 38 1,077

Dec.

58 1,442 67 140 32 39 1,164

Total 676 17,341 505 1,659 501 386 14,291

Table 4: Results of opinion extraction.

Features Rec Pre F0 Features Rec Pre F0

P R S C I O T P R S C I O T

1 1 1 1 1 1 1 0.80 0.62 0.70 1 0 1 1 1 1 1 0.81 0.58 0.67

1 1 1 1 1 1 0 0.80 0.63 0.70 1 0 1 1 1 1 0 0.81 0.58 0.67

1 1 1 1 1 0 1 0.79 0.60 0.68 1 0 1 1 1 0 1 0.80 0.56 0.66

1 1 1 1 1 0 0

0.78 0.61 0.68 1 0 1 1 1 0 0 0.79 0.57 0.66

1 1 1 1 0 1 1 0.80 0.62 0.70 1 0 1 1 0 1 1 0.81 0.58 0.67

1 1 1 1 0 1 0

0.80 0.63 0.70 1 0 1 1 0 1 0 0.81 0.58 0.67

1 1 1 1 0 0 1 0.79 0.60 0.68 1 0 1 1 0 0 1 0.80 0.57 0.66

1 1 1 1 0 0 0

0.76 0.63 0.69 1 0 1 1 0 0 0 0.76 0.59 0.66

1 1 1 0 1 1 1 0.80 0.63 0.71 1 0 1 0 1 1 1 0.82 0.59 0.68

1 1 1 0 1 1 0

0.80 0.63 0.71 1 0 1 0 1 1 0 0.81 0.59 0.68

1 1 1 0 1 0 1 0.79 0.62 0.69 1 0 1 0 1 0 1 0.80 0.59 0.68

1 1 1 0 1 0 0

0.78 0.62 0.69 1 0 1 0 1 0 0 0.81 0.58 0.68

1 1 1 0 0 1 1 0.81 0.63 0.71 1 0 1 0 0 1 1 0.81 0.59 0.68

1 1 1 0 0 1 0

0.81 0.63 0.71 1 0 1 0 0 1 0 0.81 0.59 0.69

1 1 1 0 0 0 1 0.79 0.62 0.69 1 0 1 0 0 0 1 0.80 0.59 0.68

1 1 1 0 0 0 0

0.76 0.65 0.70 1 0 1 0 0 0 0 0.77 0.60 0.67

1 1 0 1 1 1 1 0.83 0.45 0.58 1 0 0 1 1 1 1 0.86 0.35 0.50

1 1 0 1 1 1 0

0.84 0.45 0.58 1 0 0 1 1 1 0 0.86 0.35 0.50

1 1 0 1 1 0 1 0.83 0.43 0.57 1 0 0 1 1 0 1 0.87 0.34 0.48

1 1 0 1 1 0 0

0.81 0.44 0.57 1 0 0 1 1 0 0 0.85 0.34 0.48

1 1 0 1 0 1 1 0.84 0.45 0.59 1 0 0 1 0 1 1 0.86 0.35 0.50

1 1 0 1 0 1 0 0.84 0.44 0.58 1 0 0 1 0 1 0 0.86 0.35 0.50

1 1 0 1 0 0 1

0.82 0.43 0.57 1 0 0 1 0 0 1 0.87 0.34 0.49

1 1 0 1 0 0 0 0.70 0.49 0.57 1 0 0 1 0 0 0 0.58 0.44 0.50

1 1 0 0 1 1 1

0.83 0.44 0.58 1 0 0 0 1 1 1 0.86 0.36 0.51

1 1 0 0 1 1 0 0.84 0.44 0.58 1 0 0 0 1 1 0 0.87 0.35 0.50

1 1 0 0 1 0 1

0.83 0.43 0.57 1 0 0 0 1 0 1 0.87 0.35 0.50

1 1 0 0 1 0 0 0.82 0.43 0.57 1 0 0 0 1 0 0 0.87 0.34 0.49

1 1 0 0 0 1 1

0.84 0.45 0.58 1 0 0 0 0 1 1 0.86 0.35 0.50

1 1 0 0 0 1 0 0.84 0.45 0.58 1 0 0 0 0 1 0 0.87 0.35 0.50

1 1 0 0 0 0 1

0.82 0.44 0.57 1 0 0 0 0 0 1 0.88 0.35 0.50

1 1 0 0 0 0 0 0.53 0.53 0.53 1 0 0 0 0 0 0 0.31 0.83 0.45

KEOD 2015 - 7th International Conference on Knowledge Engineering and Ontology Development

378

Table 5: The results of opinion type classification.

Type Recall Precision F-score

Speculation 0.54 0.47 0.51

Assertion 0.75 0.47 0.58

Hope

0.80 0.82 0.81

Proposition 0.64 0.62 0.63

recall was 0.31. This shows that when the predicate

expression appeared in the test data does not appear

in the training data, the system can not extract opin-

ion sentences correctly. When we added root form of

predicate (RP) to predicate expression (PE), we ob-

tained high recall, but low precision. This is because

there are not so many kinds of inflection of predicate

in a sentence.

When we added subject (Subj) to predicate ex-

pression (PE), precision was slightly decreased. How-

ever, recall was significantly increased, and we had an

improvement of F-score. The observation shows that

the integration of features is effective for opinion ex-

traction. When we used sentence position, recall was

worse while precision was better. A sentence posi-

tion which is effective to find opinion depends on the

opinion types. For further improvement, it is neces-

sary to investigate an effective sentence position ac-

cording to each type of the opinion. A sentence lo-

cation within a paragraph, and a paragraph location

appeared in the sentence significantly improve recall.

Similarly, When we used opinion type of the preced-

ing sentence, recall was improved. These features are

also effective to improve overall performance.

The experimental results show that the best result

was the combination of P, R, S, O, and T, and the F-

scored attained at 0.71. From the above observations,

we conclude that multiple combination of features are

effective for opinion extraction.

Next, we examined how the method correctly as-

signed a sentence to each type of opinion. As can be

seen clearly from Table 5 that the best result was hope

and F-score was attained at 0.81. In contrast, it is dif-

ficult to identify opinion to speculation as the F-score

was only 0.51. It is not surprising because the training

data assigned to speculation have various expressions,

and it is not easy to classified into speculation manu-

ally.

For future work, we will extend our framework

to improve overall performance against a small num-

ber of training data. We note that we used surface

information, i.e., noun and verb words in articles as

a feature. Therefore, the method ignore the sense of

terms such as synonyms and antonyms. The earliest

known technique for smoothing the term distributions

through the use of latent classes is the Probabilistic

Latent Semantic Analysis (PLSA) (Hofmann, 1999),

and it has been shown to improve the performance of

a number of information access such as text classifi-

cation (Xue et al., 2008). It is definitely worth trying

with our method to achieve type classification accu-

racy.

8 CONCLUSIONS AND FUTURE

WORK

We proposed a method for opinion expression of ed-

itorial articles. Although training data and test data

are not so large, this study led to the following con-

clusions: (i) predicate expression, location and previ-

ous sentence are effective for opinion extraction. (ii)

results of opinion extraction are depend on the types

of opinion. Future work will include (i) incorporating

smoothing technique to use a sense as a feature, (ii)

applying the method to a large number of editorial ar-

ticles for quantitative evaluation.

ACKNOWLEDGEMENTS

The authors would like to thank anonymous review-

ers for their valuable comments. This work was sup-

ported by the Grant-in-aid for the Japan Society for

the Promotion of Science (JSPS), No.26330247.

REFERENCES

Balahur, A., Steinberger, R., van der Goot, E., Pouliquen,

B., and Kabadjov, M. (2009). Opinion mining on

newspaper quotations. In IEEE/WIC/ACM Interna-

tional Conference on Web Intelligence and Intelligent

Agent Technology - Workshops, pages 523–526.

Burfoot, C. and Baldwin, T. (2009). Automatic satire de-

tection: Are you having a laugh? In ACL-IJCNLP

2009: Proceedings of Joint conference of the 47th

Annual Meeting of the Association for Computational

Linguistics and the 4th International Joint Conference

on Natural Language Processing of the Asian Federa-

tion of Natural Language Processing, pages 161–164.

Cohen, W. (1996). Learning trees and rules with set-valued

features. In the 13th National Conference on Artificial

Intelligence, pages 709–717.

Hofmann, T. (1999). Probabilistic Latent Semantic Index-

ing. In Proc. of the 22nd Annual International ACM

SIGIR Conference on Research and Development in

Information Retrieval, pages 35–44.

Hu, M. and Liu, B. (2004). Mining and summarizing cus-

tomer reviews. In KDD’04: Proceedigs of the tenth

SIGKDD international conference on Knowledge dis-

covery and data mining, pages 168–177.

Opinion Extraction from Editorial Articles based on Context Information

379

Kim, S.-M. and Hovy, E. (2004). Determining the sentiment

of opinions. In COLING 2004, pages 1367–1373.

Kobayashi, Inui, Matsumoto, Tateishi, and Fukushima

(2004). Collecting evaluative expression for opinion

extraction. In IJCNLP 2004: Proceedings of the 6th

International Conference on Natural Language Pro-

cessing, pages 584–589.

Ku, L.-W., Liang, Y.-T., and Chen, H.-H. (2006). Opin-

ion extraction, summarization and tracking in news

and blog corpora. In Proceedings of AAAI-CAAW-06,

the Spring Symposia on Computational Approaches to

Analyzing Weblogs.

Kudo, T. and Matsumoto, Y. (2002). Japanese depen-

dency analysis using cascaded chunking. In CoNLL

2002: Proceedings of the 6th Conference on Natu-

ral Language Learning 2002 (COLING 2002 Post-

Conference Workshops), pages 63–69.

Lafferty, J., McCallum, A., and Pereira, F. (2001). Con-

ditional random fields: Probabilistic models for seg-

menting and labeling sequence data. In ICML 2001:

Proceedings of the 18th International Conference on

Machine Learning, pages 282–289.

Mihalcea, R. and Pulman, S. G. (2007). Characterizing hu-

mour: An exploration of features in humorous texts.

In CICLing 2007: Proceedings of the 8th Interna-

tional Conference on Intelligent Text Processing and

Computational Linguistics, pages 337–347.

Wicaksono, A. F. and Myaeng, S.-H. (2013). Toward ad-

vice mining: Conditional random fields for extracting

advice-revealing text units. In CIKM 2013: Proceed-

ings of ACM International Conference on Information

and Knowledge Management, pages 2039–2048.

Wiebe, J., Wilson, T., and Cardie, C. (2005). Annotating

expressions of opinions and emotions in language. In

Language Resources and Evaluation, pages 115–124.

Wilson, T. A. (2008). Fine-grained subjectivity and sen-

timent analysis: Recognizing the intensity, polarity,

and attitudes of private states. In Doctoral disserta-

tion (University of Pittsburgh), pages 165–210.

Xue, G. R., Dai, W., Yang, Q., and Yu, Y. (2008). Topic-

bridged PLSA for Cross-Domain Text Classification.

In Proc. of the 31st Annual International ACM SIGIR

Conference on Research and Development in Informa-

tion Retrieval, pages 627–634.

KEOD 2015 - 7th International Conference on Knowledge Engineering and Ontology Development

380