The Role of Information in Group Formation

Stefano Bennati, Leonard Wossnig and Johannes Thiele

Chair of Computational Social Science, ETHZ, Clausiusstrasse 50, 8092, Zürich, Switzerland

Keywords:

Group Behavior, Swarming, Information, Simulation.

Abstract:

A vast body of literature studies problems such as cooperation and coordination in groups, but the reasons why groups exist in the first place and hold together are still not clear: in the presence of within-group competition, individuals are better off leaving the group. An environment that is advantageous to groups, e.g. one offering better chances of succeeding at predation or of escaping from it, seems to play a key role in the existence of groups. Another recurrent explanation in the literature is between-group competition. We argue that information constraints can foster sociable behavior, which in turn is responsible for group creation. By means of an agent-based simulation, we compare navigation strategies that exploit information about the behavior of others. We find that individuals with sociable behavior have higher fitness than individualistic ones for certain environmental configurations.

1 INTRODUCTION

We study the emergence of sociable behavior in a population of zero-intelligence autonomous agents. We define sociable behavior as taking into consideration the behavior of alter, without any implication about its effects on either ego or alter.

Sociable behavior does not require any intelligence, as it can be purely reactive: for example, bacteria moving in response to a chemical stimulus (chemotaxis).

The question we want to address is whether information can be the driver of group creation in environments that incentivize selfish behavior.

Information plays a key role in evolution: information exchange is crucial for group behavior (Skyrms, 2010) and collective intelligence (Garnier et al., 2007), as well as for the evolution of the complexity that supports them.

The seminal paper of Szathmary and Smith (2000) argues that changes in the way information is transmitted are the reason why evolution favored an increase in complexity. The paper explains, for example, the transition from protists to animals by a change in what information is inherited during cell division, which enabled cell differentiation. In Szathmary's words, “transitions must be explained in terms of immediate selective advantage to individual replicators”, and this transition is explained by division of labor: multicellular organisms with specialized cells are more efficient than a comparable colony of unicellular organisms.

The existence of multicellular organisms is hypothesized to be an effect of symbiosis, either between organisms of different species or between similar organisms. In the former case, division of labor provides an evolutionary advantage by increasing efficiency and allowing cells to reduce in size. In the latter case, the advantage comes from reproduction, as colonies produce more offspring than single cells of similar size (Szathmary and Smith, 2000). These advantages are generated by increased efficiency at the individual level, and this increase is independent of the environment.

The next transition in evolution goes from single individuals to groups. Similarly to the previous case, groups are supported by division of labor, with (e.g. bees) or without (e.g. humans) individual specialization.

Although symbiosis can sustain the formation of heterogeneous groups, thanks to the individual advantage given by the exchange of services or resources, it cannot explain the formation of homogeneous (social) groups in the presence of within-group competition.

Between-group competition, as well as other favorable environmental factors, might support the formation of homogeneous groups when there is within-group competition: for example, fish form schools to increase their chance of escaping predation, and similarly predators hunt collectively to increase their ability to capture prey (Dawkins, 2006).

Bennati, S., Wossnig, L. and Thiele, J.

The Role of Information in Group Formation.

DOI: 10.5220/0005751802310235

In Proceedings of the 8th International Conference on Agents and Artificial Intelligence (ICAART 2016) - Volume 1, pages 231-235

ISBN: 978-989-758-172-4

Copyright © 2016 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

As opposed to the previous transition, the environment seems to play a key role in the emergence of groups.

We argue that group creation can also be supported in an environment that does not favor groups and in the presence of within-group competition. In this case, the driver of group creation is sociable behavior, which individuals develop in response to (a lack of) information in the environment.

2 METHODS

Group formation is all about interactions, and capturing its dynamics in a mathematical model is extremely difficult. For this reason, we study the problem by means of a computer simulation, which can reproduce a vast range of dynamics.

We design an environment that gives no advantage to group behavior, as every agent competes for the same resource, which in our case is food.

The simulation environment is a square grid with periodic (circular) boundary conditions; each cell can contain a variable number of food units and agents. The number of cells containing food is set by a parameter and remains constant during the simulation: whenever a food source is depleted, a new food source is spawned at a random location. The continuous respawning of food sources is the mechanism that tests the quality of an agent's foraging strategy. The food source capacity (i.e. the maximum number of food units that a cell can contain) is set to a value high enough that a single agent cannot exhaust a source before other agents can find it. We discuss the effects of this parameter on our findings in Section 4.
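The food dynamics described above (a constant number of sources, immediate respawning on depletion, periodic boundaries) can be sketched as follows. This is a minimal Python illustration under our own assumptions, not the code used for the experiments: the class `FoodGrid`, its parameters and the choice to draw each new source's size uniformly between 1 and `capacity` are hypothetical.

```python
import random


class FoodGrid:
    """Square grid with periodic boundaries and a constant number of food sources.

    Hypothetical sketch: the class, its parameters and the uniform draw of
    source sizes are illustrative assumptions, not the paper's code.
    """

    def __init__(self, size, n_sources, capacity, seed=None):
        if n_sources >= size * size:
            raise ValueError("need at least one cell without food")
        self.size = size
        self.capacity = capacity
        self.rng = random.Random(seed)
        self.food = {}  # (x, y) -> remaining units; only cells with food are stored
        for _ in range(n_sources):
            self._spawn_source()

    def wrap(self, x, y):
        # Periodic boundaries: leaving one edge re-enters from the opposite edge.
        return x % self.size, y % self.size

    def _spawn_source(self, exclude=None):
        # Pick a random cell that holds no food and is not the just-depleted cell.
        while True:
            cell = (self.rng.randrange(self.size), self.rng.randrange(self.size))
            if cell not in self.food and cell != exclude:
                # Assumption: source size drawn uniformly from 1..capacity.
                self.food[cell] = self.rng.randint(1, self.capacity)
                return

    def forage(self, cell):
        """Consume one unit at `cell`; return True if there was food to eat."""
        if cell not in self.food:
            return False
        self.food[cell] -= 1
        if self.food[cell] == 0:
            # Depleted: respawn elsewhere so the number of sources stays constant.
            del self.food[cell]
            self._spawn_source(exclude=cell)
        return True
```

For instance, `FoodGrid(size=20, n_sources=10, capacity=200, seed=1)` would set up ten sources of at most 200 units each; the grid size in this call is arbitrary.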

Agents are initially placed at random on the grid. An agent perceives the food in its current cell and the agents in its surroundings. The perception mechanism mimics the working of the retina in fish (Strandburg-Peshkin et al., 2013): perceptions indicate the number of agents in each of the cardinal directions, but they are not refined enough to tell the exact number of agents in a specific cell, nor to distinguish between stationary and moving targets. The available actions are foraging and moving one cell in one of the four cardinal directions.
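One possible reading of this retina-like perception is sketched below. The text does not specify how an agent at an arbitrary offset is assigned to a cardinal direction or how far agents can see, so the dominant-axis rule, the tie-breaking towards the vertical axis and the `radius` parameter are all hypothetical.

```python
def wrapped_offset(a, b, size):
    """Signed shortest offset from coordinate a to b on a ring of length `size`."""
    d = (b - a) % size
    return d - size if d > size // 2 else d


def perceive(agent_pos, others, food, size, radius):
    """Return the perception vector [food_here, agents_N, agents_E, agents_S, agents_W].

    Hypothetical rule: each other agent within Chebyshev distance `radius` is
    counted in the direction of its dominant axis (ties go to the vertical axis).
    Agents in the same cell are ignored; moving and stationary agents look alike.
    """
    x, y = agent_pos
    counts = {"N": 0, "E": 0, "S": 0, "W": 0}
    for ox, oy in others:
        dx = wrapped_offset(x, ox, size)
        dy = wrapped_offset(y, oy, size)
        if (dx, dy) == (0, 0) or max(abs(dx), abs(dy)) > radius:
            continue
        if abs(dx) > abs(dy):
            counts["E" if dx > 0 else "W"] += 1
        else:
            # Convention: y grows southwards, like row indices.
            counts["S" if dy > 0 else "N"] += 1
    food_here = 1 if food.get(agent_pos, 0) > 0 else 0
    return [food_here, counts["N"], counts["E"], counts["S"], counts["W"]]
```

Because only per-direction totals are returned, two agents three cells east and one agent one cell east produce the same signal as three agents in a single cell, which matches the coarse resolution described above.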

At every simulation step, agents activate in a random order and each executes one action based on its perception. The order of play is crucial, as agents compete for the same resources under a first-come first-served policy. Whenever an agent depletes a source of food, a new source is immediately created somewhere else. Any other agent in that cell that is still waiting in the queue now has to look for a new source, and the strategy it uses to look for new sources of food determines its performance.
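The random activation order and first-come first-served access to food can be sketched as a single update function. This is a hypothetical Python illustration: the agent representation (dicts with `pos` and `meals`), the injected `decide`, `move` and `respawn` callables, and the demo respawn rule are our own choices, not the authors' interface.

```python
import random


def simulation_step(agents, food, decide, move, rng, respawn):
    """Activate every agent once, in a freshly shuffled order.

    agents:  list of dicts with keys "pos" (cell) and "meals" (units eaten)
    food:    dict cell -> remaining units, shared by all agents
    decide:  callable(agent) -> "forage" or a direction label
    move:    callable(pos, direction) -> new pos
    respawn: callable(food, depleted_cell) that adds a new source elsewhere
    """
    order = list(range(len(agents)))
    rng.shuffle(order)  # a new random activation order at every step
    for i in order:
        agent = agents[i]
        action = decide(agent)  # sees the state left by agents earlier in the queue
        if action == "forage":
            cell = agent["pos"]
            if food.get(cell, 0) > 0:  # first come, first served
                food[cell] -= 1
                agent["meals"] += 1
                if food[cell] == 0:
                    # Depleted: agents later in the queue find nothing here.
                    del food[cell]
                    respawn(food, cell)
        else:
            agent["pos"] = move(agent["pos"], action)


def respawn_at_fixed_cell(food, depleted_cell):
    # Illustrative respawn rule for the demo only (hypothetical).
    food[(5, 5)] = 10


if __name__ == "__main__":
    # Two agents share a cell holding one unit: only the first in the queue eats.
    agents = [{"pos": (0, 0), "meals": 0}, {"pos": (0, 0), "meals": 0}]
    food = {(0, 0): 1}
    simulation_step(agents, food, decide=lambda agent: "forage",
                    move=lambda pos, direction: pos,
                    rng=random.Random(0), respawn=respawn_at_fixed_cell)
    print([a["meals"] for a in agents], food)
```

Which of the two agents eats depends only on the shuffle; the loser must start searching in the next step, which is exactly where the foraging strategy matters.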

Agent decisions are based on the output of a simple neural network that transforms a vector in the perception space into a vector in the action space. The agent executes the action that corresponds to the highest value of the output vector (see Figure 1). At this stage learning is disabled, so the weights of the network remain constant for the whole simulation.

Figure 1: Diagram of the decision system. The weights associated with each connection produce a vector of output values; the action with the highest value is executed.
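A minimal version of the decision system in Figure 1 is sketched below, assuming a single-layer network without hidden units, bias or activation function (the text only says the network is simple). The input ordering (food in the current cell, then agent counts to the north, east, south and west) and the tie-breaking rule are hypothetical.

```python
ACTIONS = ["forage", "N", "E", "S", "W"]


def decide(weights, perception):
    """Single-layer network: output[a] = sum_i weights[a][i] * perception[i].

    `weights` has one row per action and one column per perception input.
    The action with the highest output is returned; on ties, max() keeps the
    earliest action in ACTIONS.
    """
    outputs = [sum(w * p for w, p in zip(row, perception)) for row in weights]
    best = max(range(len(outputs)), key=outputs.__getitem__)
    return ACTIONS[best]
```

Since learning is disabled, `weights` is fixed at initialization and the same perception always yields the same action unless noise is injected elsewhere.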

An important assumption is that the location of food is unknown and is revealed to an agent only when it enters the food's cell. This assumption makes the task non-trivial: since agents cannot perceive food at a distance, the only way to improve over a random walk is a foraging strategy that exploits a proxy for food location. The intuition is that, assuming all agents stop to eat whenever they encounter a food source, cells with more agents are more likely to be food sources. We call a strategy that exploits the position of others as a signal “sociable”. A sociable strategy interprets the presence of agents as an increased likelihood of food.
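The intuition that crowded cells are more likely to hold food can be checked with a toy model, sketched below under simplifying assumptions of our own: agents random-walk on a torus, stop whenever they stand on food, and food is never depleted. The function and its default parameters are hypothetical and the sketch is not part of the reported experiments; it only illustrates why agent density can act as a proxy for food.

```python
import random


def agents_per_cell(size=20, n_agents=40, n_food=10, steps=200, seed=0):
    """Toy model: mean number of agents on food cells vs. on empty cells.

    Hypothetical assumptions: agents random-walk on a size x size torus, stop
    for good once they stand on food, and food is never depleted.
    """
    rng = random.Random(seed)
    cells = [(x, y) for x in range(size) for y in range(size)]
    food = set(rng.sample(cells, n_food))
    agents = [rng.choice(cells) for _ in range(n_agents)]
    moves = [(0, -1), (1, 0), (0, 1), (-1, 0)]  # N, E, S, W
    for _ in range(steps):
        for i, (x, y) in enumerate(agents):
            if (x, y) in food:
                continue  # the agent stays to eat
            dx, dy = rng.choice(moves)
            agents[i] = ((x + dx) % size, (y + dy) % size)
    on_food = sum(1 for pos in agents if pos in food)
    per_food_cell = on_food / n_food
    per_empty_cell = (n_agents - on_food) / (size * size - n_food)
    return per_food_cell, per_empty_cell


if __name__ == "__main__":
    print(agents_per_cell())
```

In the full model food is depleted and respawned, so the signal is weaker and transient; that is precisely why the proxy can mislead sociable agents when the grid is crowded.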

In our simulation we define two types of agents: random agents and sociable agents (SAs). Random agents, as the name suggests, walk randomly in the environment searching for food, independently of where other agents are. Their behavior is determined by random noise added to the randomly initialized weights. Sociable agents like company: they favor moving towards where other agents are. For example, if their perception shows the majority of agents to the east, the agent moves east. They are generated from a random agent by increasing the weights that connect a specific input value to a desired action. The only difference between the types of agents is the average value of the weights. All agents are initialized with a very high weight on the edge that connects the perception “food in the current cell” to the action “forage”; this ensures that agents always forage when given the opportunity. This assumption does not reduce generality since, from an evolutionary perspective, agents are expected to forage whenever possible.
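The two agent types can be sketched as two ways of filling the same weight matrix. In this hypothetical Python version, the magnitudes `init_scale`, `social_boost` and `forage_weight` are illustrative rather than the paper's values, and, as a simplification, the per-decision noise is added to the network outputs instead of to the weights. With no informative input the agent then performs a random walk (and occasionally wastes a step foraging on an empty cell).

```python
import random

ACTIONS = ["forage", "N", "E", "S", "W"]
# Inputs, in order: food in current cell, then agents seen to the N, E, S, W.
N_INPUTS = 5


def make_weights(kind, rng, init_scale=0.1, social_boost=5.0, forage_weight=1000.0):
    """Return a weight matrix with one row per action and one column per input.

    Hypothetical values: init_scale, social_boost and forage_weight are
    illustrative, not parameters reported in the paper.
    """
    weights = [[rng.uniform(-init_scale, init_scale) for _ in range(N_INPUTS)]
               for _ in ACTIONS]
    # All agents: "food in the current cell" -> "forage" gets a very high weight.
    weights[0][0] = forage_weight
    if kind == "sociable":
        # Raise each "agents in direction d" -> "move in direction d" connection.
        for d in range(1, len(ACTIONS)):
            weights[d][d] += social_boost
    elif kind != "random":
        raise ValueError(f"unknown agent kind: {kind}")
    return weights


def act(weights, perception, rng, noise=0.5):
    """Pick the argmax action after adding fresh Gaussian noise to every output.

    Assumption: noise is applied to the outputs at each decision, so an agent
    without informative input chooses among the actions at random.
    """
    outputs = [sum(w * p for w, p in zip(row, perception)) + rng.gauss(0.0, noise)
               for row in weights]
    return ACTIONS[max(range(len(outputs)), key=outputs.__getitem__)]
```

Both types share the same structure and differ only in the mean of the directional weights, mirroring the statement that the average weight value is the only difference between them.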


3 LITERATURE

A vast body of literature applies Agent-Based Modeling to study group and societal issues. Most Agent-Based Models of society concentrate on the problem of cooperation (Helbing and Yu, 2009) or coordination (Mäs et al., 2010). Our model is designed so that neither cooperation nor coordination is required for success: we created a foraging task whose outcome does not depend on interactions between agents.

Our approach is similar to models of natural selection, e.g. Grund et al. (2013), where different groups obtain different fitness due to their characteristics. Unlike most natural selection models, in our simulation the size of the groups remains constant. Our simulation models a snapshot of a natural selection process, in which the lifetime of every single agent is much longer than the simulation length. Fitness is used to evaluate each group against the others at the end of the simulation.

Our design has been inspired by biological systems, in particular schooling fish. The computational model of fish behavior developed by Strandburg-Peshkin et al. (2013) is able to reproduce group movement as a simple reaction to changes in visual perception, proportional to the portion of the retina covered. Similarly, our agents react to changes in the quantities in their visual perception.

Some assumptions are quite common in the literature, and most models rely on at least one of them to produce their results. As opposed to previous work, we relax the following common assumptions:

• The environment favors cooperation, as in Montanier and Bredeche (2013). In our model an agent has the same payoff whether it is in a group or by itself. We could say that the environment actually favors individuals, because a higher number of agents using a resource leads to its faster depletion.

• Spatial dispersion is imposed, as in Grund et al. (2013). Agents are randomly placed and there is no mechanism that keeps agents of the same kind close together. Similarly, food is created randomly in the grid, so there is no incentive to stay close to a depleted food source.

• Kin selection is possible, as in Smith (1964). Agents do not have any visible characteristic that makes them recognizable as members of a group (e.g. a green beard; Hamilton, 1964), so they cannot develop mechanisms that favor kin.

• Agents are able to learn, as in Duan and Stanley (2010). Although our agents are equipped with a neural network, no learning algorithm is implemented. Every agent behaves consistently for the whole simulation.

4 RESULTS

We expect that, for some environment settings, the sociable strategy achieves the highest performance.

Being driven by other agents, sociable agents (SAs) cannot explore the environment as efficiently as others, but they are able to use information about the behavior of other agents to better exploit the environment.

When perception of food is restricted, the behavior of others is the only proxy for food location, which makes sociable behavior advantageous with respect to a random walk.

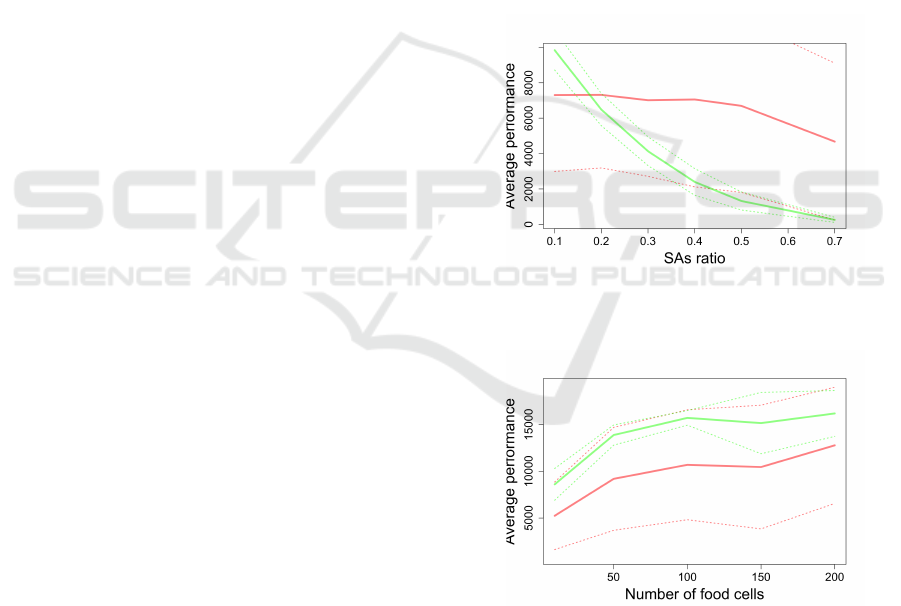

Figure 2: Performance for varying population composition. Performance of SAs decreases with an increasing percentage of SAs. Legend: Red is Random, Green is SA.

Figure 3: Performance for 10 agents and food stack size

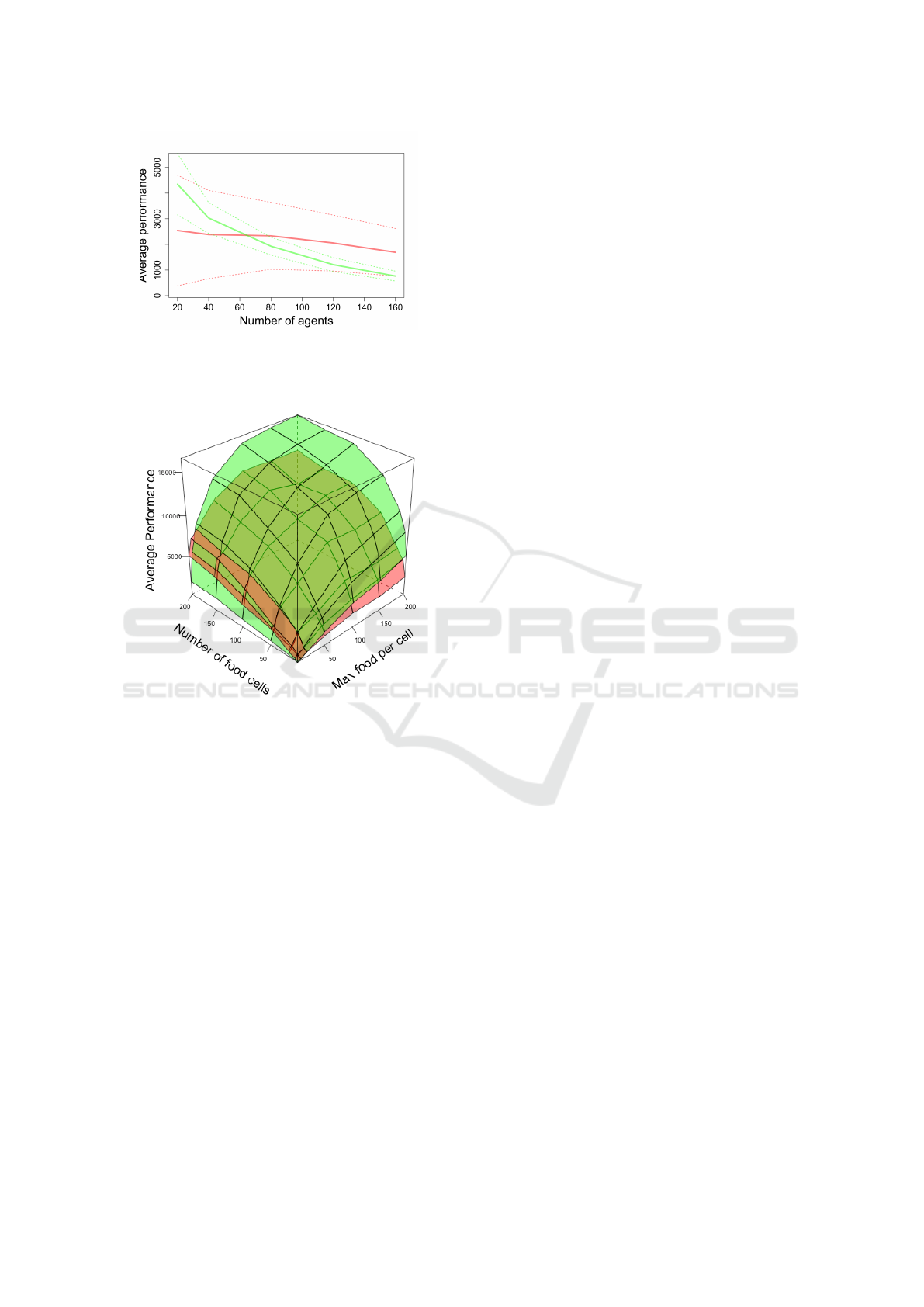

200. SA are outperforming random agents for any number

of food sources. Legend: Red is Random, Green is SA.

Figure 4: Performance for varying population size, with 10 food sources of size 200. Performance of SAs decreases with increasing population size. Legend: Red is Random, Green is SA.

Figure 5: Effect of food scarcity on performance, for 10 agents. SAs outperform random agents for any food concentration and any stack size greater than 20. Legend: Red is Random, Green is SA.

The results presented here are averages over 24 simulations of 20,000 time steps each. Performance is defined as the number of foraging actions an agent takes during the whole simulation. Group performance is the average of the group members' performances. Our first result is that the performance of sociable agents decreases with an increasing share of sociable agents in the population (Figure 2).
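These performance measures can be written down directly. The sketch below assumes each run is summarized as a list of `(agent_type, forage_count)` pairs; this data layout and the function name `summarize` are hypothetical.

```python
from collections import defaultdict
from statistics import mean


def summarize(runs):
    """Average group performance per agent type over independent runs.

    runs: list of runs; each run is a list of (agent_type, forage_count) pairs,
    where forage_count is the number of foraging actions of one agent.
    Group performance in a run is the mean forage_count of its members; the
    result for each type is the mean of that value over all runs.
    """
    per_type = defaultdict(list)
    for run in runs:
        members = defaultdict(list)
        for agent_type, forage_count in run:
            members[agent_type].append(forage_count)
        for agent_type, counts in members.items():
            per_type[agent_type].append(mean(counts))
    return {agent_type: mean(values) for agent_type, values in per_type.items()}
```

Averaging group means per run gives each run equal weight; when group sizes are fixed across runs, as in these simulations, this equals pooling all agents from all runs.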

This result is partly expected, as SAs are exploiters and need other agents to perform exploration. Our second result is that, given the optimal population composition, SAs can significantly outperform the other agents for any food concentration, as long as the number of agents is low (Figure 3).

The performance decrease with an increasing number of agents (Figure 4) could be due to SAs no longer being able to spot food sources efficiently. If the grid is too crowded, a region with many moving agents could be more appealing than a region with few stationary agents, leading the agent away from a possible food source.

The success of SAs is sensitive to food scarcity: if a source does not contain enough units of food, SAs will not be able to reach it before it is depleted. We explore the effect of this parameter in Figure 5: when the stack size is less than 20 units (that is, an average stack size of 10), random agents outperform SAs. The number of food sources does not seem to affect the performance of SAs.

5 DISCUSSION

In their seminal paper, Szathmary and Smith (2000) argue that information played a key role in building the first multicellular organisms, as well as human society. Although the paper offers convincing explanations for the early and late stages of evolution, it lacks a complete explanation for the emergence of groups. Our preliminary findings build on and complement this argument by providing evidence for a role of information in group formation: sociable behavior would emerge as a selfish response to limited information about the environment, leading to the formation of groups, which would in turn enable altruistic behavior such as cooperation.

Our results argue for a deeper study of the role of information in evolutionary biology and social science.

The work is far from complete. The next step is to look for the emergence of group behavior. So far we have concentrated on the performance of groups. More interesting is group behavior after a food source is depleted: agents will move away, driven by the agents surrounding them, which are most likely of the same type. We expect SAs to show some group behavior while looking for the next food source.

We will also study the evolutionary stability of the strategies; of particular interest are the evolutionary dynamics as the population's composition varies over time. We expect to find that changes in the population composition, driven by natural selection, will destabilize the population of SAs, leading it in the majority of cases to extinction. We also want to investigate what happens if group cooperation makes foraging more efficient.

Another interesting addition to the model would be learning, in particular compared with, or alongside, an evolutionary process. We expect to see faster adaptation to the environment and the emergence of more complex group dynamics.

REFERENCES

Dawkins, R. (2006). The selfish gene. Number 199. Oxford University Press.

Duan, W.-Q. and Stanley, H. E. (2010). Fairness emergence from zero-intelligence agents. Physical Review E, 81(2):026104.

Garnier, S., Gautrais, J., and Theraulaz, G. (2007). The biological principles of swarm intelligence. Swarm Intelligence, 1(1):3–31.

Grund, T., Waloszek, C., and Helbing, D. (2013). How natural selection can create both self- and other-regarding preferences, and networked minds. Scientific Reports.

Hamilton, W. (1964). The genetical evolution of social behaviour. I.

Helbing, D. and Yu, W. (2009). The outbreak of cooperation among success-driven individuals under noisy conditions. Proceedings of the National Academy of Sciences, 106(10):3680–3685.

Mäs, M., Flache, A., Helbing, D., and Bergstrom, C. T. (2010). Individualization as driving force of clustering phenomena in humans. PLoS Comput Biol, 6(10):e1000959.

Montanier, J.-M. and Bredeche, N. (2013). Evolution of altruism and spatial dispersion: an artificial evolutionary ecology approach. In Advances in Artificial Life, ECAL, volume 12, pages 260–267.

Skyrms, B. (2010). Signals: Evolution, learning, and information. Oxford University Press.

Smith, J. M. (1964). Group selection and kin selection. Nature, 201:1145–1147.

Strandburg-Peshkin, A., Twomey, C. R., Bode, N. W., Kao, A. B., Katz, Y., Ioannou, C. C., Rosenthal, S. B., Torney, C. J., Wu, H. S., Levin, S. A., et al. (2013). Visual sensory networks and effective information transfer in animal groups. Current Biology, 23(17):R709–R711.

Szathmary, E. and Smith, J. M. (2000). The major evolutionary transitions. Shaking the Tree: Readings from, pages 32–47.
