Preliminary Evaluation of a Silent Speech Interface based on Intra-Oral Magnetic Sensing

Lam A. Cheah¹, Jie Bai¹, Jose A. Gonzalez², James M. Gilbert¹, Stephen R. Ell³, Phil D. Green² and Roger K. Moore²

¹ School of Engineering, The University of Hull, Kingston upon Hull, U.K.

² Department of Computer Science, The University of Sheffield, Sheffield, U.K.

³ Hull and East Yorkshire Hospitals Trust, Castle Hill Hospital, Cottingham, U.K.

Keywords: Assistive Technology, Silent Speech Interface, Permanent Magnet Articulography, Intraoral Magnetic Sensing.

Abstract: This paper addresses the hardware challenges faced in developing a practical silent speech interface (SSI) for post-laryngectomy speech rehabilitation. Although a number of SSIs have been developed, many are still deemed impractical because of their high degree of intrusiveness and discomfort, which limits their transition out of the laboratory environment. This paper builds upon our previous work on a user-centric prototype and enhances its desirable features. A new Permanent Magnet Articulography (PMA) system is presented which fits within the palatal cavity of the user's mouth, giving an unobtrusive appearance and high portability. The prototype comprises a miniaturised circuit constructed from commercial off-the-shelf (COTS) components and is implemented in the form of a dental retainer, which is mounted under the roof of the user's mouth and firmly clasps onto the upper teeth. Preliminary evaluation via speech recognition experiments demonstrates that the intraoral prototype achieves a word recognition accuracy of 75.7%, slightly lower than that of its predecessor. Nonetheless, the intraoral design is expected to improve the stability and robustness of the PMA system, with a much improved appearance since it can be completely hidden inside the user's mouth.

1 INTRODUCTION

Speech is a key capability and the most natural form of communication for human beings. However, there are a variety of situations in which people wish to communicate orally but where normal speech is either impossible or undesirable. One example is people who have undergone a laryngectomy: the surgical removal of the larynx as part of the treatment for cancer or other diseases affecting the vocal cords. These post-laryngectomy patients, who have lost their voice, often struggle with daily communication and may experience a severe impact on their quality of life (Braz et al., 2005; Fagan et al., 2008). Only a limited number of post-laryngectomy voice restoration methods are currently available to these individuals: oesophageal speech, the electrolarynx and speech valves. Unfortunately, these methods are often limited by their usability and abnormal voice quality (Fagan et al., 2008; Gilbert et al., 2010), whereas typing-based augmentative and alternative communication (AAC) devices are limited by slow manual text input (Wang et al., 2012). Although some improvements have been achieved in the voice quality of the electrolarynx and oesophageal speech (Doi et al., 2010; Toda et al., 2012), emerging assistive technologies (ATs) such as silent speech interfaces (SSIs) have shown promising potential in recent years as an alternative solution.

Cheah, L., Bai, J., Gonzalez, J., Gilbert, J., Ell, S., Green, P. and Moore, R.

Preliminary Evaluation of a Silent Speech Interface based on Intra-Oral Magnetic Sensing.

DOI: 10.5220/0005824501080116

In Proceedings of the 9th International Joint Conference on Biomedical Engineering Systems and Technologies (BIOSTEC 2016) - Volume 1: BIODEVICES, pages 108-116

ISBN: 978-989-758-170-0

Copyright © 2016 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

In principle, SSIs are devices that enable speech communication to take place in the absence of audible acoustic signals (Denby et al., 2010). Hence, aside from serving as a communication aid for speech-impaired individuals, SSIs can also be deployed in acoustically challenging environments or where privacy/confidentiality is desirable. To date, a number of SSIs have been proposed that use different sensing modalities to extract the non-acoustic information generated during speech production and to reproduce audible speech from it. A comprehensive summary of different SSI technologies was presented in (Denby et al., 2010). Permanent magnet articulography (PMA) is a type of SSI that uses an array of magnetic sensors located around the mouth to sense the changes in the magnetic field generated during speech articulation by a set of permanent magnet markers attached to the vocal apparatus (i.e. the lips and tongue) (Fagan et al., 2008; Gilbert et al., 2010). PMA shares some similarities with electromagnetic articulography (EMA) (Toutios and Margaritis, 2005; Toda et al., 2008; Wang et al., 2012). In contrast to EMA, however, PMA does not explicitly provide the positions of the markers, but rather the summation of the magnetic fields from the magnets, which is associated with a particular articulatory gesture. Previous work (Gilbert et al., 2010; Hofe et al., 2013a, 2013b; Cheah et al., 2015) demonstrated the possibility of performing automatic speech recognition (ASR) on PMA data.
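To make the difference from EMA concrete, the following sketch models each magnet as a point dipole and shows that a tri-axial sensor reports only the vector sum of all the magnets' fields. It is a minimal illustration under assumptions that are not taken from the paper: the point-dipole model, SI units and the helper names `dipole_field` and `sensor_reading` are all hypothetical, and a real sensor would also pick up the Earth's field and other background sources.

```python
import numpy as np

MU0_OVER_4PI = 1e-7  # mu_0 / (4*pi) in T*m/A


def dipole_field(sensor_pos, magnet_pos, moment):
    """Point-dipole flux density (tesla) at sensor_pos from a magnet at magnet_pos.

    Positions are in metres and the magnetic moment in A*m^2 (assumed units).
    """
    r = np.asarray(sensor_pos, dtype=float) - np.asarray(magnet_pos, dtype=float)
    d = np.linalg.norm(r)
    r_hat = r / d
    m = np.asarray(moment, dtype=float)
    return MU0_OVER_4PI * (3.0 * r_hat * np.dot(r_hat, m) - m) / d**3


def sensor_reading(sensor_pos, magnets):
    """What one tri-axial sensor sees: the vector sum of every magnet's field.

    `magnets` is a list of (position, moment) pairs. The result carries no
    record of which magnet contributed what, unlike EMA's per-marker positions.
    """
    total = np.zeros(3)
    for pos, moment in magnets:
        total += dipole_field(sensor_pos, pos, moment)
    return total


# Two hypothetical magnets 1 cm and 2 cm below a sensor at the origin
magnets = [((0, 0, -0.01), (0, 0, 1e-3)), ((0, 0, -0.02), (0, 0, 1e-3))]
print(sensor_reading((0, 0, 0), magnets))
```

Because the reading is a superposition, different magnet configurations can produce similar sensor outputs, which is why PMA-based recognition learns a mapping from these summed signals to articulatory gestures rather than recovering marker positions.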

Despite the attractive attributes of SSIs, building an effective SSI poses two major challenges: the hardware and the processing software. Preliminary discussions of the factors (e.g. invasiveness, market readiness and potential cost) affecting SSI implementation were presented in (Denby et al., 2010). Earlier PMA-based prototypes (Gilbert et al., 2010; Hofe et al., 2013b) showed acceptable speech recognition performance, but were not particularly satisfactory in terms of appearance, comfort and ergonomics for the users. To address these hardware challenges, a PMA prototype in the form of a wearable headset (based on a customised pair of spectacles or a headband), comprising miniaturised sensing modules with wireless capability, was developed (Cheah et al., 2015). This second-generation prototype was re-designed using a user-centred approach, drawing on feedback from user questionnaires and on discussions with stakeholders including clinicians, potential users and their families. The appearance and comfort of the prototype were much improved, and it demonstrated performance comparable to that of its predecessors.

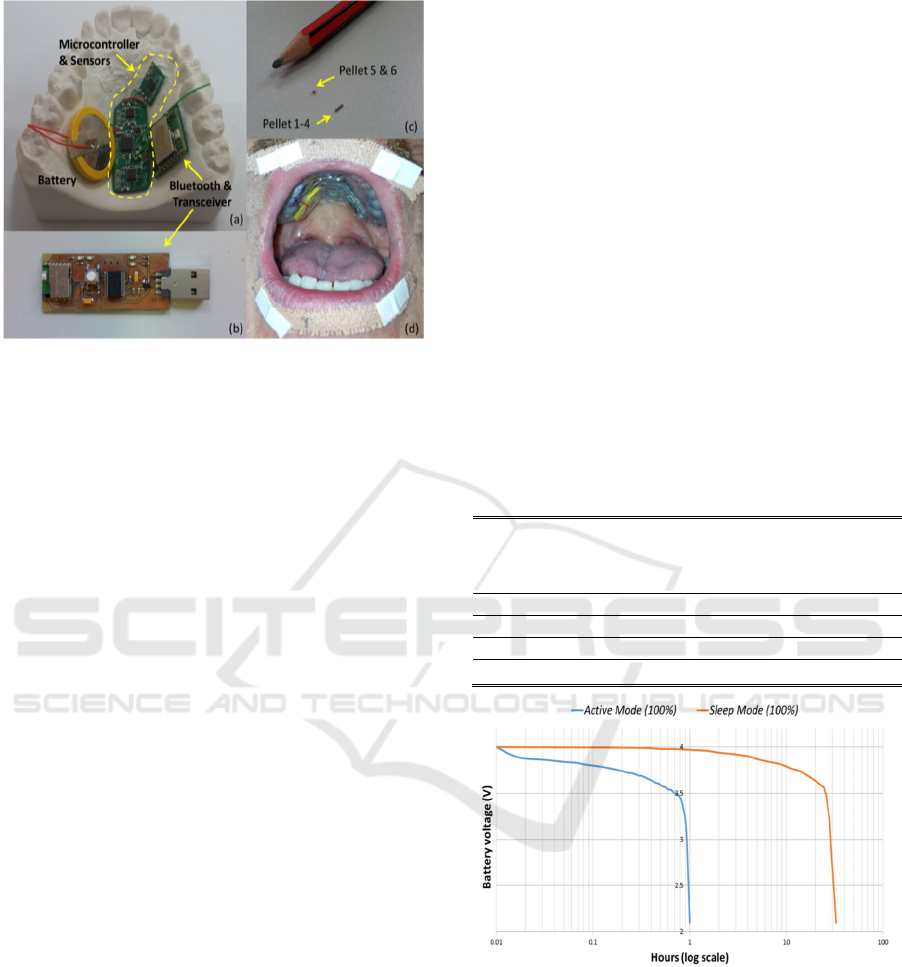

As illustrated in figure 1, the second-generation PMA system consists of a set of six cylindrical Neodymium Iron Boron (NdFeB) permanent magnets: four on the lips (ø1 mm × 5 mm), one at the tongue tip (ø2 mm × 4 mm) and one on the tongue blade (ø5 mm × 1 mm). These magnets are currently attached using Histoacryl surgical tissue adhesive (Braun, Melsungen, Germany) during experimental trials, but will be surgically implanted for long-term use. The remainder of the PMA system comprises a set of four tri-axial Anisotropic Magnetoresistive (AMR) magnetic sensors mounted on the wearable headset, a set of microcontrollers, a rechargeable battery and a processing unit (e.g. computer/tablet PC). Although the prototype has many desirable hardware features, it is not without limitations.

Figure 1: (a) A wearable PMA prototype designed in the form of spectacles. (b) & (c) Placement of the six magnets on the lips (pellets 1-4), tongue tip (pellet 5) and tongue blade (pellet 6).

The present work builds upon (Cheah et al., 2015) to alleviate the shortcomings of that prototype from a hardware perspective. The proposed prototype has several distinctive features: it is miniature in size, highly portable, and discreet and unobtrusive, since it is hidden from sight within the user's mouth. The remainder of this paper is structured as follows. Section 2 outlines the design challenges of the intraoral version of the PMA device. Section 3 describes the architecture of the intraoral PMA prototype, followed by the performance evaluation in Section 4. The final section concludes the paper and provides an outlook for future work.

2 DESIGN CHALLENGES

Despite the improvements made in the second-generation PMA prototype, it still has drawbacks. Firstly, the performance of the external headset cannot be maintained in certain real-life conditions (e.g. exaggerated movement or sports activity) because the headset is not perfectly stable. If the headset moves considerably on the user's head, the PMA system may need re-calibration/re-training to avoid a degradation in performance.

Secondly, wearing the headset over long periods may not be comfortable, even though the device was designed to be lightweight and ergonomic. Thirdly, the external version of the PMA device may still be cosmetically unacceptable to some users. Previous studies have indicated that appearance is one of the most important factors affecting the acceptability of any AT to its potential end users (Hirsch et al., 2000; Martin et al., 2006; Bright and Conventry, 2013).

Figure 2: Simplified operational block diagram.

To overcome these limitations, an intraoral version of the PMA prototype was proposed, which fits under the palate inside the user's mouth in the form of a dental retainer. Because it is tightly clamped onto the upper teeth, the device should be more stable than the previous wearable headset. Being completely hidden from sight during normal use, it is also cosmetically inconspicuous. In addition, since the sensors are much closer to the articulators than in the external headset, the size of the implanted magnets can be significantly reduced. Similar intraoral designs have previously been implemented for other, non-speech-related ATs with varying degrees of success (Tang and Beebe, 2006; Lontis et al., 2010; Park et al., 2012).

3 SYSTEM DESCRIPTION

3.1 Space Budget

The intraoral circuitry necessary to implement a PMA system consists of three tri-axial magnetic sensors, a wireless communication module, a microprocessor to synchronise data capture and communications, and a power source capable of providing an appropriate operating lifetime. All of these must be accommodated within the oral cavity without interfering with natural tongue articulation during speech. A recent study (Bai et al., 2015) suggested that the palatal cavity is suitable for housing the intraoral circuitry because of its relatively flat surfaces and proximity to the articulators. The estimated space available in the palatal cavity of our test subject is 59.7 mm³.

3.2 Description of the Intraoral Circuitry

To fit all the necessary circuitry inside the mouth, the electronics and rechargeable battery of the external PMA prototype had to be shrunk. The major components of the PMA prototype are shown in figure 2. They are implemented using a low-power ATmega328P microcontroller, three tri-axial HMC5883L magnetic sensors (AMR), a rechargeable Li-Ion coin battery (40 mAh capacity, 3.7 V, 20 mm diameter × 3.2 mm thickness) and a wireless transceiver (Bluetooth 2.0 module). The remainder of the system, shown in figure 3, consists of a processing unit (e.g. computer/tablet PC) and a set of six permanent magnets (NdFeB) attached to the lips and tongue in the same locations as illustrated in figure 1. The elements of the intraoral sensing system (which have a total volume of 36.7 mm³) are arranged as shown in figure 3(a). These may be encapsulated and placed in the oral cavity as shown in figure 3(d).

The positions of the magnets are unchanged from the earlier prototype, but because the sensors are much closer, significantly smaller magnets (see figure 3c) can be used: four on the lips (ø1 mm × 4 mm), one on the tongue tip (ø1 mm × 1 mm) and one on the tongue blade (ø1 mm × 1 mm). This is possible because the magnetic field strength decreases with the cube of the distance from the magnets, so a modest reduction in distance allows a large reduction in magnet size.
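The cube law can be turned into a rough sizing rule. The sketch below assumes point-dipole behaviour (strictly valid only when the distance is large compared with the magnet itself) and that the dipole moment scales with magnet volume for the same NdFeB grade; the distance ratios used are hypothetical, not measurements from the prototype.

```python
import math


def field_ratio(volume_ratio, distance_ratio):
    """B_new / B_old for a point dipole.

    Assumes B is proportional to m / d**3 and that the moment m scales with
    magnet volume (same material), so B scales with volume / distance**3.
    """
    return volume_ratio / distance_ratio**3


def volume_ratio_for_same_field(distance_ratio):
    """Magnet volume ratio that keeps B unchanged when the distance is scaled."""
    return distance_ratio**3


# Hypothetical: the sensor ends up at one third of the original distance
print(volume_ratio_for_same_field(1 / 3))  # ~0.037, i.e. a ~27x smaller magnet
print(field_ratio(1 / 25, 1 / 3))          # ~1.08: a 25x smaller magnet still gives slightly more field
print(math.isclose(volume_ratio_for_same_field(0.5), 0.125))  # halving distance: 1/8 volume
```

Under these assumptions, every halving of the magnet-sensor distance permits roughly an eightfold reduction in magnet volume for the same signal level.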

BIODEVICES 2016 - 9th International Conference on Biomedical Electronics and Devices


Figure 3: (a) & (b) Circuitry of the intraoral version of the PMA system. (c) Placement of magnets on the lips (pellets 1-4), tongue tip (pellet 5) and tongue blade (pellet 6). (d) View of the device when worn by the user.

3.3 Circuit Operation

Figure 2 shows an operational block diagram of the intraoral version of the PMA system. A command is sent wirelessly from the processing unit to the intraoral sensing module via Bluetooth to trigger data acquisition. All three tri-axial magnetic sensors then measure the magnetic field and digitise it with 12-bit resolution. The microcontroller acquires these measurements (9 PMA channels sampled at 80 Hz) by driving a multiplexer with three control signals (S0, S1 and SCL). The multiplexer acts as a switch that routes the serial clock (SCL) of the I²C interface to the desired magnetic sensor. The acquired samples are then transmitted wirelessly back to the processing unit via the Bluetooth transceiver and a custom-designed Bluetooth dongle (figure 3(b)) for further processing. Unlike the external version of the PMA prototype, the intraoral device can only operate wirelessly from inside the mouth: wired connectivity is impossible, as the sensing modules are to be sealed and packaged inside a dental retainer. A description of the operational and timing diagrams of the sub-modules is presented in (Bai et al., 2015).
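As a rough check that this sampling scheme fits the wireless link, the sketch below packs one set of nine readings into a frame and estimates the serial bit rate at 80 Hz. The frame layout (sync byte, sequence counter, nine big-endian 16-bit values), the 8N1 serial framing and the reading of the 57.6 kbps figure in table 2 as the serial link rate are all assumptions for illustration; the device's actual packet format is not given in this paper.

```python
import struct

N_CHANNELS = 9       # 3 tri-axial sensors x 3 axes
FS_HZ = 80           # PMA sampling rate stated in the text
LINK_BPS = 57_600    # data rate listed for the intraoral device in table 2


def pack_frame(seq, channels):
    """Hypothetical frame: sync byte, 8-bit sequence counter, 9 big-endian int16 samples.

    The 12-bit sensor readings fit comfortably in signed 16-bit fields.
    """
    if len(channels) != N_CHANNELS:
        raise ValueError(f"expected {N_CHANNELS} channels, got {len(channels)}")
    return struct.pack(">BB9h", 0xA5, seq & 0xFF, *channels)


def required_bps(frame_bytes, fs_hz=FS_HZ, bits_per_byte=10):
    """Serial bit rate needed; 10 bits per byte assumes 8N1 UART framing."""
    return frame_bytes * fs_hz * bits_per_byte


frame = pack_frame(0, [-2048, 0, 2047, 10, -10, 5, 0, 1, -1])
print(len(frame), required_bps(len(frame)))                  # 20 16000
print(f"{required_bps(len(frame)) / LINK_BPS:.0%} of the link")  # 28% of the link
```

Even with framing overhead, this hypothetical format uses under a third of the listed link capacity, which leaves headroom for lowering the transmission rate to save power.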

In terms of software, an ad-hoc MATLAB-based graphical user interface (GUI) developed in (Cheah et al., 2015), in which all the speech processing and recognition algorithms are embedded, was adapted. During silent speech recognition, if the acquired PMA signal is correctly matched to an articulatory gesture from the training database, the corresponding utterance is identified. A text-to-speech (TTS) synthesiser is then used to generate an acoustic signal (e.g. in the individual's own pre-recorded voice) for the recognised utterance through built-in speakers.

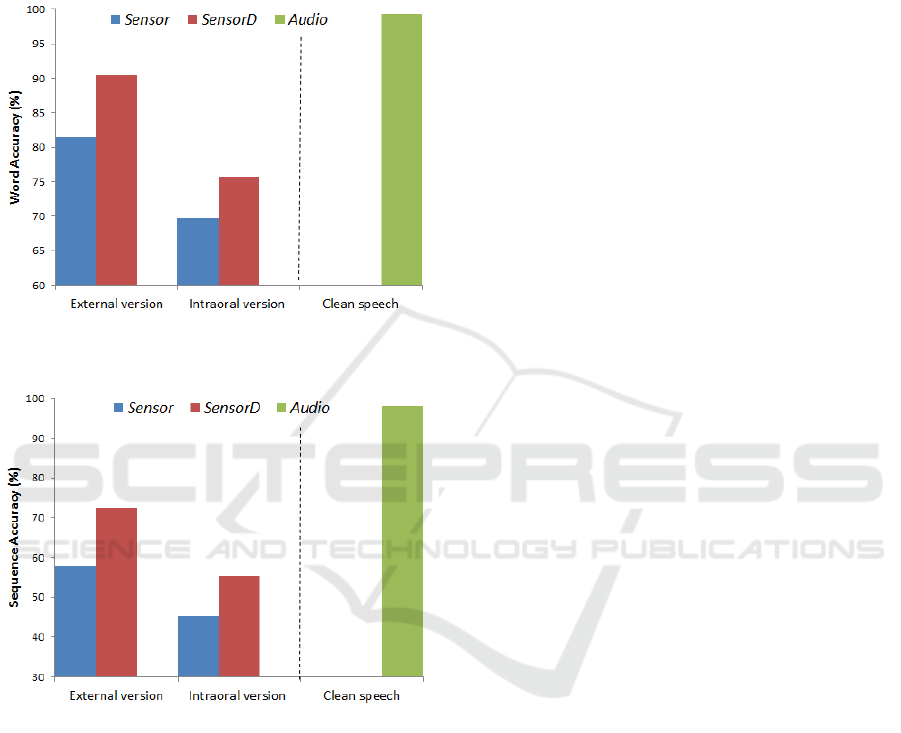

3.4 Power Budget

Since the circuitry is to be sealed into a dental retainer, the intraoral device can only be powered by a battery. Given the limited space available, a small, low-capacity battery must be used (in the current design, the battery occupies 27% of the total volume of the circuitry), so any measure that extends battery life is valuable. Power-hungry components such as the microcontroller, the magnetic sensors and the Bluetooth module can be set to standby or sleep mode to reduce their current consumption when they are inactive. As shown in table 1, sleep mode gives a saving of 93% over standby mode and of 97% over active mode.

Table 1: Current consumption in different operational modes.

| Component | Active mode (mA) | Standby mode (mA) | Sleep mode (mA) |
|---|---|---|---|
| Sensors | 5.1 | 0.006 | 0.006 |
| Microcontroller | 5.4 | 4.4 | 0.7 |
| Bluetooth | 19.0 | 7.22 | 0.07 |
| Total | 29.5 | 11.626 | 0.776 |
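The totals and the savings quoted above follow directly from table 1. The short sketch below recomputes them; the dictionary simply transcribes the table.

```python
# Current draw in mA transcribed from table 1: (active, standby, sleep)
CURRENTS_MA = {
    "Sensors": (5.1, 0.006, 0.006),
    "Microcontroller": (5.4, 4.4, 0.7),
    "Bluetooth": (19.0, 7.22, 0.07),
}


def mode_totals(currents):
    """Sum each column (mode) over all components, rounded to 3 decimals."""
    return tuple(round(sum(row[i] for row in currents.values()), 3) for i in range(3))


def saving(reference_ma, reduced_ma):
    """Fractional reduction in current relative to a reference mode."""
    return 1.0 - reduced_ma / reference_ma


active, standby, sleep = mode_totals(CURRENTS_MA)
print(active, standby, sleep)  # 29.5 11.626 0.776
print(f"{saving(standby, sleep):.0%} vs standby, {saving(active, sleep):.0%} vs active")
# 93% vs standby, 97% vs active
```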

Figure 4: Battery discharge over time in active mode and sleep mode.

If the system operates continuously (in active mode), the battery lasts approximately one hour before its voltage falls below the 2.1 V minimum operating (cut-off) voltage required by the Bluetooth module. The battery can then be recharged through a charging point located on the underside of the dental retainer. In contrast, if the system were inactive at all times (in sleep mode), the battery would last about 32 hours. Figure 4 summarises the discharge of the battery in the different modes. Neither of these operating regimes is fully representative of expected use, since they correspond to continuous speech and no speech, respectively. Based on the measurements in table 1 and figure 4, a more realistic regime would allow 30 minutes of speech plus a further 16 hours in sleep mode, which is considered sufficient for a typical day before charging is required.
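The 30-minute/16-hour regime can be reproduced with a simple linear charge budget. This is an assumption rather than the authors' model: it treats each hour spent in a mode as consuming a fixed fraction of the usable charge, taken from the measured lifetimes (about 1 h active and about 32 h asleep), and it ignores rate-dependent capacity effects.

```python
T_ACTIVE_H = 1.0   # measured battery life in continuous active mode (hours)
T_SLEEP_H = 32.0   # measured battery life in continuous sleep mode (hours)


def sleep_hours_available(active_h, t_active=T_ACTIVE_H, t_sleep=T_SLEEP_H):
    """Hours of sleep mode left after active_h hours of speech on one charge.

    Assumed linear budget: an hour in a mode uses 1/t_mode of the usable charge.
    """
    used = active_h / t_active
    if used > 1.0:
        raise ValueError("active time exceeds one full charge")
    return (1.0 - used) * t_sleep


sleep_h = sleep_hours_available(0.5)  # 30 minutes of speech
print(sleep_h, 0.5 + sleep_h)         # 16.0 16.5
```

Half an hour of speech uses half the charge under this model, leaving half of the 32-hour sleep budget, which matches the 16.5-hour daily cycle quoted in section 4.5.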

3.5 Construction of the Dental Retainer

The circuit described in the previous section must be encapsulated to protect it from damage and from short circuits caused by saliva, and to ensure that it is held in place within the palate. The retainer must be customised to the individual's oral anatomy. This may be achieved by forming it on a dental impression of the user's oral cavity (seen in the background of figure 3a). The intraoral PMA prototype was implemented in the form of dental retainers made of both hard and soft materials, as illustrated in figure 5.

Figure 5: PMA circuitry embedded inside (a) a Hawley dental retainer and (b) a soft, bite-raiser-like dental retainer.

The hard retainer is similar to a Hawley retainer and is made of dental acrylic resin, which is commonly used in the fabrication of orthodontic appliances. The soft retainer, on the other hand, is similar to a soft bite-raising appliance and is made of polypropylene or polyvinylchloride (PVC). To ensure a stable fit in the palate, the Hawley retainer uses a set of ball clasps to secure it tightly onto the upper teeth, whereas the soft bite raiser fits over the entire arch of the upper teeth. Note that only the soft retainer was used in the preliminary experiments, but similar performance is expected from the hard retainer.

4 PERFORMANCE EVALUATION

4.1 Experimental Design and Setup

The PMA-based SSIs (both the intraoral and the external versions) are speaker-dependent systems, because their designs need to be individually tailored to the speaker's head or oral anatomy for optimal performance. The data used to evaluate the new intraoral prototype were collected from a male native English speaker who is proficient in using the external PMA device. Magnets were temporarily attached to the subject using Histoacryl surgical tissue adhesive (Braun, Melsungen, Germany).

PMA and audio data for training and evaluation were recorded using a bespoke MATLAB-based GUI. During the training session, the software visually prompts the subject with randomised utterances at 5-second intervals. The subject's head was not restrained during the recording sessions, but the subject was asked to avoid any large head movements. This was necessary to minimise interference caused by movement relative to the Earth's magnetic field, which would otherwise corrupt or distort the desired signal, because the current prototype is not yet equipped with a mechanism to cancel or remove such non-articulatory signals.

For optimal sound quality, the recordings were conducted in an acoustically isolated room. The audio data were recorded at a 16 kHz sampling rate using a shock-mounted AKG C1000S condenser microphone connected via a dedicated stereo USB sound card (Lexicon Lambda) to a PC. Meanwhile, the PMA data were captured at a sampling frequency of 80 Hz by the intraoral PMA device and transmitted wirelessly to the same PC via Bluetooth, as illustrated in figure 2. Since the two data streams (PMA and audio) are acquired from separate modalities, they must be synchronised. Hence, an automatic timing re-alignment mechanism was implemented using start-stop markers added to both data streams.
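The start-stop markers allow each stream's timeline to be mapped onto the other. The paper does not detail the re-alignment algorithm, so the sketch below assumes one start and one stop marker per stream and maps between them linearly, which also absorbs small clock drift between the PC sound card and the intraoral device. The function name and the marker positions in the example are hypothetical.

```python
def make_audio_to_pma(audio_marks, pma_marks):
    """Return a function mapping an audio sample index to a (fractional) PMA frame index.

    audio_marks, pma_marks: (start, stop) marker positions detected in each stream.
    A linear map between the two marker pairs also absorbs small clock drift.
    """
    a0, a1 = audio_marks
    p0, p1 = pma_marks
    if a1 == a0:
        raise ValueError("start and stop markers must differ")

    def to_pma(audio_idx):
        # Multiply before dividing to keep integer inputs exact where possible
        return p0 + (audio_idx - a0) * (p1 - p0) / (a1 - a0)

    return to_pma


# Hypothetical markers: 16 kHz audio and 80 Hz PMA (nominally 200 audio samples per frame)
to_pma = make_audio_to_pma((1_600, 81_600), (10, 410))
print(to_pma(41_600))  # 210.0
```

The same mapping could convert word endpoints found in the audio into PMA frame ranges, which is one way the audio-based segmentation in section 4.3 could be transferred to the PMA data.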

4.2 Data Recording

Our long-term goal is to explore the feasibility of using the intraoral device for continuous speech reconstruction. For preliminary testing, the TIDigits database (Leonard, 1984) was selected because its limited vocabulary enables whole-word model training from relatively sparse data, and because of the simplicity of the language involved.


The corpus consists of sequences of connected English digits with up to seven digits per utterance. The vocabulary is made up of eleven words: the digits ‘one’ to ‘nine’, plus ‘zero’ and ‘oh’ (both representing the digit 0).

The experimental data were collected in two independent sessions, each consisting of four datasets of 77 sentences. A total of 308 utterances containing 1012 individual digits were thus recorded in each session. To prevent subject fatigue, short breaks were allowed between recording sessions.

4.3 HMM Training and Recognition

Prior to training and recognition, the acquired PMA data were segmented and checked against the audio data. Incorrect endpoints were manually corrected where necessary, and any mislabelled utterances were likewise corrected using the audio data.

The PMA data were then subjected to offset removal by median subtraction over 2 s windows with 50% overlap, followed by normalisation. Next, delta parameters were computed for all PMA channels and appended to the original time series, giving a feature vector of size 18. Delta-delta parameters were not included in the feature vector because they did not produce a significant improvement in performance (Hofe et al., 2013a, 2013b). For comparison, recognition performance was also evaluated on the audio data. In this case, 13 Mel-frequency cepstral coefficients (MFCCs) were extracted from the audio signals using 25 ms analysis windows with 10 ms overlap. The delta and delta-delta parameters were then computed and appended to the static parameters, giving a feature vector of dimension 39.
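The PMA front end described above can be sketched in NumPy as follows. Several details are assumptions because the paper does not specify them: how the medians of overlapping windows are combined (averaged here), the type of normalisation (per-channel z-scoring here), and the delta computation, for which the standard regression formula with a ±2-frame window (HTK's default `DELTAWINDOW`) is used with edge frames replicated. All function names are hypothetical.

```python
import numpy as np


def remove_offset(x, fs=80, win_s=2.0, overlap=0.5):
    """Subtract running per-channel medians from x (n_samples, n_channels).

    Medians come from 2 s windows with 50% overlap; each sample has the average
    of the medians of all windows covering it subtracted (assumed rule).
    """
    n = x.shape[0]
    win = int(round(win_s * fs))
    hop = max(1, int(round(win * (1 - overlap))))
    starts = list(range(0, max(n - win, 0) + 1, hop))
    if starts[-1] + win < n:  # make sure the tail is covered
        starts.append(n - win)
    acc = np.zeros(x.shape, dtype=float)
    cnt = np.zeros((n, 1))
    for s in starts:
        acc[s:s + win] += np.median(x[s:s + win], axis=0)
        cnt[s:s + win] += 1
    return x - acc / cnt


def normalise(x):
    """Zero-mean, unit-variance per channel (assumed normalisation scheme)."""
    std = x.std(axis=0)
    std[std == 0] = 1.0
    return (x - x.mean(axis=0)) / std


def deltas(x, theta=2):
    """Regression deltas d_t = sum_k k (c_{t+k} - c_{t-k}) / (2 sum_k k^2), edges replicated."""
    n = x.shape[0]
    padded = np.pad(x, ((theta, theta), (0, 0)), mode="edge")
    d = np.zeros(x.shape, dtype=float)
    for k in range(1, theta + 1):
        d += k * (padded[theta + k:theta + k + n] - padded[theta - k:theta - k + n])
    return d / (2 * sum(k * k for k in range(1, theta + 1)))


def pma_features(x):
    """9 static PMA channels -> 18-dimensional static + delta feature vectors."""
    static = normalise(remove_offset(np.asarray(x, dtype=float)))
    return np.hstack([static, deltas(static)])


rng = np.random.default_rng(0)
raw = rng.normal(size=(400, 9)) + 50.0  # 5 s of synthetic 9-channel data at 80 Hz
print(pma_features(raw).shape)          # (400, 18)
```

Median subtraction over short windows removes slowly varying offsets (such as a constant background field) while preserving the faster articulatory changes that carry the speech information.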

The extracted PMA and audio features were used to train two independent speech recognisers with the HTK toolkit (Young et al., 2009). In both cases, the acoustic model uses whole-word Hidden Markov Models (HMMs) (Rabiner, 1989), one for each of the eleven digits. Each HMM has 21 states with 5 Gaussians per state. These parameters were not optimised, but were chosen because they performed well in previous work (Hofe et al., 2013a, 2013b). HMM training and recognition were carried out in four validation cycles: in each cycle, three of the four datasets within a session were used for training and the remaining one for testing. The recognition results were averaged over the four cycles and across the two independent sessions.
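The evaluation protocol amounts to four-fold cross-validation within each session, averaged over sessions. In the sketch below, `train_and_test` is a hypothetical callback standing in for the HTK training and decoding steps; only the fold bookkeeping and the averaging are shown.

```python
from statistics import mean


def four_fold_cycles(n_sets=4):
    """Yield (training sets, test set) so that each set is held out exactly once."""
    for test in range(n_sets):
        yield [s for s in range(n_sets) if s != test], test


def cross_validated_score(train_and_test, sessions=(1, 2), n_sets=4):
    """Average a score over the cycles of each session, then across sessions.

    `train_and_test(session, train_sets, test_set)` is a hypothetical callback that
    trains on one session's training sets and returns the accuracy on its test set;
    data are never mixed across sessions.
    """
    per_session = [
        mean(train_and_test(session, train, test) for train, test in four_fold_cycles(n_sets))
        for session in sessions
    ]
    return mean(per_session)


print(cross_validated_score(lambda session, train, test: 0.75))  # 0.75
```

Keeping each session's folds separate mirrors the decision, discussed in section 4.4, not to merge data recorded with different magnet placements.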

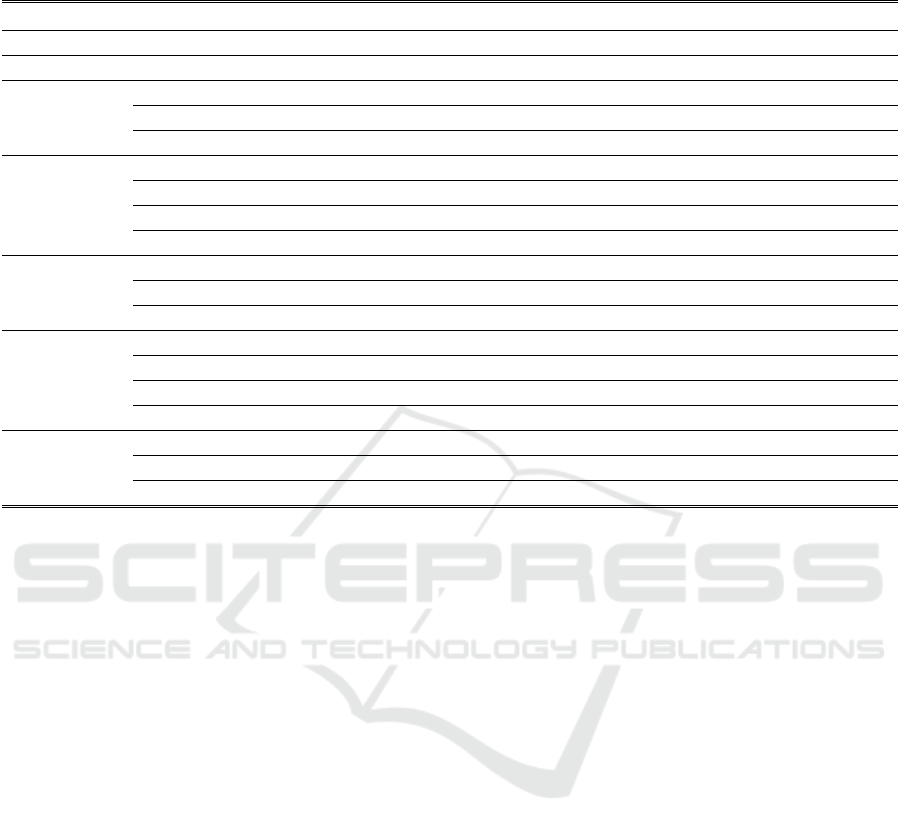

4.4 Recognition Performance

Word and sequence accuracy results for the intraoral and external versions of the PMA device are presented in figures 6 and 7, where the performance of the PMA devices is also compared with audio-based recognition. The blue bars indicate the performance achieved using only static PMA data (vector size 9), the red bars the results achieved using both static and dynamic features (vector size 18), and the green bars the speech recognition performance achieved using audio data (vector size 39). We will refer to these three conditions as Sensor, SensorD and Audio features, respectively.
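Word and sequence accuracy are not defined explicitly in the text. A common definition, as reported by HTK's HResults tool, is word accuracy = (N − S − D − I)/N over N reference words with S substitutions, D deletions and I insertions, and sequence (sentence) accuracy = the fraction of utterances recognised exactly. The sketch below assumes those definitions and uses a unit-cost Levenshtein alignment; HResults uses its own weighted alignment, so error counts can differ in rare tie cases.

```python
def edit_distance(ref, hyp):
    """Minimum number of substitutions, deletions and insertions turning ref into hyp."""
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        cur = [i]
        for j, h in enumerate(hyp, start=1):
            cur.append(min(prev[j] + 1,              # deletion of r
                           cur[j - 1] + 1,           # insertion of h
                           prev[j - 1] + (r != h)))  # substitution (or match)
        prev = cur
    return prev[-1]


def word_accuracy(refs, hyps):
    """(N - S - D - I) / N, i.e. 1 - total edit distance / total reference words."""
    n_words = sum(len(r) for r in refs)
    errors = sum(edit_distance(r, h) for r, h in zip(refs, hyps))
    return 1.0 - errors / n_words


def sequence_accuracy(refs, hyps):
    """Fraction of utterances whose recognised digit string matches exactly."""
    return sum(r == h for r, h in zip(refs, hyps)) / len(refs)


refs = [["one", "two", "three"], ["oh", "five"]]
hyps = [["one", "three"], ["oh", "five"]]
print(word_accuracy(refs, hyps), sequence_accuracy(refs, hyps))  # 0.8 0.5
```

Because one error anywhere in an utterance makes the whole sequence wrong, sequence accuracy is always the stricter of the two measures.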

The results are the mean over the two sessions, although each session was first analysed independently. Because magnet placement may not be consistent between sessions, data were not merged across sessions. This issue could be solved once the magnets are surgically implanted for long-term use. Alternatively, session-independent approaches, such as those presented for other SSI methods in (Maier-Hein et al., 2005) and (Wand and Schultz, 2011), could be investigated.

As shown in figures 6 and 7, SensorD clearly produced better recognition performance than Sensor alone in both cases. Similar trends were also reported in (Hofe et al., 2013b; Cheah et al., 2015). As expected, for this simple task, recognition using Audio performed very well (99%). The preliminary evaluation indicated some degradation in recognition performance for the intraoral device compared with the previous external version (15% for word accuracy and 17% for sequence accuracy), as illustrated in figures 6 and 7. There are a number of possible explanations for this degradation: 1) the presence of the intraoral prototype affects articulation and, in particular, limits tongue movement, which may lead to inconsistent articulation; 2) the subject was new to the intraoral version, but had prior experience with the external PMA version; 3) non-articulatory features arising from unintentional movements (e.g. swallowing, licking the lips, head movements) could have corrupted the data or been confused with utterances; and 4) the magnets can come much closer to the sensors in the intraoral device than in the external device, resulting in a more significant non-linear effect (since the field strength decreases with the cube of the distance). This means that small unintentional articulator movements can, in some instances, generate very large signals. Further work is required to understand the significance of each of these possible causes.
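Explanation 4) can be quantified with the same cube law. The sketch computes the fractional increase in field when a magnet moves 1 mm closer to a sensor, at two hypothetical distances standing in for the intraoral and external geometries; it assumes point-dipole behaviour and motion along the magnet-sensor axis.

```python
def relative_field_change(d_mm, approach_mm):
    """Fractional rise in on-axis dipole field when a magnet at d_mm moves approach_mm closer.

    Point-dipole cube law: B(d) is proportional to 1 / d**3.
    """
    if not 0 <= approach_mm < d_mm:
        raise ValueError("approach must be smaller than the distance")
    return (d_mm / (d_mm - approach_mm)) ** 3 - 1.0


for d in (5.0, 50.0):  # hypothetical magnet-sensor distances in mm
    print(f"{d} mm: {relative_field_change(d, 1.0):.0%}")
# 5.0 mm: 95%
# 50.0 mm: 6%
```

Under these assumptions the same 1 mm movement changes the signal roughly fifteen times more at close range, consistent with the observation that small unintentional movements can produce very large signals in the intraoral device.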

Figure 6: Comparison of word accuracy on the connected-digits task.

Figure 7: Comparison of sequence accuracy on the connected-digits task.

4.5 Hardware Evaluations

As discussed in section 2, an unattractive appearance is one major obstacle to the acceptability of an AT such as an SSI. The same view emerged from discussions with potential users who have undergone a laryngectomy, and from an opinion survey of 50 laryngectomees and their families/friends in which the appearance of the PMA-based device was considered a very high priority (Cheah et al., 2015). To enhance the device's appeal to users, influential factors such as appearance must be accounted for during development. The challenge is to continue improving the PMA device's appearance without compromising its speech reconstruction performance. The latest intraoral prototype employs the same functional principles as the previous design reported in (Gonzalez et al., 2014; Cheah et al., 2015), but is implemented in a different form. A summary of the hardware features of the new intraoral PMA system compared with its predecessor is presented in table 2.

Despite the improved appearance of the second-generation PMA system in the form of a wearable headset, it may still not appeal to everyone. To address this shortcoming, the latest intraoral circuitry was implemented in the form of a dental retainer. To achieve this, the circuit was re-designed to use fewer and smaller components, and its power consumption was carefully managed so that it can operate from a small battery suitable for inclusion within the dental retainer. The result is a much smaller and lighter prototype (about one tenth of the weight of its predecessor). In addition, the intraoral prototype is highly portable: it operates wirelessly and can be controlled via Bluetooth from a computer or tablet PC. A higher signal-to-noise ratio (SNR) was also obtained, even with smaller magnetic tracers, because of their proximity to the magnetic sensors. The tongue magnets used with the intraoral sensor system were 16 to 25 times smaller in volume than those used with the external headset, potentially making them less invasive when implanted.
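The 16 to 25 times figure follows from the cylinder dimensions (diameter × length) of the tongue magnets. The sketch below recomputes it; the dictionaries transcribe the magnet dimensions listed for the two systems.

```python
import math


def cylinder_volume_mm3(diameter_mm, length_mm):
    """Volume of a cylindrical magnet in mm^3."""
    return math.pi * (diameter_mm / 2) ** 2 * length_mm


# (diameter, length) in mm for the tongue magnets of each system
EXTERNAL = {"tongue tip": (2, 4), "tongue blade": (5, 1)}
INTRAORAL = {"tongue tip": (1, 1), "tongue blade": (1, 1)}


def volume_ratio(site):
    """How many times larger the external magnet is than the intraoral one."""
    return cylinder_volume_mm3(*EXTERNAL[site]) / cylinder_volume_mm3(*INTRAORAL[site])


for site in EXTERNAL:
    print(f"{site}: {volume_ratio(site):.0f}x smaller")
# tongue tip: 16x smaller
# tongue blade: 25x smaller
```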

A significant drawback of the intraoral device is its limited battery size and capacity (40 mAh), whereas the external version is much less restricted in the size and weight of its battery. This significantly reduces the operating time of the intraoral device per charge. Several steps have been taken to reduce its power consumption: a lower operating voltage was selected, and power-efficient components and lower data sampling and transmission rates were chosen. In addition, software was developed to switch from active mode to sleep mode when the device is not in use. With these measures, it is estimated that the battery life per charge could be extended from one hour to about 16.5 hours, including 30 minutes of speech.

5 CONCLUSIONS

In this paper we have described a new intraoral PMA prototype built from commercial off-the-shelf (COTS) components and embedded inside a dental retainer constructed using the subject's dental impression. Preliminary evaluation of the intraoral prototype indicated recognition performance slightly lower than that of the previous external PMA device. However, there are a number of avenues for further investigation to improve its performance.

Table 2: Summary of the PMA devices' specifications and comparison. [*Note that although the external sensing system has 12 channels, only 9 are used for speech recognition and 3 are used for cancellation of background magnetic fields.]

| Category | Specification | Intraoral sensing | External sensing |
|---|---|---|---|
| Appearance | | Dental retainer | Wearable headset |
| Operating voltage | | 2.1 V | 5 V |
| Magnets | Tongue blade | ø1 mm × 1 mm | ø5 mm × 1 mm |
| | Tongue tip | ø1 mm × 1 mm | ø2 mm × 4 mm |
| | Lips | ø1 mm × 4 mm | ø1 mm × 5 mm |
| Magnetic sensing | Dimension | 12 × 12 × 3 mm³ | 12 × 12 × 3 mm³ |
| | Sensitivity | 230 LSb/gauss | 440 LSb/gauss |
| | Sampling rate | 80 Hz | 100 Hz |
| | Channels | 9 | 12* |
| Data transmission | Type | Bluetooth 2.0 | Bluetooth 2.0 / USB |
| | Frequency | 2.4 GHz | 2.4 GHz |
| | Data rate | 57.6 kbps | 500 kbps |
| Power | Supply | Rechargeable battery | Rechargeable battery / USB |
| | Battery | Li-Ion 40 mAh | Li-Ion 1080 mAh |
| | Current consumption | 30.5 mA | 93.5 mA (wireless) / 67.1 mA (wired) |
| | Lifetime | 1 hour | 10 hours |
| Prototype | Dimension | 70 × 55 × 25 mm³ | 160 × 160 × 150 mm³ |
| | Weight | 15 g | 160 g |
| | Material | Acrylic resin / polypropylene | VeroBlue / VeroWhitePlus resin |

Nonetheless, the intraoral device has several advantages over its predecessor. Firstly, it is considered to be more stable and robust against unintentional movement, as it is implemented in the form of a dental retainer that sits securely in the palatal cavity and clasps firmly onto the upper teeth. Secondly, significantly smaller magnets may be used with the intraoral version (because of their proximity to the magnetic sensors) while still giving a higher SNR. In addition, the dental retainer can be completely hidden inside the user's mouth, out of sight, which would eliminate the concern that the device is a visible sign of disability.

However, a possible downside of the intraoral design is that it may limit the natural movement of the tongue, because the device occupies part of the user's oral cavity. Further work is required to assess whether users become accustomed to the presence of the device and are able to achieve more consistent articulation.

Encouraged by the results so far, extensive work is needed to: 1) further reduce the size of future intraoral prototypes, 2) improve the power efficiency of the circuitry, 3) incorporate inductive charging for the battery, and 4) introduce a background cancellation mechanism for movement-induced interference. Although limitations remain, the present work represents a major step towards a viable SSI that would appeal to speech-impaired users. For further information on the PMA-based SSI and its speech restoration technique, please visit www.hull.ac.uk/speech/disarm/demos.

ACKNOWLEDGEMENTS

The authors would like to thank Helen Dehkordy from Hull and East Yorkshire Hospitals NHS Trust for prototyping the dental retainers. This study is independent research funded by the National Institute for Health Research (NIHR) Invention for Innovation Programme. The views stated in this report are those of the authors and should not be interpreted as representing the official position of the sponsor.

REFERENCES

Bai, J., Cheah, L. A., Ell, S. R., and Gilbert, J. M. (2015). Design of an intraoral device based on permanent magnetic articulography. In Proceedings of Macau Conference on Engineering, Technology and Applied Science, pages 1-12, Macau, China.


Braz, D. S. A., Ribas, M. M., Dedivitis, R. A., Nishimoto, I. N., and Barros, A. P. B. (2005). Quality of life and depression in patients undergoing total and partial laryngectomy. Clinics, 60(2):135-142.

Bright, A. K., and Coventry, L. (2013). Assistive technology for older adults: psychological and socio-emotional design requirements. In Proceedings of 6th International Conference on PErvasive Technologies Related to Assistive Environments, pages 1-4, Rhodes, Greece.

Cheah, L. A., Bai, J., Gonzalez, J. A., Ell, S. R., Gilbert, J. M., Moore, R. K., and Green, P. D. (2015). A user-centric design of permanent magnetic articulography based assistive speech technology. In Proceedings of 8th BIOSIGNALS, pages 109-116, Lisbon, Portugal.

Denby, B., Schultz, T., Honda, K., Hueber, T., Gilbert, J. M., and Brumberg, J. S. (2010). Silent speech interfaces. Speech Communication, 52(4):270-287.

Doi, H., Nakamura, K., Toda, T., Saruwatari, H., and Shikano, K. (2010). Esophageal speech enhancement based on statistical voice conversion with Gaussian mixture model. IEICE Transactions on Information and Systems, 93(9):2472-2482.

Fagan, M. J., Ell, S. R., Gilbert, J. M., Sarrazin, E., and Chapman, P. M. (2008). Development of a (silent) speech recognition system for patients following laryngectomy. Medical Engineering & Physics, 30(4):419-425.

Gilbert, J. M., Rybchenko, S. I., Hofe, R., Ell, S. R., Fagan, M. J., Moore, R. K., and Green, P. D. (2010). Isolated word recognition of silent speech using magnetic implants and sensors. Medical Engineering & Physics, 32(10):1189-1197.

Gonzalez, J. A., Cheah, L. A., Bai, J., Ell, S. R., Gilbert, J. M., Moore, R. K., and Green, P. D. (2014). Analysis of phonetic similarity in a silent speech interface based on permanent magnetic articulography. In Proceedings of 15th INTERSPEECH, pages 1018-1022, Singapore.

Hirsch, T., Forlizzi, J., Goetz, J., Stoback, J., and Kurtz, C. (2000). The ELDer project: Social and emotional factors in the design of eldercare technologies. In Proceedings of the 2000 Conference on Universal Usability, pages 72-79, Arlington, USA.

Hofe, R., Bai, J., Cheah, L. A., Ell, S. R., Gilbert, J. M., Moore, R. K., and Green, P. D. (2013a). Performance of the MVOCA silent speech interface across multiple speakers. In Proceedings of 14th INTERSPEECH, pages 1140-1143, Lyon, France.

Hofe, R., Ell, S. R., Fagan, M. J., Gilbert, J. M., Green, P. D., Moore, R. K., and Rybchenko, S. I. (2013b). Small-vocabulary speech recognition using a silent speech interface based on magnetic sensing. Speech Communication, 55(1):22-32.

Leonard, R. G. (1984). A database for speaker-independent digit recognition. In Proceedings of 9th ICASSP, pages 328-331, San Diego, USA.

Lontis, E. R., Lund, M. E., Christensen, H. V., Gaihede, M., Caltenco, H. A., and Andreasen-Struijk, L. N. (2010). Clinical evaluation of wireless inductive tongue computer interface for control of computers and assistive devices. In Proceedings of International Conference on Engineering in Medicine and Biology Society, pages 3365-3368, Buenos Aires, Argentina.

Maier-Hein, L., Metze, F., Schultz, T., and Waibel, A. (2005). Session independent non-audible speech recognition using surface electromyography. In Automatic Speech Recognition and Understanding Workshop, pages 331-336, Cancun, Mexico.

Martin, J. L., Murphy, E., Crowe, J. A., and Norris, B. J. (2006). Capturing user requirements in medical devices development: the role of ergonomics. Physiological Measurement, 27(8):49-62.

Park, H., Kiani, M., Lee, H. M., Kim, J., Block, J., Gosselin, B., and Ghovanloo, M. (2012). A wireless magnetoresistive sensing system for an intraoral tongue-computer interface. IEEE Transactions on Biomedical Circuits and Systems, 6(6):571-585.

Rabiner, L. R. (1989). A tutorial on Hidden Markov Models and selected applications in speech recognition. Proceedings of the IEEE, 77:257-286.

Tang, H., and Beebe, D. J. (2006). An oral interface for blind navigation. IEEE Transactions on Neural Systems and Rehabilitation Engineering, 14(1):116-123.

Toda, T., Black, A. W., and Tokuda, K. (2008). Statistical mapping between articulatory movements and acoustic spectrum using a Gaussian mixture model. Speech Communication, 50(3):215-227.

Toda, T., Nakagiri, M., and Shikano, K. (2012). Statistical voice conversion techniques for body-conducted unvoiced speech enhancement. IEEE Transactions on Audio, Speech and Language Processing, 20(9):2505-2517.

Toutios, A., and Margaritis, K. G. (2005). A support vector approach to the acoustic-to-articulatory mapping. In Proceedings of 6th INTERSPEECH, pages 3221-3224, Lisbon, Portugal.

Wand, M., and Schultz, T. (2011). Session-independent EMG-based speech recognition. In Proceedings of 4th BIOSIGNALS, pages 295-300, Rome, Italy.

Wang, J., Samal, A., Green, J. R., and Rudzicz, F. (2012). Sentence recognition from articulatory movements for silent speech interfaces. In Proceedings of 37th ICASSP, pages 4985-4988, Kyoto, Japan.

Young, S., Evermann, G., Gales, M., Hain, T., Kershaw, D., Liu, X., Moore, G., Odell, J., Ollason, D., Povey, D., Valtchev, V., and Woodland, P. (2009). The HTK Book (for HTK Version 3.4.1). Cambridge: Cambridge University Press.

BIODEVICES 2016 - 9th International Conference on Biomedical Electronics and Devices
