Stability Feature Selection using Cluster Representative LASSO

Niharika Gauraha

Systems Science and Informatics Unit

Indian Statistical Institute, 8th Mile, Mysore Road RVCE Post Bangalore, Bangalore, India

Keywords:

Lasso, Stability Selection, Cluster Representative Lasso, Cluster Group Lasso.

Abstract:

Variable selection in high dimensional regression problems with strongly correlated variables or with near

linear dependence among few variables remains one of the most important issues. We propose to cluster the

variables first and then do stability feature selection using Lasso for cluster representatives. The first step

involves generation of groups based on some criterion and the second step mainly performs group selection

with controlling the number of false positives. Thus, our primary emphasis is on controlling type-I error for

group variable selection in high-dimensional regression setting. We illustrate the method using simulated and

pseudo-real data, and we show that the proposed method finds an optimal and consistent solution.

1 INTRODUCTION

We consider the usual linear regression model

Y = Xβ + ε, (1)

where Y

n×1

is a univariate response vector, X

n×p

is the

design matrix, β

p×1

is the true underlying coefficient

vector and ε

n×1

is an error vector. when the num-

ber of variables (p) is much larger than the number of

observations (n), p >> n, the ordinary least squares

estimator is not unique and mostly overfits the data.

The parameter vector β can only be estimated based

on given very few observations, if β is sparse. The

Lasso (Tibshirani, 1996) and other regularized regres-

sion methods are mostly used for sparse estimation

and variable selection. However, variable selection

in situations involving high empirical correlation or

near linear dependence among few variables remains

one of the most important issues. This problem is en-

countered in many applications such as in microarray

analysis, a group of genes sharing the same biologi-

cal pathway tend to have highly correlated expression

levels (Segal et al., 2003) and it is often desirable to

identify all(rather than a few) of them if they are re-

lated to the underlying biological process.

Various algorithms have been proposed which are

based on the concept of clustering variables first and

then pursuing variable selection. In this paper, we

propose the Stability feature selection using CRL

(SCRL), an approach for first identifying clusters

among the variables using some criterion(discussed in

section 2.2) and then subsequently performing stabil-

ity feature selection on cluster representatives while

controlling the number of false positives. The sta-

bility feature selection consists of repeatedly apply-

ing the baseline feature selection method to random

data sub-samples of half-size, and finally selecting the

features that have larger selection frequency than a

predefined threshold value. Thus, The proposed al-

gorithm, SCLR, is an application of stability feature

selection where the base selection procedure is the

Lasso and the Lasso is applied on the reduced design

matrix of cluster representatives. Since, the Lasso is

repeatedly applied on the reduced design matrix, the

SCRL method is computationally fast as well.

Basically, The proposed method, SCRL is a two-

stage procedure: at the first stage we cluster the vari-

ables and at the second stage we do group selection

by stability feature selection using Lasso for cluster-

representatives. When the group sizes are all one, it

reduces to stability selection. In order to illustrate the

performance of SCRL we carry out a simple simula-

tion. We consider a fixed design matrix X

n×p

gener-

ated as

x

i

= Z

1

+ ε

x

i

, Z

1

∼ N(0, 1), i = 1, ..., 5

x

i

= Z

2

+ ε

x

i

, Z

2

∼ N(0, 1), i = 6, ..., 10

x

i

= Z

3

+ ε

x

i

, Z

3

∼ N(0, 1), i = 11, ..., 15

x

i

i.i.d. ∼ N(0, 1), i = 16, ..., 20

ε

x

i

i.i.d. ∼ N(0, 0.01), i = 1, ..., 15

In this example, the predictors are divided into three

equally important groups and within each group there

Gauraha, N.

Stability Feature Selection using Cluster Representative LASSO.

DOI: 10.5220/0005827003810386

In Proceedings of the 5th International Conference on Pattern Recognition Applications and Methods (ICPRAM 2016), pages 381-386

ISBN: 978-989-758-173-1

Copyright

c

2016 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

381

are five members. The three groups have pairwise

empirical correlations ρ ≈ 0.9 and the remaining

five are noise features. The true active set is S

0

=

{1, 2, ..., 15}. we generate the response according to

y = Xβ + ε, where the elements of ε are i.i.d. draws

from a N(0, σ

2

) distribution. We simulated data with

sample size n = 100 and with predictors p = 20 and

σ = 3 and 15.

As variable selection or screening method we use

the following five methods and compare the true posi-

tives rate(TPR) and the number of false positives(FP):

The Lasso (Tibshirani, 1996), stability selection us-

ing Lasso (Meinshausen and B

¨

uhlmann, 2010), CLR

(B

¨

uhlmann et al., 2012), CGL (B

¨

uhlmann et al.,

2012) and the proposed method SCRL. For the Lasso,

CRL and CGL, we run 50 simulations and we choose

the model with the smallest prediction error among 50

runs. The results are reported in table 1.

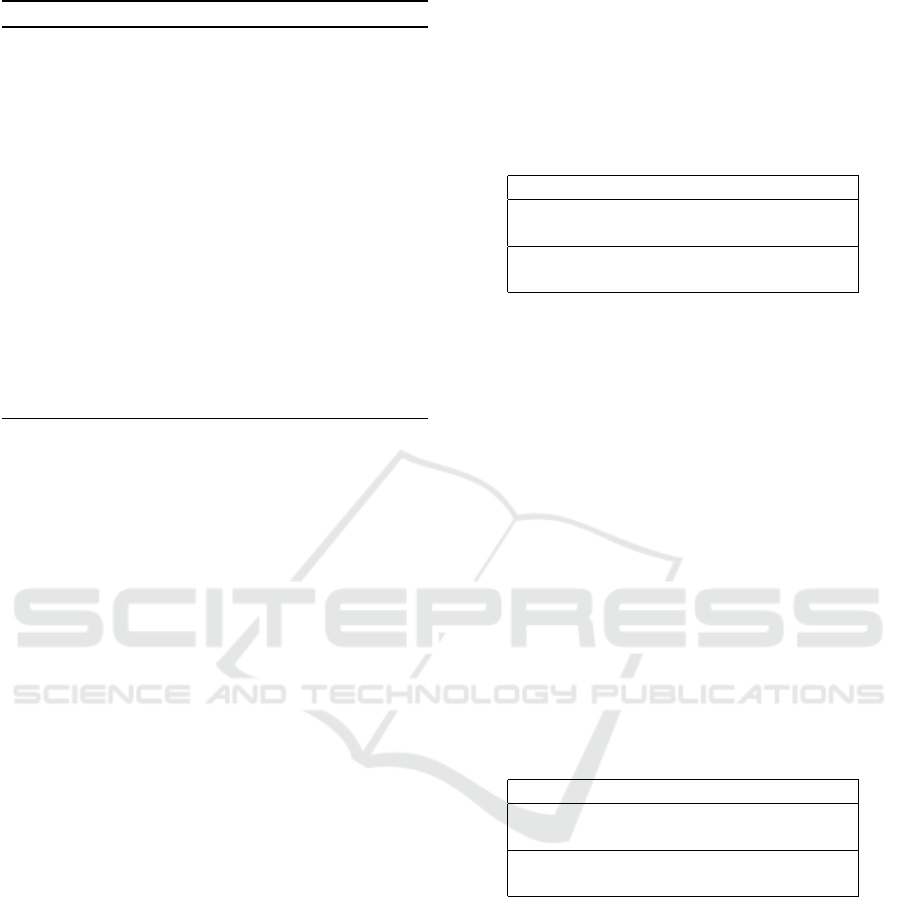

Table 1: Comparision of TPR and FP of different methods.

σ Method TPR FP

3 Lasso 0.6 4

Stability Selection 0 0

CGL 1 4

CRL 1 4

SCRL 1 0

15 Lasso 0.4 2

Stability Selection 0 0

CGL 1 1

CRL 1 1

SCRL 1 0

An ideal variable selection method would select

only 15 true predictors and no noise features. The

Lasso tends to select single variable from the group of

correlated or linearly dependent variables. In the case

of Stability Selection none is selected. CGL and CRL

select all true predictors but also select some noise

features. The SCRL selects all true variables and no

noise features. Thus, SCRL gives an optimal solution.

The rest of this paper is organized as follows. In

Section 2, we provide background, review of relevant

work and we discuss our contribution. In section 3,

we describe the proposed algorithm which mostly se-

lects more adequate models in terms of model inter-

pretation with reduced type I error(false positives). In

section 4, we provide simulation studies. Section 5

contains the computational details and we shall pro-

vide conclusion in section 6.

2 BACKGROUND AND

NOTATIONS

In this section, we state notations and define required

concepts. We also provide review of relevant work

and our contribution.

2.1 Notations and Assumptions

We mostly follow the notations in (B

¨

uhlmann and

van de Geer, 2011). We consider the usual linear re-

gression setup with univariate response variable Y ∈ R

and p-dimensional variables X ∈ R

p

:

Y

i

=

p

∑

j=1

X

( j)

i

β

j

+ ε

i

i = 1, ..., n j = 1, ..., p (2)

where ε

i

∼ N(0, σ

2

)

or, in matrix notation (as in Equation 1)

y = Xβ + ε

where β ∈ R

p

are unknown coefficients to be esti-

mated, and the components of the noise vector ε ∈ R

n

are i.i.d. N(0, σ

2

)

L1-norm is defined as:

kβk

1

=

∑

p

j=1

|β

j

| (3)

L2-norm squared is defined as:

kβk

2

2

=

∑

p

j=1

β

2

j

(4)

The infinite norm is defined as:

kβk

∞

= max

1≤i≤N|

|β

j

| (5)

The true active set is denoted as S

0

and defined as

S

0

= { j;β

j

6= 0} (6)

The estimated parameter vector is denoted as

ˆ

β. The

estimated active set is denoted as

ˆ

S and defined as

ˆ

S = { j;

ˆ

β

j

6= 0} (7)

We consider true positive rate as a measure of perfor-

mance, which is defined as:

T PR =

|

ˆ

S

T

S

0

|

|S

0

|

(8)

The number of clusters are denoted by q. The parti-

tion, G = {G

1

, ..., G

q

} with ∪

q

r=1

G

r

= {1, ..., p} and

G

r

∩ G

l

=

/

0, represents group structure among vari-

ables. The clusters G

1

, ..., G

q

are generated from the

ICPRAM 2016 - International Conference on Pattern Recognition Applications and Methods

382

design matrix X, using methods as described in sec-

tion 2.2. The representative for each cluster is then

defined as (B

¨

uhlmann et al., 2012)

¯

X

(r)

=

1

|G

r

|

∑

j∈G

r

X

( j)

, r = 1, ..., q,

where X

( j)

denotes the jth n × 1 column-vector of X.

The design matrix of cluster representatives is de-

noted as

¯

X.

2.2 Clustering of Variables

To cluster variables we use two methods: correlation

based and canonical correlation based bottom-up ag-

glomerative hierarchical clustering methods. The first

method forms groups of variables based on correla-

tions between them. The second method uses canon-

ical correlation for clustering variables. The con-

struction of groups based on canonical correlations

addresses the problem of linear dependence among

variables, whereas the standard correlation based hi-

erarchical clustering addresses only correlation prob-

lems. For further details on clustering of variables

and determining the number of clusters, we refer to

(B

¨

uhlmann et al., 2012).

2.3 The Lasso and the Group Lasso

The Least Absolute Shrinkage and Selection Operator

(Lasso), introduced by Tibshirani (Tibshirani, 1996),

is a penalized least squares method that imposes an

L1-penalty on the regression coefficients. The lasso

does both shrinkage and automatic variable selection

simultaneously due to the nature of the L1-penalty.

The lasso estimator is defined as

ˆ

β

Lasso

= argmin

β

(ky − Xβk

2

2

+ λkβk

1

) (9)

But the Lasso-estimator has some limitations, i.e.,

if some variables are highly correlated with each

other, the lasso tends to select a single variable out of

a group of correlated variables. In certain situations,

when the distinct groups or clusters among the vari-

ables are known a priory and it is desirable to select or

drop the whole group instead of single variables. The

group Lasso (Yuan and Lin, 2007) is used, that im-

poses an L2-penalty on the coefficients within each of

q known groups to achieve such group sparsity.

The Group Lasso estimator(with known q groups)

is defined as

ˆ

β

GL

= argmin

β

(ky −

j=K

∑

j=1

X

j

β

j

k

2

2

+ λ

j=q

∑

j=1

m

j

kβ

j

k

2

)

(10)

where the m

j

=

p

|G

j

| serves as balancing term

for varying group sizes. The group Lasso behaves like

the lasso at the group level, depending on the value

of the regularization parameter λ, the whole group of

variables may drop out of the model. For singleton

groups (the group sizes are all one), it reduces exactly

to the lasso.

2.4 Cluster Group Lasso

The cluster group Lasso, first identifies groups among

the variables using hierarchical clustering methods

described in section 2.2, and then applies the group

lasso(Equation 10) to the resulting clusters. For more

details on CGL, we refer to (B

¨

uhlmann et al., 2012).

2.5 Cluster Representative Lasso

Similar to the CGL, the cluster representative Lasso,

first identifies groups among the variables using hier-

archical clustering and then applies the lasso for clus-

ter representatives (B

¨

uhlmann et al., 2012).

The optimization problem for CRL is defined us-

ing response y and the design matrix of cluster repre-

sentatives

¯

X as:

ˆ

β

CRL

= argmin

β

(ky −

¯

Xβk

2

2

+ λ

CRL

kβk

1

) (11)

The selected clusters are then denoted as:

ˆ

S

clust,CRL

= {r;

ˆ

β

CRL,r

6= 0, r = 1, ...,q}

and the selected variables are obtained as the

union of the selected clusters as:

ˆ

S

CRL

= ∪

r∈

ˆ

S

clust,CRL

G

r

2.6 Stability Feature Selection

The stability feature selection, introduced by N.

Meinhausen and P. Buhlmann (Meinshausen and

B

¨

uhlmann, 2010), is a general technique for perform-

ing feature selection while controlling the type-I er-

ror. It is combination of sub-sampling and high-

dimensional feature selection algorithms, i.e., the

Lasso. It provides a framework for the baseline fea-

ture selection method, to identify a set of stable vari-

ables that are selected with high probability. Mainly,

it consists of repeatedly applying the baseline feature

selection method to random data sub-samples of half-

size, and finally selecting the variables which have se-

lection frequency larger than a fixed threshold value

(usually in the range (0.6, 0.9) ). For further details

see (Meinshausen and B

¨

uhlmann, 2010).

Stability Feature Selection using Cluster Representative LASSO

383

2.7 Review of Relevant Work and Our

Contribution

This section provides a review of relevant work in or-

der to show that how our proposal differs or extend

the previous studies.

It is known that the Lasso can not handle the situ-

ations where predictors are highly correlated or group

of predictors are linearly dependent. In order to deal

with such situations, various algorithms have been

proposed which are based on the concept of cluster-

ing variables first and then pursuing variable selec-

tion, or clustering variables and model selection si-

multaneously.

The methods that perform clustering and model

fitting simultaneously are The Elastic Net (Zou and

Hastie, 2005), Fused LASSO (Tibshirani et al., 2005),

OSCAR(octagonal shrinkage and clustering algo-

rithm for regression) (H. and B., 2008) and Mnet

(Huang et al., 2010). ENet uses a combination of the

L

1

and L

2

penalties, OSCAR uses a combination of

L

1

norm and and L

∞

norm and Mnet uses a combina-

tion of the MCP(minimum concave penalty) and L

2

penalties. As these methods are based on combina-

tion of penalties, they do not use any specific informa-

tion on the correlation pattern among the predictors,

Hence they can not handle linear dependency prob-

lem. Moreover Fused Lasso is applicable only when

the variables have a natural ordering and not suitable

to perform automated variable clustering to unordered

features.

We list few methods that perform clustering and

model fitting at different stages: Principal component

regression (M., 1957) , Tree Harvesting (Hastie et al.,

2001), Cluster Group Lasso (B

¨

uhlmann et al., 2012),

Cluster representative Lasso(CRL) (B

¨

uhlmann et al.,

2012) and the sparse Laplacian shrinkage (SLS) (J.

et al., 2011)). All these methods have been proven

to be consistent variable selection techniques but they

fail to control the false positive rate.

Since the Lasso tends to select only one variable

from the group of strongly correlated variables(even if

many or all of these variables are important), the sta-

bility feature selection using Lasso does not choose

any variable from the group of highly correlated vari-

ables because correlated variables split the vote. To

overcome this problem we propose to cluster the vari-

ables first and then do stability feature selection using

Lasso for cluster-representatives. Basically, our work

can be viewed as an extension of CRL (B

¨

uhlmann

et al., 2012) and an application of stability feature

selection (Meinshausen and B

¨

uhlmann, 2010). We

compare our algorithm with the CRL, in terms of vari-

able selection in section 4. Our simulation studies

shows that our method outperforms the CRL.

3 STABILITY FEATURE

SELECTION USING CRL

We consider high dimensional setting, where group

of variables are strongly correlated or there exist near

linearly dependency among few variables. It is known

that the Lasso tends to select only one variable from

the group of highly correlated or linearly dependent

variables even though many or all of them belong to

the active set S

0

. Various techniques based on cluster-

ing in combination with sparse estimation have been

proposed in past for variable selection, or in more

mathematical terms, to infer the true active set S

0

,

but mostly they fail to control the selection of false

positives. In this article, Our aim is to identify the

true active set and to control false positives simultane-

ously. We use the concept of clustering the correlated

or linearly dependent variables and then selecting or

dropping the whole group instead of single variables

similar to the CRG method proposed in (B

¨

uhlmann

et al., 2012). The stability feature selection has been

proven for identifying the most stable features and for

providing control on the family-wise error rate, we

recommend (Meinshausen and B

¨

uhlmann, 2010) for

theoretical proofs. In order to reduce the selection of

false positive groups, we propose to combine the CRL

with sub-sampling, we call it SCRL, stability feature

selection using CRL.

The proposed SCLR method can be seen as an

application of stability feature selection where the

base selection procedure is the Lasso and the Lasso

is applied on the reduced design matrix of clus-

ter representatives. The advantage of using reduced

design matrix of cluster representatives are as follows:

(a) The plain stability feature selection, where

the baseline feature is the Lasso and the Lasso is

applied on the whole design matrix X(there is no

pre-processing step of clustering the variables). It

is a special case of SCRL when the group sizes are

all one. The plain stability feature selection is not

suitable for variable selection when the variables are

highly correlated because the underlying selection

method Lasso tends to select single variables per

cluster, it selects none from the correlated groups

as the vote gets split within the cluster variables.

Therefore, using the reduced design matrix ensures

the most stable group selection.

(b)The Lasso is repeatedly applied on the reduced

design matrix, therefore the SCRL method is

computationally fast as well.

ICPRAM 2016 - International Conference on Pattern Recognition Applications and Methods

384

Algorithm 1: SCRL Algorithm.

Input: dataset (y, X), ClusteringMethod

Output:

ˆ

S:= set of selected variables

Steps:

stage 1:

1. Perform clustering, Denote clusters as

G

1

, ..., G

q

2. Compute the matrix of cluster

representatives, denote it as

¯

X

stage 2:

3. perform stability feature selection using

response Y and the reduced design matrix

¯

X

Denote the selected set of groups as

ˆ

S

G

= {r; cluster G

r

is selected, r = 1, ..., q}.

4. The union of the selected groups is the

selected set of variables

ˆ

S = ∪

r

r ∈

ˆ

S

cluster

return

ˆ

S

Similar to the CRL method, The proposed method

SCRL(Algorithm 1) is a two-stage procedure: the first

stage is exactly the same as the CRL, where we clus-

ter the variables based on the criterion discussed pre-

viously and compute the design matrix of cluster rep-

resentatives. At the second stage we perform group

selection by stability feature selection using Lasso for

cluster-representatives. When the group sizes are all

one, it reduces to the plain stability selection.

4 NUMERICAL RESULTS

In this section, we consider two simulation settings

and a semi-real data example. We compare the per-

formances of CRL and SCRL. In each example, data

is simulated from the linear model (Equation 1) with

fixed design X. These examples are similar to the ex-

amples used in the paper (B

¨

uhlmann et al., 2012).

We consider the true positive rate(and also the

number of false positives) as a measure of perfor-

mance, which is defined in Equation 8.

4.1 Example 1: Block Diagonal Model

We generate covariates from N

p

(0, Σ

1

), where Σ

1

con-

sists of 10 block matrices T , where T

10×10

is a block

diagonal matrix, defined as

T

j,k

=

1, j = k

.9, else

The true active set and true parameters β are

defined as: S

0

= {1, 2, ..., 20} and for any j ∈ S

0

we sample β

j

from the set {.1, .2, .3, ..., 2} without

replacement. This setup has all the active variables in

the first two blocks of highly correlated variables.

Simulation results are reported in table 2. We no-

tice that the TPR is the same for both the methods, but

SCRL has lower false positives than CRL.

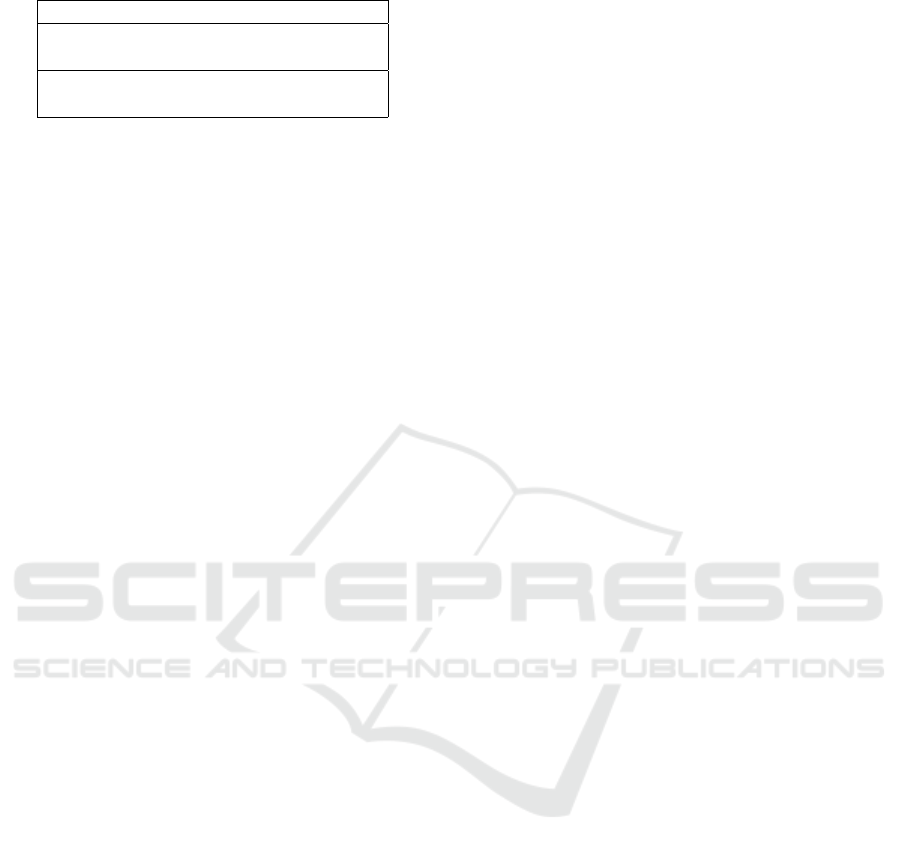

Table 2: Performance measures for example 1.

σ Method TPR FP

3 SCRL 1 0

CRL 1 40

15 SCRL 1 0

CRL 1 60

4.2 Example 2: Single Block Design

We generate covariates from N

p

(0, Σ

2

), where Σ

2

con-

sisted of a single group of strongly correlated vari-

ables of size 30, it is defined as

Σ

2; j,k

=

1, j = k

0.9 i, j ∈ {1, ..., 30} and i 6= j,

0 otherwise

The remaining 70 variables are uncorrelated. The true

active set and true parameters beta are defined as:

S

0

= {1, 2, ..., 15}∪{31, 32, ..., 35} and for any j ∈ S

0

we sample β

j

from the set {.1, .2, .3, ..., 2} without re-

placement. The first block of size 30 contains 25, the

most of the active predictors.

Simulation results are reported in table 3. The

TPR is the same for both the methods, but SCRL has

lower false positives than CRL.

Table 3: Performance measures for example 2.

σ Method TPR FP

3 SCRL 0.9 5

CRL 0.9 24

15 SCRL 0.9 5

CRL 0.9 33

4.3 Example 3: Pseudo-real Data

We consider a real dataset, riboflavin(n = 71, p =

4088) data for the design matrix X with syn-

thetic parameters beta and simulated Gaussian errors

N

n

(0, σ

2

I). See (B

¨

uhlmann et al., 2014) for details on

riboflavin dataset. We fix the size of the active set to

s

0

= 10. For the true active set, we randomly select a

variable k, and the nine variables which have highest

correlation to the variable k, and for each j ∈ S

0

we

set β

j

= 1.

The performance measures are reported in table 4.

The TPR is the same for both the methods, but SCRL

has lower false positives than CRL.

Stability Feature Selection using Cluster Representative LASSO

385

Table 4: Performance measures for semi-real dataset.

σ Method TPR FP

3 SCRL 1 0

CRL 1 5

15 SCRL 0.7 3

CRL 0.7 7

4.4 Empirical Results

We clearly see that in both of the simulation settings

and in the pseudo-real example, the SCRL method

outperform the CRL, since the number of false pos-

itives selected by CRL is much larger than the SCRL.

5 COMPUTATIONAL DETAILS

Statistical analysis was performed in R 3.2.2. We

used, the packages “glmnet” for penalized regression

methods(the Lasso) , the package “gglasso” to per-

form group Lasso, the package “ClustOfVar” for clus-

tering of variables and the package ‘hdi” for stability

selection. All mentioned packages are available from

the Comprehensive R Archive Network (CRAN) at

http://cran.r-project.org/.

6 CONCLUSIONS

In this article, we proposed a two stage procedure,

SCRL, for variable selection with controlled false

positives in high-dimensional regression model with

strongly correlated variables. At the first stage, SCRL

identifies the clusters or group structures using some

criterion and clusters representatives are computed for

each cluster. At the second stage these cluster repre-

sentatives are then used in order to more accurately

perform stability feature selection while controlling

the type-I error. Our algorithm is an application of

stability feature selection with the baseline feature se-

lection method used as the Lasso. Since the Lasso

tends to select only one variable from the group of

strongly correlated or linearly dependent variables,

the stability feature selection using Lasso selects none

of the variables from the correlated/linearly depen-

dent group because the vote gets spit among the cor-

related variables. To address this issue we use the

reduced design matrix of cluster representatives for

stability feature selection. The stability feature selec-

tion has been proven for identifying the most stable

features and for providing control on the family-wise

error rate. Therefore, the SCRL reports most stable

groups with controlled false positives. In addition,

it also offers computational advantage, as the Lasso

method only has to be applied on reduced design ma-

trix.

REFERENCES

B

¨

uhlmann, P., Kalisch, M., and Meier, L. (2014). High-

dimensional statistics with a view towards applica-

tions in biology. Annual Review of Statistics and its

Applications, 1:255–278.

B

¨

uhlmann, P., R

¨

utimann, P., van de Geer, S., and Zhang, C.-

H. (2012). Correlated variables in regression: cluster-

ing and sparse estimation. Journal of Statistical Plan-

ning and Inference, 143:1835–1871.

B

¨

uhlmann, P. and van de Geer, S. (2011). Statistics for

High-Dimensional Data: Methods, Theory and Appli-

cations. Springer Verlag.

H., B. and B., R. (2008). Simultaneous regression shrink-

age, variable selection and clustering of predictors

with oscar. Biometrics, pages 115–123.

Hastie, T., Tibshirani, R., Botstein, D., and Brown, P.

(2001). Supervised harvesting of expression trees.

Genome Biology, 2:1–12.

Huang, J., Breheny, P., Ma, S., and hui Zhang, C. (2010).

The mnet method for variable selection. Department

of Statistics and Actuarial Science, University of Iowa.

J., H., S, M., H., L., and CH., Z. (2011). The sparse lapla-

cian shrinkage estimator for high-dimensional regres-

sion. statistical signal processing, in SSP09. IEEE/SP

15th Workshop on Statistical Signal Processing, pages

2021–2046.

M., K. (1957). A course in multivariate analysis. Griffin:

London.

Meinshausen, N. and B

¨

uhlmann, P. (2010). Stability selec-

tion (with discussion). J. R. Statist. Soc, 72:417–473.

Segal, M., Dahlquist, K., and Conklin, B. (2003). Regres-

sion approaches for microarray data analysis. Journal

of Computational Biology, 10:961–980.

Tibshirani, R. (1996). Regression shrinkage and selection

via the lasso. J. R. Statist. Soc, 58:267–288.

Tibshirani, R., Saunders, M., Rosset, S., Zhu, J., and

Knight, K. (2005). Sparsity and smoothness via the

fused lasso. Journal of the Royal Statistical Society

Series B, pages 91–108.

Yuan, M. and Lin, Y. (2007). Model selection and estima-

tion in regression with grouped variables. J. R. Statist.

Soc, 68(1):49–67.

Zou, H. and Hastie, T. (2005). Regularization and variable

selection via the elastic net. J. R. Statist. Soc, 67:301–

320.

ICPRAM 2016 - International Conference on Pattern Recognition Applications and Methods

386