Inspiration from VR Gaming Technology: Deep Immersion and Realistic Interaction for Scientific Visualization

Till Bergmann¹, Matthias Balzer¹, Torsten Hopp¹, Thomas van de Kamp², Andreas Kopmann¹, Nicholas Tan Jerome¹ and Michael Zapf¹

¹ Karlsruhe Institute of Technology (KIT), Institute for Data Processing and Electronics (IPE), Karlsruhe, Germany

² Karlsruhe Institute of Technology (KIT), Laboratory for Applications of Synchrotron Radiation (LAS), Karlsruhe, Germany

Keywords: Virtual Reality, Interactive Interfaces, Scientific Visualization.

Abstract: The computer gaming industry has traditionally been the driving force in the development of computer

visualization hardware and software. This year, affordable and high-quality virtual reality headsets became

available and the science community is eager to benefit from them. This paper describes first experiences in

adapting the new hardware for three different visualization use cases. In all three examples existing

visualization pipelines were extended by virtual reality technology. We describe our approach, based on the

HTC Vive VR headset, the open source software Blender and the Unreal Engine 4 game engine. The use

cases are from three different fields: large-scale particle physics research, X-ray imaging for entomology

research and medical imaging with ultrasound computer tomography. Finally, we discuss the benefits and limits

of the current virtual reality technology and present an outlook to future developments.

1 INTRODUCTION

During the last two years there has been huge progress

in the development of virtual reality (VR) hardware,

mainly driven by the computer game industry. Now

there are several VR devices available with high

quality at moderate prices. High quality means:

high-resolution displays, precise head tracking and

lightweight hardware. Each property is required to

achieve a high level of immersion. Scientific

visualizations can benefit from this development in

several ways: a realistic impression of the

magnitude of objects and dimensions, free viewpoint

navigation and natural interaction. On the other

hand, the high immersion carries the risk of producing

simulation sickness (see Section 3.1). Furthermore,

as the eyes of the users are covered by the VR

head-mounted displays (HMDs), it is not possible to

use conventional control devices like keyboard and

mouse. These limitations require alternative means

for navigation and interaction.

In this paper, we present the extension of three

visualization use cases by VR technology from the

fields of particle physics, biology and medical

imaging. We describe the software and

hardware configurations used. The workflow from

traditional to VR visualization is discussed, as well

as the benefits of several aspects of the new

visualization devices with current technology.

2 VR SETUP

The typical VR development and showcase setup

consists of a VR headset, a graphics workstation and

manipulator devices. Currently, there are essentially

two high-end developer VR HMDs available on the

market, the Oculus Rift and the HTC Vive. The

PlayStation VR is only available for gaming

consoles. Google VR and its various clones offer

too low a quality in display resolution and computing

power and are not considered here. However, they are

briefly discussed in Section 4. In our setup, we

use the HTC Vive, as it is the only one providing

spatially tracked hand controllers, which we regard as

essential for control and interaction in VR applications.

Bergmann T., Balzer M., Hopp T., van de Kamp T., Kopmann A., Tan Jerome N. and Zapf M.

Inspiration from VR Gaming Technology: Deep Immersion and Realistic Interaction for Scientific Visualization.

DOI: 10.5220/0006262903300334

In Proceedings of the 12th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications (VISIGRAPP 2017), pages 330-334

ISBN: 978-989-758-228-8

Copyright © 2017 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

The basic HTC Vive system consists of the

HMD, two hand controllers and the lighthouse

stations. The display resolution is 1080 x 1200

pixels per eye at a frame rate of 90 Hz. The

lighthouse stations track an area of 5 m x 5 m from

two opposing corners to avoid occlusion of the

sensors. The hand controllers provide buttons, track

pad and trigger. After a short calibration step the

chaperone system, invented by HTC, will become

active to protect the user from collisions with the

surroundings: when approaching the border of the

tracked area, a fence-like structure will be faded into

the virtual scene to alert the user.
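
As a rough worked example of what these display figures imply for rendering, the following Python sketch computes the time budget per frame and the raw pixel throughput. It uses only the resolution and refresh rate quoted above; the function names are illustrative, and the pixel count ignores any oversampling a renderer may apply, so it should be read as a lower bound.

```python
# Display parameters quoted in the text for the HTC Vive.
WIDTH_PX = 1080
HEIGHT_PX = 1200
EYES = 2
REFRESH_HZ = 90


def frame_budget_ms(refresh_hz: float) -> float:
    """Time available to render one frame, in milliseconds."""
    return 1000.0 / refresh_hz


def pixels_per_second(width: int, height: int, eyes: int, refresh_hz: int) -> int:
    """Number of display pixels that must be filled each second."""
    return width * height * eyes * refresh_hz


budget = frame_budget_ms(REFRESH_HZ)
throughput = pixels_per_second(WIDTH_PX, HEIGHT_PX, EYES, REFRESH_HZ)
print(f"{budget:.1f} ms per frame")
print(f"{throughput / 1e6:.1f} million pixels per second")
```

Every frame for both eyes has to be finished within roughly 11 ms, which is why the choice of graphics card matters for VR.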

In contrast to HTC's recommendation to use an

NVIDIA GeForce GTX 970 or higher, we were able

to run the HTC Vive headset with a GTX 680 at

satisfactory performance. The GTX 580 did not support

the HTC Vive.

Figure 1: The HTC Vive headset and one of the two hand

controllers. The controller provides a round track pad (left

side of controller), the grab trigger on the opposite side

and three buttons.

3 OBJECTIVES

In all our use cases we visualize 3D surface meshes

of different levels of abstraction. The traditional way

to display them is to render them on a 2D computer

display and to interact with keyboard and mouse. In

this way we lose stereoscopic viewing and natural

interaction. With 3D displays, we get a better spatial

perception, but hardly an impression of real

dimensions of objects, surroundings or locations,

simply because of the defined viewing angle, limited

by the size of the display or the projection.

From VR technology we expect to overcome

these drawbacks: the field of view is not limited, as the

user can turn their head in arbitrary directions.

Manipulation of objects is transferred into the virtual

environment. Appropriate objects represent the

hands of the user, movements of the hands are

tracked by the hand controllers and displayed in the

virtual world. Thus a user can just grab virtual

objects, turn them and inspect them almost like in

the real world.

3.1 Deep Immersion and Navigation in

a Particle Physics Experiment

The goal of the KATRIN experiment is to measure

the mass of the neutrino particle (KATRIN, 2005). It

is a large-scale research facility, spread over several

buildings. Its beamline length is 70 m. There is

great public interest in visiting and viewing

the experiment site. However, KATRIN has

temporarily and permanently closed areas due to

hazards like high voltage, low temperature or

tritium, so a visit is not always possible. To

provide at least a virtual alternative,

a virtual visit of the whole KATRIN

setup was constructed in a game-like

environment (Bergmann et al., 2014). The main objective

of the ‘game’ is to give the player a realistic

impression of the real size of the experiment.

Figure 2: A virtual visit on the KATRIN detector platform.

In the foreground the target gizmo (light blue circle)

pointing to the next teleport location.

Our first use case of applying VR technology is

the KATRIN game. The game and its geometry are

freely available under a CC license. The game has

been developed with the open source software

Blender (Blender, 2016) and originally runs under

the Blender game engine. Blender does currently

not support any VR hardware, so we decided to use

the Unreal Engine version 4 (UE4) game engine,

which is also freely available. Only for commercial

applications Epic Games Inc. charges a royalty

(Epic, 2016).

The conversion from Blender to UE4 is straightforward

using FBX as the geometry format. However,

lights, sounds, animations, interactions and controls

need to be adapted manually for the UE4

environment. The collision system in particular requires

substantial effort and is still under construction.

Blender can use the meshes themselves for collision

detection. UE4 is highly optimized for game

performance and allows only simple and convex

collision geometries. Complex meshes need to be

subdivided and remodelled.
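
One way to decide whether a mesh part can serve directly as a simple collision geometry is to test it for convexity. The following sketch is a minimal illustration in plain Python, not part of the UE4 or Blender APIs. It assumes a closed triangle mesh whose faces are wound counter-clockwise when seen from outside; the function name `is_convex` is hypothetical.

```python
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]
Triangle = Tuple[int, int, int]


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def is_convex(vertices: Sequence[Vec3], triangles: Sequence[Triangle],
              eps: float = 1e-9) -> bool:
    """Return True if no vertex lies in front of any face plane.

    Assumption: the mesh is closed and every triangle has an outward
    pointing normal (counter-clockwise winding seen from outside).
    """
    for i, j, k in triangles:
        normal = cross(sub(vertices[j], vertices[i]), sub(vertices[k], vertices[i]))
        for v in vertices:
            if dot(normal, sub(v, vertices[i])) > eps:
                return False
    return True


# A tetrahedron is the simplest convex mesh.
tetra_vertices = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)]
tetra_triangles = [(0, 2, 1), (0, 1, 3), (0, 3, 2), (1, 2, 3)]
print(is_convex(tetra_vertices, tetra_triangles))
```

Parts that fail such a test are candidates for the manual subdivision described above. The check is quadratic in the mesh size, which is acceptable for an offline preparation step.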

Controls and animations can easily be

implemented using the UE4 Blueprint visual

programming system. In the beginning we took over

the common WASD control scheme from single

player games: basically controlling the viewing

direction using the mouse and walking forward by

pressing the W key. However, in tests we found this

control scheme may result in simulation sickness. If

the visual impression of walking does not agree with

the proprioceptive impression, the brain will respond

with sickness. This experience was common among

VR developers at that time. Epic Games Inc.

collected some recommendations in its Virtual

Reality Best Practices (Epic, 2016) and recently

released VR Templates for the Unreal

Engine. Instead of walking through the virtual

world, the player teleports through the world.

Teleporting is executed as follows: using the head or

hand motion the player points to a location,

visualized by a target gizmo or icon, and initiates the

teleportation by pressing a button on the controller.

Before the teleport the display fades to black and

fades back in afterwards. This way, the player does not see a

quick movement of the viewpoint or a sudden

change of the surroundings. We adopted this scheme

and got positive feedback from first users.
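
The fade-and-jump sequence described above can be sketched as a small timing function. This is a plain Python illustration of the idea, not the UE4 Blueprint used in the application. The class name `FadeTeleport` and the fade duration of 0.25 s are assumptions chosen for the example.

```python
from dataclasses import dataclass
from typing import Tuple

Vec3 = Tuple[float, float, float]


@dataclass(frozen=True)
class FadeTeleport:
    """Teleport that hides the viewpoint jump behind a fade to black."""
    start: Vec3
    target: Vec3
    fade_time: float = 0.25  # seconds per fade (assumed value)

    def state_at(self, t: float) -> Tuple[Vec3, float]:
        """Return (player position, black overlay opacity) t seconds
        after the button press. Opacity 0 shows the scene, 1 is black."""
        if t <= 0.0:
            return self.start, 0.0
        if t < self.fade_time:
            # Fade out while still standing at the old location.
            return self.start, t / self.fade_time
        if t < 2.0 * self.fade_time:
            # The jump happens while the display is fully black.
            return self.target, 1.0 - (t - self.fade_time) / self.fade_time
        return self.target, 0.0


teleport = FadeTeleport(start=(0.0, 0.0, 0.0), target=(5.0, 0.0, 2.0))
for t in (0.0, 0.125, 0.25, 0.375, 0.5):
    position, opacity = teleport.state_at(t)
    print(f"t={t:.3f} s  position={position}  opacity={opacity:.2f}")
```

The position only changes at the moment of full opacity, so the player never sees the viewpoint move.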

Figure 3: 3D view (left) and 3D explosion drawing (right)

of the weevil Trigonopterus vandekampi.

For the extension of the KATRIN game, our

main objective was to achieve a realistic immersion

and get an impression of the real size of the

experiment. Our investigations show that VR is able

to fulfil this requirement. Wearing the VR headset,

the player has a realistic feeling of being part of a

virtual world. The position and motion head tracking

is precise enough and the visual response so fast

that there is no noticeable discrepancy to the real world.

3.2 VR Interaction with a 3D Model of

an Insect

Our second use case comes from entomology. X-ray

micro-tomography is a powerful technique to

resolve internal structures of opaque objects with a

spatial resolution on the micrometre scale. Again,

analysing complex 3D structures is challenging with

standard displays. We use the geometry meshes of a

snout beetle (weevil, Trigonopterus vandekampi).

The main physiological properties have been

segmented (van de Kamp et al., 2014; Tan Jerome et al.,

2017). In general, the 3D meshes are the means to

investigate the objects in detail and in this use case

we wanted to find new ways to facilitate successive

investigations.

We implemented a virtual lab, where we placed

two models of the weevil. Both can be grabbed

using the virtual hand and the controllers: the first as

one single object, whereas the second consists of single

segmented objects, each of which can be grabbed

and moved around individually. To get a better

overview, the second specimen can be transformed

into a ‘3D explosion drawing’ of its individual

segments (see Figure 3). All 76 segments can be

picked up, moved and inspected from all sides.
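
A simple way to generate such an explosion drawing is to push every segment away from the centre of the model along the line connecting the two centroids. The sketch below illustrates this in plain Python. It is an assumption about how the effect could be computed, not a description of the implemented Blueprint, and the segment names and the `explode` function are hypothetical.

```python
from typing import Dict, List, Tuple

Vec3 = Tuple[float, float, float]


def centroid(points: List[Vec3]) -> Vec3:
    """Mean position of a non-empty list of points."""
    n = len(points)
    return (sum(p[0] for p in points) / n,
            sum(p[1] for p in points) / n,
            sum(p[2] for p in points) / n)


def explode(segments: Dict[str, List[Vec3]], factor: float) -> Dict[str, Vec3]:
    """Return one translation vector per segment.

    Each segment moves away from the model centre by `factor` times its
    original distance; factor 0 leaves the model assembled.
    Assumption: the model centre is the mean of the segment centroids.
    """
    centres = {name: centroid(points) for name, points in segments.items()}
    model_centre = centroid(list(centres.values()))
    return {
        name: tuple(factor * (c - m) for c, m in zip(centre, model_centre))
        for name, centre in centres.items()
    }


# Two toy segments standing in for the segmented weevil parts.
toy_segments = {
    "head": [(0.0, 0.0, 0.0), (2.0, 0.0, 0.0)],
    "leg": [(4.0, 0.0, 0.0), (6.0, 0.0, 0.0)],
}
print(explode(toy_segments, factor=1.0))
```

Animating `factor` from 0 to its final value gives a smooth transition between the assembled specimen and the exploded view.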

Figure 4: The teleporter in the entomology virtual lab.

For navigation in the virtual world, the

navigation scheme of a new VR Template provided

with the UE4 is used. A new teleport location is

selected with the hand controller, and during this

process, a blue line or arc is displayed (see Figure

4). In this way, it is also possible to teleport behind

smaller obstacles. Further, the outline of the

chaperone mesh is displayed and can be turned using

the track pad on the controller. The trigger (see

Figure 1) is used to grab and hold objects.
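
To illustrate why an arc allows teleporting behind smaller obstacles, the following sketch traces a ballistic curve from the controller to the floor. It is a plain Python approximation under stated assumptions: the arc is modelled as a projectile path, z points upwards, and the launch speed, gravity and step size are illustrative values. It does not reproduce the UE4 VR Template.

```python
import math
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


def trace_arc(origin: Vec3, direction: Vec3, speed: float = 8.0,
              gravity: float = 9.81, step: float = 0.02,
              floor_z: float = 0.0, max_time: float = 5.0) -> List[Vec3]:
    """Sample a ballistic arc; the last point is the landing position
    on the floor plane if the floor is reached within max_time."""
    if origin[2] < floor_z:
        raise ValueError("origin must not be below the floor")
    length = math.sqrt(sum(c * c for c in direction))
    if length == 0.0:
        raise ValueError("direction must not be the zero vector")
    vx, vy, vz = (speed * c / length for c in direction)

    points = [origin]
    t = 0.0
    while t < max_time:
        t += step
        p = (origin[0] + vx * t,
             origin[1] + vy * t,
             origin[2] + vz * t - 0.5 * gravity * t * t)
        if p[2] <= floor_z:
            # Interpolate between the last two samples onto the floor.
            prev = points[-1]
            f = (prev[2] - floor_z) / (prev[2] - p[2])
            points.append(tuple(a + f * (b - a) for a, b in zip(prev, p)))
            return points
        points.append(p)
    return points


# Controller held at 1.5 m height, pointing 45 degrees upwards.
arc = trace_arc(origin=(0.0, 0.0, 1.5), direction=(1.0, 0.0, 1.0))
landing = arc[-1]
apex = max(p[2] for p in arc)
print(f"landing at x={landing[0]:.2f} m, apex height {apex:.2f} m")
```

Because the curve rises above the controller before it drops, a target behind a low obstacle can be reached even though a straight pointer line would be blocked.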

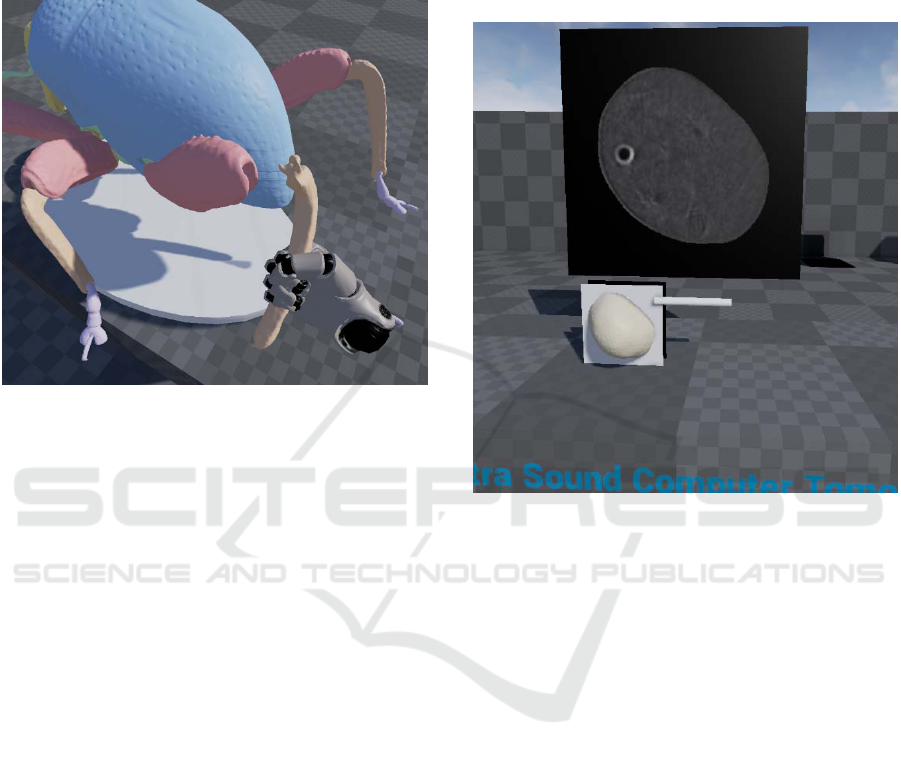

Figure 5: Grabbing and investigating the beetle's leg in

detail. As visual feedback of the user's hand position, a robot

hand is displayed in the virtual world by the UE4 VR

blueprint template.

3.3 Combining 3D Imaging and

Projections for a Medical

Application

The objective of the project Ultrasound Computer

Tomography (USCT) is to establish a new imaging

methodology for early breast cancer detection

(Ruiter et al., 2012; Ruiter et al., 2013). In this use case we

combine 3D meshes and the corresponding image slices.

The images were taken from an artificial breast

phantom containing hidden inner structures to

simulate tumour tissue of differing physical

properties. The images result from the reconstruction

process and the mesh is produced by a

segmentation step.

Our setup for this third use case has the objective

to give the spectator a spatial impression of the

object. Therefore, it is placed on a table and

‘mounted’ onto a frame. A second frame is movable

in one direction, like a slider, and can be moved

through the 3D model using the hand controller.

Depending on the position of the ‘slider’, the

corresponding image slice is displayed on a large

polygon (see Figure 6). To browse through the

images, we use a UE4 FlipBook Blueprint to select

the corresponding image with respect to the slider

position.
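
The mapping from slider position to image slice can be sketched as a clamped linear lookup. The following Python function is a minimal illustration and makes no claim about how the FlipBook Blueprint works internally. The function name, the slider range and the number of slices are assumptions for the example.

```python
def slice_index(slider_pos: float, slider_min: float, slider_max: float,
                n_slices: int) -> int:
    """Map a slider position to the index of the image slice to show.

    Positions outside the slider range are clamped to the first or
    last slice, so the viewer always displays a valid image.
    """
    if n_slices < 1:
        raise ValueError("at least one slice is required")
    if slider_max <= slider_min:
        raise ValueError("slider_max must be larger than slider_min")
    fraction = (slider_pos - slider_min) / (slider_max - slider_min)
    fraction = min(max(fraction, 0.0), 1.0)
    return min(int(fraction * n_slices), n_slices - 1)


# A hypothetical stack of 100 slices and a slider travelling from 0 to 1.
for pos in (0.0, 0.5, 1.0):
    print(pos, slice_index(pos, 0.0, 1.0, 100))
```

Clamping keeps the display stable when the user pulls the frame beyond either end of the 3D model.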

This application is currently under development.

Plans are to make the image slice movable and to

provide meta information belonging to the data set.

To investigate the inner part of the 3D mesh,

arbitrary cutting planes would be helpful.

Figure 6: A virtual USCT image viewer: segmented 3D

mesh in the foreground, the original image slice behind the

table in the background in poster size.

4 CONCLUSION AND OUTLOOK

Our question was: Do scientific applications benefit

from VR gaming technology? We used the HTC

Vive VR headset and controllers in three use cases,

which we implemented using the software Blender

and Unreal Engine 4 game engine.

VR has some pitfalls. It is essential to avoid

simulation sickness. On the other hand, there are

many new possibilities. The degree of immersion is

simply impressive. If the goal is to show the user

realistic impressions of objects or locations, VR is

the method of choice.

VR can help in investigating 3D mesh models.

We developed and implemented some ideas like the

3D explosion drawing. The obvious next step is to

implement constraints to the joints of the single

segments – then we could investigate how the

weevil moves.

For navigation and interaction in virtual reality

environments alternative techniques need to be

developed. Traditional control using keyboard and

mouse is not possible, as the VR headsets cover the

eyes of the users. One solution for navigation is the

teleport navigation, which additionally helps to

avoid simulation sickness. Tracked controllers

simplify the investigation of virtual objects like the

insect. In 2D applications, a spectator usually needs

to learn complicated combinations of mouse

movements and keyboard buttons to select, pan,

rotate and scale objects to be able to investigate them

from all possible viewing directions. With tracked

controllers, only the trigger is necessary to grab and

select an object; afterwards it can be turned and

moved around by moving the hand like in real life.

This kind of interaction ability may increase the

usability and user-friendliness of visualization

systems.

On the other hand, complex or ‘special’

interactions need new types of user interfaces. In our

use cases, we implemented a virtual button to

convert the 3D beetle mesh into a 3D explosion

drawing and a virtual slider to browse through the

tomography image stack. In addition, the teleport indicator

acts like a virtual pointing device. Current VR

games and applications are using various additional

ways to implement virtual control elements like

virtual menus, buttons, laser pointers etc. In general,

virtual control elements are necessary to provide

additional interaction abilities and we expect to have

common ‘best practices’ in the near future. We

recommend mimicking real world interaction

schemes in the virtual reality environment to

simplify the learning process of the VR system

users.

An open issue is actor/player collision in VR

environments. The player can easily move their hands

or head into or through virtual obstacles like objects

and walls. In traditional 2D applications, the

rendering engine can just prevent such movements.

In VR applications, this could destroy the immersion

or could even result in simulation sickness.

Some visualization systems are designed to

support collaborative work of a team of e.g. domain

scientists. Using a single VR headset isolates the

active user from the remaining team. In our VR

application use cases the images inside of the VR

headset are mirrored on a separate monitor, so team

members are able to follow the user passively in

the virtual environment.

It is planned to extend the beetle VR use case to

a ‘virtual museum’ and present virtual specimens

of other insects, too. In order to provide numerous

visitors with the VR experience, it would be interesting to

have cheaper devices, maybe based on smartphone

headsets. As already mentioned, the Google VR

device family has too low image quality and no

controllers. However, recently Google's Daydream

View VR headset became available, providing high

quality displays and one tracked hand controller, and

we are planning to investigate its usability for our

use cases.

The VR headsets in conjunction with tracked

controllers are powerful new tools for visualization

of higher dimensional objects. In the near future, we

expect further developments of VR software, higher

resolution HMDs, more accurate tracking, more

natural 3D controllers and multi-user VR systems.

We are just at the beginning. But the current

technology is already sufficient for serious and

exciting scientific visualization.

REFERENCES

KATRIN Collaboration, 2005. KATRIN Design Report

2004. Forschungszentrum Karlsruhe, Bericht FZKA-7090

(2005), ISSN 0947-8620. katrin.kit.edu.

Bergmann, T., Kopmann, A., Steidl, M., Wolf, J., 2014. A

Virtual Reality Visit In A Large Scale Research

Facility For Particle Physics Education And Public

Relation, INTED2014 Proceedings, Valencia, Spain,

2830-2838, 2014.

Blender, 2016. The Blender project – Free and Open 3D

Creation Software. www.blender.org.

Epic Games, Inc., 2016. The Unreal Engine 4 game

engine. www.epicgames.com,

www.unrealengine.com.

van de Kamp, T., dos Santos Rolo, T., Vagovic, P.,

Baumbach, T., Riedel, A., 2014. Three-Dimensional

Reconstructions Come to Life – Interactive 3D PDF

Animations in Functional Morphology. PLoS ONE

9(7): e102355. doi:10.1371/journal.pone.0102355.

Ruiter, N.V., Zapf, M., Hopp, T., Dapp, R., Gemmeke, H.,

2012. Phantom image results of an optimized full 3D

USCT, Proc. SPIE 8320, Medical Imaging 2012:

Ultrasonic Imaging, Tomography, and Therapy.

Ruiter, N.V., Zapf, M., Dapp, R., Hopp, T., Kaiser, W.A.,

Gemmeke, H., 2013. First results of a clinical study

with 3D ultrasound computer tomography, 2013 IEEE

International Ultrasonics Symposium (IUS), Prague,

2013, pp. 651-654.

Tan Jerome, N., Chilingaryan, S., Shkarin, A., Kopmann,

A., Zapf, M., Lizin, A., Bergmann, T., 2017. WAVE: A

3D Online Previewing Framework for Big Data

Archives. Accepted at the 8th International Conference

on Information Visualization Theory and Applications

(IVAPP), Porto, Portugal, February 2017.

IVAPP 2017 - International Conference on Information Visualization Theory and Applications
