Comparing Usability, User Experience and Learning Motivation Characteristics of Two Educational Computer Games

Omar Álvarez-Xochihua¹, Pedro J. Muñoz-Merino², Mario Muñoz-Organero², Carlos Delgado Kloos² and José A. González-Fraga¹

¹ Science Faculty, Universidad Autónoma de Baja California, Carr. Ensenada-Tijuana 3917, Ensenada, Mexico

² Department of Telematics Engineering, Universidad Carlos III de Madrid, Av. Universidad, 30, E-28911 Leganés, Madrid, Spain

Keywords: Usability, User Experience, Learning Motivation, Educational Computer Games.

Abstract: Educational computer games are very popular nowadays and can bring many benefits to the learning process. Usability, user experience and learning motivation are important factors in the design of educational computer-based games. Although existing educational games have been designed under these principles, different educational tools need to be compared to understand which design criteria make one tool more successful than another. This work presents the results of a comparison between two competition-based educational computer games, evaluated by 41 master’s students. The study, based on quantitative and qualitative data, identified features that might lead to better usability, user experience and learning motivation. Additionally, we found a positive correlation of both usability and user experience with learning motivation (strong for one game and moderate for the other).

1 INTRODUCTION

Usability and user experience (UX) are highly relevant and interlinked topics in Human-Computer Interaction (HCI) practice and research. Both software evaluation approaches focus on assessing the experience that a computer system conveys to its users. On the one hand, usability measures how effectively and efficiently users carry out specific tasks with a computer system (its pragmatic nature). On the other hand, user experience investigates the emotions that the system triggers in its users (its hedonic nature) (Hassenzahl, 2003).

Various instruments and methods are available to evaluate the pragmatic and hedonic characteristics of software. They include SUMI, QUIS, CSUQ, SUS, UMUX and UMUX-Lite, questionnaires with different numbers of items that measure the usability of computer systems (Lewis, 2013; Lewis et al., 2013), and the AttrakDiff 2 questionnaire (Hassenzahl et al., 2003), which addresses UX evaluation. Comprehensive reviews of usability and UX methods and instruments are presented in (Vermeeren et al., 2010) and (Lewis et al., 2013).

Computer games have a strong impact on entertainment and an increasing influence on education, which makes their usability and user experience important aspects to study. In the videogame industry, an effective UX determines the acceptability of digital games. The Game Experience Questionnaire (GEQ) is a psychometric instrument designed specifically to assess UX in entertainment games; it is recommended that it be administered immediately after the game session (IJsselsteijn et al., 2008). For Educational Computer Games (ECG), however, usability and user experience evaluation is still an open research question. While students expect a satisfying pragmatic and hedonic experience while playing, they also reasonably expect to improve their learning outcomes. For computer-based educational games, it is therefore highly important to assess usability, user experience and learning motivation as three closely interrelated factors. Learning motivation refers to the affective domain of learning; it concerns how instructional material enhances the learners’ internal perception that motivates them to learn (Satar, 2007).

Álvarez-Xochihua, O., Muñoz-Merino, P., Muñoz-Organero, M., Kloos, C. and González-Fraga, J.

Comparing Usability, User Experience and Learning Motivation Characteristics of Two Educational Computer Games.

DOI: 10.5220/0006338901430150

In Proceedings of the 19th International Conference on Enterprise Information Systems (ICEIS 2017) - Volume 3, pages 143-150

ISBN: 978-989-758-249-3

Copyright © 2017 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved


Although several educational games have been built on the principles of usability, user experience and learning motivation, further comparisons among educational games are needed to gain insight into which features work best.

In this work, we present the results of an evaluation and comparison of two competition-based ECG. The evaluation was performed by 41 master’s students, using a questionnaire that assesses usability, user experience and motivation to learn in ECG. To keep the evaluation criteria homogeneous across systems, the same group of students evaluated both systems at two different points in time.

This paper is organized as follows: Section 2 presents the literature review; Section 3 describes the educational computer games evaluated and compared in the study; Section 4 describes the proposed questionnaire; Section 5 addresses the study methodology and results; Section 6 presents the results and discussion of the study; and Section 7 concludes the paper.

2 RELATED WORK

In HCI, usability and UX are considered related but distinct concepts with respect to user satisfaction. The functional characteristics of a system are understood to be vital, but the user’s motivation to keep using the product is critical as well (Hassenzahl, 2003; Vermeeren et al., 2010; Lewis et al., 2013). In fact, the two complement each other: user satisfaction cannot be achieved without adequate system functionality, and users must be stimulated in order to be willing to use the system. However, there are few effective methods to assess UX, either separately or in combination with usability.

Many methods and instruments are available to conduct usability evaluations (Lewis, 2013; Lewis et al., 2013). However, UX is still not addressed comprehensively (Vermeeren et al., 2010; Hassenzahl, 2003). To understand how users really feel about a system, it is important to obtain that information directly from them. Unlike some usability methods, logging may not be fully effective for evaluating UX.

To identify the UX evaluation methods used in industry and academia, Vermeeren et al. (2010) describe a study conducted with 35 participants at the CHI’09 conference. A total of 33 UX evaluation methods were initially considered; however, the researchers reported that only 15 of them clearly considered the hedonic nature of UX in addition to the pragmatic emphasis of usability. The paper does not list the names of all the detected instruments. The identified methods were categorized into seven groups, including lab studies (individual or group), field studies (short-term or longitudinal), surveys, expert evaluation and mixed methods. The present study uses a mixed method, based on data collected through individual surveys in a short-term field study.

Specific instruments to evaluate the pragmatic and hedonic characteristics of software are available in the literature, including SUMI, QUIS, CSUQ, SUS, UMUX and UMUX-Lite (Lewis, 2013; Lewis et al., 2013), which measure the usability of computer systems, and the AttrakDiff 2 questionnaire, which explicitly evaluates UX (Hassenzahl et al., 2003). In particular, the System Usability Scale (SUS) is one of the most widely used questionnaires for usability testing. The SUS is a 10-item questionnaire with alternating positively and negatively worded items, released about 20 years ago as a reduced version of previously proposed instruments (Brooke, 1996).
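As background on how the alternating item tone is handled in practice, the sketch below implements the standard SUS scoring rule described by Brooke (1996): positively worded (odd) items contribute the response minus one, negatively worded (even) items contribute five minus the response, and the sum is multiplied by 2.5 to give a score between 0 and 100. The present study did not use SUS; the function name `sus_score` is our own illustration.

```python
def sus_score(responses: list[int]) -> float:
    """Return the SUS score (0-100) for ten responses on a 1-5 Likert scale."""
    if len(responses) != 10:
        raise ValueError("SUS has exactly 10 items")
    if any(r < 1 or r > 5 for r in responses):
        raise ValueError("each response must be between 1 and 5")
    total = 0
    for item, response in enumerate(responses, start=1):
        # Odd items are positively worded, even items negatively worded.
        total += (response - 1) if item % 2 == 1 else (5 - response)
    # Each item contributes 0-4 points, so the raw total (0-40) is scaled by 2.5.
    return total * 2.5


# Best possible answers: agree with positive items, disagree with negative ones.
print(sus_score([5, 1, 5, 1, 5, 1, 5, 1, 5, 1]))  # 100.0
# Neutral answers everywhere land in the middle of the scale.
print(sus_score([3] * 10))  # 50.0
```

Reversing the negatively worded items before summing is what allows a single score to be computed despite the mixed tone.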

More recently, the authors of the Usability Metric for User Experience (UMUX) (Finstad, 2010; Finstad, 2013) and of UMUX-Lite (Lewis et al., 2013) introduced two even shorter instruments, in conformance with the ISO definition of usability (standard 9241). However, in the HCI research field, there is some controversy regarding the reliability, validity and sensitivity of these two instruments (Lewis, 2013; Pribeanu, 2016). In the present work, in order to include more specific questions, we opted to write our own questionnaire items. Like SUS and UMUX, our evaluation instrument considers the constructs of usability (“…achieve specified goals with effectiveness, efficiency and satisfaction”) and user experience (“…users' emotions, beliefs, preferences, perceptions, physical and psychological responses”), based on the ISO 9241 standard (ISO 9241-11, 1998).

Regarding the learning motivation construct, proposed by Satar (2007) as a new usability measure for e-learning design, we considered the four affective learning sub-constructs of the ARCS Model of Motivational Design: 1) attention, arousing and maintaining interest in the game; 2) relevance, significance for students’ needs; 3) confidence, producing a positive expectation of successful achievement; and 4) satisfaction, reinforcement for effort.

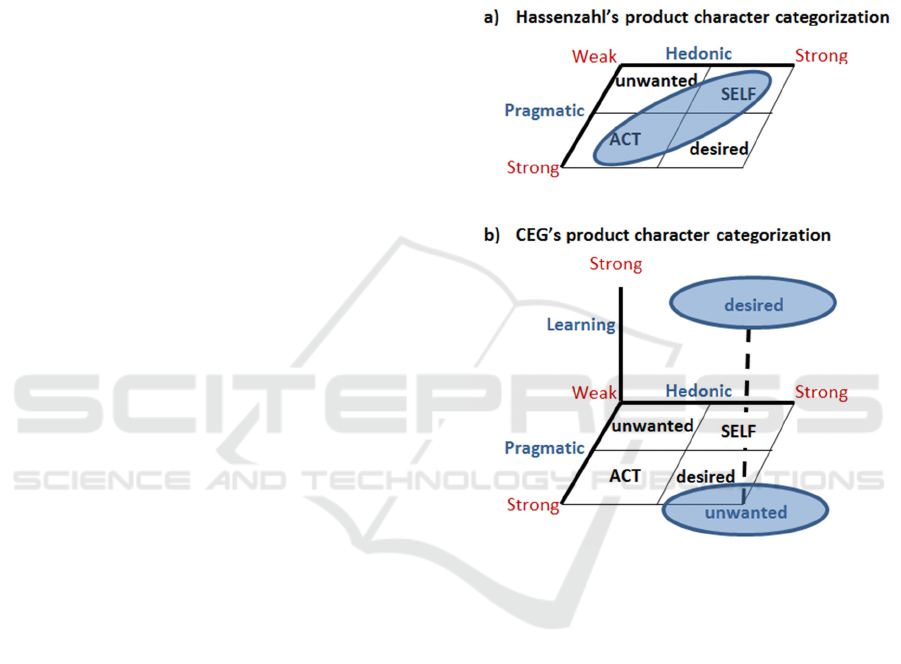

Hassenzahl (2003) proposes an evaluation model that combines UX elements with functional characteristics, taking into account the subjective nature of experience, product perception and emotional responses to products in varying situations. The model is based on both the user and the designer perspectives. In addition, depending on whether the pragmatic and hedonic attributes are perceived as weak or strong, the product character is classified into four categories: unwanted, SELF, ACT and desired. The unwanted category corresponds to weak hedonic and weak pragmatic attributes, whereas desired systems combine strong hedonic and strong pragmatic characteristics. The SELF and ACT categories each combine one strong and one weak attribute: SELF products are primarily hedonic (strong hedonic, weak pragmatic), and ACT products are primarily pragmatic (strong pragmatic, weak hedonic) (Hassenzahl, 2003). The taxonomy for ECG proposed in this work extends this categorization scheme: desired systems are those combining strong hedonic, pragmatic and learning motivation characteristics.
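A minimal sketch of this categorization logic follows. It assumes that usability scores stand for the pragmatic attribute and UX scores for the hedonic attribute (as described in the Introduction), and that an attribute counts as "strong" when its mean exceeds a cut-off. The paper does not state a numeric cut-off; the default of 3.0 (the neutral point of a 1-5 Likert scale) and all function names below are hypothetical. Because the extended scheme only defines the desired category, the sketch simply reports which factors are weak for any other combination.

```python
def hassenzahl_category(pragmatic: float, hedonic: float, threshold: float = 3.0) -> str:
    """Two-attribute product character (Hassenzahl, 2003); threshold is hypothetical."""
    strong_pragmatic = pragmatic > threshold
    strong_hedonic = hedonic > threshold
    if strong_pragmatic and strong_hedonic:
        return "desired"
    if strong_hedonic:
        return "SELF"  # primarily hedonic
    if strong_pragmatic:
        return "ACT"  # primarily pragmatic
    return "unwanted"


def ecg_weak_factors(usability: float, ux: float, learning_motivation: float,
                     threshold: float = 3.0) -> list[str]:
    """Names of the factors whose score does not exceed the (hypothetical) threshold."""
    scores = {
        "usability": usability,
        "user experience": ux,
        "learning motivation": learning_motivation,
    }
    return [name for name, score in scores.items() if score <= threshold]


def is_desired_ecg(usability: float, ux: float, learning_motivation: float,
                   threshold: float = 3.0) -> bool:
    """Extended scheme: an ECG is desired only if all three factors are strong."""
    return not ecg_weak_factors(usability, ux, learning_motivation, threshold)


# Factor means reported in Table 2.
print(is_desired_ecg(4.470, 4.491, 4.559))  # shopC: True
print(is_desired_ecg(4.439, 4.228, 3.911))  # ISCARE: True
print(ecg_weak_factors(4.439, 4.228, 3.911, threshold=4.0))  # ['learning motivation']
```

The last call shows why the cut-off matters: with a stricter (equally hypothetical) cut-off of 4.0, ISCARE's learning motivation mean would no longer count as strong.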

3 EDUCATIONAL COMPUTER GAMES

This section gives a brief overview of the educational computer games evaluated in the case study: shopC and ISCARE (Information System for Competition based on pRoblem solving in Education).

3.1 ShopC Educational Game

Some traditional games have been adapted into educational computer games. Games that used to be played, or are still played today, are a good option for implementation as computer-based educational tools. One of the main advantages of traditional games is that they have already proven to be accepted by users, and players already know the game mechanics. Board games, in particular, are traditionally well accepted by a large majority of people.

ShopC is a computer game adapted from the board game Monopoly. As in the original, the game board includes a set of properties that players can acquire, such as restaurants, bars or jewelry stores. When a player lands on one of these properties, he/she must answer some questions in order to buy it; the question set can be configured for any educational domain. The price of a property is determined by the number of correct answers the student provides about a specific subject.

ShopC supports one to four players. One of the players starts the game by rolling a die to determine the number of squares to advance; the mechanism that determines which player goes next is similar to that of the original game. When a player lands on a property that has already been sold, he/she must answer a question to avoid paying the corresponding fee. The game finishes after fifty turns per player, or when the players lose all their money. Information about the players’ performance is then provided.

To obtain a positive effect on the learning process, the system was developed around three design principles, motivation, learning and gaming, which are in accordance with the factors addressed in our study. First, the motivation principle includes the elements that lead students to play the game (e.g. flow, curiosity, autonomy, rewards, feedback and a competition scenario). Second, the learning fundamentals are based on the Learning Mechanics-Game Mechanics (LM-GM) model, which considers learning theories such as constructivism, behaviorism and personalism (e.g. questions and answers, instructional guidance, action/task, repetition, reflection and self-assessment). Finally, the gaming features were designed to entertain and amuse players through the game mechanics while they learn (goals and rules, player control and challenges). More details about the design of shopC are presented in (Julian-Mateos et al., 2016).

3.2 ISCARE Educational Game

Problem solving is a skill required at all educational levels. Problem representation and the choice of problem-solving procedure are recognized as vital elements in solving a problem (Frederiksen, 1984). This capability allows students to address situations using general or ad hoc methods to solve specific problems.

ISCARE is an educational computer game that combines three particular features. First, its instructional materials are based on problem-solving educational activities. Second, as an innovation within the field of competition-based ECG, the competition functionality of the game is based on a Swiss-system, non-eliminating tournament. In this type of competition, the tournament is divided into rounds; in each round participants are paired against one another, nobody is eliminated, and every participant plays the same number of matches. Finally, ISCARE is a competition-based Intelligent Tutoring System (ITS): it includes artificial intelligence algorithms for pairing students and assigning problems according to their knowledge level (Muñoz-Merino et al., 2012). Besides the problem information, students can see tournament statistics such as their round points, their tournament performance and information about their current opponent.
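To make the Swiss-system idea concrete, the following is a simplified sketch of one pairing round: players are ranked by accumulated points, and each is matched with the highest-ranked remaining player they have not met yet, falling back to a rematch only if no new opponent is left; with an odd number of players, the last one gets a bye. This is a generic greedy Swiss pairing, not ISCARE's algorithm, which additionally pairs students according to their knowledge level (Muñoz-Merino et al., 2012). Names such as `swiss_round` and `BYE` are hypothetical, and official Swiss systems use more elaborate rules.

```python
BYE = "BYE"  # hypothetical placeholder opponent for an odd player out


def swiss_round(points: dict[str, float],
                played: set[frozenset[str]]) -> list[tuple[str, str]]:
    """Pair players for one round of a simplified, greedy Swiss-system tournament."""
    # Rank by points (highest first); ties are broken alphabetically for determinism.
    unpaired = sorted(points, key=lambda p: (-points[p], p))
    pairs = []
    while len(unpaired) > 1:
        player = unpaired.pop(0)
        # Prefer the highest-ranked remaining player not met before;
        # otherwise allow a rematch with the next player in the ranking.
        opponent = next(
            (p for p in unpaired if frozenset((player, p)) not in played),
            unpaired[0],
        )
        unpaired.remove(opponent)
        pairs.append((player, opponent))
    if unpaired:
        pairs.append((unpaired[0], BYE))  # nobody is eliminated; one player rests
    return pairs


standings = {"ana": 2, "ben": 2, "carl": 1, "dora": 1}
history = {frozenset(("ana", "ben"))}  # ana and ben have already met
print(swiss_round(standings, history))  # [('ana', 'carl'), ('ben', 'dora')]
```

After each round, the points would be updated with the match results and every participant would take part in the next pairing, which is what makes the tournament non-eliminating.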

In summary, both ECG belong to the category of competition games. However, while shopC is an adaptation of a board game that emphasizes the motivation to play, drawing on multiple learning theories and gaming features, ISCARE stresses problem-solving skills and the competition feature of the system.

4 EVALUATION INSTRUMENT

To evaluate the design characteristics of the described ECG, we developed a questionnaire based on the one developed by Julian-Mateos et al. (2016) and extended it with new questions. The resulting instrument covers the three key factors identified above as relevant for evaluating interaction with ECG: usability, user experience and learning motivation. The questions are classified into these three categories, a classification that differs from the one proposed by Julian-Mateos et al. (2016).

Fundamentally, to evaluate ECG performance, we extended the product character categorization proposed by Hassenzahl (2003). In addition to the pragmatic and hedonic elements (see Figure 1a, retrieved from the original paper), we included learning motivation, specifically for ECG, as a new element for product character categorization (see Figure 1b).

The instrument consists of two sections, intended to gather quantitative and qualitative data respectively. The first section is an 11-item questionnaire assessing the usability, UX and learning motivation characteristics of the ECG: three items address each assessed factor, and two additional items evaluate the overall performance of the systems. Table 1 shows the items and the factor category to which each belongs. This section is an opinion survey using a 5-point Likert scale, from strongly disagree to strongly agree.

Qualitative data were collected through the second section of the instrument, a survey consisting of two framed open-ended questions. The main objective of these questions was to complement and validate the users’ opinions gathered through the quantitative survey. The two qualitative questions were:

What are three positive aspects of the game?

What are three negative aspects of the game?

Figure 1: Adapted product character categorization to classify ECG.

5 STUDY METHODOLOGY AND RESULTS

In this study we evaluated the usability, user experience and learning motivation factors, as well as the general performance, of the two educational games. The study was conducted through a questionnaire based on previously validated instruments.


Table 1: Questionnaire items categorized by evaluated factors.

| Item | Statement | Factor |
|---|---|---|
| 1 | It has been easy to understand the different functionality of the game. | Usability |
| 2 | The elements of the game interface are easily identified and are illustrative of the functionality they perform (buttons, images, etc.) | Usability |
| 3 | The tool has a nice interface. | General |
| 4 | It has been easy to know my position in the game ranking (you can always know if you are winning or losing). | Usability |
| 5 | I have had the impression that this game complements or help to improve my knowledge, skills and experience. | Learning Motivation |
| 6 | I think I can learn more with this game than with a traditional system of questions with a piece of paper. | Learning Motivation |
| 7 | I like the objectives, rules and philosophy of the game. | UX |
| 8 | The outcome of the game has been according to my level of knowledge. | Learning Motivation |
| 9 | The use of this game has increased my motivation and interest for the course. | UX |
| 10 | I would like to repeat this experience. | UX |
| 11 | I liked the game used. | General |

5.1 Study Design

This study had a threefold aim: to evaluate usability, user experience and learning motivation while students used two different types of competition-based ECG. During the study we collected both quantitative and qualitative data to evaluate the performance and characteristics of the two educational digital games. The qualitative data were intended to confirm, and provide a better understanding of, the quantitative outcomes. To keep the evaluation criteria homogeneous, the same group of students evaluated the two systems at two different points in time.

5.2 Participants

A total of 41 master’s students participated in this study. During the study period, participants were enrolled in the master’s degree in Telecommunications Engineering at Universidad Carlos III de Madrid, taking the Network Security Fundamentals class. All students in the class were invited to participate voluntarily, and all of them agreed to take part in the evaluation of the two ECG. Because the study was conducted on two different days, two students could not attend the second part of the evaluation for personal reasons. In the end, 41 students evaluated the ISCARE game and 39 evaluated the shopC game.

5.3 Evaluation Procedure

The study was conducted on two different days. On the first day, students played the ISCARE game (intervention-1), and on a different day they played the shopC game (intervention-2). Each intervention took about two hours on average to complete. During each intervention, the students performed three main activities:

1. Students attended a one-hour class on a specific topic of network security fundamentals (a different topic per intervention).

2. Students received instructions about the game dynamics (purpose, roles, how to play, educational goal, and so forth); we then defined the competition program (pairs of students to compete), and students played for 30 minutes.

3. Students answered the quantitative and qualitative questions.

6 RESULTS AND DISCUSSION

In this section we present and discuss the results for each evaluated educational game (shopC and ISCARE) separately, and then compare the outcomes of both studies. The quantitative analysis of the survey data is presented first and is then complemented with an examination of the qualitative information.

6.1 ShopC Educational Game

We used the mean and standard deviation for the quantitative analysis; qualitative data are expressed as percentages. The results of the quantitative survey are presented in Table 2. As in the GEQ instrument, each factor score was computed as the average value of its items.
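The factor scores can be computed as sketched below. The item-to-factor mapping is taken from Table 1; two further points are our assumptions, since the paper does not specify them: each factor score is computed per respondent before averaging across respondents, and the reported standard deviation is the sample standard deviation. The function names are hypothetical.

```python
from statistics import mean, stdev

# Item numbers per factor, as listed in Table 1.
FACTOR_ITEMS = {
    "Usability": (1, 2, 4),
    "User Experience": (7, 9, 10),
    "Learning Motivation": (5, 6, 8),
    "General": (3, 11),
}


def factor_scores(answers: dict[int, int]) -> dict[str, float]:
    """Average of one respondent's 1-5 Likert answers over the items of each factor."""
    return {factor: float(mean([answers[i] for i in items]))
            for factor, items in FACTOR_ITEMS.items()}


def describe(respondents: list[dict[int, int]]) -> dict[str, tuple[float, float]]:
    """Mean and sample standard deviation of each factor score across respondents."""
    per_respondent = [factor_scores(a) for a in respondents]
    summary = {}
    for factor in FACTOR_ITEMS:
        values = [scores[factor] for scores in per_respondent]
        summary[factor] = (mean(values), stdev(values))
    return summary


# Two hypothetical respondents: one answers 5 to every item, the other 3.
all_fives = {item: 5 for item in range(1, 12)}
all_threes = {item: 3 for item in range(1, 12)}
print(describe([all_fives, all_threes])["Usability"])  # mean 4.0, sample SD about 1.414
```

With complete answers, averaging per respondent first does not change the factor mean, but it does determine which spread the standard deviation describes (between respondents rather than between individual item answers).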

According to the quantitative evaluation, the shopC game was rated positively by the study participants (N = 39). The factor results indicate a high rating of perceived system usability ($M_U = 4.470$, $SD_U = 0.837$). The mean score for the UX factor was similarly high ($M_{UX} = 4.491$, $SD_{UX} = 0.766$). The high ratings that participants assigned to these two factors suggest that they perceived the game as effective and efficient, and that it met their expected level of satisfaction (see Table 2).

Regarding learning motivation, students consider

this game as an adequate environment to enhance

their domain knowledge. Surprisingly, the mean

score of the learning motivation factor (M

LM

=

4.559, SD

LM

= 0.766) was slightly higher than the

rates obtained for usability and UX. As expected,

considering the shopC system’s characteristics, its

overall evaluation was rated satisfactory; a mean

score above 4.5 (up to a maximum of five) indicates

that the system is suitable for learning and

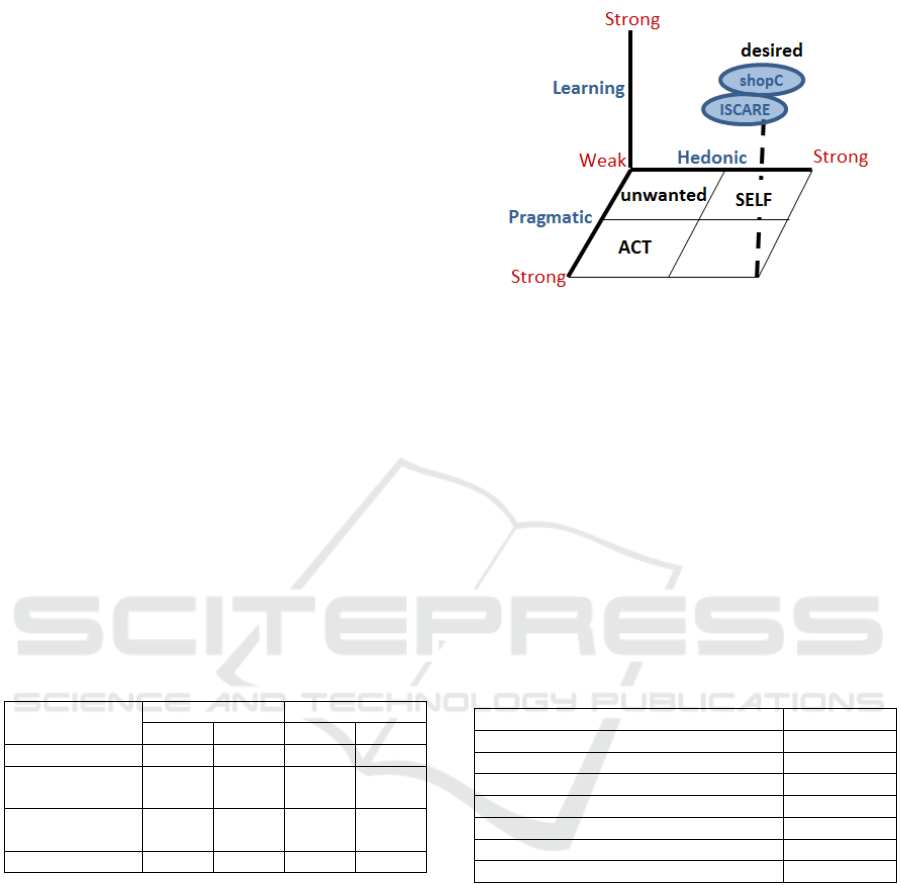

recreation. Based on the proposed product character

categorization schema, we classified shopC within

the “desired” category (see Figure 2).

Table 2: Descriptive statistics by evaluated factor.

| Factor | shopC Mean | shopC SD | ISCARE Mean | ISCARE SD |
|---|---|---|---|---|
| Usability | 4.470 | 0.837 | 4.439 | 0.570 |
| User Experience | 4.491 | 0.766 | 4.228 | 0.647 |
| Learning Motivation | 4.559 | 0.766 | 3.911 | 0.712 |
| General | 4.583 | 0.923 | 4.110 | 0.719 |

Additionally, we analyzed how the usability and UX factors relate to the learning motivation conveyed by the shopC system. We found a strong positive correlation between the motivation to learn with this educational game and the perceived system usability (Pearson’s r = .621, n = 39, p = 2.5E-05), and an even higher correlation between UX and learning motivation (Pearson’s r = .770, n = 39, p = 1.0E-05). These findings highlight the important role that usability and user experience can play in designing ECG that enhance students’ motivation to learn.

Figure 2: Product character category assigned to shopC and ISCARE games.

Regarding the qualitative questions, most students provided at least one aspect per question. Table 3 shows, for each question, the three aspects mentioned most often, with the percentage of students who mentioned each. For the shopC game, the most notable positive aspects reported by students covered the three evaluated factors, in the following order of importance: user experience (students felt motivated and satisfied with the game), learning motivation (they perceived learning outcomes while playing) and usability (they considered the game easy to play).

Table 3: Positive and negative aspects of shopC game.

| Positive Aspects | Students |
|---|---|
| It is an entertaining game | 20.50 % |
| Helps to learn while playing | 17.98 % |
| It is easy to play | 12.82 % |

| Negative Aspects | Students |
|---|---|
| Questions repetition | 38.46 % |
| We don't know who the next player is | 5.12 % |
| Poor user interface | 5.12 % |

Regarding the negative aspects, students mainly expressed concerns about educational matters: participants recommended increasing the number of questions in the game, and a number of them reported being asked the same question more than once during the game. The second and third negative aspects, each mentioned by a very small percentage of participants, related to improving the game mechanics (UX) and the system interface (usability).

6.2 ISCARE Educational Game

The ISCARE evaluation results were lower than, but broadly similar to, those obtained for the shopC game. The findings indicate high ratings for the usability ($M_U = 4.439$, $SD_U = 0.570$) and user experience ($M_{UX} = 4.228$, $SD_{UX} = 0.647$) factors (see Table 2). Again, students appeared satisfied with the functionality of, and their experience with, this game. However, ISCARE obtained a lower mean score for the learning motivation factor ($M_{LM} = 3.911$, $SD_{LM} = 0.712$). The overall evaluation of the ISCARE game was also lower but still acceptable ($M_G = 4.110$, $SD_G = 0.719$). Based on these evaluation results, our categorization scheme places ISCARE within the ECG “desired” category (see Figure 2).

Compared with shopC, ISCARE showed weaker correlations between the factors. Nevertheless, both the correlation between usability and learning motivation (Pearson’s r = .546, n = 41, p = 2.2E-04) and that between UX and learning motivation (Pearson’s r = .558, n = 41, p = 1.5E-04) remain positive, at a moderate level. These results emphasize the importance of usability and UX as elements that can raise students’ learning motivation while using ECG.

The qualitative results for the ISCARE game are described in Table 4. Although the quantitative learning motivation score was lower than for shopC, most students, somewhat contradictorily, expressed a positive opinion about their motivation to learn with this game: more than 58% of the participants pointed out that ISCARE helps them learn while playing and motivates them to study. The remaining qualitative results confirm the quantitative findings, with students’ comments indicating acceptable usability and UX for the ISCARE game. Regarding the negative aspects, the main concern was the amount of time available for the learning experience; slightly more than half of the students indicated that there was not enough time to answer the questions. This may explain why ISCARE obtained a lower mean score for the learning motivation factor.
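The percentages in Tables 3 and 4 can be reproduced once the open-ended answers have been coded into categories. The sketch below assumes that such manual coding has already been done, counts each aspect at most once per student, and divides by the number of respondents; because a student can mention several aspects, the percentages need not add up to 100. The coding labels and the name `top_aspects` are hypothetical.

```python
from collections import Counter


def top_aspects(coded_answers: list[list[str]], n_respondents: int,
                k: int = 3) -> list[tuple[str, float]]:
    """Top-k coded aspects with the percentage of respondents who mentioned each."""
    counts = Counter()
    for aspects in coded_answers:
        # dict.fromkeys removes duplicates while keeping the order of first mention.
        counts.update(list(dict.fromkeys(aspects)))
    return [(aspect, round(100 * count / n_respondents, 2))
            for aspect, count in counts.most_common(k)]


answers = [["entertaining", "easy"], ["entertaining", "learn"], ["learn", "entertaining"], []]
print(top_aspects(answers, n_respondents=len(answers)))
# [('entertaining', 75.0), ('learn', 50.0), ('easy', 25.0)]
```

As a check on the arithmetic, 58.54% in Table 4 corresponds to 24 of the 41 ISCARE respondents.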

According to the results of the quantitative analysis, both educational games were classified within the optimal/desired category of the proposed categorization scheme. An ECG that falls under this category not only fulfils usability and UX principles, but is also perceived by its users as producing positive learning outcomes and motivation to learn.

Since both systems were evaluated by the same group of students, and assuming that the students applied the same evaluation criteria or point of view, we consider that competition-based ECG are well suited to address learning motivation factors. Even though the two evaluated systems did not use the same instructional technique, their competition feature appears to have motivated students to play the game and learn. At the same time, adequate user experience and usability characteristics were associated with a higher perceived learning motivation.

Table 4: Positive and negative aspects of ISCARE game.

| Positive Aspects | Students |
|---|---|
| Helps to learn while playing/motivate to study | 58.54 % |
| It is easy to play | 31.71 % |
| It is an entertaining game | 31.71 % |

| Negative Aspects | Students |
|---|---|
| There is not enough time to answer questions | 51.11 % |
| There is no feedback available | 22.22 % |
| It is stressful | 8.89 % |

7 CONCLUSIONS

Empirical research on how students perceive computer-based educational games helps improve the performance and acceptability of this type of educational technology. Research on the usability and user experience of ECG can have a significant impact on the implementation of future systems. However, given the main goal of ECG, analysing learning gains and learning motivation is vital to understand what really enhances students’ learning experience while playing educational digital games.

This paper presents the evaluation of two competition-based ECG systems, shopC and ISCARE, analysing the correlation of usability and user experience with learning motivation. The presented work has shown how usability and UX can be crucial factors in enhancing the learning motivation of students using ECG. At the same time, the quantitative and qualitative data gathered show that students consider system functionality, the feelings conveyed by the game and learning motivation to be important.

For future work, we plan to follow the students’ recommendations to improve the shopC and ISCARE games, and to further evaluate the proposed questionnaire items in order to conduct additional evaluations on topics from different educational domains.

ACKNOWLEDGEMENTS

Work partially funded by the RESET project under grant no. TIN2014-53199-C3-1-R (funded by the Spanish Ministry of Economy and Competitiveness), and the “eMadrid” project (Regional Government of Madrid) under grant no. S2013/ICE-2715. This work has also been partially funded by the CONACYT project under grant no. PDCPN2014-01/247698, and by the PRODEP project, supported by the 2015 call for the Integration of Thematic Networks of Academic Collaboration.

REFERENCES

Berkman, M. I. and Karahoca, D. (2016). Re-assessing the usability metric for user experience (UMUX) scale. Journal of Usability Studies, 11(3), 89-109.

Brooke, J. (1996). SUS: A "quick and dirty" usability scale. In Jordan, P. W., Thomas, B., Weerdmeester, B. A. and McClelland, I. L. (Eds.), Usability Evaluation in Industry. London: Taylor and Francis.

Finstad, K. (2010). The Usability Metric for User Experience. Interacting with Computers, 22(5), 323-327. doi:10.1016/j.intcom.2010.04.004.

Finstad, K. (2013). Response to commentaries on "the usability metric for user experience." Interacting with Computers, 25(4), 327-330.

Frederiksen, N. (1984). Implications of cognitive theory for instruction in problem solving. Review of Educational Research, 54(3), 363-407.

Hassenzahl, M. (2003). The thing and I: understanding the relationship between user and product. In Blythe, M., Overbeeke, C., Monk, A. F. and Wright, P. C. (Eds.), Funology: From Usability to Enjoyment (pp. 31-42). Dordrecht: Kluwer.

Hassenzahl, M., Burmester, M. and Koller, F. (2003). AttrakDiff: Ein Fragebogen zur Messung wahrgenommener hedonischer und pragmatischer Qualität [AttrakDiff: A questionnaire for the measurement of perceived hedonic and pragmatic quality]. In Ziegler, J. and Szwillus, G. (Eds.), Mensch & Computer 2003: Interaktion in Bewegung (pp. 187-196). Stuttgart, Germany: Teubner.

IJsselsteijn, W., Poels, K. and de Kort, Y. A. W. (2008). The Game Experience Questionnaire: Development of a self-report measure to assess player experiences of digital games. TU Eindhoven, Eindhoven, The Netherlands.

ISO 9241-11 (1998). Ergonomic Requirements for Office Work with Visual Display Terminals (VDTs). Part 11: Guidance on Usability. International Organization for Standardization (ISO), Switzerland.

Julian-Mateos, M., Muñoz-Merino, P. J., Hernández-Leo, D., Redondo-Martínez, D. and Delgado Kloos, C. (2016). Design and Evaluation of a Computer Based Game for Education. In Proceedings of the Frontiers in Education Conference (FIE'16), Erie, Pennsylvania, USA, 1-8. doi:10.1109/FIE.2016.7757356.

Lewis, J. R. (2013). Critical review of "the usability metric for user experience." Interacting with Computers, 25(4), 320-324.

Lewis, J. R., Utesch, B. S. and Maher, D. E. (2013). UMUX-LITE: when there's no time for the SUS. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (CHI '13). ACM, New York, NY, USA, 2099-2102. doi:10.1145/2470654.2481287.

Muñoz-Merino, P. J., Fernández-Molina, M., Muñoz-Organero, M. and Delgado Kloos, C. (2012). An adaptive and innovative question-driven competition-based intelligent tutoring system for learning. Expert Systems with Applications, 39(8), 6932-6948. doi:10.1016/j.eswa.2012.01.020.

Pribeanu, C. (2016). Comments on the reliability and validity of UMUX and UMUX-LITE short scales. In Proceedings of the ROCHI Conference in Human-Computer Interaction (ROCHI '16), Iasi, Romania, 2099-2102.

Satar, N. S. M. (2007). Does e-learning usability attributes correlate with learning motivation? In Proceedings of the 21st AAOU Annual Conference, Kuala Lumpur, 29-31.

Vermeeren, A. P. O. S., Law, E. L., Roto, V., Obrist, M., Hoonhout, J. and Väänänen-Vainio-Mattila, K. (2010). User experience evaluation methods: current state and development needs. In Proceedings of the 6th Nordic Conference on Human-Computer Interaction: Extending Boundaries (NordiCHI '10). ACM, New York, NY, USA, 521-530. doi:10.1145/1868914.186897.
