A Platform for the Italian Bebras

Carlo Bellettini¹, Fabrizio Carimati², Violetta Lonati¹, Riccardo Macoratti¹, Dario Malchiodi¹, Mattia Monga¹ and Anna Morpurgo¹

¹Department of Computer Science, Università degli Studi di Milano, Italy

²Incanet s.r.l., Italy

riccardo.macoratti@studenti.unimi.it, carimati@incanet.it

Keywords: Contest Management Systems, Bebras, Informatics Competitions.

Abstract: The Bebras International Challenge on Informatics and Computational Thinking is a contest open to pupils of all school levels (from primary up to upper secondary), based on tasks rooted in core informatics concepts yet independent of specific prior knowledge, such as that acquired during curricular activities. This paper describes the design choices, the architecture, and the main features of the web-based platform used to carry out the Italian Bebras contest. The platform includes the functionalities needed by students, teachers, and Bebras staff during the challenge, tools to support task preparation and student training, and instruments to evaluate the results and analyse the data collected during the challenge. The platform has been online since 2015 and has managed the participation of around 25,000 teams and a significant number of training sessions.

1 INTRODUCTION

The Bebras International Challenge on Informatics and Computational Thinking (The Bebras Community, 2017) is a yearly contest organized in several countries since 2004 (Dagienė, 2010; Haberman et al., 2011), with almost two million participants worldwide. The contest, open to pupils of all school levels (from primary up to upper secondary), is based on tasks rooted in core informatics concepts yet independent of specific prior knowledge, such as that acquired during curricular activities.

The tasks are designed by the Bebras community, which includes representatives of more than 50 countries. Every year the community organizes an international workshop devoted to proposing a pool of tasks that national organizers use to set up their local contests. The national organizers then translate the tasks and possibly adapt them to their specific educational context or to the specific way tasks are proposed to the schools of their country. For instance, the French edition is based on interactive versions of the tasks, and pupils can repeatedly submit answers until they reach a correct solution. Participation is individual in some countries and team-based in others; in some school systems participation in the Bebras is compulsory. In any case, tasks should provide an entertaining learning experience: they should be moderately challenging and solvable in a relatively short time (the community guidelines suggest three minutes on average). Unfortunately, the difficulty of tasks is hard to predict (van der Vegt, 2013; Bellettini et al., 2015; Lonati et al., 2017b), even though it is crucial for the success of the challenge. Research on task difficulty in general, and on Bebras tasks in particular, is ongoing, and collecting data to study difficulty is one of our goals.

Besides being used during contests, Bebras tasks are increasingly used as starting points for educational activities carried out by individual teachers (Dagienė and Sentance, 2016; Lonati et al., 2017a; Lonati et al., 2017c). Bebras tasks have also been used to measure improvements in students’ attitude to computational thinking (Straw et al., 2017).

In Italy (ALaDDIn, 2017) the Bebras Challenge is

proposed to five categories of pupils, from primary

(4

th

grade and up) to secondary schools, who par-

ticipate in teams of at most four pupils. The set-

ting described in this paper has been used since 2015;

from 2009 to 2015 the Italian Bebras was managed

by a different organization who operated through a

Moodle-based system, now offline (Cartelli, 2009),

350

Bellettini, C., Carimati, F., Lonati, V., Macoratti, R., Malchiodi, D., Monga, M. and Morpurgo, A.

A Platform for the Italian Bebras.

DOI: 10.5220/0006775103500357

In Proceedings of the 10th International Conference on Computer Supported Education (CSEDU 2018), pages 350-357

ISBN: 978-989-758-291-2

Copyright

c

2019 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

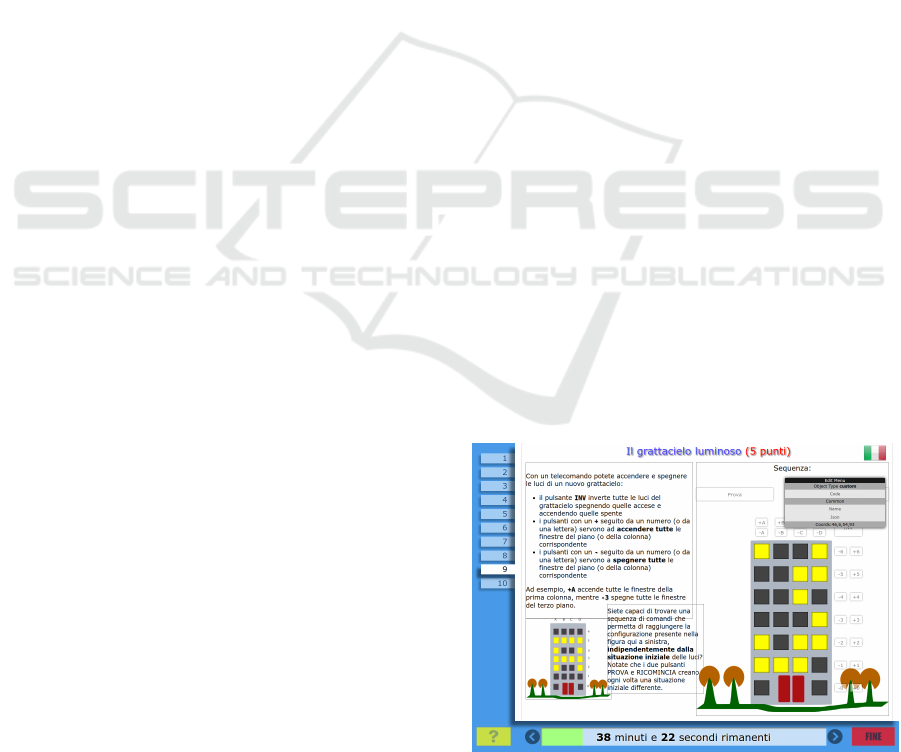

Figure 1: A typical task.

and a similar contest (the “Italian Kangourou of In-

formatics”, see below) was held in parallel: the two

started to collaborate and eventually merged into the

current Bebras Challenge. The contest now takes

place in schools during the Bebras week (usually

at mid November): under the supervision of teach-

ers, teams access a web application that presents the

tasks to be solved. Differently from some of the

other countries, in Italy normally only few tasks are

based on multiple-choice questions; most of them

are sophisticated tasks, in that they present open an-

swer questions, they are interactive, or they require

complex, combined answers (Boyle and Hutchison,

2009). Consistently, sometimes partial scores are

contemplated and there are no penalties for wrong an-

swers.

An example of an interactive task is presented in Figure 1. The task has buttons representing switches that selectively turn the lights of rows or columns of a skyscraper on or off. Pupils are asked to write a sequence of switch operations that produces the light pattern shown in the small skyscraper at the bottom left of the task, whatever the current state of the windows, which changes at every trial and is in fact unpredictable.
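Why a sequence can work “whatever the current state of the windows” becomes clear with a small simulation. The sketch below is an illustration, not the platform’s task code: it assumes a hypothetical encoding in which each switch operation is a tuple `(kind, index, on)` that forces a whole row or column on or off, and it checks a candidate sequence by brute force against every possible initial state of a tiny grid.

```python
from itertools import product

# Hypothetical encoding: (kind, index, on), kind is "row" or "col",
# on=True switches the lights on, on=False switches them off.
Op = tuple[str, int, bool]
Grid = tuple[tuple[bool, ...], ...]


def apply_ops(grid: Grid, ops: list[Op]) -> Grid:
    """Apply switch operations in order; each one overwrites a whole row or column."""
    cells = [list(row) for row in grid]
    for kind, index, on in ops:
        if kind == "row":
            cells[index] = [on] * len(cells[index])
        elif kind == "col":
            for row in cells:
                row[index] = on
        else:
            raise ValueError(f"unknown switch kind: {kind}")
    return tuple(tuple(row) for row in cells)


def solves(ops: list[Op], target: Grid) -> bool:
    """True if ops produce target from every possible initial state (brute force)."""
    rows, cols = len(target), len(target[0])
    for bits in product((False, True), repeat=rows * cols):
        start = tuple(bits[r * cols:(r + 1) * cols] for r in range(rows))
        if apply_ops(start, ops) != target:
            return False
    return True


target = ((True, False), (False, False))
print(solves([("row", 0, True), ("row", 1, False), ("col", 1, False)], target))  # True
print(solves([("col", 1, False), ("row", 0, True), ("row", 1, False)], target))  # False
```

Because every operation overwrites the windows it touches, a sequence is correct exactly when each window is touched by at least one operation and the last operation touching it sets the target value; the brute-force check is exponential in the number of windows and is only meant for tiny examples.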

The 2017 edition saw the participation of 12,214 teams (about 45,000 pupils, corresponding to around 7 out of every thousand pupils attending an Italian school, see Figure 2); the teams took part in the Bebras during the week of November 13–17, and each category had 10 tasks to be solved within 45 minutes, for a total contest time of 50 hours across the week.

Before 2015, we organized a contest similar to Bebras named “Italian Kangourou of Informatics” (Lissoni et al., 2008). It had two rounds, the first of which had the same format as the present Italian Bebras Challenge, which in fact initially inherited some of the tools already used for the Kangourou. However, the increasing number of participants and the evolution of technologies (the previous system was an application based on Macromedia Flash that worked only on MS Windows) led us to redesign and implement a brand new system, which supports all the phases of the competition (before, during and after the contest) and can be used on any platform with a recent web browser. This paper describes its design choices, its architecture, and the main features conceived to cope with the peculiarities of the Italian Bebras.

Figure 2: Bebras popularity in Italian schools; the color of each administrative region denotes the permillage of pupils participating in the Bebras w.r.t. the total school-age population (source of school data: National Institute for Statistics (ISTAT), 2014).

The paper is organized as follows. In Section 2 we present the general features of the platform and its architecture. In Section 3 we discuss in particular the components of the system that are in use during the contest, and the underlying design choices. Section 4 illustrates the life cycle of tasks, which includes editing, administration, evaluation, and analysis of collected tracking data. In Section 5 we survey tools used to manage Bebras competitions in other countries, and finally in Section 6 we draw some conclusions.

2 PLATFORM DESIGN

The Italian Bebras platform supports all the phases of the competition.

Before the contest: organizers prepare the tasks; teachers sign up on the platform, register teams, and edit information about the school and the team members (age, gender, and optionally names); guests can try the tasks proposed in previous editions of the contest and get immediate feedback about their score;


During the Bebras week: teams access the tasks and submit their answers; organizers and teachers can monitor the status of teams;

After the contest: task answers are evaluated; collected data are analyzed by organizers; teams can display tasks together with the answers they submitted, (one of) the correct solution(s), an explanation and some hints for further in-depth study in an “It’s informatics” section, and the points achieved for each task; teachers can display the total scores of all their teams and print attendance certificates containing the name of the team and other optional information such as the names of team members, the team’s score, and the global ranking.

Some aspects of the Italian situation that we have to deal with must be pointed out. The software, hardware, and network ecosystem in Italian schools is rather varied. Although we recommend that schools use a recent version of one of two popular web browsers (Mozilla Firefox and Google Chrome), the devices on which our Bebras platform is used differ greatly in form factor (several schools use tablets), performance, and administration. For example, during the 2016 edition we saw connections from several different operating systems: MS Windows 32bit (88.5%), GNU/Linux on Intel 32bit (2.8%), GNU/Linux on Intel 64bit (2.4%), MS Windows 64bit (2.2%), Linux on ARM (2.1%), and others (iPad, BlackBerry, . . . ). Hardware is often old and poorly managed: unsynchronized system clocks and misconfigured locales are common. Moreover, very few schools have a reliable Internet connection: we cannot assume that the network is available during the whole contest, or even just at the moment the allocated time expires. All these issues have been considered in the design of the Italian Bebras platform.
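As an illustration of how an unsynchronized system clock can be made harmless, a countdown can rely only on elapsed time rather than on absolute timestamps. The Python sketch below shows one possible approach under that assumption, not the platform’s code; in a browser the analogous monotonic time source is `performance.now()`. The hypothetical `ContestTimer` class takes an injectable clock so that it can be exercised without real waiting.

```python
import time
from typing import Callable


class ContestTimer:
    """Countdown based only on elapsed time, never on the wall-clock date.

    A PC whose system clock is wrong still counts the allotted time correctly,
    because only differences between two readings of `clock` are used.
    """

    def __init__(self, duration_s: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._duration = duration_s
        self._clock = clock
        self._start = clock()

    def remaining(self) -> float:
        """Seconds left, never negative."""
        elapsed = self._clock() - self._start
        return max(0.0, self._duration - elapsed)

    def expired(self) -> bool:
        return self.remaining() == 0.0


# Usage with a fake clock, so no real waiting is needed.
fake_now = [1000.0]
timer = ContestTimer(45 * 60, clock=lambda: fake_now[0])
fake_now[0] += 600          # ten minutes pass
print(timer.remaining())    # 2100.0
```

A monotonic clock restarts from an arbitrary origin after a reboot, so such a timer alone does not survive a crash; keeping state across restarts is a separate concern.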

In the following we use these terms: a taskset is an ordered collection of tasks proposed to some category in some Bebras edition; whenever a team accesses the platform and downloads a taskset, a test is instantiated, which gathers all information concerning the taskset (and hence the included tasks) and the team, together with answers and tracking data. Hence, for each team there should be exactly one (running or concluded) test, and for each taskset there are as many tests as there are participating teams in the corresponding category.
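The relation between tasksets, teams, and tests can be summarized as a small data model. The following Python sketch is hypothetical (class and field names are ours, not the platform’s): a `Taskset` is immutable, a `ContestTest` is created when a team downloads its taskset, and a `Registry` refuses to give a team a second test.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Taskset:
    """Ordered collection of tasks proposed to one category in one edition."""
    edition: int
    category: str
    task_ids: tuple[str, ...]


@dataclass
class ContestTest:
    """Created when a team downloads its taskset; collects answers and tracking data."""
    team_id: str
    taskset: Taskset
    answers: dict[str, str] = field(default_factory=dict)
    tracking: list[dict] = field(default_factory=list)
    concluded: bool = False


class Registry:
    """Keeps at most one (running or concluded) test per team."""

    def __init__(self) -> None:
        self._by_team: dict[str, ContestTest] = {}

    def start(self, team_id: str, taskset: Taskset) -> ContestTest:
        if team_id in self._by_team:
            raise ValueError(f"team {team_id} already has a test")
        test = ContestTest(team_id, taskset)
        self._by_team[team_id] = test
        return test

    def tests_for(self, taskset: Taskset) -> list[ContestTest]:
        """One test per participating team of the taskset's category."""
        return [t for t in self._by_team.values() if t.taskset == taskset]
```

Rejecting a second `start` for the same team corresponds to the “more than one access by a single team” situation that the help desk watches for during the contest.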

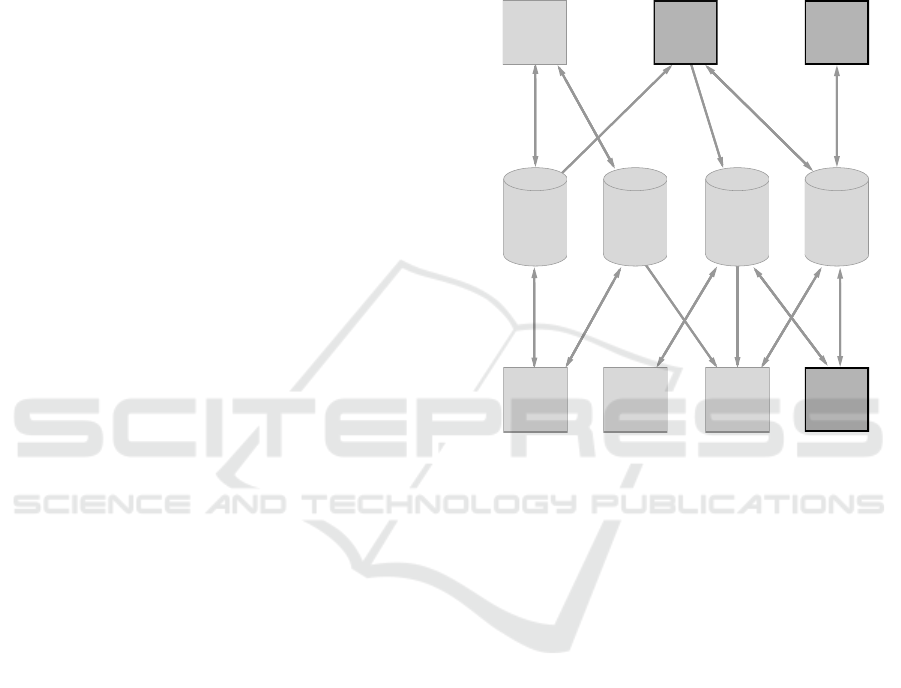

Figure 3 sketches the general architecture of the Italian Bebras platform.

The system manages different kinds of data: Tasks and Tasksets contain all information about categories and tasks, including texts, images, and code for animation and interaction; Correction code defines how tasks are graded; Answers & Tracking Data are collected during the contest and include both the submitted answers and other tracking data, such as the times at which teams switch among tasks or edits to previously inserted answers; Teacher & Team Data contain all information about teams (e.g., members’ names, age and gender), schools and referring teachers.

[Figure 3 components. Data stores: Tasks & Task Sets; Answers & Tracking Data; Correction Code; Teacher & Team Data. Access points: Contest, Teachers Backend, Student Training, Contest Help Desk, Editing, Correction, Data Analysis.]

Figure 3: The architecture of the server.

The upper part of the figure shows the access points designed for contest participants: Contest is the main front end used by students to take part in the contest; Teacher backend is used by teachers to create, manage and monitor their teams; Student training is used by students to look at and try tasks from previous contests.

The lower part shows the access points designed for the Bebras staff: Editing is used to collaboratively edit and code tasks, explanations and solutions; Correction evaluates answers after submission and assigns scores accordingly; Data Analysis performs statistical processing of the collected tracking data; Contest Help Desk is used during the contest to monitor its progress, detect problems that may arise, and manage teachers’ help requests.

The darker nodes in the figure are the access points that are active during the contest; they are discussed in the following section.

CSEDU 2018 - 10th International Conference on Computer Supported Education


3 DURING THE CONTEST

3.1 Contest

The Italian Bebras is delivered via HTTPS: during the Bebras week, each team connects to a website containing the HTML/CSS/Javascript for the tasks (ten in the 2017 edition) and tries to solve them within a 45-minute time limit.

To avoid a webpage look-and-feel and provide a videogame-like layout instead, tasks are prepared so that each of them takes up exactly the space available on the whole screen, independently of the device, browser, or screen resolution used. A little space is reserved for the left navigation bar, which allows teams to switch among tasks, and for the bottom navigation bar, which shows the remaining time and provides buttons to open the help page and to exit, submitting the answers. A screenshot of an interactive task is shown in Figure 1.
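Filling the screen on arbitrary devices amounts to scaling each task to the area left free by the two navigation bars. A minimal sketch, assuming that tasks are authored at a fixed design size and scaled uniformly (preserving their aspect ratio); the function name, its parameters, and the pixel values in the example are hypothetical.

```python
def fit_task(task_w: float, task_h: float,
             screen_w: float, screen_h: float,
             left_bar: float, bottom_bar: float) -> tuple[float, float, float]:
    """Return (scale, width, height) for a task authored at task_w x task_h.

    The task is scaled uniformly so that it fills the area left free by the
    left and bottom navigation bars without being cropped or distorted.
    """
    avail_w = screen_w - left_bar
    avail_h = screen_h - bottom_bar
    if avail_w <= 0 or avail_h <= 0:
        raise ValueError("navigation bars leave no room for the task")
    scale = min(avail_w / task_w, avail_h / task_h)
    return scale, task_w * scale, task_h * scale


# A hypothetical 1000x600 task on a 1366x768 screen with 66 px and 48 px bars:
# the free area is 1300x720, so the height is the limiting side (scale 1.2).
print(fit_task(1000, 600, 1366, 768, left_bar=66, bottom_bar=48))
```

Uniform scaling may leave a thin margin when the aspect ratios differ; stretching each axis independently would fill the area exactly at the cost of distorting images.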

The contest scenario described in the previous section poses several important problems to be solved at the design level. The main one is probably the unreliable Internet connection available in many schools, which becomes particularly critical when coupled with the strict time constraint for solving the tasks. The first design choice is that all needed resources (e.g., the JSON with the task definitions, images, and so on) must be successfully downloaded before the test starts, so that browsing and solving tasks become independent of the network. The browser’s Local Storage mechanism (W3C, 2016) is used to guarantee the persistence of data not only during the normal operation of the test but also in case of computer crashes. When a team finishes its test, or when the available time expires, the system stops the interaction with the tasks and tries to connect to the server in order to send the answers. This operation has no timeout and can in general be retried even after the computers have been switched off and on again, even on a following day. In some cases, however, this solution is not feasible, because the administration policies of school labs may enforce a total reset of all browser caches, cookies, and local storage every time the computers are switched on.
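The two mechanisms described above, write-through persistence of answers and an upload retried without a deadline, can be sketched as follows. This Python sketch only mirrors the logic: a plain dictionary stands in for the browser’s Local Storage (string keys and values), while the storage key format, the `send` callback, and the back-off schedule are assumptions.

```python
import json
import time
from typing import Callable, MutableMapping


class AnswerStore:
    """Write-through answer persistence on a Local-Storage-like key-value store."""

    def __init__(self, storage: MutableMapping[str, str], test_id: str) -> None:
        self._storage = storage                      # stands in for window.localStorage
        self._key = f"bebras-answers-{test_id}"     # hypothetical key format

    def load(self) -> dict[str, str]:
        raw = self._storage.get(self._key)
        return json.loads(raw) if raw else {}

    def save(self, task_id: str, answer: str) -> None:
        answers = self.load()
        answers[task_id] = answer
        self._storage[self._key] = json.dumps(answers)  # persisted at every change


def submit_until_acknowledged(send: Callable[[dict[str, str]], bool],
                              answers: dict[str, str],
                              first_wait_s: float = 5.0,
                              sleep: Callable[[float], None] = time.sleep) -> int:
    """Upload answers, retrying with no overall timeout; return the attempts used."""
    attempts, wait = 0, first_wait_s
    while True:
        attempts += 1
        try:
            if send(answers):
                return attempts
        except OSError:                  # network unreachable, connection reset, ...
            pass
        sleep(wait)
        wait = min(wait * 2, 60.0)       # back off, but never give up


# The dict survives a simulated browser crash: a fresh store sees old answers.
storage: dict[str, str] = {}
AnswerStore(storage, "team-42").save("skyscraper", "R0+ R1- C1-")
print(AnswerStore(storage, "team-42").load())
```

Creating a new `AnswerStore` over the same storage after a crash recovers every answer saved so far; this is precisely what is lost when a lab policy wipes local storage at each boot.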

3.2 Help Desk

During the contest week, a phone helpline is available to all teachers, and Bebras staff members are connected to the help desk site to monitor the progress of tests. Due to the design restrictions described in the previous section, the status information visible to the staff is limited to the start of a test (with all information regarding the school, the referring teacher, and the starting time) and the end of a test (with information about the time used, the submitted answers, and statistical data).

These simple pieces of information allow the staff to identify several anomalous situations: more than one access by a single team; out-of-time tests (e.g., the time has expired but no answers have been submitted, due to network problems or errors in the closing procedure); prematurely closed tests (especially at primary school level, some teams wrongly terminate the test instead of moving on to the next task). In each of these situations the help desk staff files a ticket, contacts the referring teacher, and, if needed, operates on the server state to reset the anomaly. Teachers are guided through the operations to be carried out on the client (for example, resubmitting the answers if this was not done correctly the first time).
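The three anomalies listed above can be derived from the start and end events alone. The following sketch is illustrative: the `TeamStatus` record, the 45-minute duration, and the grace and plausibility thresholds are assumptions, not the values used by the help desk.

```python
from dataclasses import dataclass, field
from typing import Optional

DURATION_S = 45 * 60  # time allotted to each test


@dataclass
class TeamStatus:
    team_id: str
    starts: list[float] = field(default_factory=list)  # server times of each access
    end: Optional[float] = None                         # server time of submission


def anomalies(status: TeamStatus, now: float,
              grace_s: float = 10 * 60,
              min_plausible_s: float = 5 * 60) -> list[str]:
    """Return the anomaly labels that apply to one team's test.

    grace_s and min_plausible_s are illustrative thresholds.
    """
    found = []
    if len(status.starts) > 1:
        found.append("multiple accesses")
    if status.starts:
        first = min(status.starts)
        if status.end is None and now > first + DURATION_S + grace_s:
            found.append("out of time, no answers received")
        if status.end is not None and status.end - first < min_plausible_s:
            found.append("closed prematurely")
    return found
```

Using server-side timestamps for both events keeps the checks independent of the unsynchronized clocks found on school machines.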

3.3 Teacher Backend

Teams are enrolled in the Bebras challenge by their teachers, who first need to sign up to the system. The backend allows them to create teams by specifying their name and category and by editing the data about the team members.

During and at the end of the contest, teachers can edit team data, for instance to rearrange teams in case of absences. They can also monitor their teams by looking at a global table, and check whether their answers have been correctly submitted to the Bebras server. When something unexpected occurs (see the cases mentioned in the section concerning the Help Desk), they are notified via the backend and can contact the help desk staff if needed.

After the end of the contest week, answers are evaluated, and scores and placements appear in the team table of the teacher backend.

Attendance certificates can then be produced as PDF files and printed via the teacher backend. Each certificate includes the name of the team, the school, and the name of the supervising teacher; other data can optionally be included on a per-team basis, such as the names of team members, the score obtained, or the overall placement (given in terms of percentiles). This option is offered in order not to stress the competitive aspect of the Bebras challenge: indeed, teachers usually decide to make high placements public and avoid highlighting low results. Certificates for supervising teachers are also available.
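A percentile placement can be computed by ranking the scores of all teams, for instance within one category. The sketch below adopts one possible convention, assumed for illustration: a team’s placement is the percentage of teams with a strictly lower score, so tied teams share the same value.

```python
from bisect import bisect_left


def percentile_placements(scores: dict[str, float]) -> dict[str, int]:
    """Map each team to the percentage of teams it outscored (ties share a value)."""
    ordered = sorted(scores.values())
    n = len(ordered)
    # bisect_left counts how many scores are strictly lower than s
    return {team: round(100 * bisect_left(ordered, s) / n)
            for team, s in scores.items()}


print(percentile_placements({"A": 30, "B": 20, "C": 20, "D": 10}))
```

Other conventions (counting ties as half, or ranking from the top) are equally reasonable; the choice only changes how placements read on the certificate.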

More than one teacher from the same school may enroll teams and, if they agree, the system allows teachers from the same school to share information about their teams. Not requiring a single referring teacher distributes the workload among several people and makes it possible to give credit to the many teachers in a school who engage their classes in the Bebras challenge by training teams and/or supervising them during the contest. This fosters the enrollment of teams, which increases the overall number of participants.

4 LIFE CYCLE OF A TASK

We illustrate the functionalities of the platform by presenting the development phases of the tasks that are included in the Italian contest.

1. The tasks are conceived by a member of the Bebras community, revised by other members, and finalized during the annual International Bebras Task Workshop.

2. A set of tasks is selected, adapted and translated into Italian; in particular, some kind of animation, interaction or feedback is planned for most of the tasks.

3. The text of each selected task is collaboratively edited and inserted into the Bebras platform; the editing also covers the explanation and the “It’s informatics” texts, and the coding of the interactive component of the tasks.

4. During the contest, teams access the platform to display the tasks and insert answers; when the time expires, answers are submitted to the server together with tracking data.

5. After the contest, answers are automatically evaluated and scores are assigned to teams; the logic to evaluate answers and assign scores is usually implemented before the contest.

6. After the contest week, students can look at their score, display tasks, and compare the answers they submitted with the proposed correct solution and related comments.

7. Answers and tracking data are analyzed in order to study perceived difficulties, preferred tasks, differences among age levels, and other relevant issues.

These phases are discussed in the following subsections, where the task in Figure 1 is used as the leading example.

4.1 Task Editing

The platform provides a multilanguage (BBCode, Markdown, HTML) editor with live preview, which allows the Bebras staff to collaboratively edit and code tasks, explanations and solutions. In particular, it enables the staff to prepare and adjust the layout of tasks, create and edit texts, make tables, and provide the JSON code for the interactive parts and the Javascript code to check the answers, all directly within the platform, so that each update to a task is immediately available to everybody.
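Keeping everything about a task in a single JSON document is what lets the editor save, preview, and later export it as a unit. The following sketch uses a hypothetical set of field names (the paper does not specify the platform’s actual schema) and shows a completeness check followed by export to a JSON string.

```python
import json

# Hypothetical task schema: field names are illustrative, not the platform's.
REQUIRED_PARTS = ("title", "body", "interaction", "checker",
                  "explanation", "its_informatics")

skyscraper = {
    "title": "Skyscraper",
    "body": "(task text, in Markdown)",
    "interaction": {"type": "switch-grid", "rows": 4, "cols": 3},
    "checker": {"language": "javascript",
                "source": "function check(ctx) { /* ... */ }"},
    "explanation": "(how to solve the task)",
    "its_informatics": "(the informatics concepts behind the task)",
}


def missing_parts(task: dict) -> list[str]:
    """Parts that are absent or empty; such a task is not ready to publish."""
    return [part for part in REQUIRED_PARTS if not task.get(part)]


def export_task(task: dict) -> str:
    """Serialize a complete task (text, interaction, checker) as one JSON string."""
    missing = missing_parts(task)
    if missing:
        raise ValueError(f"incomplete task, missing: {missing}")
    return json.dumps(task, ensure_ascii=False, indent=2)


print(export_task(skyscraper))
```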

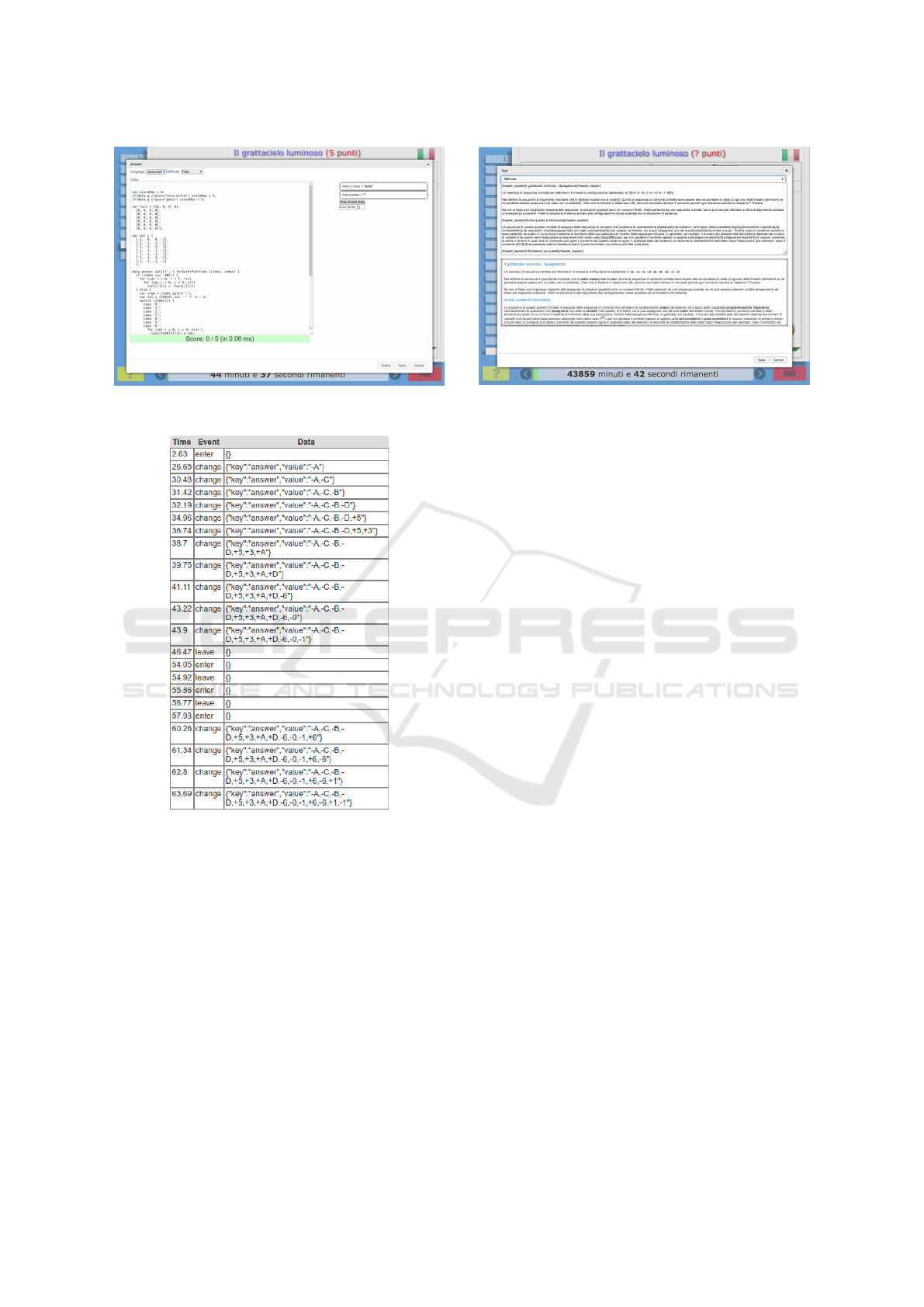

Figure 4 depicts the Skyscraper task in editing mode: the layout of the task is shown, and each part can be clicked to modify both its layout and its content. In the figure the interactive part has been clicked, and the pop-up menu shows the object type and lets the user select what to view and edit, in this case its JSON and the associated code. Once an option is selected, a full-size editor window pops up; it closes after saving, revealing the updated task.

Clicking outside the layout rectangles opens a different menu, with options to access and edit the “checking program” and the “explain and answer” (that is, the explanation and “It’s informatics”) parts of the task.

Figure 5 shows the code window for the Skyscraper task: the checking code can be modified there and tested on a given answer.

Each Bebras task must have an explanation of how to solve it and an “It’s informatics” part illustrating the informatics concepts on which the task is based. In the platform these two parts are stored together with the task. When this part is accessed, the editor opens a two-pane window, with the top pane for the source code and the bottom pane providing a live preview (see Figure 7).

Figure 4: A task in editing mode: the layout of the different parts.


Figure 5: A task in editing mode: the answer’s checking code.

Figure 6: Tracking data about the accesses to the task and modifications to the answer.

4.2 Correction

Another important component of the system is the part devoted to the correction of the tests. The design takes into account several requirements: to guarantee the fairness of the contest, no correction or solution hint may be available on the student client during the contest; on the contrary, during training sessions the correction can be performed client-side; answer verification and score attribution should potentially consider several factors, such as partial solutions, the category of the solver (the same task may be offered to different categories, with a score proportional to its relative difficulty for each), the number of trials, or the time used.

Figure 7: A task in editing mode: the explanation and “It’s informatics” parts.

The task editor makes it possible (see Figure 5) to write a Javascript function returning a pair of integers corresponding to the actual and the maximum score of the task. The system makes the context of the task (category, runtime and answer data) available to the function.
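Since the platform also accepts Python for checking code, a checking function can be sketched in Python. The context keys (`category`, `answer`), the hypothetical ordering task, and the per-category maximum scores below are assumptions chosen for illustration; the function returns the pair (actual score, maximum score) and awards partial credit without penalizing wrong answers.

```python
# Hypothetical per-category maximum: the same task is worth more points
# to the category for which it is relatively harder.
MAX_BY_CATEGORY = {"younger": 6, "older": 4}
EXPECTED = ["B", "D", "A", "C"]  # hypothetical correct ordering of four items


def check(context: dict) -> tuple[int, int]:
    """Return (actual score, maximum score) for one answer.

    context is assumed to carry "category" and "answer" (a list of labels).
    Partial credit is proportional to the positions answered correctly;
    wrong or missing answers simply earn nothing (no negative points).
    """
    max_score = MAX_BY_CATEGORY[context["category"]]
    answer = context.get("answer") or []
    correct = sum(1 for got, want in zip(answer, EXPECTED) if got == want)
    score = max_score * correct // len(EXPECTED)   # integer points, rounded down
    return score, max_score
```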

Javascript has been chosen as the preferred language (the system also permits Python and PHP) because it can easily be executed on the client during offline training.
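The requirement that no correction hint reaches the client during the contest, while training sessions can be graded client-side, can be expressed as a filter on what is sent to the browser. A minimal sketch, with hypothetical field names and mode labels:

```python
import copy

# Hypothetical fields that could reveal the solution.
SECRET_FIELDS = ("checker", "explanation", "its_informatics")


def client_payload(task: dict, mode: str) -> dict:
    """Return the part of a task that may be sent to the browser.

    In "contest" mode nothing that hints at the solution leaves the server;
    in "training" mode the checker is included so grading can run client-side.
    """
    if mode not in ("contest", "training"):
        raise ValueError(f"unknown mode: {mode}")
    payload = copy.deepcopy(task)          # never mutate the stored task
    if mode == "contest":
        for name in SECRET_FIELDS:
            payload.pop(name, None)
    return payload
```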

4.3 Data Analysis

In order to better understand and reason about contest results, a significant amount of data is tracked during the contest.

The times of events (e.g., entering and exiting a task, clicking on buttons, changing text-area values) and the values of changed fields are directly available as raw data (see Figure 6).

These data allow us to calculate interesting metrics: the total time spent on a task, the time dedicated to reading a task before the first interaction, the time spent before deciding to skip a task, and successive revisions of an answer even after exploring other tasks.
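The metrics listed above can be derived from a chronologically ordered event log. In the sketch below the event format `(time, task, kind)`, with kinds `enter`, `exit`, and `change`, is an assumption; the raw data collected by the platform (Figure 6) may be structured differently.

```python
# Hypothetical event format: (seconds since test start, task id, kind),
# where kind is "enter", "exit" or "change" (an edit of the answer).
Event = tuple[float, str, str]


def task_metrics(events: list[Event]) -> dict[str, dict]:
    """Per-task metrics from a chronologically ordered event log."""
    raw: dict[str, dict] = {}
    open_since: dict[str, float] = {}  # task -> time its current visit began
    for t, task, kind in events:
        s = raw.setdefault(task, {"total": 0.0, "visits": 0, "first_enter": None,
                                  "first_visit": None, "first_change": None,
                                  "change_visit": None, "revised": False})
        if kind == "enter":
            open_since[task] = t
            s["visits"] += 1
            if s["first_enter"] is None:
                s["first_enter"] = t
        elif kind == "exit":
            duration = t - open_since.pop(task)
            s["total"] += duration
            if s["visits"] == 1:
                s["first_visit"] = duration
        elif kind == "change":
            if s["first_change"] is None:
                s["first_change"] = t
                s["change_visit"] = s["visits"]
            elif s["visits"] > s["change_visit"]:
                s["revised"] = True  # edited again after leaving the task
    result = {}
    for task, s in raw.items():
        answered_first_time = s["change_visit"] == 1
        result[task] = {
            "total_time": s["total"],
            # time spent reading before the first interaction, if any
            "reading_time": (s["first_change"] - s["first_enter"]
                             if s["first_change"] is not None else None),
            # time spent before leaving a task untouched on the first visit
            "skip_time": None if answered_first_time else s["first_visit"],
            "revised_after_leaving": s["revised"],
        }
    return result
```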

Such data are processed to discover statistical anomalies or other clues of cheating behaviour. We recall that the contest is a non-competitive challenge, but identifying these situations is needed to obtain clean and reliable statistical data.
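As one example of such a clue (not necessarily one used by the platform), pairs of teams that give many identical wrong answers deserve a closer look, since identical correct answers are expected while identical wrong ones are much less likely by chance, especially for open-answer tasks. A sketch, assuming answers are stored as strings per task:

```python
from itertools import combinations


def shared_wrong_answers(answers: dict[str, dict[str, str]],
                         correct: dict[str, str]) -> list[tuple[str, str, int]]:
    """Pairs of teams with at least one identical non-empty wrong answer.

    Returns (team_a, team_b, count) sorted by count, highest first.
    Illustrative heuristic only: a high count is a reason to look, not proof.
    """
    pairs = []
    for a, b in combinations(sorted(answers), 2):
        shared = sum(
            1 for task, given in answers[a].items()
            if given and given != correct.get(task) and answers[b].get(task) == given
        )
        if shared:
            pairs.append((a, b, shared))
    return sorted(pairs, key=lambda p: p[2], reverse=True)
```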

Moreover, we use them to analyze students’ behaviour and better understand what attracts their attention and effort, by looking at how they peruse the tasks.


5 RELATED WORKS

Most of the countries involved in Bebras have their own Contest Management System, often developed by the organizing group.

Kristan et al. (2014) describe the Slovenian system, also used in Serbia. It is based on Yii (a PHP web framework, see (Yii Software LLC, 2017)) and supports multiple-choice answers and interactive tasks written in HTML/CSS/Javascript. They also provide a task preparation system based on Django (a Python web framework, see (Django Software Foundation, 2017)) that can be used to create customized contests. The three-tier architecture of the Slovenian system is specifically designed to be scalable and fault tolerant: it should be able to cope with the simultaneous participation of tens of thousands of participants. Several levels of administrative roles are defined in order to manage the whole life cycle of the contest: system, country, coordinators, and mentors. Data persistence is provided by MySQL databases (Oracle Corporation, 2017).

France has a highly modular system (France-IOI, 2017) used both in Bebras and in Algorea (an advanced contest focused on algorithms). They have the largest user base, and their system scales well on on-demand cloud computing platforms such as Amazon Web Services (Amazon.com, 2017). They also provide the review system used by the Bebras community to set up the annual workshop. The French system is implemented in PHP, and tasks have to be written manually in HTML/CSS/Javascript, possibly using their own predefined libraries for common task types.

Dagienė et al. (2017a, 2017b) describe the Lithuanian system. This system is also based on a three-tier architecture, implemented with MySQL, PHP and AngularJS (Google, 2017), a Javascript web application framework. Tasks are encoded in HTML/CSS/Javascript and can be authored with the “Bebras lodge” editor, a separate system also used for archiving tasks. The contest management system can export tasks in the SCORM format (Advanced Distributed Learning, 2017), a popular standard used by many e-learning platforms.

6 CONCLUSIONS

This paper presented the web-based platform supporting the Italian edition of the Bebras challenge, which is used by participants and organizers before, during and after the contest. The platform core has been online for the last three years and has managed the participation of around 25,000 teams, as well as a significant number of training sessions. The platform is a key asset for the Italian Bebras, as it enables the management of a contest involving tens of thousands of students with a very lean operational staff: five academics and one professional programmer who collaborate in their spare time, plus 500 hours of help desk support, mainly delegated to junior collaborators.

Several features have been added during its three years of activity, and they can be summarized as follows: task access is web-based, but the system is quite robust, since it keeps working even with poor or intermittent Internet connections, which are quite common in Italian schools, and allows data to be recovered in case of client crashes; tasks can be displayed in a browser running on a variety of devices, ranging from computers with old low-resolution screens to last-generation tablets and smartphones; when rendered, each task occupies exactly the size of the screen, independently of the device used, so that the contestants’ experience is closer to videogaming than to web browsing; besides data about team members and submitted answers, several other data concerning the interactions of users with the platform are tracked during the contest, thus enabling a thorough analysis of the results, also with a view to better assessing the difficulty of each task (Bellettini et al., 2015; Lonati et al., 2017b); the management of teams by teachers is integrated within the platform; help desk tools are available to monitor the contest and support teachers whenever problems arise; finally, all the information related to a given task (i.e., text, images, and code for animation/interaction/feedback and for automatic evaluation) is embedded into a JSON encoding, which makes it possible to export tasks.

We plan to further extend the analyses of tracking data, in order to better understand how students engage with tasks. Moreover, we want to exploit the modularity of our platform to implement another component of the Bebras platform that will enable teachers and students to use the tasks beyond the contest, for instance by accessing tasks individually outside specific tasksets, exporting them in printable form, or creating personal tasksets. We hope this will foster the use of tasks in curricular activities, as educational resources to learn informatics and computational thinking.

ACKNOWLEDGEMENTS

The authors thank the international Bebras community and all the schools that took part in the contest.


REFERENCES

Advanced Distributed Learning (2017). Sharable content object reference model. http://adlnet.gov/adl-research/scorm/. Last accessed on November 2017.

ALaDDIn (2017). Bebras dell’informatica. https://bebras.it. Last accessed on November 2017.

Amazon.com (2017). Amazon web services. https://aws.amazon.com/. Last accessed on November 2017.

Bellettini, C., Lonati, V., Malchiodi, D., Monga, M., Morpurgo, A., and Torelli, M. (2015). How challenging are Bebras tasks? An IRT analysis based on the performance of Italian students. In Proceedings of the 20th Annual Conference on Innovation and Technology in Computer Science Education ITiCSE’15, pages 27–32. ACM.

Boyle, A. and Hutchison, D. (2009). Sophisticated tasks in e-assessment: what are they and what are their benefits? Assessment & Evaluation in Higher Education, 34(3):305–319.

Cartelli, A. (2009). Il sito italiano della competizione internazionale “il castoro” [The Italian website of the international “Beaver” competition]. http://www.competenzedigitali.it/castoro-i.htm. Currently offline.

Dagienė, V. (2010). Sustaining informatics education by contests. In Proceedings of ISSEP 2010, volume 5941 of Lecture Notes in Computer Science, pages 1–12, Zurich, Switzerland. Springer.

Dagienė, V. and Sentance, S. (2016). It’s computational thinking! Bebras tasks in the curriculum. In Proceedings of ISSEP 2016, volume 9973 of Lecture Notes in Computer Science, pages 28–39, Cham. Springer.

Dagienė, V., Stupurienė, G., and Vinikienė, L. (2017a). Informatics based tasks development in the Bebras contest management system. In Damaševičius, R. and Mikašytė, V., editors, Information and Software Technologies: 23rd International Conference, ICIST 2017, Druskininkai, Lithuania, October 12–14, 2017, Proceedings, pages 466–477, Cham. Springer International Publishing.

Dagienė, V., Stupurienė, G., Vinikienė, L., and Zakauskas, R. (2017b). Introduction to Bebras challenge management: Overview and analyses of developed systems. In Dagienė, V. and Hellas, A., editors, Informatics in Schools: Focus on Learning Programming: 10th International Conference on Informatics in Schools: Situation, Evolution, and Perspectives, ISSEP 2017, Helsinki, Finland, November 13-15, 2017, Proceedings, pages 232–243, Cham. Springer International Publishing.

Django Software Foundation (2017). Django. https://www.djangoproject.com/. Last accessed on November 2017.

France-IOI (2017). France-ioi software ecosystem. https://france-ioi.github.io/projects-overview/readme/. Last accessed on November 2017.

Google (2017). AngularJS. https://angularjs.org/. Last accessed on November 2017.

Haberman, B., Cohen, A., and Dagienė, V. (2011). The beaver contest: Attracting youngsters to study computing. In Proceedings of ITiCSE 2011, pages 378–378, Darmstadt, Germany. ACM.

Kristan, N., Gostiša, D., Fele-Žorž, G., and Brodnik, A. (2014). A high-availability Bebras competition system. In International Conference on Informatics in Schools: Situation, Evolution, and Perspectives, pages 78–87. Springer.

Lissoni, A., Lonati, V., Monga, M., Morpurgo, A., and Torelli, M. (2008). Working for a leap in the general perception of computing. In Cortesi, A. and Luccio, F., editors, Proceedings of Informatics Education Europe III, pages 134–139. ACM – IFIP.

Lonati, V., Malchiodi, D., Monga, M., and Morpurgo, A. (2017a). Bebras as a teaching resource: classifying the tasks corpus using computational thinking skills. In Proceedings of the 22nd Annual Conference on Innovation and Technology in Computer Science Education (ITiCSE 2017), page 366.

Lonati, V., Malchiodi, D., Monga, M., and Morpurgo, A. (2017b). How presentation affects the difficulty of computational thinking tasks: an IRT analysis. In Proceedings of the 17th Koli Calling International Conference on Computing Education Research, pages 60–69. ACM New York, NY, USA.

Lonati, V., Monga, M., Morpurgo, A., Malchiodi, D., and Calcagni, A. (2017c). Promoting computational thinking skills: would you use this Bebras task? In Proceedings of the International Conference on Informatics in Schools: Situation, Evolution and Perspectives (ISSEP2017), Lecture Notes in Computer Science. Springer-Verlag. To appear.

Oracle Corporation (2017). MySQL. https://www.mysql.com/. Last accessed on November 2017.

Straw, S., Bamford, S., and Styles, B. (2017). Randomised controlled trial and process evaluation of code clubs. Technical Report CODE01, National Foundation for Educational Research. Available at: https://www.nfer.ac.uk/publications/CODE01.

The Bebras Community (2017). The Bebras international challenge on informatics and computational thinking. https://bebras.org. Last accessed on November 2017.

van der Vegt, W. (2013). Predicting the difficulty level of a Bebras task. Olympiads in Informatics, 7:132–139.

W3C (2016). Web Storage, second edition. W3C Recommendation http://www.w3.org/TR/2016/REC-webstorage-20160419/.

Yii Software LLC (2017). Yii framework. http://www.yiiframework.com/. Last accessed on November 2017.
